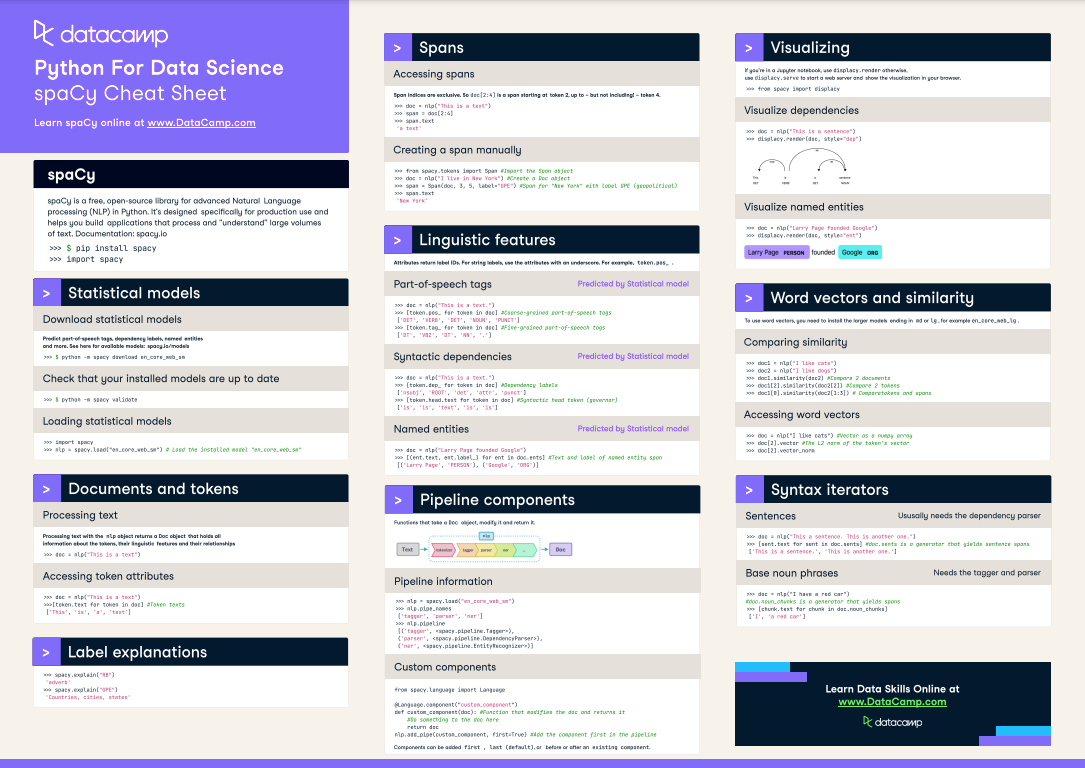

spaCy Cheat Sheet: Advanced NLP in Python

Check out the first official spaCy cheat sheet! It is a handy two-page reference to spaCy's most important concepts and features.

Aug 2021 · 6 min read
