What Is GPT-4 and Why Does It Matter?

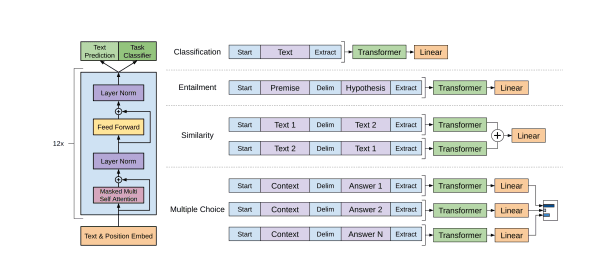

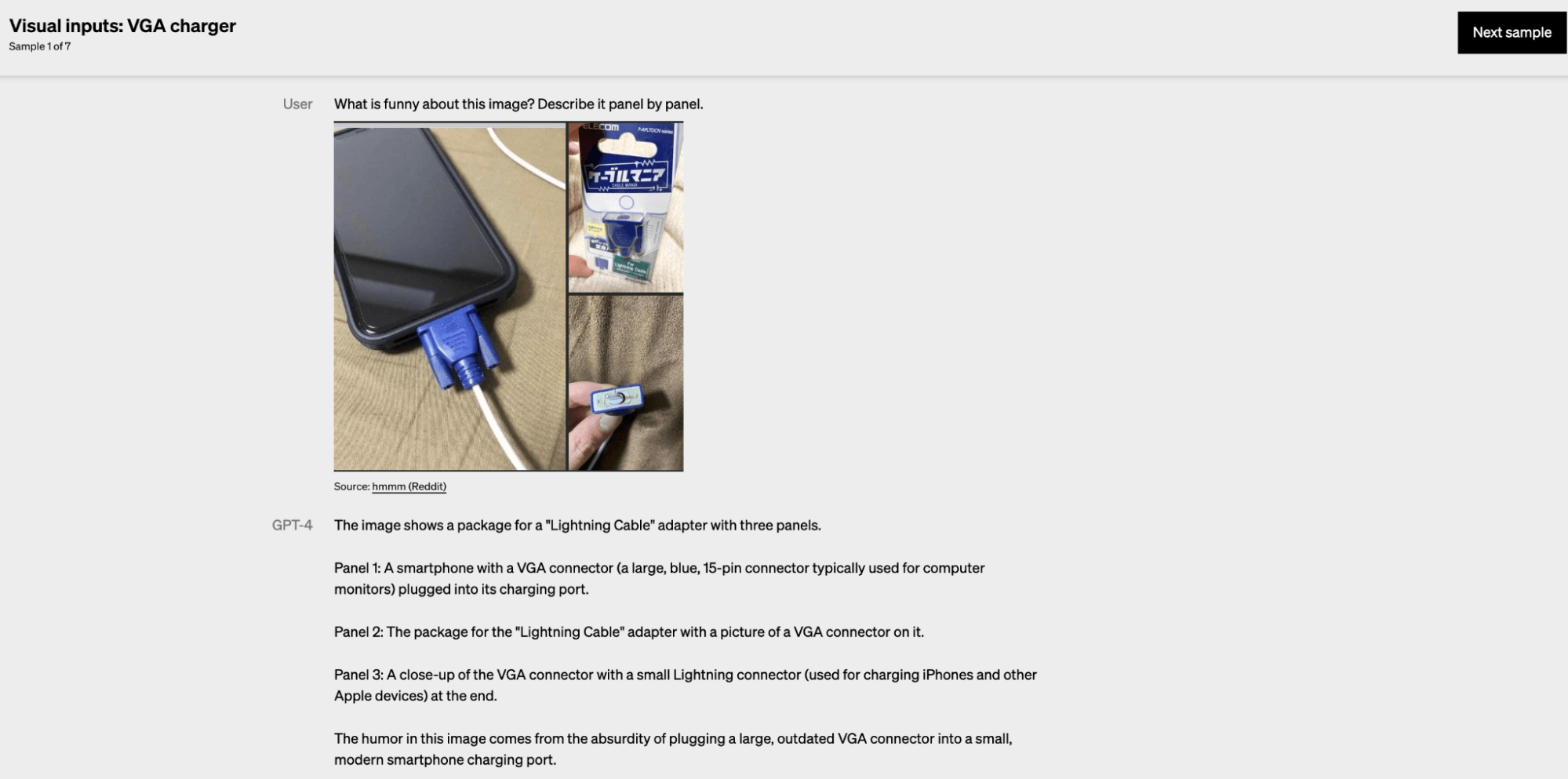

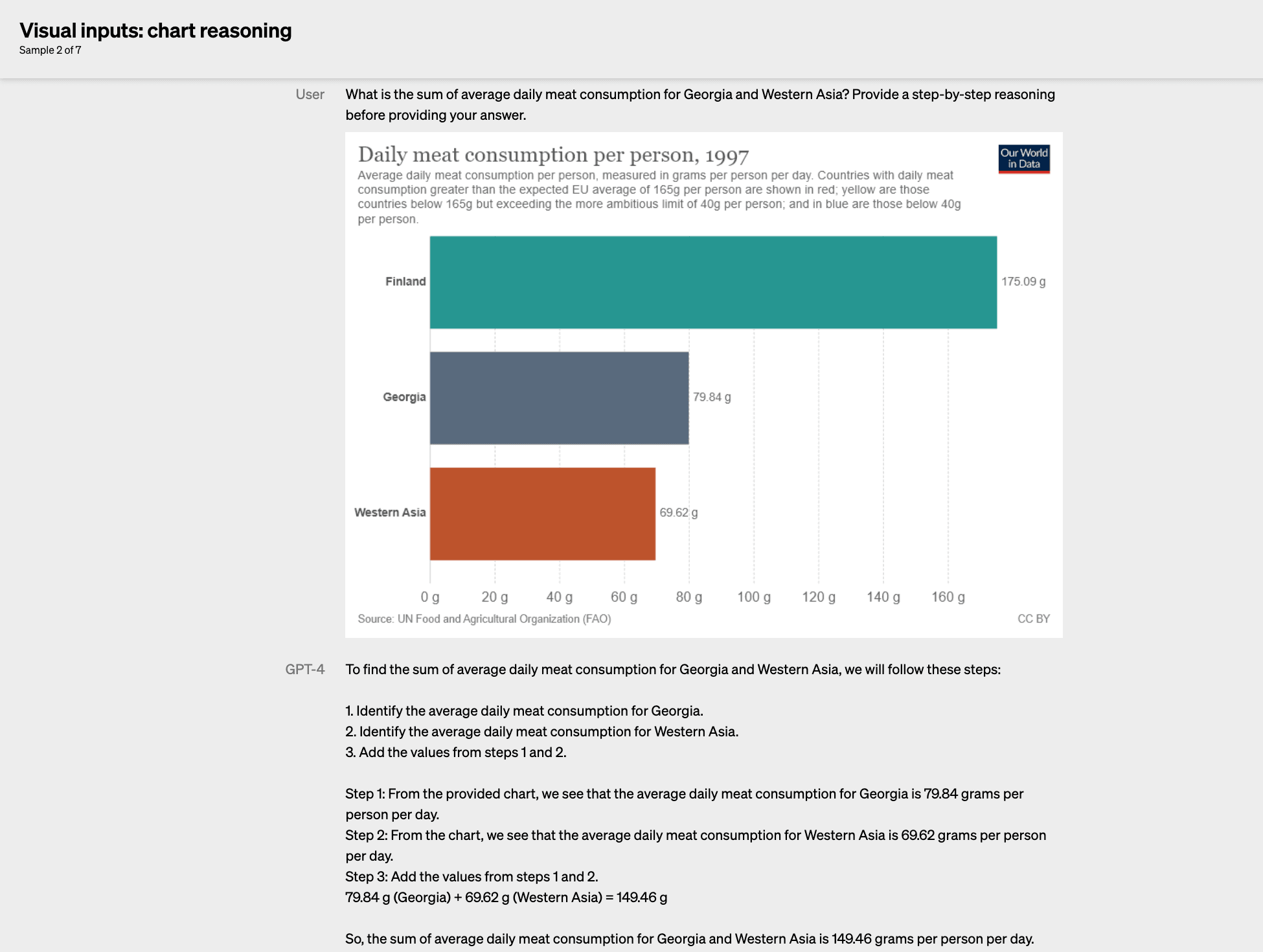

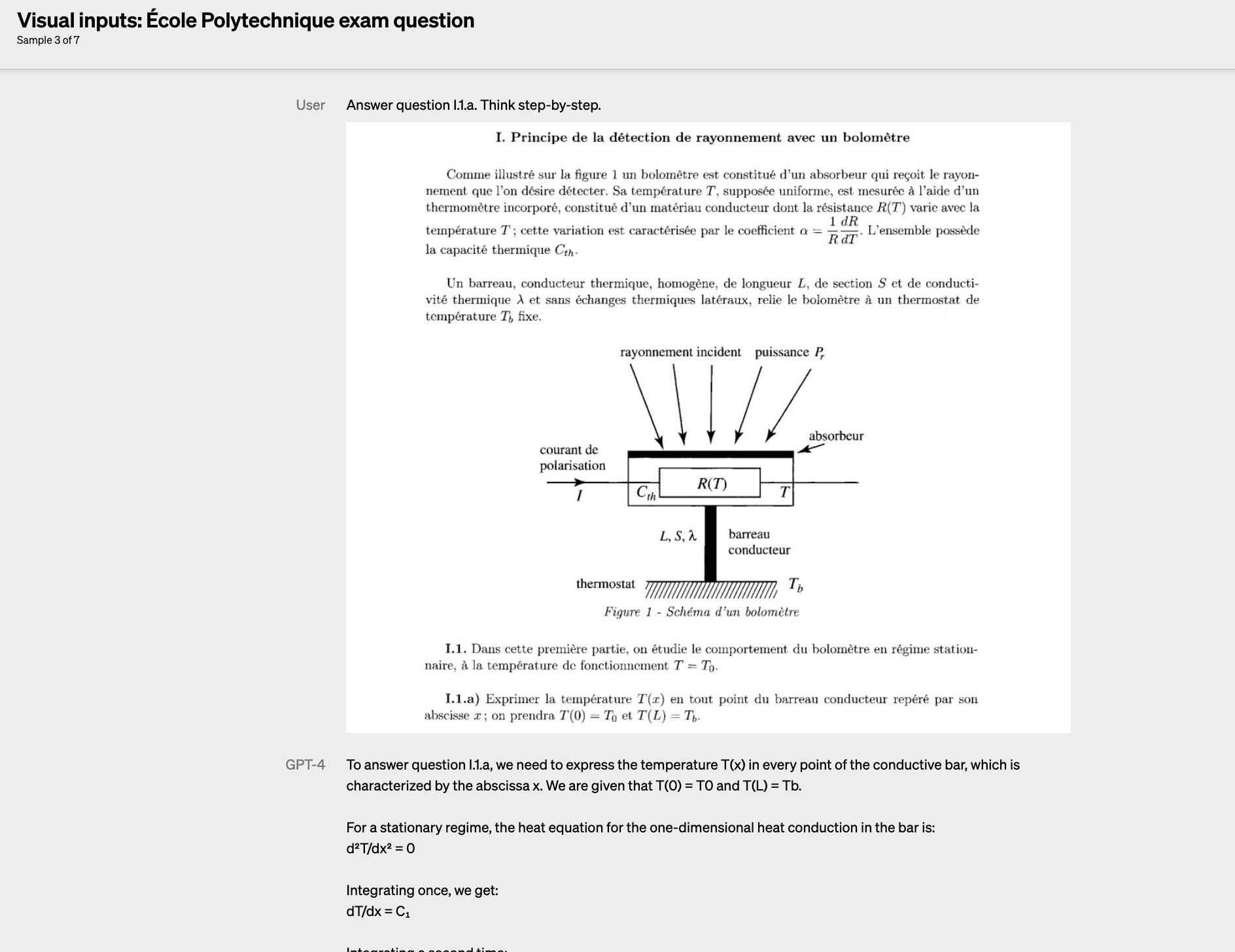

OpenAI has released GPT-4, its latest large language model. GPT-4 is a large multimodal model: it accepts both image and text inputs and generates text outputs.

Updated Mar 2023 · 8 min read

What is GPT-4?

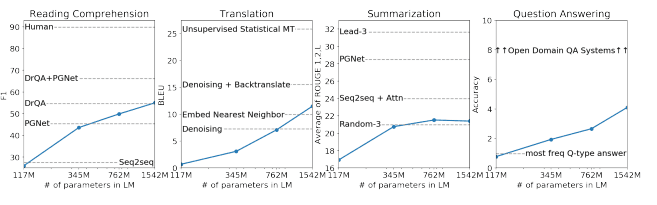

What are the capabilities of GPT models?

What is the history of GPT models?

How does GPT-4 improve on previous models?

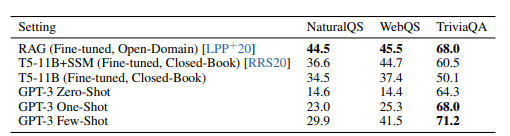

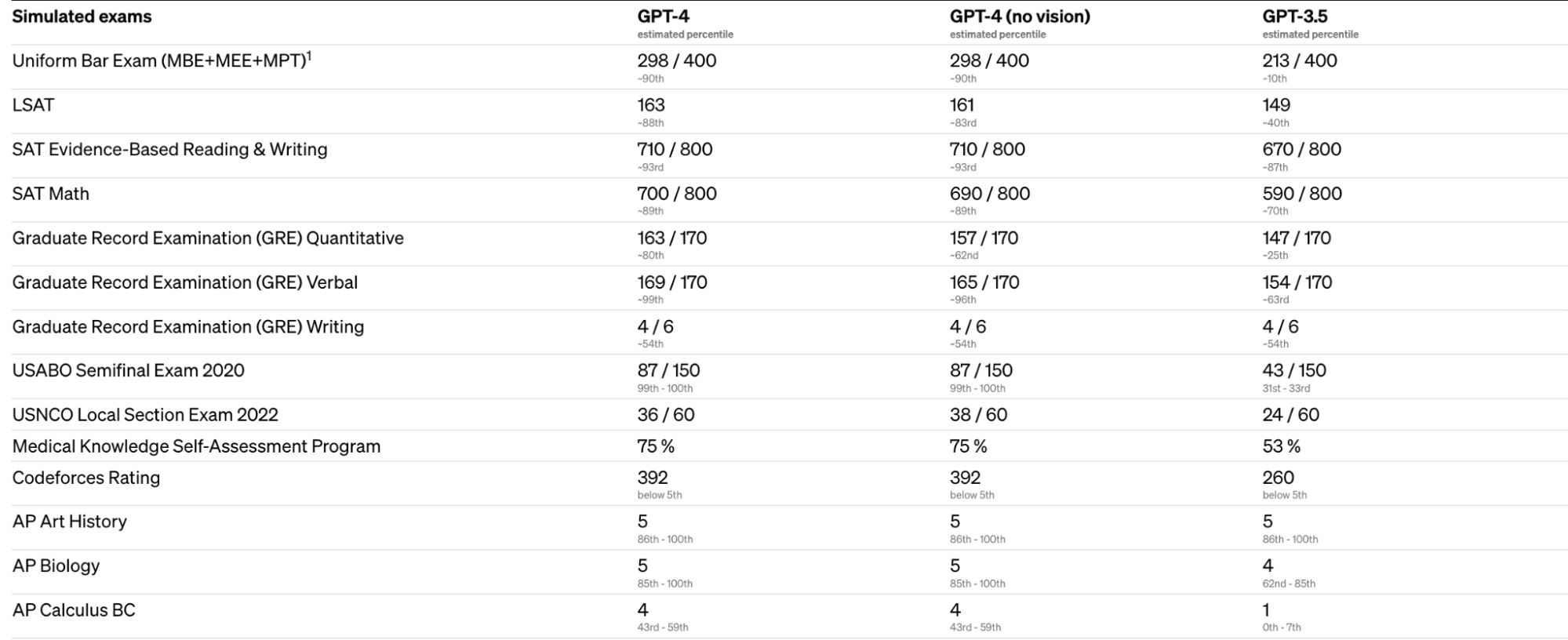

How does GPT-4 perform on benchmarks?

How can I gain access to GPT-4?
