Deep Learning (DL) vs Machine Learning (ML): A Comparative Guide

Whether you’re an aspiring data scientist or simply someone with an interest in the latest developments in artificial intelligence, you’ve likely heard terms such as machine learning and deep learning. But what do they really mean? And what are the differences between them? In this post, we look at machine learning vs deep learning to determine the similarities, differences, use cases, and benefits of these two crucial disciplines.

What is Artificial Intelligence?

We have a comprehensive AI Quick-Start Guide for Beginners which explores this topic in more depth. However, as a quick primer, artificial intelligence (AI) is a field of computer science that aims to create intelligent systems that can perform tasks that typically require human levels of intelligence. This can include things like understanding natural language, recognizing patterns, and making decisions to solve complex problems.

We can think of AI as a set of tools we can use to make computers behave intelligently and automate tasks. Uses of artificial intelligence include self-driving cars, recommendation systems, and voice assistants.

As we’ll see, machine learning and deep learning are both facets of the wider field of artificial intelligence. You can check out our separate guide on artificial intelligence vs machine learning for a deeper look at the topic.

What is Machine Learning?

As with AI, we have a dedicated guide covering what machine learning is. To summarise, machine learning (ML) is a way to implement artificial intelligence; effectively it’s a specialized branch within the expansive field of AI, which in turn is a branch of computer science.

With machine learning, we can develop algorithms that have the ability to learn without being explicitly programmed. These algorithms include:

- Decision trees

- Naive Bayes

- Random forest

- Support vector machine

- K-nearest neighbor

- K-means clustering

- Gaussian mixture model

- Hidden Markov model

So how do computers learn automatically?

The key is data. You provide data, characterized by various attributes or features, for the algorithms to analyze. In a classification task, for example, the algorithm uses this data to learn a decision boundary that separates the classes, which allows it to make predictions on new inputs. Once the algorithm has been trained on the data, you can move to the testing phase. Here, you introduce new data points to the algorithm, and it gives you results without any further programming.

Example:

Imagine you want to predict house prices. You have a dataset containing information on 1000 houses, including the number of rooms (the feature) and the price (the target you want to predict). Your task is to feed this data into an algorithm, say, a decision tree, so that it learns the relationship between the number of rooms and the price of the house.

In this scenario, you input the number of rooms, and the algorithm predicts the house price. For instance, during the testing phase, if you input ‘three’ for the number of rooms, the algorithm should predict a price close to that of the three-room houses it saw in the training data, because it has learned the relationship between the number of rooms and house prices.
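To make the house price example concrete, here is a minimal sketch of a regression tree written in plain Python. The eight data points, the helper names (`build_tree`, `predict`), and the depth limit are all assumptions made for illustration; a real project would normally use a library implementation and far more data.

```python
from statistics import mean

# Hypothetical toy data: (number of rooms, price). Not from a real dataset.
houses = [(1, 100_000), (2, 150_000), (2, 160_000), (3, 210_000),
          (3, 200_000), (4, 260_000), (5, 320_000), (5, 310_000)]

def sse(prices):
    """Sum of squared errors of the prices around their mean."""
    if not prices:
        return 0.0
    m = mean(prices)
    return sum((p - m) ** 2 for p in prices)

def build_tree(rows, depth=0, max_depth=3):
    """Training: recursively pick the room threshold that minimizes squared error."""
    prices = [price for _, price in rows]
    room_values = sorted({rooms for rooms, _ in rows})
    if depth == max_depth or len(room_values) < 2:
        return {"prediction": mean(prices)}  # leaf node
    best = None
    for low, high in zip(room_values, room_values[1:]):
        threshold = (low + high) / 2
        left = [row for row in rows if row[0] <= threshold]
        right = [row for row in rows if row[0] > threshold]
        cost = sse([p for _, p in left]) + sse([p for _, p in right])
        if best is None or cost < best[0]:
            best = (cost, threshold, left, right)
    _, threshold, left, right = best
    return {"threshold": threshold,
            "left": build_tree(left, depth + 1, max_depth),
            "right": build_tree(right, depth + 1, max_depth)}

def predict(tree, rooms):
    """Testing: walk down the tree until a leaf is reached."""
    while "prediction" not in tree:
        tree = tree["left"] if rooms <= tree["threshold"] else tree["right"]
    return tree["prediction"]

tree = build_tree(houses)        # training phase
predicted_price = predict(tree, 3)  # testing phase: a three-room house
print(predicted_price)
```

The prediction for a three-room house is the average price of the training houses that ended up in the same leaf, which is how the tree "learns" the relationship without being explicitly programmed with it.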

What is Deep Learning?

Deep learning is a sub-category of machine learning focused on structuring a learning process for computers where they can recognize patterns and make decisions, much like humans do. For instance, if we are teaching a computer to distinguish between different animals, we start with simpler, foundational concepts like the number of legs and gradually introduce more complex ones like habitats and behaviors.

In the realm of machine learning, deep learning is distinguished by the use of neural networks with three or more layers. These multi-layered neural networks strive to replicate the learning patterns of the human brain, enabling the computer to analyze and learn from vast volumes of data. A single-layered network can make rudimentary predictions, but as we add more layers, the network becomes capable of understanding intricate patterns and relationships, enhancing its predictive accuracy.

Deep learning is essentially a type of sophisticated, multi-layered filter. We input raw, unorganized data at the top, and it traverses through various layers of the neural network, getting refined and analyzed at each level. Eventually, what emerges at the bottom is a coherent, structured piece of information or a precise ‘prediction.’

This process excels in deciphering data with multiple abstraction levels, making it indispensable for tasks like image and speech recognition, natural language processing, and devising game strategies. It’s the backbone of many innovations, from the virtual assistants on our phones to the development of autonomous vehicles.

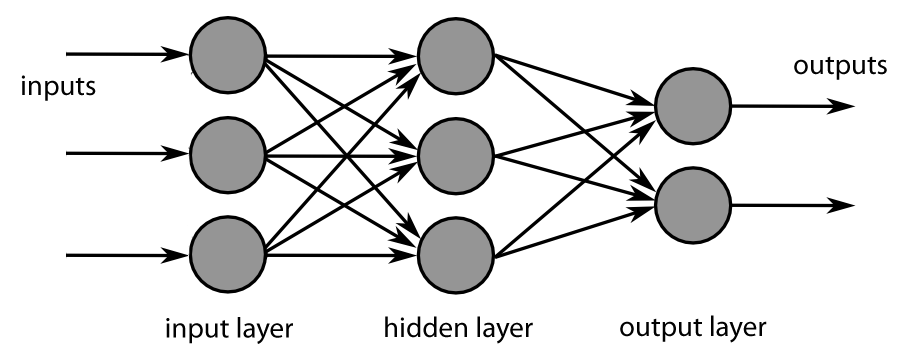

As you can see from the above figure, this shallow neural network has several layers:

- Input layer. This input can be pixels of an image or a range of time series data.

- Hidden layer. Transforms the input using parameters commonly known as weights, which are learned while the neural network is trained.

- Output layer. The final layer gives you a prediction of the input you fed into your network.

So, the neural network is a function approximator: the network tries to learn the parameters (weights) in its hidden layers which, when multiplied with the input, give a predicted output close to the desired output.

Deep learning is the stacking of multiple such hidden layers between the input and the output layer, hence the name deep learning.
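As a rough illustration of how an input flows through stacked layers, the sketch below runs a forward pass in plain Python. The weights and biases are hand-picked, hypothetical values (in a real network they would be learned during training), and the sigmoid activation and the helper names `dense` and `forward` are assumptions chosen for simplicity.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias, then sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hypothetical parameters; a trained network would have learned these values.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer 1: 3 inputs -> 2 units
    ([[1.0, -1.0], [0.4, 0.6]], [0.0, -0.1]),            # hidden layer 2: 2 units -> 2 units
    ([[0.7, -0.3]], [0.05]),                             # output layer: 2 units -> 1 output
]

def forward(inputs):
    """Pass the input through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations

prediction = forward([1.0, 0.5, -1.0])
print(prediction)
```

Making the network "deeper" in this sketch simply means adding more entries to `layers` between the input and the output layer.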

Deep Learning vs Machine Learning: Key Similarities and Differences

Functioning

As we’ve established, deep learning is a specialized subset of machine learning which employs artificial neural networks with multiple layers to analyze data and make intelligent decisions. It delves deeper into data analysis, hence the term "deep," allowing for more nuanced and sophisticated insights. In contrast, machine learning, which encompasses deep learning, focuses on developing algorithms capable of learning from and making predictions or decisions based on data, utilizing a variety of methods including, but not limited to, neural networks.

Feature extraction

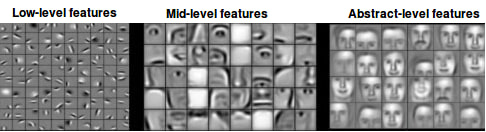

Deep Learning autonomously extracts meaningful features from raw data. A “feature” in this context is an individual measurable property or characteristic of the phenomenon being observed. Deep Learning does not depend on manually programmed feature extraction methods, such as local binary patterns or histograms of gradients, which are predefined ways to summarize the raw data. Instead, it learns the most useful features for accomplishing the task at hand, starting with simple ones and progressively learning more complex representations. Traditional Machine Learning, on the other hand, often relies on these hand-crafted features and requires careful engineering to perform optimally.
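To show what a hand-crafted feature can look like, here is a small sketch that summarizes a grayscale image as a brightness histogram. This is a deliberately simplified stand-in, not an implementation of local binary patterns or histograms of gradients, and the tiny image and the `intensity_histogram` helper are hypothetical.

```python
def intensity_histogram(image, bins=4, max_value=255):
    """Hand-crafted feature: fraction of pixels that fall in each brightness bin."""
    counts = [0] * bins
    pixels = [pixel for row in image for pixel in row]
    for pixel in pixels:
        index = min(pixel * bins // (max_value + 1), bins - 1)
        counts[index] += 1
    return [count / len(pixels) for count in counts]

# Hypothetical 4x4 grayscale image with pixel values in the range 0-255.
image = [[0, 10, 200, 255],
         [5, 20, 210, 250],
         [0, 130, 128, 255],
         [64, 70, 190, 192]]

features = intensity_histogram(image)
print(features)
```

A traditional machine learning model would be trained on `features` rather than on the raw pixels, so the quality of the result depends on how well the engineer chose the summary. A deep learning model would instead take the raw pixels as input and learn its own intermediate representations.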

Data dependency

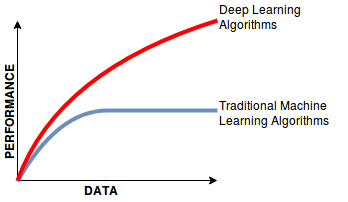

Deep learning models are data-hungry; they perform better with access to abundant data. In contrast, many machine learning algorithms can deliver satisfactory results even with smaller datasets. This is crucial for aspiring data scientists to consider when choosing between methodologies, especially when dealing with limitations in data availability.

From the above figure, you can see that the performance of deep learning algorithms keeps improving as the amount of data increases, whereas the performance of traditional machine learning algorithms tends to plateau after a certain point, even if more data is added.

Computation Power

Deep learning requires advanced computational capabilities, typically provided by Graphics Processing Units (GPUs), due to the large volumes of data involved and the depth of the models (the number of layers in the neural network). Traditional machine learning algorithms can often be executed with standard Central Processing Units (CPUs), making them more accessible for beginners in data science.

Training and Inference Time

Training a deep learning network can be extensive, potentially extending to months. “Training” refers to the process of teaching the model to make accurate predictions by feeding it data. The “inference time,” or the time it takes for the model to make predictions once it’s trained, can also be substantial in deep learning due to the complexity of the models. In contrast, traditional machine learning algorithms usually have faster training times and varied inference times.

Problem-solving technique

In machine learning, solving a problem involves breaking it down into parts and applying specific algorithms to each part. For instance, recognizing objects in an image might involve finding the objects first and then applying an algorithm to identify them. In deep learning, the network learns to perform both tasks together, making it a more integrated and holistic solution.

Industry uses

Machine learning algorithms are widely deployed in various industries due to their interpretability. However, the superior performance of deep learning models in certain tasks is sometimes overshadowed by their “black box” nature, making them less preferable in situations where model interpretability is crucial, such as in healthcare or finance.

Output

While machine learning typically yields outputs in numerical values, scores, or classifications, deep learning can produce a diverse range of outputs including text and speech, offering more versatile solutions in fields like natural language processing and speech recognition.

| Criteria | Machine Learning (ML) | Deep Learning (DL) |
|---|---|---|
| Functioning | Focuses on developing algorithms capable of learning from and making predictions or decisions based on data. | A specialized subset of ML that employs multi-layered artificial neural networks to analyze data and make intelligent decisions. It delves deeper into data analysis. |
| Feature Extraction | Often relies on hand-crafted features and requires careful engineering to perform optimally. | Autonomously extracts meaningful features from raw data, learning the most useful features for the task progressively. |
| Data Dependency | Can deliver satisfactory results even with smaller datasets. | Requires abundant data and performs better with access to extensive datasets. |
| Computation Power | Can often be executed with standard Central Processing Units (CPUs). | Requires advanced computational capabilities, typically provided by Graphics Processing Units (GPUs). |
| Training and Inference Time | Usually has faster training times and varied inference times. | Training can be extensive, potentially extending to months, and inference time can also be substantial due to model complexity. |
| Problem-solving Technique | Solves a problem by breaking it down into parts and applying specific algorithms to each part. | The network learns to perform tasks together, providing a more integrated and holistic solution. |
| Industry Uses | Widely deployed due to its interpretability. Suitable where model interpretability is crucial. | Superior performance in certain tasks but less preferable where interpretability is crucial due to its “black box” nature. |
| Output | Typically yields outputs in numerical values, scores, or classifications. | Can produce a diverse range of outputs including text and speech. |

Machine Learning vs Deep Learning: Optimal Use Cases

Machine learning and deep learning serve as the backbone of a myriad of applications across diverse domains, each having its unique requirements and challenges. Here’s a more detailed exploration of when to use each, illustrated with examples:

1. Medical field

- Use case. Cancer cell detection, brain MRI image restoration, and gene printing.

- Choice. Both machine learning and deep learning.

- Rationale. Machine learning is suitable for analyzing structured data and can be used for predictions in diagnostics based on patient records. Deep learning excels in image and speech recognition, making it ideal for interpreting medical images and analyzing patients’ speech patterns for neurological assessments.

2. Document analysis

- Use case. Super-resolving historical document images and segmenting text in document images.

- Choice. Deep learning.

- Rationale. Deep learning models, especially Convolutional Neural Networks (CNNs), are adept at handling image data and can extract intricate patterns and features from historical documents, making them suitable for tasks like image super-resolution and text segmentation.

3. Banking sector

- Use case. Stock prediction and making financial decisions.

- Choice. Machine learning.

- Rationale. Machine learning models, such as regression models and decision trees, are effective in analyzing numerical, structured data, making them suitable for predicting stock prices and aiding in financial decision-making processes.

4. Natural Language Processing (NLP)

- Use case. Recommendation systems (like the ones used by Netflix to suggest movies to users based on their interests), sentiment analysis, and photo tagging.

- Choice. Both machine learning and deep learning.

- Rationale. Machine learning is effective for analyzing user behavior and preferences for recommendation systems, while deep learning is powerful in understanding and generating human language for tasks like sentiment analysis.

5. Information retrieval

- Use case. Search engines, covering both text search and image search, like the ones used by Google, Amazon, Facebook, LinkedIn, etc.

- Choice. Both machine learning and deep learning.

- Rationale. Machine learning algorithms can efficiently handle and process queries in search engines, while deep learning models, particularly CNNs, are proficient in image recognition and analysis, enhancing the image search capabilities of search engines.

Decision Criteria:

When deciding whether to use Machine Learning or Deep Learning, consider the following aspects:

- Data availability. Deep learning requires vast amounts of data; if your dataset is small, machine learning might be more appropriate.

- Computational power. Deep learning models necessitate high computational power, usually provided by GPUs. If resources are limited, machine learning models, which can run on CPUs, might be more feasible.

- Task complexity. For simpler tasks and structured data, machine learning models are often sufficient. For more complex tasks involving unstructured data like images or natural language, deep learning is more suitable.

- Interpretability. In scenarios where understanding the model’s decision-making process is crucial, the interpretability of machine learning models gives them an edge over the “black box” nature of deep learning models.
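The criteria above can be sketched as a simple rule of thumb in code. The function name `suggest_approach`, its boolean parameters, and the conservative rule itself (recommend deep learning only when every criterion favors it) are assumptions made for illustration; real decisions are rarely this clear-cut.

```python
def suggest_approach(large_dataset, gpu_available, unstructured_data, needs_interpretability):
    """Return a suggested approach plus the reasons that favor machine learning."""
    reasons_for_ml = []
    if not large_dataset:
        reasons_for_ml.append("small dataset")
    if not gpu_available:
        reasons_for_ml.append("limited computational power")
    if not unstructured_data:
        reasons_for_ml.append("simpler task or structured data")
    if needs_interpretability:
        reasons_for_ml.append("interpretability is crucial")
    approach = "machine learning" if reasons_for_ml else "deep learning"
    return approach, reasons_for_ml

# Hypothetical project: many labeled images, GPUs available, no interpretability requirement.
image_project = suggest_approach(True, True, True, False)
# Hypothetical project: a few hundred rows of tabular data that must be explainable.
tabular_project = suggest_approach(False, False, False, True)
print(image_project)
print(tabular_project)
```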

By understanding the strengths and limitations of machine learning and deep learning in different scenarios, aspiring data scientists can make informed decisions on the most suitable approach for their specific needs and constraints.

Conclusion

In this guide, we’ve navigated the intricate landscapes of machine learning (ML) and deep learning (DL), two pivotal subsets of artificial intelligence (AI). We’ve explored the foundational concepts, the distinctive characteristics, and the myriad of applications each holds in today’s technologically driven world. From understanding the nuances of algorithms to discerning the optimal use cases across various domains like medicine and natural language processing, we’ve endeavored to provide clarity and insight for enthusiasts stepping into the realm of AI.

This exploration is merely a glimpse into the vast and ever-evolving world of AI. The journey of learning and discovery is extensive, with countless nuances and facets awaiting your exploration. For those eager to delve deeper and expand their knowledge, our Deep Learning in Python skill track is a highly recommended next step.

FAQs

How do I choose between using a deep learning model or a traditional machine learning model for my project?

The choice between deep learning and traditional machine learning models depends on several factors including the availability of data, computational resources, task complexity and the need for interpretability of decisions. For simpler tasks or when data is scarce, traditional machine learning models might be more appropriate. Deep learning models are better suited for tasks involving large amounts of data or when the task involves complex pattern recognition.

Can machine learning and deep learning be used together in a project?

Yes, machine learning and deep learning can be used together in a single project, leveraging the strengths of each approach. For example, a project might use machine learning models for data preprocessing and feature engineering, and deep learning models to handle complex pattern recognition tasks within the same pipeline. This hybrid approach can optimize performance and efficiency for certain types of problems.

What skills are needed to work in deep learning and machine learning?

To work in deep learning and machine learning, you typically need a strong foundation in mathematics (especially statistics, calculus, and linear algebra), programming (Python is most common due to its extensive ecosystem of data science libraries), and a good understanding of the algorithms and principles behind machine learning and deep learning models. Practical experience through projects or contributions to research is also highly valued.

Can machine learning or deep learning models be biased? How can this be addressed?

Yes, both machine learning and deep learning models can exhibit bias if the data they are trained on is biased. This can lead to unfair or discriminatory outcomes. Addressing bias involves carefully curating the training data to ensure it is representative and diverse, regularly testing the model's outputs for bias, and employing techniques like algorithmic fairness approaches to mitigate any detected biases.
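One simple way to test a model's outputs for bias is to compare how often it produces a positive prediction for different groups. The sketch below computes that gap for made-up predictions; the group names, the data, and the choice of this particular check (often called a demographic parity gap) are assumptions for illustration, and it is only one of several possible fairness measures.

```python
def positive_rate(predictions):
    """Share of predictions that are positive (1)."""
    return sum(predictions) / len(predictions)

# Hypothetical model outputs (1 = approved, 0 = rejected), grouped by a sensitive attribute.
predictions_by_group = {
    "group_a": [1, 1, 0, 1, 1, 0, 1, 1],
    "group_b": [1, 0, 0, 0, 1, 0, 0, 1],
}

rates = {group: positive_rate(preds) for group, preds in predictions_by_group.items()}
parity_gap = max(rates.values()) - min(rates.values())
print(rates, parity_gap)
```

A large gap does not prove the model is unfair on its own, but it is a signal that the training data and the model's behavior deserve a closer look.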

What is the significance of neural network layers in deep learning?

The layers in a neural network allow deep learning models to learn features at different levels of abstraction. For instance, in image recognition, initial layers might learn basic features like edges, while deeper layers can identify more complex patterns such as shapes or specific objects. This hierarchical learning process enables deep learning models to handle very complex tasks with high accuracy.
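To give a feel for what "learning edges" means, the sketch below slides a small filter over a tiny image and produces a strong response where a dark region meets a bright one. The filter values here are set by hand purely for illustration; in a convolutional network, early layers learn filters of this kind from data. The image and the `convolve2d` helper are hypothetical.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the element-wise products."""
    kernel_height, kernel_width = len(kernel), len(kernel[0])
    output = []
    for i in range(len(image) - kernel_height + 1):
        row = []
        for j in range(len(image[0]) - kernel_width + 1):
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kernel_height)
                           for b in range(kernel_width)))
        output.append(row)
    return output

# Hypothetical 4x4 image: dark on the left (0), bright on the right (1).
image = [[0, 0, 1, 1] for _ in range(4)]

# Hand-set filter that responds to a dark-to-bright vertical edge.
vertical_edge_kernel = [[-1, 1],
                        [-1, 1]]

edge_map = convolve2d(image, vertical_edge_kernel)
print(edge_map)
```

The response is highest in the middle column, where the edge sits. Deeper layers combine many such simple responses into detectors for shapes and objects.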

What role does data augmentation play in enhancing deep learning models?

Data augmentation is a technique used to increase the diversity of your training set by applying random transformations (such as rotation, scaling, and cropping) to the existing data. This helps in preventing overfitting and improves the model's ability to generalize from the training data to new, unseen data. It's especially useful in domains where deep learning models benefit from varied examples.
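As a minimal sketch of the idea, the code below applies a random crop and a random horizontal flip to a tiny image stored as a list of lists. The helper names, the 4x4 image, and the choice of these two transformations are assumptions for illustration; deep learning libraries ship their own, more complete augmentation utilities.

```python
import random

def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def random_crop(image, size, rng):
    """Cut out a size x size patch at a random position."""
    top = rng.randint(0, len(image) - size)
    left = rng.randint(0, len(image[0]) - size)
    return [row[left:left + size] for row in image[top:top + size]]

def augment(image, crop_size, rng):
    """Apply a random crop, then flip the result half of the time."""
    patch = random_crop(image, crop_size, rng)
    if rng.random() < 0.5:
        patch = horizontal_flip(patch)
    return patch

rng = random.Random(0)  # fixed seed so the sketch is repeatable
image = [[row * 4 + col for col in range(4)] for row in range(4)]  # hypothetical 4x4 image
augmented = [augment(image, 3, rng) for _ in range(5)]
for patch in augmented:
    print(patch)
```

Each call produces a slightly different view of the same image, so the model sees more varied examples without any new data being collected.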
