
Python Pickle Tutorial: Object Serialization

Introduction to Object Serialization


Are you tired of rerunning your Python code every time you need to access a previously created data frame, variable, or machine learning model?

Object serialization may be the solution you’re looking for.

It is the process of converting a data structure into a format, typically a sequence of bytes, that can be saved to disk or transmitted over a network and later reconstructed without losing its current state.

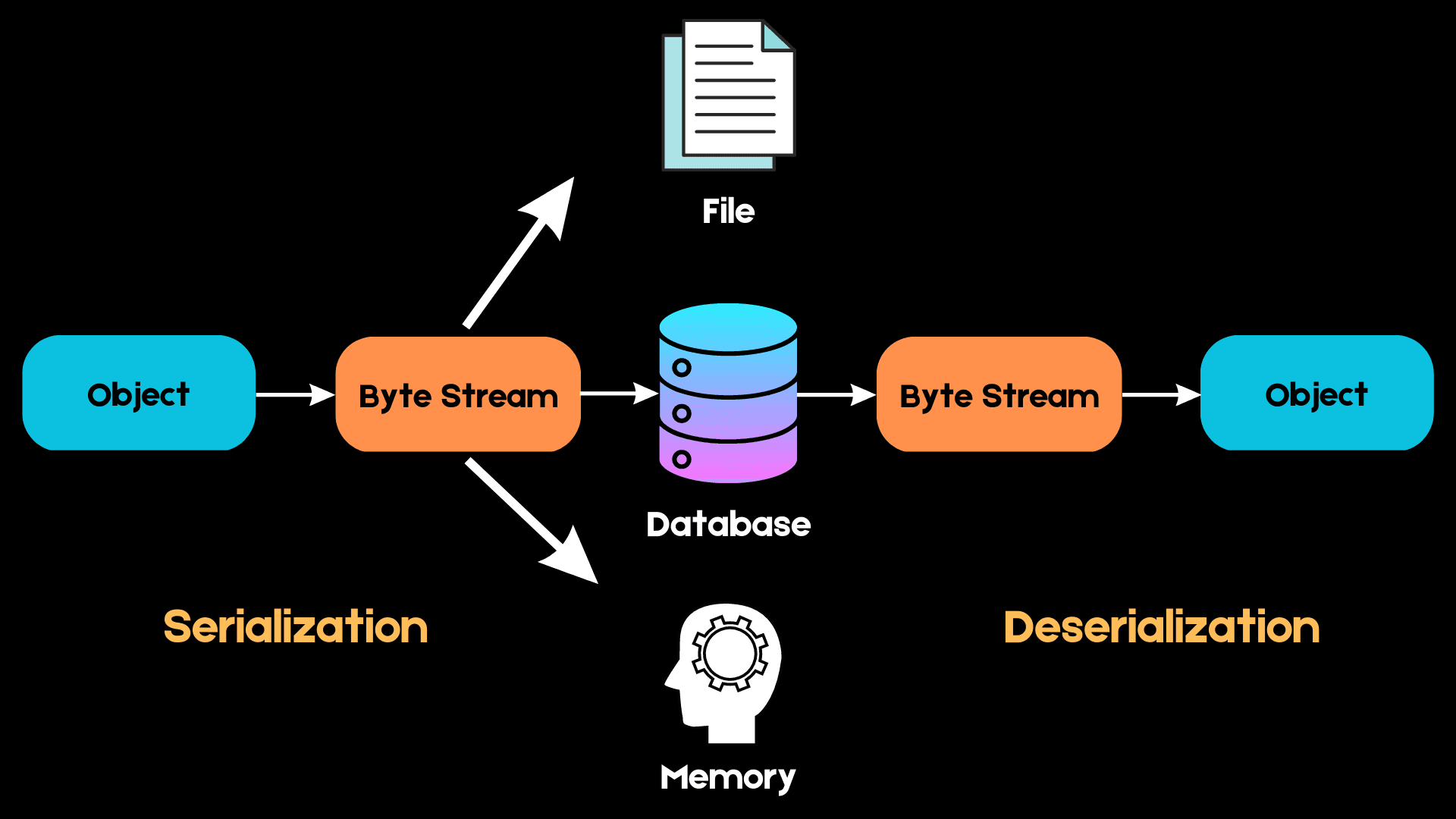

Here is a simple diagram explaining how serialization works:

Image by author

In Python, we work with high-level data structures such as lists, tuples, and sets. However, when we want to save these objects to a file or send them over a network, they need to be converted into a sequence of bytes. This process is called serialization.

The next time we want to access the same data structure, this sequence of bytes must be converted back into the high-level object in a process known as deserialization.
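As a minimal sketch of these two steps, pickle.dumps() turns an object into a bytes object (serialization) and pickle.loads() rebuilds an equal object from those bytes (deserialization). The variable names here are purely illustrative.

```python
import pickle

scores = [("Alice", 92), ("Bob", 85)]  # a list of tuples

# Serialization: object -> sequence of bytes
payload = pickle.dumps(scores)
print(type(payload))  # <class 'bytes'>

# Deserialization: bytes -> an equal but independent object
restored = pickle.loads(payload)
print(restored == scores, restored is scores)  # True False
```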

We can use formats such as JSON, XML, HDF5, and Pickle for serialization. In this tutorial, we will learn about the Python Pickle library for serialization. We will cover its uses and understand when you should choose Pickle over other serialization formats.

Finally, we will learn how to use the Python Pickle library to serialize lists, dictionaries, Pandas data frames, machine learning models, and more.


Why Do We Need Object Serialization?

Before we get started with Python Pickle, let’s understand why object serialization is so important.

You might be wondering why we can’t just save data structures into a text file and access them again when required instead of having to serialize them.

Let’s go through a simple example to understand the benefits of serialization.

Here is a nested dictionary containing student information like name, age, and grade:

```python
students = {
    'Student 1': {
        'Name': 'Alice', 'Age': 10, 'Grade': 4,
    },
    'Student 2': {
        'Name': 'Bob', 'Age': 11, 'Grade': 5
    },
    'Student 3': {
        'Name': 'Elena', 'Age': 14, 'Grade': 8
    }
}
```

Let's examine the data type of the "students" object:

```python
type(students)
```

```
dict
```

Now that we have confirmed that the students object is a dictionary, let's write it to a text file without serialization:

```python
with open('student_info.txt', 'w') as data:
    data.write(str(students))
```

Notice that since we can only write strings to text files, we have converted the dictionary to a string using the str() function. This means that the original state of our dictionary is lost.

Now, let’s read the dictionary, print it out, and check its type again:

```python
with open("student_info.txt", 'r') as f:
    students_text = f.read()

print(students_text)
type(students_text)
```

```
str
```

The nested dictionary is now printed as a string, and trying to access its keys or values (for example, students_text['Student 1']) will raise a TypeError.

This is where serialization comes in.

When dealing with more complex data types like dictionaries, data frames, and nested lists, serialization allows the user to preserve the object’s original state without losing any relevant information.
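To make the contrast concrete, here is a small sketch that saves the same kind of dictionary once as text via str() and once with Pickle, then reads both back. The file names are hypothetical and live in a temporary directory.

```python
import os
import pickle
import tempfile

students = {"Student 1": {"Name": "Alice", "Age": 10, "Grade": 4}}

with tempfile.TemporaryDirectory() as tmp:
    txt_path = os.path.join(tmp, "students.txt")
    pkl_path = os.path.join(tmp, "students.pkl")

    # Plain text: only the string representation is stored
    with open(txt_path, "w") as f:
        f.write(str(students))
    with open(txt_path) as f:
        from_text = f.read()

    # Pickle: the dictionary itself is serialized
    with open(pkl_path, "wb") as f:
        pickle.dump(students, f)
    with open(pkl_path, "rb") as f:
        from_pickle = pickle.load(f)

print(type(from_text).__name__, type(from_pickle).__name__)  # str dict

try:
    from_text["Student 1"]  # indexing a str with a str key fails
except TypeError as exc:
    print("TypeError:", exc)

print(from_pickle["Student 1"]["Name"])  # Alice
```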

Later in this article, we will learn to store this same dictionary in a file using Pickle and deserialize it using the library. You can read more about Python dictionary comprehension in a separate tutorial.

Introduction to Pickle in Python

Python's Pickle module implements a popular format used to serialize and deserialize Python objects. This format is native to Python, so pickled objects are not designed to be loaded by other programming languages.

Pickle comes with its own advantages and drawbacks compared to other serialization formats.

Advantages of using Pickle to serialize objects

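- Unlike JSON, which converts tuples to lists and cannot serialize datetime objects without extra code, Pickle can serialize almost every commonly used built-in Python data type and restores each value with its original type. In other words, it retains the exact state of the object, which JSON cannot do.

- Pickle is also a good choice when storing recursive structures, since it keeps track of the objects it has already serialized and writes each one only once, so self-references and shared references are preserved.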

- Pickle allows for flexibility when deserializing objects. You can easily save different variables into a Pickle file and load them back in a different Python session, recovering your data exactly the way it was without having to edit your code.
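The sketch below illustrates the first two advantages using only the standard library; the record and its values are made up for illustration.

```python
import json
import pickle
from datetime import datetime

record = {"point": (1, 2), "created": datetime(2024, 1, 15, 9, 30)}

# JSON turns tuples into lists and rejects datetime objects by default
print(json.loads(json.dumps({"point": (1, 2)})))  # {'point': [1, 2]}
try:
    json.dumps(record)
except TypeError as exc:
    print("JSON failed:", exc)

# Pickle restores both values with their original types
restored = pickle.loads(pickle.dumps(record))
print(restored == record, type(restored["point"]).__name__)  # True tuple

# Pickle also handles self-referencing structures
loop = [1, 2]
loop.append(loop)  # the list now contains itself
loop_copy = pickle.loads(pickle.dumps(loop))
print(loop_copy[2] is loop_copy)  # True: the reference is rebuilt, not duplicated
```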

Disadvantages of using Pickle

- Pickle is unsafe with untrusted data because unpickling can call arbitrary Python callables to construct objects. Pickle cannot tell the difference between a malicious callable and a harmless one, so loading a crafted file can execute arbitrary code. Only unpickle data from sources you trust.

- As mentioned previously, Pickle is a Python-specific module, and you may struggle to deserialize pickled objects when using a different language.

- According to multiple benchmarks, Pickle appears to be slower and produces larger serialized values than formats such as JSON and Apache Thrift.
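To see why unpickling untrusted data is risky, here is a harmless sketch: a class whose __reduce__ method tells Pickle to call a function (here the built-in len) during loading. A malicious file could name any importable callable instead. The second half follows the pattern the Python documentation describes for restricting which globals an Unpickler may load; the SAFE_BUILTINS allow-list and the class and function names are assumptions for this example, and such a restriction reduces the risk rather than removing it.

```python
import builtins
import io
import pickle


class Payload:
    """Illustrative class: unpickling it calls len('executed')."""

    def __reduce__(self):
        # (callable, args): Pickle will call len("executed") when loading
        return (len, ("executed",))


data = pickle.dumps(Payload())
print(pickle.loads(data))  # 8: len() ran during unpickling

SAFE_BUILTINS = {"range", "complex", "set", "frozenset"}  # hypothetical allow-list


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Allow only a few harmless builtins and refuse every other global
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(raw: bytes):
    return RestrictedUnpickler(io.BytesIO(raw)).load()


try:
    restricted_loads(data)
except pickle.UnpicklingError as exc:
    print("Blocked:", exc)
```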

Saving and Loading Objects with the Pickle dump() and load() Functions

Pickle uses the following functions for serializing and deserializing Python objects:

```python
pickle.dump(obj, file, protocol=None, *, fix_imports=True, buffer_callback=None)
pickle.dumps(obj, protocol=None, *, fix_imports=True, buffer_callback=None)
pickle.load(file, *, fix_imports=True, encoding='ASCII', errors='strict', buffers=None)
pickle.loads(data, /, *, fix_imports=True, encoding='ASCII', errors='strict', buffers=None)
```

The Pickle dump() and dumps() functions are used to serialize an object. The only difference between them is that dump() writes the data to a file, while dumps() returns it as a bytes object.

Similarly, load() reads pickled objects from a file, whereas loads() deserializes them from a bytes-like object.
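Here is a small sketch of all four functions: dumps() and loads() work with bytes in memory, while dump() and load() work with any binary file-like object. An io.BytesIO buffer stands in for a real file so the example needs no disk access, and writing several objects to one stream is an illustrative pattern rather than something this tutorial relies on.

```python
import io
import pickle

config = {"lr": 0.01, "epochs": 5}
labels = ("cat", "dog")

# dumps()/loads(): bytes in memory
raw = pickle.dumps(config)
print(pickle.loads(raw) == config)  # True

# dump()/load(): a binary file-like object (BytesIO instead of open(..., "wb"))
buffer = io.BytesIO()
pickle.dump(config, buffer)
pickle.dump(labels, buffer)  # several objects can share one stream

buffer.seek(0)  # rewind before reading
first = pickle.load(buffer)  # objects come back in the order they were written
second = pickle.load(buffer)
print(first, second)  # {'lr': 0.01, 'epochs': 5} ('cat', 'dog')
```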

In this tutorial, we will be using the dump() and load() functions to pickle Python objects to a file and unpickle them.

Serializing Python Data Structures with Pickle

Lists

First, let’s create a simple Python list:

```python
import pickle

student_names = ['Alice', 'Bob', 'Elena', 'Jane', 'Kyle']
```

Now, let's open a file in binary write mode and serialize the list to it using the dump() function:

```python
with open('student_file.pkl', 'wb') as f:  # open a file for writing in binary mode
    pickle.dump(student_names, f)  # serialize the list
```

We first created a file called "student_file.pkl". The extension does not have to be .pkl; you can name the file anything you'd like and it will still be created. However, it is good practice to use the .pkl extension so that you are reminded that this is a Pickle file.

Also, notice that we opened the file in "wb" mode, which stands for write binary. Pickle produces bytes rather than text, so the file must be opened in binary mode.
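A quick way to see why binary mode matters: pickle.dump() writes bytes, so passing it a file opened in text mode ("w") fails with a TypeError. The sketch uses a temporary directory and a hypothetical file name.

```python
import os
import pickle
import tempfile

student_names = ["Alice", "Bob", "Elena", "Jane", "Kyle"]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "student_file.pkl")

    # Text mode: the file object only accepts str, so dump() fails
    try:
        with open(path, "w") as f:
            pickle.dump(student_names, f)
        text_mode_ok = True
    except TypeError as exc:
        text_mode_ok = False
        print("Text mode failed:", exc)

    # Binary mode works
    with open(path, "wb") as f:
        pickle.dump(student_names, f)
    with open(path, "rb") as f:
        loaded = pickle.load(f)

print(loaded)  # ['Alice', 'Bob', 'Elena', 'Jane', 'Kyle']
```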

Then, we use the dump() function to store the “student_names” list in the file.

There is no need to call f.close() afterwards: the with statement closes the file automatically when the block exits.

Now, let's deserialize the file and print the list:

```python
with open('student_file.pkl', 'rb') as f:
    student_names_loaded = pickle.load(f)  # deserialize using load()

print(student_names_loaded)  # print student names
```

The output of the above code should be as follows:

```
['Alice', 'Bob', 'Elena', 'Jane', 'Kyle']
```

Notice that to deserialize the file, we need to use the "rb" mode, which stands for read binary. Then, we unpickle the object using the load() function, after which we can store the data in a different variable and use it as we see fit.

Let’s now check the data type of the list we just unpickled:

```python
type(student_names_loaded)
```

```
list
```

Great! We have preserved the original state and data type of this list.

NumPy Arrays

Now, let's try to serialize and deserialize a slightly more complex data type: NumPy arrays.

Let's first create a 10 by 10 array of ones:

```python
import numpy as np

numpy_array = np.ones((10, 10))  # 10x10 array
```

Then, just like we did before, let's call the dump() function to serialize this array to a file:

```python
with open('my_array.pkl', 'wb') as f:
    pickle.dump(numpy_array, f)
```

Finally, let's unpickle this array and check its shape and data type to ensure that it has retained its original state:

```python
with open('my_array.pkl', 'rb') as f:
    unpickled_array = pickle.load(f)

print('Array shape: ' + str(unpickled_array.shape))
print('Data type: ' + str(type(unpickled_array)))
```

You should get the following output:

```
Array shape: (10, 10)
Data type: <class 'numpy.ndarray'>
```

The unpickled object is a 10x10 NumPy array, with the same shape and type as the object we just serialized.
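Checking the shape and type is a good start. A slightly stricter check, sketched below with an illustrative array and an in-memory round-trip, also compares the element dtype and every value with np.array_equal().

```python
import pickle

import numpy as np

original = np.arange(12, dtype=np.float32).reshape(3, 4)

restored = pickle.loads(pickle.dumps(original))

print(restored.shape, restored.dtype)      # (3, 4) float32
print(np.array_equal(restored, original))  # True
print(restored is original)                # False: a new array was built
```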

pandas DataFrames

A data frame is an object that data scientists work with daily. The most popular way to load and save a Pandas dataframe is to read and write it as a csv file. Learn more about importing data in our pandas read_csv() tutorial.

However, reading and writing csv files is slower than binary serialization with Pickle and can become extremely time-consuming if the data frame is large.

Let's contrast the efficiency of saving and loading a pandas dataframe using Pickle versus csv by comparing the respective time taken.

First, let’s create a pandas dataframe with 100,000 rows of fake data:

```python
import pandas as pd
import numpy as np

# Set random seed
np.random.seed(123)

data = {'Column1': np.random.randint(0, 10, size=100000),
        'Column2': np.random.choice(['A', 'B', 'C'], size=100000),
        'Column3': np.random.rand(100000)}

# Create Pandas dataframe
df = pd.DataFrame(data)
```

Now, let's calculate the amount of time taken to save this dataframe as a csv file:

```python
import time

start = time.time()
df.to_csv('pandas_dataframe.csv')
end = time.time()
print(end - start)
```

```
0.19349145889282227
```

It took us 0.19 seconds to save a Pandas dataframe with 100,000 rows and three columns to a csv file.

Let’s see if using Pickle can help improve performance. The pandas library has a method called to_pickle() that allows us to serialize dataframes to pickle files in just one line of code:

```python
start = time.time()
df.to_pickle("my_pandas_dataframe.pkl")
end = time.time()
print(end - start)
```

```
0.0059659481048583984
```

It only took us about 6 milliseconds to save the same Pandas dataframe to a Pickle file, which is a significant performance improvement compared to saving it as a csv.
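Single time.time() measurements like these can vary a lot from run to run. A sketch of a more robust comparison uses timeit.repeat() and keeps the fastest run. It writes to a temporary directory and uses a smaller, made-up dataframe (10,000 rows), so the absolute numbers will differ from those above and from machine to machine.

```python
import os
import tempfile
import timeit

import numpy as np
import pandas as pd

rng = np.random.default_rng(123)
df = pd.DataFrame({
    "Column1": rng.integers(0, 10, size=10_000),
    "Column2": rng.choice(["A", "B", "C"], size=10_000),
    "Column3": rng.random(10_000),
})

with tempfile.TemporaryDirectory() as tmp:
    csv_path = os.path.join(tmp, "frame.csv")
    pkl_path = os.path.join(tmp, "frame.pkl")

    # Best of 5 runs, each run writing the file 3 times
    csv_time = min(timeit.repeat(lambda: df.to_csv(csv_path), number=3, repeat=5)) / 3
    pkl_time = min(timeit.repeat(lambda: df.to_pickle(pkl_path), number=3, repeat=5)) / 3

print(f"to_csv:    {csv_time * 1000:.2f} ms per write")
print(f"to_pickle: {pkl_time * 1000:.2f} ms per write")
```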

Now, let’s read the file back to Pandas and see if loading a Pickle file offers any performance benefits as opposed to simply reading a csv file:

```python
# Reading the csv file into Pandas:
start1 = time.time()
df_csv = pd.read_csv("pandas_dataframe.csv")
end1 = time.time()
print("Time taken to read the csv file: " + str(end1 - start1) + "\n")

# Reading the Pickle file into Pandas:
start2 = time.time()
df_pkl = pd.read_pickle("my_pandas_dataframe.pkl")
end2 = time.time()
print("Time taken to read the Pickle file: " + str(end2 - start2))
```

The above code should render output similar to the following:

```
Time taken to read the csv file: 0.00677490234375

Time taken to read the Pickle file: 0.0009608268737792969
```

It took about 7 milliseconds to read the csv file into Pandas, and under 1 millisecond to read the Pickle file.

Although this difference may appear minor, serializing large Pandas dataframes with Pickle can result in considerable time savings. Pickle also preserves the data type of each column, which a csv round-trip does not always do, and the resulting file is often smaller than the equivalent csv.
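The point about column types can be checked directly. In this sketch, with a made-up two-column dataframe, a datetime column comes back from a csv round-trip as strings rather than a datetime type (unless you pass parse_dates to read_csv()), whereas the Pickle round-trip keeps it as a datetime column.

```python
import os
import tempfile

import pandas as pd
from pandas.api.types import is_datetime64_any_dtype

df = pd.DataFrame({
    "day": pd.to_datetime(["2024-01-01", "2024-01-02"]),
    "count": [3, 5],
})

with tempfile.TemporaryDirectory() as tmp:
    csv_path = os.path.join(tmp, "frame.csv")
    pkl_path = os.path.join(tmp, "frame.pkl")

    df.to_csv(csv_path, index=False)
    df.to_pickle(pkl_path)

    from_csv = pd.read_csv(csv_path)
    from_pkl = pd.read_pickle(pkl_path)

print(is_datetime64_any_dtype(from_csv["day"]))  # False: dates came back as strings
print(is_datetime64_any_dtype(from_pkl["day"]))  # True
print(from_pkl.equals(df))                       # True
```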

Dictionaries

Finally, let’s serialize the dictionary that we wrote to a text file in the first section of the tutorial:

```python
students = {
    'Student 1': {
        'Name': 'Alice', 'Age': 10, 'Grade': 4,
    },
    'Student 2': {
        'Name': 'Bob', 'Age': 11, 'Grade': 5
    },
    'Student 3': {
        'Name': 'Elena', 'Age': 14, 'Grade': 8
    }
}
```

Recall that when we saved this dictionary as a text file, we had to convert it to a string and lost its original state.

Let’s now serialize it using Pickle and read it back to ensure that it still contains all the properties of a Python dictionary:

```python
# serialize the dictionary to a pickle file
with open("student_dict.pkl", "wb") as f:
    pickle.dump(students, f)

# deserialize the dictionary and print it out
with open("student_dict.pkl", "rb") as f:
    deserialized_dict = pickle.load(f)

print(deserialized_dict)
```

You should get the following output:

```
{'Student 1': {'Name': 'Alice', 'Age': 10, 'Grade': 4}, 'Student 2': {'Name': 'Bob', 'Age': 11, 'Grade': 5}, 'Student 3': {'Name': 'Elena', 'Age': 14, 'Grade': 8}}
```

Let's now check this variable's type:

```python
type(deserialized_dict)
```

```
dict
```

Let's try to access some information about the first student in this dictionary:

```python
print(
    "The first student's name is "
    + deserialized_dict["Student 1"]["Name"]
    + " and she is "
    + str(deserialized_dict["Student 1"]["Age"])
    + " years old."
)
```

```
The first student's name is Alice and she is 10 years old.
```

Great! The dictionary retained all its original properties and can be accessed just like it was before serialization. Check out our Python dictionary comprehension tutorial to find out more.

Serializing Machine Learning Models with Pickle

Training a machine learning model is a time-consuming process that can take hours, and sometimes even many days. It simply is not feasible to retrain an algorithm from scratch when you need to reuse or transfer it to a different environment.

If you’d like to learn about best practices when building machine learning algorithms, you can take our Designing Machine Learning Workflows in Python course.

Pickle allows you to serialize machine learning models in their existing state, making it possible to use them again as needed.

In this section, we will learn to serialize a machine learning model with Pickle.

To do this, let’s first generate some fake data and build a linear regression model with the Scikit-Learn library:

```python
from sklearn.linear_model import LinearRegression
from sklearn.datasets import make_regression

# generate regression dataset
X, y = make_regression(n_samples=100, n_features=3, noise=0.1, random_state=1)

# train regression model
linear_model = LinearRegression()
linear_model.fit(X, y)
```

Now, let's print out some summary model parameters:

```python
# summary of the model
print('Model intercept :', linear_model.intercept_)
print('Model coefficients :', linear_model.coef_)
print('Model score :', linear_model.score(X, y))
```

```
Model intercept : -0.010109549594702116
Model coefficients : [44.18793068 98.97389468 58.17121618]
Model score : 0.9999993081899219
```

We can then serialize this model using Pickle's dump() function:

```python
with open("linear_regression.pkl", "wb") as f:
    pickle.dump(linear_model, f)
```

The model is now saved as a Pickle file. Let's deserialize it using the load() function:

```python
with open("linear_regression.pkl", "rb") as f:
    unpickled_linear_model = pickle.load(f)
```

The serialized model is now loaded into the "unpickled_linear_model" variable. Let's check this model's parameters to ensure that it is the same as the one we initially created:

```python
# summary of the model
print('Model intercept :', unpickled_linear_model.intercept_)
print('Model coefficients :', unpickled_linear_model.coef_)
print('Model score :', unpickled_linear_model.score(X, y))
```

You should get the following output:

```
Model intercept : -0.010109549594702116
Model coefficients : [44.18793068 98.97389468 58.17121618]
Model score : 0.9999993081899219
```

Great! The parameters of the model we just unpickled are the same as those of the model we initially created.

We can now use this model to make predictions on a test dataset or transfer it to a different environment. Keep in mind that scikit-learn recommends loading a pickled model with the same scikit-learn version that was used to save it.
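Beyond comparing the learned parameters, a simple sanity check is to confirm that the original and unpickled models produce the same predictions on new data. This sketch regenerates the same training data, uses pickle.dumps() and pickle.loads() in memory instead of a file, and draws random inputs purely for illustration.

```python
import pickle

import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression

X, y = make_regression(n_samples=100, n_features=3, noise=0.1, random_state=1)
model = LinearRegression().fit(X, y)

restored_model = pickle.loads(pickle.dumps(model))

# New, unseen inputs (random values used only for this check)
X_new = np.random.default_rng(0).normal(size=(5, 3))
same = np.allclose(model.predict(X_new), restored_model.predict(X_new))
print(same)  # True
```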

Increasing Python Pickle Performance For Large Objects

Pickle is often an efficient way to serialize Python objects, although, as noted above, benchmark comparisons with formats such as JSON, XML, and HDF5 vary with the data and the workload.

When dealing with extremely large data structures or huge machine learning models, however, Pickle can slow down considerably, and serialization can become a bottleneck in your workflow.

Here are some ways you can reduce the time taken to save and load Pickle files:

Use the ‘protocol’ argument

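The default protocol used when saving Pickle files is stored in pickle.DEFAULT_PROTOCOL and is 4 in most current Python 3 releases, so check it on your own interpreter. Protocol 4 can be read by Python 3.4 and later, while older protocols such as 0 are more widely compatible but less efficient. When loading, Pickle detects the protocol automatically, so you never need to specify it.

However, if you want to speed up your workflow, you can pass protocol=pickle.HIGHEST_PROTOCOL, which selects the newest protocol your Python version supports. Newer protocols are generally more efficient, but files written with them may not be readable by older Python versions.

To compare the performance of the default protocol and the highest protocol, let's first serialize a dictionary of NumPy arrays (the same kind of data we used to build the dataframe earlier) using the default protocol. This is the protocol Pickle uses if no protocol is explicitly specified: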
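The sketch below prints the protocol constants for your interpreter (the exact values depend on your Python version) and shows that pickle.loads() reads data written with any protocol without being told which one was used. The data dictionary is made up for illustration.

```python
import pickle

print("default:", pickle.DEFAULT_PROTOCOL)
print("highest:", pickle.HIGHEST_PROTOCOL)

data = {"ids": list(range(1000)), "name": "example"}

sizes = {}
for protocol in range(pickle.HIGHEST_PROTOCOL + 1):
    raw = pickle.dumps(data, protocol=protocol)
    sizes[protocol] = len(raw)
    # No protocol argument needed here: loads() detects it from the data
    print(f"protocol {protocol}: {len(raw)} bytes, round-trip ok: {pickle.loads(raw) == data}")
```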

```python
import pickle
import time
import numpy as np

# Set random seed
np.random.seed(100)

data = {'Column1': np.random.randint(0, 10, size=100000),
        'Column2': np.random.choice(['A', 'B', 'C'], size=100000),
        'Column3': np.random.rand(100000)}

# serialize to a file
start = time.time()
with open("df1.pkl", "wb") as f:
    pickle.dump(data, f)
end = time.time()
print(end - start)
```

```
0.006001710891723633
```

It took around 6 milliseconds for us to serialize the data using Pickle's default protocol.

Now, let's pickle the same data using the highest protocol:

```python
start = time.time()
with open("df2.pkl", "wb") as f:
    pickle.dump(data, f, protocol=pickle.HIGHEST_PROTOCOL)
end = time.time()
print(end - start)
```

```
0.0030384063720703125
```

With the highest protocol, serialization took roughly half as long in this run. Since single timings like these are noisy, it is worth repeating the measurement on your own data before drawing conclusions.

A Note on ‘cPickle’

In Python 2, ‘cPickle’ was a separate module written in C that was much faster than the pure-Python ‘pickle’ module.

In Python 3, ‘cPickle’ has been renamed to ‘_pickle’, and the standard ‘pickle’ module automatically uses this C implementation whenever it is available, so there is no need to import it separately. You can still import the private ‘_pickle’ module directly:

```python
import _pickle as cPickle

start = time.time()
with open("df3.pkl", "wb") as f:
    cPickle.dump(data, f)
end = time.time()
print(end - start)
```

```
0.004027366638183594
```

Serialization with ‘_pickle’ took approximately 4 milliseconds, compared with about 6 milliseconds for ‘pickle’ with the default protocol. Because both calls run the same C code, this gap most likely reflects run-to-run timing noise rather than a real speedup.

Only Serialize What You Need

Even with workarounds to make serialization faster, the process can still be very slow for large objects.

To improve performance, you can break the data structure down and only serialize necessary subsets.

When working with dictionaries, for instance, you can specify key-value pairs that you want to access again. Reduce the size of the dictionary before serializing it since this will cut down the object’s complexity and speed up the process significantly.
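As a rough sketch of this idea, a dictionary comprehension can keep only the entries you need before pickling, and comparing the length of the pickled bytes shows how much smaller the payload gets. The results dictionary, its keys, and the KEEP set are made up for illustration.

```python
import pickle

import numpy as np

results = {
    "model_name": "baseline",
    "metrics": {"rmse": 0.42, "r2": 0.91},
    "raw_predictions": np.random.default_rng(0).random(100_000),  # large, not needed later
}

KEEP = {"model_name", "metrics"}  # hypothetical: the keys we actually reuse
subset = {key: value for key, value in results.items() if key in KEEP}

full_size = len(pickle.dumps(results))
subset_size = len(pickle.dumps(subset))
print(f"full: {full_size:,} bytes, subset: {subset_size:,} bytes")
```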

Serialization with Pickle in Python: Next Steps

Congratulations! You have learned a wide range of topics related to serialization in Python and the use of the Pickle library.

You should now have a solid understanding of what serialization is, how to use Pickle to serialize Python data structures, and how to optimize Pickle’s performance using different arguments and modules.

Here are some steps you can take to improve your understanding of serialization and leverage it to enhance your data science workflows:

- Learn About New Serialization Formats. While we only covered the Pickle module in this tutorial, it isn’t necessarily the best serialization format to use in every scenario. It is worth learning about other formats such as JSON, XML, and HDF5, so that you know which one to pick for different use cases.

- Try it Yourself. Apply the concepts taught in this tutorial to your data science workflows. The next time you create a new data structure or store the output of a calculation in a variable, serialize it for later use instead of running all your code again and again.

- Take an Online Course. To optimize your data science workflows and perform tasks like serialization efficiently, it is imperative to have a strong understanding of Python data structures and objects. To achieve this, you can take our Data Types for Data Science in Python course.
