1. Inspecting transfusion.data file

Blood transfusion saves lives - from replacing lost blood during major surgery or after a serious injury to treating various illnesses and blood disorders. Ensuring that enough blood is in supply whenever it is needed is a serious challenge for health professionals. According to WebMD, "about 5 million Americans need a blood transfusion every year".

Our dataset is from a mobile blood donation vehicle in Taiwan. The Blood Transfusion Service Center drives to different universities and collects blood as part of a blood drive. We want to predict whether or not a donor will give blood the next time the vehicle comes to campus.

The data is stored in datasets/transfusion.data and is structured according to the RFMTC marketing model (a variation of RFM). We'll explore what that means later in this notebook. First, let's inspect the data.

# Print out the first 5 lines from the transfusion.data file

!head -n 5 transfusion.data

2. Loading the blood donations data

We now know that we are working with a typical CSV file (i.e., the delimiter is a comma, the first line is a header, etc.). We can proceed to load the data into memory.

# Import pandas

import pandas as pd

# Read in dataset

transfusion = pd.read_csv('transfusion.data')

# Print out the first rows of our dataset

# ... YOUR CODE FOR TASK 2 ...

transfusion.head()

3. Inspecting transfusion DataFrame

Let's briefly return to our discussion of the RFM model. RFM stands for Recency, Frequency and Monetary Value, and it is commonly used in marketing to identify your best customers. In our case, the customers are blood donors.

RFMTC is a variation of the RFM model. Below is a description of what each column means in our dataset:

- R (Recency - months since the last donation)

- F (Frequency - total number of donations)

- M (Monetary - total blood donated in c.c.)

- T (Time - months since the first donation)

- a binary variable representing whether he/she donated blood in March 2007 (1 stands for donating blood; 0 stands for not donating blood)
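To make the first four columns concrete, here is a minimal sketch of how R, F, M and T could be derived from a per-donation log. The log, its column names (`donor_id`, `months_ago`) and the fixed 250 c.c. per donation are all assumptions made for illustration; they are not taken from transfusion.data.

```python
import pandas as pd

# Hypothetical donation log: one row per donation (not from transfusion.data).
log = pd.DataFrame({
    'donor_id': [1, 1, 1, 2],
    'months_ago': [2, 10, 20, 5],  # months before the reference date
})

CC_PER_DONATION = 250  # assumed volume per donation, in c.c.

# Collapse the log to one row per donor
rfmt = log.groupby('donor_id')['months_ago'].agg(
    recency='min',      # R: months since the most recent donation
    frequency='count',  # F: total number of donations
    time='max',         # T: months since the first donation
)

# M: total blood donated, under the fixed-volume assumption above
rfmt['monetary'] = rfmt['frequency'] * CC_PER_DONATION

print(rfmt)
```

In this toy log, donor 1 gave blood three times, most recently 2 months ago and first 20 months ago, so R = 2, F = 3, T = 20 and M = 750.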

It looks like every column in our DataFrame has the numeric type, which is exactly what we want when building a machine learning model. Let's verify our hypothesis.

# Print a concise summary of transfusion DataFrame

# ... YOUR CODE FOR TASK 3 ...

transfusion.info()

4. Creating target column

We are aiming to predict the value in the "whether he/she donated blood in March 2007" column. Let's rename it to target so that it's more convenient to work with.

# Rename target column as 'target' for brevity

transfusion.rename(
    columns={'whether he/she donated blood in March 2007': 'target'},
    inplace=True
)

# Print out the first 2 rows

# ... YOUR CODE FOR TASK 4 ...

transfusion.head(2)

5. Checking target incidence

We want to predict whether or not the same donor will give blood the next time the vehicle comes to campus. The model for this is a binary classifier, meaning that there are only 2 possible outcomes:

- 0 - the donor will not give blood

- 1 - the donor will give blood

Target incidence is defined as the number of cases of each individual target value in a dataset. That is, how many 0s are there in the target column compared to how many 1s? Target incidence gives us an idea of how balanced (or imbalanced) our dataset is.
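As a small illustration of the definition, the sketch below computes target incidence on a made-up four-row target column (`toy_target` is a hypothetical name and the values are not from the real data), first as raw counts and then as proportions.

```python
import pandas as pd

# Toy target column (illustrative values, not the real data)
toy_target = pd.Series([0, 0, 0, 1], name='target')

# Raw counts of each target value
counts = toy_target.value_counts()

# The same counts expressed as proportions of all rows
proportions = toy_target.value_counts(normalize=True).round(3)

print(counts)
print(proportions)
```

Here three of the four rows are 0s, so the 0 incidence is 0.75 and the 1 incidence is 0.25.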

# Print target incidence proportions, rounding output to 3 decimal places

# ... YOUR CODE FOR TASK 5 ...

transfusion.target.value_counts(normalize=True).round(3)

6. Splitting transfusion into train and test datasets

We'll now use the train_test_split() function to split the transfusion DataFrame.

Target incidence informed us that 0s appear 76% of the time in our dataset. We want to keep the same structure in the train and test datasets, i.e., both datasets must have a 0 target incidence of 76%. This is very easy to do with the train_test_split() function from the scikit-learn library - all we need to do is specify the stratify parameter. In our case, we'll stratify on the target column.
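To see what stratification does in isolation, here is a minimal sketch on a synthetic frame with a 75/25 class split. The frame `toy`, its `feature` column and the chosen `test_size` are assumptions for illustration only; the notebook's own split follows.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Synthetic frame with a 75/25 class split (illustrative, not the real data)
toy = pd.DataFrame({
    'feature': range(100),
    'target': [0] * 75 + [1] * 25,
})

# Stratify on the target so both parts keep the original class proportions
X_tr, X_te, y_tr, y_te = train_test_split(
    toy.drop(columns='target'),
    toy['target'],
    test_size=0.2,
    random_state=0,
    stratify=toy['target'],
)

# Class proportions in each part
print(y_tr.value_counts(normalize=True))
print(y_te.value_counts(normalize=True))
```

Without the stratify argument, the proportions in the two parts would depend on the random shuffle and could drift away from the overall incidence, especially in a small test set.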

# Import train_test_split function

from sklearn.model_selection import train_test_split

# Split transfusion DataFrame into

# X_train, X_test, y_train and y_test datasets,

# stratifying on the `target` column

X_train, X_test, y_train, y_test = train_test_split(
    transfusion.drop(columns='target'),
    transfusion.target,
    test_size=0.25,
    random_state=42,
    stratify=transfusion.target
)

# Print out the first 2 rows of X_train

# ... YOUR CODE FOR TASK 6 ...

X_train.head(2)

7. Selecting model using TPOT

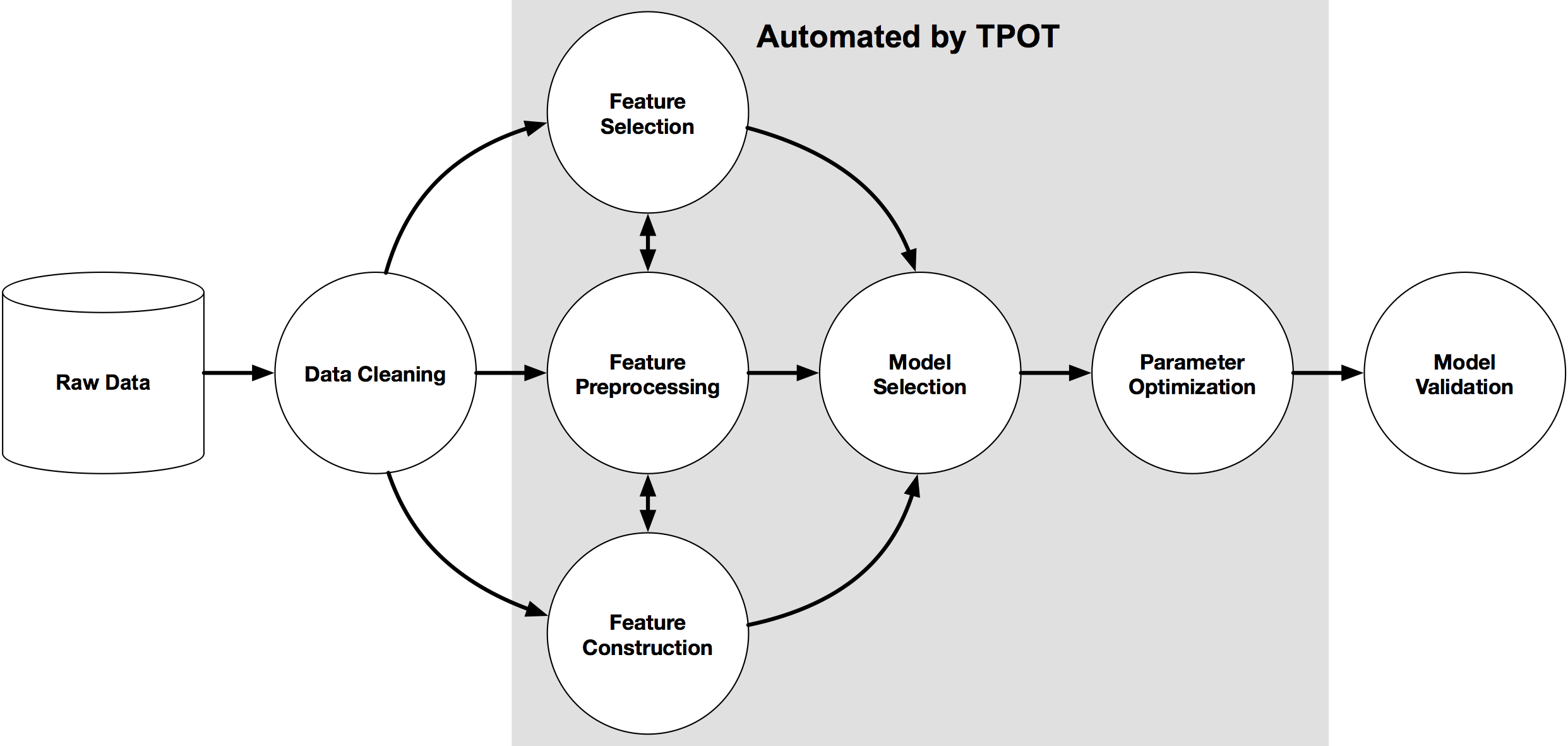

TPOT is a Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

TPOT will automatically explore hundreds of possible pipelines to find the best one for our dataset. Note, the outcome of this search will be a scikit-learn pipeline, meaning it will include any pre-processing steps as well as the model.

We are using TPOT to help us zero in on one model that we can then explore and optimize further.
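For readers unfamiliar with scikit-learn pipelines, the sketch below builds one by hand on synthetic data, scores it with ROC AUC, and lists its steps. It is only meant to show the kind of object a pipeline search returns and how it can be evaluated; the scaler, the step names and the synthetic data are assumptions for illustration, not what TPOT will select for our dataset.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic binary classification data (illustrative only)
X, y = make_classification(n_samples=200, n_features=4, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, random_state=0, stratify=y)

# A pipeline chains pre-processing steps and a final model into one estimator
pipe = Pipeline([
    ('scale', StandardScaler()),
    ('model', LogisticRegression()),
])
pipe.fit(X_tr, y_tr)

# ROC AUC is computed from the predicted probability of the positive class
auc = roc_auc_score(y_te, pipe.predict_proba(X_te)[:, 1])
print(f'AUC score: {auc:.4f}')

# Each step is a (name, estimator) pair
for idx, (name, step) in enumerate(pipe.steps, start=1):
    print(f'{idx}. {name}: {step}')
```

Because the pre-processing lives inside the pipeline, it is fitted on the training data only and then reapplied to the test data at prediction time.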

# Import TPOTClassifier and roc_auc_score

from tpot import TPOTClassifier

from sklearn.metrics import roc_auc_score

# Instantiate TPOTClassifier

tpot = TPOTClassifier(
    generations=5,
    population_size=20,
    verbosity=2,
    scoring='roc_auc',
    random_state=42,
    disable_update_check=True,
    config_dict='TPOT light'
)

tpot.fit(X_train, y_train)

# AUC score for tpot model

tpot_auc_score = roc_auc_score(y_test, tpot.predict_proba(X_test)[:, 1])

print(f'\nAUC score: {tpot_auc_score:.4f}')

# Print best pipeline steps

print('\nBest pipeline steps:', end='\n')

for idx, (name, transform) in enumerate(tpot.fitted_pipeline_.steps, start=1):
    # Print idx and transform
    print(f'{idx}. {transform}')

8. Checking the variance

TPOT picked LogisticRegression as the best model for our dataset with no pre-processing steps, giving us an AUC score of 0.7850. This is a great starting point. Let's see if we can make it better.

One of the assumptions for linear models is that the data and the features we are giving it are related in a linear fashion, or can be measured with a linear distance metric. If a feature in our dataset has a high variance that's orders of magnitude greater than the other features, this could impact the model's ability to learn from other features in the dataset.

Correcting for high variance is called normalization. It is one of the possible transformations you can apply before training a model. Let's check the variance to see if such a transformation is needed.
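As a rough illustration of the idea, the sketch below compares per-column variance on a made-up frame and then applies a log transform to the high-variance column. The frame, its column names and the choice of a log transform are assumptions for illustration; other normalization techniques exist, and this is not necessarily the one best suited to our data.

```python
import numpy as np
import pandas as pd

# Toy features on very different scales (illustrative values, not the real data)
toy = pd.DataFrame({
    'small_scale': [1.0, 2.0, 3.0, 4.0],
    'large_scale': [250.0, 1000.0, 4000.0, 12500.0],
})

# Variance of each column before any transformation
variances = toy.var().round(3)
print(variances)

# Replace the high-variance column with its natural logarithm
toy_normed = toy.copy()
toy_normed['large_scale_log'] = np.log(toy_normed['large_scale'])
toy_normed = toy_normed.drop(columns='large_scale')

# Variance after the transformation is on a comparable scale
print(toy_normed.var().round(3))
```

The log transform compresses large values much more than small ones, which brings the variance of the transformed column down to the same order of magnitude as the other feature.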