This tutorial is a valued contribution from our community and has been edited for clarity and accuracy by DataCamp.


As a data practitioner, you are likely well aware of the importance of building a robust data cleaning and preparation pipeline in any machine learning project. However, when dealing with textual data, the significance of such a pipeline is even greater due to the unique challenges that textual data presents. Problems such as noisy data, spelling errors, abbreviations, slang, and grammatical errors can all negatively impact the performance of machine learning models.

To ensure that the textual data used in machine learning models is accurate, consistent, and free from errors, a well-designed data cleaning and preparation pipeline is crucial. This typically involves a series of tasks such as text normalization, tokenization, stopword removal, stemming, lemmatization, and data masking.

Popular libraries such as NLTK, gensim, and spaCy offer a wide range of tools for text processing and cleaning, but some common tasks, such as character normalization and data masking, still take significant time and effort to solve with them.

This is where Textacy shines. Textacy is a Python library, built on top of spaCy, that offers simple, ready-made functions for a range of common problems that arise when dealing with textual data. While other libraries offer similar functionality, Textacy's pre-defined functions for specific tasks, such as character normalization and data masking, set it apart in this context.

Textacy Character Normalization

Character normalization is the process of converting text data into a standard format, which can be particularly important when dealing with multilingual text data.

Consider the following sample text:

```python
text = "The café “Saint-Raphaël” is loca-\nted on Cote dʼAzur."
```

Accented characters are a common issue in text normalization that can significantly impact the accuracy of machine learning models.

The problem arises because people do not consistently use accented characters, which can lead to inconsistencies in the data.

For example, the tokens "Saint-Raphaël" and "Saint-Raphael" may refer to the same entity, but without normalization they will not be recognized as identical. In addition, texts often contain words split by a hyphen at a line break (as "located" is in the sample), and typographic quotation marks and apostrophes like the ones in the sample can cause problems for tokenization. For all of these issues, it makes sense to normalize the text: rejoin hyphenated words and replace accents and fancy characters with ASCII equivalents.
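To make the mismatch concrete, here is a minimal stdlib-only sketch (it does not use Textacy) showing that the two spellings compare unequal, and that even two strings that render identically can differ in their underlying Unicode code points. The helper `strip_accents` is hypothetical; it is a simplified take on the idea behind accent removal: decompose each character (NFD) and drop the combining marks.

```python
import unicodedata


def strip_accents(s: str) -> str:
    """Hypothetical helper: decompose characters (NFD), then drop combining marks."""
    decomposed = unicodedata.normalize("NFD", s)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


accented = "Saint-Rapha\u00ebl"   # "ë" as a single precomposed code point
combining = "Saint-Raphae\u0308l"  # "e" followed by a combining diaeresis
plain = "Saint-Raphael"

print(accented == plain)      # False: two different tokens for a model
print(accented == combining)  # False, although both render as "Saint-Raphaël"

# NFC composition unifies the two Unicode spellings of "ë"
print(unicodedata.normalize("NFC", combining) == accented)  # True

# Stripping accents maps all three variants to one ASCII token
print({strip_accents(t) for t in (accented, combining, plain)})  # {'Saint-Raphael'}
```

The two steps correspond to two separate concerns: unifying Unicode representations keeps accents but makes equivalent spellings comparable, while accent removal deliberately discards them.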

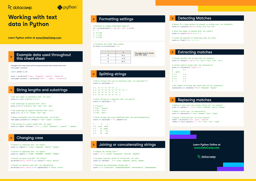

We will use Textacy for that purpose because it offers a collection of pre-defined functions that solve these problems easily and efficiently. The following table shows a selection of Textacy’s preprocessing functions; they operate directly on plain strings, so they can be used without first processing the text with spaCy.

| Function | Description |
|---|---|
| `normalize_hyphenated_words` | Reassembles words that were split by a hyphen at a line break |
| `normalize_quotation_marks` | Replaces all kinds of fancy quotation marks with an ASCII equivalent |
| `normalize_unicode` | Unifies different Unicode representations of the same character (e.g., accented characters) |
| `remove_accents` | Replaces accented characters with their unaccented ASCII equivalents |
| `replace_emails` | Replaces email addresses with `__EMAIL__` |
| `replace_urls` | Replaces URLs with `__URL__` |
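The first two functions in the table are essentially pattern substitutions. As a rough, stdlib-only illustration of the idea (not Textacy's actual implementation), the sketch below rejoins words hyphenated across a line break and maps typographic quotes to ASCII. The regex, the quote mapping, and the function names `rejoin_hyphenated` and `ascii_quotes` are simplified, hypothetical assumptions.

```python
import re

# Simplified assumption: a word of 2+ word characters, a hyphen, a line
# break (optionally surrounded by spaces or tabs), then the rest of the word
HYPHENATED = re.compile(r"(\w{2,})-[ \t]*\n[ \t]*(\w+)")

# Simplified assumption: only a few common typographic quotes and apostrophes
QUOTES = str.maketrans({
    "\u201c": '"',  # left double quotation mark
    "\u201d": '"',  # right double quotation mark
    "\u2018": "'",  # left single quotation mark
    "\u2019": "'",  # right single quotation mark
    "\u02bc": "'",  # modifier letter apostrophe
})


def rejoin_hyphenated(text: str) -> str:
    """Hypothetical helper: join words split by a hyphen at a line break."""
    return HYPHENATED.sub(r"\1\2", text)


def ascii_quotes(text: str) -> str:
    """Hypothetical helper: replace typographic quotes with ASCII equivalents."""
    return text.translate(QUOTES)


# The sample text from above, with the fancy characters written as escapes
sample = "The café \u201cSaint-Raphaël\u201d is loca-\nted on Cote d\u02bcAzur."
print(ascii_quotes(rejoin_hyphenated(sample)))
# The café "Saint-Raphaël" is located on Cote d'Azur.
```

Note that the accents survive, since accent handling is a separate step, and that "Saint-Raphaël" keeps its hyphen because no line break follows it. Textacy's own functions are likely to cover more edge cases than this sketch, which is why the rest of this section uses them directly.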

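```python
import textacy.preprocessing as tprep

# Sample text with typographic quotes, accented characters, a modifier-letter
# apostrophe (ʼ), and a word hyphenated across a line break
text = "The café “Saint-Raphaël” is loca-\nted on Cote dʼAzur."


def normalize(text):
    # Function names follow the textacy API used throughout this tutorial;
    # newer textacy releases group them into submodules instead
    # (e.g. tprep.normalize.hyphenated_words, tprep.remove.accents).
    text = tprep.normalize_hyphenated_words(text)  # "loca-\nted" -> "located"
    text = tprep.normalize_quotation_marks(text)   # “ ” ʼ -> " " '
    text = tprep.normalize_unicode(text)           # unify Unicode representations
    text = tprep.remove_accents(text)              # "é" -> "e", "ë" -> "e"
    return text
```

The `normalize` function chains Textacy's pre-defined preprocessing functions, so it tackles the normalization problem with minimal effort.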

```python
print(normalize(text))
```

Out:

```
The cafe "Saint-Raphael" is located on Cote d'Azur.
```

Textacy Data Masking

Textual data often contains not only ordinary words but also several kinds of identifiers, such as URLs, email addresses, or phone numbers. Sometimes these items are exactly what we want to analyze. In many cases, though, it may be better to remove or mask this information, either because it is irrelevant to the task or for privacy reasons.

Textacy has some convenient replace functions for data masking, as shown in the table above. These replace functions are pre-defined, making it easy to mask sensitive or irrelevant information without the need to write custom regular expression functions.
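For comparison, here is roughly what a hand-rolled version looks like with Python's `re` module. This is a minimal sketch under simplifying assumptions: the patterns and the `mask_identifiers` name are hypothetical, the placeholder tokens simply mirror the ones listed in the table above, and the patterns are far less thorough than a library's (they miss, for example, URLs without a scheme such as `spacy.io`, and `\S+` swallows trailing punctuation after a URL).

```python
import re

# Simplified, hypothetical patterns; real-world URLs and emails are messier
URL_PATTERN = re.compile(r"https?://\S+")
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def mask_identifiers(text: str) -> str:
    """Hypothetical helper: replace URLs and email addresses with placeholder tokens."""
    text = URL_PATTERN.sub("__URL__", text)
    text = EMAIL_PATTERN.sub("__EMAIL__", text)
    return text


print(mask_identifiers("Check out https://spacy.io/usage/spacy-101"))
# Check out __URL__
print(mask_identifiers("Write to jane.doe@example.com for details."))
# Write to __EMAIL__ for details.
```

Every edge case left out here is one you would have to handle yourself, which is the effort the pre-defined replace functions save.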

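```python
import textacy.preprocessing as tprep

text = "Check out https://spacy.io/usage/spacy-101"
print(tprep.replace_urls(text))  # mask every URL with a placeholder token
```

Out:

```
Check out __URL__
```

Summary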

In this article, we showed how Textacy can simplify preprocessing for textual data. With its range of built-in functions, Textacy makes it easy to handle common preprocessing challenges such as character normalization and data masking.

By streamlining preprocessing, Textacy frees you to focus on the more complex and challenging aspects of natural language processing. Whether you’re working on sentiment analysis, topic modeling, or any other NLP task, Textacy can help you get your data ready for analysis quickly and efficiently.

So, next time you’re faced with a preprocessing challenge, consider using Textacy to simplify your workflow and save yourself some valuable time and effort.

If you found this guide on Textacy helpful and you're looking to deepen your understanding of text data preprocessing and natural language processing, consider enrolling in DataCamp's Natural Language Processing Fundamentals in Python course.