Creating code is an essential part of many data professions. But creating code that functions is only half the job. The code also needs to be clear, easy to hand off, and robust to disturbances. By following a few coding guidelines in your projects, you can save yourself time restructuring your code later on and make your collaborators happy, too.

Here, we explore some of the coding best practices and guidelines that can help make your code clearer and more accessible.

Code Best Practices: Structure and Organization

A clear structure provides more readability to your code, making it easier to debug and share. There are several things you can do while writing your code to make the structure more clear and organized.

Choose meaningful variable and function names

When choosing names for variables and functions, it’s important to choose names that are relevant and meaningful.

For example, let’s assume you are creating a program to handle bank account information, and you need a variable to hold the account number. You may be tempted to call this variable “number” or “n”. However, those are not very informative names for someone who may be looking at your code for the first time. The name “account_number” provides much more information and can be easier to follow later in the code.

Now imagine you find the following equation halfway through a long stretch of code. Can you tell what this equation is doing?

ab = pb + d - w

This may be a challenging equation to come across during a code review. Consider this alternative.

account_balance = previous_balance + deposit - withdrawal

With more informative variable names, it is much less frustrating to follow the logic in a piece of code. This same concept applies to function names. A function called “name_change” is much more informative than “change”, “update”, or “nc”.

Camel case vs snake case

There are two generally accepted conventions for creating variable or function names: camel case and snake case. Camel case uses capital letters to separate words in a variable name. Snake case uses underscores to separate words in variables. For example, we would have the variable name “accountNumber” in camel case and “account_number” in snake case.

Which convention you use depends on your personal preference, your company’s coding standards, and the programming language you are using. However, whichever case you choose, it is important to stick with it throughout your entire project. Switching between different naming conventions looks sloppy and can be visually confusing.

Use comments and whitespace effectively

An easy way to make your code more readable is to add descriptive comments throughout. Good commenting will ensure your code is decipherable by someone else. You should add comments to explain what each section of code is doing, especially any complex equations or functions. You may also want to add comments to variable definitions, to give credit to any copied code, to include a reference to source data, or to leave yourself “to do” notes within the code.

When leaving yourself “to do” notes, consider starting the comment with “TODO”. This capitalization will stand out visually, and it is easily searchable, so you can find all the notes you left for yourself.

Comments are used to make the code clearer and more understandable, not to make up for badly structured code. They should be clear and consistent and enhance well-structured code blocks.

Whitespace is also useful to visually format your code. Think of whitespace like paragraphs. Paragraphs help to break up large chunks of text so you can quickly scan it. Similarly, adding whitespace strategically in your code makes it easier to scan through the code to find bugs and follow what it’s doing. Consider adding space between different sections or modules.

Consider the following examples:

product_price=materials_cost+manufacturing_cost+shipping_cost
state_tax=product_price*state_tax_rate(state)
federal_tax=product_price*federal_tax_rate
total_tax=state_tax+federal_tax
total_cost=product_price+total_tax

In this first example, the text is squished together and challenging to decipher. However, by separating out the content and using comments and whitespace, we can make this section much more readable.

# Calculate the price of the product
product_price = materials_cost + manufacturing_cost + shipping_cost

# Calculate the tax owed
state_tax = product_price * state_tax_rate(state)
federal_tax = product_price * federal_tax_rate
total_tax = state_tax + federal_tax

# Calculate the total cost
total_cost = product_price + total_tax

# TODO create function for looking up state tax rates

Using indentation and consistent formatting

Throughout your code, consistency is key. In some languages, you can use indentation to visually separate different sections. This can be useful to differentiate sections that work inside of loops, for example. Beware: some languages, like Python, use indentation functionally, so you may be unable to use it for visual differentiation.

Consistent formatting is important as it improves readability and meets reader expectations.
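As a small illustration of indentation that is part of the syntax, here is a sketch in Python. The indented lines belong to the loop, and the first line back at the original indentation level runs only once. The prices and variable names are made up for this example.

```python
# Hypothetical item prices, used only for illustration
item_prices = [4.50, 12.00, 3.25]

running_total = 0.0
for price in item_prices:
    # Indented lines are the loop body: they run once per item
    running_total += price

# Back at the original indentation level: this runs once, after the loop
print(f"Total: {running_total:.2f}")
```

Removing the indentation from the line inside the loop would change what the program does, or stop it from running at all, which is why Python indentation cannot be used purely for decoration.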

Documentation and communication

Many programming tasks in data professions are team efforts. Even if you spend long periods coding in solitude, that code will often be sent around to a team for review and use. This makes it imperative that communication about the code be clear within the team.

When sending code to a teammate, it’s important to send information about the code’s purpose, proper use, and any quirks they need to consider about the code while running it. This type of communication is called documentation and should always accompany the code.

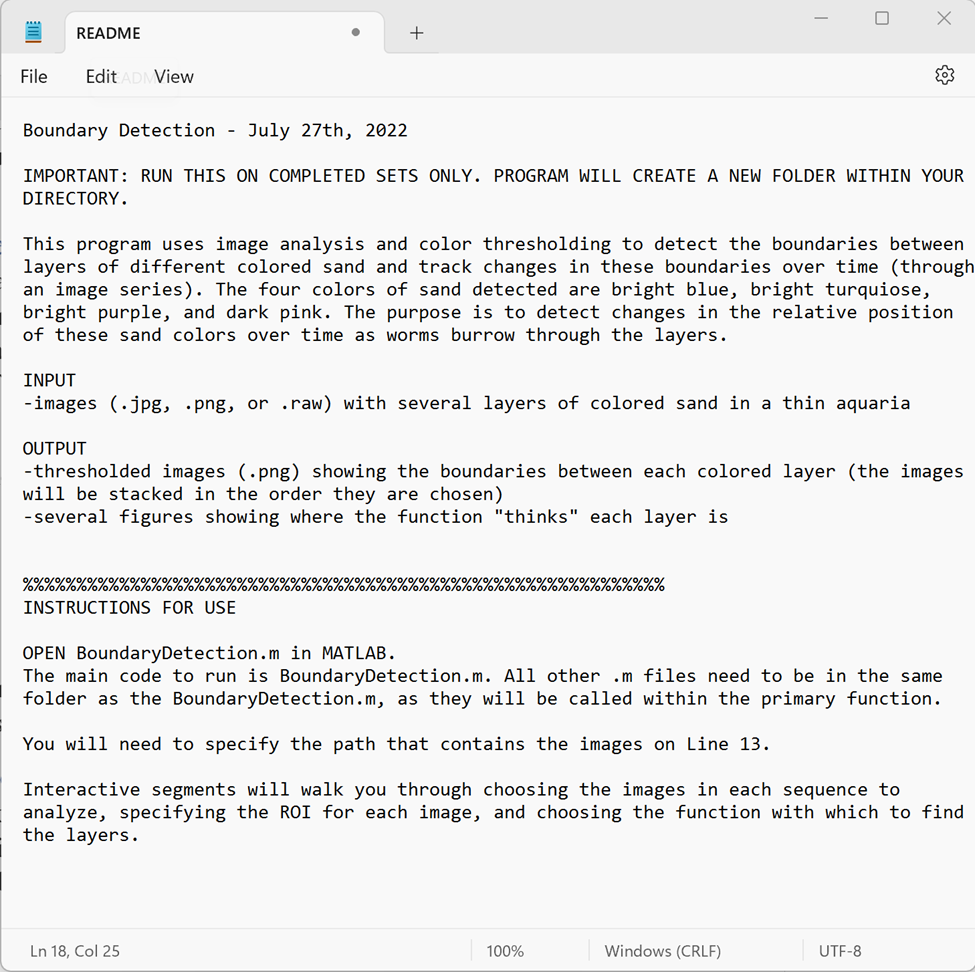

The convention is to provide this documentation within a text file called README.txt that is stored in the same folder as the main code file. However, specific teams may have different standards for documentation, such as using Notion or a Google Doc.

What should be documented?

The documentation file should include everything someone would need to know to take over the project. There should be information about how to use the code, the code’s purpose, architecture, and design. You should include notes about what the inputs and outputs are when the code is run, as well as any quirks.

It’s also useful to add information about error detection and maintenance. Depending on your company’s coding standards, you may also include author information, project completion dates, or other information.

Creating reader-friendly README files

When writing README files, it’s important to maintain a clear structure. Clearly label your inputs and outputs and the different sections of your document. Put the most important information for your user at the top. Anything that is critical should be labeled and made to stand out with either all caps, a series of dashes, or something else.

Docstrings

A docstring can be useful for someone who is using your code for the first time. This is a string literal written into your code that provides information about the code. In Python, if you use the command line to find documentation on a class, method, or function, the text that is displayed is the docstring within that code.

Here is an example of a docstring for a function:

def calculate_total_price(unit_price, quantity):
    """
    Calculate the total price of items based on unit price and quantity.

    Args:
        unit_price (float): The price of a single item.
        quantity (int): The number of items purchased.

    Returns:
        float: The total price after multiplying unit price by quantity.

    Example:
        >>> calculate_total_price(10.0, 5)
        50.0
    """
    total_price = unit_price * quantity
    return total_price

Documenting your code may seem like a lot of work, especially when you already know the ins and outs of your program. But proper documentation can save tons of time when passing your code off to someone else or when revisiting an old project you haven’t worked with in a while. Here’s an article where you can read more about best practices for documenting Python code.

Coding Best Practices: Efficient Data Processing

In addition to clarity, good code should run efficiently. You can include a few practices in your writing to ensure your code processes data efficiently.

Avoiding unnecessary loops and iterations

Loops are often very processor-heavy tasks. One or two loops may be unavoidable, but too many loops can quickly bog down an otherwise efficient program. By limiting the number of loops and iterations you have in your code, you can boost your code’s performance.

Vectorizing operations for performance

One way to reduce the number of loops in your code is to vectorize operations. This means performing an operation on an entire vector at once instead of going through each value one at a time.

list_a = [1, 2, 3, 4, 5]
list_b = [6, 7, 8, 9, 10]

result = []
for i in range(len(list_a)):
    result.append(list_a[i] + list_b[i])

print(result)

In this example, we use a for loop to add two lists together element by element. By vectorizing, we can remove the explicit loop and add the two sequences in a single operation.

import numpy as np

list_a = [1, 2, 3, 4, 5]
list_b = [6, 7, 8, 9, 10]

array_a = np.array(list_a)
array_b = np.array(list_b)

result = array_a + array_b

print(result)

Another technique for reducing loops in Python is to use list comprehensions, which you can learn more about in DataCamp’s Python list comprehension tutorial.
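As a brief sketch of the list comprehension approach, the element-wise sum of the same two lists can be written in one line using the built-in zip function, without NumPy:

```python
list_a = [1, 2, 3, 4, 5]
list_b = [6, 7, 8, 9, 10]

# zip pairs up elements by position; the comprehension adds each pair
result = [a + b for a, b in zip(list_a, list_b)]

print(result)  # [7, 9, 11, 13, 15]
```

A comprehension still iterates under the hood, so for large numeric data the NumPy version is usually the faster choice.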

Memory management and optimization techniques

Efficient memory management is crucial for data processing apps. Inefficient memory usage can lead to performance bottlenecks and even app crashes. To optimize memory usage, consider the following techniques:

Memory profiling

Use memory profiling tools to identify memory leaks and areas of excessive memory consumption in your code. Profilers help pinpoint the parts of your program that need optimization and allow you to focus your efforts on the most critical areas.
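One possible starting point is tracemalloc from the Python standard library. The sketch below measures the memory allocated by a made-up function, build_squares, which stands in for whatever step you suspect is memory-hungry.

```python
import tracemalloc

def build_squares(n):
    """Hypothetical memory-hungry step: build a list of n squared integers."""
    return [i * i for i in range(n)]

tracemalloc.start()

squares = build_squares(100_000)

# current = bytes allocated right now, peak = highest usage since start()
current_bytes, peak_bytes = tracemalloc.get_traced_memory()
print(f"Current: {current_bytes / 1e6:.2f} MB, peak: {peak_bytes / 1e6:.2f} MB")

# Show the three source lines responsible for the most allocated memory
snapshot = tracemalloc.take_snapshot()
for stat in snapshot.statistics("lineno")[:3]:
    print(stat)

tracemalloc.stop()
```

The exact numbers depend on your Python version and platform, so treat them as relative indicators rather than absolute measurements.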

Data serialization and compression

When dealing with large datasets, consider serializing data to disk or using data compression. Serializing data to disk in a compact binary format lets you release it from memory until it is needed again, while compression further reduces storage requirements.
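A minimal sketch of this idea, using only the standard library modules pickle and gzip: the records list and the file name are invented for the example, and the file is written to a temporary directory.

```python
import gzip
import os
import pickle
import tempfile

# Hypothetical dataset: 10,000 small records
records = [{"id": i, "value": i * 0.5} for i in range(10_000)]

# Write the records to a gzip-compressed pickle file on disk
file_path = os.path.join(tempfile.mkdtemp(), "records.pkl.gz")
with gzip.open(file_path, "wb") as f:
    pickle.dump(records, f)

# Read the records back when they are needed again
with gzip.open(file_path, "rb") as f:
    restored_records = pickle.load(f)

print(restored_records == records)
print(f"File size on disk: {os.path.getsize(file_path)} bytes")
```

Only unpickle files that come from a source you trust, because loading a pickle can execute arbitrary code. For data shared between teams, a format such as CSV or JSON may be a safer choice.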

Data chunking

If you're processing extremely large datasets that don't fit into your allotted memory, try data chunking. This involves dividing the data into smaller, manageable chunks that can be processed sequentially or in parallel. It helps avoid excessive memory usage and allows you to work with larger datasets.
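Sequential chunking can be sketched with a small generator. The helper name iter_chunks and the chunk size are assumptions made for this example, and range stands in for a data source that is too large to load at once.

```python
from itertools import islice

def iter_chunks(iterable, chunk_size):
    """Yield lists of up to `chunk_size` items from any iterable."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        yield chunk

# range() is lazy, so the full sequence is never held in memory at once
readings = range(1, 1_000_001)

total = 0
for chunk in iter_chunks(readings, chunk_size=100_000):
    # Only one chunk of 100,000 values exists in memory at a time
    total += sum(chunk)

print(total)
```

If you work with pandas, read_csv accepts a chunksize argument that serves a similar purpose for large CSV files.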

DataCamp has a great course on writing efficient Python code.

Coding Best Practices: Scaling and Performance

It is a good idea to keep performance in mind while coding. After you’ve designed and written your initial code, you should edit it to further improve performance.

Profiling code for performance bottlenecks

A process called profiling allows you to find the slowest parts of your program so you can focus your editing efforts there. Many IDEs (Integrated Development Environments) have profiling software built in that allows you to easily find the bottlenecks in your code and improve them.
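If your IDE does not include a profiler, Python ships with one in the standard library. The following sketch uses cProfile and pstats on two made-up functions, slow_sum_of_squares and run_analysis, which stand in for your own code.

```python
import cProfile
import io
import pstats

def slow_sum_of_squares(n):
    """Hypothetical slow function: sums squares with an explicit loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total

def run_analysis():
    """Hypothetical entry point for the code being profiled."""
    return slow_sum_of_squares(200_000)

profiler = cProfile.Profile()
profiler.enable()
analysis_result = run_analysis()
profiler.disable()

# Sort by cumulative time and print the five most expensive calls
report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

The functions with the largest cumulative time near the top of the report are usually the best candidates for optimization.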

Parallel processing

Once you have identified bottlenecks, you need to find the best methods of resolving them. One technique is parallel processing. This is a technique that involves splitting a task between multiple processors on your computer or in the cloud. This can be very useful if you have thousands of calculations that need to be computed.
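Here is a rough sketch of splitting independent calculations across a pool of workers with concurrent.futures from the standard library. The function simulate_calculation is a placeholder for real work. Note that this example defaults to ThreadPoolExecutor, which uses threads and mainly helps with I/O-bound tasks; that choice is made here only to keep the example easy to run anywhere.

```python
from concurrent.futures import ThreadPoolExecutor

def simulate_calculation(x):
    """Hypothetical stand-in for one of many independent calculations."""
    return x * x

def run_in_parallel(values, executor_cls=ThreadPoolExecutor, max_workers=4):
    """Apply simulate_calculation to every value using a pool of workers."""
    with executor_cls(max_workers=max_workers) as executor:
        # map returns results in the same order as the inputs
        return list(executor.map(simulate_calculation, values))

results = run_in_parallel(range(10))
print(results)  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

For CPU-heavy calculations, you can pass ProcessPoolExecutor as executor_cls instead. It has the same interface but runs the work in separate processes, so it can use multiple processor cores. On some platforms, code that starts worker processes needs to sit under an `if __name__ == "__main__":` guard.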

Strategies for handling larger datasets

As your program scales, you'll likely encounter larger datasets that need to be processed efficiently. Implementing the right strategies is essential to avoid performance degradation.

Data partitioning

Partition large datasets into manageable chunks. This approach, known as data partitioning, allows you to process data in parallel and distribute the workload across multiple processing units. Additionally, it minimizes the memory requirements for processing.

Data compression

Consider using data compression techniques to reduce the storage and transmission overhead of large datasets. Compression libraries like zlib and Snappy can significantly decrease the size of data without compromising its integrity.
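As a quick sketch with Python's built-in zlib module, the example below compresses a deliberately repetitive payload and checks that decompression restores it exactly. The payload is invented for illustration; real data will compress by a different amount.

```python
import zlib

# Hypothetical, highly repetitive payload; real data will compress differently
raw_bytes = b"timestamp,sensor_id,reading\n" * 10_000

# The second argument is the compression level (1 = fastest, 9 = smallest)
compressed = zlib.compress(raw_bytes, 6)
restored = zlib.decompress(compressed)

print(f"Original: {len(raw_bytes)} bytes, compressed: {len(compressed)} bytes")
print(restored == raw_bytes)
```

Higher compression levels trade extra CPU time for smaller output, so it is worth measuring both on a sample of your own data.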

Distributed databases

Distributed database solutions like Apache Cassandra, Amazon DynamoDB, or Google Cloud Bigtable can help manage large datasets. These databases are designed to handle massive datasets and provide efficient data storage and retrieval mechanisms.

Balancing optimization with code readability

Some optimization techniques also improve the readability of the code. However, other optimizations may make it harder to follow what’s going on. It’s important to balance these two goals when writing and optimizing your code.

If a technique will greatly improve the efficiency of your program, it may be worth making the code a little more convoluted. If you do this, be sure to document it well. On the other hand, a technique that saves only a little time may not be worth it if it makes the code much harder to read.

Best Practices For Version Control and Collaboration

When writing code, a useful tool is version control software. By far the most popular version of this is Git. Git saves previous versions of your code, allowing you to make changes and always revert to an earlier version if you make a catastrophic mistake. It’s essentially a backup. Git also facilitates collaboration on a project by easily highlighting differences and resolving conflicts.

Check out our introduction to version control with Git course for more details.

Importance of version control systems (e.g., Git)

Using a version control system is almost as vital as saving your work. It gives you a record of your progress, a backup of successful versions, and an easy way to publish your work. Let’s go over the advantages of using Git for independent as well as collaborative coding projects.

Collaborative coding

One way to collaborate on a project is to pass versions back and forth one at a time. In this system, each programmer essentially “checks out” the code, works on their section, and passes it on to the next programmer. This is slow and inefficient. It can also result in problems if two people accidentally work on the file at the same time, resulting in two different versions of the same code.

A better solution is to use a version control system like Git. With Git, multiple programmers can work on the code simultaneously. When they push their code changes to the main repository, there is a simple process used to merge the different parts of the code so everything works together. Once merged, the newly updated code is freely available to everyone with access to the repository. This allows each programmer to work on the newest version of the code.

Git also provides an easy way to initiate a code review process.

Independent coding

When you are the only person working on a project, it can be tempting to skip using Git for simplicity. However, there are several compelling reasons to use Git as part of your workflow, even for independent projects.

One of the most compelling reasons to use Git on independent projects is to retain the ability to revert to an earlier version of the code if it stops performing the way you expect. For example, say you add a new analysis to a recommender system you created. The analysis seems to be working fine, but suddenly, the original recommender system starts having problems. It seems obvious that the problem is due to the new analysis, but where specifically did the problem crop up? It can be useful to have a version without the analysis to look at side by side with the new version to trace the problem.

Git also allows you to easily publish your code so others can view it or use it. This is very useful for setting up a portfolio, creating open-source programs, or sending code to customers. Then, if you need to update your code for any reason, it is easy to push a new version.

Setting up and managing repositories

If you are working on a team, you may contribute to an already established repository. However, you may need to start a repository yourself. Fortunately, platforms like GitHub and Bitbucket have very user-friendly instructions for creating a new repository.

Once established, you will need to share your repository with your collaborators, keep on top of pull requests and merges, and ensure every contributor is following similar commit rules.

Collaborative workflows (branching, merging, pull requests)

There are a few terms that are useful to know when working with Git.

Branching

Branching creates a separate line of development within a repository, so you can work on a change without affecting the main version of the code until you are ready.

Merging

Merging is the process of resolving the differences between two or more branches to create a single version of the code.

Pull requests

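When a programmer has changes on a branch that they want added to the main version of the code, they open a pull request on a hosting platform such as GitHub or Bitbucket. This is essentially a request for collaborators to review the changes and merge them. Downloading the latest version of the code from a repository is a separate operation, called a pull.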

Pushes

When a programmer adds a new version of the code to the repository, this is called pushing a new version. DataCamp’s Git Push/Pull tutorial explains the differences between these terms and how to use each.

Handling conflicts and maintaining a clean commit history

If multiple contributors modify the same lines of code, Git will flag it as a merge conflict. Resolving conflicts involves manually editing the conflicting code to reconcile the changes, essentially choosing which version of that line of code to keep. After resolution, you can commit the changes and continue with the merge.

Maintain a clean and informative commit history by writing clear and concise commit messages. Follow a consistent format and describe the purpose of each commit. This helps track the changes over time so everyone can understand the project's history.

For more information about Git, I highly recommend DataCamp’s Introduction to Git and GitHub Concepts courses.

Code Review and Refactoring Best Practices

Conducting effective code reviews for quality assurance

A code review is a fantastic way to improve your code and your programming skills. This is basically a peer review, where someone else will go through your code and provide feedback.

If you work on a team, you may have mandatory code reviews on a regular basis.

However, even if you work alone, it is a good idea to solicit occasional code reviews to keep your code up to standard. It’s also a great way to learn new ways of doing things and to learn about security issues you may not already be familiar with.

Identifying code smells and when to refactor

Have you ever opened your refrigerator and noticed a bad smell that put you on a search for what had spoiled? If so, you are familiar with using smell as an indicator of something going bad. This same idea is used in code reviews.

Of course, when doing a code review, you are not literally using your nose to sniff the code. But reviewers look for indicators of something gone wrong, which are called code smells.

Some problems may require a simple change to one line of code to repair. However, other problems may require you to rethink an entire section or the entire document.

These larger fixes, where you are changing the structure of the underlying code without changing the functionality of the code, are called refactoring. For example, this can be done to repair a security flaw while keeping the user experience identical.

Error Handling and Testing

Importance of error handling and testing

Testing your code is imperative to ensure that your code is doing what you think it should. Try testing your code with small, fictional datasets where you know what the outcome should be and check that your program gives the expected answer. If you have the time and resources, testing your code on multiple datasets that test different aspects of your program can ensure your code is working the way you expect.

If you create code that is going to be in place for a while, like a data pipeline or an app, it’s particularly important to consider error handling. Errors can occur when your data sources have changed or when your end user does something unexpected. Adding blocks of code that handle expected errors can keep your program running without crashes.

Test-driven development

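Test-Driven Development (TDD) is a widely used software engineering practice that you can incorporate into your coding projects. In TDD, you write a test for a piece of functionality before writing the code itself, then write just enough code to make the test pass, and then clean up the code while keeping the tests passing. This approach places testing at the forefront of the development process, ensuring that every piece of code is evaluated before it's considered complete.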

By adhering to TDD principles, you create a safety net of tests that not only verify the correctness of your code but also help to guide the development process itself. It’s a proactive stance on testing which results in code that is more resilient, easier to maintain, and less prone to defects.

Writing unit tests to validate code functionality

Unit tests are tests written to validate certain parts of your code. For example, you may run a unit test on a function you write to convert units from Celsius to Fahrenheit. In this unit test, you ask whether your code gets the correct answer to a specific example.
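The temperature conversion example might look like the sketch below, written as plain functions with assert statements. The function name celsius_to_fahrenheit and the chosen test values are assumptions made for this illustration.

```python
def celsius_to_fahrenheit(celsius):
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32

def test_freezing_point():
    assert celsius_to_fahrenheit(0) == 32

def test_boiling_point():
    assert celsius_to_fahrenheit(100) == 212

def test_equal_point():
    # -40 is the temperature at which both scales agree
    assert celsius_to_fahrenheit(-40) == -40

# Calling the tests directly; a test runner would discover and run them for you
test_freezing_point()
test_boiling_point()
test_equal_point()
print("All conversion tests passed")
```

Each test checks one known input and output pair, so a failure points directly at the case that broke.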

Python has two libraries that are particularly useful for writing unit tests, unittest and pytest. Writing comprehensive unit tests not only boosts the reliability of your code but also serves as documentation, illustrating how different parts of your software should behave.

import unittest

# The function we want to test
def square(x):
    return x ** 2

# Create a test class that inherits from unittest.TestCase
class TestSquare(unittest.TestCase):

    # Define a test case for the square function
    def test_square_positive_number(self):
        result = square(5)
        self.assertEqual(result, 25)  # Assert that the result is equal to 25

if __name__ == '__main__':
    unittest.main()

This is an example of a unit test for a simple function and its output.

#OUTPUT
.
----------------------------------------------------------------------
Ran 1 test in 0.001s

OK

Using try-except blocks for robust code execution

Incorporating try-except blocks into your code is a fundamental error-handling technique that can significantly enhance code robustness.

These blocks allow you to gracefully handle unexpected situations or exceptions that may arise during program execution.

By anticipating potential errors and defining how your code should react to them, you can prevent crashes and unexpected behavior, leading to a more user-friendly and reliable app. Whether it's handling file I/O errors, network connectivity issues, or input validation problems, judicious use of try-except blocks can make your code more resilient and user-friendly.

try:
    num = int(input("Enter a number: "))
    result = 10 / num  # Attempt to perform division
except ZeroDivisionError:
    result = None  # Set result to None if division by zero occurs
except ValueError:
    result = None  # Set result to None if the input is not a whole number

print(f"Result of the division: {result}")

Security and Privacy Considerations

Safeguarding sensitive data

You may work on a project with some sensitive data such as health information, passwords, or personally identifying information. There are several laws in place restricting the way these types of data can be used and how they must be safeguarded. It is important to work these safeguards into your code as you create it.

In other cases, you may be working with code that is important to keep secure for nonlegal reasons, such as dealing with company secrets. When writing your code, and definitely before deploying any code, you should ensure that this data is kept secure. Below are a few coding security best practices.

Data minimization

It’s important to collect only the data that is absolutely necessary for your project. Avoid collecting excessive information that could be misused if your system is compromised. Additionally, you can implement data retention policies to delete data that is no longer needed.

Access control

Implement robust access controls to ensure that only authorized users and processes can access sensitive data. Role-based access control can help secure sensitive data. Regularly review and audit access permissions to detect and rectify any unauthorized access.

Data encryption

Encryption is a fundamental technique for protecting data. Use strong encryption algorithms and protocols to secure data stored in databases, on disk, and during data transmission over networks. Implement encryption libraries and APIs that are well-vetted and maintained to avoid common vulnerabilities.

Encryption and secure coding practices

Secure coding practices are essential for building apps that can withstand security threats. When it comes to encryption and secure coding, consider the following recommendations:

Input validation

Always validate and sanitize user inputs to prevent common security vulnerabilities such as SQL injection, cross-site scripting, and command injection. Input validation ensures that malicious input cannot compromise your app's security.
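To make this concrete, here is a sketch that combines a simple validation check with a parameterized query, using Python's built-in sqlite3 module. The accounts table, its columns, and the find_account helper are all hypothetical.

```python
import sqlite3

# In-memory database with a hypothetical accounts table
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE accounts (account_number TEXT, owner TEXT)")
connection.execute("INSERT INTO accounts VALUES ('1001', 'Ada')")

def find_account(conn, account_number):
    """Look up an account using validated input and a parameterized query."""
    # Basic validation: account numbers in this sketch are digits only
    if not account_number.isdigit():
        raise ValueError("Account number must contain only digits")
    # The ? placeholder passes the value separately from the SQL text
    cursor = conn.execute(
        "SELECT owner FROM accounts WHERE account_number = ?",
        (account_number,),
    )
    return cursor.fetchall()

print(find_account(connection, "1001"))

try:
    find_account(connection, "1001' OR '1'='1")
except ValueError as error:
    print(f"Rejected input: {error}")
```

The placeholder matters even when validation is in place: building the query by joining strings together is what makes SQL injection possible in the first place.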

Secure libraries and components

When using third-party libraries or components, verify their security posture. Keep them updated to patch known vulnerabilities. Additionally, consider using security-focused libraries and frameworks that are designed to mitigate common security risks.

Regular security testing

Incorporate regular security testing into your development process. This includes conducting penetration testing, code reviews, and vulnerability assessments. Automated tools can help identify security flaws, but manual testing by security experts is highly recommended.

Secure authentication and authorization

Implement secure authentication mechanisms, such as multi-factor authentication, and robust authorization controls to ensure that users only have access to the resources they need. Avoid hardcoding credentials or sensitive information in your code or configuration files.
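One common alternative to hardcoding credentials is to read them from environment variables at runtime. In the sketch below, the variable name DB_PASSWORD and the helper get_database_password are assumptions made for illustration.

```python
import os

def get_database_password(variable_name="DB_PASSWORD"):
    """Read a secret from an environment variable instead of the source code."""
    password = os.environ.get(variable_name)
    if password is None:
        raise RuntimeError(f"Environment variable {variable_name} is not set")
    return password

# For demonstration only: normally the variable is set outside the program,
# for example by the deployment environment or a secrets manager.
os.environ["DB_PASSWORD"] = "example-only-value"

password = get_database_password()
print("Password loaded:", bool(password))
```

Failing loudly when the variable is missing is a deliberate choice here: it is easier to diagnose than a program that silently continues with an empty password.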

Keeping up with security threats is an ongoing effort, as bad actors continually update their tactics. DataCamp has an introduction to data privacy course to help you get started. Once you’ve got some fundamentals, try a security wargame like Bandit to test out your new skills.

Continued Learning and Growth

Data is a dynamic field, with new technologies, languages, and libraries constantly emerging. To stay competitive and relevant in the industry, it's essential to make continued learning and growth a central part of your career. One crucial aspect of this is staying updated with coding trends and libraries.

Make it a habit to allocate time for learning new concepts, languages, and tools. Subscribe to newsletters, follow tech blogs, and attend webinars or conferences relevant to your field. Explore online courses and tutorials that provide hands-on experience with the latest technologies. By staying informed, you can leverage new tools and methodologies to improve your coding skills and productivity.

Engaging with the coding community and forums

Join online forums

Participate in coding forums like Stack Overflow, GitHub discussions, or specialized forums related to your programming languages and interests. Contribute by answering questions and sharing your knowledge. Engaging in discussions and solving real-world problems not only helps others but also reinforces your own understanding of coding concepts.

Attend meetups and conferences

Local and virtual coding meetups and conferences provide excellent opportunities to connect with like-minded individuals, share experiences, and learn from experts. These events often feature workshops, talks, and networking sessions that can expand your knowledge and professional network. Check out this list of data conferences to get started.

Leveraging online resources for continuous improvement

The internet is a treasure trove of resources for developers seeking continuous improvement. Take advantage of online courses, tutorials, and coding challenges to hone your skills and tackle new challenges.

Online courses

Online courses offered by DataCamp provide high-quality, structured learning experiences. These courses cover a wide range of topics, from coding fundamentals to advanced topics like data science and cybersecurity. A good place to start is with general coding courses like Introduction to Python, Writing Functions in Python, and Intermediate R. You may also want to try more focused courses at an advanced level, like a course on object-oriented programming.

Coding challenges and practice platforms

Websites like LeetCode, Kaggle, HackerRank, and CodeSignal offer coding challenges and competitions that allow you to practice problem-solving and algorithmic skills. Regularly participating in these challenges sharpens your coding abilities and prepares you for technical interviews. DataCamp also occasionally runs challenges and has a wide range of practical problems and real data science projects you can use to hone your skills.

Open-source contributions

Consider contributing to open-source projects. This not only allows you to work on real-world data analysis projects but also exposes you to collaborative coding practices and diverse coding styles.

Conclusion

Programming is more than just writing code that is functional. Your code needs to be clear, organized, efficient, and scalable, while keeping in mind quality and security. By embracing these coding best practices, you will not only create better code but also elevate your skills. Consider taking courses about software engineering principles as well as language-specific best practices guides, like those outlined in this Python best practices tutorial. In a world where precision and reliability are paramount, these coding practices serve as the guiding principles that empower data professionals to excel, innovate, and make a lasting impact.

I am a PhD with 13 years of experience working with data in a biological research environment. I create software in several programming languages including Python, MATLAB, and R. I am passionate about sharing my love of learning with the world.