Curso

A todo el mundo le gusta destacar, ser diferente. Pero esa no es la calidad que quieres en tus puntos de datos como científico de datos. Los puntos de datos divergentes o las anomalías en un conjunto de datos son uno de los problemas de calidad de datos más peligrosos que afectan a casi todos los proyectos de datos.

Esta última frase puede sorprenderte si sólo has estado trabajando con pulidos conjuntos de datos de código abierto que a menudo vienen sin valores atípicos. Sin embargo, los conjuntos de datos del mundo real siempre presentan algunas diferencias respecto a las muestras normales. Es tu trabajo detectarlos y tratarlos adecuadamente.

En este artículo, aprenderás las ideas fundamentales de este proceso, que suele denominarse detección de anomalías:

- El efecto perjudicial que tienen las anomalías en tu proyecto.

- La importancia de detectar anomalías.

- Aplicaciones reales de la detección de anomalías.

- La diferencia entre anomalías, valores atípicos y novedades.

- Tipos de anomalías y métodos de detección de anomalías.

- Cómo construir algoritmos de detección de anomalías en Python.

- Cómo afrontar los retos de la detección de anomalías.

Al final, dominarás los fundamentos de la detección de anomalías y ganarás confianza para mitigar la influencia perturbadora de los valores atípicos en tus proyectos.

¿Qué es la detección de anomalías?

La detección de anomalías, a veces llamada detección de valores atípicos, es un proceso de búsqueda de patrones o casos en un conjunto de datos que se desvían significativamente del comportamiento esperado o "normal".

La definición de datos "normales" y anómalos varía significativamente según el contexto. A continuación te mostramos algunos ejemplos de detección de anomalías en acción.

1. Operaciones financieras

Normal: Compras rutinarias y gastos constantes de una persona en Londres.

Fuera de lo normal: Una retirada masiva de dinero de Irlanda desde la misma cuenta, lo que apunta a un posible fraude.

2. Tráfico de red en ciberseguridad

Normal: Comunicación regular, transferencia de datos constante y cumplimiento del protocolo.

Fuera de lo normal: Aumento brusco de la transferencia de datos o uso de protocolos desconocidos que indican una posible brecha o malware.

3. Monitorización de las constantes vitales del paciente

Normal: Frecuencia cardiaca estable y tensión arterial constante

Fuera de lo normal: Aumento repentino de la frecuencia cardiaca y disminución de la tensión arterial, lo que indica una posible emergencia o un fallo del equipo.

La detección de anomalías incluye muchos tipos de métodos no supervisados para identificar muestras divergentes. Los especialistas en datos los eligen en función del tipo de anomalía, el contexto, la estructura y las características del conjunto de datos de que se trate. Los trataremos en las próximas secciones.

Aplicaciones reales de la detección de anomalías

Aunque ya hemos visto algunos ejemplos, veamos una historia real de cómo funciona la detección de anomalías en las finanzas.

Shaq O'Neal, cuatro veces ganador de la NBA, es traspasado de los Miami Heat a los Phoenix Suns. Cuando Shaq llega al apartamento vacío que le han proporcionado los Phoenix Suns, quiere amueblarlo inmediatamente en mitad de la noche. Así que va a Walmart y hace la mayor compra de la historia de Walmart por 70.000 dólares. O al menos, lo intenta; su tarjeta es rechazada.

Se pregunta cuál podría ser el problema (¡no puede estar arruinado!) A las 2 de la madrugada, la seguridad de American Express le llama y le dice que se sospechaba que le habían robado la tarjeta porque alguien estaba intentando hacer una compra de 70.000 dólares en Walmart de Phoenix (mira la anécdota para oír la historia completa).

Hay muchas otras aplicaciones de la detección de anomalías en el mundo real, más allá de las finanzas y la detección de fraudes:

- Ciberseguridad

- Sanidad

- Monitorización de equipos industriales

- Detección de intrusiones en la red

- Monitorización de la red energética

- Comercio electrónico y análisis del comportamiento de los usuarios

- Control de calidad en la fabricación

La detección de anomalías está profundamente entretejida en los servicios cotidianos que utilizamos y, a menudo, ni siquiera nos damos cuenta.

La importancia de la detección de anomalías en la ciencia de datos

Los datos son el bien más preciado en la ciencia de datos, y las anomalías son las amenazas más perturbadoras para su calidad. La mala calidad de los datos significa mala:

- Pruebas estadísticas

- Cuadros de mando

- Modelos de aprendizaje automático

- Decisiones

y, en última instancia, una base comprometida para una toma de decisiones informada.

Las anomalías distorsionan los análisis estadísticos al introducir patrones inexistentes, lo que lleva a conclusiones erróneas y predicciones poco fiables. Como suelen ser los valores extremos de un conjunto de datos, las anomalías suelen sesgar las dos características más importantes de las distribuciones: la media y la desviación típica.

Como el funcionamiento interno de casi todos los modelos de aprendizaje automático depende en gran medida de estas dos métricas, la detección oportuna de anomalías es crucial.

Tipos de anomalías

La detección de anomalías engloba dos prácticas generales: la detección de valores atípicos y la detección de novedades.

Los valores atípicos son puntos de datos anormales o extremos que sólo existen en los datos de entrenamiento. En cambio, las novedades son instancias nuevas o no vistas anteriormente en comparación con los datos originales (de entrenamiento).

Por ejemplo, considera un conjunto de datos de temperaturas diarias en una ciudad. La mayoría de los días, las temperaturas oscilan entre 20°C y 30°C. Sin embargo, un día, hay un pico de 40ºC. Esta temperatura extrema es un valor atípico, ya que se desvía significativamente del intervalo habitual de temperaturas diarias.

Ahora, imagina que la ciudad instala una nueva estación de control meteorológico más precisa. Como resultado, el conjunto de datos empieza a registrar sistemáticamente temperaturas ligeramente más altas, que oscilan entre los 25°C y los 35°C. Este aumento sostenido de las temperaturas es una novedad, ya que representa un nuevo patrón introducido por el sistema de vigilancia mejorado.

Anomalías, por otra parte, es un término amplio que abarca tanto los valores atípicos como las novedades. Puede utilizarse para definir cualquier instancia anormal en cualquier contexto.

Identificar el tipo de anomalías es crucial, ya que te permite elegir el algoritmo adecuado para detectarlas.

Tipos de valores atípicos

Como hay dos tipos de anomalías, también hay dos tipos de valores atípicos: univariantes y multivariantes. Según el tipo, utilizaremos distintos algoritmos de detección.

- Los valores atípicos univariantes existen en una sola variable o característica de forma aislada. Los valores atípicos univariantes son valores extremos o anormales que se desvían del rango típico de valores para esa característica específica.

- Los valores atípicos multivariantes se encuentran combinando los valores de varias variables a la vez.

Por ejemplo, considera un conjunto de datos sobre los precios de la vivienda en un barrio. La mayoría de las casas cuestan entre 200.000 y 400.000 dólares, pero hay una Casa A con un precio excepcionalmente alto de 1.000.000 de dólares. Si analizamos sólo el precio, la Casa A es un claro valor atípico.

Ahora, añadamos dos variables más a nuestro conjunto de datos: los metros cuadrados y el número de dormitorios. Si tenemos en cuenta los metros cuadrados, el número de dormitorios y el precio, es la Casa B la que parece extraña:

- Tiene la mitad de metros cuadrados que el precio medio de la vivienda.

- Sólo tiene un dormitorio.

- Cuesta el tope de gama 380.000 $.

Cuando observamos estas variables individualmente, parecen ordinarias. Sólo cuando los juntamos descubrimos que la Casa B es un claro valor atípico multivariante.

Métodos de detección de anomalías y cuándo utilizar cada uno de ellos

Los algoritmos de detección de anomalías difieren según el tipo de valores atípicos y la estructura del conjunto de datos.

Para la detección univariante de valores atípicos, los métodos más populares son:

- Puntuación Z (puntuación estándar): la puntuación z mide cuántas desviaciones estándar se aleja un punto de datos de la media. En general, los casos con una puntuación z superior a 3 se consideran valores atípicos.

- Intervalo intercuartílico (IQR): El IQR es el intervalo entre el primer cuartil (Q1) y el tercer cuartil (Q3) de una distribución. Cuando una instancia está más allá de Q1 o Q3 para algún multiplicador de IQR, se consideran valores atípicos. El multiplicador más habitual es 1,5, lo que hace que el intervalo de valores atípicos sea [Q1-1,5 * IQR, Q3 + 1,5 * IQR].

- Puntuaciones z modificadas: similares a las puntuaciones z, pero las puntuaciones z modificadas utilizan la mediana y una medida llamada Desviación Absoluta de la Mediana (DAM) para encontrar valores atípicos. Como la media y la desviación típica se sesgan fácilmente por los valores atípicos, las puntuaciones z modificadas suelen considerarse más robustas.

Para los valores atípicos multivariantes, solemos utilizar algoritmos de aprendizaje automático. Gracias a su profundidad y fuerza, son capaces de encontrar patrones intrincados en conjuntos de datos complejos:

- Bosque de aislamiento: utiliza una colección de árboles de aislamiento (similares a los árboles de decisión) que dividen recursivamente conjuntos de datos complejos hasta aislar cada instancia. Los casos que se aíslan más rápidamente se consideran atípicos.

- Factor atípico local (LOF): LOF mide la desviación de la densidad local de una muestra en comparación con sus vecinas. Los puntos con una densidad significativamente menor se eligen como valores atípicos.

- Técnicas de agrupación: técnicas como k-means o la agrupación jerárquica dividen el conjunto de datos en grupos. Los puntos que no pertenecen a ningún grupo o están en sus propios grupitos se consideran valores atípicos.

- Detección de valores atípicos basada en el ángulo (ABOD): ABOD mide los ángulos entre puntos individuales. Los casos con ángulos impares pueden considerarse atípicos.

Aparte del tipo de anomalías, debes tener en cuenta las características del conjunto de datos y las limitaciones del proyecto. Por ejemplo, el Bosque de Aislamiento funciona bien en casi cualquier conjunto de datos, pero es más lento y requiere más cálculos, ya que es un método de conjunto. En comparación, LOF es muy rápido en el entrenamiento, pero puede no rendir tan bien como el Bosque de Aislamiento.

Puedes ver una comparación de los algoritmos de Detección de Anomalías más comunes en 55 conjuntos de datos del paquete Python Outlier Detection (PyOD).

Construir un modelo de detección de anomalías en Python

Como prácticamente cualquier tarea, existen muchas bibliotecas en Python para realizar la detección de anomalías. Los mejores contendientes son:

- Detección de valores atípicos en Python (PyOD)

- Scikit-learn

Mientras que scikit-learn ofrece cinco algoritmos clásicos de aprendizaje automático (puedes utilizarlos tanto para valores atípicos univariantes como multivariantes), PyOD incluye más de 30 algoritmos, desde métodos sencillos como MAD hasta complejos modelos de aprendizaje profundo. También puedes utilizar TensorFlow o PyTorch para modelos personalizados, pero están fuera del alcance de este artículo.

Detección univariante de anomalías

Prefiero pyod por su rica biblioteca de algoritmos y una API coherente con sklearn. Sólo hacen falta unas pocas líneas de código para encontrar y extraer valores atípicos de un conjunto de datos utilizando PyOD. Aquí tienes un ejemplo de utilización de MAD en un conjunto de datos univariante:

import pandas as pd

import seaborn as sns

from pyod.models.mad import MAD

# Load a sample dataset

diamonds = sns.load_dataset("diamonds")

# Extract the feature we want

X = diamonds[["price"]]

# Initialize and fit a model

mad = MAD().fit(X)

# Extract the outlier labels

labels = mad.labels_

>>> pd.Series(labels).value_counts()

0 49708

1 4232

Name: count, dtype: int64Repasemos el código línea por línea. En primer lugar, cargamos las bibliotecas necesarias para manipular los datos, cargar un conjunto de datos y pyod para el modelo de detección de valores atípicos. A continuación, tras cargar el conjunto de datos Diamantes incorporado en Seaborn, extraemos los precios de los diamantes.

A continuación, inicializamos y ajustamos un modelo de Desviación Absoluta Mediana (MAD) a X en una sola línea. A continuación, extraemos las etiquetas inlier y outlier utilizando el atributo labels_ de mad en labels.

Cuando imprimimos al final los recuentos de valores de labels, vemos que 49708 pertenece a la categoría 0 (inliers), mientras que 4232 pertenece a la 1 (outliers). Si queremos eliminar los valores atípicos del conjunto de datos original, podemos utilizar el subconjunto de pandas en diamonds:

outlier_free = diamonds[labels == 0]

>>> len(outlier_free)

49708labels == 0 crea una matriz de valores Verdadero/Falso (matriz booleana) donde True denota un inlier.

Detección multivariante de anomalías

El proceso de creación de un modelo multivariante de detección de anomalías también es el mismo. Pero la detección multivariante de valores atípicos requiere pasos de procesamiento adicionales si hay rasgos categóricos presentes:

>>> diamonds.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 53940 entries, 0 to 53939

Data columns (total 10 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 carat 53940 non-null float64

1 cut 53940 non-null category

2 color 53940 non-null category

3 clarity 53940 non-null category

4 depth 53940 non-null float64

5 table 53940 non-null float64

6 price 53940 non-null int64

7 x 53940 non-null float64

8 y 53940 non-null float64

9 z 53940 non-null float64

dtypes: category(3), float64(6), int64(1)

memory usage: 3.0 MBComo pyod espera que todas las características sean numéricas, necesitamos codificar variables categóricas. Para ello utilizaremos Sklearn:

from sklearn.preprocessing import OrdinalEncoder

# Initialize the encoder

oe = OrdinalEncoder()

# Extract the categorical feature names

cats = diamonds.select_dtypes(include="category").columns.tolist()

# Encode the categorical features

cats_encoded = oe.fit_transform(diamonds[cats])

# Replace the old values with encoded values

diamonds.loc[:, cats] = cats_encoded

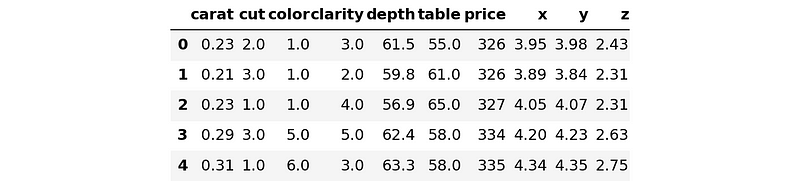

>>> diamonds.head()

Repasemos de nuevo el código línea por línea. En primer lugar, importamos la clase OrdinalEncoder que codifica los rasgos categóricos ordinales y la inicializamos. Los rasgos ordinales son variables que tienen categorías naturales y ordenadas, como en el caso de las mediciones de la calidad de los diamantes. El corte y la claridad son ordinales, mientras que el color no lo es. Pero para no complicar las cosas, por ahora lo consideraremos ordinal.

A continuación, extraemos los nombres de las características categóricas utilizando el método select_dtypes de Pandas DataFrames. Encadenamos los atributos .columns y .tolist() para obtener los nombres de las columnas en una lista llamada gatos.

A continuación, utilizaremos la lista para transformar las características que queramos con oe. Por último, utilizando un ingenioso truco de Pandas con .loc, sustituimos los antiguos valores de texto por otros numéricos.

Antes de ajustar un modelo multivariante, extraeremos la matriz de características X. El objetivo del conjunto de datos diamonds es predecir el precio de los diamantes dadas sus características. Así, X contendrá todas las columnas menos price:

X = diamonds.drop("price", axis=1)

y = diamonds[["price"]]

Now, let’s build and fit the model:

from pyod.models.iforest import IForest

# Create a model with 10000 trees

iforest = IForest(n_estimators=10000)

iforest.fit(X) # This will take a minute

# Extract the labels

labels = iforest.labels_Cuantos más árboles tenga el estimador IForest, más tiempo tardará en ajustarse el modelo al conjunto de datos.

Una vez que tenemos las etiquetas, podemos eliminar los valores atípicos de los datos originales:

X_outlier_free = X[labels == 0]

y_outlier_free = X[labels == 0]

>>> len(X_outlier_free)

48546

>>> # The length of the original dataset

>>> len(diamonds)

53940¡El modelo encontró más de 5000 valores atípicos!

Retos en la detección de anomalías

La detección de anomalías puede plantear mayores retos que otras tareas de aprendizaje automático debido a su naturaleza no supervisada. Sin embargo, la mayoría de estos retos pueden mitigarse con diversos métodos (consulta la siguiente sección para ver los recursos).

En la detección de valores atípicos, tenemos que hacernos estas dos preguntas:

- ¿Los valores atípicos encontrados son realmente valores atípicos?

- ¿Encontró el modelo todos los valores atípicos de los datos?

En el aprendizaje supervisado, podemos comprobar fácilmente si el modelo funciona bien cotejando sus predicciones sobre los datos de prueba con las etiquetas reales. Pero no podemos hacer lo mismo en la detección de valores atípicos, ya que no hay etiquetas preparadas que nos digan qué muestras son inliers y cuáles son outliers.

Así pues, ¡algunos o la mayoría de los +5000 valores atípicos encontrados en el conjunto de datos de los diamantes pueden no ser realmente valores atípicos! No hay forma de saberlo con seguridad. Puede haber etiquetado algunos valores anómalos como valores atípicos y no haber detectado algunos valores atípicos reales.

Este problema de no conocer el nivel de contaminación (el porcentaje de valores atípicos en un conjunto de datos) es el mayor en la detección de anomalías. Por ello, no podemos medir de forma fiable el rendimiento de los clasificadores de valores atípicos, ni verificar sus resultados.

Por esta razón, todos los estimadores de pyod tienen un parámetro llamado contamination, que está fijado en 0,1 por defecto. Como ingeniero de aprendizaje automático, tienes que afinar este parámetro por ti mismo.

Por supuesto, a veces hay alternativas. Por ejemplo, el modelo IsolationForest que ofrece Sklearn tiene un algoritmo interno para encontrar automáticamente el nivel de contaminación. Pero IsolationForest no es una bala de plata para todos los problemas de detección de valores atípicos.

Otro problema en la detección de anomalías es el desequilibrio de los datos. Las anomalías suelen ser poco frecuentes en comparación con los casos normales, lo que provoca que los conjuntos de datos estén desequilibrados. Este desequilibrio puede provocar dificultades para distinguir entre anomalías reales e irregularidades dentro de la clase mayoritaria.

Abordar estas cuestiones (y muchas más que no hemos tratado) implica elegir algoritmos adecuados, ajustar hiperparámetros, seleccionar características, tratar el desequilibrio de clases, etc.

Resumen y próximos pasos

Hemos terminado tu (probablemente) primera exposición al fascinante mundo de la detección de anomalías. Los conceptos y habilidades fundamentales de este tutorial pueden ser de gran ayuda para explorar más a fondo la detección de anomalías.

Para profundizar en tu comprensión, aquí tienes algunos recursos que puedes consultar a continuación:

- Curso de Detección de Anomalías en Python de DataCamp: cubre los métodos y técnicas tratados más a fondo en este artículo y analiza cómo abordar los problemas presentados en la última sección.

- Curso de Detección de Anomalías en R de DataCamp: un curso para gente que prefiere el buen R

Soy un creador de contenidos de ciencia de datos con más de 2 años de experiencia y uno de los mayores seguidores en Medium. Me gusta escribir artículos detallados sobre IA y ML con un estilo un poco sarcastıc, porque hay que hacer algo para que sean un poco menos aburridos. He publicado más de 130 artículos y un curso DataCamp, y estoy preparando otro. Mi contenido ha sido visto por más de 5 millones de ojos, 20.000 de los cuales se convirtieron en seguidores tanto en Medium como en LinkedIn.