
We are now living in a world where creativity has fewer boundaries. Thanks to generative AI capabilities in creating natural language, images, and video, our most vivid imaginations can be transformed into stunning visual realities.

Such possibilities seemed like fiction a few years ago, but this is no longer the case, especially with the groundbreaking technology of the DALL-E 3 API.

This article provides a complete guide to the DALL-E 3 technology: its features, a hands-on walkthrough, real-world applications, and how it is reshaping the creative landscape.

An Overview of DALL-E 3 API

Before diving into the API aspect, let’s first understand what DALL-E 3 is. We’ve got a full introduction to using DALL-E 3 via Bing and ChatGPT, whereas this guide will focus mainly on integrating the API.

What is DALL-E 3?

It is OpenAI’s latest image generation model and was announced in September 2023. This model is capable of understanding significantly more nuance and detail than its predecessors. DALL-E 3 allows users to generate exceptional visuals from textual descriptions.

Earlier versions, DALL-E and DALL-E 2, were introduced in January 2021 and April 2022, respectively. DALL-E 3 is by far the most improved version, and the tables below compare it to the previous versions.

Pricing details

The pricing details are provided for each one of the DALL-E versions. As we can see, only DALL-E 3 provides standard and high-definition image qualities and their corresponding resolutions.

| Model | Quality | Resolution | Price per image (in US $) |
|---|---|---|---|
| DALL-E | | 1024×1024 | 0.13 |
| DALL-E 2 | | 1024×1024 | 0.020 |
| | | 512×512 | 0.018 |
| | | 256×256 | 0.016 |
| DALL-E 3 | Standard | 1024×1024 | 0.040 |
| | | 1024×1792, 1792×1024 | 0.080 |
| | HD | 1024×1024 | 0.080 |
| | | 1024×1792, 1792×1024 | 0.120 |
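To make the pricing table concrete, here is a minimal sketch that estimates the cost of a batch of images. The prices are copied from the table above and may have changed since publication. The names `PRICE_PER_IMAGE` and `estimate_cost` are illustrative and not part of the OpenAI SDK, and the "standard" quality key for DALL-E 2 is a placeholder, since the table lists no quality tier for that model.

```python
from decimal import Decimal

# Prices per image in US $, copied from the pricing table above.
# Keys are (model, quality, size). This lookup is illustrative, not an OpenAI API.
# DALL-E 2 has no quality tier in the table; "standard" is a placeholder key.
PRICE_PER_IMAGE = {
    ("dall-e-2", "standard", "1024x1024"): Decimal("0.020"),
    ("dall-e-2", "standard", "512x512"): Decimal("0.018"),
    ("dall-e-2", "standard", "256x256"): Decimal("0.016"),
    ("dall-e-3", "standard", "1024x1024"): Decimal("0.040"),
    ("dall-e-3", "standard", "1024x1792"): Decimal("0.080"),
    ("dall-e-3", "standard", "1792x1024"): Decimal("0.080"),
    ("dall-e-3", "hd", "1024x1024"): Decimal("0.080"),
    ("dall-e-3", "hd", "1024x1792"): Decimal("0.120"),
    ("dall-e-3", "hd", "1792x1024"): Decimal("0.120"),
}


def estimate_cost(model, quality, size, n_images):
    """Return the estimated cost in US $ for n_images at the given settings."""
    key = (model, quality, size)
    if key not in PRICE_PER_IMAGE:
        raise ValueError(f"No price listed for {key}")
    return PRICE_PER_IMAGE[key] * n_images


# Estimated cost of 25 HD images at 1024x1024 with DALL-E 3
batch_cost = estimate_cost("dall-e-3", "hd", "1024x1024", 25)
print(f"Estimated cost: ${batch_cost:.2f}")
```

Using `Decimal` rather than floats avoids rounding surprises when many small per-image prices are added up.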

Additional characteristics

The table at the end of this section provides a comparative overview of three iterations of the DALL-E AI system, which is designed to generate images from textual descriptions: DALL-E, DALL-E 2, and DALL-E 3, each with its own release date and set of features.

- DALL-E, the first version, was introduced in January 2021, utilizing GPT-3 as its underlying language model. It marked the initial step in text-to-image generation technology, available to users with an OpenAI API account.

- The second version, DALL-E 2, was released in July 2022, advancing the technology with the CLIP language model. CLIP's capabilities enhanced the system's understanding of text prompts, leading to more accurate image generation.

- The most recent version is DALL-E 3, which was released in October 2023. It integrates the more advanced GPT-4 language model, likely offering further improved text understanding and image creation. This version is accessible not only to OpenAI API account holders but also to "Plus" subscribers via the ChatGPT interface.

Each version signifies an evolutionary step in text-to-image AI capabilities, with advancements in language models and accessibility reflecting OpenAI's commitment to improving and democratizing AI technology.

OpenAI provides additional capabilities through its “Plus” subscription, and one of them is its GPT-4 Vision. To learn more about this, our GPT-4 Vision: A Comprehensive Guide for Beginners introduces you to everything you need to know about GPT-4 Vision, from accessing it to going hands-on into real-world examples and its limitations.

| | DALL-E | DALL-E 2 | DALL-E 3 |
|---|---|---|---|
| Release date | January 2021 | July 2022 | October 2023 |
| Input data type | Text prompt | Text prompt | Text prompt |
| Language model | GPT-3 | CLIP | GPT-4 |
| Available to | Anyone with an OpenAI API account | Anyone with an OpenAI API account | Anyone with an OpenAI API account and the “Plus” subscribers via ChatGPT |

Why an API Version?

OpenAI announced the launch of several APIs during its first-ever developer day, and DALL-E 3 was one of them.

The API version of DALL-E 3 offers more direct and versatile access to its capabilities. Whereas the ChatGPT interface and Bing Chat provide a more controlled and guided experience, the API allows developers and businesses to integrate DALL-E 3 capabilities directly into their own applications and workflows.

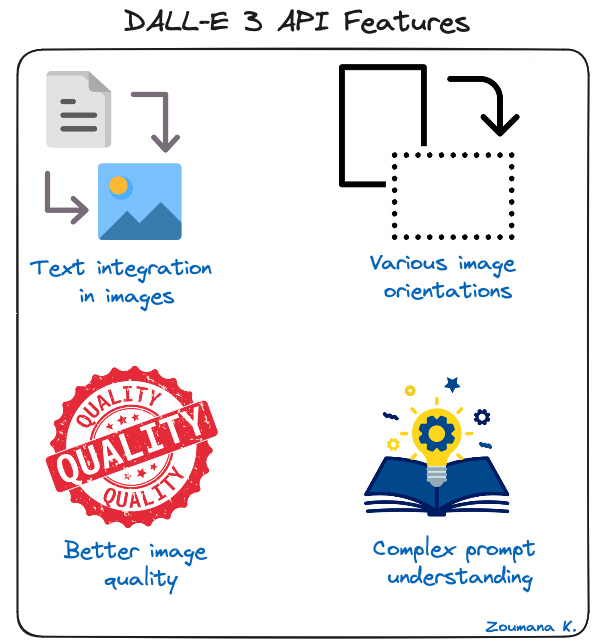

DALL-E 3 API Features

The API brings to the table a set of features designed to improve the user experience. Let’s explore these capabilities in detail:

DALL-E 3 API features

- Text integration in images: DALL-E 3 API can integrate desired text into a visual context, making it look like it was part of the original scene.

- Various image orientations: with DALL-E 3, users can generate both landscape and portrait formats of images, ensuring flexibility for different media and layout requirements.

- Better image quality: the model is able to create images that are not only visually attractive but also rich in detail, providing a realistic and captivating visual experience.

- Complex prompt understanding: DALL-E 3 is efficient at interpreting complex prompts, allowing users to create highly specific and detailed images.
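The orientation feature listed above maps onto the image sizes shown in the pricing table. The sketch below is one hypothetical way to pick a size string from a layout requirement. The orientation labels and the helper names `size_for` and `orientation_of` are assumptions made for illustration; only the size strings come from the pricing table.

```python
# DALL-E 3 sizes as listed in the pricing table. The orientation names are
# labels chosen for this sketch, not parameters of the API.
SIZE_BY_ORIENTATION = {
    "square": "1024x1024",
    "landscape": "1792x1024",
    "portrait": "1024x1792",
}


def size_for(orientation):
    """Return the size string to request for a given orientation label."""
    try:
        return SIZE_BY_ORIENTATION[orientation.lower()]
    except KeyError:
        valid = ", ".join(SIZE_BY_ORIENTATION)
        raise ValueError(f"Unknown orientation {orientation!r}; expected one of: {valid}")


def orientation_of(size):
    """Classify a 'WIDTHxHEIGHT' size string by comparing width and height."""
    width, height = (int(part) for part in size.split("x"))
    if width == height:
        return "square"
    return "landscape" if width > height else "portrait"


print(size_for("portrait"))
print(orientation_of("1792x1024"))
```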

Industry Use-Cases of DALL-E 3 API

Many industries have been taking advantage of the DALL-E technology since the initial versions. This current API provides more advanced image-generation capabilities, facilitating creativity and efficiency.

Although the API is relevant to countless industries, this section focuses on three of them: advertising and marketing, education, and video game development.

- Advertising and marketing: Multiple agencies can leverage the API for a quick creation of customized visuals for marketing campaigns and branding. This has the benefit of improving their creativity and reducing time to market.

- Education: Online educational platforms can use the API to generate tailored illustrations and diagrams to make their learning materials more engaging and accessible to a broader audience.

- Video game development: This is, I believe, one of the fields that will take advantage of the DALL-E 3 API because gaming is all visuals. Game developers can integrate the technology to quickly design unique game visuals.

Hands-On: Getting Started with DALL-E 3 API

Now that we have a better understanding of the DALL-E 3 API and what it’s capable of, let’s get creative. This section guides you through the process of generating images using the DALL-E 3 API after setting up all the requirements.

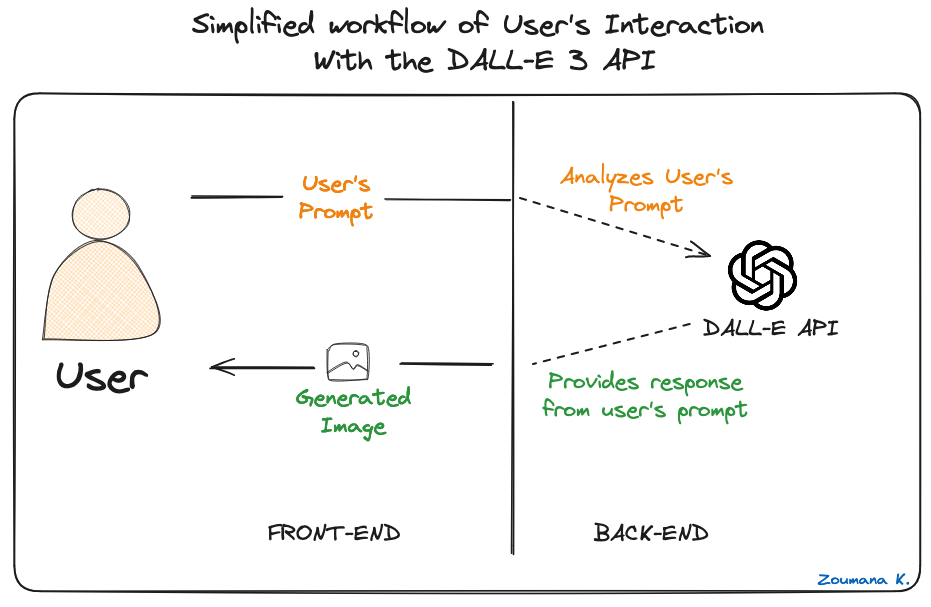

Image generation workflow

For any technical implementation, it is better to provide a visual workflow illustrating the interaction between the main components of the overall application being developed.

This workflow explains how the user interacts with the API, from providing the custom prompt to getting the final image.

Simplified workflow of User's Interaction With the DALL-E 3 API

There are two main parts in the workflow:

- The front-end is the part that allows the user to provide the description for the desired image.

- The second part is the back-end, which is responsible for mapping the user’s prompt to the DALL-E 3 API.
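The two parts of the workflow can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the tutorial's implementation: `ImageRequest` and `handle_image_request` are hypothetical names, the `client` argument is assumed to behave like an `openai.OpenAI()` instance, and `StubClient` is an offline stand-in so the sketch runs without an API key or network access.

```python
from dataclasses import dataclass
from types import SimpleNamespace


@dataclass
class ImageRequest:
    """Front-end side: the description the user typed in."""
    description: str


def handle_image_request(request, client, model="dall-e-3"):
    """Back-end side: validate the prompt, call the API, return the image URL."""
    prompt = request.description.strip()
    if not prompt:
        raise ValueError("The image description must not be empty.")
    response = client.images.generate(model=model, prompt=prompt, n=1)
    return response.data[0].url


class StubClient:
    """Offline stand-in that mimics the shape of client.images.generate()."""

    def __init__(self):
        self.images = SimpleNamespace(generate=self._generate)

    def _generate(self, **kwargs):
        fake_image = SimpleNamespace(url="https://example.com/generated.png")
        return SimpleNamespace(data=[fake_image])


# With a real key configured, StubClient() would be replaced by openai.OpenAI().
url = handle_image_request(ImageRequest("A cute brown puppy"), StubClient())
print(url)
```

Passing the client in as an argument keeps the back-end logic testable without calling the real service.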

Now it is time to dive into the technical implementation. The source code for this tutorial is available in this DataLab workbook; create a copy to edit and run it in the browser without installing anything on your computer.

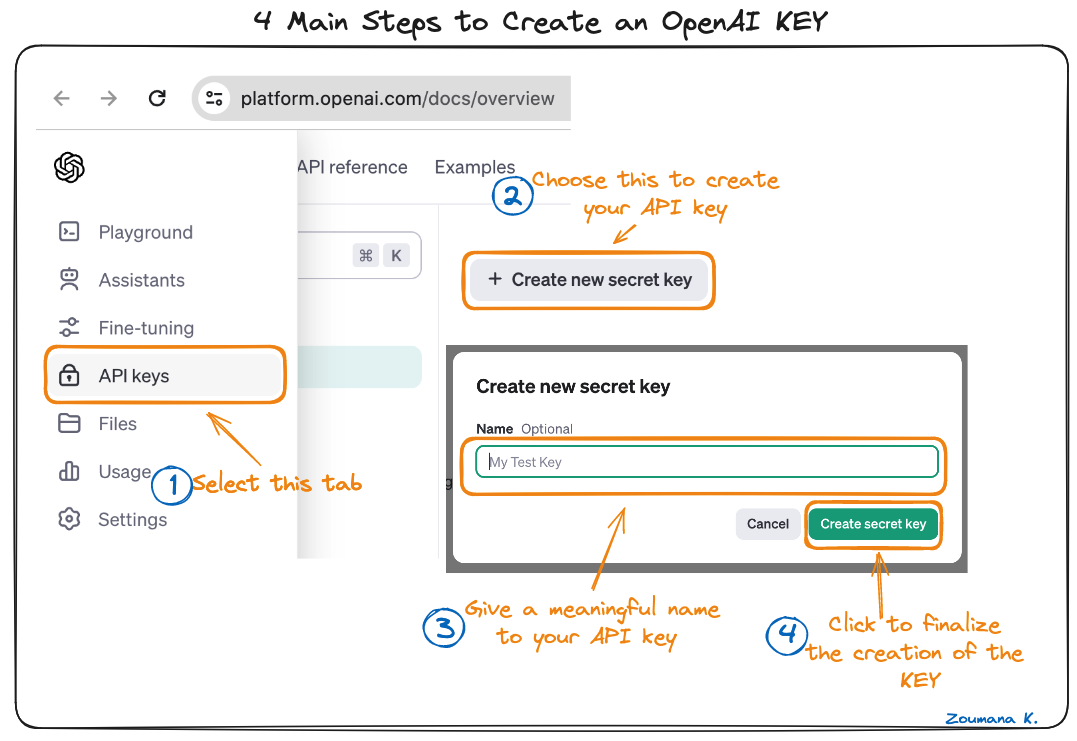

Set Up the OpenAI KEY

The main tools required to successfully reproduce the results of this tutorial are:

- Python: this is the main programming language we’ll use for this tutorial. An alternative option is NodeJS.

- OpenAI: the package to interact with OpenAI services

- os: the Python standard-library module used to set the environment variable

- Image: the IPython.display class used to render the image returned by DALL-E 3 in the notebook

The first step is to acquire the OpenAI KEY, which helps access the DALL-E 3 model. The main steps are illustrated below:

Four main steps to create an OpenAI KEY

The four steps above are self-explanatory. However, it is important to create the account on the official OpenAI website.

Interact with DALL-E 3 API

Once you’ve acquired the key, make sure not to share it with anyone; it should remain private. Next, store the key in an environment variable as follows:

import os

OPENAI_API_KEY = "<YOUR PRIVATE KEY>"
os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY

This os.environ statement makes the key available to the OpenAI client, which uses it to authenticate requests to OpenAI services according to the scope of the user’s private key, which can be Personal or Enterprise.
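Writing the key directly into a notebook makes it easy to share by accident. As an alternative sketch, the key can be exported in the shell beforehand and only checked from Python. The helper name `get_openai_api_key` is hypothetical; the only assumption about the SDK is that the client reads the `OPENAI_API_KEY` environment variable by default.

```python
import os


def get_openai_api_key(env_var="OPENAI_API_KEY"):
    """Return the API key stored in the environment, or raise a clear error."""
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before creating the client."
        )
    return api_key


# Typical use, once the variable has been exported in the shell:
# api_key = get_openai_api_key()
```

Failing early with an explicit message is usually easier to debug than an authentication error raised later by the first API call.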

Then, using the images.generate function of the OpenAI client, the user is able to specify:

- The model to use, which is dall-e-3 for this tutorial.

- The prompt to be sent to the model.

- The dimensions of the final image generated by dall-e-3. This tutorial uses the same dimension of 1024x1024 for all the generated images.

- The quality of the image, whether it is standard or high definition (hd). Let’s focus on generating only high-definition images.

- The number of images, using the n parameter. DALL-E 3 returns one image per request (n=1), so additional images require separate requests, which can be sent in parallel, whereas DALL-E 2 can return up to ten images per request. This tutorial uses n=1 for simplicity’s sake.
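Since DALL-E 3 returns one image per request, several variations of the same prompt can be obtained by issuing requests in parallel. The sketch below shows one way to do this with a thread pool. `generate_in_parallel` is a hypothetical helper, `generate_one` is assumed to be any function that sends a single request and returns an image URL, and `stub_generate_one` is an offline stand-in used so the example runs without an API key.

```python
from concurrent.futures import ThreadPoolExecutor


def generate_in_parallel(prompt, count, generate_one, max_workers=4):
    """Call generate_one(prompt) `count` times concurrently and collect the results."""
    if count < 1:
        raise ValueError("count must be at least 1")
    workers = min(count, max_workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(generate_one, prompt) for _ in range(count)]
        # result() re-raises any exception raised inside a worker thread
        return [future.result() for future in futures]


def stub_generate_one(prompt):
    """Offline stand-in for a function that sends one request with n=1."""
    return f"https://example.com/image-for-{len(prompt)}-chars.png"


urls = generate_in_parallel("A cute brown puppy", 3, stub_generate_one)
print(urls)
```

Keeping `max_workers` modest helps stay within the rate limits of the account.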

The following helper function combines all the above information for better reproducibility. Before that, we need to install the OpenAI library and import the required modules.

The installation is performed using the Python package manager pip as follows:

pip install --upgrade openai

The --upgrade option upgrades the package to the Python SDK v1.2, which is required to successfully interact with DALL-E 3.
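A quick way to confirm the installation is to check the installed version from Python. This is a minimal sketch: the 1.2 threshold is taken from the statement above, and `is_recent_enough` is a hypothetical helper that compares only the major and minor version numbers.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Minimum (major, minor) version, as stated in this tutorial
MINIMUM_VERSION = (1, 2)


def is_recent_enough(version_string, minimum=MINIMUM_VERSION):
    """Return True if 'major.minor...' is at least the required minimum."""
    match = re.match(r"(\d+)\.(\d+)", version_string)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version_string!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


try:
    installed = version("openai")
    status = "OK" if is_recent_enough(installed) else "too old, please upgrade"
    print(f"openai {installed}: {status}")
except PackageNotFoundError:
    print("The openai package is not installed.")
```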

from openai import OpenAI
from IPython.display import Image, display

# Instantiate the OpenAI client (it reads OPENAI_API_KEY from the environment)
client = OpenAI()

The client is responsible for initiating the communication with the DALL-E 3 API.

The helper function is implemented below:

def get_image_from_DALL_E_3_API(user_prompt,
                                image_dimension="1024x1024",
                                image_quality="hd",
                                model="dall-e-3",
                                nb_final_image=1):
    # Send the prompt and the generation settings to the API
    response = client.images.generate(
        model=model,
        prompt=user_prompt,
        size=image_dimension,
        quality=image_quality,
        n=nb_final_image,
    )

    # Retrieve the URL of the generated image and render it in the notebook
    image_url = response.data[0].url
    display(Image(url=image_url))

Generate Realistic Images

With the above helper function, let’s experiment with image generation using simple and advanced prompts. The advanced prompts illustrate the industry use cases discussed earlier.

It is important to note that running the same code multiple times generates different results, and this is due to the “creative” part of the model.
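Because each run produces a different image, it can be useful to save a result to disk rather than only displaying it. The sketch below assumes the `response_format="b64_json"` option of `client.images.generate`, which returns the image as base64 data instead of a temporary URL. `save_generated_image` is a hypothetical helper, the `client` argument is assumed to behave like an `openai.OpenAI()` instance, and `StubClient` is an offline stand-in so the example runs without an API key.

```python
import base64
import tempfile
from pathlib import Path
from types import SimpleNamespace


def save_generated_image(client, prompt, output_path,
                         model="dall-e-3", size="1024x1024", quality="hd"):
    """Generate one image and write its bytes to output_path."""
    response = client.images.generate(
        model=model,
        prompt=prompt,
        size=size,
        quality=quality,
        n=1,
        response_format="b64_json",  # ask for base64 data instead of a URL
    )
    image_bytes = base64.b64decode(response.data[0].b64_json)
    output_path = Path(output_path)
    output_path.write_bytes(image_bytes)
    return output_path


class StubClient:
    """Offline stand-in that mimics the shape of client.images.generate()."""

    def __init__(self):
        self.images = SimpleNamespace(generate=self._generate)

    def _generate(self, **kwargs):
        payload = base64.b64encode(b"not-a-real-png").decode("ascii")
        return SimpleNamespace(data=[SimpleNamespace(b64_json=payload)])


# With a real key configured, StubClient() would be replaced by openai.OpenAI().
with tempfile.TemporaryDirectory() as tmp_dir:
    saved = save_generated_image(StubClient(), "A cute brown puppy",
                                 Path(tmp_dir) / "puppy.png")
    saved_bytes = saved.read_bytes()
print(f"{len(saved_bytes)} bytes written")
```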

Use simple prompt

Let’s imagine sending the following prompt to the model:

Create an image of a cute brown puppy sitting in a green meadow under a clear blue sky.

puppy_prompt = "Create an image of a cute brown puppy sitting in a green meadow under a clear blue sky."
get_image_from_DALL_E_3_API(puppy_prompt)

The following image is generated after a successful execution of the above code snippet.

Image generated from the simple prompt

This result is a faithful reflection of the underlying prompt.

Use more complex prompts

Now, let’s consider more complex prompts for education, advertising, and video game development.

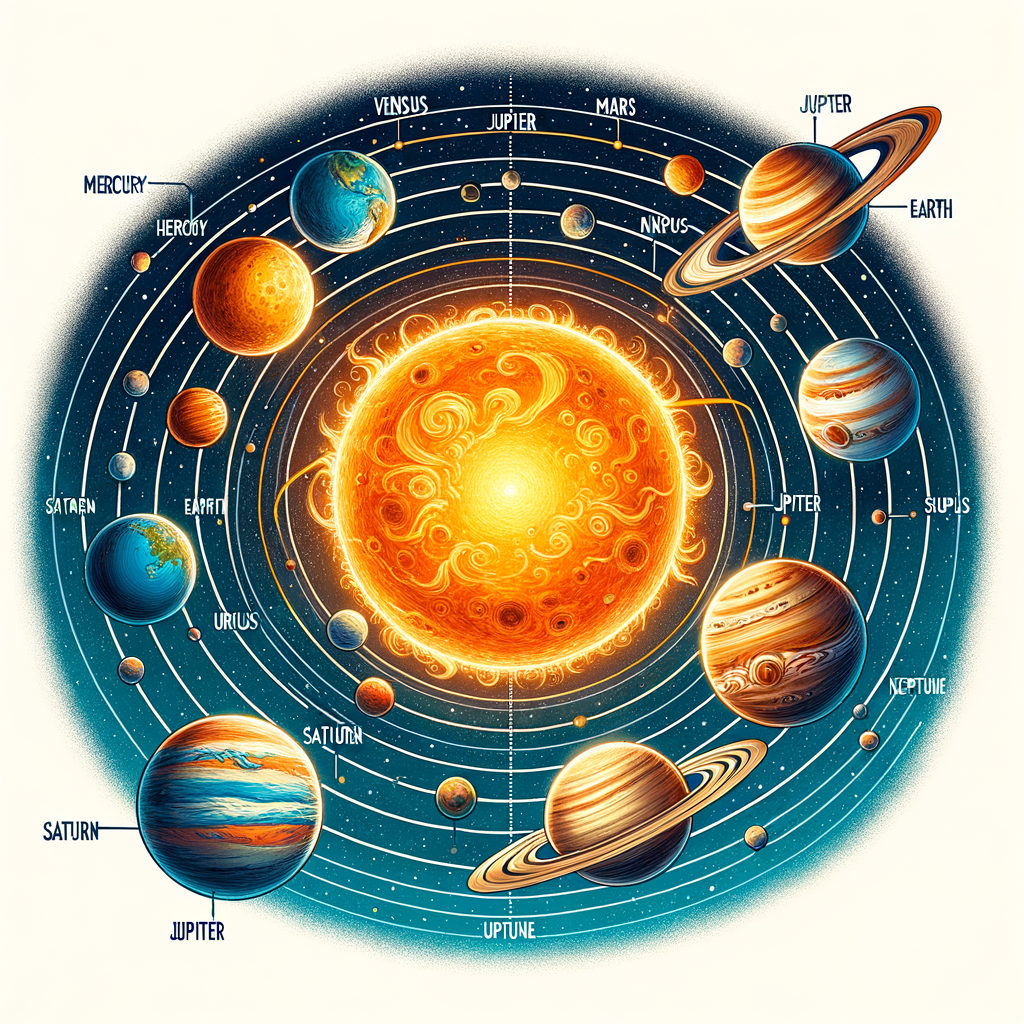

Educational Content

- Prompt:

Generate an illustration of the solar system with planets orbiting the sun, labeled in English, for a grade school science textbook.

- Code:

education_prompt = "Generate an illustration of the solar system with planets orbiting the sun, labeled in English, for a grade school science textbook"
get_image_from_DALL_E_3_API(education_prompt)

- Result:

Image generated for “Education” prompt

Advertising and Marketing

- Prompt:

Create an image of a family enjoying a picnic in a futuristic city park, with skyscrapers in the background and a clear blue sky, to be used in a campaign promoting eco-friendly urban living.

- Code:

advertising_prompt = "Create an image of a family enjoying a picnic in a futuristic city park, with skyscrapers in the background and a clear blue sky, to be used in a campaign promoting eco-friendly urban living."
get_image_from_DALL_E_3_API(advertising_prompt)

- Result:

Image generated for “Advertising” prompt

Game Development

For this final example, let’s ask the model to render text inside the image (the signpost text requested at the end of the prompt), and let’s see how it behaves.

- Prompt:

Design a concept art of a mystical forest at twilight, with glowing plants and a hidden entrance to an underground cave, for an adventure game setting. Include a signpost in the image with the text 'Beware: Mythical Creatures Ahead' in an ancient, mystical font style.

- Code:

game_dev_prompt = "Design a concept art of a mystical forest at twilight, with glowing plants and a hidden entrance to an underground cave, for an adventure game setting. Include a signpost in the image with the text 'Beware: Mythical Creatures Ahead' in an ancient, mystical font style"
get_image_from_DALL_E_3_API(game_dev_prompt)

- Result:

Image generated for “Game Development” prompt

We can see that the model successfully rendered the text “BEWARE MYTHICAL CREATURES AHEAD” in the generated image. That’s just fantastic.

Best Practices When Using DALL-E 3 API

To have a better experience with DALL-E 3 API, here are some best practices and guidelines:

- Prioritize detailed communication: Providing precise and detailed prompts to DALL-E 3 is key, just as clear and specific instructions help in human interactions. The more explicit the request, the more accurate the end result.

- Ethical considerations: It is important to be mindful of the ethical implications of the final creations by respecting copyright and privacy laws, and considering the potential impact of the generated images.

- Acknowledge and adapt to its limitations: Like any technology, DALL-E 3 has its own limitations. Familiarizing yourself with what the API can and cannot do will help tailor the prompts for more realistic expectations.

- Stay informed and evolve: Trying a variety of prompts can help discover the API’s range of possibilities and also one’s own creative boundaries. For painters, this is similar to exploring different brushes and colors.
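One way to put the first practice into action is to assemble prompts from explicit fields, so that details such as setting, style, and purpose are not forgotten. The `build_prompt` helper below is a hypothetical sketch; its field names and sentence template are assumptions modeled on the prompts used earlier in this article, not a format required by the API.

```python
def build_prompt(subject, setting="", style="", purpose="", text_in_image=""):
    """Assemble a detailed prompt from optional, explicitly named details."""
    parts = [f"Create an image of {subject}"]
    if setting:
        parts.append(f"set in {setting}")
    if style:
        parts.append(f"in the style of {style}")
    if purpose:
        parts.append(f"to be used for {purpose}")
    prompt = ", ".join(parts) + "."
    if text_in_image:
        prompt += f" Include the text '{text_in_image}' in the image."
    return prompt


detailed_prompt = build_prompt(
    subject="a mystical forest at twilight with glowing plants",
    style="adventure game concept art",
    text_in_image="Beware: Mythical Creatures Ahead",
)
print(detailed_prompt)
```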

Conclusion

In summary, this article has presented an exhaustive exploration of the DALL-E 3 API, detailing its setup and various applications. We began with an overview of the API, outlining its groundbreaking capabilities and setting the stage for a deeper dive.

We then delved into the specific features of DALL-E 3, providing clarity on its advanced image generation functionalities. This was followed by an insightful look into various industry use cases, demonstrating the API's versatility and wide-ranging impact.

The article also guided you through the initial steps of using the DALL-E 3 API, from obtaining access to setting it up for different applications, highlighting the importance of understanding and effectively using the API's features.

Additionally, we discussed best practices for using the DALL-E 3 API, emphasizing ethical considerations and strategies for maximizing its potential. Practical tips, coupled with examples of the API's capabilities, were shared to assist users in optimizing their experience.

Are you ready to take your next step in the world of AI and creative technology? Enhance your skills and unlock your creative potential with the advanced tools used by AI innovators. Embark on your journey with our How to Use Midjourney: A Comprehensive Guide to AI-Generated Artwork Creation tutorial today. Or, learn how to work with other popular APIs, such as our Working with the OpenAI API course.

A multi-talented data scientist who enjoys sharing his knowledge and giving back to others, Zoumana is a YouTube content creator and a top tech writer on Medium. He finds joy in speaking, coding, and teaching. Zoumana holds two master’s degrees. The first one in computer science with a focus in Machine Learning from Paris, France, and the second one in Data Science from Texas Tech University in the US. His career path started as a Software Developer at Groupe OPEN in France, before moving on to IBM as a Machine Learning Consultant, where he developed end-to-end AI solutions for insurance companies. Zoumana joined Axionable, the first Sustainable AI startup based in Paris and Montreal. There, he served as a Data Scientist and implemented AI products, mostly NLP use cases, for clients from France, Montreal, Singapore, and Switzerland. Additionally, 5% of his time was dedicated to Research and Development. As of now, he is working as a Senior Data Scientist at IFC - the World Bank Group.