
Prompt caching stores the responses to frequently used prompts. This lets language models skip redundant processing and return pre-generated responses instead. It saves costs and reduces latency, making AI-powered interactions faster and more efficient.

In this blog, we'll explore what prompt caching is, how it works, and its benefits and challenges. We'll also look at real-world applications and share best practices for effective caching strategies.

What is prompt caching?

In this section, we'll walk through how prompt caching works, along with the key benefits and considerations that make this technique so useful.


The basic mechanism of prompt caching

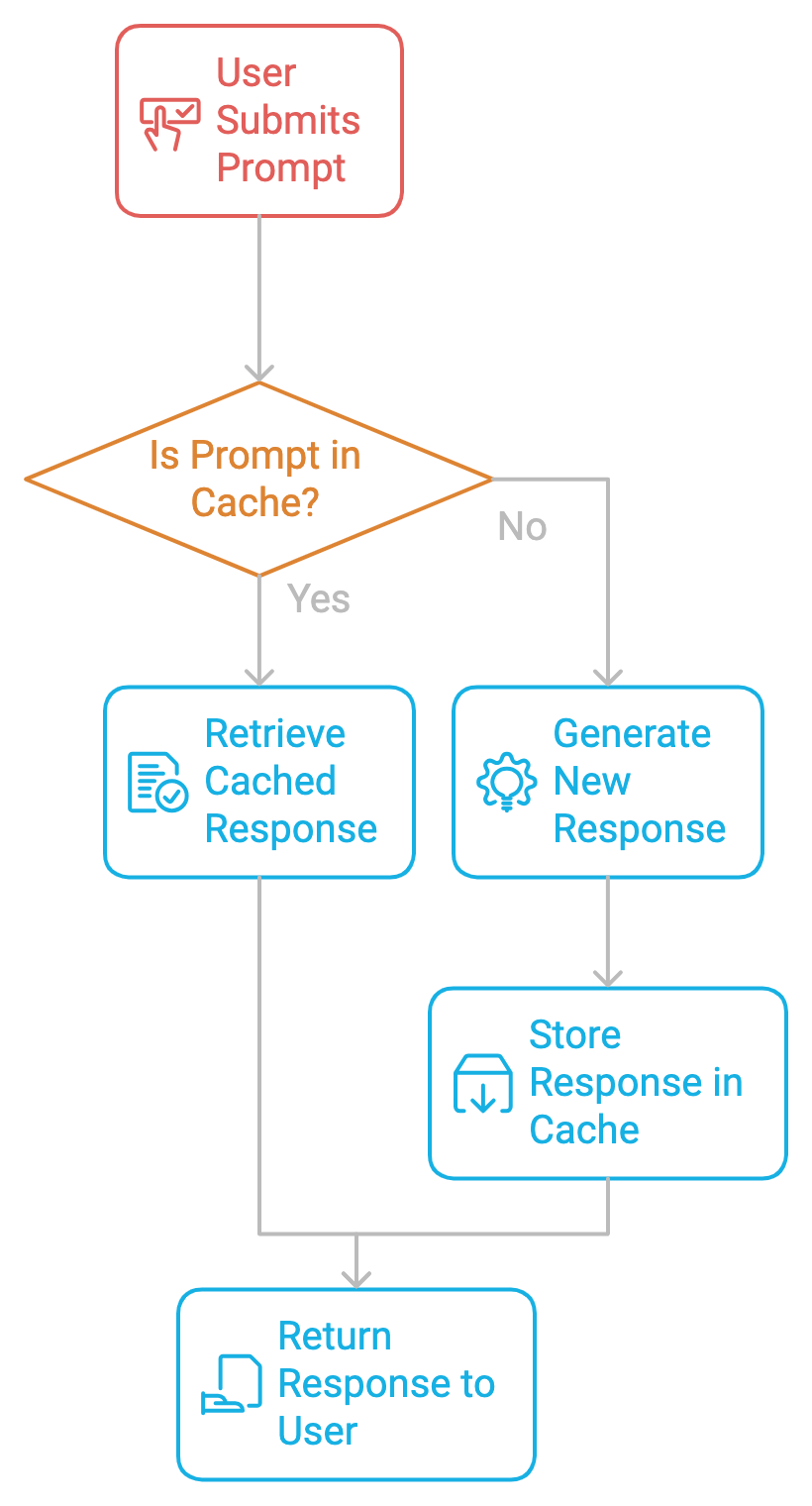

At its core, prompt caching works by storing prompts and their corresponding responses in a cache. When the same or a similar query is sent again, the system retrieves the cached response instead of generating a new one. This avoids repeated computation, speeds up response times, and reduces costs.
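As an illustration, here is a minimal sketch of that store-and-look-up loop. It assumes the simplest possible policy: an exact-match cache keyed on the prompt string and held in an in-memory dictionary. PromptCache and generate_response are hypothetical names, and generate_response only stands in for a real model call.

```python
from typing import Callable, Dict, List


class PromptCache:
    """Exact-match prompt cache: maps a prompt string to its stored response."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def get_or_generate(self, prompt: str, generate: Callable[[str], str]) -> str:
        # Cache hit: return the stored response without calling the model.
        if prompt in self._store:
            return self._store[prompt]
        # Cache miss: generate once, then store the result for next time.
        response = generate(prompt)
        self._store[prompt] = response
        return response


calls: List[str] = []


def generate_response(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; records each invocation."""
    calls.append(prompt)
    return f"answer to: {prompt}"


cache = PromptCache()
first = cache.get_or_generate("What is prompt caching?", generate_response)
second = cache.get_or_generate("What is prompt caching?", generate_response)
print(first == second, len(calls))  # True 1
```

The second request is served from the dictionary, so the stand-in model is called only once.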

Benefits of prompt caching

Prompt caching has several benefits:

- Cost reduction: LLM APIs typically charge by token usage, so every time a prompt is processed, both the input tokens (the prompt itself) and the output tokens (the response) count toward total usage. By reusing cached responses, developers avoid paying for redundant token generation, which ultimately lowers API costs.

- Lower latency: Serving a cached response skips model inference, which speeds up response times, improves system performance, and frees up resources.

- Improved user experience: In time-sensitive applications, such as real-time customer support bots or interactive learning platforms, every millisecond of delay can affect the user experience. Because cached responses are returned without being regenerated, the system can handle more traffic without sacrificing performance, which contributes to a more scalable and reliable service.
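To make the cost argument concrete, here is a back-of-the-envelope calculation. The request volume, token counts, per-token prices, and hit rate are hypothetical placeholders, not any provider's real pricing. The sketch also assumes that a cache hit costs no tokens at all and that every miss pays for the full input and output.

```python
def api_cost(num_requests: int, hit_rate: float, input_tokens: int, output_tokens: int,
             price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Estimated API cost when cache hits skip the model call entirely.

    Assumes every cache miss is billed for input + output tokens and every hit costs 0.
    """
    misses = num_requests * (1 - hit_rate)
    cost_per_call = (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k
    return misses * cost_per_call


# Hypothetical workload: 10,000 requests of 500 input and 200 output tokens each.
no_cache = api_cost(10_000, 0.0, 500, 200, price_in_per_1k=0.001, price_out_per_1k=0.002)
with_cache = api_cost(10_000, 0.4, 500, 200, price_in_per_1k=0.001, price_out_per_1k=0.002)
print(f"without cache: ${no_cache:.2f}, with a 40% hit rate: ${with_cache:.2f}")
```

Under these assumptions the cost scales linearly with the miss rate, so a 40% hit rate cuts the bill by 40%.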

Caveats before implementing prompt caching

Before diving into implementing prompt caching, there are a few considerations to keep in mind.

Cache time-to-live (TTL)

To keep data fresh, each cached response should have a time-to-live (TTL). The TTL determines how long a cached response is considered valid. Once it expires, the cache entry is evicted or refreshed, and the corresponding prompt is recomputed the next time it is requested.

This mechanism keeps the cache from serving stale information. For static or rarely updated content, such as legal documents or product manuals, a longer TTL can reduce recomputation without much risk of stale data. Tuning TTL values carefully is therefore essential to balance data freshness against computational efficiency.
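A minimal sketch of TTL-based expiry, assuming a single TTL for every entry and lazy eviction (an expired entry is only removed when it is next looked up). TTLPromptCache and its injectable clock parameter are hypothetical; the clock is passed in so expiry can be demonstrated without actually waiting.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLPromptCache:
    """Prompt cache whose entries expire ttl_seconds after they are stored."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[str, float]] = {}  # prompt -> (response, expires_at)

    def put(self, prompt: str, response: str) -> None:
        self._store[prompt] = (response, self.clock() + self.ttl_seconds)

    def get(self, prompt: str) -> Optional[str]:
        entry = self._store.get(prompt)
        if entry is None:
            return None
        response, expires_at = entry
        if self.clock() >= expires_at:
            # Expired: evict so the prompt is recomputed on the next request.
            del self._store[prompt]
            return None
        return response


# Simulated clock: a list lets the lambda see updates to the current time.
now = [0.0]
cache = TTLPromptCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("Summarize the manual", "summary v1")
now[0] = 30.0
print(cache.get("Summarize the manual"))  # summary v1
now[0] = 61.0
print(cache.get("Summarize the manual"))  # None
```

A rarely changing document could simply use a larger ttl_seconds than fast-moving content.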

Prompt similarity

Sometimes two prompts are similar but not identical. For caching to be effective, the system must determine how close a new prompt is to one already in the cache. This typically relies on techniques such as fuzzy matching or semantic search; with semantic search, the system represents prompts as vector embeddings and compares them, for example with cosine similarity.

By caching responses to similar prompts, systems can reduce recomputation while keeping responses accurate. However, setting the similarity threshold too loosely can return answers to the wrong question, while setting it too strictly misses caching opportunities.
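The sketch below shows how a similarity threshold decides between a hit and a miss. It is a simplified illustration under loud assumptions: toy_embed is a hypothetical bag-of-words stand-in for a real embedding model, and the cache does a linear scan instead of using a vector index. Only the cosine-similarity-plus-threshold logic is the point.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Returns a cached response if a stored prompt is similar enough to the query."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((toy_embed(prompt), response))

    def get(self, prompt: str) -> Optional[str]:
        query = toy_embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine_similarity(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        # Only treat the best match as a hit if it clears the threshold.
        return best_response if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.8)
cache.put("What is prompt caching?", "A cached answer")
print(cache.get("what is prompt caching, exactly?"))  # similarity ~0.89, a hit
print(cache.get("How do I bake bread?"))              # no overlap, None
```

Raising the threshold to 0.95 would turn the first lookup into a miss, which is the strict-versus-loose trade-off described above.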

Cache eviction strategies

Strategies such as Least Recently Used (LRU) help manage the cache's size. When the cache reaches capacity, LRU evicts the entry that was accessed least recently. This works well when certain queries are popular and should stay in the cache, while less common queries can be evicted to make room for newer requests.
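A minimal LRU prompt cache can be built on collections.OrderedDict, which keeps keys in insertion order and can move a key to the end on access. LRUPromptCache is a hypothetical name and the capacity counts entries, not bytes.

```python
from collections import OrderedDict
from typing import Optional


class LRUPromptCache:
    """Prompt cache that evicts the least recently used entry when full."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._store = OrderedDict()  # prompt -> response, oldest access first

    def get(self, prompt: str) -> Optional[str]:
        if prompt not in self._store:
            return None
        # Mark as most recently used by moving it to the end.
        self._store.move_to_end(prompt)
        return self._store[prompt]

    def put(self, prompt: str, response: str) -> None:
        if prompt in self._store:
            self._store.move_to_end(prompt)
        self._store[prompt] = response
        if len(self._store) > self.capacity:
            # The first item is the least recently used one.
            self._store.popitem(last=False)


cache = LRUPromptCache(capacity=2)
cache.put("popular question", "A")
cache.put("rare question", "B")
cache.get("popular question")    # touch: now most recently used
cache.put("new question", "C")   # over capacity: evicts "rare question"
print(cache.get("rare question"))     # None
print(cache.get("popular question"))  # A
```

For memoizing a plain Python function, the standard library's functools.lru_cache decorator applies the same policy without a custom class.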

Implementing prompt caching

Implementing prompt caching is a two-step process.

Step 1: Identify repeated prompts

The first step in implementing prompt caching is to identify which prompts recur frequently in the system. Whether we're building a chatbot, a coding assistant, or a document processor, we need to monitor which prompts are repeated. Once identified, these prompts can be cached to avoid redundant computation.
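One simple way to find caching candidates is to count prompts in a request log. This sketch assumes the log is just a list of prompt strings and that lowercasing and collapsing whitespace is an acceptable normalization; normalize, frequent_prompts, and min_count are hypothetical names.

```python
from collections import Counter
from typing import Iterable, List


def normalize(prompt: str) -> str:
    """Collapse case and whitespace so trivial variants count as one prompt."""
    return " ".join(prompt.lower().split())


def frequent_prompts(prompt_log: Iterable[str], min_count: int = 2) -> List[str]:
    """Return normalized prompts seen at least min_count times, most frequent first."""
    counts = Counter(normalize(p) for p in prompt_log)
    return [prompt for prompt, n in counts.most_common() if n >= min_count]


log = [
    "What are your opening hours?",
    "what are your  opening hours?",
    "How do I reset my password?",
    "What are your opening hours?",
    "How do I reset my password?",
    "Tell me a joke",
]
print(frequent_prompts(log))
# ['what are your opening hours?', 'how do i reset my password?']
```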

Step 2: Store the prompt

Once a prompt has been identified, its response is stored in the cache along with metadata such as the time-to-live (TTL) and the cache hit and miss rates. Whenever a user submits the same query again, the system retrieves the cached response and skips the expensive generation step.
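Here is a sketch of storing responses together with metadata and tracking hits and misses. The field names (ttl_seconds, created_at, hits) and the default TTL are hypothetical choices; the TTL is recorded as metadata only, and this sketch does not enforce expiry.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class CacheEntry:
    response: str
    ttl_seconds: float
    created_at: float = field(default_factory=time.time)
    hits: int = 0  # how many times this entry has been served from the cache


class InstrumentedPromptCache:
    """Stores responses with metadata and tracks cache-wide hits and misses."""

    def __init__(self, default_ttl: float = 3600.0) -> None:
        self.default_ttl = default_ttl
        self.entries: Dict[str, CacheEntry] = {}
        self.hits = 0
        self.misses = 0

    def get_or_generate(self, prompt: str, generate: Callable[[str], str]) -> str:
        entry = self.entries.get(prompt)
        if entry is not None:
            self.hits += 1
            entry.hits += 1
            return entry.response
        self.misses += 1
        response = generate(prompt)  # the expensive step a hit avoids
        self.entries[prompt] = CacheEntry(response=response, ttl_seconds=self.default_ttl)
        return response

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = InstrumentedPromptCache()
for _ in range(3):
    cache.get_or_generate("hello", str.upper)  # str.upper stands in for a model call
print(cache.hits, cache.misses, round(cache.hit_rate(), 2))  # 2 1 0.67
```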

Code implementation: caching vs. no caching

With the theory covered, let's dive into a hands-on example that uses Ollama to explore the impact of caching versus no caching in a local environment. Here, we use text from a deep learning book hosted on the web and local models to summarize the book's first few pages. We'll experiment with several LLMs, including Gemma2, Llama2, and Llama3, to compare their performance.
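The heart of such a comparison is timing the same request with and without a cache. The sketch below reduces it to the standard library. It swaps in functools.lru_cache as the cache and uses a hypothetical slow_summarize function that sleeps instead of calling a model, so the timings reflect the simulated delay, not real LLM latency; in a real run that function would call the local model.

```python
import time
from functools import lru_cache


def slow_summarize(text: str) -> str:
    """Hypothetical stand-in for a local LLM call; sleeps to simulate inference."""
    time.sleep(0.2)
    return text[:40] + "..."


@lru_cache(maxsize=128)
def cached_summarize(text: str) -> str:
    """Same work as slow_summarize, memoized by its argument."""
    return slow_summarize(text)


def timed(fn, arg):
    """Return fn(arg) together with the wall-clock seconds it took."""
    start = time.perf_counter()
    result = fn(arg)
    return result, time.perf_counter() - start


page = "Deep learning is a subset of machine learning built on neural networks."
_, t_uncached = timed(slow_summarize, page)
_, t_first = timed(cached_summarize, page)   # cache miss: pays the full simulated latency
_, t_repeat = timed(cached_summarize, page)  # cache hit: returns the stored summary
print(f"no cache: {t_uncached:.3f}s | first cached call: {t_first:.3f}s | repeat: {t_repeat:.3f}s")
print(cached_summarize.cache_info())
```

Note that the first cached call is no faster than the uncached one; the savings only appear when the same input repeats.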

Prerequisites

For this hands-on example, we'll use BeautifulSoup, a Python package that parses HTML and XML documents, including those with malformed markup. To install BeautifulSoup, run the following:

!pip install beautifulsoup4

Another tool we'll use is Ollama, which simplifies installing and running large language models on local machines. The code below also imports Ollama's Python client, which is installed separately with !pip install ollama.

To get started, download and install Ollama on your desktop. You can change the model name to suit your needs; browse the model library on the official Ollama website to find the models Ollama supports. Then run the following command in the terminal:

ollama run llama3.1

We're all set. Let's get started!

Step 1: Import the required libraries

We start with the following imports:

- time: to measure inference time for the cached and non-cached code
- requests: to make HTTP requests and fetch data from web pages
- BeautifulSoup: to parse and clean HTML content
- Ollama: to run LLMs locally

import time
import requests
from bs4 import BeautifulSoup
import ollama

Step 2: Fetch and clean content

In the code below, we define a function, fetch_article_content, that fetches and cleans the text content of a given URL. It retrieves the web page using the requests library, retrying up to three times on failures such as network errors or server problems.
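The retry behavior can be sketched as a small generic helper. This is not the author's fetch_article_content. with_retries, max_attempts, and delay_seconds are hypothetical names, and the sketch assumes a fixed delay between attempts and treats any exception as retryable.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(fn: Callable[[], T], max_attempts: int = 3, delay_seconds: float = 1.0) -> T:
    """Call fn up to max_attempts times; re-raise the last error if every attempt fails."""
    last_error: Exception = RuntimeError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except Exception as error:  # broad on purpose in this sketch; narrow it in real code
            last_error = error
            if attempt < max_attempts:
                time.sleep(delay_seconds)  # fixed pause before the next attempt
    raise last_error
```

With requests, fn could be a small function that calls requests.get(url, timeout=10), calls raise_for_status() on the response so HTTP errors become exceptions, and returns response.text; the except clause would then typically be narrowed to requests.RequestException.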

The function uses BeautifulSoup to parse the HTML content and removes the tags


I'm a Google Developer Expert in ML (Gen AI), a three-time Kaggle Expert, and a Women Techmakers Ambassador with more than three years of experience in the tech industry. I co-founded a health-tech startup in 2020 and am currently pursuing a master's in computer science at Georgia Tech, specializing in machine learning.