
If you have ever used ChatGPT with voice mode, you already know how impressive it feels. Talking to an AI that listens, understands, and responds almost like a real person can be surprisingly natural. It feels conversational, intelligent, and responsive. But there is still a gap: small delays, limited interruption handling, and the sense that you are still speaking to a system rather than a person.

Now imagine something better: a real-time voice assistant that responds instantly, lets you interrupt naturally, adapts mid-sentence, and feels far closer to a real human conversation. No noticeable latency. No awkward pauses. No cloud dependency. Just a smooth, local, real-time dialogue that feels alive.

This is exactly where PersonaPlex comes in.

PersonaPlex is a real-time, local voice interaction system developed by NVIDIA. It is designed to push voice-based AI beyond simple speech-to-text and text-to-speech pipelines. Instead, it enables low-latency, streaming conversations where the assistant can speak, listen, and adapt continuously, much like a real person would in a face-to-face conversation.

In this tutorial, I will walk you through PersonaPlex step by step. We will start by understanding what PersonaPlex is and why it feels fundamentally different from typical voice assistants. Then, we will set up the local environment, install PersonaPlex from source, and launch the PersonaPlex WebUI server. After that, we will interact with the system through the web interface and, finally, run PersonaPlex offline from a Python notebook to generate a spoken response from a recorded audio file.

I recommend checking out the Spoken Language Processing in Python course to learn about some of the fundamentals behind PersonaPlex.

What is PersonaPlex?

PersonaPlex is a new conversational AI system that makes voice interactions feel genuinely natural while still letting you fully customize the voice and persona.

Instead of sounding like a typical assistant with pauses and rigid turn-taking, it enables smooth, real-time conversations where interruptions, timing, and tone feel human.

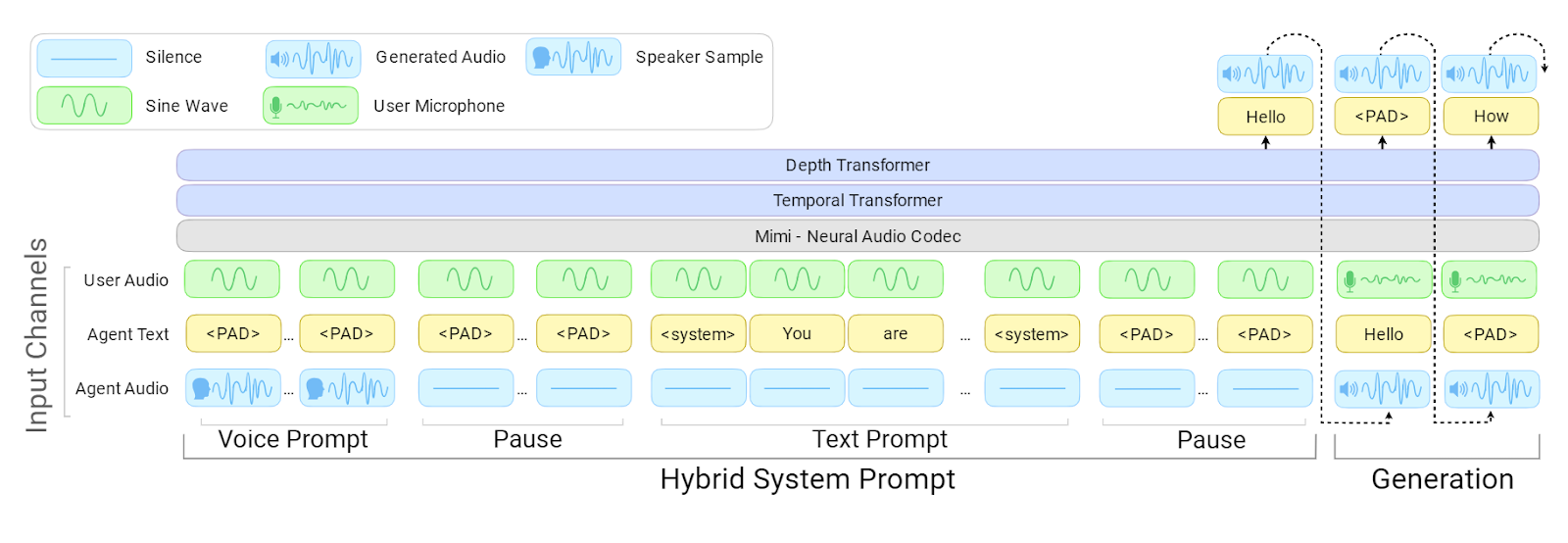

At its core, PersonaPlex uses a full-duplex architecture, meaning it can listen and speak at the same time.

Rather than chaining together separate models for speech recognition, language understanding, and speech generation, it relies on a single unified model that updates continuously as the user speaks.
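To make "listen and speak at the same time" more concrete, here is a toy frame loop in Python. It is not PersonaPlex or Moshi code: each string stands in for a short audio frame, and `respond` is a hypothetical stand-in for the model. What it illustrates is the structure described above: on every frame the loop consumes one user frame and emits one agent frame, so the agent can backchannel while you talk and drop the rest of a reply the moment you interrupt.

```python
from typing import Callable, List


def run_full_duplex(user_frames: List[str],
                    respond: Callable[[List[str]], List[str]]) -> List[str]:
    """Toy full-duplex loop: exactly one agent frame per user frame.

    Each string stands in for one short audio frame ("" means silence).
    `respond` is a hypothetical stand-in for the model's reply generator.
    """
    agent_frames: List[str] = []
    planned: List[str] = []  # words the agent still intends to say
    heard: List[str] = []    # user words since the agent last started a reply
    run = 0                  # consecutive frames of user speech
    for user_word in user_frames:
        if user_word:
            heard.append(user_word)
            planned.clear()  # barge-in: drop the rest of any reply immediately
            run += 1
            # Listening and speaking in the same frame: an occasional backchannel.
            agent_frames.append("mm-hm" if run % 3 == 0 else "")
            continue
        run = 0
        if heard:  # the user just paused: start replying on this very frame
            planned = list(respond(heard))
            heard = []
        agent_frames.append(planned.pop(0) if planned else "")
    return agent_frames


def reply(heard: List[str]) -> List[str]:
    # Hypothetical fixed reply, just to drive the loop.
    return ["ok", "let", "me", "explain"]


user = ["so", "I", "was", "thinking", "", "", "wait", "", "", "", "", ""]
print(run_full_duplex(user, reply))
# ['', '', 'mm-hm', '', 'ok', 'let', '', 'ok', 'let', 'me', 'explain', '']
```

In the printed result, frame 2 is a backchannel spoken over the user, and the "wait" at frame 6 cuts the first reply short after "ok let". A half-duplex pipeline would instead stay silent until an end-of-turn detector fired and would then play its whole reply.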

Voice prompts define how the assistant sounds, while text prompts define who it is and how it should behave. This combination allows PersonaPlex to maintain a consistent persona while responding instantly and naturally.

PersonaPlex Architecture | Source: NVIDIA PersonaPlex

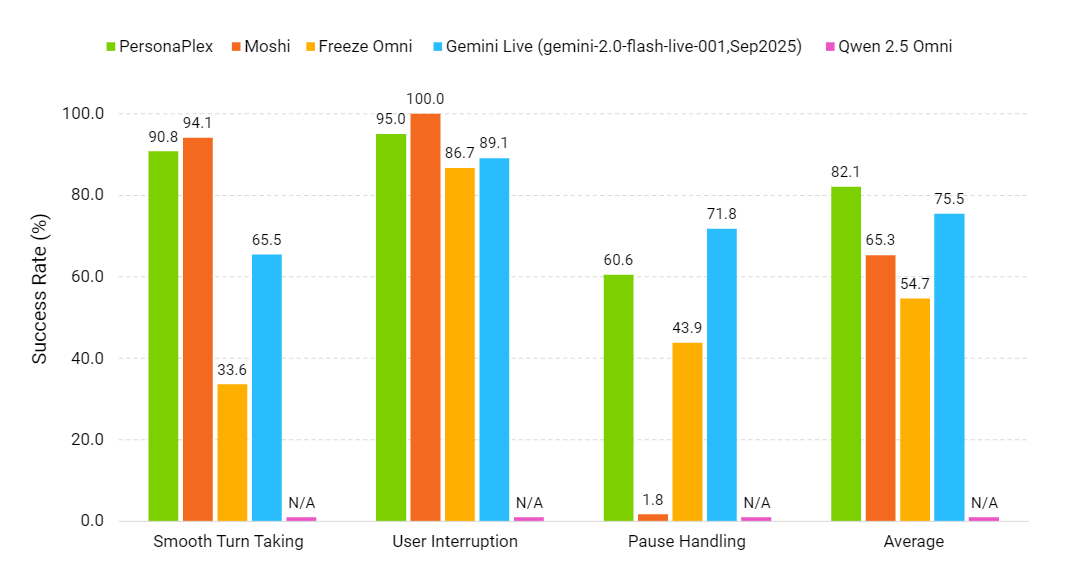

PersonaPlex is evaluated using FullDuplexBench and an extended customer service benchmark called ServiceDuplexBench.

According to the results NVIDIA reports, it outperforms other open-source and commercial systems on conversational dynamics, response latency, interruption handling, and task adherence in both assistant and customer service scenarios.

Conversation Dynamics (Higher is better) | Source: NVIDIA PersonaPlex

In the video demo below, you can clearly see the person having a fluid conversation with the model, exchanging banter and jokes in real time.

Setting Up the Environment

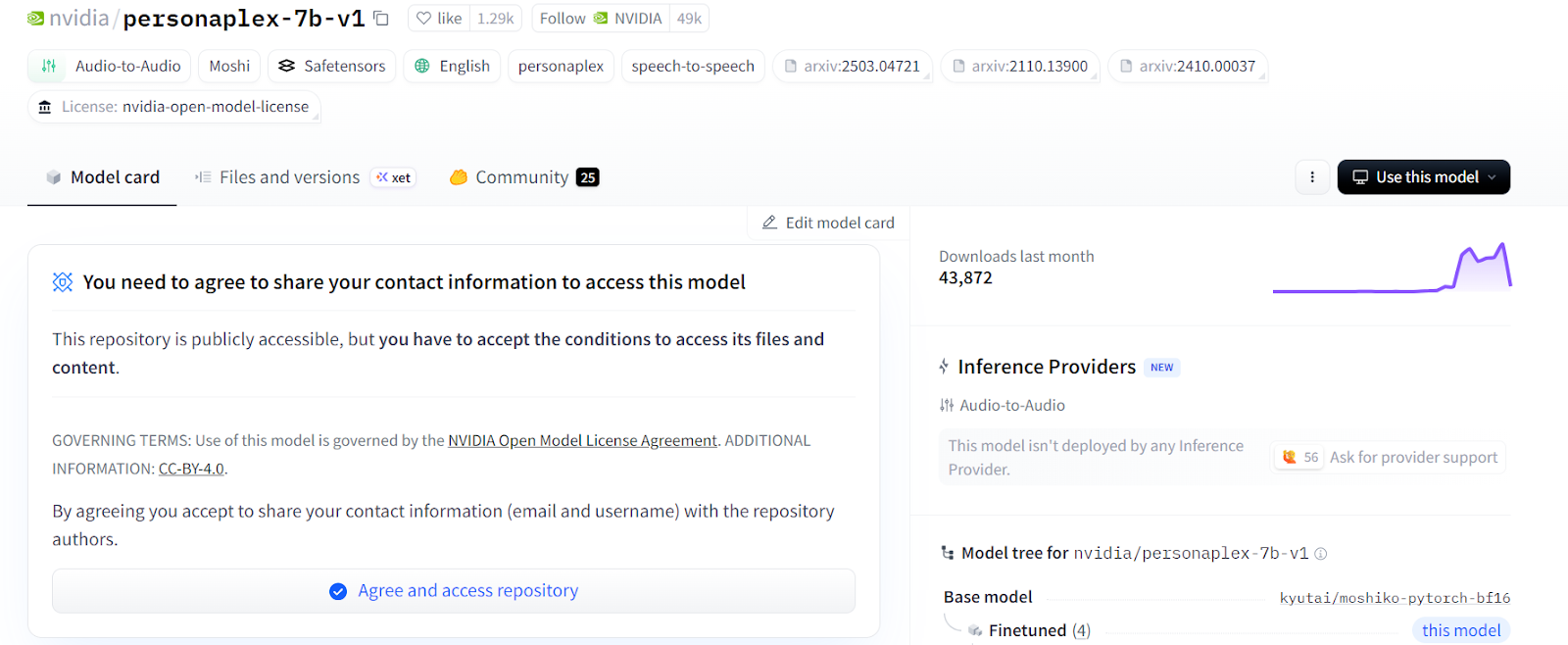

Before we start, visit the Hugging Face model page for nvidia/personaplex-7b-v1 and accept the model usage conditions.

PersonaPlex is a gated model, so you will need a Hugging Face access token. Generate the token in your Hugging Face account settings and keep it handy, as we will add it as an environment variable later to allow model access.

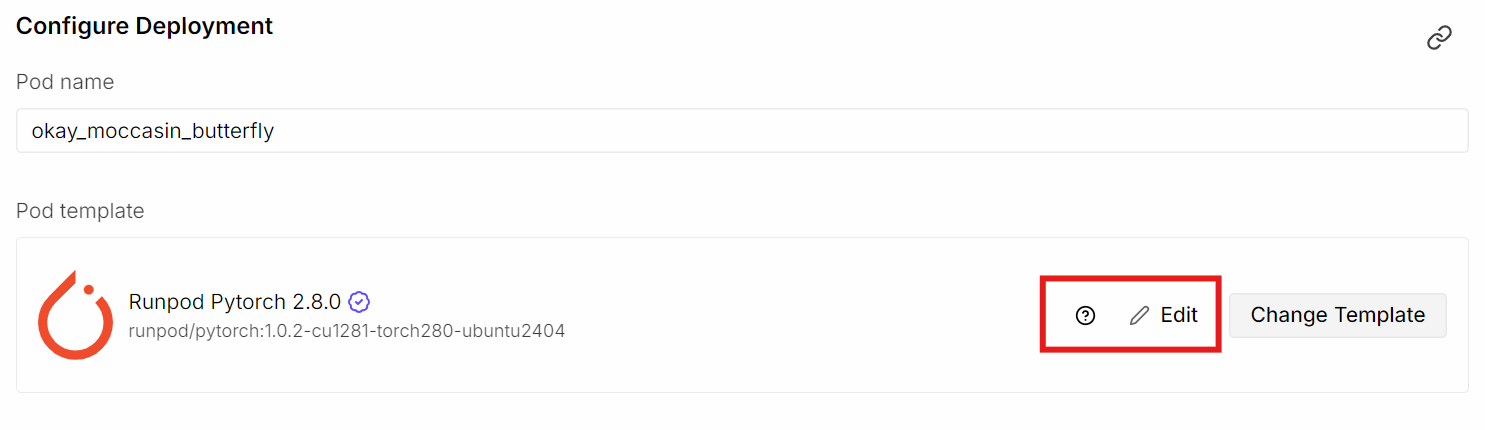

Next, go to RunPod and launch a new A40 GPU pod. Select the latest PyTorch image, then click Edit to customize the environment.

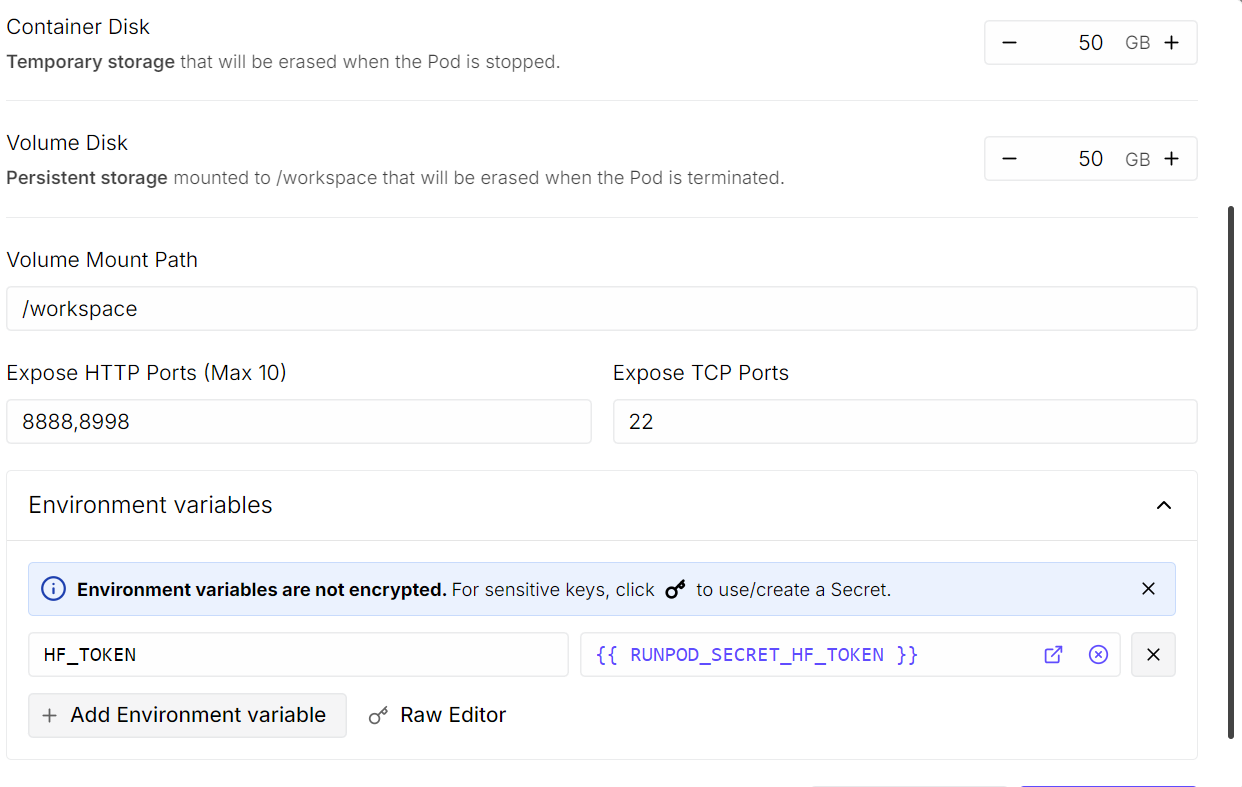

Increase the container disk size to 50 GB, since the model itself is around 20 GB, and additional dependencies will also be downloaded. In the exposed HTTP ports section, add port 8998. Under environment variables, add HF_TOKEN and paste your Hugging Face API token.

Once everything is configured, save the overrides and deploy the pod.

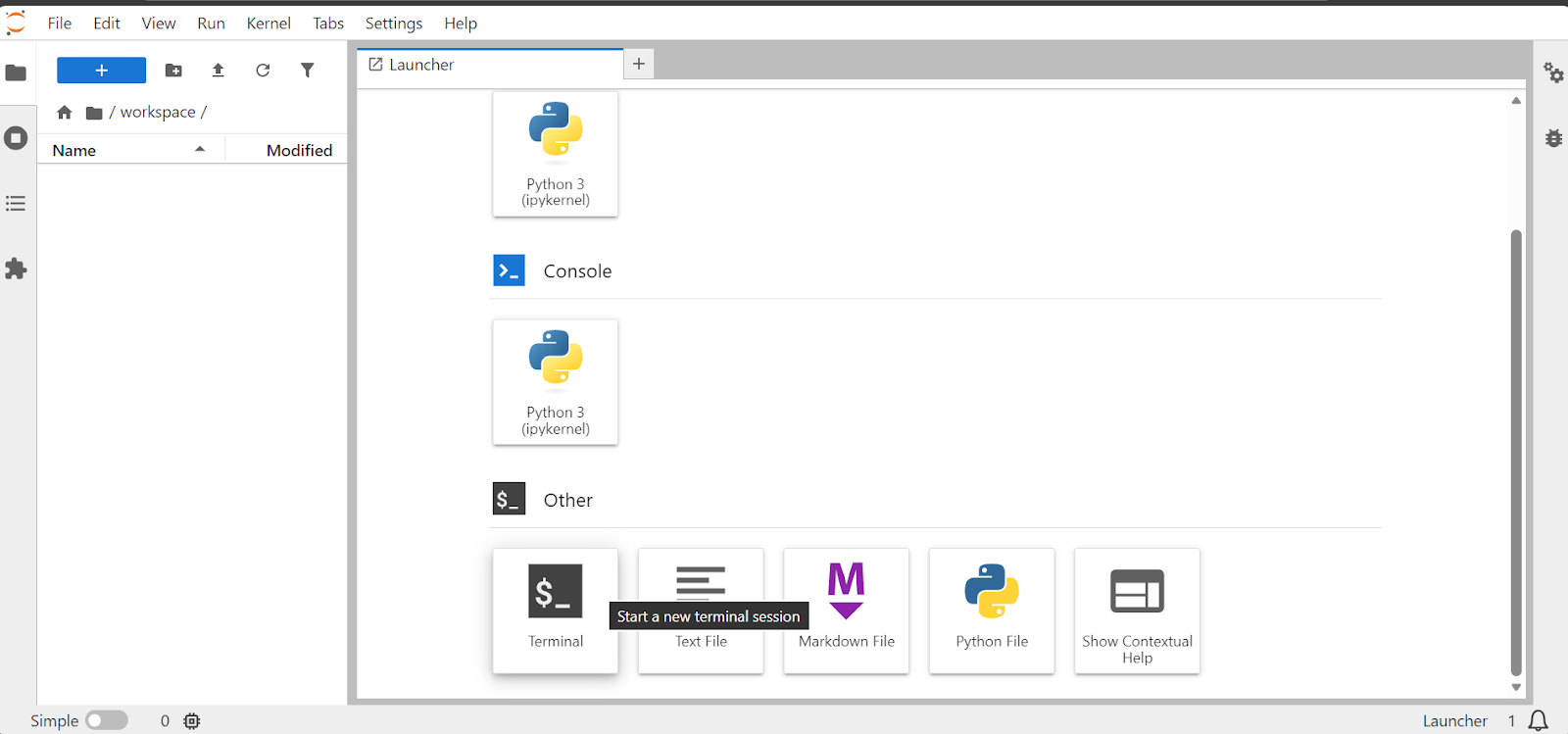

After the pod starts, you will see a link to the JupyterLab instance. Open it, then launch a terminal. You can access the machine via SSH or the web terminal, but using the Jupyter terminal is the simplest option.
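Before installing anything, it can save time to confirm that the HF_TOKEN variable you set in the pod settings is actually visible inside the container, since a missing token would otherwise only surface later as a failed model download. The snippet below is a small sketch of that check using only the standard library; `require_hf_token` and `mask` are my own helper names, not part of PersonaPlex.

```python
import os


def require_hf_token(var: str = "HF_TOKEN") -> str:
    """Read the Hugging Face token from the environment, failing loudly if absent."""
    token = os.environ.get(var, "").strip()
    if not token:
        raise RuntimeError(
            f"{var} is not set in this pod. Add it under the pod's "
            "environment variables in the RunPod settings."
        )
    return token


def mask(token: str, keep: int = 4) -> str:
    """Hide all but the last few characters so the token is safe to print."""
    return "*" * max(len(token) - keep, 0) + token[-keep:]


if __name__ == "__main__":
    try:
        print("HF_TOKEN found:", mask(require_hf_token()))
    except RuntimeError as err:
        print(err)
```

This only proves the variable exists; it cannot tell whether you accepted the model's usage conditions on Hugging Face.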

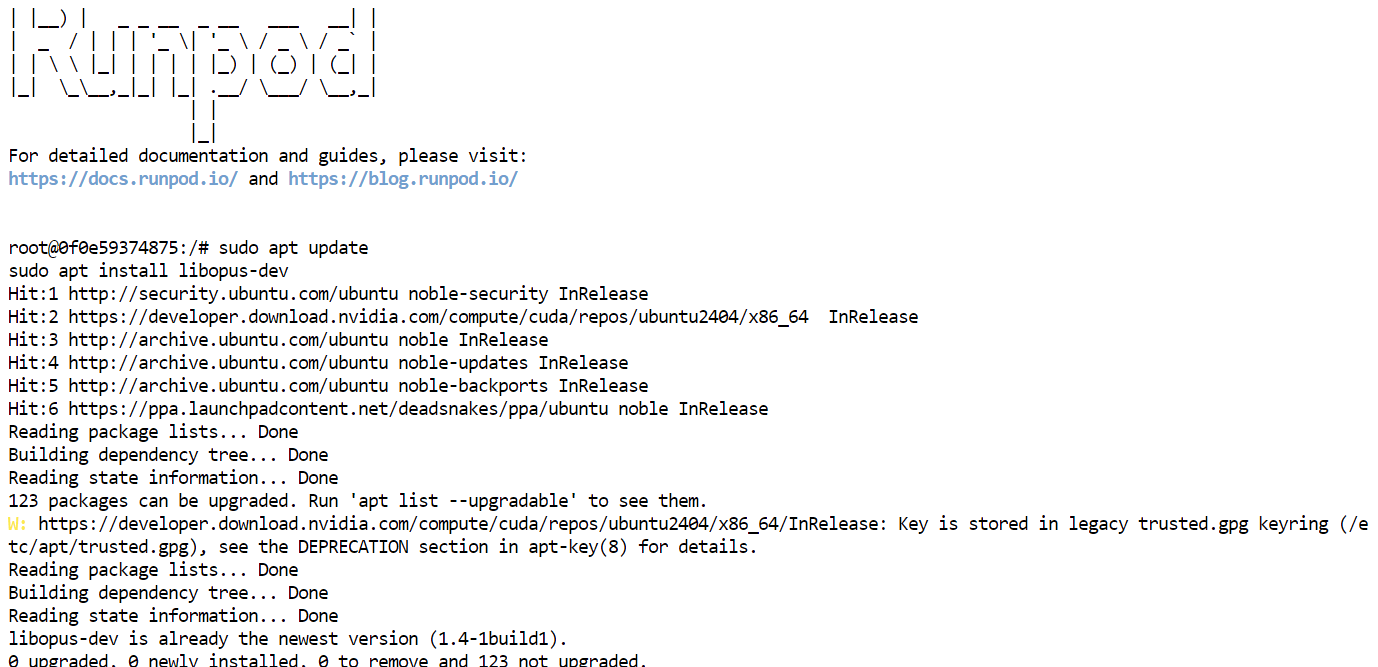

In the terminal, install the Opus audio codec development library, which is required for audio processing:

```bash
sudo apt update
sudo apt install libopus-dev
```

Installing PersonaPlex From Source

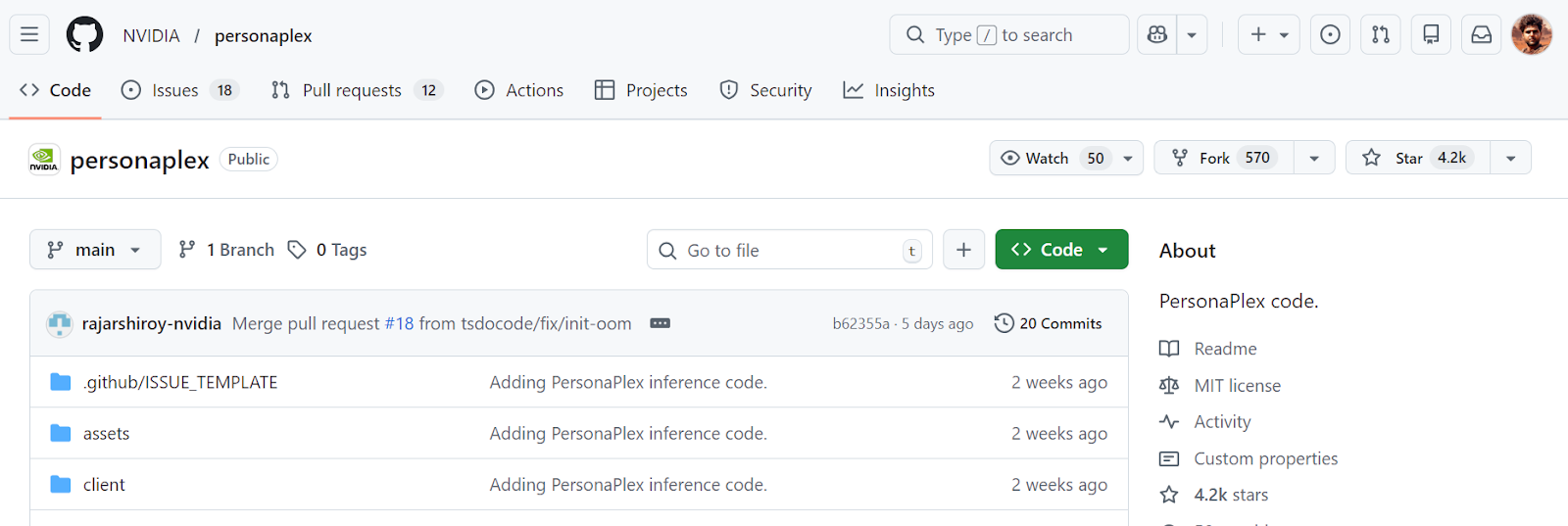

The PersonaPlex codebase is open-sourced by NVIDIA and available on GitHub in the NVIDIA/personaplex repository, making it easy to explore, customize, and run locally.

Installing from source gives you full control over the setup and ensures compatibility with the latest updates in the repository.

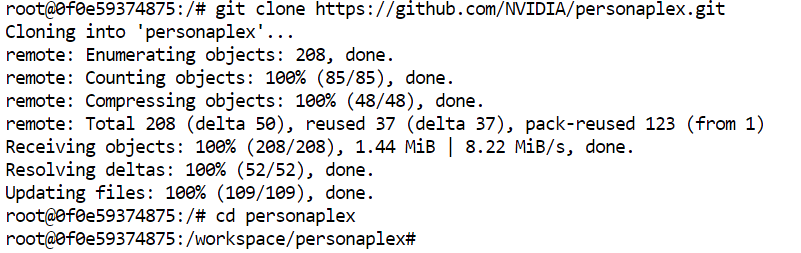

To get started, clone the PersonaPlex repository:

```bash
git clone https://github.com/NVIDIA/personaplex.git
cd personaplex
```

Then install Moshi, the core full-duplex speech model that PersonaPlex is built on.

Moshi is responsible for real-time listening and speaking, enabling PersonaPlex to handle interruptions, pauses, and natural conversational timing without relying on a traditional ASR → LLM → TTS pipeline.

Installing it from source ensures that all audio, streaming, and conversational components are correctly set up for local execution.

```bash
pip install moshi/.
```

Once Moshi is installed, your environment is fully prepared to start the PersonaPlex server and begin interacting with the model in real time.
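To confirm both installation steps worked, you can run a quick check from Python. This is a sketch built on standard-library lookups: `ctypes.util.find_library` searches for the Opus shared library installed by apt, and `importlib.util.find_spec` looks for the `moshi` package without importing it. Run it with the same Python interpreter you used for `pip install`; the function name is hypothetical.

```python
import ctypes.util
import importlib.util
from typing import Dict


def check_environment() -> Dict[str, bool]:
    """Report whether the pieces installed so far are visible to this Python."""
    return {
        # Opus shared library provided by `apt install libopus-dev`
        "libopus": ctypes.util.find_library("opus") is not None,
        # Python package provided by `pip install moshi/.`
        "moshi": importlib.util.find_spec("moshi") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:8s} {'OK' if ok else 'MISSING'}")
```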

Starting the PersonaPlex WebUI Server

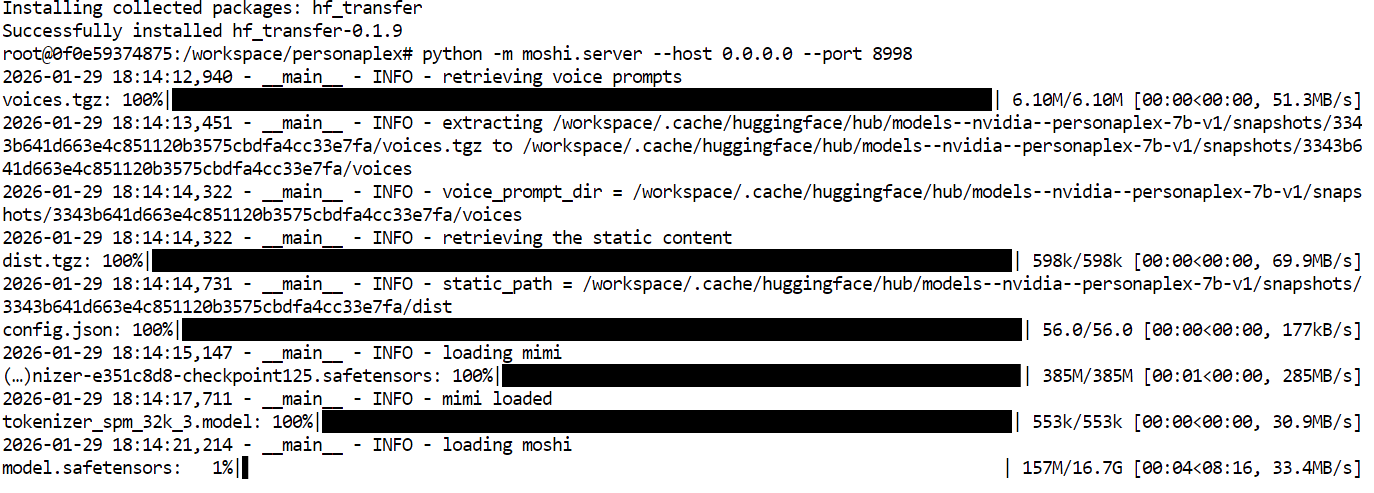

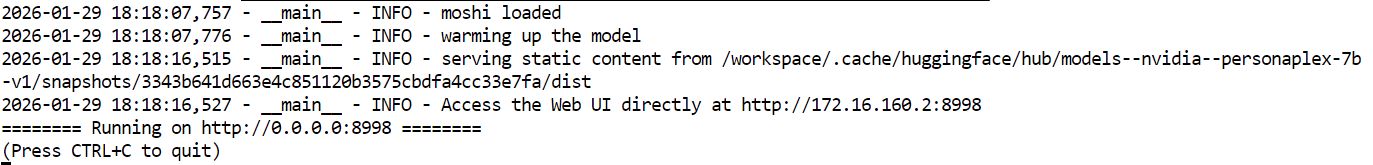

With the environment and dependencies in place, you can now start the PersonaPlex WebUI server. In the terminal, run the following command to launch the Moshi server that powers PersonaPlex:

```bash
python -m moshi.server --host 0.0.0.0 --port 8998
```

The first time you run this command, the server will automatically download the PersonaPlex model and other required files. This step can take a few minutes, depending on your network speed, as the model is fairly large.

Once the download is complete, the server will start listening on port 8998.
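Because the first launch can spend several minutes downloading weights, it is handy to poll the port instead of guessing when the server is ready. The helper below is a hypothetical sketch, not part of Moshi, that simply retries a TCP connection. A successful connection only tells you that some process is accepting connections on the port, not that the model has finished loading.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 600.0,
                  interval: float = 2.0) -> bool:
    """Retry a TCP connection to host:port until it succeeds or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

From a second terminal or notebook in the same pod, `wait_for_port("127.0.0.1", 8998)` returns `True` once the server is listening.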

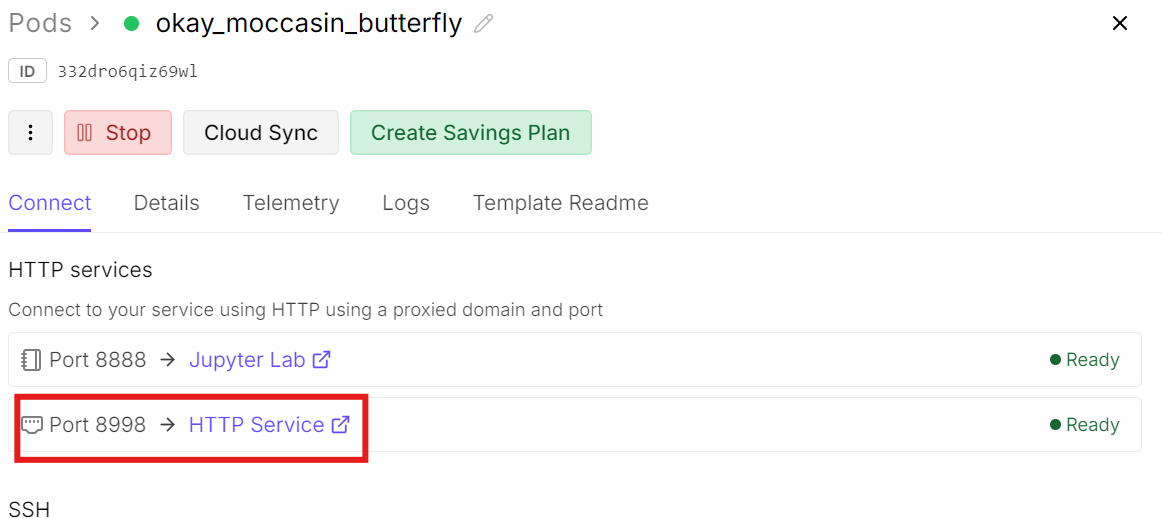

To access the WebUI, go back to your RunPod dashboard.

In the Connect section, find the exposed port 8998 and click the link provided. This will open the PersonaPlex WebUI in your browser, where you can start interacting with the model through real-time voice conversations.
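If you prefer to build the link yourself, RunPod's HTTP proxy URLs generally follow the pattern `https://<pod-id>-<port>.proxy.runpod.net`, and pods expose their ID in the `RUNPOD_POD_ID` environment variable. Treat both as assumptions about RunPod's current setup; if the URL this sketch prints does not open, fall back to the link in the Connect section.

```python
import os
from typing import Optional


def runpod_proxy_url(port: int = 8998, pod_id: Optional[str] = None) -> str:
    """Build a RunPod HTTP proxy URL.

    Assumed pattern: https://<pod-id>-<port>.proxy.runpod.net
    """
    pod_id = pod_id or os.environ.get("RUNPOD_POD_ID")
    if not pod_id:
        raise RuntimeError("No pod ID: pass pod_id explicitly or run this inside the pod.")
    return f"https://{pod_id}-{port}.proxy.runpod.net"


if __name__ == "__main__":
    try:
        print(runpod_proxy_url())
    except RuntimeError as err:
        print(err)
```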

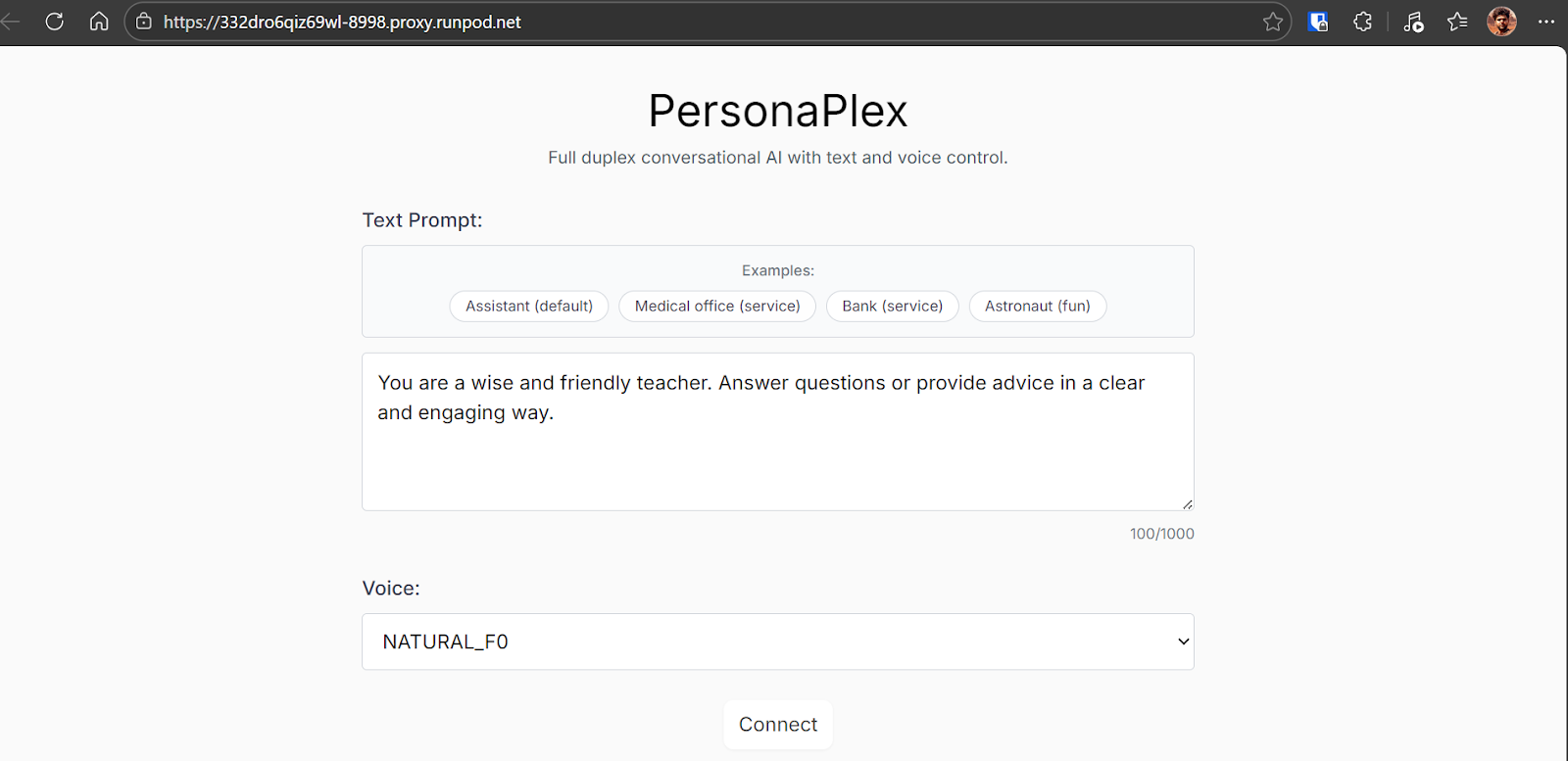

Testing PersonaPlex Using the WebUI

The PersonaPlex WebUI comes with several example prompts to help you get started, and you can also create your own custom prompt to define the assistant’s role and behavior.

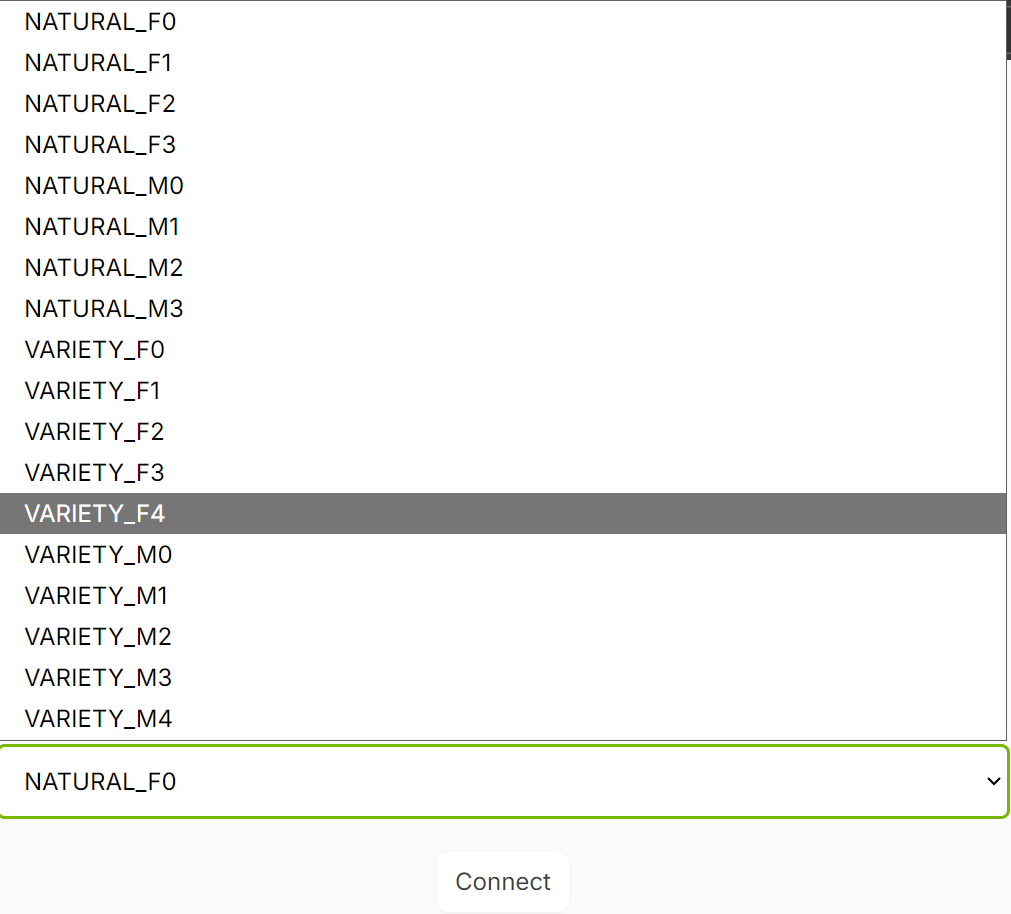

You can select a voice before connecting, which determines how the persona sounds during the conversation.

For this tutorial, it is best to start with the default settings to get a feel for the system.

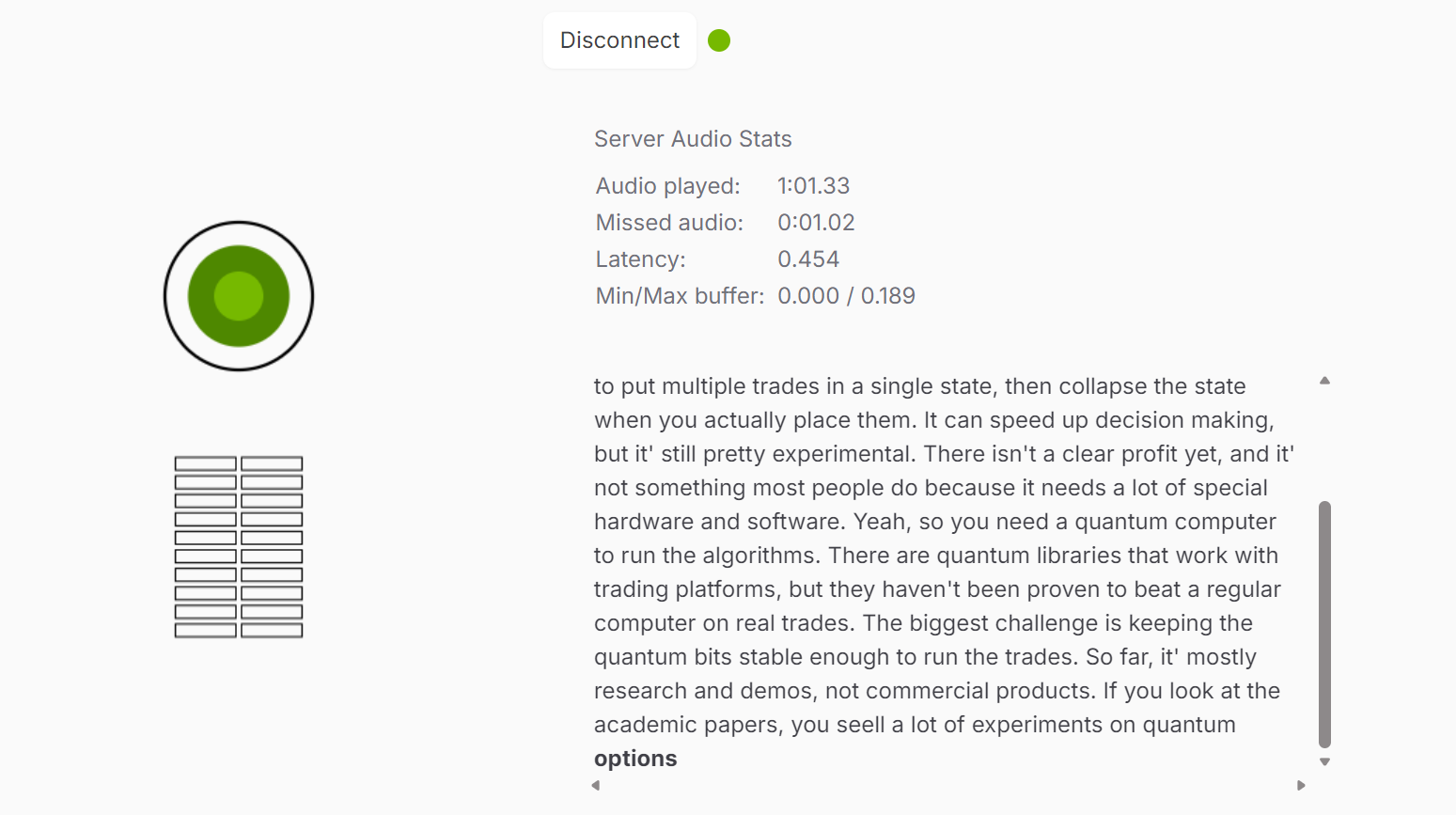

After clicking the Connect button, you will be taken to the interactive session. Here, you can speak directly to the model, hear its responses in real time, and see a live transcript of the conversation as it happens.

The experience feels fluid, with natural timing and the ability to interrupt or respond quickly.

During my testing, the interaction felt surprisingly natural and engaging.

I did notice some occasional stuttering, which was likely due to browser or system load rather than the model itself. Aside from that, the conversation felt very close to speaking with a real person.

Once you are comfortable, try experimenting with different prompts and voices. PersonaPlex supports a wide range of pre-packaged voice embeddings, including more natural conversational voices and more expressive variants:

- Natural (female): NATF0, NATF1, NATF2, NATF3

- Natural (male): NATM0, NATM1, NATM2, NATM3

- Variety (female): VARF0, VARF1, VARF2, VARF3, VARF4

- Variety (male): VARM0, VARM1, VARM2, VARM3, VARM4
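If you want to script comparisons across these voices, it helps to keep the IDs in one place. The sketch below encodes the list above and assumes every voice follows the same `<ID>.pt` naming as `NATF2.pt`, the file the offline example later in this tutorial passes to `--voice-prompt`. Check the file names shipped with the model if a voice fails to load.

```python
from typing import Dict, List

# Voice IDs from the list above, grouped by style.
VOICES: Dict[str, List[str]] = {
    "natural_female": [f"NATF{i}" for i in range(4)],
    "natural_male": [f"NATM{i}" for i in range(4)],
    "variety_female": [f"VARF{i}" for i in range(5)],
    "variety_male": [f"VARM{i}" for i in range(5)],
}
ALL_VOICES: List[str] = [v for group in VOICES.values() for v in group]


def voice_prompt_file(voice_id: str) -> str:
    """Map a voice ID such as 'NATF2' to a file name (assumed '<ID>.pt' pattern)."""
    if voice_id not in ALL_VOICES:
        raise ValueError(f"Unknown voice {voice_id!r}; expected one of {ALL_VOICES}")
    return f"{voice_id}.pt"
```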

Switching voices and prompts is a great way to explore how PersonaPlex maintains personality, tone, and conversational flow across different roles and speaking styles.

Running PersonaPlex Offline With Python

The WebUI is great for real-time conversations, but you can also run PersonaPlex offline from Python. This is useful when you want repeatable outputs, want to test different prompts quickly, or want to generate audio files you can reuse in demos and experiments.

The offline flow is simple: you provide (1) a voice prompt, (2) a text prompt, and (3) an input WAV file. PersonaPlex then generates an output audio response and a JSON file containing the model’s transcript.

Start a new notebook in Jupyter and change the directory to the cloned repository:

```
%cd personaplex
```

Run the following in a new cell. This calls the offline runner and writes both the generated audio and the transcript to disk:

```
%%capture
!python -m moshi.offline \
  --voice-prompt "NATF2.pt" \
  --text-prompt "You are a wise and friendly teacher. Answer questions in a clear, engaging way." \
  --input-wav "assets/test/input_assistant.wav" \
  --seed 42424242 \
  --output-wav "out_teacher.wav" \
  --output-text "out_teacher.json"
```

What each flag does:

- `--voice-prompt` selects the voice embedding (here, a natural female voice).
- `--text-prompt` defines the role and behavior of the assistant.
- `--input-wav` is the recorded user audio the model will respond to.
- `--seed` makes the output more reproducible between runs.
- `--output-wav` is the generated spoken response.
- `--output-text` saves the transcript output as JSON.
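To compare several personas without retyping the command, you can build the same call from Python. This is a sketch, not an official API: `offline_command` and `run_personas` are hypothetical helpers that only use the flags listed above, and the chef prompt is an illustrative example of my own. Passing the arguments as a list also avoids shell-quoting problems when a text prompt contains quotes.

```python
import subprocess
import sys
from typing import Dict, List

# Hypothetical example personas; the teacher prompt is the one used above.
PERSONAS: Dict[str, str] = {
    "teacher": "You are a wise and friendly teacher. Answer questions in a clear, engaging way.",
    "chef": "You are an enthusiastic chef. Give short, practical cooking tips.",
}


def offline_command(voice_prompt: str, text_prompt: str, input_wav: str,
                    output_wav: str, output_text: str,
                    seed: int = 42424242) -> List[str]:
    """Build the `python -m moshi.offline` call shown above as an argv list."""
    return [
        sys.executable, "-m", "moshi.offline",
        "--voice-prompt", voice_prompt,
        "--text-prompt", text_prompt,
        "--input-wav", input_wav,
        "--seed", str(seed),
        "--output-wav", output_wav,
        "--output-text", output_text,
    ]


def run_personas(personas: Dict[str, str], voice: str = "NATF2.pt",
                 input_wav: str = "assets/test/input_assistant.wav") -> None:
    """Run the offline generator once per persona, writing out_<name>.wav/.json."""
    for name, prompt in personas.items():
        cmd = offline_command(voice, prompt, input_wav,
                              f"out_{name}.wav", f"out_{name}.json")
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

From a notebook cell in the repository directory, `run_personas(PERSONAS)` would write `out_teacher.wav`, `out_chef.wav`, and their JSON transcripts, keeping the seed fixed so the runs stay comparable.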

Once the command finishes, you can play the response audio directly:

```python
from IPython.display import Audio

Audio("out_teacher.wav")
```

You should hear a clear, natural response in the selected voice, matching the persona defined in your text prompt.
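To check a generated file without listening to it, for example when you batch many runs, Python's standard `wave` module can report its basic properties. This sketch assumes the output is a PCM WAV; if `wave` raises a `wave.Error` about an unknown format, the file is probably stored as floating point, and `soundfile.info(path)` from the soundfile package can read it instead.

```python
import wave


def wav_summary(path: str) -> dict:
    """Return channel count, sample rate, sample width, and duration of a PCM WAV."""
    with wave.open(path, "rb") as wf:
        frames = wf.getnframes()
        rate = wf.getframerate()
        return {
            "channels": wf.getnchannels(),
            "sample_rate": rate,
            "sample_width_bytes": wf.getsampwidth(),
            "duration_s": frames / rate,
        }
```

For example, `wav_summary("out_teacher.wav")["duration_s"]` gives the length of the response in seconds.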

The JSON transcript can sometimes contain tokenization artifacts such as extra spaces around punctuation or split word pieces. The helper below loads the JSON and cleans it into readable text.

```python
import json
import re

# Load the transcript written by --output-text.
# Assumes the file holds a list of token strings; adjust if yours differs.
with open("out_teacher.json") as f:
    data = json.load(f)


def detokenize(tokens):
    """Turn a list of transcript tokens into readable text."""
    # 1) drop padding-like tokens
    drop = {"PAD", "EPAD", "<pad>", "</s>", "<s>"}
    toks = [t for t in tokens if t not in drop]

    # 2) join with spaces first
    s = " ".join(toks)

    # 3) remove spaces before punctuation
    s = re.sub(r"\s+([.,!?;:])", r"\1", s)

    # 4) fix common split contractions: "it ' s" -> "it's", "do n't" -> "don't"
    for split, joined in [(" ' s", "'s"), (" n't", "n't"), (" 're", "'re"),
                          (" 'm", "'m"), (" 've", "'ve"), (" 'd", "'d")]:
        s = s.replace(split, joined)

    # 5) remove stray spaces around apostrophes
    s = re.sub(r"\s+'\s+", "'", s)

    # 6) heuristic: glue a lone letter onto a following 1-3 letter fragment
    #    ("t he" -> "the"). It does not repair splits like "for k" or "fl uff",
    #    and it also glues real one-letter words ("a pot" -> "apot").
    s = re.sub(r"\b([A-Za-z])\s+([A-Za-z]{1,3})\b", r"\1\2", s)

    # 7) collapse multiple spaces
    s = re.sub(r"\s{2,}", " ", s).strip()
    return s


clean_text = detokenize(data)  # replace `data` with your list variable if needed
clean_text
```

You should now see a readable transcript string that matches the generated audio. If your output still contains odd splits (for example, “fl uff” or “afor k”), that is normal for some runs and can be cleaned further with additional rules, but the main content should already be understandable.

```
"Hey, let me know if you have any questions.
Hmm, first rinse the rice a couple of times until the water runs clear, that cuts down on starch, then use apot with a tight fitting lid, bring to a boil,
give it a quick stir, then turn the heat down low and cover, let it s immer without lifting it, and when it'done fl uff it with afor k, that usually
You could to ss the hot rice with a nice handful of chopped fresh herbs like basil or par sley, or you could sprinkle a little g rated cheese,
a squeeze of lemon or lime, adr izzle of olive oil, some chopped fresh herbs, or even some to ast ed nuts, that adds color and flavor."
```

Final Thoughts

Testing PersonaPlex genuinely surprised me. From the very first interaction, it felt less like experimenting with a model and more like having a real conversation. Being able to interrupt naturally, get instant responses, and maintain a consistent personality throughout made it feel far ahead of most voice systems I have tried. Running everything locally made the experience even more impressive, with no noticeable delay or loss of control.

There are still some drawbacks. I noticed occasional stuttering, and once the conversation moves on, it does not always handle returning to an earlier topic smoothly.

It also struggles with some non-native English accents, which can lead to mispronounced names or imperfect transcriptions. These feel like edge cases rather than fundamental issues, and I am confident they will improve quickly.

Looking ahead, I expect we will get an even better, fully local, real-time conversational AI that combines the reasoning of GPT-5.2 with the voice quality of ElevenLabs.

PersonaPlex FAQs

What hardware do I need to run PersonaPlex locally?

NVIDIA recommends a GPU with at least 24 GB of VRAM (like an A10G, A40, or RTX 3090/4090) to run the 7B model smoothly with low latency. You also need a Linux environment with CUDA support. While it is possible to offload layers to the CPU, this will significantly degrade the real-time performance that makes the model special.
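A quick way to check a pod or workstation against this guideline is to ask PyTorch for the GPU's total memory. The sketch below compares in decimal gigabytes, because cards sold as 24 GB often report a total a little under 24 GiB. The 24 GB threshold is the figure quoted above, not something the code verifies about PersonaPlex itself, and the function names are hypothetical.

```python
def meets_vram(total_bytes: int, min_gb: float = 24.0) -> bool:
    """Compare memory in decimal GB (1 GB = 1e9 bytes)."""
    return total_bytes / 1e9 >= min_gb


def gpu_report(min_gb: float = 24.0) -> str:
    """Describe GPU 0 and whether it meets the VRAM guideline."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    if not torch.cuda.is_available():
        return "No CUDA GPU is visible to PyTorch"
    props = torch.cuda.get_device_properties(0)
    status = "meets" if meets_vram(props.total_memory, min_gb) else "is below"
    return (f"{props.name}: {props.total_memory / 1e9:.1f} GB, "
            f"which {status} the {min_gb:g} GB guideline")


if __name__ == "__main__":
    print(gpu_report())
```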

Can PersonaPlex speak languages other than English?

Currently, the v1 release is English-only. The architecture supports multiple languages, and NVIDIA has indicated that support for other languages (such as Spanish) is on the roadmap for future updates, but for now, it is optimized for English conversation.

Can I use this for commercial applications?

Yes. The model weights are released under the NVIDIA Open Model License, which generally permits commercial use. However, you should review the specific license agreement on the Hugging Face model card to ensure your use case (e.g., hosting a paid service) complies with their terms.

How is this different from using faster-whisper and a fast LLM?

Traditional systems are "half-duplex": they wait for you to finish speaking, transcribe the audio, generate a reply, and then speak. PersonaPlex is "full-duplex," meaning it processes audio and generates tokens continuously. This allows it to listen while speaking, handle interruptions naturally, and generate backchannels ("uh-huh", "right") without the awkward latency of turn-based systems.

Why does the model sometimes mispronounce names or split words in the transcript?

Because PersonaPlex generates text tokens frame by frame while it streams audio (the audio itself is sampled at 24 kHz), the pieces of a single word are sometimes emitted separately. This can result in artifacts like "fl uff" or "for k" in the raw transcript. These are normal side effects of streaming tokenization and can be cleaned up with simple post-processing scripts.
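As one example of such post-processing, the sketch below joins two adjacent fragments only when the joined form is a known word and at least one fragment is not a known word on its own. Unlike a whitespace-only rule, this leaves genuine short words such as "a" in "a pot" alone. The vocabulary is an assumption you supply, for example a word list such as /usr/share/dict/words where one is installed, and `merge_fragments` is a hypothetical helper name.

```python
from typing import List, Set

_PUNCT = ".,!?;:"


def _key(word: str) -> str:
    """Lowercase and drop trailing punctuation for dictionary lookups."""
    return word.lower().rstrip(_PUNCT)


def merge_fragments(words: List[str], vocab: Set[str]) -> List[str]:
    """Greedily join adjacent fragments whose concatenation is a known word."""
    out: List[str] = []
    i = 0
    while i < len(words):
        cur = words[i]
        if i + 1 < len(words):
            nxt = words[i + 1]
            joined = cur + nxt
            both_known = _key(cur) in vocab and _key(nxt) in vocab
            # Only merge when it fixes something: the joined form is a word,
            # and the pieces are not already two valid words ("a pot").
            if _key(joined) in vocab and not both_known:
                out.append(joined)
                i += 2
                continue
        out.append(cur)
        i += 1
    return out


vocab = {"fluff", "fork", "for", "it", "with", "a", "pot", "parsley", "or", "basil"}
print(" ".join(merge_fragments("fl uff it with a for k".split(), vocab)))
# fluff it with a fork
```

Greedy pairwise merging cannot fix words split into three pieces, such as "to ast ed", so treat it as one more rule alongside the cleanup helper rather than a complete solution.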

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.