
Sora 2 was released at the end of September with a promise that it would soon become available through the OpenAI API. That moment has finally come, and I’m here to teach you how to use it and make the most of it.

In this tutorial, I will walk you through how to use Python to generate videos using AI. If you're interested in reading more about Sora 2 and how to use it directly on OpenAI, I recommend checking out this Sora 2 article. To look at a competing tool, I recommend the Veo 3.1 tutorial.

Here's an example of the type of videos you'll be able to make by the end of this tutorial:

Getting Started With the OpenAI API

To get started, we need to create an OpenAI account and an API key. To do so, navigate to their API key page and click "Create new secret key" in the top right corner.

This key is used to make requests to the OpenAI API. We store it in a file named .env in the folder where we write our Python scripts. Keep the key secret: anyone who has it can use the API through your account.

Paste the API key into the .env file with the following format:

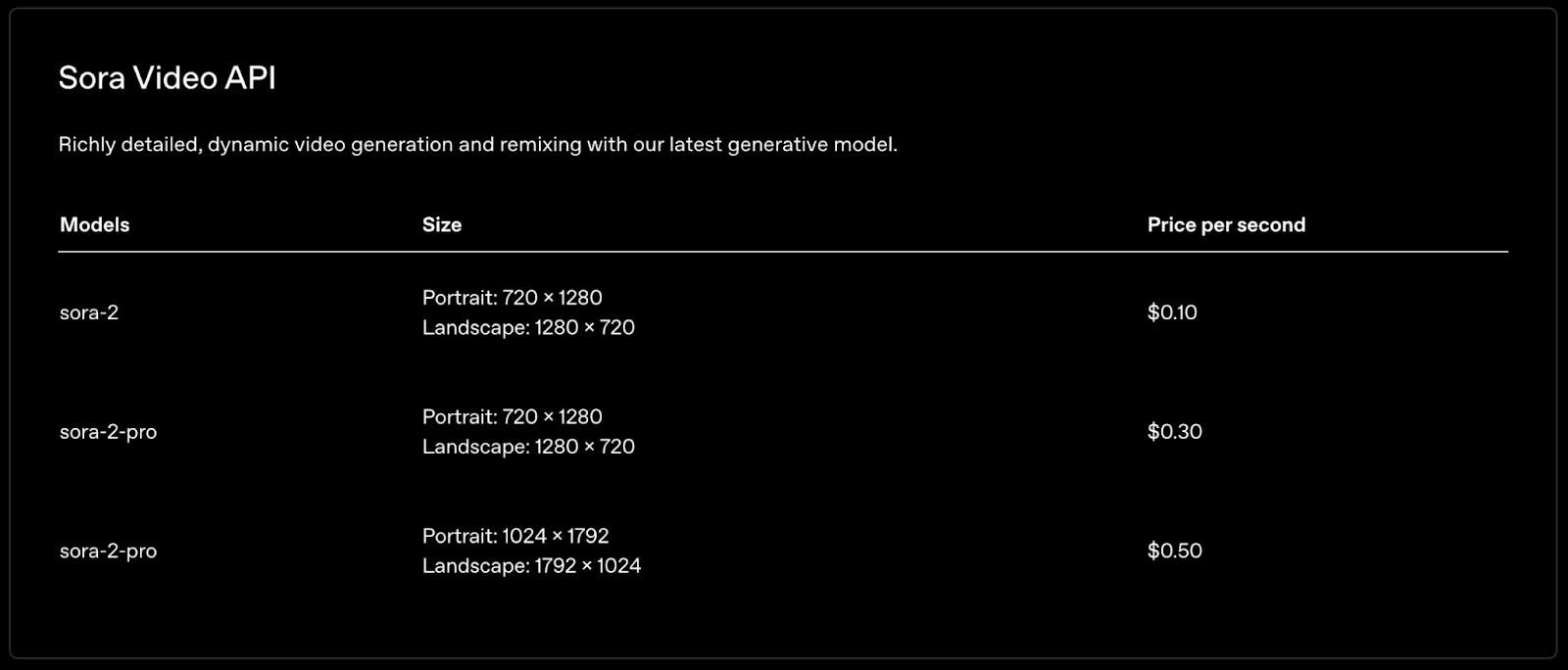

```
OPENAI_API_KEY=<paste_api_key_here>
```

Note that using the API isn't free, so it's necessary to top up your account before being able to generate videos using Sora 2. For reference, here is the pricing per second of Sora 2:

Generating a Sora 2 Video With Python

All the code for this tutorial can be found in this GitHub repository.

To communicate with the OpenAI API using Python, we'll use the openai package. Note that since Sora is new on the API, we need a recent version of the package.

We can use this command to install it (or upgrade the current version if it is already installed):

```bash
pip install --upgrade openai
```

Create a new script called generate_video.py in the same folder as the .env file we created before.

The first step is to import the necessary packages. Here's what we'll use:

- os: A built-in module used to interact with the operating system; here, we use it to read environment variables.
- openai: The official OpenAI package used to interact with their API.
- dotenv: Provided by the third-party python-dotenv package (install it with pip install python-dotenv), it loads environment variables from the .env file. In this case, we use it to load the OpenAI API key.

```python
import os

from openai import OpenAI
from dotenv import load_dotenv
```

Next, we load the .env file:

```python
load_dotenv()  # Read the variables defined in .env into the environment

API_KEY = os.getenv("OPENAI_API_KEY")
```

With the API key loaded in memory, we can initialize the OpenAI client, which allows us to make requests to the OpenAI API:

```python
client = OpenAI(api_key=API_KEY)
```

Finally, we use the videos.create function from the client to generate a video:

```python
video = client.videos.create(
    prompt="A cat and a dog dancing",
)

print(video.id)
```

When we request video generation, we don't get a video immediately because it takes quite some time to generate. This means that the video variable isn't the video itself but rather an object that contains information about the video generation job.

That's why we printed the video identifier. This is the information we'll need to:

- Track the progress of the video generation.

- Retrieve the video.

We'll explain both these steps in detail below.

Here's the result I got:

Tracking video progress

We can retrieve the status of a job by providing the job identifier to the videos.retrieve() function, like so:

```python
job_id = video.id  # The identifier returned by videos.create()

job = client.videos.retrieve(job_id)
status = job.status
progress = job.progress

print(f"Status: {status}, {progress}%")
```

We need to wait for the progress to reach 100% before we can download the video. For convenience, we build a function, wait_for_video_to_finish(), that monitors the status of a job until it completes:

```python
import time


def wait_for_video_to_finish(video_id, poll_interval=5, timeout=600):
    """
    Poll status until the video is ready or timeout is reached.
    Returns the final job info.
    """
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
    elapsed = 0  # Keep track of the elapsed time
    while elapsed < timeout:
        job = client.videos.retrieve(video_id)
        status = job.status
        progress = job.progress
        print(f"Status: {status}, {progress}%")
        if status == "completed":
            return job
        if status == "failed":
            # job.error may be empty, so fall back to a generic message
            message = job.error.message if job.error else "unknown error"
            raise RuntimeError(f"Failed to generate the video: {message}")
        time.sleep(poll_interval)
        elapsed += poll_interval
    raise RuntimeError("Polling timed out")
```

The function has three parameters:

- video_id: The identifier of the video we want to track.
- poll_interval: How many seconds we wait between each tracking request.
- timeout: The maximum number of seconds to wait.

This function periodically checks the status of the video until it either completes or the polling time runs out.
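One caveat with this loop: elapsed only counts the time spent sleeping, not the time each retrieve() request takes, so the real wait can exceed timeout. Below is a minimal sketch of an alternative that measures wall-clock time with time.monotonic(). The name poll_until_done() and its fetch_status callback are hypothetical, introduced so the timing logic can be tested without calling the API; unlike the function above, it returns "failed" instead of raising, leaving that decision to the caller.

```python
import time
from typing import Callable

TERMINAL_STATUSES = ("completed", "failed")


def poll_until_done(
    fetch_status: Callable[[], str],
    poll_interval: float = 5.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Call fetch_status() until it returns a terminal status or time runs out.

    Returns the terminal status ("completed" or "failed").
    Raises TimeoutError if the deadline passes first.
    """
    deadline = clock() + timeout
    while True:
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        remaining = deadline - clock()
        if remaining <= 0:
            raise TimeoutError(f"Job still {status!r} after {timeout} seconds")
        # Never sleep past the deadline
        sleep(min(poll_interval, remaining))
```

With the client from earlier, it could be called as poll_until_done(lambda: client.videos.retrieve(video_id).status). Injecting sleep and clock makes the timeout behavior easy to check with a fake clock.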

Downloading the video

Now that we can track the video generation process, all we need is a way to download it when it finishes generating. We can achieve this using the videos.download_content() function.

Here's a function that does this:

```python
def download_video(video_id):
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
    response = client.videos.download_content(
        video_id=video_id,
    )
    video_bytes = response.read()
    # Save the MP4 next to the script, named after the video ID
    with open(f"{video_id}.mp4", "wb") as f:
        f.write(video_bytes)
```

Full Sora video generation workflow

We can put these steps together to build a video generation workflow with Sora:

```python
prompt = "A cat and a dog dancing"

video = client.videos.create(
    prompt=prompt,
)
video_id = video.id
print(f"Started generating video with id {video_id}")

wait_for_video_to_finish(video_id)
download_video(video_id)
```

Setting the video size and duration

The script above uses only a text prompt to generate the video. However, the OpenAI Sora 2 API provides other options, such as:

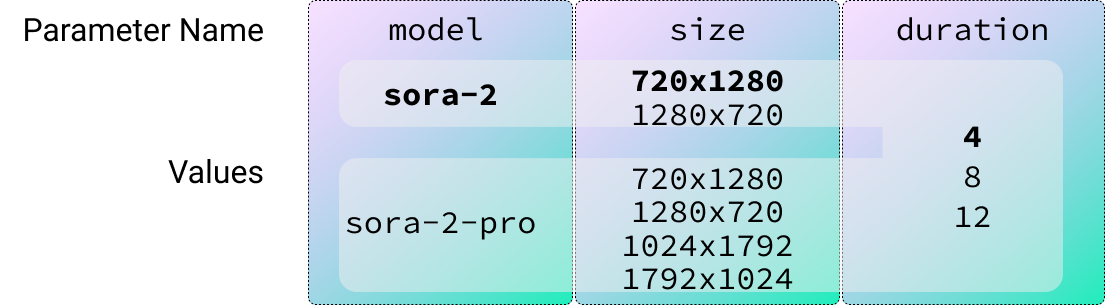

- model: The model used to generate the video. By default, this is sora-2.
- size: The resolution of the video, as width x height. By default, it is 720x1280.
- seconds: The length of the video in seconds. By default, the video is 4 seconds long.
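A request with an unsupported combination of options will fail, so it can help to check the values locally before spending a request. The sketch below validates the options and collects them into keyword arguments for client.videos.create(). The allowed values reflect our understanding of the API at the time of writing (an assumption; verify them in the official API reference), and build_video_request() is a hypothetical helper, not part of the openai package. We also assume the API expects seconds as a string such as "8".

```python
# Supported values as we understand them at the time of writing (assumption:
# verify against the official API reference before relying on them).
SIZES_BY_MODEL = {
    "sora-2": {"720x1280", "1280x720"},
    "sora-2-pro": {"720x1280", "1280x720", "1024x1792", "1792x1024"},
}
ALLOWED_SECONDS = ("4", "8", "12")


def build_video_request(prompt, model="sora-2", size="720x1280", seconds="4"):
    """Validate the options and return keyword arguments for client.videos.create()."""
    if model not in SIZES_BY_MODEL:
        raise ValueError(f"Unknown model {model!r}; expected one of {sorted(SIZES_BY_MODEL)}")
    if size not in SIZES_BY_MODEL[model]:
        raise ValueError(f"Size {size!r} is not supported by {model}")
    seconds = str(seconds)  # Accept 8 or "8"; we assume the API wants a string
    if seconds not in ALLOWED_SECONDS:
        raise ValueError(f"seconds must be one of {ALLOWED_SECONDS}")
    return {"prompt": prompt, "model": model, "size": size, "seconds": seconds}
```

For example: client.videos.create(**build_video_request("A cat and a dog dancing", model="sora-2-pro", size="1792x1024", seconds=8)).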

Here's a table with all possible values depending on the model (the values in bold are the default values):

To make the script easier to use, we can utilize the argparse built-in package to allow the user to specify the value of each parameter.

Here's an example of how to set the prompt and model arguments with argparse:

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument(
    "--prompt",  # Name of the argument
    required=True,  # Specify that the argument is required
    help="The video prompt.",  # Helper text
)
parser.add_argument(
    "--model",
    default="sora-2",  # Specify a default value for the argument
    choices=["sora-2", "sora-2-pro"],  # Specify a list of possible values for the argument
    help="Model to use (sora-2 or sora-2-pro).",
)
```

To load the arguments, we call the parse_args() method, like so:

```python
args = parser.parse_args()
prompt = args.prompt
model = args.model
```

The generate_video_pipeline.py script in the GitHub repository puts all the pieces we learned together into a script that can generate videos using Sora 2.
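The argparse snippet above only declares --prompt and --model, while the command below also passes --size and --seconds. Here is a minimal sketch of how all four options might be declared; build_parser() is a hypothetical name, and the choices lists for --size and --seconds are our assumption about the currently supported values.

```python
import argparse


def build_parser():
    """Declare the command-line options used by the pipeline command (sketch)."""
    parser = argparse.ArgumentParser(description="Generate a video with Sora 2.")
    parser.add_argument("--prompt", required=True, help="The video prompt.")
    parser.add_argument(
        "--model",
        default="sora-2",
        choices=["sora-2", "sora-2-pro"],
        help="Model to use (sora-2 or sora-2-pro).",
    )
    # Assumed supported values; verify them against the API reference
    parser.add_argument(
        "--size",
        default="720x1280",
        choices=["720x1280", "1280x720", "1024x1792", "1792x1024"],
        help="Video resolution as WIDTHxHEIGHT.",
    )
    parser.add_argument(
        "--seconds",
        default="4",
        choices=["4", "8", "12"],  # Kept as strings (we assume the API expects strings)
        help="Video length in seconds.",
    )
    return parser
```

argparse can't make the --size choices depend on --model, so a per-model check (for example, rejecting 1792x1024 with sora-2) still has to happen after parsing.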

Here's an example of how to run generate_video_pipeline.py in the terminal with specific parameters:

```bash
python generate_video_pipeline.py --prompt "A family of dogs driving a car" --model sora-2-pro --size 1280x720 --seconds 8
```

This was the result:

Sora 2 API Prompt Tips

OpenAI provides a complete prompt guide for Sora 2.

The fundamental ideas for building a good Sora 2 prompt are:

- Balance detail and freedom: Specific prompts give control; simple ones invite creativity.

- Set parameters in the API: Define model (sora-2 or sora-2-pro), resolution, and clip length explicitly.

- Think in shots: Describe camera framing, lighting, subject, and one clear action per shot.

- Be visual and concrete: “Wet asphalt under neon lights” beats “a beautiful street.”

- Keep motion simple: One subject action + one camera move works best.

- Light: Define light quality, direction, and color palette for consistency.

- Use image references: Add a visual input to anchor style and composition.

- Dialogue: Short, natural lines; labeled clearly; limited per clip.

- Iterate with Remix: Adjust one element (lighting, lens, palette) at a time for precise control.

They put these ideas together into the following prompt template:

```text
[Prose scene description in plain language. Describe characters, costumes, scenery, weather and other details. Be as descriptive to generate a video that matches your vision.]

Cinematography:
Camera shot: [framing and angle, e.g. wide establishing shot, eye level]
Mood: [overall tone, e.g. cinematic and tense, playful and suspenseful, luxurious anticipation]

Actions:
- [Action 1: a clear, specific beat or gesture]
- [Action 2: another distinct beat within the clip]
- [Action 3: another action or dialogue line]

Dialogue:
[If the shot has dialogue, add short natural lines here or as part of the actions list. Keep them brief so they match the clip length.]
```

Providing long prompts in the terminal is cumbersome. To improve the user experience, we can change our script so that if the prompt ends in .txt, the script assumes that the user is providing the path to a text file with the prompt.
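Such a file can be written by hand or generated from the template above. As an illustration, a hypothetical render_prompt() helper could fill in the template's fields and return the text to save as a .txt file; the function and its parameter names are our own, not part of any library.

```python
def render_prompt(scene, camera_shot, mood, actions, dialogue=()):
    """Fill in the Sora 2 prompt template and return it as a single string.

    scene: prose description; camera_shot and mood: cinematography notes;
    actions: list of beats; dialogue: optional list of short lines.
    """
    lines = [
        scene.strip(),
        "",
        "Cinematography:",
        f"Camera shot: {camera_shot}",
        f"Mood: {mood}",
        "",
        "Actions:",
    ]
    lines += [f"- {action}" for action in actions]
    if dialogue:  # Omit the section entirely when there is no dialogue
        lines += ["", "Dialogue:", *dialogue]
    return "\n".join(lines) + "\n"
```

The result can then be saved with open("prompt.txt", "w", encoding="utf-8") and passed to the script as a file path.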

Here's how we can update the script to accept a path to a .txt file:

```python
...
args = parser.parse_args()
prompt = args.prompt
if prompt.endswith(".txt"):
    # Treat the value as a path and read the prompt from the file
    with open(prompt, "rt", encoding="utf-8") as f:
        prompt = f.read()
video_id = generate_video(prompt, args.model, args.size, args.seconds)
...
```

To test it, I created a file in the same folder named prompt.txt with the following prompt:

```text
A polite green alien wearing a beret struggles to order croissants in broken French at a bustling Paris café.

Cinematography:
Camera shot: eye-level shot, warm morning light
Mood: whimsical and awkward

Actions:
The alien studies the pastry display, fascinated.
It points to the baguette, then accidentally eats the napkin.
The waiter shrugs, unfazed, and brings it another napkin.

Dialogue:
Alien (content): “Très… chewy.”
```

To generate the video, we provide prompt.txt on the --prompt parameter:

```bash
python generate_video_pipeline.py --prompt prompt.txt --model sora-2-pro --size 1280x720 --seconds 8
```

Here's the video:

We see that it didn't follow the prompt entirely. In my experience working with AI video models, I always got better results by making several simpler videos and stitching them together.
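Stitching can be done with ffmpeg, an external command-line tool not otherwise used in this tutorial. Here is a minimal sketch using ffmpeg's concat demuxer, assuming ffmpeg is installed and on your PATH, and that all clips were generated with the same model and size so their streams can be copied without re-encoding. build_concat_command() and stitch_clips() are hypothetical helpers.

```python
import subprocess
from pathlib import Path


def build_concat_command(clips, list_path, output_path):
    """Write an ffmpeg concat list for `clips` and return the ffmpeg argument list."""
    entries = []
    for clip in clips:
        # Absolute paths, with single quotes escaped as the concat format requires
        path = str(Path(clip).resolve()).replace("'", "'\\''")
        entries.append(f"file '{path}'")
    Path(list_path).write_text("\n".join(entries) + "\n", encoding="utf-8")
    return [
        "ffmpeg", "-y",                 # Overwrite the output file if it exists
        "-f", "concat", "-safe", "0",   # Concat demuxer; -safe 0 allows absolute paths
        "-i", str(list_path),
        "-c", "copy",                   # Copy streams without re-encoding
        str(output_path),
    ]


def stitch_clips(clips, output_path="stitched.mp4", list_path="clips.txt"):
    """Concatenate the clips, in order, into one video (requires ffmpeg on the PATH)."""
    subprocess.run(build_concat_command(clips, list_path, output_path), check=True)
```

Since download_video() names each file after its video ID, a call might look like stitch_clips([f"{first_id}.mp4", f"{second_id}.mp4"], "story.mp4"), where first_id and second_id are placeholders. If the clips differ in size or codec, stream copy won't work and ffmpeg would need to re-encode instead.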

However, to do that, we need to be able to keep the clips consistent. We can achieve that by providing a reference image to the model, which is what we'll cover next.

Generating Sora 2 Videos Based on Images

To generate a video based on an image, we use the input_reference parameter.

Here's a code snippet that loads an image and provides it to the model as a reference:

```python
# Open the reference image in binary mode; the file is closed automatically
with open("image_reference.jpeg", "rb") as f:
    # Generate the video
    video = client.videos.create(
        prompt="The two people walk away from each other.",
        input_reference=f,
    )

wait_for_video_to_finish(video.id)
download_video(video.id)
```

The script generate_video_with_reference.py in the GitHub repository provides a fully working example of this. When executed on the reference image, we got this:

For the reference image to work, it must have exactly the same resolution as the video we request (720x1280 by default).

We can use the Pillow package to automatically resize the reference image before providing it to the model. Note, however, that if the original image's aspect ratio is very different from the video's, the resized image will be distorted, and the video will likely not be very good.

To install it, use the command:

```bash
pip install Pillow
```

The sora.py script provides an implementation for the generate_video() function that adapts the code we made before to resize the image if one is provided.

The generate_video_pipeline_reference.py script shows how we can add the reference parameter to the video generation script.

Here's an example generated from a photo using the auto-resize feature we just implemented:

Using video references

The script we built here is ready to handle video as well as image references. However, whenever I tried generating a video while providing another video as a reference, I got an error:

```
Video inpaint is not available for your organization
```

According to posts on OpenAI's developer forum, this is a common issue, which suggests that the feature isn't available to everyone on the API yet. We'll have to wait a bit longer to be able to use it.

Other limitations

When I was working with Sora 2, I often ran into issues where Sora refused to generate the video, stating that the moderation system blocked the request:

```
RuntimeError: Video generation failed: Your request was blocked by our moderation system.
```

I understand that these systems require strong moderation because a lot of harm can be done if users are allowed to generate whatever videos they want. However, I feel that the moderation algorithm needs to be refined because it blocked most of my requests, causing me to abandon my original idea.

The photos I tried to transform into videos didn’t have any sensitive content or copyright problems, as all the images belong to me. The error also lacks any information on why it was blocked.

This issue happened really often, making it impossible for me to create anything meaningful.

Conclusion

Sora 2 opens up exciting new possibilities for AI-powered video generation, and thanks to its availability through the OpenAI API, integrating these cutting-edge tools into your Python workflow is now accessible to developers of all backgrounds.

By following this tutorial, you now have a solid foundation for creating custom videos through simple prompts, fine-tuning your results with advanced parameters, and leveraging image references for greater consistency. As the API evolves, more features, such as video-to-video editing, should become available to everyone, expanding your creative toolkit.

Whether you're experimenting with playful prompts, building complex storyboards, or developing next-gen multimedia apps, Sora 2 offers a powerful way to bring your ideas to life. I also recommend checking out our guide to Meta's new SAM3 model.

Sora 2 API FAQs

Is the Sora 2 API free?

No. Depending on the model, each second of video costs between $0.10 and $0.30.
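As a worked example, at $0.30 per second an 8-second clip costs 8 × $0.30 = $2.40. The sketch below does the same arithmetic in whole cents to avoid floating-point rounding. Mapping $0.10 to sora-2 and $0.30 to sora-2-pro is our assumption, and prices may vary with resolution, so check OpenAI's pricing page before budgeting.

```python
# Per-second prices in US cents (assumption: low end of the range for sora-2,
# high end for sora-2-pro; check the official pricing page).
CENTS_PER_SECOND = {"sora-2": 10, "sora-2-pro": 30}


def estimate_cost_usd(model: str, seconds: int, clips: int = 1) -> float:
    """Rough cost of generating `clips` videos of `seconds` seconds each."""
    cents = CENTS_PER_SECOND[model] * seconds * clips  # Integer arithmetic
    return cents / 100
```

For instance, estimate_cost_usd("sora-2-pro", 12, clips=3) estimates three 12-second clips, which is useful when planning several short videos to stitch together.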

Is it possible to generate a video based on an image?

Yes, we can provide a reference image using the input_reference parameter. However, the image must be the same size as the video.

Is it possible to edit an existing video using the API?

Although Sora 2 can edit videos, the feature isn’t available to everyone yet.

How long does it take to generate a video with Sora 2?

From our experience, it seems to take at most 2 minutes.

Can Sora 2 generate audio?

Yes. Unless the prompt specifies otherwise, videos generated by Sora 2 come with audio that matches the video content. We can also direct the audio using the prompt and even include dialogue.