
Google recently released Veo 3.1, the latest iteration of its AI video generation model.

In this article, I'll explore the new features of Veo 3.1, teach you how to use them, and create a longer video using Veo 3.1.

If you're new to Veo, I recommend first reading our Veo 3 article. I also recommend our guide on using the Sora 2 API.

How to Access Veo 3.1

The easiest way to access Veo 3.1 is through Google's Flow platform. This requires a subscription, and the price may depend on your region. The subscription comes with 1,000 monthly credits, which is enough for 50 videos using Veo 3.1 Fast or 10 videos using Veo 3.1.
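To make the credit math concrete, here is a small sketch that derives the per-video cost implied by the figures above. It assumes the whole monthly allowance is spent on a single model; the function names are my own and are not part of any Google tool.

```python
# Figures quoted in this article for the Flow subscription.
MONTHLY_CREDITS = 1_000
VIDEOS_PER_MONTH = {"Veo 3.1 Fast": 50, "Veo 3.1": 10}


def credits_per_video(monthly_credits: int, videos: int) -> float:
    """Credits one video costs if the whole allowance buys `videos` clips."""
    return monthly_credits / videos


def videos_affordable(credits_left: float, cost_per_video: float) -> int:
    """Whole videos that still fit in the remaining credits."""
    return int(credits_left // cost_per_video)


for model, videos in VIDEOS_PER_MONTH.items():
    cost = credits_per_video(MONTHLY_CREDITS, videos)
    print(f"{model}: {cost:.0f} credits per video")
# prints:
# Veo 3.1 Fast: 20 credits per video
# Veo 3.1: 100 credits per video
```

The two models draw from the same pool, so the budget can be mixed. For example, after five Veo 3.1 videos (500 credits), `videos_affordable(500, 20)` shows that 25 Veo 3.1 Fast videos would still fit.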

It is also possible to use it in Vertex AI Studio, but this requires more configuration and some knowledge of the Google Cloud Platform.

Another alternative is to use the Gemini API with, for instance, Python. In both these cases, a subscription isn't required. However, a credit card needs to be linked to the API key or Google project, and we get charged per video.
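As a rough illustration of the Gemini API route, the sketch below starts a text-to-video job with the `google-genai` Python SDK, polls until it finishes, and saves the clip. Treat the details as assumptions rather than a definitive recipe: the model name `veo-3.1-generate-preview`, the output filename, and the helper names are my own choices, and the SDK is imported lazily so that the polling helper can be read and tested on its own.

```python
import time


def wait_until_done(operation, refresh, poll_seconds=10.0, sleep=time.sleep):
    """Poll a long-running operation until its `done` flag is set.

    `refresh` takes the current operation and returns an updated one.
    """
    while not operation.done:
        sleep(poll_seconds)
        operation = refresh(operation)
    return operation


def generate_video(prompt, output_path="veo_clip.mp4",
                   model="veo-3.1-generate-preview"):
    """Generate one clip from a text prompt and save it locally.

    Assumes `pip install google-genai` and a GEMINI_API_KEY environment
    variable linked to a billing-enabled project. The model name is an
    assumption and may differ for your account.
    """
    from google import genai  # lazy import: only needed for real API calls

    client = genai.Client()  # picks up the API key from the environment
    operation = client.models.generate_videos(model=model, prompt=prompt)
    operation = wait_until_done(operation, client.operations.get)

    video = operation.response.generated_videos[0]
    client.files.download(file=video.video)  # fetch the video bytes
    video.video.save(output_path)
    return output_path
```

Calling `generate_video("A flame character walking through a jungle")` would then produce a single billed video. Video generation takes a while, which is why the call returns an operation that has to be polled instead of the video itself.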

What's New in Veo 3.1?

Veo 3.1 brings several new features and improvements over Veo 3:

- Richer Audio Capabilities: Veo 3.1 now enables the generation of richer, more realistic audio alongside video. Audio support has been added to all Flow features, including "Ingredients to Video", "Frames to Video", and "Extend", allowing for more immersive and narrative-rich videos.

- Improved Narrative and Realism: It is now possible to generate videos by providing the start and end frames of a scene, which gives much greater control over how the scene unfolds. Realism was also enhanced, including better texture fidelity, stronger prompt adherence, and improved audiovisual quality.

- Longer, Seamless Shots: Typical AI video generation models are limited to generating videos that are a few seconds long. With the extend feature, users can now extend an existing clip to create longer videos, making it possible to create seamless, long scenes.

- Enhanced Creative Control: New capabilities in Flow allow users to edit clips with more precision. For example, we can add and remove elements (objects, characters, etc.) from scenes with realistic handling of lighting and shadows.

Exploring Veo 3.1 Features

Let’s take a look in more detail at some of the new additions in Veo 3.1.

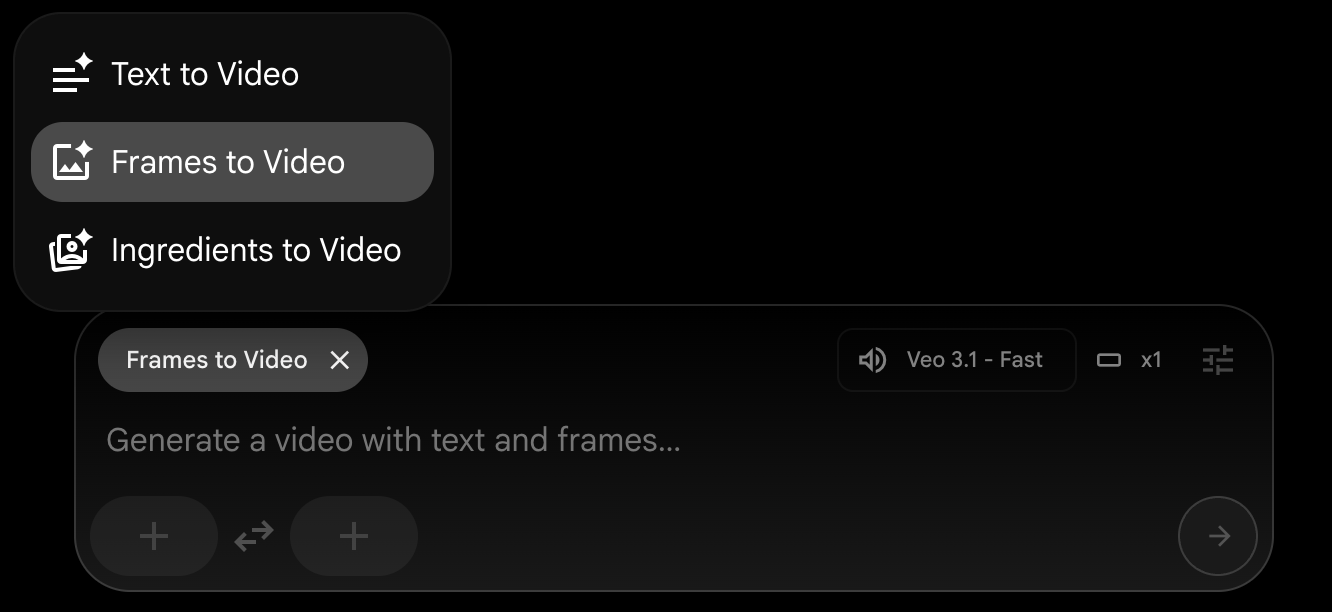

Frames to video

The frames-to-video feature makes it possible to generate a video by providing the first and last frames. The model then generates a video that starts on the first image and ends on the last one.

To use this feature, select the "Frames to Video" option:

For example, starting from an image of a bowl of rice and ending with an image of the rice shaped like a cat in the same bowl, we can ask Veo 3.1 to generate a video of this transformation:

To generate the video, simply select the first and last frames in the frame selector and write a prompt describing the video between them.

Here's the result:

This feature is especially valuable for creators aiming to maintain visual consistency across scenes or produce longer, more cohesive videos. By anchoring the start and end points, Veo 3.1 can ensure smooth transitions and coherent narratives, helping to avoid the jarring shifts that often occur in AI-generated footage.

This opens up possibilities for filmmakers, animators, and marketers who need precise control over story arcs and visual continuity while harnessing the efficiency of AI video generation.
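Outside Flow, the same first-and-last-frame idea can be sketched with the Gemini API. The example below is a hedged sketch, not a verified recipe: it assumes the `google-genai` SDK, passes the first frame as `image` and the last frame through `GenerateVideosConfig(last_frame=...)`, and uses a model name and helper names that are my own assumptions. The images are loaded as raw bytes with an explicit MIME type.

```python
import mimetypes
from pathlib import Path


def guess_image_mime_type(path):
    """Return the image MIME type for a file path, e.g. "image/png"."""
    mime, _ = mimetypes.guess_type(str(path))
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"{path} does not look like an image file")
    return mime


def start_frames_to_video(prompt, first_frame_path, last_frame_path,
                          model="veo-3.1-generate-preview"):
    """Start a video job anchored on a first and a last frame.

    Returns a long-running operation that still has to be polled.
    Assumes `pip install google-genai` and an API key in the environment;
    the model name is an assumption.
    """
    from google import genai  # lazy import: only needed for real API calls
    from google.genai import types

    def load(path):
        # Send the raw bytes together with an explicit MIME type.
        return types.Image(image_bytes=Path(path).read_bytes(),
                           mime_type=guess_image_mime_type(path))

    client = genai.Client()
    return client.models.generate_videos(
        model=model,
        prompt=prompt,
        image=load(first_frame_path),  # first frame
        config=types.GenerateVideosConfig(last_frame=load(last_frame_path)),
    )
```

For the rice example, this would be called with the plain bowl as the first frame, the cat-shaped rice as the last frame, and a prompt describing the transformation between them.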

I've tested it extensively, and it didn't always perform well. I had the idea of animating this photo I took a few years ago:

My idea was to start with a frame without people, and generate a video of them walking into the water from the left side. I created an initial frame by removing all the people and asked Veo 3.1 to generate a video:

However, despite multiple attempts, Veo 3.1 didn't manage to generate a video that smoothly animated between the two frames. Below are two failed attempts:

Both videos start and end at the given frames, but they don't transition naturally between the two. In that regard, I think there's still a lot of room for improvement in the model.

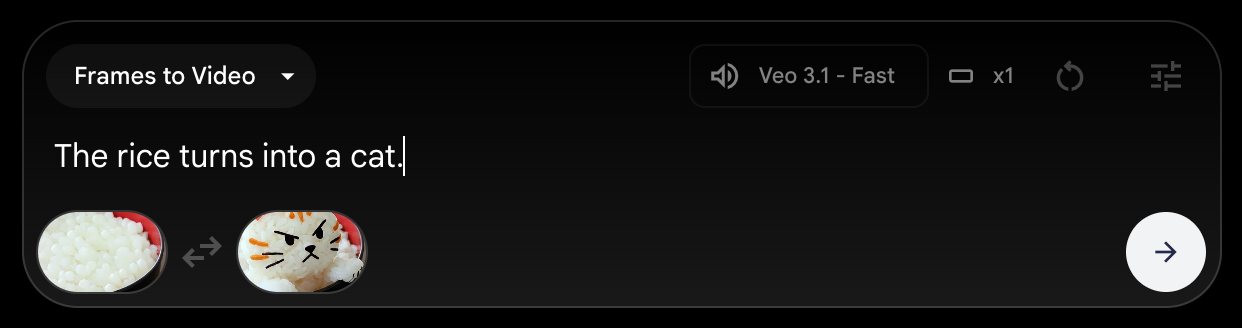

Video extend

One great feature of Veo 3.1 is the ability to extend a video. With this feature, Veo 3.1 takes an existing video and continues it from its last frames, keeping the result consistent and seamless.

This feature addresses one of the greatest weaknesses of AI-generated video: length. Existing AI models are only able to generate videos that are a few seconds long. With the video extend feature, we can make longer videos that remain consistent throughout.

In Flow, this feature only works on a video that was already generated with Veo 3.1. Unfortunately, at this time, we cannot upload our own video to extend it. To access the feature, we first need to navigate to the scene builder:

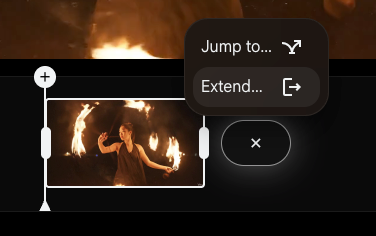

From there, we click the "+" button next to the clip and select the "Extend" option:

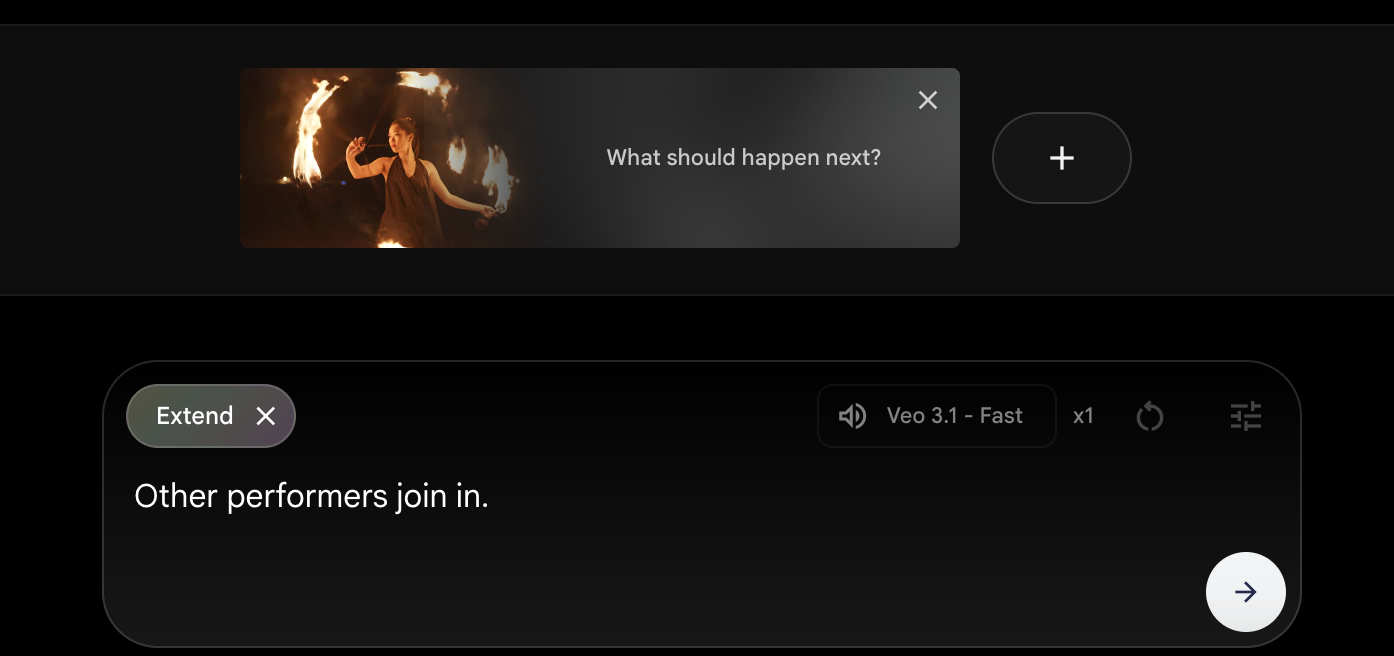

Finally, we type a prompt describing how the clip should continue:

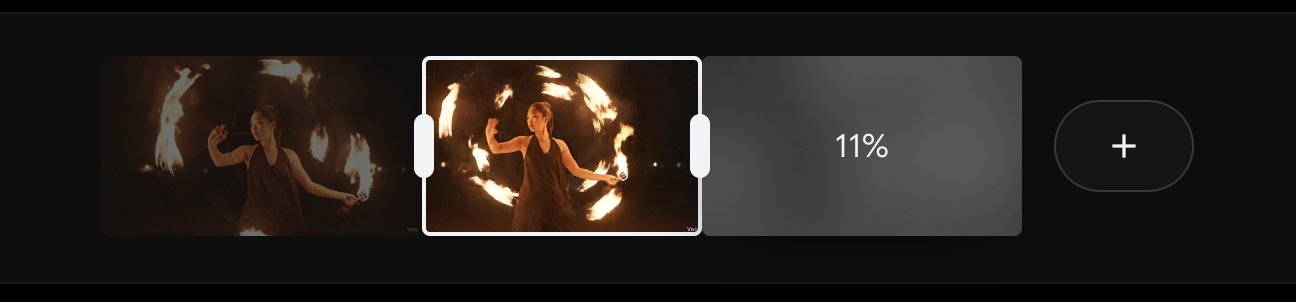

We can extend a video multiple times, allowing us to create a long, coherent video.

Here's an example of a video generated by extending a clip three times. In the video, I show the four versions side by side to showcase what each extension added:

Ingredients to video

Veo 3.1 comes with a new feature that allows you to combine elements from up to three images into a video. In this example, I provided images of:

- A location

- A person

- An outfit

Then I asked Veo 3.1 to combine these elements into a video of that person walking in the provided location, wearing the given hiking outfit. I also specified that the person should complain about there being too many mosquitoes.

The video itself isn't great, especially the hand gestures of the person as she complains about the mosquitoes, but it illustrates the feature well. It's interesting to note that the location has elements combined from the location picture and the trees in the background of the outfit image. To avoid this, one should make sure that the elements have a neutral background.

Note that the feature doesn't require all three images; it works with one, two, or three reference images.
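The same idea can be sketched for the Gemini API. This is a minimal sketch under stated assumptions: it assumes the `google-genai` SDK accepts reference images through `GenerateVideosConfig(reference_images=...)` with `VideoGenerationReferenceImage` entries of type `"asset"`, and the model name, helper names, and file paths are hypothetical. The validation step simply enforces the one-to-three image limit described above.

```python
import mimetypes
from pathlib import Path

MAX_REFERENCE_IMAGES = 3  # limit described in this article


def check_reference_images(paths):
    """Return the paths as a list, or raise if there are not 1 to 3 of them."""
    paths = list(paths)
    if not 1 <= len(paths) <= MAX_REFERENCE_IMAGES:
        raise ValueError(
            f"expected 1 to {MAX_REFERENCE_IMAGES} reference images, "
            f"got {len(paths)}"
        )
    return paths


def start_ingredients_to_video(prompt, image_paths,
                               model="veo-3.1-generate-preview"):
    """Start a video job guided by up to three reference images.

    Returns a long-running operation that still has to be polled.
    Assumes `pip install google-genai` and an API key in the environment;
    the model name and the "asset" reference type are assumptions.
    """
    from google import genai  # lazy import: only needed for real API calls
    from google.genai import types

    references = []
    for path in check_reference_images(image_paths):
        mime, _ = mimetypes.guess_type(str(path))
        image = types.Image(image_bytes=Path(path).read_bytes(),
                            mime_type=mime or "image/png")
        references.append(
            types.VideoGenerationReferenceImage(image=image,
                                                reference_type="asset")
        )

    client = genai.Client()
    return client.models.generate_videos(
        model=model,
        prompt=prompt,
        config=types.GenerateVideosConfig(reference_images=references),
    )
```

For the example above, `image_paths` would hold the location, person, and outfit images, ideally each with a neutral background so that unrelated scenery doesn't leak into the result.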

Video editing

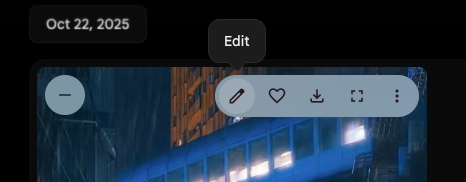

To edit a video, use the "Edit" button that pops up when we hover over a video:

This opens a window where we can select an area to edit and provide a prompt with editing instructions.

We see from the image that it seems to allow only the insertion of elements in the video. According to Veo 3.1's promotional video, we should also be able to delete elements, but I couldn't find that option. I tried specifying what to remove in the prompt, but that didn't work.

I selected the top region and asked it to add moving trains. It did that quite well; however, it also added them outside of the selected area.

Making a Longer Video With Veo 3.1

A while ago, I wrote this article about Runway ML Gen 4. In it, I created a short video about a flame and a water droplet that fall in love. However, their love doesn't work out because they can't touch each other.

That video was very simple and didn't have sound, but it did manage to convey the emotion I wanted. I was curious to try to recreate a similar video using Veo 3.1 to see how much the technology has evolved.

Below is the new version of the video I made using Veo 3.1.

To get started, I generated the flame character using Nano Banana. Then I created the first scene, with the flame walking around the jungle, by providing a single image to the "Ingredients to Video" tool.

For the second scene, I asked Veo 3.1 to make a video where the flame character would meet a water droplet sunbathing on a leaf. As a byproduct, this also generated my second character. Alternatively, we could have also generated it separately and provided both characters as input to the video.

From that point on, I kept selecting a frame of each video as the first frame of my next video, leaving the end frame blank and guiding the video generation with a prompt only.

Flow makes this straightforward. When we select a frame, we can click the "+" button to save that frame as an asset, and then use it as the first frame of the next video with the "Frames to Video" tool.

To create this video, I mostly used the "Frames to Video" tool, providing a single starting frame. I often selected a frame in the middle of a clip.

To illustrate this, here are a few of the frames I transformed into assets to use as starting points:

Because I often started from a frame in the middle of a clip, the videos didn't chain well together, so I used external editing software to cut the clips at the right positions.

There were still some points of frustration where I couldn't make the video stick to my vision and had to adapt my vision to what the AI was producing. This has been a constant challenge with every AI video tool I've worked with.

Another issue I encountered in this process is that there are some inconsistencies with the sounds. The voice of the flame character keeps changing, and, because I input a single frame for generating the next video, the soundtrack isn't very coherent either.

This second issue can be overcome by using the Video Extend feature instead. We can manually trim the clips in Flow and then extend the video from that point.

My Experience with Veo 3.1

I've experimented with using Veo 3.1 on both Google's Flow web app and through the API. My experience using it on the Flow platform was very frustrating, to say the least. Most of the time, the video generation would fail without a clear reason, showing a generic error message that didn't provide any information about the failure.

I also found the interface unintuitive. It was hard to find some of the options, like video editing and video extension.

As a programmer, I found my experience using the API to be much smoother, despite some hiccups along the way. Specifically, the documentation was lacking and the code examples left out crucial details, which made the process more time-consuming than necessary. For example, it was hard to work out exactly how to provide images to the model because some of the example code seems outdated and doesn't work.

I also wasn't able to figure out how to send a video to try the extend feature. Some research suggested that this feature might simply not be open on the API yet, but it's not clear if that's the issue.

In terms of results, I found that the videos are good overall despite still suffering from some serious limitations. I still feel there's a big gap between what I can imagine and what I can create with these models.

Conclusion

Veo 3.1 represents a step forward in AI video generation, offering more creative control, better audio, and the unique ability to produce longer and more coherent clips.

While the tool is undeniably powerful, especially for rapid prototyping and creative experimentation, its current limitations, such as clunky interfaces, occasional inconsistencies, and imperfect transitions, can be frustrating for those seeking precision.

Nevertheless, with continual improvements and increasing accessibility through APIs and platforms like Flow, Veo 3.1 signals a promising future where AI-driven video creation will become not only more intuitive but also more closely aligned with the artist’s vision. If you’re eager to explore the bleeding edge of generative video, Veo 3.1 is worth a test drive despite its quirks, and it will be exciting to watch how this technology continues to evolve in the coming months.

I also recommend checking out our guide to the new SAM3 model.