
Runway has introduced Gen-4, an image-to-video model designed to address a persistent issue in AI-generated video: maintaining consistency of characters and scenes across multiple shots.

The short films Runway showed in its release demo are impressive, and the model holds a lot of promise. In this article, we’ll test it ourselves and see whether Runway Gen-4 lives up to expectations.

What Is Runway Gen-4?

Gen-4 is Runway’s latest model for generating short video clips from an image and a text prompt. It succeeds Gen-3 Alpha, launched in mid-2024, and is part of a series of efforts to make AI-generated video more coherent and narratively useful.

Unlike earlier models, Gen-4 places greater emphasis on temporal consistency—ensuring that characters and objects persist across frames and shots without warping or disappearing. For example, take a look at this short film named The Herd:

How to Access Runway Gen-4

To access Runway Gen-4, we need a subscription. Be aware that it’s still rolling out, so depending on where you’re located, it might not be available yet or might only be available on higher-tier subscriptions. In the US, a $12 subscription gives us 625 credits to use Gen-4.

Even after subscribing, I had to wait a day for it to become available on my account.
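Because every generation spends credits, and some scenes take several attempts, it can help to estimate how far a plan’s allowance goes. The sketch below is a hypothetical back-of-the-envelope calculator, not a Runway tool. The rate of 12 credits per second of Gen-4 video is an assumption on my part, so check Runway’s current pricing before relying on it.

```python
# Hypothetical budgeting helper, not part of any Runway product.
# ASSUMPTION: the per-second rate below is not taken from this article;
# verify it against Runway's current pricing page.
ASSUMED_GEN4_CREDITS_PER_SECOND = 12


def clips_affordable(total_credits: int, credits_per_second: int, clip_seconds: int) -> int:
    """Return how many whole clips of `clip_seconds` fit in `total_credits`."""
    if credits_per_second <= 0 or clip_seconds <= 0:
        raise ValueError("rate and clip length must be positive")
    cost_per_clip = credits_per_second * clip_seconds
    # Floor division: a clip you can only partly pay for can't be generated.
    return total_credits // cost_per_clip


if __name__ == "__main__":
    # 625 credits is the allowance mentioned above; 5 and 10 seconds are
    # the clip lengths used later in this article.
    for seconds in (5, 10):
        n = clips_affordable(625, ASSUMED_GEN4_CREDITS_PER_SECOND, seconds)
        print(f"{seconds}-second clips: {n}")
```

Under that assumed rate, 625 credits would cover roughly ten 5-second clips or five 10-second clips, which leaves little room for the retries some scenes needed.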

How to Generate a Video With Runway Gen-4

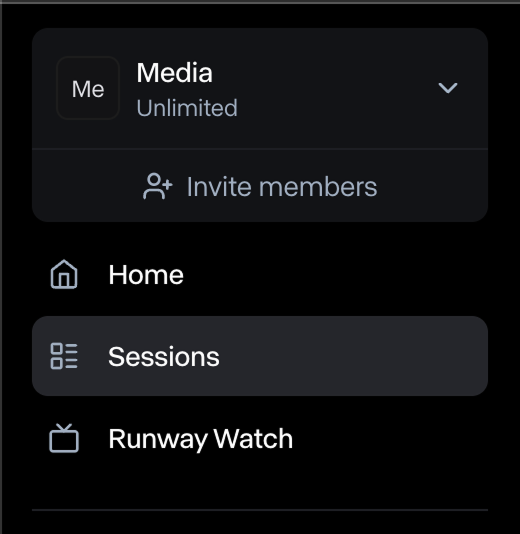

Videos in Runway ML are generated inside sessions. To create a video, we first need to create a new session by selecting the “Sessions” item in the menu on the left:

Then we click the “New Session” button on the right to create a session:

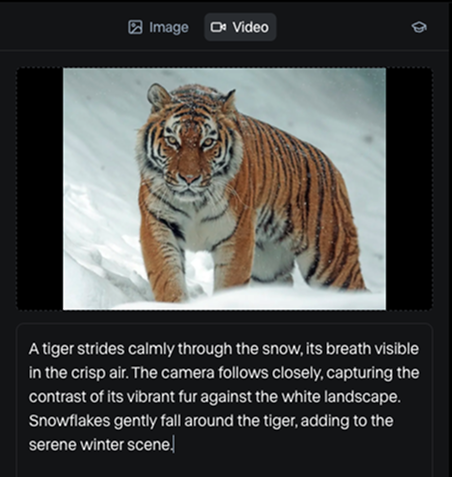

When we open the session, we’re presented with a simple interface where we can combine an image and a text prompt to generate a video:

Image source: Pexels

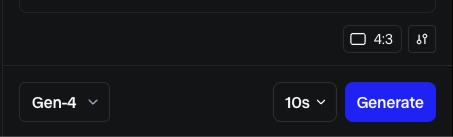

Make sure to select the Gen-4 model at the bottom:

Here’s the result:

The video is pretty good in my opinion.

If you need guidance writing a prompt, Runway provides this guideline page. To make use of it, I created an AI bot loaded with these guidelines that transforms my high-level ideas into usable Runway prompts.

Note that, unlike other video generation models out there, we can’t generate a video from text only—the model always requires an image.

How to create an image in Runway

In the first example, I used a free-to-use image that I found online. Free images like this can be a great resource for creating videos.

Runway also provides an image generation model. However, for some reason, it wasn’t available on the basic plan, and I had to subscribe to their most expensive plan to access it.

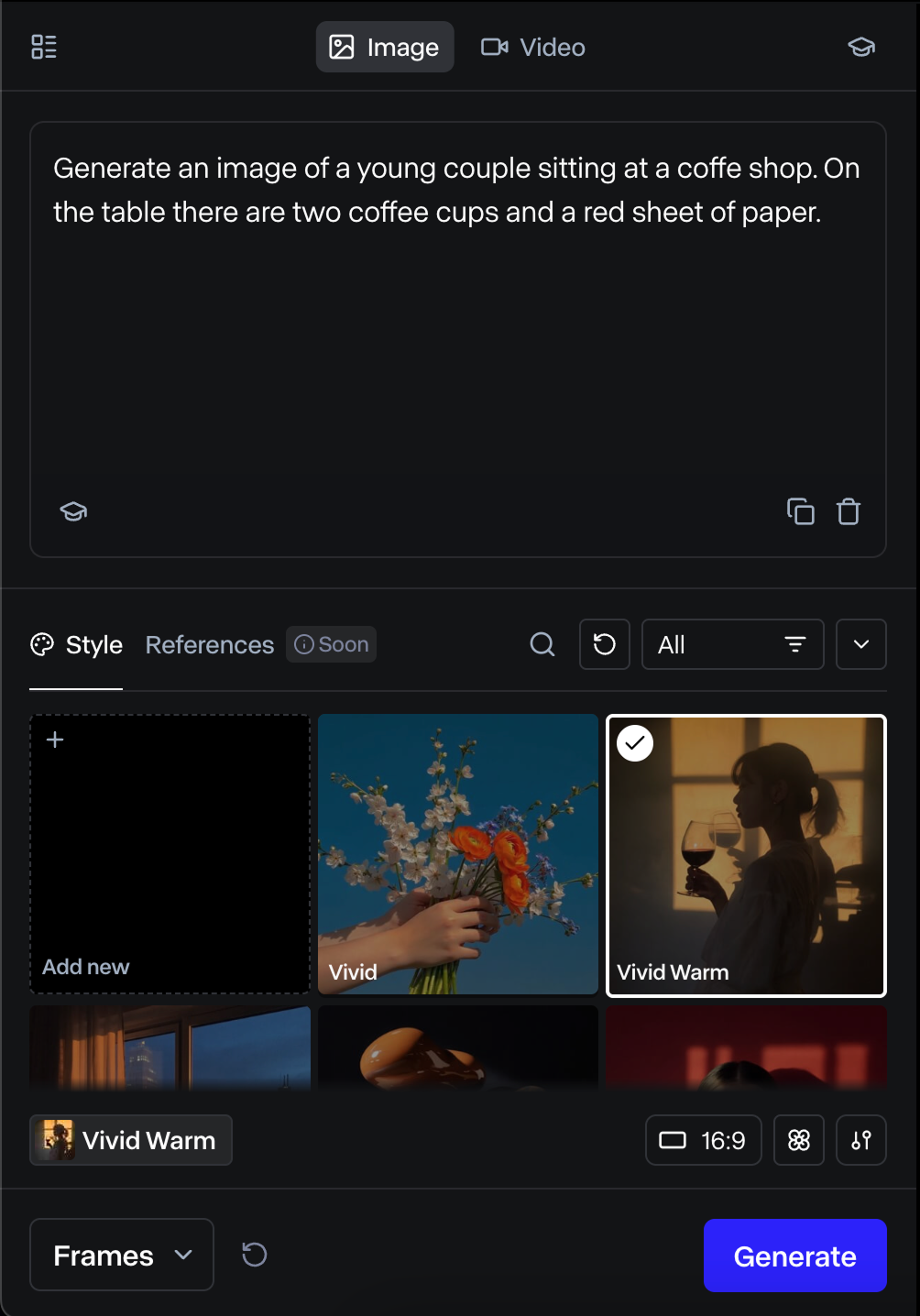

To generate an image:

- Select the “Image” mode

- Type a prompt describing the image

- Select the image style (either derived from an existing image of yours or chosen from Runway’s presets).

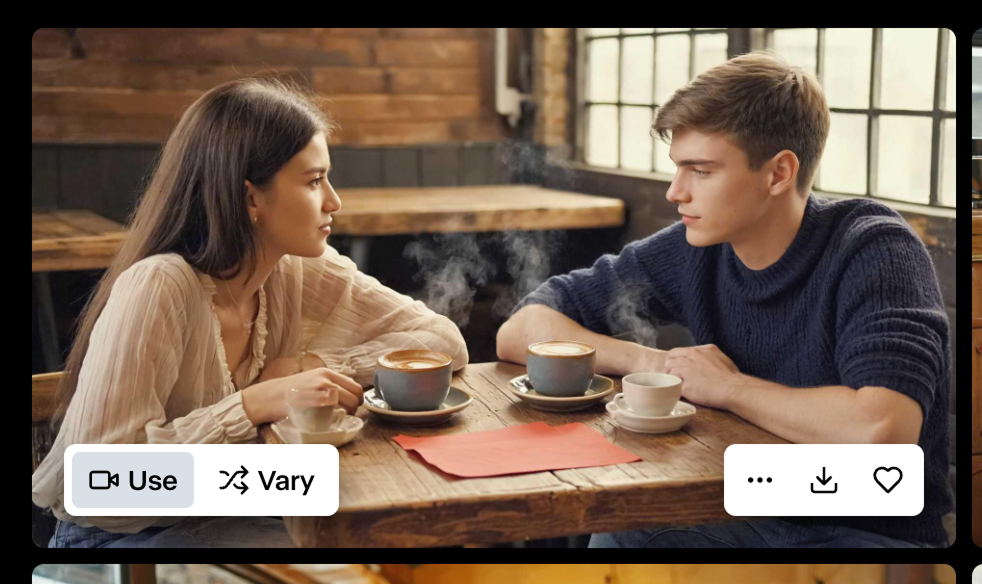

The model generated these four images:

Only the bottom two comply with the prompt: both images at the top have more than two coffee cups, and the first even has some strange artefacts.

I asked for a red sheet of paper on the table because I wanted to use this image to generate a video of the man folding an origami flower and giving it to the girl.

To do so, we can hover over the image we want to use and click the “Use” button:

I wrote a prompt asking for a fixed-camera video of the man making an origami flower and giving it to the girl. I tried it twice, first generating a 5-second video and then a 10-second one. This time, the results were quite disappointing (I used Gen-4 here as well):

Missing Features for Character Consistency

I’ve been testing a lot of AI video generation tools over the past few weeks. One of the main obstacles to creating longer content is keeping the same character consistent across multiple scenes. These tools typically generate clips only a few seconds long. That’s enough to build a longer video by combining multiple scenes, but only if the same character can appear in each of them.

In its Gen-4 introduction article, Runway makes it seem very easy to use the new model to generate longer videos:

I expected to be able to provide an image of my character, generate the scene images with that character in them, and finally generate each scene using Gen-4. However, that’s not supported, at least for now.

In the image generation tab, we see a “References” feature that isn’t available yet.

I find this misleading because, without that feature, the videos in their announcement simply aren't possible to make.

Working Around Runway’s Limitations

Fortunately, both Google and OpenAI recently released this kind of feature in their image generation models. Google’s Gemini 2.0 has a storytelling mode that generates a series of images for a given story, while OpenAI’s GPT-4o image generator can take a reference image and transform it into whatever we want. If you want to learn more about these two tools, I covered them here:

- GPT-4o Image Generation: A Guide With 8 Practical Examples

- Gemini Image Editing: A Guide With 10 Practical Examples

Generating a video with Gemini storytelling

Here’s a video I created by asking Gemini to tell a visual story about a flame and a water drop that fall in love but learn that they can’t touch each other.

In the storytelling mode, Gemini generates a sequence of images to tell a visual story. Then, I used an AI tool to generate prompts based on Runway’s guidelines to animate each of the frames. Here’s the result:

I put the clips together in video editing software, adding some cuts and simple transitions. I also had to iterate a lot in both Gemini and Runway to get the frames I wanted.

I’m quite pleased with the result. There are some glitches here and there, but overall, I was able to tell the story I wanted to tell. The story actually made me feel quite sad, which shows that even AI-generated content can carry real human emotion: behind it all, there’s still a human trying to express a message.

Generating a video with GPT-4o image generation

I find GPT-4o much more powerful and versatile than Gemini at generating images. The previous video required a lot of iterations to get close to what I wanted, and I even had to use GPT-4o to generate one of the frames.

I wanted to try a story about an adventurer who climbs into a painting and is transported into the world it depicts.

I started by asking GPT-4o to generate the character. When I was happy with the result, I thought about the frames I wanted:

- The character is looking at the painting.

- We see a close-up of the character’s face contemplating the painting.

- The character is climbing inside the painting.

- The character is now in the world of the painting.

- An aerial shot shows this vast new world.

Once I was happy with the frames, I used Gen-4 to generate a clip from each of them and put the clips together in video editing software:

For a first attempt, I think it’s not bad. The scene where the man walks into the painting needs rework: I tried multiple generations, but none of them turned out the way I wanted.

Runway Gen-4 Vs. Other Tools

Since the tools required for character consistency aren’t available in Runway yet (as of this writing), Gen-4 is, for now, just an image-to-video model. Therefore, I wanted to compare it with other image-to-video models to see how it performs.

I chose to animate the first scene of the Flame and Water Drop video, where the flame walks to the right and exits the frame.

Here’s a side-by-side comparison between Runway, Sora, and WanVideo:

Only Runway manages to perform the task. This suggests there’s huge potential for this model once all the features become available.

More Runway Gen-4 Examples

In this section, I show a few more videos that I tried to generate to give you a better understanding of what Runway Gen-4 can do at this stage.

Paper plane

I had this idea of making a video of someone building a paper airplane and then flying it. I created four frames:

- The person is making the plane.

- The person is in a field running to throw the plane.

- The person is jumping into the plane.

- The person is flying in the plane.

In this example, the model again struggled with the paper; my guess is that folding involves too much fine detail. The scene of the person jumping into the plane is also very strange.

Animating objects

I was curious to see how the model deals with animating real-world objects, so I took a photo and asked Gen-4 to make the objects in it dance. The result is quite funny, in my opinion:

Traffic light

On its website, Runway has an example of a traffic light stickman coming to life and jumping out of the traffic light. This inspired me to create a short video of a traffic light stickman running from the police and then jumping into an inactive pedestrian traffic light to hide.

Here are the three images I generated with GPT-4o, together with the videos:

Unfortunately, Gen-4 wasn’t able to bring my vision to life. I tried multiple iterations, but the results were always far off.

Conclusion

Overall, I feel that Gen-4 is a good image-to-video model, especially when it comes to familiar scenes. However, I felt a lot of friction and frustration in getting it to do what I wanted.

I also felt that Runway’s announcement was a bit misleading, because some of the features needed to make consistent long-form videos just aren’t available yet, and that wasn’t clear at all before I bought the subscription. I wish AI companies would wait until their products are finished instead of hyping them up. If it hadn’t been for recent releases by Google and OpenAI, I wouldn’t have been able to make any of the videos in this article.

I think Runway shows promise, but it’s still far from delivering a product that lets anyone with a story to tell actually tell it. We’re still worlds away from being able to imagine something, put it into words, and see it come to life.

Their examples are very beautiful, and I believe they were made with Gen-4, but I also think there was a tremendous amount of behind-the-scenes work to make them come to life.