
Databricks Genie functions as a no-code, conversational analytics interface that translates plain English questions into analytical queries, providing instant insights, visualizations, and summaries.

Think of it like chatting with a data expert who gives you answers in seconds without writing complex SQL queries. In this article, I will show you how all this works.

If you are still new to Databricks, I recommend taking our Introduction to Databricks and Databricks Concepts courses to learn the core features and applications of Databricks and get a structured path to start your learning.

Understanding Databricks Genie

Databricks Genie is an AI-powered conversational interface that has changed how users interact with data across the Databricks Data Intelligence Platform. Positioned at the intersection of AI, natural language processing (NLP), and business intelligence (BI), Genie lets users ask questions in plain language and receive accurate, data-driven answers instantly. It also fits into a growing class of tools like Power BI Copilot, Tableau Ask Data, Amazon QuickSight Q, and ThoughtSpot, all of which allow you to ask questions in natural language. What sets Genie apart is that it is within the Databricks ecosystem, which allows it to integrate with large-scale data and analytics workflows with minimal issues.

A key differentiating feature of Databricks Genie is its advanced, multi-component architecture, which layers several specialized tools to ensure high accuracy and contextual understanding. Unlike traditional BI tools that rely on rigid query builders or predefined dashboards, Genie integrates powerful processing models directly with Databricks’ unified data and governance framework. This approach allows Genie to dynamically interpret natural language questions, correctly map them to the necessary data structures, and generate precise, executable SQL queries across all your data sources. From my extensive experience in SQL, this is something I appreciate since Genie can provide clean SQL queries that are easy to understand and troubleshoot.

Databricks Genie Key Features

I tried out Databricks Genie, and I found the following features especially impressive:

-

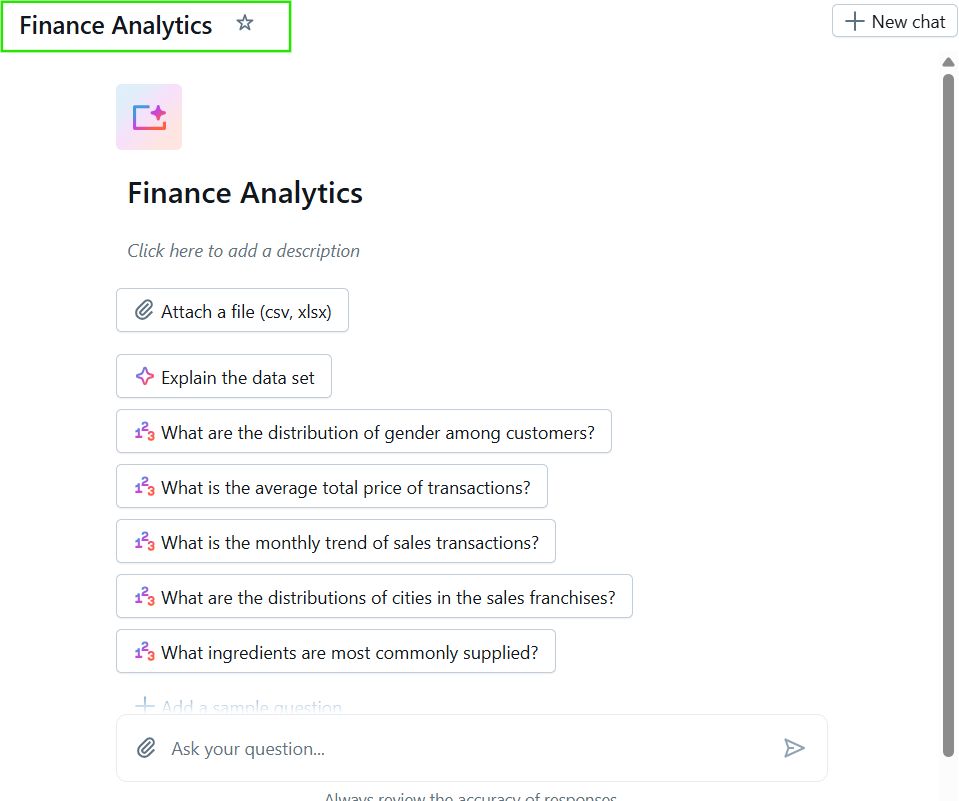

Natural language Q&A: I started to mention this earlier, and it's the most obvious feature. But I should also say that you can also bring in your own CSV or Excel files and explore them alongside your organization's datasets using simple conversational prompts.

- Collaboration tools: The platform keeps chat history for ongoing context, and you can give feedback on responses, which then improves accuracy. Sample questions are also offered to guide usage. Also useful: Genie integrates with Slack, Microsoft Teams, and (of course) Databricks notebooks.

- Iterative learning: Genie uses a compound AI framework and learns from human feedback, so its responses improve over time as you use it.

The Architecture and Technology Behind Genie

Before we see what Genie can do, you're probably wondering how it works under the hood. This section covers the architecture and technology behind Genie, which are designed for performance, flexibility, and accuracy.

Compound AI systems and their benefits

Genie is a compound AI system, which is to say that it's an architecture that coordinates multiple specialized AI components and tools to solve complex problems. Databricks' documentation describes this at a high level: when a user submits a question, Genie parses the request, identifies relevant data sources, and generates an appropriate response. Conceptually, you can think of that work as divided among agents like these:

- Intent parsing / NLU agent: Works out what the user is asking for, such as the metric, filter, time range, or aggregation.

- Planner/orchestration agent: Breaks multi-step or compound questions into an ordered execution plan.

- Retriever/grounding agent: Finds the right tables, columns, dashboards, and prior queries to ground the request.

- SQL generation agent: Translates the plan into syntactically correct, optimized SQL.

- Execution and adapter agent: Runs the query against the correct data source and formats the raw results.

- Verifier/safety agent: Checks permissions, data lineage, and ensures the result complies with governance rules.

- Summarizer/visualization agent: Creates human-friendly text summaries, charts, and tables.

The multi-agent design in Genie matters because each agent can be fine-tuned for a single use case. New agents, such as geospatial or time-series specialists, can also be added without a complete overhaul of the system. And as a developer, you can audit and benchmark each agent separately.
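To make the idea of separately testable stages concrete, here is a minimal sketch of a pipeline built from small functions. It is not Genie's implementation (those internals are not public); the stage names, the `Request` class, and the `sales` table are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:
    """Hypothetical container passed from one stage to the next."""
    question: str
    context: Dict[str, str] = field(default_factory=dict)
    trace: List[str] = field(default_factory=list)


Stage = Callable[[Request], Request]


def parse_intent(req: Request) -> Request:
    # Toy intent parsing: pick an aggregation from a keyword in the question.
    wants_total = "total" in req.question.lower()
    req.context["aggregation"] = "SUM" if wants_total else "COUNT"
    return req


def ground(req: Request) -> Request:
    # Toy grounding: map the request to an illustrative table and column.
    req.context["table"] = "sales"
    req.context["column"] = "amount"
    return req


def generate_sql(req: Request) -> Request:
    c = req.context
    req.context["sql"] = f"SELECT {c['aggregation']}({c['column']}) FROM {c['table']}"
    return req


def run_pipeline(req: Request, stages: List[Stage]) -> Request:
    # Run the stages in order and record which ones ran, for auditing.
    for stage in stages:
        req = stage(req)
        req.trace.append(stage.__name__)
    return req


result = run_pipeline(
    Request("What is the total sales amount?"),
    [parse_intent, ground, generate_sql],
)
print(result.context["sql"])  # SELECT SUM(amount) FROM sales
print(result.trace)           # ['parse_intent', 'ground', 'generate_sql']
```

Because every stage has the same signature, you can call one on its own (for example `parse_intent(Request("How many orders?"))`) to benchmark it, or add a new stage to the list without touching the others.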

Curated semantic knowledge stores

The knowledge store provides Genie with context within your organization. The result should be more accurate translations from language to query. Components include:

- Semantic metadata: Includes friendly column names, canonical metrics, and business definitions that instruct Genie on business logic.

- Value sampling: These are small representative samples of column contents to help the retriever and SQL generator pick sensible defaults and detect likely filters.

- Value dictionaries and entity lists: These map the terms users type to the actual values stored in the data, so that synonyms resolve to the correct value.

- Embeddings/vector indexes: For fuzzy matching and semantic retrieval of relevant docs, notebooks, dashboards, and prior queries.

- Lineage and governance metadata: This controls who can access what, trusted sources, and the timestamps of the last refresh.
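As a rough illustration of the semantic metadata and value dictionaries described above, the sketch below resolves a user's wording to a column and a stored value. The dictionaries, column names, and helper functions are hypothetical; a real knowledge store is curated in the Genie Space rather than hard-coded like this.

```python
from typing import Dict, Optional

# Hypothetical semantic metadata: business terms mapped to physical columns.
COLUMN_SYNONYMS: Dict[str, str] = {
    "revenue": "net_revenue_usd",
    "sales": "net_revenue_usd",
    "region": "sales_region",
}

# Hypothetical value dictionary: user wording mapped to stored values, per column.
VALUE_DICTIONARY: Dict[str, Dict[str, str]] = {
    "sales_region": {
        "emea": "EMEA",
        "europe": "EMEA",
        "north america": "NA",
        "us": "NA",
    }
}


def resolve_column(term: str) -> Optional[str]:
    """Return the column a business term refers to, or None if unknown."""
    return COLUMN_SYNONYMS.get(term.strip().lower())


def resolve_value(column: str, term: str) -> Optional[str]:
    """Return the stored value a user term refers to, or None if unknown."""
    return VALUE_DICTIONARY.get(column, {}).get(term.strip().lower())


print(resolve_column("Revenue"))                 # net_revenue_usd
print(resolve_value("sales_region", "Europe"))   # EMEA
print(resolve_value("sales_region", "Mars"))     # None
```

Returning `None` for an unknown term is a deliberate choice in this sketch: it gives the caller a clear signal to ask a clarifying question instead of guessing.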

How Genie generates responses

The following is the usual flow from user input to final presentation in Genie:

Step 1: User input (natural language)

The user types a question or uploads a file (CSV/Excel) and asks a question about it.

Step 2: Intent parsing and normalization

The Orchestrator and Intent Agent analyze the query. They consult Unity Catalog to see which tables and columns the user has permission to access.

Step 3: Context grounding (knowledge store + retrieval)

The retriever consults semantic metadata, embedding indexes, and recent query history to map user terms to concrete tables, columns, and canonical metrics.

Step 4: Planning and decomposition

If the question involves multiple steps, the planner breaks the task into ordered subtasks.

Step 5: SQL / query generation

The SQL generation agent creates optimized, auditable SQL (or API calls for other systems). It may propose multiple query shapes, such as summary vs detailed, and select the best based on cost estimates and permissions.

Step 6: Permission and governance checks

Before execution, a verifier checks role-based access, data sensitivity labels, masking rules, and audit requirements to ensure compliance. If the user lacks rights, the system either denies the request or returns a masked/safe alternative.

Step 7: Execution and adapter layer

The system executes the queries against the appropriate compute (Databricks SQL warehouse, lakehouse tables, external databases, or uploaded file adapters). Results are streamed back and sampled for responsiveness.

Step 8: Post-processing and validation

Results are validated, and if something appears to be incorrect, the verifier can trigger re-runs or ask the user a clarifying question.

Step 9: Summarization, visualization, and explanation

A summarizer generates plain-language takeaways and suggested visualizations; the visualization agent renders charts and tables and prepares shareable insight cards.

Step 10: Feedback loop and learning

User feedback and query logs are fed back to improve the knowledge store, refine synonyms, and retrain agents where necessary.
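The middle of this flow (permission check, execution, and validation) can be sketched in a few lines. This is a simplified stand-in, not how Genie is built: it uses Python's built-in `sqlite3` in place of a SQL warehouse, and the roles, the `ALLOWED_TABLES` grants, and the status strings are hypothetical.

```python
import sqlite3
from typing import Dict, Set

# Hypothetical grants: which tables each role may query.
ALLOWED_TABLES: Dict[str, Set[str]] = {"analyst": {"orders"}, "intern": set()}


def check_permission(role: str, table: str) -> bool:
    """Governance check (Step 6): is this role allowed to read the table?"""
    return table in ALLOWED_TABLES.get(role, set())


def answer(conn: sqlite3.Connection, role: str, table: str, sql: str) -> dict:
    if not check_permission(role, table):
        return {"status": "denied", "rows": []}
    rows = conn.execute(sql).fetchall()  # Execution (Step 7)
    if not rows:
        # Validation (Step 8): an empty result triggers a clarifying question.
        return {"status": "needs_clarification", "rows": []}
    return {"status": "ok", "rows": rows}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EMEA", 120), ("NA", 80), ("EMEA", 30)],
)

sql = "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
print(answer(conn, "analyst", "orders", sql))
# {'status': 'ok', 'rows': [('EMEA', 150), ('NA', 80)]}
print(answer(conn, "intern", "orders", sql))
# {'status': 'denied', 'rows': []}
```

Note that the permission check runs before the query does, which mirrors the ordering of Steps 6 and 7 above.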

What Are Genie Spaces and How to Set Them Up

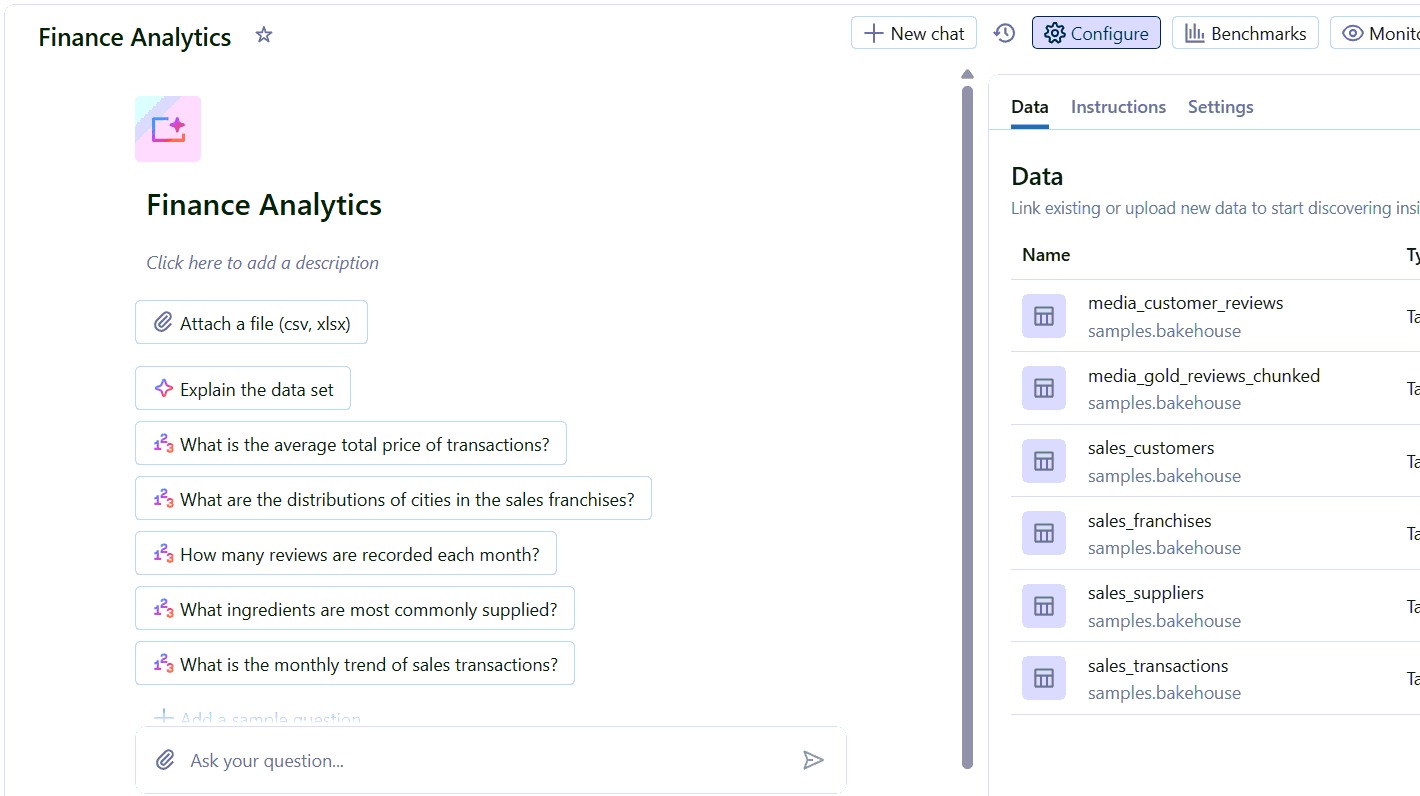

Genie Spaces are curated environments within Databricks Genie that define the data, semantics, and business logic powering conversational analytics. Think of a Genie Space as the context layer where you tell Genie what data to use, what it means, and how it should be interpreted.

I will show you how to set up Genie Spaces in Databricks.

Technical requirements and initial setup

Before creating a Genie Space, you need the following technical and governance prerequisites in place:

- Compute resources: Ensure your Databricks workspace has an active SQL warehouse or compute cluster available for query execution.

- Permissions: You’ll need the appropriate Unity Catalog privileges to access and manage datasets, as well as admin or editor permissions within Genie.

- Governance setup: The workspace should already have data lineage tracking, classification tags, and access policies defined to enforce compliance.

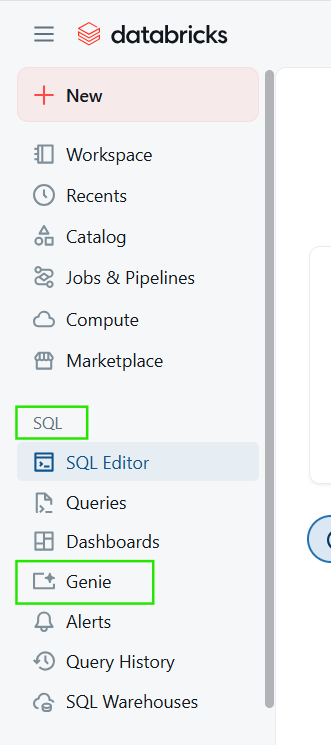

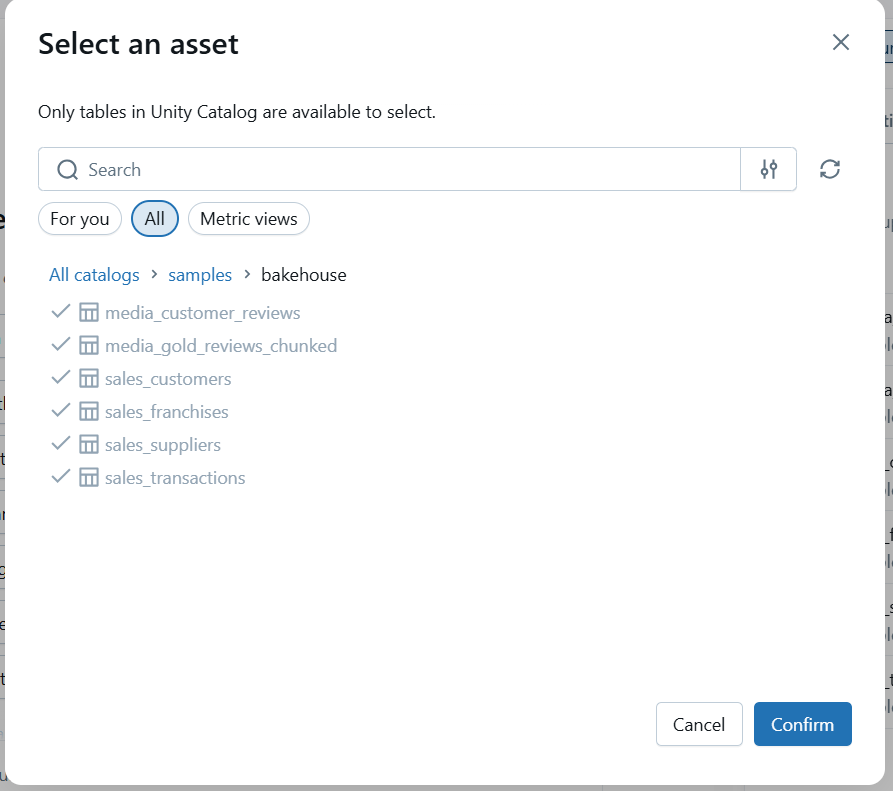

For the initial setup, you can use these steps to create a new Genie Space either through the Databricks UI or via the REST API:

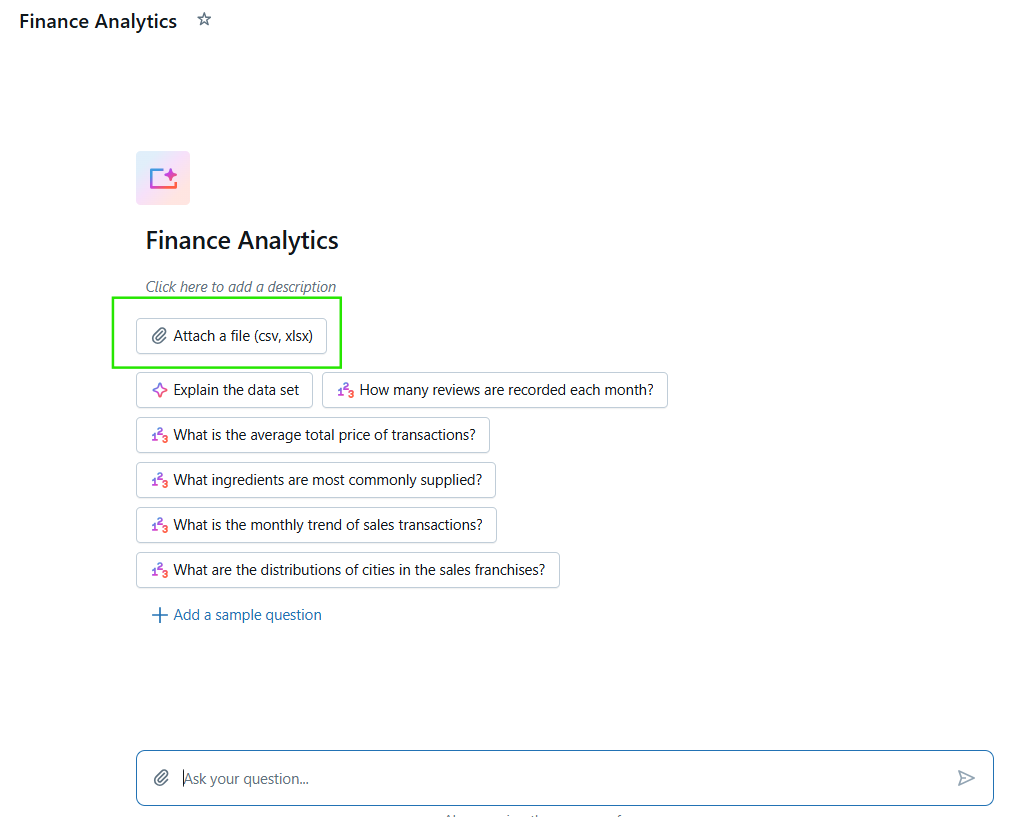

- Navigate to the "Genie Spaces" section in the SQL Persona.

- Click "Create Space" and give it a name such as "Finance Analytics."

- Select the tables and views from Unity Catalog that will be "in-scope" for this Space.

- Save and publish the Space for use by authorized teams.

Configuring and curating the space

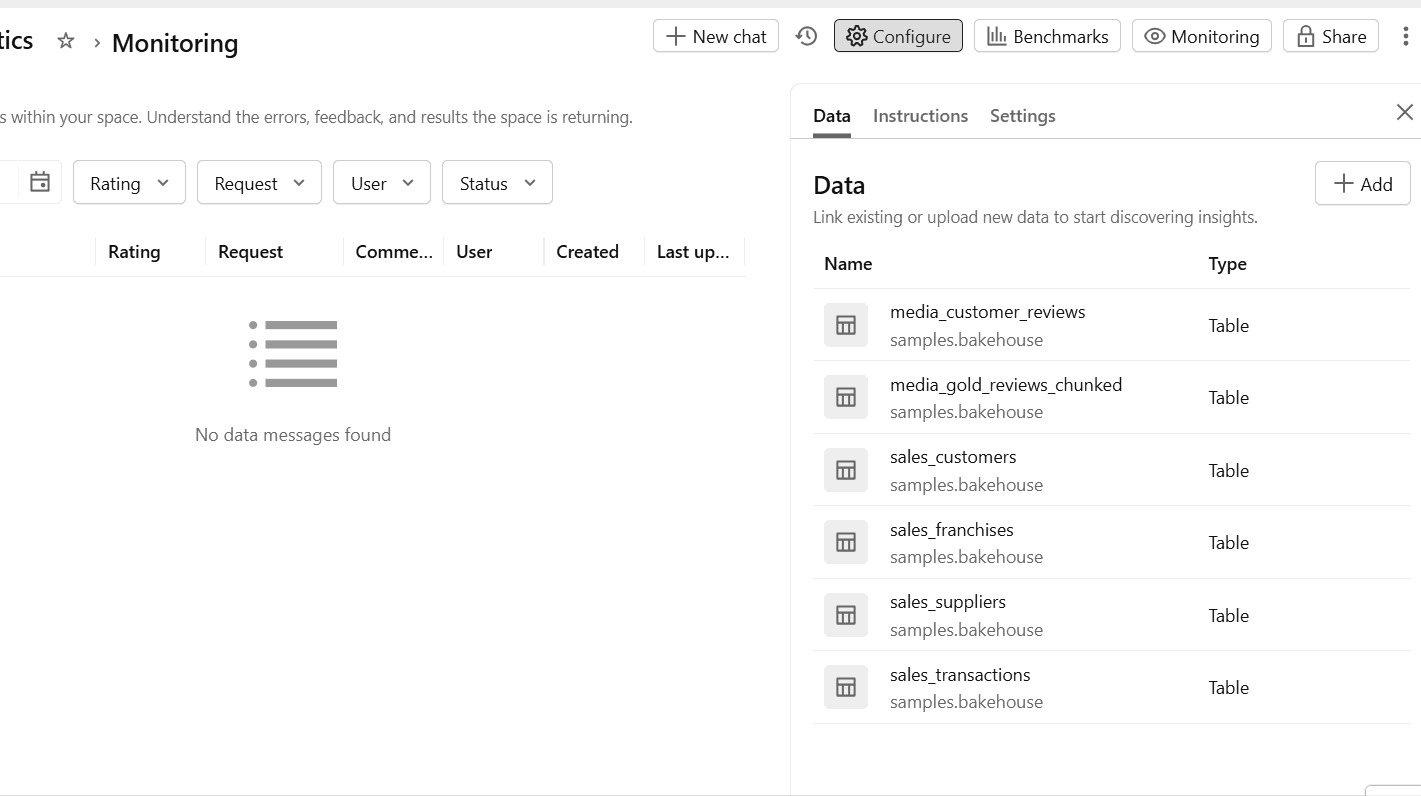

After creating the space, the next step is to curate the knowledge store. This step will enhance the Genie Space with semantic details and examples to improve interpretability and user experience. You can do the following to achieve this:

- Edit column metadata: Add human-friendly names, business definitions, and usage notes to improve Genie’s understanding of user intent.

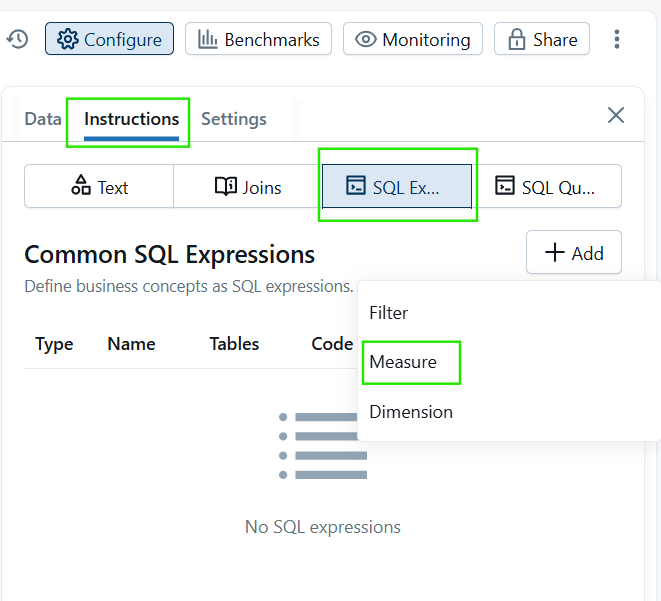

- Define SQL expressions: Create custom metrics, calculated fields, or aliases such as “Net Revenue.”

- Add trusted assets: You can also link relevant dashboards, notebooks, or reference tables as trusted data sources.

- Add sample queries: Include example questions and responses to help Genie learn context and guide users.
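To show what a reusable SQL expression such as "Net Revenue" buys you, here is a small sketch that defines the metric once and reuses it in a query. The `METRICS` dictionary, the `metric_query` helper, and the table and column names are hypothetical, and `sqlite3` stands in for a Databricks SQL warehouse.

```python
import sqlite3
from typing import Dict

# Hypothetical metric definition: one canonical formula for "Net Revenue".
METRICS: Dict[str, str] = {
    "net_revenue": "SUM(gross_amount - discount - refund)",
}


def metric_query(metric: str, table: str, group_by: str) -> str:
    """Build a grouped query from a named metric so the formula lives in one place."""
    expr = METRICS[metric]
    return (
        f"SELECT {group_by}, {expr} AS {metric} "
        f"FROM {table} GROUP BY {group_by} ORDER BY {group_by}"
    )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (region TEXT, gross_amount INTEGER, discount INTEGER, refund INTEGER)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?)",
    [("EMEA", 100, 10, 5), ("EMEA", 50, 0, 0), ("NA", 200, 20, 30)],
)

rows = conn.execute(metric_query("net_revenue", "sales", "region")).fetchall()
print(rows)  # [('EMEA', 135), ('NA', 150)]
```

Keeping the formula in one place means everyone who asks about net revenue gets the same calculation, which is the point of defining it in the knowledge store.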

Defining scope and involving domain experts

Each Genie Space should have a clear purpose and audience. For example, a Sales Analytics Space might focus on pipeline, bookings, and revenue. Similarly, a Customer Insights Space could revolve around churn, engagement, and NPS data. Explicitly defining each space's scope prevents confusion and improves response precision.

It is also important to involve domain experts from that business unit. If creating a Sales Space, the data analyst setting up the Space should work directly with the Head of Sales. These experts provide accurate business terminology, validate KPI definitions, and are familiar with the common questions their team needs to address.

Instruction types and benchmarking

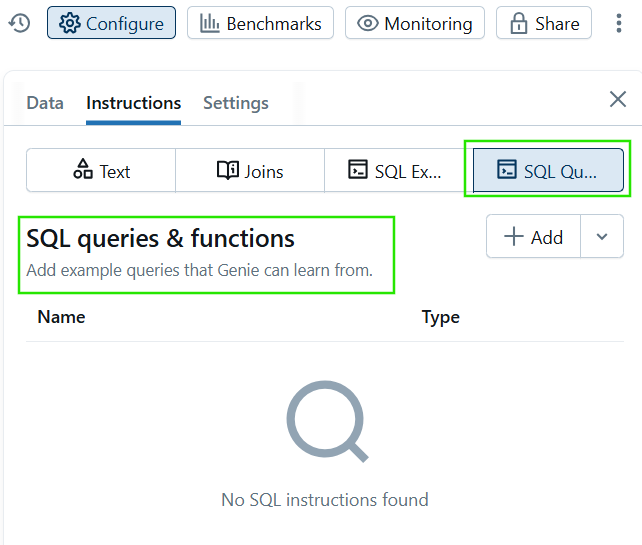

You can guide Genie in several ways within the Knowledge Store, each serving a different purpose:

- SQL expressions: Teach Genie how to compute complex metrics or KPIs.

- Example queries: Provide concrete examples that illustrate how users can ask questions effectively.

- Sample questions: Offer quick-start prompts visible in the Genie chat window to encourage exploration.

To ensure consistent quality, always test and benchmark Genie’s responses by running representative questions across all Spaces. Also, validate results for accuracy, completeness, and compliance.

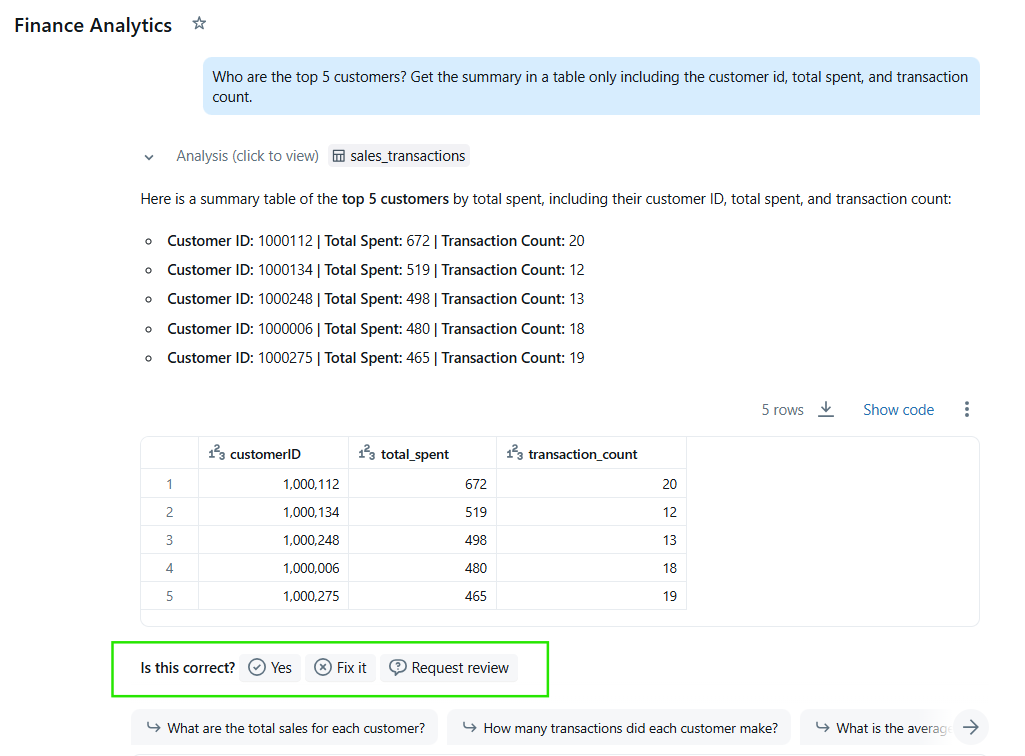

User interaction and governance

Once you have configured a Genie Space, you can interact with it through the conversational interface. Here, you can ask natural language questions relevant to the Space’s domain. You can also ask follow-up questions to refine the results and provide feedback. The interface allows you to view results in different formats, including summaries, visualizations, and data tables.

It is important to note that Genie automatically enforces governance and permissions, exposing only the data to which users are authorized. Role-based access, data masking, and lineage visibility ensure all interactions remain secure and auditable.

Integration, APIs, and Advanced Use Cases

Databricks Genie offers flexible API endpoints and integration options that connect conversational analytics to the broader ecosystem of business applications, collaboration tools, and AI systems. Let’s look at some of these features below.

Genie Conversation API and integrations

The Genie Conversation API allows developers to connect NLP directly into business applications, chatbots, and external data systems. This API handles necessary steps, including authentication, initiating a conversation, checking query status, and retrieving results in JSON format, making the data easy to parse and integrate into various platforms.
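The authenticate, start, poll, and retrieve sequence can be sketched with the standard library alone. Treat this as a sketch under assumptions: the endpoint paths, the `content` request field, the `conversation_id` and `message_id` response fields, and the status values reflect my understanding of the public REST API and should be checked against the current API reference. The host, token, and space ID are placeholders, and the `transport` parameter is my own addition so the function can be tested without a network.

```python
import json
import time
import urllib.request
from typing import Callable, Dict, Optional

# A transport takes (method, url, headers, body) and returns parsed JSON.
Transport = Callable[[str, str, Dict[str, str], Optional[dict]], dict]

# Assumed terminal states; verify the exact values in the API reference.
TERMINAL_STATUSES = {"COMPLETED", "FAILED", "CANCELLED"}


def http_transport(method: str, url: str, headers: Dict[str, str],
                   body: Optional[dict]) -> dict:
    """Default transport: a plain HTTPS call using urllib."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(url, data=data, headers=headers, method=method)
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def ask_genie(host: str, token: str, space_id: str, question: str,
              transport: Transport = http_transport,
              poll_seconds: float = 2.0, max_polls: int = 30) -> dict:
    """Start a conversation, then poll the message until it reaches a terminal status."""
    headers = {
        "Authorization": f"Bearer {token}",  # personal access or OAuth token
        "Content-Type": "application/json",
    }
    base = f"{host}/api/2.0/genie/spaces/{space_id}"  # assumed path

    started = transport("POST", f"{base}/start-conversation", headers,
                        {"content": question})
    conv_id = started["conversation_id"]
    msg_id = started["message_id"]

    msg_url = f"{base}/conversations/{conv_id}/messages/{msg_id}"
    for _ in range(max_polls):
        message = transport("GET", msg_url, headers, None)
        if message.get("status") in TERMINAL_STATUSES:
            return message
        time.sleep(poll_seconds)
    raise TimeoutError("Genie did not finish within the polling budget")


# Example call (placeholders, not run here):
# reply = ask_genie("https://<workspace-host>", "<token>", "<space-id>",
#                   "What were total bookings last quarter?")
```

Polling with a fixed interval and an upper bound keeps the client simple and avoids hammering the endpoint, which matters given the rate limits discussed next.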

Genie also supports integration with popular collaboration platforms, which I mentioned earlier in the article, including Slack, Microsoft Teams, and Databricks notebooks. I'm sure more integrations will happen soon.

When implementing integrations using the Databricks API, be mindful of limits, such as concurrent query requests, token limits, and response caching, to maintain reliability. To optimize integration, use batching requests, caching of frequent queries, and scheduling of off-peak workloads.
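Caching frequent questions is the easiest of these optimizations to sketch. The class below is a minimal time-based cache that sits in front of whatever function actually calls Genie; `TTLCache`, its parameters, and the `fake_fetch` stand-in are hypothetical names for illustration, not part of any Databricks SDK.

```python
import time
from typing import Callable, Dict, List, Tuple


class TTLCache:
    """Cache answers per normalized question for a fixed number of seconds."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch      # the function that really asks Genie
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._store: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(question: str) -> str:
        # Normalize case and whitespace so trivial variants share one entry.
        return " ".join(question.lower().split())

    def get(self, question: str) -> str:
        key = self._key(question)
        now = self._clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]                    # fresh entry: no API call
        answer = self._fetch(question)       # miss or expired: call through
        self._store[key] = (now, answer)
        return answer


api_calls: List[str] = []


def fake_fetch(question: str) -> str:
    # Stand-in for a real API call; records how often it is invoked.
    api_calls.append(question)
    return f"answer to: {question}"


cache = TTLCache(fake_fetch, ttl_seconds=60)
cache.get("Total sales by region?")
cache.get("  total SALES by region? ")  # served from the cache
print(len(api_calls))  # 1
```

A short time-to-live is a trade-off: it cuts repeated calls for popular questions, but answers can be stale until the entry expires, so pick a value that matches how often the underlying data refreshes.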

Advanced features and multi-agent systems

Genie’s multi-component architecture supports more sophisticated analytical workflows, such as thematic extraction, sentiment analysis, and combining insights across multiple datasets. The system's growing knowledge store further enhances these capabilities by providing richer definitions of business terms and a much broader data context.

Next-generation knowledge store and product updates

Recent product updates continue to expand Genie’s reach and usability. They include the following:

- File upload support: Users can now upload CSV or Excel files directly into Genie conversations for instant, ad-hoc exploration.

- Enhanced semantic metadata: Genie now better understands and applies business semantics from Unity Catalog and the knowledge store, improving contextual accuracy in responses.

- Cross-platform accessibility: Genie experiences are increasingly consistent across web, notebook, and chat integrations, enabling a unified analytics experience for all users.

- Performance and optimization improvements: Faster SQL generation, improved caching, and smarter query planning reduce latency and enhance responsiveness.

Evaluating and Improving Genie Responses

After you have created a Genie Space, it is a best practice to keep evaluating and improving Genie’s responses. Here are some ideas:

Benchmarking and feedback

To test accuracy, you set up benchmarks using test questions and their exact, correct SQL answers. The system scores Genie's performance by comparing its generated output to your expected result. These responses are then categorized as "Good" or sent for "Manual review." This process quantifies the quality and reliability of Genie's answers across various user queries.
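The comparison idea behind benchmarking can be sketched locally. This is not Genie's scoring logic, only an illustration of comparing result sets: the `rate` function, the sample table, and the benchmark entries are hypothetical, and `sqlite3` stands in for a SQL warehouse.

```python
import sqlite3
from typing import List, Tuple


def result_rows(conn: sqlite3.Connection, sql: str) -> List[tuple]:
    # Sort so that row order does not affect the comparison.
    return sorted(conn.execute(sql).fetchall())


def rate(conn: sqlite3.Connection, generated_sql: str, expected_sql: str) -> str:
    """Label a generated query by comparing its result set to the expected one."""
    try:
        generated = result_rows(conn, generated_sql)
    except sqlite3.Error:
        return "Manual review"  # the generated SQL did not run
    expected = result_rows(conn, expected_sql)
    return "Good" if generated == expected else "Manual review"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EMEA", 120), ("NA", 80), ("EMEA", 30)],
)

# Each entry: (test question, SQL the assistant produced, known-correct SQL).
benchmarks: List[Tuple[str, str, str]] = [
    ("Total sales by region",
     "SELECT region, SUM(amount) FROM orders GROUP BY region",
     "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"),
    ("Number of orders",
     "SELECT COUNT(DISTINCT region) FROM orders",
     "SELECT COUNT(*) FROM orders"),
]

ratings = {question: rate(conn, gen, exp) for question, gen, exp in benchmarks}
print(ratings)
# {'Total sales by region': 'Good', 'Number of orders': 'Manual review'}
```

Comparing result sets instead of SQL text is a deliberate choice here: two differently written queries can be equally correct, so only the output should decide the rating.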

Iterative training and response evaluation

Genie allows users to rate responses, flag issues, and provide clarifications that inform iterative training and tuning of the system. This feedback loop helps authors refine instructions, example SQL queries, and metadata to better guide Genie’s interpretations. Over time, these adjustments improve the AI’s ability to handle diverse queries and reduce error rates.

Monitoring and continuous improvement tools

Databricks offers different monitoring tools and dashboards to evaluate Genie’s operational health and user engagement. These tools allow administrators to track query success and failure rates across Spaces. You can also use these tools to identify frequently asked questions and topics where Genie struggles or delivers inconsistent results. The tools will help analyze feedback trends to spot areas for retraining or clarification, and review system logs and audit trails for compliance and quality assurance.
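If you export interaction logs, the two metrics mentioned above (success rate per Space and the questions that fail most often) take only a few lines to compute. The record layout below is hypothetical; real audit or system-table schemas will differ, so adapt the field names to whatever your workspace provides.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# Hypothetical exported log records; real schemas will differ.
LOGS: List[dict] = [
    {"space": "Sales", "question": "total bookings", "ok": True},
    {"space": "Sales", "question": "pipeline by stage", "ok": True},
    {"space": "Sales", "question": "churn by region", "ok": False},
    {"space": "Sales", "question": "revenue last quarter", "ok": True},
    {"space": "Finance", "question": "churn by region", "ok": False},
    {"space": "Finance", "question": "opex by month", "ok": True},
]


def success_rates(logs: List[dict]) -> Dict[str, float]:
    """Share of successful queries per Space."""
    totals: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # [successes, total]
    for rec in logs:
        totals[rec["space"]][0] += int(rec["ok"])
        totals[rec["space"]][1] += 1
    return {space: ok / n for space, (ok, n) in totals.items()}


def top_failures(logs: List[dict], k: int = 3) -> List[Tuple[str, int]]:
    """Questions that fail most often, across all Spaces."""
    failed = Counter(rec["question"] for rec in logs if not rec["ok"])
    return failed.most_common(k)


print(success_rates(LOGS))  # {'Sales': 0.75, 'Finance': 0.5}
print(top_failures(LOGS))   # [('churn by region', 2)]
```

A question that fails in more than one Space, like the churn example here, is usually a sign that a metric definition or synonym is missing from the knowledge store.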

Conclusion

Databricks Genie has helped push data democratization forward by allowing people across an organization to get meaningful insights through simple natural-language questions. In my own work with SQL-focused workflows, I’ve often seen how even basic questions can turn into technical requests, so removing that barrier genuinely changes how teams access info.

Looking ahead, this shift mirrors what’s happening across the analytics world. Conversational analytics is steadily becoming more natural and context-aware. Genie will continue to evolve with deeper semantic understanding and more proactive intelligence.

If you want to become a professional data engineer, I recommend taking our Introduction to dbt and Big Data Fundamentals with PySpark to learn how to perform ETL pipelines using SQL for Big Data analysis. I also recommend checking our Big Data with PySpark skill track to learn practical skills using the PySpark API on a hands-on project. As the next step, I recommend our Associate Data Engineer in SQL career track to become a proficient data engineer.

Databricks Genie FAQs

How does Genie work?

Databricks Genie translates user questions into SQL queries, runs them on Databricks data, and returns insights with text, tables, and visuals.

What are Genie Spaces?

Genie Spaces are curated environments that define data, semantics, and logic, powering Genie’s conversational analytics.

Can I integrate Genie with other tools?

Yes, you can integrate Genie with Slack, Microsoft Teams, notebooks, and external apps through its Conversation API.

Does Genie support file uploads?

Yes, you can upload CSV or Excel files for ad-hoc exploration and instant analysis through natural language queries.

Can Genie handle real-time data?

Yes, Genie supports real-time data intelligence and ad-hoc analysis, including file uploads for flexible queries.