
So you've just heard about Databricks Unity Catalog. And you're now left wondering what it's all about and how it plays a role in modern data management.

This comprehensive guide will introduce you to Databricks Unity Catalog and help you understand its importance in data analytics in the modern data stack.

What is Databricks Unity Catalog?

Databricks Unity Catalog is a unified governance service for data and AI assets on the Databricks platform. It brings metadata, access control, auditing, and lineage for tables, files, machine learning models, and other assets into one central place that every attached workspace shares. This makes it easier to find, access, and govern both structured and unstructured data.

As organizations scale their data operations, managing access, governance, and compliance across multiple data platforms becomes complex. Unity Catalog addresses this challenge by providing a unified layer for metadata, access control, and lineage tracking, reducing operational overhead.

This centralized governance approach also simplifies sharing common data products, such as:

- Machine learning models

- Notebooks

- Dashboards

- Files

The core components of Databricks Unity Catalog include:

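- Metastore: The top-level container for metadata in Unity Catalog. It registers data and AI assets and the permissions that govern them, and an account has one metastore per cloud region, shared by the workspaces attached to it.

- Catalog: The first level of Unity Catalog's three-level namespace (catalog.schema.object), used to organize data assets, for example by team, project, or environment.

- Schema: The second level of the namespace (also called a database). A schema groups tables, views, volumes, functions, and models inside a catalog.

- Granularity: The level at which access can be managed. Privileges can be granted on a catalog, schema, table, view, or volume, and row filters and column masks extend control down to individual rows and columns.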

- Tables, views, and volumes: These are the fundamental data objects in Databricks Unity Catalog used to represent structured and unstructured data.

- Data lineage: A record of how data flows from its sources to downstream tables, notebooks, jobs, and dashboards, captured automatically at runtime and viewable as a graph. It helps teams trace changes and troubleshoot data issues.

These components work together to provide a comprehensive data governance solution that simplifies data management and promotes collaboration across teams.
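To make the hierarchy concrete, every object in Unity Catalog is addressed by a three-level name, catalog.schema.object. The statements below are a sketch that assumes the demo_catalog catalog, analytics schema, and sales_data table created in the tutorial later in this guide; substitute your own names.

```sql
-- List what the current metastore exposes, one level at a time
SHOW CATALOGS;
SHOW SCHEMAS IN demo_catalog;
SHOW TABLES IN demo_catalog.analytics;

-- Query a table by its fully qualified three-level name
SELECT * FROM demo_catalog.analytics.sales_data LIMIT 10;
```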

Benefits of Unity Catalog

There are several key benefits of using Databricks Unity Catalog, as we’ve outlined below:

1. Data governance and compliance

Databricks Unity Catalog provides a centralized location for storing and managing metadata, ensuring data governance and compliance across different systems.

For example, lineage shows which tables and notebooks fed a machine learning model, and models registered in Unity Catalog are versioned, so a team can trace a problem back to its source data and revert to an earlier model version if needed.

This helps organizations maintain data integrity, comply with regulations, and reduce risks.

2. Collaboration and self-service analytics

Unity Catalog encourages collaboration across teams by providing a single, shared catalog of data assets. This breaks down silos and fosters cross-functional communication, promoting self-service analytics.

Data analysts and business users can easily discover and access relevant data assets, reducing the time spent on data preparation tasks.

Key use cases for Unity Catalog

Common use cases for Unity Catalog include:

1. Data access control and security

Databricks Unity Catalog enables organizations to implement fine-grained access control for data assets, ensuring data is only accessible to authorized users. It also allows for secure sharing of sensitive data between teams.
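As an illustration of fine-grained control below the table level, the sketch below attaches a column mask to the price column of the sales_data table from the tutorial later in this guide. The function name mask_price and the group finance_group are hypothetical; is_account_group_member is a built-in Databricks SQL function.

```sql
-- Hypothetical masking function: members of finance_group see the real
-- price; everyone else sees NULL.
CREATE OR REPLACE FUNCTION demo_catalog.analytics.mask_price(price DECIMAL(10,2))
RETURNS DECIMAL(10,2)
RETURN CASE
  WHEN is_account_group_member('finance_group') THEN price
  ELSE NULL
END;

-- Attach the mask to the price column so it applies to every query on the table
ALTER TABLE demo_catalog.analytics.sales_data
  ALTER COLUMN price SET MASK demo_catalog.analytics.mask_price;
```

Because the mask lives in the catalog as a function, the same rule applies wherever the table is queried instead of being reimplemented in each notebook or dashboard.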

2. Data discovery and lineage

Unity Catalog's metadata management capabilities allow for efficient data discovery and provide a visual representation of the flow of data from its source to its destination. This helps track changes in datasets, enabling auditing and troubleshooting.
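Besides the visual graph in Catalog Explorer, lineage is also exposed through system tables. A sketch, assuming system tables are enabled in your account and using the tutorial's sales_data table as a hypothetical target:

```sql
-- Most recent upstream sources recorded for one target table
SELECT
  source_table_full_name,
  target_table_full_name,
  entity_type,  -- kind of object that ran the query, such as a notebook or job
  event_time
FROM system.access.table_lineage
WHERE target_table_full_name = 'demo_catalog.analytics.sales_data'
ORDER BY event_time DESC
LIMIT 20;
```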

3. Streamlining compliance and audits

With a centralized repository for all data assets and their lineage, Unity Catalog simplifies compliance reporting and auditing processes. This reduces the time and effort required to ensure regulatory compliance.
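For audits, Unity Catalog actions are recorded in the audit log, which can be queried through the system.access.audit system table. The query below is a sketch that assumes system tables are enabled and that you can read them; it lists the last seven days of Unity Catalog events.

```sql
-- Unity Catalog events from the last seven days, newest first
SELECT
  event_time,
  user_identity.email AS user_email,
  action_name,
  request_params
FROM system.access.audit
WHERE service_name = 'unityCatalog'
  AND event_date >= date_sub(current_date(), 7)
ORDER BY event_time DESC
LIMIT 50;
```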

How to Enable Unity Catalog in Databricks

Now, let's have a go at Unity Catalog using this simple setup tutorial:

Prerequisites

Before you can enable and use Unity Catalog, ensure you meet the following requirements:

Databricks Premium or Enterprise plan

Unity Catalog is only supported on Databricks Premium or Enterprise tiers. Make sure your Databricks workspace is on one of these plans.

Appropriate permissions

- Account admin: You must have the Account Admin role in Databricks to set up and manage Unity Catalog at the account level.

- Workspace admin: You should be a Workspace Admin in the workspace where you want to enable Unity Catalog.

Metastore availability

Unity Catalog requires an account-level metastore. If your account does not have one yet, you will need to create it during setup. Trial Databricks accounts usually come with a metastore and catalog already created.
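To check whether your workspace is already attached to a metastore, you can run a quick sketch like the following in a notebook or the SQL Editor on Unity Catalog-enabled compute. If a metastore is attached, current_metastore() returns its identifier and SHOW CATALOGS lists the catalogs you can see.

```sql
-- Returns the ID of the metastore this workspace is attached to
SELECT current_metastore();

-- Lists the catalogs visible to you in that metastore
SHOW CATALOGS;
```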

Supported cloud platforms

Currently, Unity Catalog is supported on:

- AWS

- Azure

- GCP

Ensure you’re using a supported Databricks environment in one of these clouds.

Managed storage or external locations

Decide whether you will use managed tables (stored in Unity Catalog managed storage) or external tables that reference data in an external location (such as S3, ADLS, or GCS). Each approach has different permission requirements.
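The difference shows up in the DDL. In this sketch, the table names are hypothetical, the demo_catalog.analytics schema is the one created in the tutorial below, and the S3 path must fall under an external location on which you hold CREATE EXTERNAL TABLE.

```sql
-- Managed table: Unity Catalog decides where the data files are stored,
-- and dropping the table also removes the data.
CREATE TABLE IF NOT EXISTS demo_catalog.analytics.events_managed (
  event_id STRING,
  event_time TIMESTAMP
);

-- External table: you choose the storage path (hypothetical here), and
-- dropping the table removes only the metadata, not the files.
CREATE TABLE IF NOT EXISTS demo_catalog.analytics.events_external (
  event_id STRING,
  event_time TIMESTAMP
)
LOCATION 's3://my-demo-bucket/my-path/events/';
```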

Enabling Unity Catalog: Step-by-Step Guide

Navigating Databricks settings

- Log in to the Databricks account console

- Go to the Databricks Account Console (URL may vary slightly for AWS, Azure, or GCP). Use an account admin user to sign in.

- Access the Catalog page

- In the left-hand navigation pane of the account console, look for Catalog (the exact label may vary depending on your Databricks release).

- In a Databricks trial, a metastore and catalog should already have been created, along with a SQL warehouse named Serverless Starter Warehouse.

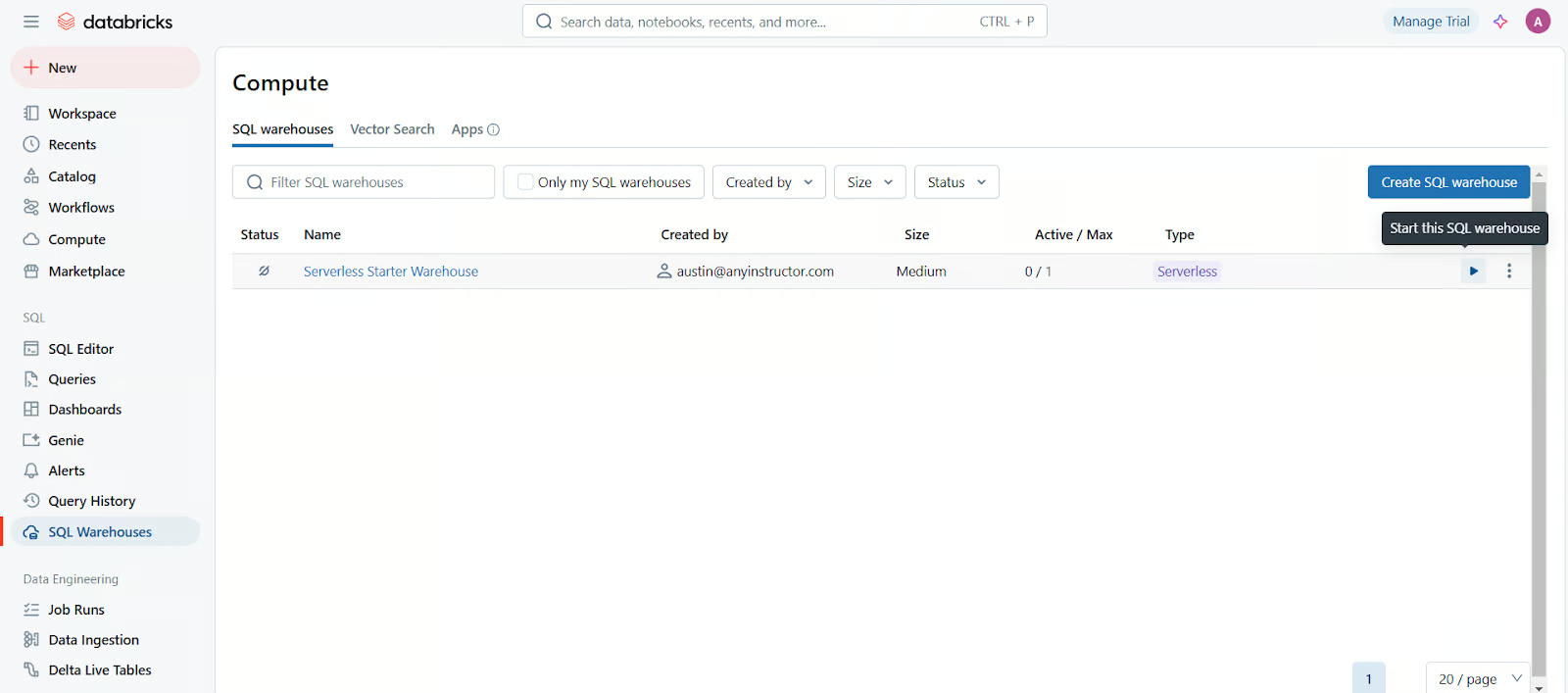

- Start SQL warehouse compute

- In the left-hand navigation pane, select SQL Warehouses, and then start the Serverless Starter Warehouse by clicking on the Play icon on the right.

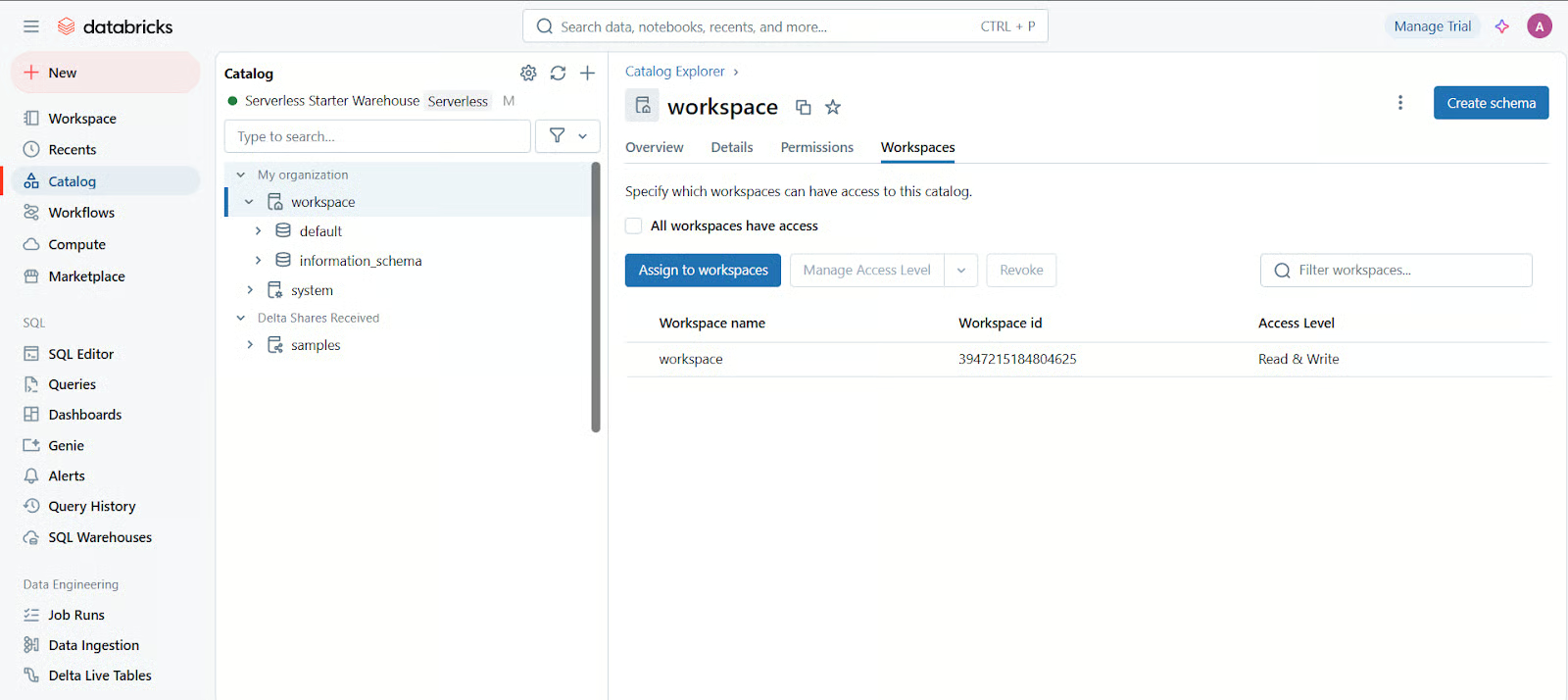

- Assign to workspaces

- In the account console, open the metastore's Workspaces tab and assign the workspace(s) that should use this metastore.

Configuring Unity Catalog

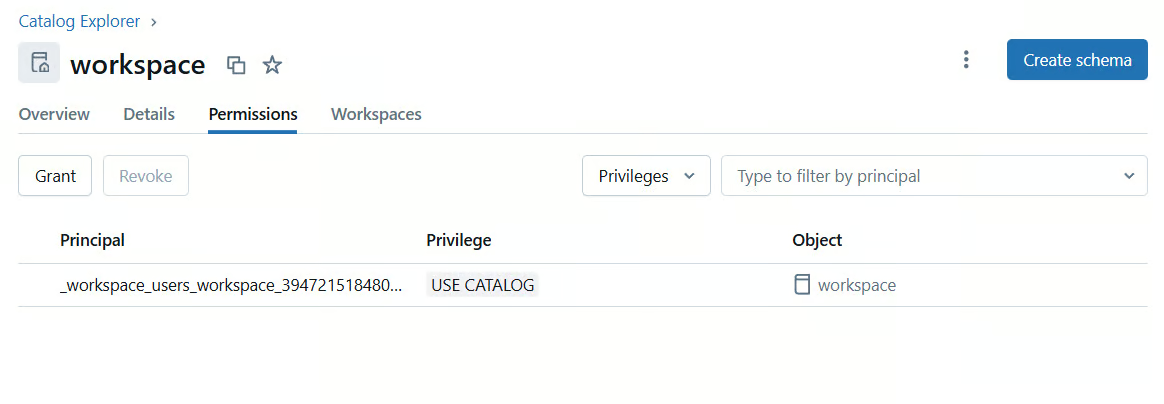

- Assign permissions

- As an admin, navigate to Catalog in the left sidebar of your Databricks workspace.

- Select the catalog you want to manage.

- Open the Permissions tab to set up permissions (for example, who can use the catalog or create schemas and tables in it). By default, the account admin or workspace admin may have full privileges, but you can delegate privileges to other users or groups.

- Create Catalogs and Schemas (if needed)

- You can create additional catalogs and schemas for different teams, projects, or data domains. This is often done via SQL commands (shown below).

```sql
-- Creates a new catalog named "demo_catalog"
CREATE CATALOG IF NOT EXISTS demo_catalog
COMMENT 'This catalog is created for Unity Catalog demo';
```

- Configure external locations (optional)

- If you need external tables stored in external locations:

- Create an external location in Unity Catalog pointing to your cloud storage (S3, ADLS, or GCS).

- Grant users or groups access to read and write to these locations through Unity Catalog.
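The Permissions tab has SQL equivalents. As a sketch, assuming a hypothetical group named platform_admins_group, the statements below let that group create catalogs (a privilege granted on the metastore itself) and then list the current grants.

```sql
-- Allow a (hypothetical) admin group to create new catalogs in the metastore
GRANT CREATE CATALOG ON METASTORE TO platform_admins_group;

-- Review who holds which privileges at the metastore and catalog level
SHOW GRANTS ON METASTORE;
SHOW GRANTS ON CATALOG demo_catalog;
```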

Sample Code and Usage

Below are some sample Databricks notebooks/SQL commands that you can use once Unity Catalog is configured in your workspace.

You can run them in a Databricks notebook or the SQL Editor within your workspace.

Granting privileges on a catalog

Granting privileges on a catalog lets specific user groups access and interact with the objects stored within it. However, USE CATALOG alone does not allow users to query data: it is only a prerequisite for reaching anything inside the catalog. To read a table, users also need USE SCHEMA on its schema and SELECT on the table itself.

For example, to let data engineers access a catalog:

```sql
-- Grant USE CATALOG to a specific group on the newly created catalog
GRANT USE CATALOG ON CATALOG demo_catalog TO data_engineers_group;
```

If they also need to create schemas within this catalog:

```sql
GRANT CREATE SCHEMA ON CATALOG demo_catalog TO data_engineers_group;
```

Creating a schema (database) within the catalog

A schema in Unity Catalog organizes tables, views, and other objects within a catalog, similar to how a database holds tables in a traditional RDBMS. Using IF NOT EXISTS prevents errors if the schema already exists.

To create a schema for analytics data inside demo_catalog:

```sql
CREATE SCHEMA IF NOT EXISTS demo_catalog.analytics
COMMENT 'Schema for analytics data';
```

After creation, you may need to grant privileges to specific users:

```sql
GRANT USE SCHEMA ON SCHEMA demo_catalog.analytics TO data_analysts_group;
```

This lets data_analysts_group access the schema, provided they also have USE CATALOG on demo_catalog, but they cannot query its tables until they are granted SELECT on them.

Creating a managed table

Managed tables are fully controlled by Unity Catalog, meaning Databricks handles storage and metadata tracking. This simplifies management but means data is deleted if the table is dropped.

To create a managed table for sales data:

```sql
USE CATALOG demo_catalog;
USE SCHEMA analytics;

CREATE TABLE IF NOT EXISTS sales_data (
  transaction_id STRING,
  product_id STRING,
  quantity INT,
  price DECIMAL(10,2),
  transaction_date DATE
)
COMMENT 'Managed table for sales data';
```
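To confirm the table works and that Unity Catalog is managing its storage, you can load a couple of rows and inspect the table's details. The values below are made-up sample data for illustration only.

```sql
-- Load two made-up rows into the managed table
INSERT INTO demo_catalog.analytics.sales_data VALUES
  ('t-0001', 'p-100', 2, 19.99, DATE'2024-01-15'),
  ('t-0002', 'p-200', 1, 5.50, DATE'2024-01-16');

-- Aggregate revenue per product
SELECT product_id, SUM(quantity * price) AS revenue
FROM demo_catalog.analytics.sales_data
GROUP BY product_id;

-- The detailed output includes the table type and its storage location
DESCRIBE TABLE EXTENDED demo_catalog.analytics.sales_data;
```

For a table created this way, the Type row in the DESCRIBE output should read MANAGED.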

Granting privileges on a table

Granting table privileges ensures users can query or modify data. The SELECT privilege allows users to read data but not modify it.

To allow data scientists to read from the sales table:

```sql
GRANT SELECT ON TABLE demo_catalog.analytics.sales_data TO data_scientists_group;
```

If they need to insert, update, or delete records, grant MODIFY:

```sql
GRANT MODIFY ON TABLE demo_catalog.analytics.sales_data TO data_scientists_group;
```

For full control (all privileges that apply to the table), use:

```sql
GRANT ALL PRIVILEGES ON TABLE demo_catalog.analytics.sales_data TO data_scientists_group;
```
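Following the principle of least privilege, a read-only consumer needs exactly three grants: USE CATALOG, USE SCHEMA, and SELECT. A sketch of that chain, plus how to inspect and scale back grants on the table:

```sql
-- Minimum chain for read-only access to one table
GRANT USE CATALOG ON CATALOG demo_catalog TO data_scientists_group;
GRANT USE SCHEMA ON SCHEMA demo_catalog.analytics TO data_scientists_group;
GRANT SELECT ON TABLE demo_catalog.analytics.sales_data TO data_scientists_group;

-- Inspect current grants on the table
SHOW GRANTS ON TABLE demo_catalog.analytics.sales_data;

-- Scale the group back to read-only by revoking write access
REVOKE MODIFY ON TABLE demo_catalog.analytics.sales_data FROM data_scientists_group;
```

If a group holds ALL PRIVILEGES, revoking MODIFY alone may not remove its write access, because ALL PRIVILEGES is recorded as a single grant; revoking ALL PRIVILEGES and re-granting SELECT is the safer route.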

External location setup

If you have an external data location (e.g., S3 on AWS), you can create an external location object in Unity Catalog:

```sql
-- Create an external location named "demo_external_location".
-- my_storage_credential must be an existing storage credential with access to the bucket.
CREATE EXTERNAL LOCATION IF NOT EXISTS demo_external_location
URL 's3://my-demo-bucket/my-path/'
WITH (STORAGE CREDENTIAL my_storage_credential)
COMMENT 'External location for demo data';

-- Grant read and write access to files in this location to a group
GRANT READ FILES, WRITE FILES ON EXTERNAL LOCATION demo_external_location
TO data_engineers_group;
```

Databricks Unity Catalog Architecture

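Databricks Unity Catalog is a governance service that runs in the Databricks control plane rather than inside any single workspace. It replaces the per-workspace Hive metastore used by older Databricks deployments: metadata lives in an account-level metastore, and the Apache Spark-based compute (clusters and SQL warehouses) consults Unity Catalog to resolve object names and enforce permissions. Legacy Hive metastore tables remain accessible through a catalog named hive_metastore.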

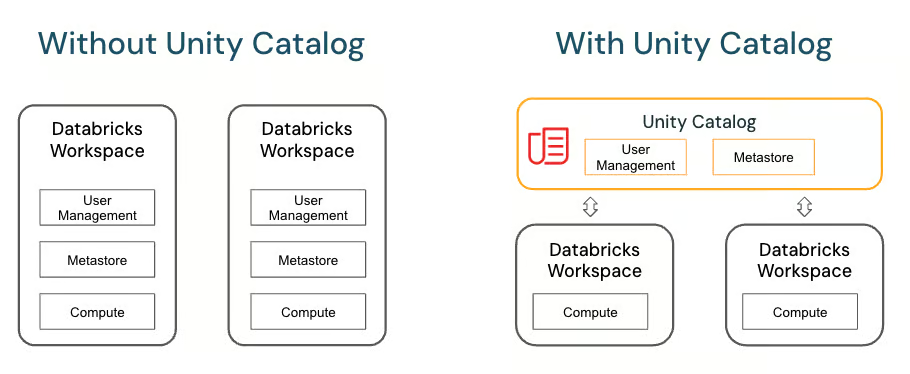

The architecture of Unity Catalog includes three main components:

- Metastore: This component stores all the metadata related to catalogs, schemas, tables, external locations, and other objects, along with the permissions on them.

- Catalog: This component organizes that metadata into the first level of the namespace. Together with schemas, catalogs give the compute layer a unified view of all registered data, making it easy for users to find and query it.

- Workspaces: These are the Databricks workspaces attached to the metastore. Every attached workspace sees the same catalogs and permissions, which lets teams working in different workspaces collaborate on the same governed data.

Source: Databricks
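Because the metastore's contents are queryable, you can also explore them with SQL. Each Unity Catalog catalog includes an information_schema schema; a sketch, assuming the demo_catalog.analytics schema from the tutorial above:

```sql
-- List the objects in the analytics schema with their type, owner, and description
SELECT table_name, table_type, table_owner, comment
FROM demo_catalog.information_schema.tables
WHERE table_schema = 'analytics'
ORDER BY table_name;
```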

With Databricks Unity Catalog, users can easily manage and query their data without having to worry about the underlying infrastructure. They can also collaborate with other team members by granting them specific permissions, such as read and write privileges, for different datasets or tables.

To grant these permissions, users can run SQL commands such as GRANT to specify which users or groups can access certain data. This ensures that only authorized individuals have access to sensitive information.

How Unity Catalog integrates with existing data systems

Unity Catalog works with data in cloud object storage such as Amazon S3, Azure Data Lake Storage (ADLS), and Google Cloud Storage, making it easy to access and manage data from different sources. Through external tables and volumes, users can analyze large datasets in place without manually moving or copying the data into Databricks-managed storage.

Unity Catalog also supports various file formats like CSV, JSON, Parquet, and Avro, allowing for flexibility in working with different types of data.
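For files rather than tables, Unity Catalog provides volumes. The sketch below creates a managed volume in the tutorial's schema and reads a CSV file from it with read_files; the volume name raw_files and the file sales.csv are hypothetical, and the file must be uploaded to the volume first.

```sql
-- Managed volume for raw files, governed like any other Unity Catalog object
CREATE VOLUME IF NOT EXISTS demo_catalog.analytics.raw_files
COMMENT 'Raw files for the sales demo';

-- Read a CSV file stored in the volume
SELECT *
FROM read_files(
  '/Volumes/demo_catalog/analytics/raw_files/sales.csv',
  format => 'csv',
  header => true
);
```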

Databricks Unity Catalog Best Practices

When working with Unity Catalog, there are some best practices that can help ensure efficient and effective data management.

These include:

- Use descriptive naming conventions for tables and columns to make it easier to understand the data.

- Keep table and column descriptions in Unity Catalog up to date as the underlying data changes.

- Utilize access controls to restrict access to sensitive data, following the principle of "least privilege". This means granting only the necessary permissions to users or groups based on their roles and responsibilities.

- Use tags and labels to classify and organize datasets within Unity Catalog, making it easier to search for specific data.

These measures help keep data governance effective as the number of users and datasets grows.
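Descriptions and tags can both be set in SQL. A sketch against the tutorial's sales_data table; the comment text and the tag keys and values (domain, role) are hypothetical examples, not a required convention.

```sql
-- Describe the table and one of its columns
COMMENT ON TABLE demo_catalog.analytics.sales_data IS 'One row per sales transaction';
ALTER TABLE demo_catalog.analytics.sales_data
  ALTER COLUMN price COMMENT 'Unit price of the product';

-- Classify the table and a column with key-value tags
ALTER TABLE demo_catalog.analytics.sales_data
  SET TAGS ('domain' = 'sales');
ALTER TABLE demo_catalog.analytics.sales_data
  ALTER COLUMN transaction_id SET TAGS ('role' = 'identifier');
```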

Common Challenges and How to Address Them

Unity Catalog is not a foolproof solution and can face some challenges in effectively managing data.

Some common issues include:

- Data duplication: This occurs when the same data is stored multiple times, leading to inconsistencies and errors.

- Data quality: Poorly maintained or inaccurate data can impact business decisions and processes.

- Complex data hierarchies: Large datasets with complex relationships can be difficult to organize and manage within Unity Catalog.

To address these challenges, here are some tips:

- Regularly review and clean up data to avoid duplication. This includes removing unnecessary or outdated data.

- Implement data quality checks and validations to ensure the accuracy of data within Unity Catalog.
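Both tips can start with plain SQL on a Delta table. A sketch against the tutorial's sales_data table: the first query surfaces duplicate transaction IDs, and the constraints (with a hypothetical name, positive_quantity) reject future bad writes.

```sql
-- Find transaction IDs that appear more than once
SELECT transaction_id, COUNT(*) AS copies
FROM demo_catalog.analytics.sales_data
GROUP BY transaction_id
HAVING COUNT(*) > 1;

-- Reject future writes with missing IDs or non-positive quantities.
-- Existing rows must already satisfy these rules, or the statements fail.
ALTER TABLE demo_catalog.analytics.sales_data
  ALTER COLUMN transaction_id SET NOT NULL;
ALTER TABLE demo_catalog.analytics.sales_data
  ADD CONSTRAINT positive_quantity CHECK (quantity > 0);
```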

Final Thoughts

Unity Catalog is a powerful tool for governing data through a single view built on metastores, catalogs, and workspaces. It provides a centralized place to organize, secure, and audit data, making it easier to manage and use within your business processes.

Want to learn more about Databricks? You should check out our Introduction to Databricks course, Databricks Tutorial guide, and Databricks webinar.

Databricks Unity Catalog FAQs

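What is the difference between Unity Catalog and the Hive metastore in Databricks?

The Hive metastore on Databricks is scoped to a single workspace, so its tables and access controls are managed separately in each workspace. Unity Catalog is an account-level metastore shared by multiple workspaces, with a three-level namespace (catalog.schema.object), centralized access control, auditing, and lineage. It provides a single source of truth for your metadata, and existing Hive metastore tables remain accessible through the hive_metastore catalog.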
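Because Hive metastore tables stay reachable through the hive_metastore catalog, one simple way to bring a table under Unity Catalog governance is to copy it into a Unity Catalog managed table. This is a sketch with a hypothetical table name; Databricks also offers dedicated upgrade tooling that may suit large migrations better.

```sql
-- Copy a (hypothetical) legacy Hive metastore table into a Unity Catalog managed table
CREATE TABLE IF NOT EXISTS demo_catalog.analytics.legacy_sales
AS SELECT * FROM hive_metastore.default.legacy_sales;
```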

Can I use Unity Catalog with non-Databricks data sources?

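Yes. Unity Catalog governs both Databricks-managed tables and external tables whose data lives in your own cloud storage, bringing their metadata into one place. However, some features are limited to managed tables. For example, predictive optimization applies only to Unity Catalog managed tables.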

How does Unity Catalog improve performance?

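Unity Catalog is primarily a governance layer rather than a query engine, so its direct effect on query speed is limited. The main performance benefits come from features tied to Unity Catalog managed tables, such as predictive optimization, which automatically runs maintenance operations like OPTIMIZE and VACUUM.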
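As a sketch, assuming predictive optimization is available in your account, you can enable it for the managed tables in a catalog with one statement, or compact a single table manually; demo_catalog and sales_data are the tutorial's example names.

```sql
-- Let Databricks schedule maintenance for managed tables in the catalog
ALTER CATALOG demo_catalog ENABLE PREDICTIVE OPTIMIZATION;

-- Or compact the files of a single table manually
OPTIMIZE demo_catalog.analytics.sales_data;
```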

I'm Austin, a blogger and tech writer with years of experience both as a data scientist and a data analyst in healthcare. Starting my tech journey with a background in biology, I now help others make the same transition through my tech blog. My passion for technology has led me to contribute writing to dozens of SaaS companies, inspiring others and sharing my experiences.