OpenAI’s first Developer Advocate. Building the developer relations function from the ground up. Supporting developers building with ChatGPT, DALL-E, GPT-3, the OpenAI API, and more!

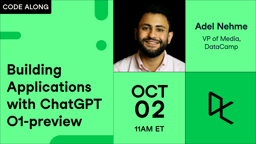

Adel is a Data Science educator, speaker, and VP of Media at DataCamp. Adel has released various courses and live training on data analysis, machine learning, and data engineering. He is passionate about spreading data skills and data literacy throughout organizations, and about the intersection of technology and society. He has an MSc in Data Science and Business Analytics. In his free time, you can find him hanging out with his cat Louis.

Key Quotes

Step one of beginning to integrate AI into your company's product is taking a step back and actually understanding what problem you're trying to solve. Are large language models (something like ChatGPT, or embeddings, image capabilities, or Whisper for audio) actually the solution to the problem?

And I think this question is more important now because of how much excitement there is around this technology. There are a lot of people who are perhaps integrating this technology for the sake of integrating it, without taking a step back and asking, ‘Does it actually make sense for us to just plop this in as a feature in our product?’

OpenAI is seeing a lot of companies that are actually rewriting their whole roadmap and saying, ‘Instead of adding this language model-based feature into our product, let's rethink our product entirely, with the understanding that language models will be important in the future. Part of that is reshaping our platform to use that technology from the ground up.’ So that's the conversation I would push folks towards initially when considering using AI in their product.

One of the biggest use cases with fine-tuning is, ‘I have some corpus of data for my company or my project, and I want this language model to be an expert at this information and really have ready access to it.’ The problem is that most people, at least the people we work with, are not using fine-tuning in the correct case. At least in its current form, it's only really useful for classification tasks. Making the model specifically follow certain formats doesn't work well with our current fine-tuning infrastructure, and it can't retrieve information really well. Most of the time, what people are actually looking for when they're fine-tuning our models, at least, is embeddings. They're looking for direct retrieval of some source of truth. Our flavor of fine-tuning makes this a little bit different than if you were just fine-tuning a model in PyTorch, for example.

Key Takeaways

Providing context around the problem you’re trying to solve and the type of answer you’d like is a great way to get a more accurate response to the prompt you give ChatGPT. Give clear instructions, just as you would if you were talking to a fellow human.

When thinking about implementing AI or OpenAI products in your company’s product, take a step back and gauge whether AI is the best solution for your use case. Don’t implement AI for implementation's sake. The execution is easy; the real difficulty lies in making sure the AI is effective for your use case.

When approaching GPT model fine-tuning, think about the task that you’re expecting the model to complete. If it is a retrieval task, fine-tuning a GPT model might not be the best course of action. Instead, consider embeddings.

Transcript

Richie Cotton: Welcome to the DataFramed AI series. This is Richie. I'm especially excited today, since this is the first of a four-part series on generative AI. Generative AI has, as you may have noticed, turned the world upside down in the last few months. Naturally, we're kicking off the series by going right to the heart of the hurricane with a guest from OpenAI.

Logan Kilpatrick leads developer relations at OpenAI, supporting developers building with DALL-E, the OpenAI API, and ChatGPT. Outside of OpenAI, Logan is the lead developer community advocate for the Julia programming language and is also on the board of directors at NumFOCUS. Today we're going to talk about GPT-4, plugins, and prompt engineering.

I'm looking forward to finding out what OpenAI is building and how to use it. Hi there, Logan. Thank you for joining us on the show today.

Logan Kilpatrick: Thanks for having me. I'm super excited about this.

Richie Cotton: We're going to dive right in. I think ChatGPT is maybe the most famous AI product that you have at OpenAI, but I'd like to get an overview of what other AI products are available.

Logan Kilpatrick: Yeah, that's a great question. So, I think two and a half years ago, OpenAI released the API that we still have available today, which is essentially us giving people access to these models. And for a lot of people, that means access to the model that powers ChatGPT, which is our...

Richie Cotton: And just on that terminology, I know a lot of people get confused. What's the difference between ChatGPT, GPT-3.5, and GPT-4? How do they all relate?

Logan Kilpatrick: This is a great question. I know most people are not as close to this as I am, but it still slightly irks me when I see the wrong term, like when people say "ChatGPT-4" and stuff like that.

GPT-3, GPT-3.5, and GPT-4 are the actual machine learning models that underlie the web interface. That's the biggest distinction: those are models, and ChatGPT is an application on top of them. There are too many GPTs everywhere, and now people are building their own projects and companies, too.

Richie Cotton: Brilliant. I'm definitely making sure all our marketing people listen to this, because I know a lot of people are getting confused. So ChatGPT is the web application, and GPT plus a number is a model. Okay. Beyond the web application, the API is really interesting, particularly for data scientists.

So, when should you use this API rather than using the web interface?

Logan Kilpatrick: Yeah, that's a great question. I think the web interface is great for experimentation, and it's really opened people's eyes to this technology. Prior to that, you basically had to either know how to code to experience this language model technology or go through our platform.openai.com website.

We actually have a bunch of examples there. We have a playground, which was like the beta version of the chat UI experience, but not focused on mass adoption; it was more focused on people who were trying to use this technology through the API and wanted to start playing around. So if you actually want to build something, if there's a product or service that you're trying to integrate large language model or conversational AI technology into, that's when you use the API. If you're just looking to play around, use the UI itself

rather than setting up your API key and getting Python working with all the right versions and all that kind of stuff.

Richie Cotton: I guess if you want to send a few messages, the web interface is pretty easy. But by the time you're sending a million messages, that's probably more effort than you want, so you'd use the API. Right?

Logan Kilpatrick: Yeah, it's also cheaper. And you can actually host your own version of ChatGPT. One of the many challenges of scaling ChatGPT to the level it's at today is that there are a lot of people trying to use it, but you can host your own version of ChatGPT using our API.

It'll be available all the time, as long as the API is available.

Richie Cotton: But it seems like the API is maybe slightly more developer focused. Is it also relevant for data scientists and data analysts as well?

Logan Kilpatrick: Yeah, definitely. Something that's very interesting is that some of our initial use cases were around things like sentiment analysis and clustering, actual typical data science tasks, without having to write code to do them.

Which I think is super interesting. You could just pass in a table of data that you might be looking at as a data scientist and say, figure out these things, or explore these different ideas that I have, and it will look at the data and do that work for you. Obviously, it's not doing that in the statistical sense.

You wouldn't want to rely on it to take in the data and tell you the average of these numbers; it'll probably give you something wrong. But it can do the more understanding-oriented problems, probably better than you would be able to program them and set up the experiment yourself, if that makes sense.

Richie Cotton: Okay, so these are natural language processing type tasks, like text classification and things like that?

Logan Kilpatrick: Yeah. Text classification, or clustering things into whatever categories you're interested in; those types of things are all possible using the models. There's also a lot more that's probably similar to what data scientists would want to do with our embeddings.
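To make prompt-based classification concrete, here is a minimal sketch that only builds request bodies in the Chat Completions shape (`model`, `messages`, `temperature`) and normalizes a reply into a label. The label set, model name, and helper names (`build_classification_request`, `parse_label`) are illustrative assumptions; sending the requests with an API key is deliberately left out.

```python
import json

# Hypothetical label set; pick whatever categories matter for your data.
LABELS = ["positive", "negative", "neutral"]


def build_classification_request(text: str, model: str = "gpt-3.5-turbo") -> dict:
    """Build a Chat Completions request body asking the model to pick one label."""
    instructions = (
        "Classify the sentiment of the user's text. "
        f"Answer with exactly one word from: {', '.join(LABELS)}."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": instructions},
            {"role": "user", "content": text},
        ],
        "temperature": 0,  # favor consistent labels over varied wording
    }


def parse_label(reply: str) -> str:
    """Normalize the model's reply; fall back to 'unknown' if it strays from LABELS."""
    label = reply.strip().lower().rstrip(".")
    return label if label in LABELS else "unknown"


reviews = ["The checkout flow is painless.", "Support never answered my ticket."]
batch = [build_classification_request(r) for r in reviews]
print(json.dumps(batch[0], indent=2))
```

Validating the reply against a fixed label list matters because the model answers in free text and may not follow the one-word instruction every time.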

Embeddings are where you take a bunch of text and turn it into a numerical format, which ends up being a list of 1,536 floating-point numbers. You can take text of arbitrary length, turn it into that numerical format, and then do a bunch of cool data science operations, like computing the cosine similarity between two of those lists of numbers to tell how related two strings of text are, that type of stuff.

All of that is probably more up the alley of data science folks.
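The cosine similarity mentioned above can be sketched in a few lines. The short vectors here are toy stand-ins for real embeddings (which would be far longer); only the arithmetic is the point.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 4-dimensional vectors standing in for real embeddings of three texts.
cat = [0.9, 0.1, 0.0, 0.2]
kitten = [0.85, 0.15, 0.05, 0.25]
invoice = [0.0, 0.2, 0.95, 0.1]

print(round(cosine_similarity(cat, kitten), 3))
print(round(cosine_similarity(cat, invoice), 3))
```

Related texts score close to 1, while unrelated ones score near 0.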

Richie Cotton: Interesting. So, looking at how related two bits of text are, I guess that could be useful for finding duplicate or near-duplicate data, off the top of my head.

Logan Kilpatrick: That's a great one, because it's actually really useful for something as simple as finding duplicates. You changed the period at the end, or all these weird edge cases that are really difficult if you try to handle them programmatically. With embeddings and similarity search, you could actually do a much better job of figuring it out.
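A minimal sketch of near-duplicate detection with embeddings follows. The record IDs, vectors, and the 0.95 threshold are hypothetical; in practice the vectors would come from an embedding model and the threshold would be tuned on your own data.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def find_near_duplicates(embeddings: dict[str, list[float]], threshold: float = 0.95):
    """Return (id_a, id_b, score) for every pair at least `threshold` similar."""
    pairs = []
    for (id_a, vec_a), (id_b, vec_b) in combinations(embeddings.items(), 2):
        score = cosine_similarity(vec_a, vec_b)
        if score >= threshold:
            pairs.append((id_a, id_b, round(score, 3)))
    return pairs


# Toy vectors: imagine rows 1 and 2 differ only by a trailing period in the source text.
records = {
    "row-1": [0.61, 0.30, 0.72],
    "row-2": [0.60, 0.31, 0.73],
    "row-3": [0.10, 0.95, 0.05],
}
print(find_near_duplicates(records))
```

The pairwise loop grows quadratically with the number of rows, so large tables would typically need a nearest-neighbor index instead.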

Richie Cotton: Nice. Yeah, that's really important for data quality. And I guess the other really hot thing at the moment is your plugins. So can you tell me what's going on with those?

Logan Kilpatrick: Yeah, plugins are the ability for people to essentially build apps inside of ChatGPT. Again, in the context of people doing data science, you can imagine an Excel plugin. So now you have all the functionality of ChatGPT, but integrated with Excel.

That type of stuff is possible with this new developer ecosystem that we're building. And it's literally as simple as creating an API, which is essentially some arbitrary internet server: you send a request, and it sends a response back. That's the basic gist, for folks who aren't familiar with APIs.

What ends up happening is that you define this API in a way that the language model, specifically ChatGPT, can understand, and then it can make those API calls itself when it thinks that what you're asking is related to that plugin. So if I install the Excel plugin and then I ask, what's my favorite color?

ChatGPT is smart enough to know that's not related to the plugin. It'll probably say, hey, I'm a language model, I don't know what your favorite color is, or something like that. But then you might ask it, here, I've uploaded this Excel document, give me the average of this column.

You can write your plugin so that it actually sends the Excel spreadsheet to your server, you do whatever the computation is, you return the results, and then they're right there in the interface. This will open up so many cool new opportunities. I think even this Excel example is probably a bad one; it's just scratching the surface of what's actually going to be possible.
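The "API that a plugin calls" described above can be sketched with Python's standard library alone. Everything here is a hypothetical example, including the `/column-mean` path, the JSON schema (`column`, `rows`), and the function names. A real ChatGPT plugin also has to publish a description of its API (an OpenAPI spec plus a manifest) so the model knows when and how to call it, which is not shown.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from statistics import mean


def column_mean(payload: dict) -> dict:
    """Compute the mean of one column from rows sent as JSON (hypothetical schema)."""
    column = payload["column"]
    values = [float(row[column]) for row in payload["rows"]]
    return {"column": column, "mean": mean(values)}


class PluginHandler(BaseHTTPRequestHandler):
    """Answers POST /column-mean; anything else is a 404."""

    def do_POST(self):
        if self.path != "/column-mean":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            body = json.dumps(column_mean(payload)).encode()
        except (KeyError, ValueError, TypeError) as exc:
            # Malformed JSON, a missing column, or non-numeric values.
            self.send_error(400, str(exc))
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Run the API on localhost until interrupted."""
    HTTPServer(("127.0.0.1", port), PluginHandler).serve_forever()
```

Calling `serve()` starts the server. Keeping the computation in a plain function (`column_mean`) makes it easy to test without any network traffic, and the arithmetic is done by code rather than guessed by the model.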

Richie Cotton: It sounds a little bit like search engine plugins, where you've got the standard search behavior, and then you can have plugins that say, okay, if you want to search a particular website for something interesting, you can do that. So, in terms of who's going to make use of a plugin, it sounds like you can have plugins for other software.

So you mentioned the Excel example. Do you envisage things like corporate plugins as well, where you can do something for one particular company?

Logan Kilpatrick: Yeah, and we already have those today. For example, some of our launch plugin partners are folks like Expedia and Airbnb. So you can install the Airbnb plugin and say, I have this dream vacation, and here are all the things that I would want to do.

And you can send that information off, and it'll literally find all the information you need on Airbnb.

Probably the coolest example of one of these corporate plugins that I've seen is people asking ChatGPT to do meal prep for them. You can say, here are my dietary restrictions, here is the number of calories that I want to consume, here's how many meals I'm eating. It'll just use its base understanding of language to create these meals for you.

And then you can install the Instacart plugin and say, now order all of the items I need to make these on Instacart. It'll actually do all of that for you, and there'll be one button you can click on Instacart to actually place the order. That would be a decent amount of work for us as humans: figure out the meals, figure out the recipes, then figure out how many of all these different things I need to buy.

That's probably hours of work you can do using ChatGPT plugins in five minutes or less, which I think is so cool. And again, it reduces the friction for something that, in my opinion, would in general be...

Richie Cotton: It's amazing how much effort simple daily tasks take. So, yeah, that reduction of friction does seem pretty useful. It's interesting that it somehow becomes easier to type sentences describing what you want than to actually figure out the precise details.

Do you see any other areas in day-to-day life where this sort of thing is going to become useful?

Logan Kilpatrick: I think one of the things that's always top of mind for me as I'm thinking about this technology is that, on my computer right now, I have so much context about the work that I do and the things I'm involved with, and it is

almost a hundred percent inaccessible to me as usable data. I have all this data on my computer, in my email, in all these different sources, and it's very difficult to actually take action on that data in any way. I think ChatGPT adds this layer where you can take your own data and self-host it with something like our retrieval plugin,

where you can run a server locally on your computer, drag and drop a bunch of files into a web UI that's running locally, and then access that data when you want to. You can say, hey, I know Richie sent me an email about this podcast that's coming up.

Find me the email that has the Google Doc for this podcast recording that we're doing. And it has that context because it's connected to your data, so it does all of that without me having to find it myself. Maybe this is a simple example, because we have search in email and stuff like that, but you could imagine a much more complicated version of this,

where I have some files stored away and maybe I want to know something more abstract: I don't know who I emailed about this topic, but I was talking to somebody about waterfalls a couple of months ago, and I don't remember at all who it was. Having that context, using embeddings over your own locally hosted data through a language interface, is so powerful for letting people actually use the data that they own.

That's productive: hopefully it makes all that information accessible. So I'm super excited.
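The "find the note about waterfalls" idea boils down to ranking your own documents by similarity to a question. The sketch below is not OpenAI's retrieval plugin. It swaps in a simple bag-of-words vector (`toy_embed`, a hypothetical stand-in) for a real embedding model so that it runs offline, and the file names and contents are made up.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Rank local documents by similarity to the query and return the best names."""
    q = toy_embed(query)
    ranked = sorted(documents, key=lambda name: cosine(q, toy_embed(documents[name])), reverse=True)
    return ranked[:top_k]


notes = {
    "email_richie.txt": "Podcast recording link: the Google Doc for our DataFramed episode",
    "trip_notes.txt": "Chatted with a friend about waterfalls and hiking",
    "budget.txt": "Quarterly budget numbers for the team offsite",
}
print(search("who did I talk to about waterfalls", notes))
```

Word overlap only finds exact terms. A real embedding model would also match related wording, which is what makes the more abstract queries Logan describes feasible.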

Richie Cotton: It's exciting stuff. And from what you're describing, it sounds like ChatGPT, or GPT, becomes more powerful when you integrate it with other pieces of software. Can you talk about how you see that ecosystem forming and coming together? How does everything end up linking up?

Logan Kilpatrick: Yeah, so I think the idea is that plugins will do this for us. As at the start of any ecosystem, there are a lot of open questions. A lot of the question in my mind is around what ends up being part of the core ChatGPT product itself versus what ends up being a great plugin. You see a similar tension with Apple's App Store, for example: what should Apple build and give to all iPhone users, versus what do they want to rely on the ecosystem to build and then make available via the App Store?

So there are a lot of those questions for us. But I do think that plugins will be that interface, or the first version of this interface, where we see language models actually connecting to the world. I really do genuinely think that it makes the technology so... I almost can't imagine...

...context, and just taking the specific actions that are super important to...

Richie Cotton: I really love that App Store analogy. Maybe we'll see an OpenAI app store at some point, but I don't want to push you on that; we'll see what happens. I think another thing that would be cool to talk about is the image input feature of GPT-4. That was maybe one of the more exciting announcements about GPT-4.

Can you tell us like how you expect people to use this feature?

Logan Kilpatrick: This is another thing where I don't think people have actually seen a great example of how powerful this technology is going to be in everyday life. The basic idea is that GPT-4 is multimodal. But today, if you were to use GPT-4, whether in ChatGPT or the API, it doesn't accept image inputs.

It's in a very limited beta right now, or alpha, or whatever the correct terminology is, with one partner, who provides a service for low- and no-vision people. So if you're blind or partially blind, you essentially have an iOS app, and you can take pictures of things around you in real time.

In their existing service, there's actually a human volunteer at the other end who tells you what is happening in a particular image. Now, with GPT-4 and this multimodal capability, we're able to just take a picture in real time and have the model describe it.

This ubiquitous access to the technology is going to be so groundbreaking and so freeing for them, to be able to do all the things that those of us with perfect vision take for granted in everyday life. I'm super excited about that particular use case. I also think that there are just so many more

incredibly cool things that are going to be possible by integrating the image functionality with text. Like the GPT-4 demo that Greg Brockman did, with that amazing UI:

"Hey, build me this website and make it look visually like this example that I've drawn out." I think that is going to unlock so much creativity for people. Even for myself, I have a really hard time doing front-end visual interface design; there's something about it that just doesn't click for me.

But I can concisely draw something out by hand. And if I'm able to do that, or even take a picture of another website and say, hey, build me something similar to this, I think that's going to be so transformative in opening up access to this technology. It's going to be awesome.

Richie Cotton: That's a pretty cool example. First of all, the accessibility stuff: I think it's often very easy to forget that not everyone has a full set of working senses, and people have different needs. Being able to help those people through whatever medium is most comfortable for them is going to be really powerful for accessibility.

The other idea, about designing websites, is also interesting. I'm wondering, are there any data science-specific use cases? I'm thinking there's a lot of data visualization going on. How might GPT be useful there?

Logan Kilpatrick: Yeah, this is a great example. One, perhaps not that useful, example, and there are a bunch of iterations of this in a very similar context, is that you could take a picture of some graph and say, write me the code to actually reproduce this.

It takes whatever way the graph is moving, whatever kind of graph it is, and then produces the Python code that will generate a graph that looks exactly like that. How useful that is for people doing data science is a little bit questionable to me.

I'm not sure what the actual use case for that is, but it's cool to see. Another version of this is that you could take a picture of a bunch of columns or rows in an Excel spreadsheet and say, write me the Python code to do X, Y, and Z. I think that's maybe where it will be more useful. Or just take a simple screenshot of a couple of rows, put it in ChatGPT, and say, find me the mean, or whatever regression model...
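Following Logan's earlier caution that the model may get an average wrong if asked directly, the more reliable request is for code that computes the result. Here is a sketch of that kind of code: the mean plus an ordinary least-squares line. The rows are hypothetical values standing in for a spreadsheet screenshot.

```python
from statistics import mean

# Hypothetical rows transcribed from a spreadsheet screenshot: (ad_spend, sales).
rows = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]

xs = [x for x, _ in rows]
ys = [y for _, y in rows]


def least_squares_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


slope, intercept = least_squares_line(xs, ys)
print(f"mean sales = {mean(ys):.2f}, slope = {slope:.2f}, intercept = {intercept:.2f}")
```

Because the computation is deterministic, you can check it yourself instead of trusting a generated number.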

Richie Cotton: Actually, that first case is something I have stumbled over, where someone has shown me a paper journal from 1970 or something and asked, can you just reproduce this plot here? And you're like, okay, how do I do that? Having that done automatically would be really fantastic, although that's a niche academic use.

The idea of being able to show a plot and then get a text explanation of it does seem really useful. Is that something that's possible?

Logan Kilpatrick: Yeah, most definitely. I think all of those types of explanations will be super powerful and actually very feasible to make happen. You could pretty much show it anything and ask what is happening. In some of the examples with multimodal GPT-4, it actually understands cause-and-effect relationships.

There's a picture of something being held by a bunch of balloons, and you can ask GPT-4 what happens if I cut the connection between the object and the balloons, and it understands that the balloons will fly away. I think once we start to live with this technology, it'll be much clearer how valuable and beneficial it is to have that level of understanding available to you at all times. Even for me right now, I haven't had time to think through how it'll be useful in my life, but I'll be interested to see. We'll have to do this again in a year and a half and see how much has changed.

Richie Cotton: Absolutely. Actually, I have a really stupid question about this, but it's been bugging me. There's a running joke about all these generative AI image tools, like DALL-E and Stable Diffusion: if you try to get them to draw hands, they can't get the number of fingers right.

I'm wondering, does it work in reverse? Can GPT-4 understand hands properly when you give them as an input?

Logan Kilpatrick: Yeah, that's a good question. I think it'll get better at this. I need to read a research paper to better understand the actual reason why the model has a hard time with something that's easy for humans. My guess is that this multimodal understanding will help solve at least part of the problem.

Richie Cotton: So the idea is that having text and images together somehow improves the model's performance. Is that correct?

Logan Kilpatrick: Yeah. I think it's the understanding of the visual piece. Right now you can generate an image and do it pretty well, but it still has these problems with hands, for example, and faces are another thing. I think the other piece of it, and I guess this is actually the real challenge, is that DALL-E does a really good job of, for example, generating abstract art.

That's because there are no constraints on it. In some image of the future of buildings and stuff like that, there don't need to be doors; it's essentially completely made up, totally abstract. The problem is when you take something where we, as humans, expect there to be one right answer.

What do hands look like? They have a set number of fingers. We have a preconceived notion of what that should look like; it's something we're very familiar with, and the model has a hard time with it. It's one of these weird nuances of language models and image models: something that's really simple for humans to do, like even just reversing a string.

If you take a string and ask GPT-3 or GPT-4 to reverse it, it actually won't do a good job. You might sit there and question why, because it's a very deterministic, easy thing to do. There's no uncertainty in this; there is one correct answer, written forwards and backwards. The model just has a hard time parsing things in that way because of technical limitations.

It's designed to work at the token level, and when you reverse a string, the indexing changes, so it has to do all this crazy mapping behind the scenes to try to make that work.
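A small sketch of why reversal is awkward for a token-level model. The subword split below is hypothetical (real tokenizers split text differently), but the mismatch it shows is general: reversing the sequence of tokens does not reverse the characters, so each token would also have to be flipped internally.

```python
# Hypothetical subword split of "strawberry milkshake".
tokens = ["straw", "berry", " milk", "shake"]
text = "".join(tokens)

# Deterministic character-level reversal: trivial for ordinary code.
char_reversed = text[::-1]

# Reversing the order of tokens keeps each token's characters intact.
token_reversed = "".join(reversed(tokens))

# Getting the right answer from tokens also requires reversing inside every token.
mapped = "".join(tok[::-1] for tok in reversed(tokens))

print(char_reversed)   # ekahsklim yrrebwarts
print(token_reversed)  # shake milkberrystraw
print(mapped == char_reversed)  # True
```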

Richie Cotton: That's a really interesting explanation, and it reminds me a bit of trying to do calculations using GPT. It's got a lot better over the last few months, but it's not a perfect calculator.

Logan Kilpatrick: Yeah, it doesn't actually do calculations. It's informed guessing; that's the calculation capability built into it.

It guesses, and the guess is right in some cases.

Richie Cotton: All right, I'd like to talk a bit about fine-tuning models. Actually, do you want to explain to everyone what fine-tuning a model is and why you might want to do it?

Logan Kilpatrick: Yeah. So today we have a bunch of models that are available to be fine-tuned: the original GPT-3 models, through our API. The basic intuition is that you take a model that has a bunch of weights and has already been trained on a huge amount of data. What you end up doing is making small customizations to the final output layers of the model in order to influence it to better understand whatever data is relevant for you.

I think one of the biggest use cases that people try with fine-tuning is, hey, I have some corpus of data for my company or my project, whatever it is, and I want this language model to be an expert at this information and really have ready access to it.

The problem ends up being that most people, at least the people we work with who are trying to fine-tune things, are not using fine-tuning in the correct case. At least in its current form, it's only really useful for classification tasks. Giving it a bunch of examples of the different classes you're interested in and having the model learn them is actually quite successful.
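For the classification case, training data is just labeled examples. This is a sketch of writing them as JSONL in the prompt/completion layout used by the legacy GPT-3 fine-tuning endpoint. The `\n\n###\n\n` separator and the leading space on each completion follow conventions that guide recommended; treat them, the example tickets, and the label names as assumptions to check against current documentation.

```python
import json

# Hypothetical labeled examples for a support-ticket classifier.
examples = [
    ("My card was charged twice this month.", "billing"),
    ("The app crashes when I open settings.", "bug"),
    ("Can you add a dark mode?", "feature_request"),
]

SEPARATOR = "\n\n###\n\n"  # marks where each prompt ends (assumed convention)


def to_jsonl(pairs) -> str:
    """One JSON object per line, in the legacy prompt/completion layout."""
    lines = []
    for text, label in pairs:
        # The leading space on the completion keeps the label a separate word after the prompt.
        lines.append(json.dumps({"prompt": text + SEPARATOR, "completion": " " + label}))
    return "\n".join(lines) + "\n"


print(to_jsonl(examples), end="")
```

Each class should be represented by many more examples than shown here for the model to learn it well.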

But making the model very specifically follow certain formats doesn't work well with our current fine-tuning infrastructure, and it can't retrieve information really well. Most of the time, what people are actually looking for when they're fine-tuning our models, at least, is embeddings. They're looking for direct retrieval of some source of truth.

Say they're a customer support company, for example, or they're trying to use this model for customer support, and they want it to be able to reference different solution guides for question X. Embeddings are a much better solution to that problem than a fine-tuned model. In general, outside of the context of OpenAI, fine-tuning is a viable solution for some of...
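To show how retrieval gets information to the model without changing its weights, here is a sketch that takes solution guides already scored by an embedding similarity search (assumed to have been run beforehand) and places the best ones into the prompt. The function name, guide texts, scores, and system wording are all hypothetical.

```python
def build_support_messages(question: str, scored_guides: list[tuple[str, float]], top_k: int = 2) -> list[dict]:
    """Put the highest-scoring solution guides into the system message as reference material."""
    best = sorted(scored_guides, key=lambda pair: pair[1], reverse=True)[:top_k]
    context = "\n\n".join(f"[Guide {i + 1}] {text}" for i, (text, _) in enumerate(best))
    system = (
        "You are a support assistant. Answer only from the guides below. "
        "If they do not cover the question, say so.\n\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


# (guide_text, similarity_score) pairs, as an embedding search might return them.
scored = [
    ("To reset your password, open Settings > Account > Reset password.", 0.91),
    ("Invoices are emailed on the first business day of each month.", 0.42),
    ("Two-factor codes expire after 30 seconds; request a new one if needed.", 0.77),
]
messages = build_support_messages("How do I reset my password?", scored)
print(messages[0]["content"])
```

Updating a guide only means re-embedding that one document. No retraining is involved, which is part of why retrieval fits the "source of truth" use case better than fine-tuning.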

Richie Cotton: Okay, interesting. So fine-tuning is purely for classification at the moment, and for anything else, look at embeddings. You mentioned a lot of companies doing this wrong, so let's start with the lessons learned. What are some other examples of where you might go wrong trying to use fine-tuning?

Logan Kilpatrick: I think that's probably the biggest thing with fine-tuning specifically. I do think that most companies don't end up going totally wrong, because they get in contact with us, and our team is there to help guide them through that process. Another piece of this is that the models available for fine-tuning today are only the original base models.

That's a much less powerful solution. If you could fine-tune GPT-4, for example, maybe it would be better at solving some of these problems than those original base models. So again, it's just a little bit less useful than a lot of other approaches you could take today.

Richie Cotton: I mean, it certainly seems quite appealing to be able to throw a load of your own company data into GPT-4 and have it give better results somehow.

Logan Kilpatrick: But I think with GPT-4 specifically, you get embeddings plus the ability to have a system message that truly, deeply steers the conversation. A great example of this is Khan Academy, which was one of the launch partners for GPT-4. If people aren't familiar with Khan Academy, it's a platform that provides free education for the world.

They've done a great job of making that technology specifically suited to their use case. One of the biggest challenges in the educational space is that if you're trying to help someone learn something, you don't want the model to give them the answer. And by default, the model really wants to give an answer.

That's what it's there to do: answer your question. So if you ask it how many stars are in the galaxy, or whatever, it's going to try to answer. What Khan Academy was able to do, just through system messages and some other work, is actually steer the model away from giving people the answers.

That capability, actually following those system messages, is much stronger in GPT-4 than in some of our previous models. You can set the tone of what you want this AI system to do and customize it for your use case. For example, you can put this in the system message:

"You are a customer support chatbot who works for X, Y, and Z company. You shouldn't respond in a way that's aggressive towards users, even if they're mad at you. You should always be polite, and you should apologize if you say something wrong." You can put all those kinds of things in, and the model will actually understand and stick to them as it processes things, which is super interesting to see.
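A sketch of the system-message pattern Logan describes, expressed as a Chat Completions request body. The company name "Example Co.", the default model, and the helper name `chat_request` are placeholders, and the request is built but not sent. The key detail is that the system message is resent as the first message on every turn, because each request carries the full conversation.

```python
import json

SYSTEM_PROMPT = (
    "You are a customer support chatbot who works for Example Co. "  # hypothetical company
    "Never respond aggressively toward users, even if they are angry. "
    "Always be polite, and apologize if you get something wrong."
)


def chat_request(history: list[dict], user_message: str, model: str = "gpt-4") -> dict:
    """Request body with the system message pinned as the first message every turn."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            *history,
            {"role": "user", "content": user_message},
        ],
    }


history = [
    {"role": "user", "content": "Where is my order?"},
    {"role": "assistant", "content": "Sorry for the wait! Could you share your order number?"},
]
body = chat_request(history, "This is ridiculous, just refund me.")
print(json.dumps(body, indent=2))
```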

Richie Cotton: That is actually absolutely amazing, because the use case you described is something DataCamp is building at the moment: we're using GPT to give students some help but not give them the answer. It's about getting that balance right, being supportive but not actually giving things away.

Very delicate.

Logan Kilpatrick: Yeah, it's a hard line to walk, especially when you imagine younger learners who are maybe less intrinsically motivated to go through this learning process. They're going to be continually prompting, just give me the answer, I don't want to do the work.

Which I totally understand. And yeah, the folks at Khan Academy had to do a lot of work to make this work.

Richie Cotton: I'm hoping this is gonna be successful for DataCamp as well. It's promising, anyway.

Logan Kilpatrick: Fingers crossed.

Richie Cotton: Magnificent. Alright, while we're on the subject of using GPT for learning things, I'd also like to talk about Copilot. Copilot is a great solution for generating code for you.

It's more developer-focused, but can you tell me what the data science use cases are here?

Logan Kilpatrick: Yeah. If you're doing work in a Jupyter Notebook, for example, and you're running your data science experiments, you could imagine that in the future, and maybe not even in the future, probably today with Copilot X, which is on the horizon of being available to developers through GitHub, you could describe the experiments that you wanna run.

I remember when we were trying all of these different model configurations, all these different starter, pre-trained models, and setting up all these different experiments manually, by hand. Now you can imagine that you just say, in natural language, "Here's the list of experiments that I wanna try. Write me the Python code to actually run all these different experiments." And with GPT-4 powering all of these new features in GitHub, you're actually able to make that happen.

Which I think is so exciting, and it will hopefully put data science folks back in the position of being really close to the problems, thinking critically about how to solve them versus just typing keys to set up experiments. Thinking back to doing that work of actually training models and running data science workflows myself, it would be so convenient to have this tool.
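As a rough illustration of the kind of boilerplate described here, the Python below is a hypothetical sketch of an experiment grid: every combination of pretrained starter model, learning rate, and batch size becomes one configuration. The model names and hyperparameter values are placeholders, and `run_experiment` is a stub standing in for real training code.

```python
from itertools import product

# Placeholder search space; swap in whatever models and values you actually use.
PRETRAINED_MODELS = ["resnet18", "resnet50", "vit_base"]
LEARNING_RATES = [1e-3, 1e-4]
BATCH_SIZES = [32, 64]


def experiment_grid():
    """Yield one config dict per combination of the values above."""
    for model_name, lr, batch_size in product(PRETRAINED_MODELS, LEARNING_RATES, BATCH_SIZES):
        yield {"model": model_name, "lr": lr, "batch_size": batch_size}


def run_experiment(config: dict) -> dict:
    """Stub: a real version would fine-tune config['model'] and return metrics."""
    return {**config, "status": "stub"}


if __name__ == "__main__":
    results = [run_experiment(cfg) for cfg in experiment_grid()]
    print(f"{len(results)} experiments configured")
```

`itertools.product` yields 3 × 2 × 2 = 12 configurations here, which is the hand-written setup a natural-language request could replace.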

Another layer of this is being able to explain what's actually happening to me as the developer, as the data scientist who's running these experiments. My background was pure computer science. I didn't have a lot of the statistical background.

Some of the models that we were building at Apple were more statistical models, and I just didn't have a good grasp of the stats background required to make some of that work. I can just imagine how helpful it'd be, while the code is running and being generated, to have GPT give me an explanation in simple terms of how this statistical method or property actually works. That would be so helpful.

Richie Cotton: Absolutely. There's always been the problem with data science that you need both the statistics skills and the coding skills, not to mention all the other stuff like communication. If you can get support in all of those areas, that's gonna make life easier for data scientists, I think.

There was a recent announcement related to Copilot saying the underlying technology has changed and it's all gonna be based on GPT-4 now. So how is that gonna change its capabilities?

Logan Kilpatrick: I think what the folks at GitHub... and again, I don't have inside knowledge on this... models.

You can actually get a great balance with the ability to just generate code, which I think was what Copilot originally was in its current form. You couldn't talk to it, you couldn't iterate with it. It did have the ability to show you multiple generated options, and you could choose one if you wanted to, but it didn't have that conversational layer. And I think what this is gonna allow it to do is still be extremely excellent at generating code for problems, but also have a deeper reasoning understanding of what's happening in that code, which Copilot wasn't able to do before.

Being able to copy-paste some Python into Copilot and talk to it about that code just makes the technology much more usable, in my opinion.

Richie Cotton: And I guess, related to this, you talked about deeper reasoning about what's happening. One of the big objections to using things like GPT-4 is that occasionally it's gonna make mistakes. So when is it gonna be a good idea to have this sort of automated generation of code and automated explanations, compared to just saying, okay, I'll do it manually?

Logan Kilpatrick: Yeah, this is a really good question. I think it's a deep question. Maybe we won't have time to cover all of it.

Solving some of these problems can be done by models like GPT, but it still requires somebody who has deep expertise and understanding. I did a livestream earlier today where we were trying to essentially build a UI clone of ChatGPT, getting the model to actually generate that for us based on a bunch of context that we gave it, and it didn't go perfectly.

You end up with a bunch of code that looks really great, but when just one little thing doesn't hook up correctly, all of a sudden the whole thing blows up. Then you're back in the mode, as a programmer, of "now I have to solve this problem," and maybe it's a little harder, because now I don't have the intellectual background context of what this code is actually doing.

Why did I do it this way? So in some sense it can actually make the problem a little more abstract and harder to solve. But I still think it requires somebody who has the programming background. This goes back to the broader conversation around whether these new productivity gains mean that there'll be fewer software engineers or fewer data scientists in the world.

Unlikely. You still need to have somebody capable with these tools in their hands. But I do think the likelihood that you'll start off with some AI-generated template or first pass at whatever problem you're trying to solve goes up significantly in the next six months. Even for me today, when I'm doing software engineering or data science workflows, I'm starting with whatever GPT-4 generates for me, just because I don't wanna do the extra few hours of work that it would probably take for me to set all that up.

Richie Cotton: That's interesting. It sounds like maybe the skill set for developers is gonna change a bit. Rather than being able to write code, it's more about whether you can review, understand, and tweak code. Does that sound correct? And if so, how do you think that's gonna change how we train people to do data science and write great software?

Logan Kilpatrick: I would posit that it's probably still going to be very similar for developers. My thinking on this right now is that we're essentially all doing this today. I don't have the whole knowledge base of how PyTorch works to train a machine learning model memorized.

I intuitively understand how PyTorch works. I intuitively understand how a computer vision model works. But if you put me in front of a computer with no resources, I'm not gonna be able to type out the code to train a machine learning model right now. I don't have that in my memory to just do offhand. So what I'll do as a developer is go to the PyTorch website, look at the templates and examples that they have, probably start with one of the examples, and then modify it for my specific use case. So it's a very similar process to what we do today. We go off to all these different websites and we have to hunt around for the right resource, or try to make some resource fit our use case.

I remember doing this a lot with documentation: "Yeah, I'm kind of solving this problem, but I'm also solving this segmentation problem," and there's no tutorial that sits in between the two.

So it's nice when you can have GPT-4 do the first pass on these things for you, instead of having to go and look through 12 Stack Overflow posts.

Richie Cotton: That's a very familiar story, looking through 12 different Stack Overflow posts. I like the idea that it's basically creating templates for you, but maybe a little more personalized to your problem. Going back to your previous example, where you were trying to build something like the OpenAI website using GPT,

you said you were struggling with it slightly, getting the right prompts and things. That does seem to be the other objection I've heard from a lot of people: they'll try, they'll write a prompt, they'll get a bad response, and then they're like, "Oh, well, this is a stupid model, I'm not gonna use it again."

It just seems like you have to provide a lot more detail in the prompts than I, at least, first thought. Do you have any tips on how to go about writing good prompts to get the response you want?

Logan Kilpatrick: Yeah, I think the mental model that keeps people from getting the most out of this technology is the mental model that you're talking to another human who has expertise in the topic. The reality is that you and I have some shared understanding of a bunch of stuff that we haven't explicitly agreed on, but it's probably there.

So if I told you to do something, you might fill in a bunch of things that I didn't actually say. It's the exact same thing with the model: it has its own knowledge base that it's been trained on, just like any human.

So it's not unlike humans, and I actually think this goes back to great managers, or people who are able to delegate things really effectively. They do this part well: they're able to say, "Here are the things that I'm looking for, in excruciating detail. This is what I wanna see happen."

And I think we don't have to do a lot of that all the time, just because we're usually OK with it, in some sense. But you might actually do this in your daily job. If you're a software engineer and there's a list of requirements you've gathered from some clients, those things are often very explicit.

They want X, Y, and Z. And ChatGPT can do a great job of understanding those things because they're very intentional. When you give the model room to interpret, it's unlikely that it's gonna interpret or infer things in the same way that you would. So you have to be explicit.

And again, GPT-4 is much better at actually following the specific things you laid out. If you try the same example with an earlier model, it might do some of them, but...
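One way to act on "be explicit" is to write the requirements out as a numbered list instead of leaving the model room to infer them. The Python helper below is a hypothetical sketch of that; the task and requirement strings are made-up examples, not a prescribed template.

```python
def build_explicit_prompt(task: str, requirements: list) -> str:
    """Spell out a task and every requirement as a numbered list."""
    lines = [f"Task: {task}", "", "Requirements (follow all of them):"]
    lines += [f"{i}. {req}" for i, req in enumerate(requirements, start=1)]
    return "\n".join(lines)


prompt = build_explicit_prompt(
    "Write a Python function that loads sales.csv.",
    [
        "Use pandas.",
        "Parse the 'date' column as datetimes.",
        "Return a DataFrame sorted by date, oldest first.",
    ],
)
print(prompt)
```

The point is less the helper than the habit: each requirement the client stated becomes a line the model can check itself against, rather than something it has to guess.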

Richie Cotton: That's interesting. So the difference is really about its ability to follow instructions, the difference between the two levels of model. Are there any specific bits of advice for data scientists? If you're gonna tell it to do a data science task, I mean, you said be specific, but are there any particular things that you need to know about when you're writing prompts?

Logan Kilpatrick: One of the things the model is limited by is how much context you can give it. For example, in the demo I was doing earlier, I was trying to make my own version of the UI that's on the website.

There's a bunch of context the model doesn't have, because its training cutoff was sometime in 2021. So for all the new knowledge created since then, the model has no idea about it: whatever breakthroughs in science or technology, or new versions of some Python library that came out since, the model isn't gonna know about them. So what you do is actually give it an example. It's like few-shot learning, where you take an example of "Here's how I want this problem to be solved, and here's the response I want from you." The model can do an incredibly good job of actually solving it and giving you back the data in the format you've shown it.

If you provide that single-shot or few-shot example, you'll get the best results.
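Here is a minimal Python sketch of the few-shot idea, assuming the same role/content chat message format: each worked example is a user turn followed by the ideal assistant reply, and the real question comes last. The instructions and example data are invented for illustration.

```python
def build_few_shot_messages(instructions: str, examples: list, question: str) -> list:
    """Place (input, ideal reply) pairs before the real question."""
    messages = [{"role": "system", "content": instructions}]
    for user_text, ideal_reply in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_reply})
    messages.append({"role": "user", "content": question})
    return messages


# One worked example ("single-shot") showing the exact output format we want.
EXAMPLES = [
    ("Summarize: revenue 120, cost 80", '{"revenue": 120, "cost": 80, "profit": 40}'),
]

messages = build_few_shot_messages(
    "Reply only with JSON in the same format as the examples.",
    EXAMPLES,
    "Summarize: revenue 200, cost 150",
)
```

Adding more pairs to `EXAMPLES` turns the single-shot prompt into a few-shot one. The same slot is a natural place to paste context the model can't know, such as a snippet that uses a library version released after its training cutoff.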

It's not possible today, but with multimodal input, you can imagine a future where, as a data scientist, you say, "Here's the dashboard that I want, here's a picture of how I want the different things set up, here's an example of my data input sources, and I wanna use this version of this library, which maybe has been updated since the cutoff. So here are a few lines of what the code might look like."

All of that context can be bundled together to create an actual data science dashboard, generated for you automatically based on those inputs. I don't know if this is specific enough, but not making assumptions about what the model knows, and very clearly giving these examples, makes it so much more effective at solving problems.

Richie Cotton: So it's not just about a single prompt. It's about giving it a bit of training data, saying "I want you to do something like this," and then asking it the question. That seems like really cool advice. I'd love to talk about the different interfaces, particularly to Python, R, and SQL.

I think if you're a data scientist or a data analyst, that's what you're gonna be using. And I know you're one of the developers of the OpenAI Python package, so can you tell me a bit about what's happening with its development?

Logan Kilpatrick: Yeah, nothing groundbreaking, I would say. It's just an interface to our API, wrapping its functionality in a Python package. I'm not one of the core folks. I help out a little bit and help triage a lot of the craziness that happens on our open source repositories, but a bunch of other folks do the difficult engineering work to make that package work. I think that library and our Node.js one are the only ones that are officially hosted by OpenAI.

There's also a huge swath of community libraries across all those languages. R and Julia are probably covered, along with some of the other, more niche data science languages. If you're using MATLAB, maybe there's an integration; I'm not a hundred percent sure, but maybe there is. So yeah, it's essentially language agnostic.

Whatever language you're using today, you can use it with the OpenAI API, which is really nice. It's just a request, so any language that can make an arbitrary call to a server will work, whatever language you're excited about.
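Because the API is plain HTTPS with a JSON body, any language that can make an HTTP request can call it. The Python sketch below builds such a request with only the standard library, without sending it. It assumes the chat completions endpoint URL, bearer-token authentication, and an API key in an `OPENAI_API_KEY` environment variable; `build_chat_request` is a hypothetical helper name.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4") -> urllib.request.Request:
    """Build (but do not send) a POST request with a JSON body and bearer auth."""
    body = json.dumps(
        {"model": model, "messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + os.environ.get("OPENAI_API_KEY", ""),
        },
        method="POST",
    )


req = build_chat_request("How many stars are in the galaxy?")
# Sending it would be urllib.request.urlopen(req), then json.load() on the response.
```

The same three ingredients (URL, JSON body, Authorization header) are what a community library in R, Julia, or any other language wraps.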

Richie Cotton: Okay, cool. And is the plan to make the R and Julia packages official at all, or are these gonna be purely community efforts?

Logan Kilpatrick: Yeah, it's a difficult trade-off for us. In a perfect world with unlimited resources, we'd probably wanna do some of the more popular ones ourselves. I would guess Julia is maybe not one of those, but maybe R would be one that you might wanna consider.

Again, the reality is we don't have the resources to do that, so relying on the community is a nice, happy medium. But it does mean that when we release stuff, the community libraries don't necessarily get a heads-up. They have to see it when everyone else does.

And therefore things can lag a little: you rely on the maintainer of your community library to actually update when we release something. So for users, it's unfortunate, but what we're doing today is already a tremendous amount of work for Python. So yeah, it's a challenge.

Richie Cotton: So it sounds like if you need to be up to date at all times, then Python is the way to go?

Logan Kilpatrick: Yeah, Python is our official library at the moment, so I would highly suggest folks make use of it if possible. We do have an OpenAPI spec available, and there are 1,001 different tools that autogenerate SDKs based on OpenAPI specs. So that's probably the other way to go if you don't want to build a library by hand.

Richie Cotton: I think a lot of organizations are just trying to figure out how to get started with this, and they're not sure where to start. So if you wanna include OpenAI stuff within your product, what's step one?

Logan Kilpatrick: I think step one is taking a step back, and this is probably the same for most things, and actually understanding what the problem is that you're trying to solve. Are large language models, something like GPT, or embeddings capabilities, actually the solution to that problem? And I think this question is more important now because of how much excitement there is around this technology.

I think there are a lot of people who are perhaps integrating this technology for the sake of integrating it, without taking a step back and saying, "Does it actually make sense for us to just plop this in as a feature in our product?" We're seeing a lot of companies that are actually rewriting their whole roadmap and saying, "Instead of adding this language-model-based feature into our product, let's actually rethink our product entirely with this understanding of the future of language models being important. Part of that is reshaping our platform to use this technology from the ground up."

So that's the conversation I would push folks towards initially. Obviously it's a much bigger thing to go off and say, "Yeah, we're gonna redo our entire product or platform using this technology." But people want that different experience. They're not looking for your product plus a chatbot on top of it; they're looking for an interface to access the information that your company is offering. So I think starting with that is the right place. And then the cool thing to me is that the technology is actually really easy to integrate.

It's literally just making an API call. The hard part is figuring out the problem, doing all the prompting correctly, and figuring out a data flywheel for improving the model's performance or improving your prompts based on the data that you're collecting. Those are the more nuanced pieces, but actually, yeah, it's a simple few lines of code to integrate the technology into your application.
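The data flywheel can start very small. As a hypothetical sketch, the Python below logs which prompt version produced each response along with whether the user found it helpful, then computes a helpfulness rate per version so the better prompt can be promoted. The version labels and feedback records are invented.

```python
from collections import defaultdict


def helpful_rates(feedback_log):
    """feedback_log: iterable of (prompt_version, was_helpful) pairs."""
    counts = defaultdict(lambda: [0, 0])  # version -> [helpful count, total count]
    for version, was_helpful in feedback_log:
        counts[version][0] += int(was_helpful)
        counts[version][1] += 1
    return {version: helpful / total for version, (helpful, total) in counts.items()}


# Made-up feedback collected from users of two prompt versions.
log = [("v1", True), ("v1", False), ("v1", False), ("v2", True), ("v2", True), ("v2", False)]
rates = helpful_rates(log)
best_version = max(rates, key=rates.get)
```

A real system would need enough feedback per version for the comparison to mean anything; this only shows the bookkeeping.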

Richie Cotton: Okay, so it does sound like really the hard part is just making sure that you're adding value for your customers and not just building stuff because it's kinda cool.

Logan Kilpatrick: Yeah. I think there are a lot of companies that are likely building it because they think it's cool, and haven't thought deeply about how this actually helps people, and whether this is the right experience they wanna provide. And I think the added layer of complexity for smaller companies and individual developers is that there's actually a cost associated with every API call.

Every call to the OpenAI API costs money. It's relatively cheap to make these API calls, so you can do a lot for a little, but it still costs money. So this is another forcing mechanism to think about how you actually build a scalable business: how do I provide enough value to somebody that they're interested in giving me money back for the service I'm providing?

You could circumvent a lot of that with a $5-a-month Heroku server somewhere and do a lot of things with just that, without having to think about how you actually build a business around it. But because this technology costs a little more money and is as powerful as it is,

having that front of mind earlier on in the conversation is also important for people, and it's something I think folks are missing. Obviously, companies and developers need to make sure they're running a solvent product or service, whatever it is. You don't need to make gobs of money, but you need to make enough money to pay your bills.

Richie Cotton: Absolutely. And I have to say, it does seem pretty cheap to run the API. I was trying it out for generating datasets: if you generate a five-row dataset, it's super cheap, less than a cent. Try and generate a million-row dataset and that's gonna be a bit more expensive, because you're paying for the output.
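The point about paying for output can be turned into a back-of-the-envelope estimate. The Python sketch below assumes per-1K-token pricing with separate input and output rates, a fixed instruction prompt repeated on every call, and a fixed number of rows generated per call. All the numbers are placeholders rather than current prices, so check the official pricing page before relying on the result.

```python
import math


def dataset_cost(
    rows: int,
    tokens_per_row: int = 20,        # assumption: average size of one generated row
    rows_per_call: int = 50,         # assumption: rows requested per API call
    prompt_tokens: int = 50,         # assumption: instruction tokens sent on every call
    price_in_per_1k: float = 0.03,   # placeholder USD per 1K input tokens
    price_out_per_1k: float = 0.06,  # placeholder USD per 1K output tokens
) -> float:
    """Estimate the USD cost of generating `rows` rows of synthetic data."""
    calls = math.ceil(rows / rows_per_call)
    input_cost = calls * prompt_tokens / 1000 * price_in_per_1k
    output_cost = rows * tokens_per_row / 1000 * price_out_per_1k
    return input_cost + output_cost


for n_rows in (5, 1_000_000):
    print(f"{n_rows:>9,} rows: ${dataset_cost(n_rows):,.4f}")
```

Under these assumptions, output tokens dominate the total, which matches the observation that you're mostly paying for the output.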

So yeah, I understand how thinking about your API costs is gonna be a good idea. I'd like to talk a little bit about what's happening in the future. I dunno whether you can tell me what's coming soon from OpenAI, or what's exciting in the pipeline.

Logan Kilpatrick: I think this fusion of multimodal models, which is a tongue twister, will be more impactful than people realize, and I think that will continue. Today we have audio models... I have no idea whether we'll have these different modalities together, but potentially, yes. So, more fusion of these things together. I would also hope that there's a bunch of advancements in the size of these models, so that they could run locally on your computer or on your phone, and we've seen some of those models come out...

...running on your own computer versus this massive server infrastructure.

It makes sense to keep the data you're processing local to the device it's coming from, and not have to send it to a server. So that will be really cool to see. And again, I think ChatGPT as a product will just continue to evolve and get better. It's something that the team cares deeply about.

I would expect it to continue to get more valuable, more useful, and more integrated with all these plugins that are coming. So I'm optimistic. This technology is going to help so many people become better at doing the things that they enjoy, and at their work.

Richie Cotton: All right. Fantastic. Do you have any final advice for anyone who wants to develop products around generative AI?

Logan Kilpatrick: I would get started now. I've seen a bunch of quotes along the lines of "AI isn't going to take your job, but somebody who is using AI is the person who's gonna disrupt you." For folks who are writing off the technology or aren't getting in tune with its capabilities, if you're not using it on a daily basis, I think it's super important to understand how you can be more effective in what you do using these tools, so that you don't end up getting disrupted by this potential change in technology.

So get started, and build an understanding of what problems these tools actually solve.

Richie Cotton: Alright, brilliant. Thank you so much for your time, Logan. That was really informative. Amazing.

Logan Kilpatrick: Yeah, this was super fun, Richie. Thank you for having me. I'm super excited, and I'm hopeful that DataCamp's tutoring efforts go well.

Richie Cotton: Alright, thanks.