
The AI war is still going strong, with Google's Gemini and Gemma making waves recently, but now Anthropic's Claude 3 has emerged as a powerful contender, outperforming GPT-4 and Gemini Ultra on most of the common benchmarks Anthropic reported.

In this tutorial, we will explore Claude 3 models, performance benchmarks, and how to access them. We will also compare Claude 3 with its predecessor, Claude 2.1, and ChatGPT. Finally, we will learn about Claude 3's Python API for generating text, accessing vision capabilities, streaming responses, and using it asynchronously.

What is Claude 3?

Anthropic has recently introduced a new family of AI models called Claude 3, which, according to Anthropic, sets new industry benchmarks across a range of cognitive tasks. The family includes three state-of-the-art models: Haiku, Sonnet, and Opus. These models can power real-time customer chats, auto-completions, and data extraction tasks.

At the time of writing, Opus and Sonnet are available on claude.ai and through the Claude API, while the smaller Haiku model will be released soon. The Claude 3 models are better than their predecessors at following complex, multi-step instructions, adhering to brand voice and response guidelines, and generating structured output in formats like JSON.

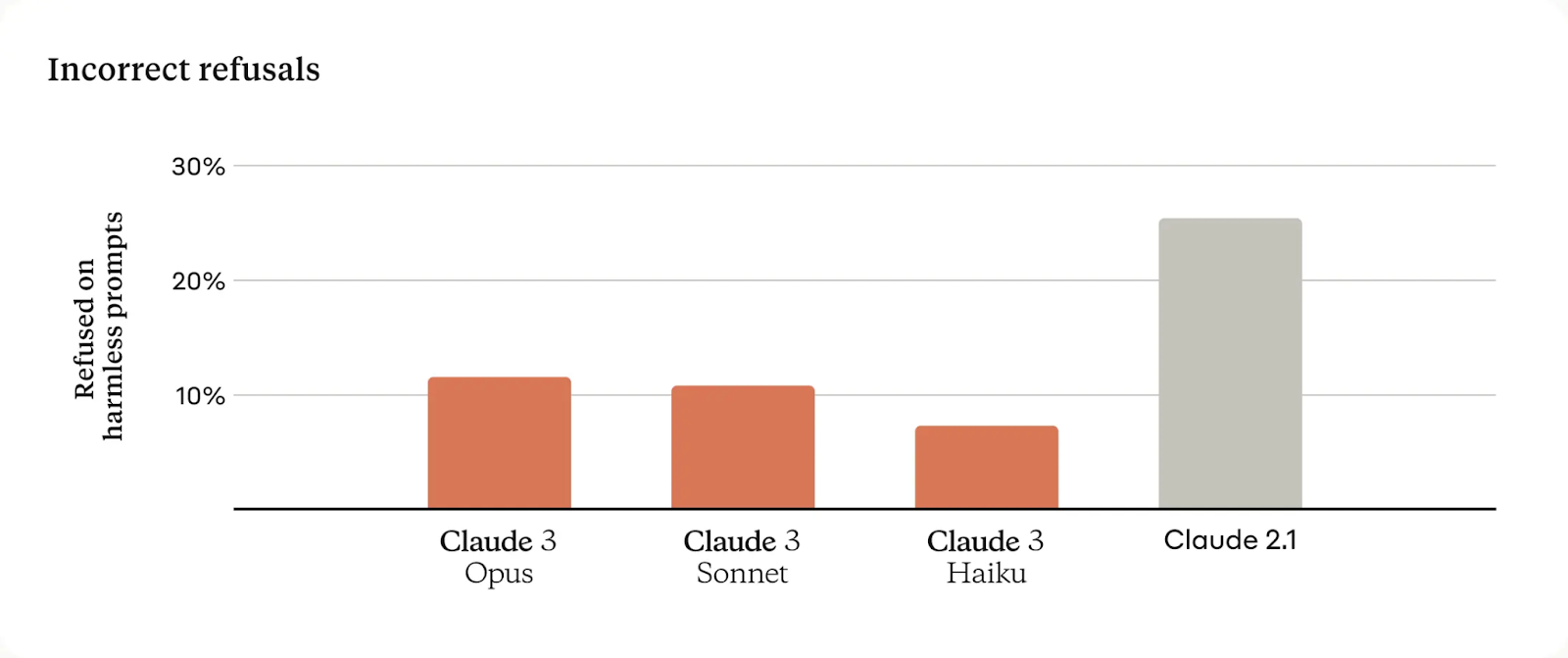

Compared to previous Claude models, these new models show improved contextual understanding, resulting in fewer unnecessary refusals.

The models launch with a 200K-token context window, although Anthropic states that all three can accept inputs exceeding 1 million tokens. In Anthropic's long-context recall evaluation, Claude 3 Opus achieved near-perfect recall, surpassing 99% accuracy.

To ensure the trustworthiness of the models, Anthropic has dedicated teams to track and mitigate a broad spectrum of risks.

Model details

The Claude 3 family offers three models, each striking a different balance of intelligence, speed, and cost for different applications.

Claude 3 Opus

Opus is designed for complex, high-intelligence tasks, excelling in open-ended problem-solving and strategic analysis, with a significant context window and higher cost reflecting its advanced capabilities.

Price: $15 per million input tokens and $75 per million output tokens.

Claude 3 Sonnet

Sonnet offers an optimal balance between performance and cost, ideal for enterprise workloads involving data processing, sales optimization, and scalable AI deployments. It is affordable and comes with a large context window.

Price: $3 per million input tokens and $15 per million output tokens.

Claude 3 Haiku

Haiku stands out for its speed and efficiency, catering to real-time response needs in customer service, content moderation, and cost-saving tasks, making it the most affordable and responsive model in the suite.

Price: $0.25 per million input tokens and $1.25 per million output tokens.
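To see what these prices mean for a single request, multiply each token count by its per-million price and divide by one million. For example, a request with 10,000 input tokens and 1,000 output tokens costs 10,000 × $15 / 1M + 1,000 × $75 / 1M = $0.15 + $0.075 = $0.225 on Opus. The sketch below hard-codes the prices listed above (they may change over time), and its dictionary keys are shorthand labels, not the official API model IDs.

```python
# Per-million-token prices in USD, copied from the list above; check Anthropic's pricing page for current values.
# Keys are shorthand labels for illustration, not API model IDs such as "claude-3-opus-20240229".
PRICES_PER_MILLION = {
    "claude-3-opus": {"input": 15.00, "output": 75.00},
    "claude-3-sonnet": {"input": 3.00, "output": 15.00},
    "claude-3-haiku": {"input": 0.25, "output": 1.25},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request: tokens times price per million, divided by one million."""
    prices = PRICES_PER_MILLION[model]
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


# Compare the three models on a 10,000-token prompt with a 1,000-token answer.
for name in PRICES_PER_MILLION:
    print(f"{name}: ${estimate_cost(name, 10_000, 1_000):.5f}")
```

Because output tokens cost five times as much as input tokens on every Claude 3 model, long generations dominate the bill even when prompts are large.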

How to Access Claude 3

There are three ways to access the Claude 3 models.

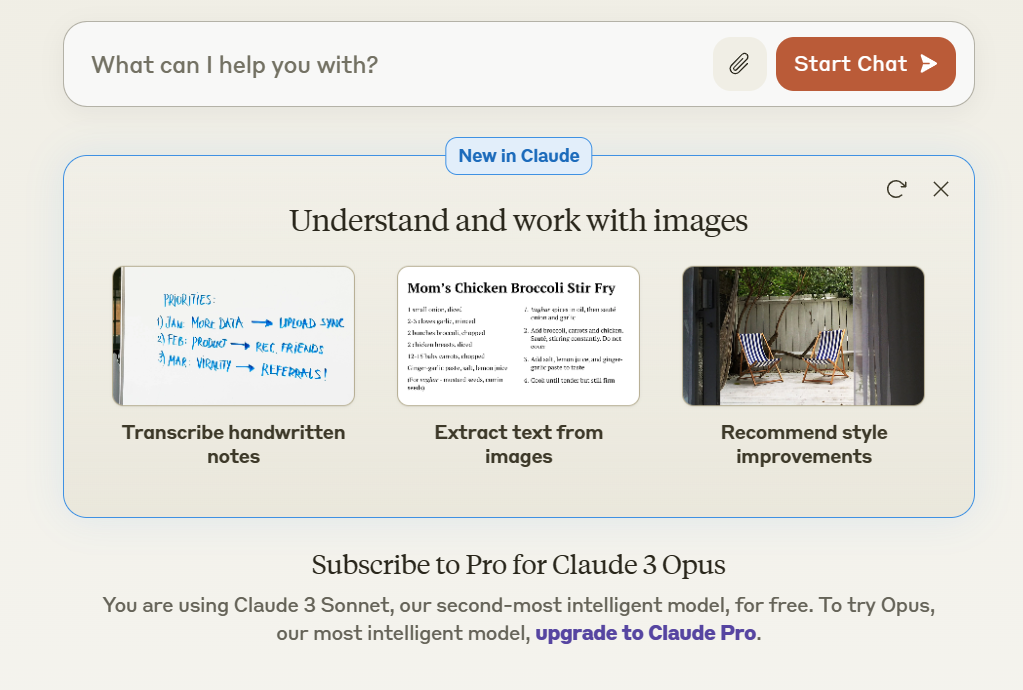

Claude Chat

If you're looking for a simple, free way to try the Claude models, you can sign up at claude.ai/chats, a chat interface similar to ChatGPT that is interactive and easy to use.

At the time of writing, Claude 3 Sonnet is available for free, but to access Claude 3 Opus you'll need a paid Claude Pro monthly subscription.


Workbench

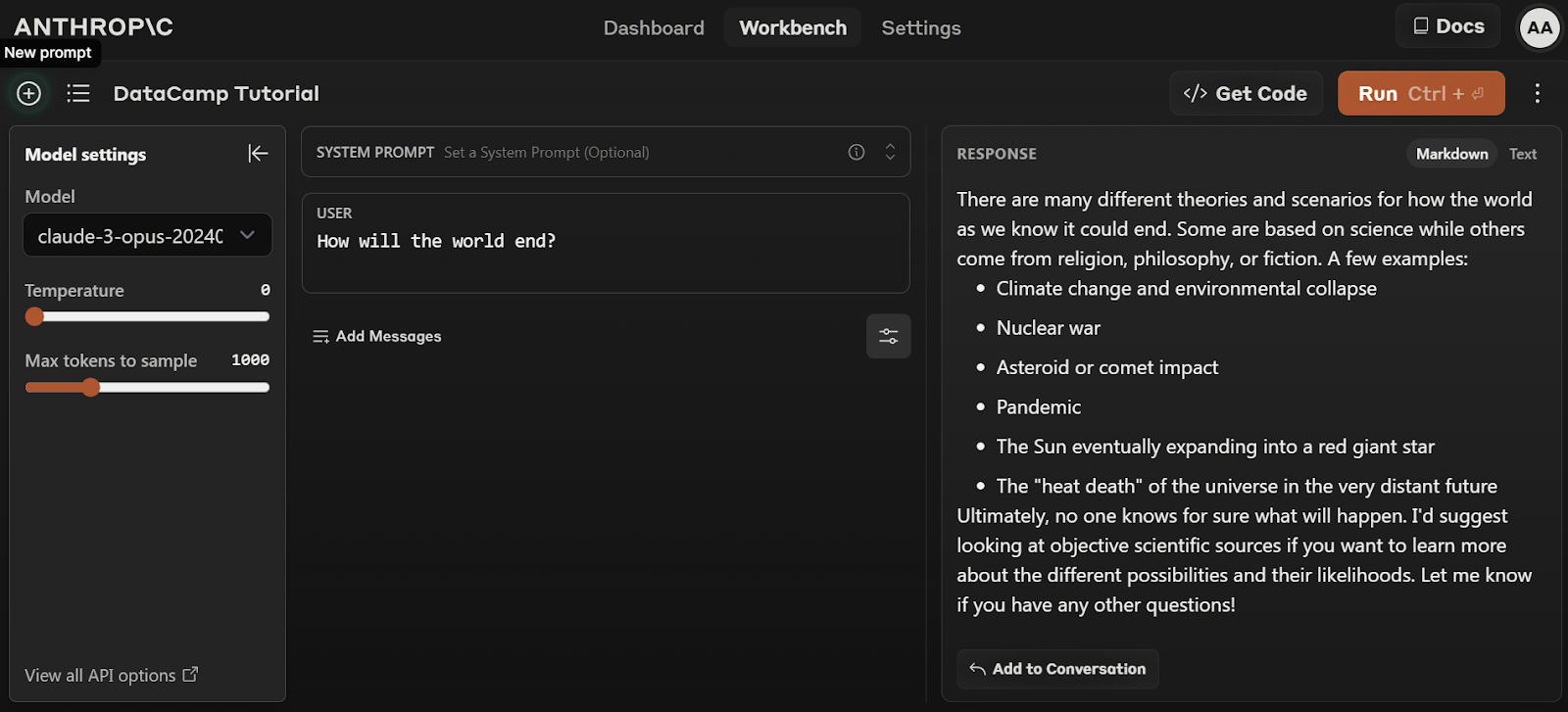

To access Claude 3 Opus for free, sign up for the Anthropic API at console.anthropic.com.

After completing the sign-up steps, open the Workbench tab and select the “claude-3-opus-20240229” model to start using Opus.

Anthropic Workbench

API and SDK

The third way to access the Claude 3 models is through the API. Anthropic offers client Software Development Kits (SDKs) for Python and TypeScript. You can also call the REST API directly, for example with curl in the terminal:

```shell
curl https://api.anthropic.com/v1/messages \
  --header "x-api-key: $ANTHROPIC_API_KEY" \
  --header "anthropic-version: 2023-06-01" \
  --header "content-type: application/json" \
  --data \
'{
  "model": "claude-3-opus-20240229",
  "max_tokens": 1024,
  "messages": [
    {"role": "user", "content": "How many moons does Jupiter have?"}
  ]
}'
```

A Dive into Benchmarks

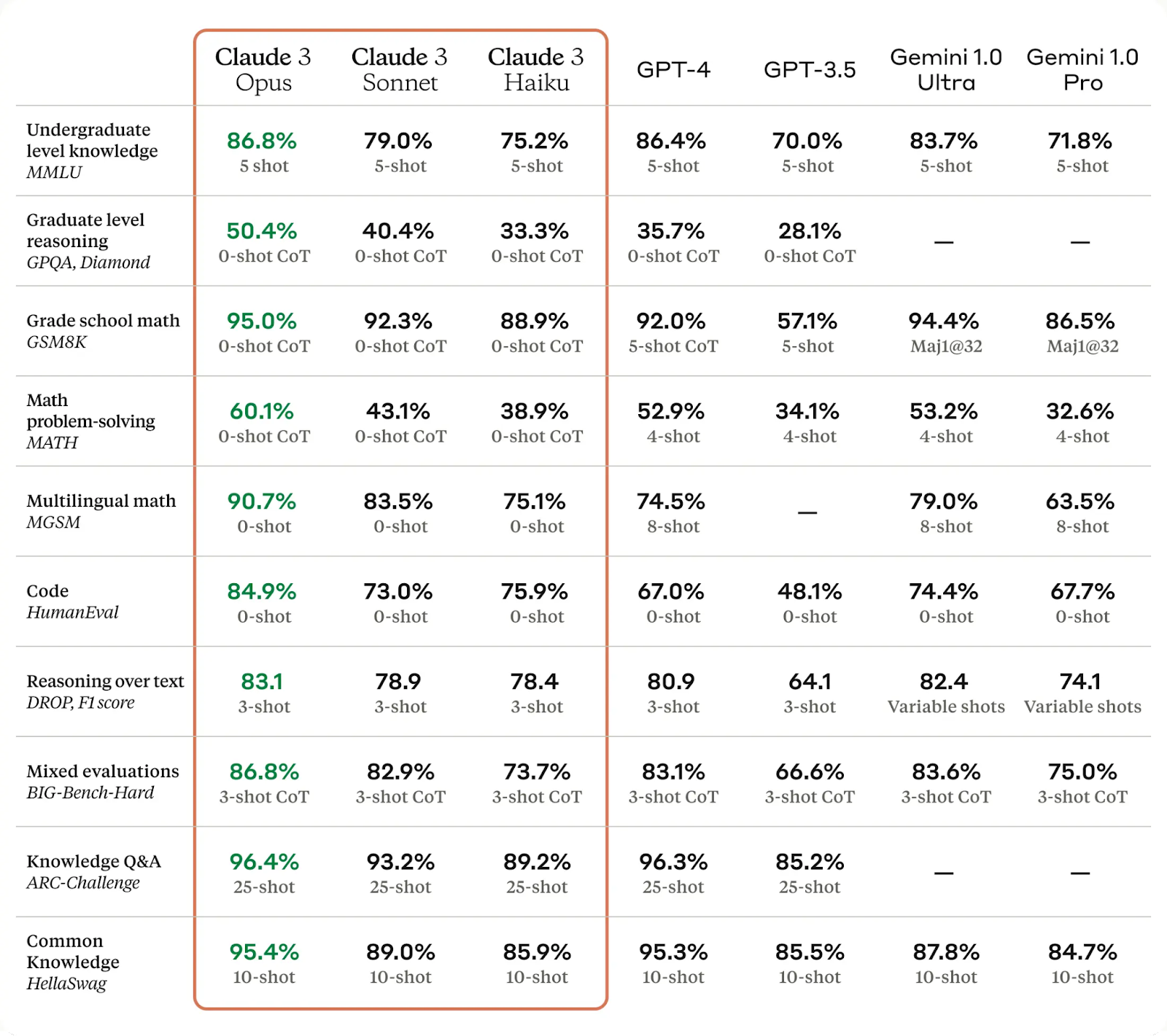

The Claude 3 models have been benchmarked against the top-performing large language models (LLMs) and have shown significant improvements across various tests.

Claude 3 Opus, in particular, has outperformed GPT-4 and Gemini Ultra on some of the most common evaluation benchmarks, including MMLU, GPQA, and GSM8K. According to Anthropic, it exhibits near-human levels of comprehension and fluency on complex tasks, which the company describes as leading the frontier of general intelligence.

Discover OpenAI's state-of-the-art LLM by reading DataCamp's article What is GPT-4 and Why Does it Matter? You can also learn about Google's ChatGPT rival by reading What is Google Gemini? Everything You Need To Know About It.

The Claude 3 models have improved at analysis and forecasting, code generation, and responding in non-English languages, as the benchmark data below shows:

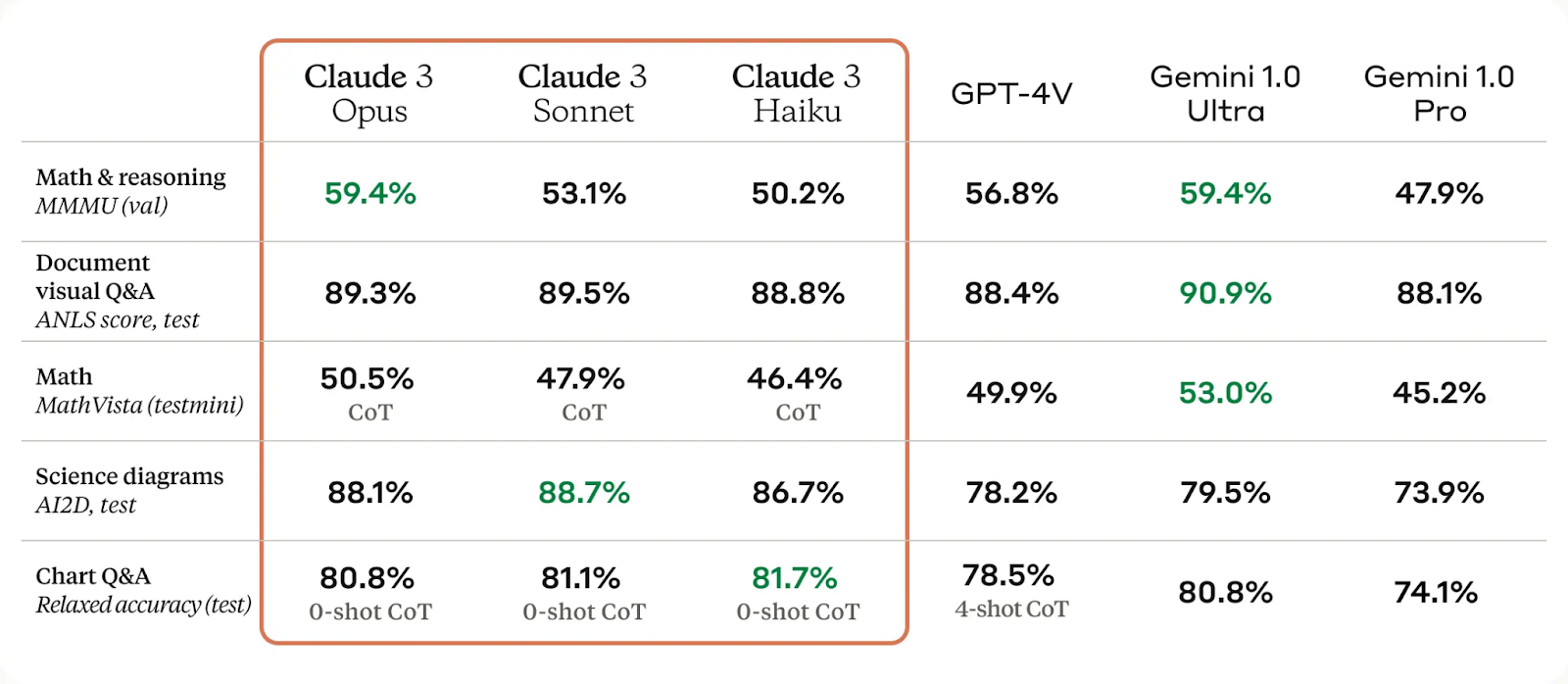

The Claude 3 models' visual capabilities have also improved significantly, enabling them to comprehend complex photos, diagrams, and charts. This benefits businesses whose knowledge bases come in various formats, such as PDFs, flowcharts, and presentation slides.

The Claude 3 models also make fewer unnecessary refusals and give more accurate answers than previous generations of models.

Finally, Claude 3 Opus shows a twofold improvement in accuracy on challenging open-ended questions compared to Claude 2.1, while also giving fewer incorrect answers.

Claude 3 Opus vs Claude 2.1

In this section, we will compare the responses of Claude 3 Opus and Claude 2.1 on three common tasks to understand their respective performances.

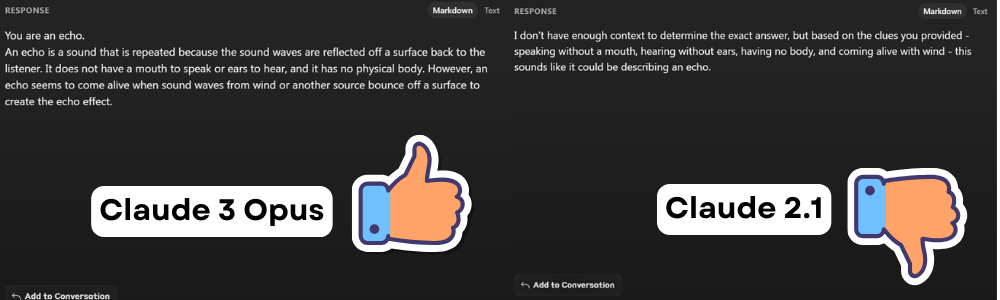

Q&A

Prompt: I speak without a mouth and hear without ears. I have no body, but I come alive with wind. What am I?

Claude 2.1 refused to answer the question, citing a lack of content. However, the user was asking a riddle, and Claude 3 Opus gave the correct answer (an echo).

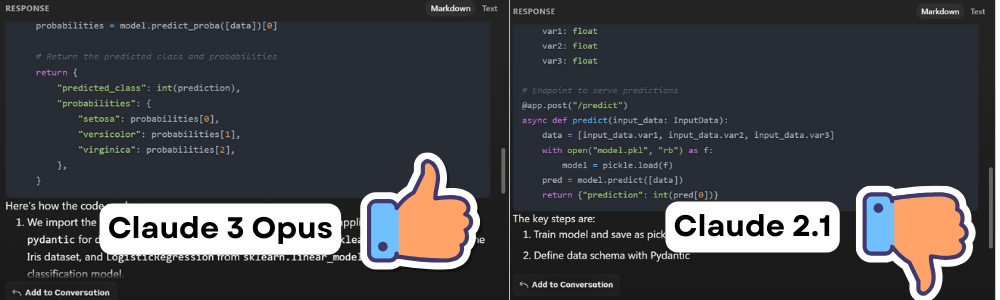

Generating Code

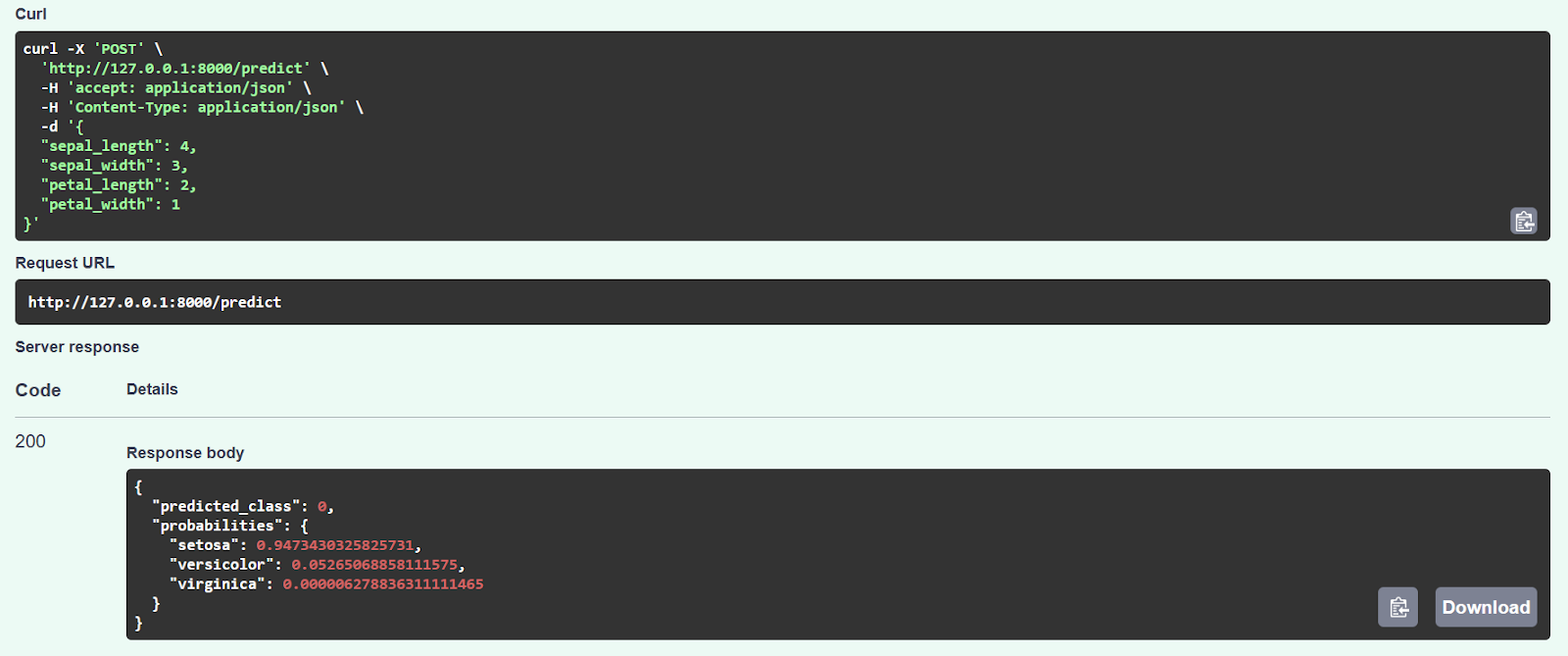

Prompt: Create a FastAPI web application for a simple Scikit-learn classification model.

Claude 2.1's code appeared to work at first, but it raised an error once we entered the sample values. On inspection, we found that the code was poorly structured and the inference function contained mistakes.

By contrast, the code from Claude 3 Opus ran smoothly and returned accurate predictions, as shown below.

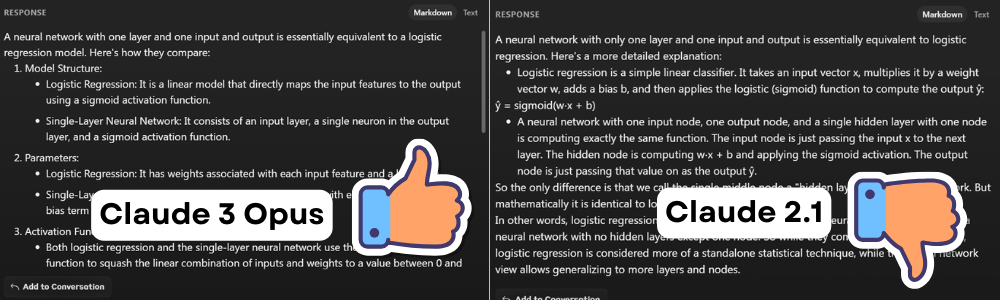

Complex Problem

Prompt: How does a neural network with one layer and one input and output compare to a logistic regression?

Both models gave similar answers, but Claude 3 Opus's response was more detailed and easier to read.

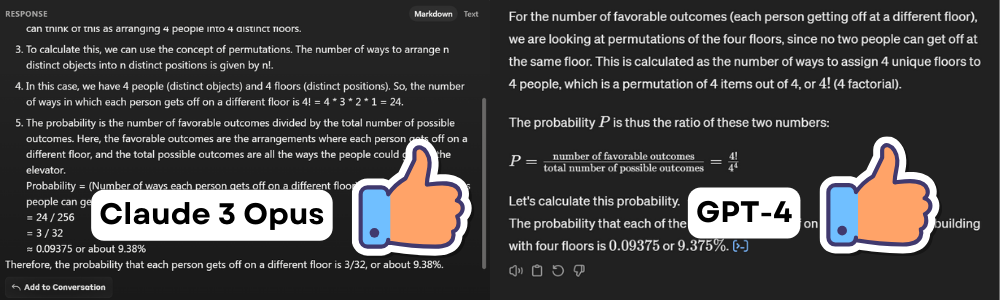

Claude 3 Opus vs ChatGPT 4

Let's compare Claude 3 Opus and ChatGPT (GPT-4) on tasks similar to those above to show the small edge Claude 3 has over GPT-4.

Q&A

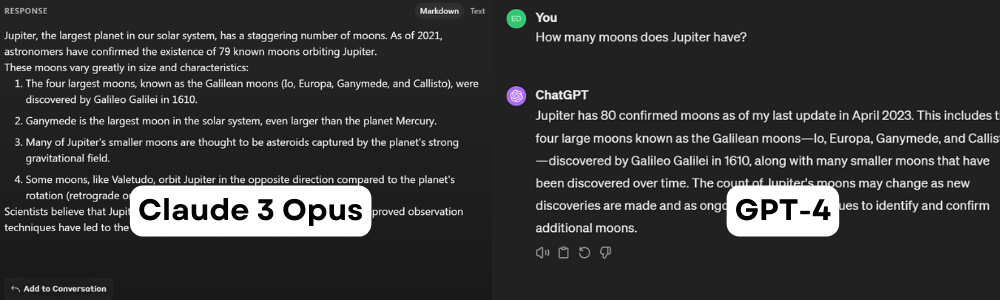

Prompt: How many moons does Jupiter have?

Both answers are incorrect: as of February 5th, 2024, Jupiter has 95 moons. This prompt is useful for probing each model's knowledge cutoff. Claude 3 Opus's answer appears to reflect figures from around 2021, even though Anthropic states that the Claude 3 training data extends to August 2023, while ChatGPT's answer reflects its April 2023 cutoff.

Generating Code

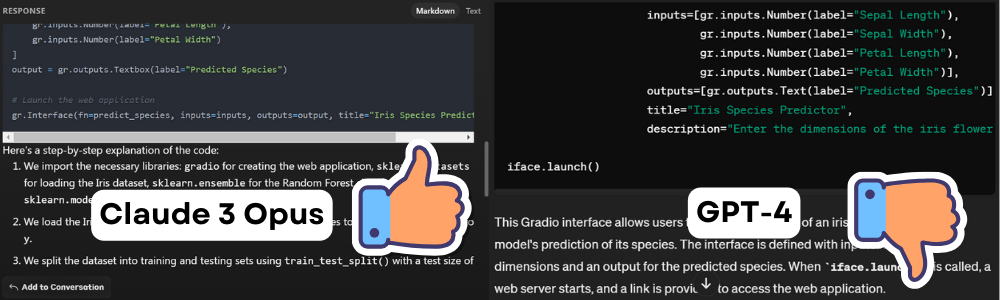

Prompt: Create a Gradio web application for a simple Scikit-learn classification model.

The code generated by Claude 3 Opus ran smoothly, while the code generated by GPT-4 failed to run because of multiple errors and hallucinated code.

Complex Problem

Prompt: There are four people in an elevator and four floors in a building. What’s the probability that each person gets off on a different floor?

Both models came up with the correct answer to the famous probability question. One provided the mathematical work, and the other generated and ran Python code to display the same answer. In this case, both win.
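The elevator question has a short closed-form answer. Assuming each person independently picks one of the four floors with equal probability, there are 4^4 = 256 equally likely outcomes, of which 4 × 3 × 2 × 1 = 24 send everyone to a different floor, so the probability is 24/256 = 3/32 ≈ 0.094. The sketch below checks that formula against a brute-force enumeration of every outcome:

```python
from fractions import Fraction
from itertools import product
from math import factorial

people, floors = 4, 4

# Closed form: ordered ways to give each person a distinct floor, over all floor choices.
exact = Fraction(factorial(floors) // factorial(floors - people), floors ** people)

# Brute force: enumerate every tuple of exit floors (one entry per person).
outcomes = list(product(range(floors), repeat=people))
favorable = sum(1 for choice in outcomes if len(set(choice)) == people)
brute_force = Fraction(favorable, len(outcomes))

print(exact, brute_force, float(exact))  # 3/32 3/32 0.09375
```

Enumeration only scales to small inputs (floors ** people outcomes), but it is a quick way to validate the kind of mathematical reasoning either model produced.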

Claude 3 Python SDK

In this part, we will learn about Claude 3 Python SDK and how we can use it to generate customized responses.

Getting Started

1. Install the anthropic Python package using PIP.

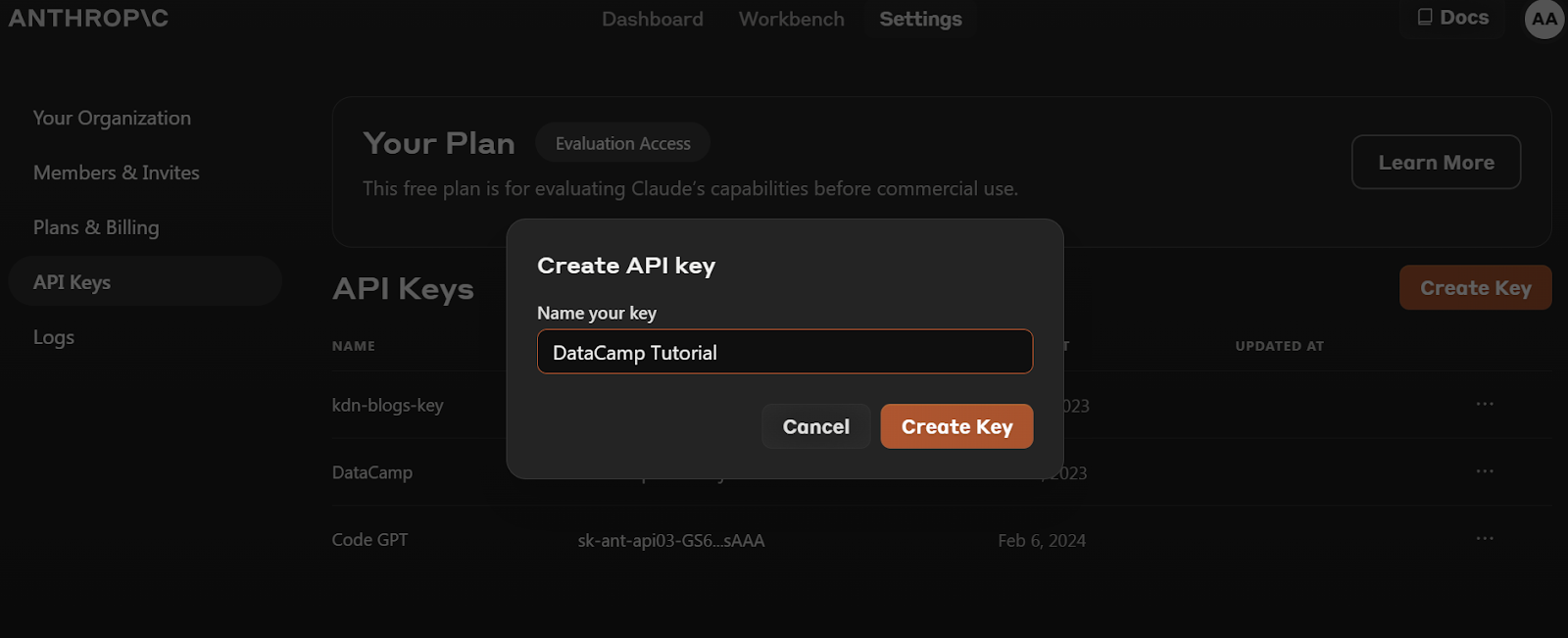

pip install anthropic2. Before we install the Python Package, we need to go to https://console.anthropic.com/dashboard and get the API key.

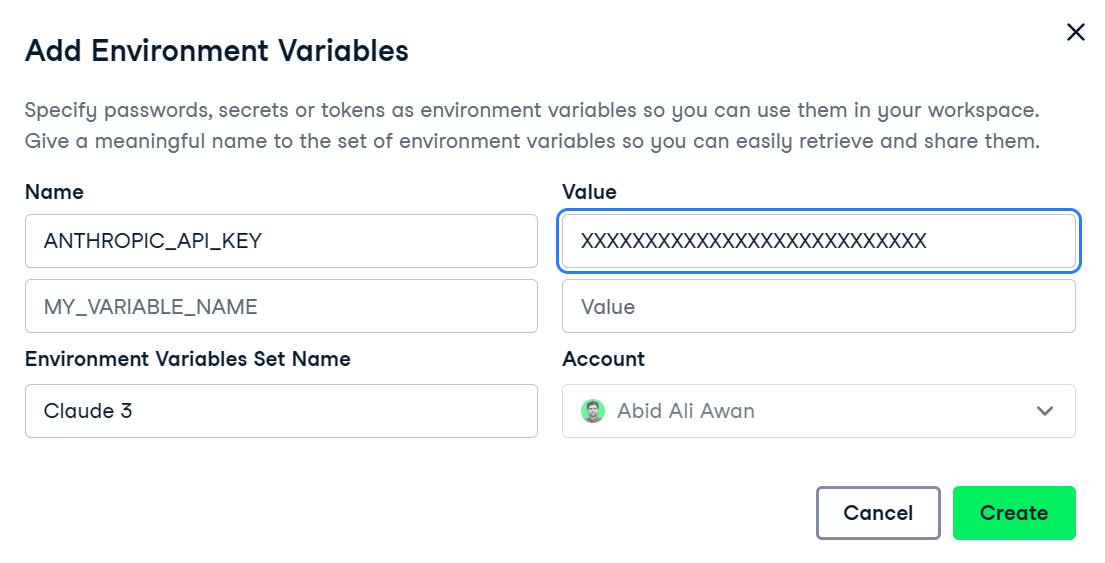

3. Set the ANTHROPIC_API_KEY environment variable to your API key. Setting up and accessing environment variables is easy in DataLab, DataCamp's AI-enabled data notebook.

4. Create the client object using the API key. We will use this client for text generation, vision, and streaming.

```python
import os

import anthropic

# Synchronous client, authenticated with the key stored in ANTHROPIC_API_KEY.
client = anthropic.Anthropic(
    api_key=os.environ["ANTHROPIC_API_KEY"],
)
```

Claude 2 Completions API

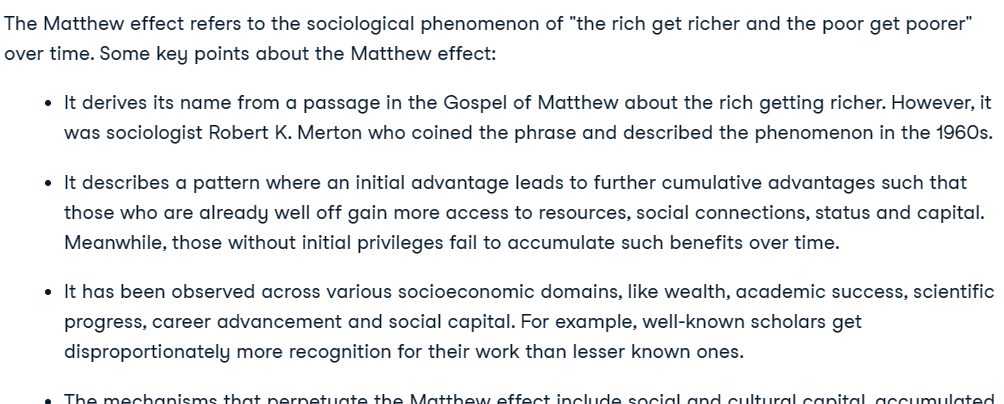

Note that the Claude 3 models do not support the legacy Completions API. If you call it with a Claude 3 model, the request fails with an error advising you to use the Messages API instead, so the example below uses Claude 2.1.

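```python
from IPython.display import Markdown, display
from anthropic import HUMAN_PROMPT, AI_PROMPT

# Legacy Text Completions API: works with Claude 2.x models, not Claude 3.
completion = client.completions.create(
    model="claude-2.1",
    max_tokens_to_sample=300,
    prompt=f"{HUMAN_PROMPT} What is Matthew effect? {AI_PROMPT}",
)

# Render the reply as Markdown (in a notebook, this must be the cell's last expression).
Markdown(completion.completion)
```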

To learn more about the Claude 2 Python API, read Getting Started with the Claude 2 and Anthropic API.
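If you have existing Completions-style prompts, one way to move them to the Messages API is to split them on the human and assistant markers. The sketch below is a hypothetical helper, not part of the SDK. It inlines the values of HUMAN_PROMPT ("\n\nHuman:") and AI_PROMPT ("\n\nAssistant:") so it runs without the anthropic package, and it drops the empty trailing assistant turn that legacy prompts end with.

```python
import re
from typing import Dict, List

# Values of anthropic.HUMAN_PROMPT and anthropic.AI_PROMPT, inlined so the sketch runs on its own.
HUMAN_PROMPT = "\n\nHuman:"
AI_PROMPT = "\n\nAssistant:"

# The capturing group keeps the markers in re.split's output, so we know whose turn follows.
_MARKERS = re.compile(f"({re.escape(HUMAN_PROMPT)}|{re.escape(AI_PROMPT)})")


def legacy_prompt_to_messages(prompt: str) -> List[Dict[str, str]]:
    """Convert a Completions-style prompt into a Messages API messages list (hypothetical helper)."""
    messages = []
    role = None
    for part in _MARKERS.split(prompt):
        if part == HUMAN_PROMPT:
            role = "user"
        elif part == AI_PROMPT:
            role = "assistant"
        elif role is not None and part.strip():
            # Text before the first marker and empty turns (like the final "Assistant:") are skipped.
            messages.append({"role": role, "content": part.strip()})
    return messages
```

For the Matthew effect prompt above, the helper returns a single user turn, [{"role": "user", "content": "What is Matthew effect?"}], which can be passed as messages to client.messages.create together with max_tokens, the Messages API's replacement for max_tokens_to_sample.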

Claude 3 Messages API

Next, we will test the Anthropic Messages API with the Claude 3 Opus model. It works much like the Completions API, but instead of a single prompt string it requires a messages argument: a list of dictionaries, each containing a role and content.

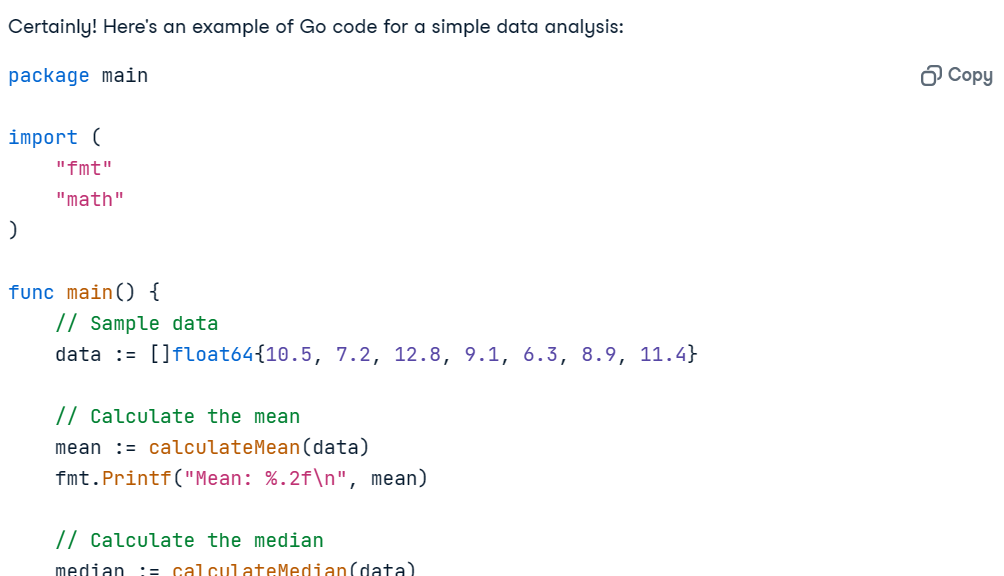

```python
Prompt = "Write the Go code for the simple data analysis."

message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {"role": "user", "content": Prompt}
    ]
)

# The reply is a list of content blocks; the first one holds the generated text.
Markdown(message.content[0].text)
```

Using IPython's Markdown class displays the response as rendered Markdown, so bullet points, code blocks, headings, and links appear cleanly.

The generated code works, and the explanation is clear enough for anyone to follow.
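The Messages API is stateless: every request must carry the whole conversation. To ask a follow-up about the generated Go code, you resend the earlier user and assistant turns along with the new question. Here is a minimal sketch of that pattern, where chat_turn is a hypothetical helper name and the client is the one created earlier:

```python
from typing import Dict, List, Tuple


def chat_turn(
    client,
    history: List[Dict[str, str]],
    user_text: str,
    model: str = "claude-3-opus-20240229",
    max_tokens: int = 1024,
) -> Tuple[str, List[Dict[str, str]]]:
    """Send the full history plus a new user turn; return (reply_text, updated_history)."""
    messages = history + [{"role": "user", "content": user_text}]
    response = client.messages.create(model=model, max_tokens=max_tokens, messages=messages)
    reply = response.content[0].text
    # Append the assistant's answer so the next call carries the whole conversation.
    return reply, messages + [{"role": "assistant", "content": reply}]
```

For example, reply, history = chat_turn(client, [], Prompt) followed by reply, history = chat_turn(client, history, "Now add unit tests for that code.") lets the second request see the first answer. The helper returns a new list instead of mutating history, so earlier snapshots of the conversation stay intact.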

Adding System Prompt

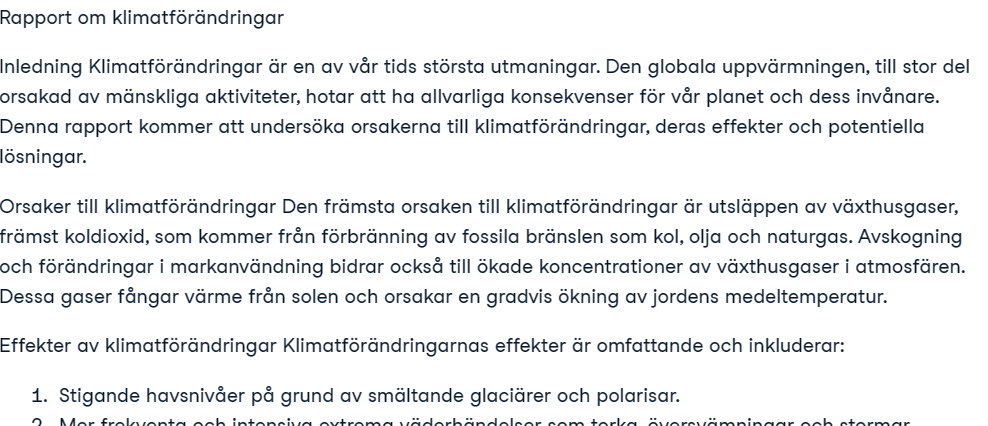

We can customize the response with a system prompt. Here, we instruct the model to respond only in Swedish.

```python
Prompt = "Write a report on climate change."

message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    # The system prompt sets instructions that apply to the whole conversation.
    system="Respond only in Svenska.",
    messages=[
        {"role": "user", "content": Prompt}
    ]
)

Markdown(message.content[0].text)
```

The model generated the entire climate change report in Swedish (Svenska).

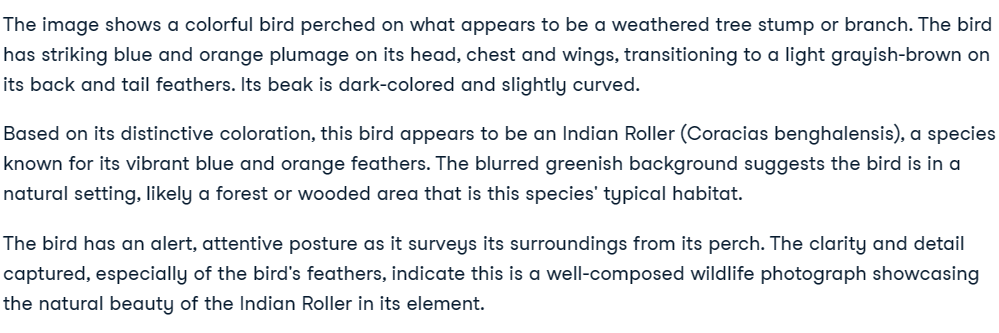

Claude 3 Vision Block

The Messages API includes vision capabilities. In this section, we will use two photos from Pexels.com to generate a response.

- Image 1: Photo by Debayan Chakraborty: https://www.pexels.com/photo/indian-blue-jay-20433278/

- Image 2: Photo by Rachel Xiao: https://www.pexels.com/photo/brown-pendant-lamp-hanging-on-tree-near-river-772429/

We will download the images with the httpx package and convert each one to a base64-encoded string.

```python
import base64

import httpx

media_type = "image/jpeg"

# Download each image and encode its raw bytes as a base64 string.
url_1 = "https://images.pexels.com/photos/20433278/pexels-photo-20433278/free-photo-of-indian-blue-jay.jpeg?auto=compress&cs=tinysrgb&w=1260&h=750&dpr=2"
image_encode_1 = base64.b64encode(httpx.get(url_1).content).decode("utf-8")

url_2 = "https://images.pexels.com/photos/772429/pexels-photo-772429.jpeg?auto=compress&cs=tinysrgb&w=1260&h=750&dpr=2"
image_encode_2 = base64.b64encode(httpx.get(url_2).content).decode("utf-8")
```

Vision with a Single Image

To ask about an image, we pass the Claude 3 API the image data, its encoding type (base64), and its media type. Both the image and the prompt are content blocks in the content list of the user message.

```python
message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": [
                # Image block: base64 data plus its media type.
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": media_type,
                        "data": image_encode_1,
                    },
                },
                # Text block: the question about the image.
                {
                    "type": "text",
                    "text": "Describe the image."
                }
            ],
        }
    ],
)

Markdown(message.content[0].text)
```

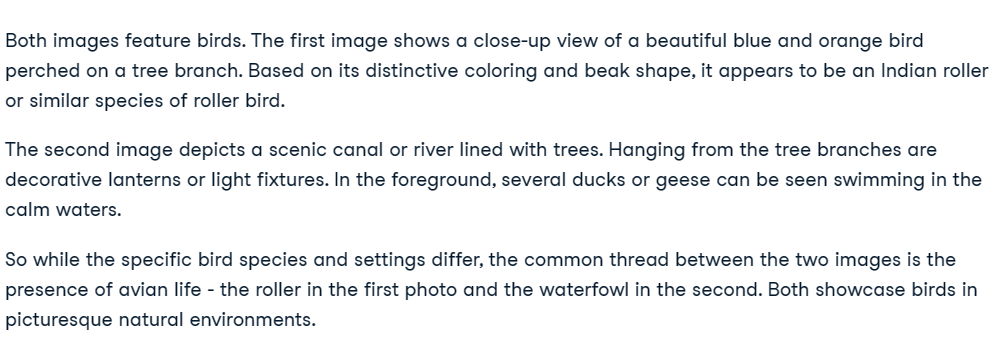
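The example above declares image/jpeg because both Pexels images are JPEGs. If you mix formats, a small helper can read the first few bytes of each file to choose the media type before building the image block. This sketch assumes the four formats the API accepted at the time of writing (JPEG, PNG, GIF, and WebP); sniff_media_type and image_block are hypothetical helper names.

```python
import base64

# Leading "magic bytes" for formats the API accepted for base64 images at the time of writing.
_SIGNATURES = (
    (b"\xff\xd8\xff", "image/jpeg"),
    (b"\x89PNG\r\n\x1a\n", "image/png"),
    (b"GIF87a", "image/gif"),
    (b"GIF89a", "image/gif"),
)


def sniff_media_type(data: bytes) -> str:
    """Guess the image media type from the file header; raise if it is not recognized."""
    for prefix, media_type in _SIGNATURES:
        if data.startswith(prefix):
            return media_type
    # WebP files are RIFF containers with "WEBP" at byte offset 8.
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return "image/webp"
    raise ValueError("unsupported or unrecognized image format")


def image_block(data: bytes) -> dict:
    """Build a Messages API image content block from raw image bytes."""
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": sniff_media_type(data),
            "data": base64.b64encode(data).decode("utf-8"),
        },
    }
```

For example, image_block(httpx.get(url_1).content) returns a dictionary that can replace the image entry in the content list above.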

Vision with Multiple Images

Passing multiple images looks more involved, but the pattern is the same as before.

We add a text block labeling each image ("Image 1:", "Image 2:") right before it, then ask a question about both images.

```python
message = client.messages.create(
    model="claude-3-opus-20240229",
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": [
                # Label, then the first image.
                {
                    "type": "text",
                    "text": "Image 1:"
                },
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": media_type,
                        "data": image_encode_1,
                    },
                },
                # Label, then the second image.
                {
                    "type": "text",
                    "text": "Image 2:"
                },
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": media_type,
                        "data": image_encode_2,
                    },
                },
                # The question that refers to both labeled images.
                {
                    "type": "text",
                    "text": "What do these images have in common?"
                }
            ],
        }
    ],
)

Markdown(message.content[0].text)
```

Perfect. Both images feature birds.
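Writing the labels by hand gets repetitive as the number of images grows. Here is a sketch of a hypothetical helper that interleaves "Image N:" labels with base64 image blocks and appends the question, following the same pattern as the code above. It assumes all images share one media type, as in this example.

```python
from typing import List, Sequence


def labeled_images_content(
    encoded_images: Sequence[str],
    question: str,
    media_type: str = "image/jpeg",
) -> List[dict]:
    """Build a content list: "Image 1:", image 1, "Image 2:", image 2, ..., then the question."""
    content = []
    for index, data in enumerate(encoded_images, start=1):
        content.append({"type": "text", "text": f"Image {index}:"})
        content.append(
            {
                "type": "image",
                "source": {"type": "base64", "media_type": media_type, "data": data},
            }
        )
    content.append({"type": "text", "text": question})
    return content
```

The result plugs straight into the request, e.g. messages=[{"role": "user", "content": labeled_images_content([image_encode_1, image_encode_2], "What do these images have in common?")}].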

Claude 3 Streaming API

Streaming refers to the process of generating text outputs in a continuous fashion, rather than waiting for the entire sequence to be generated before returning any output. With this technique, the output is generated token by token, which helps to minimize the perceived latency.

Instead of create, we will use the stream method to generate a streaming response.

To display the result, we use a with block and a for loop to print each piece of text as soon as it arrives.

```python
Prompt = "Write a Python code for typical data engineering workflow."

completion = client.messages.stream(
    max_tokens=1024,
    messages=[
        {
            "role": "user",
            "content": Prompt,
        }
    ],
    model="claude-3-opus-20240229",
)

# Entering the context manager opens the stream; text_stream yields text chunks as they arrive.
with completion as stream:
    for text in stream.text_stream:
        print(text, end="", flush=True)
```
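The loop above only prints the chunks. If you also want to keep the full response and measure how quickly the first text arrives (the perceived latency that streaming reduces), a small helper can do both. This is a minimal sketch: print_and_collect is a hypothetical name, and because it accepts any iterable of strings, stream.text_stream can be passed to it directly.

```python
import sys
import time
from typing import Iterable, Optional, TextIO, Tuple


def print_and_collect(
    chunks: Iterable[str],
    out: TextIO = sys.stdout,
    start: Optional[float] = None,
) -> Tuple[str, Optional[float]]:
    """Echo streamed text as it arrives; return (full_text, seconds until the first chunk)."""
    # Pass start=time.perf_counter() taken before sending the request to include request latency.
    if start is None:
        start = time.perf_counter()
    first_chunk_after = None
    parts = []
    for text in chunks:
        if first_chunk_after is None:
            first_chunk_after = time.perf_counter() - start
        out.write(text)
        out.flush()
        parts.append(text)
    out.write("\n")
    return "".join(parts), first_chunk_after
```

For example, record start = time.perf_counter() before calling client.messages.stream(...), then inside the with block call full_text, first_chunk_after = print_and_collect(stream.text_stream, start=start). If the stream yields nothing, the second value is None.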

Claude 3 Async API

Synchronous API calls block until each response arrives, so requests run one after another. Asynchronous calls let you issue multiple requests concurrently without blocking, which makes them more efficient and scalable for applications that send many requests.

We will initialize the async client with AsyncAnthropic, define the main function with the async keyword, and run it with asyncio.run.

Note: Jupyter Notebook already runs an event loop, so asyncio.run(main()) raises an error there; use await main() instead.

```python
import asyncio
import os

from anthropic import AsyncAnthropic
from IPython.display import Markdown, display

async_client = AsyncAnthropic(
    api_key=os.environ["ANTHROPIC_API_KEY"],
)

Prompt = "Could you explain what MLOps is and suggest some of the best tools to use for it?"

async def main() -> None:
    # await hands control back to the event loop while the request is in flight.
    message = await async_client.messages.create(
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": Prompt,
            }
        ],
        model="claude-3-opus-20240229",
    )
    display(Markdown(message.content[0].text))

# In Jupyter, replace this line with: await main()
asyncio.run(main())
```
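The main function above sends a single request, so it does not yet benefit from concurrency. The sketch below sends several prompts at once with asyncio.gather while an asyncio.Semaphore caps how many are in flight. It assumes only the async client created above; ask_many and max_concurrency are hypothetical names, not part of the Anthropic SDK.

```python
import asyncio
from typing import List, Sequence


async def ask_many(
    async_client,
    prompts: Sequence[str],
    model: str = "claude-3-opus-20240229",
    max_concurrency: int = 3,
) -> List[str]:
    """Send all prompts concurrently (at most max_concurrency at a time); return replies in order."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def ask(prompt: str) -> str:
        async with semaphore:  # wait here if too many requests are already running
            message = await async_client.messages.create(
                model=model,
                max_tokens=1024,
                messages=[{"role": "user", "content": prompt}],
            )
            return message.content[0].text

    # gather runs the coroutines concurrently and returns results in the order of prompts.
    return list(await asyncio.gather(*(ask(p) for p in prompts)))
```

In a script, call answers = asyncio.run(ask_many(async_client, ["What is MLOps?", "What is DataOps?"])); in Jupyter, use answers = await ask_many(...) instead. Capping concurrency is a design choice that helps keep bursts of requests within your account's rate limits.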

Combining Streaming with Async API

We can combine streaming with async by calling the stream method on the async client. This lets an application handle many requests concurrently while streaming each response, making it more scalable and responsive.

```python
async def main() -> None:
    completion = async_client.messages.stream(
        max_tokens=1024,
        messages=[
            {
                "role": "user",
                "content": Prompt,
            }
        ],
        model="claude-3-opus-20240229",
    )
    # async with / async for consume the stream without blocking the event loop.
    async with completion as stream:
        async for text in stream.text_stream:
            print(text, end="", flush=True)

asyncio.run(main())
```

If you're having difficulty running the code, you can access this DataLab workbook where you can view and execute the code yourself (after making a copy).

Learn how to access OpenAI's large language models by following the tutorial on Using GPT-3.5 and GPT-4 via the OpenAI API in Python.

Final Thoughts

Anthropic is now a consumer- and enterprise-facing company, similar to OpenAI. It provides access to its models to anyone, in some cases for free. You can access its best-performing LLM, Claude 3 Opus, through either a Pro subscription or the API. However, Anthropic does not have OpenAI's ecosystem: it lacks embedding models, image and video generation, text-to-speech, and speech recognition. At launch, its API also lacked some core features the OpenAI API offers, such as function calling.

You can discover OpenAI's new text-to-video model that has taken social media by storm by reading What is Open AI's Sora? How it Works, Use Cases, Alternatives & More.

In this tutorial, we learned about the Claude 3 model family, which is getting a lot of attention in the world of AI. We compared it with Claude 2.1 and GPT-4 on various tasks. Finally, we used the Anthropic Python SDK to generate text, access vision capabilities, stream the response token by token, and use the API asynchronously.

If you are new to AI and want to understand the inner workings of state-of-the-art LLMs, then you should consider taking the AI Fundamentals skill track. It will provide you with practical knowledge on popular AI topics such as ChatGPT, large language models, generative AI, and much more.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.