
If you want to integrate a more natural-sounding and highly accurate language model into your application, Claude 2 by Anthropic is a strong choice. It comes with a public chat application (similar to ChatGPT), an API, and a playground for various versions of the models.

Learn the differences between Claude's and ChatGPT's responses in the blog post Claude vs. ChatGPT: Which AI Assistant Is Best for Data Scientists in 2023?

In this tutorial, we will learn about the Claude 2.x models and how to access them using the Python API. We will cover setting up the API, making basic queries, and handling responses. We will also learn about streaming queries, asynchronous requests, and some more advanced functions of the API. All the source code for this tutorial is available in this DataLab workbook; you can easily create a copy to edit and run the code, all in the browser, without having to install anything on your computer.

By the end, you should have a good understanding of how to integrate Claude 2 into your own application to leverage its advanced natural language capabilities.

For those new to generative AI, our AI Fundamentals skill track helps you learn new technologies and models for preparing for tomorrow's AI world.

Basics of Claude 2

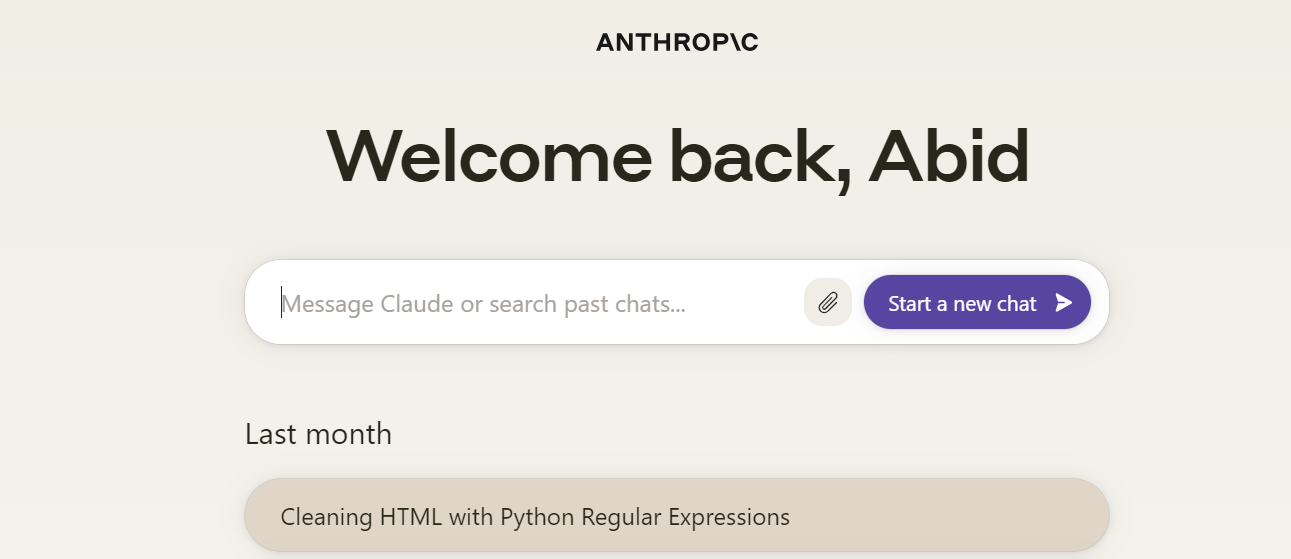

Anthropic recently launched Claude 2, the latest version of their large language model. It has shown improved performance across several tasks with longer and more coherent responses. Moreover, it comes with API access and a public beta website, which is available at claude.ai.

Image from claude.ai

Claude 2 is famous for natural conversation, with enhanced reasoning capabilities, expanded memory, and context tracking while minimizing harmful outputs. It has shown improvements in capabilities such as coding, mathematical reasoning, and logic.

For instance, in legal reasoning, Claude 2 scored 76.5% on the multiple-choice section of the Bar exam, which is 3.5 percentage points higher than Claude 1.3. When evaluated on academic aptitude tests, Claude 2 scores above the 90th percentile on the GRE reading and writing sections and on par with the median applicant on quantitative reasoning.

Introducing Claude 2.1

On November 21st, 2023, Anthropic released Claude 2.1, demonstrating notable improvements that enable more advanced reasoning while reducing hallucinated content. A major focus was enhancing reliability through improved honesty. Claude 2.1 is now more transparent about gaps in its knowledge base instead of attempting to fabricate information.

It comes with a larger context window of 200K tokens, allowing Claude to reference and cross-check long-form content like research papers to maintain factual consistency in lengthy summaries or analyses.

Claude 2.1 has also introduced experimental tool use. It enables Claude 2.1 to retrieve and process data from additional sources with high accuracy while invoking functions and taking actions.

Setting Up Claude 2

Access to the Anthropic API is currently restricted to a select group of individuals and organizations. If you want to be among the first to try it out, you can fill out the application form available on the website. Anthropic is gradually granting access to ensure the safety and scalability of the API. Please be aware that due to the high volume of requests, it might take some time to get access to the API.

Once you receive the approval email from Anthropic and create your account, you will be redirected to the dashboard. The dashboard has a link to the workbench, similar to OpenAI Playground, where you can switch between different models and analyze the responses. Moreover, it also contains links to generate API keys and documentation.

Image from Dashboard

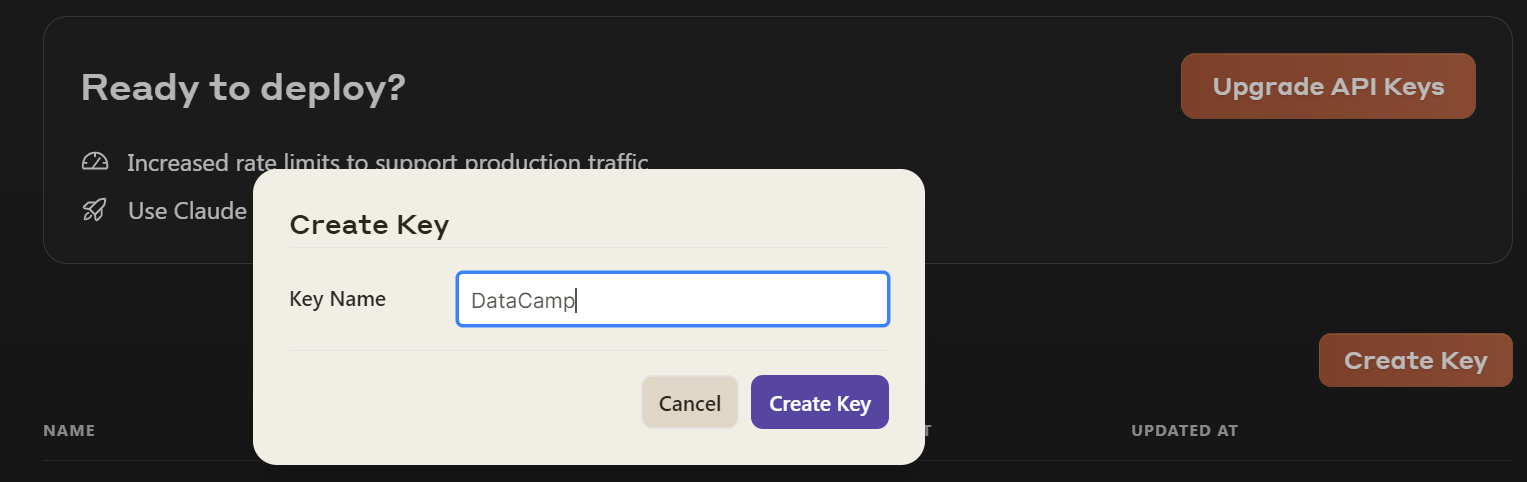

To create an API key, click the "Get API Keys" button and enter a name for the key. Once you have done that, you will receive a key that you can use to generate responses with the Claude 2 API.

Image from Anthropic

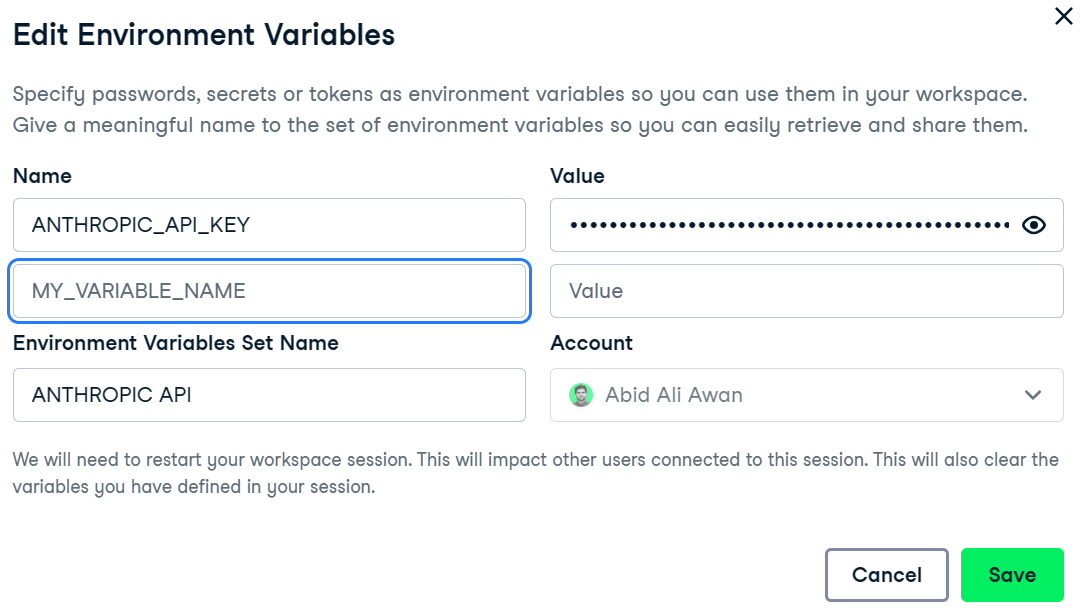

To set up an API key as an environment variable, you can use a Bash command or set it in a DataLab workbook. First, launch a new workbook and then go to the "Environment" button on the left panel. Click on the plus button and add the name and value of the variable. Finally, press save to apply the changes.

Image from DataLab

Note: you can apply the API key directly in the Python function, but it is highly discouraged due to security reasons.
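As a minimal sketch of the environment-variable approach, the helper below reads the key at runtime and fails with a clear message if it is missing. The name `load_api_key` is hypothetical (it is not part of the Anthropic SDK), and `ANTHROPIC_API_KEY` is simply the variable name used in this tutorial.

```python
import os


def load_api_key(var_name="ANTHROPIC_API_KEY"):
    """Return the API key stored in an environment variable.

    `load_api_key` is a hypothetical helper, not part of the Anthropic SDK.
    """
    # Treat a missing or whitespace-only value as "not set".
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Add it in your environment settings "
            "instead of hard-coding the key in source files."
        )
    return key
```

Failing early like this tends to be easier to debug than an authentication error raised later by the first API call.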

To access the Anthropic API in Python, you first have to install the Anthropic SDK for Python using pip.

```bash
pip install anthropic
```

Accessing the Claude 2 API

In this part, we will learn to access the Anthropic API to generate a response using the Claude 2.1 model. You can code along by creating a copy of this workbook: Getting Started with the Claude 2 and the Claude 2 API.

We will import the os module to read the API key and the anthropic library to create the client that talks to the API.

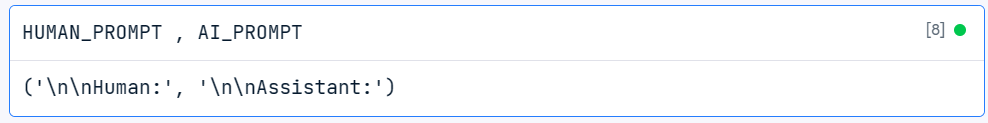

```python
from anthropic import Anthropic, HUMAN_PROMPT, AI_PROMPT
import os

# Read the key from the environment rather than hard-coding it.
anthropic_api_key = os.environ["ANTHROPIC_API_KEY"]

anthropic = Anthropic(
    api_key=anthropic_api_key,
)
```

`HUMAN_PROMPT` and `AI_PROMPT` are string constants used to build the prompt structure that the generation pipeline expects.
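To make the prompt structure concrete, here is a small sketch that builds the same format by hand. It assumes the two constants hold the values `"\n\nHuman:"` and `"\n\nAssistant:"`, which is how the SDK defined them at the time of writing; `build_prompt` is a hypothetical helper, not an SDK function.

```python
# Assumed values of the SDK constants, redefined here so the sketch runs
# without the anthropic package installed.
HUMAN_PROMPT = "\n\nHuman:"
AI_PROMPT = "\n\nAssistant:"


def build_prompt(question):
    """Wrap a single user question in the Human/Assistant turn format."""
    return f"{HUMAN_PROMPT} {question.strip()}{AI_PROMPT}"


prompt = build_prompt("How do I learn Python in a week?")
print(repr(prompt))
# prints '\n\nHuman: How do I learn Python in a week?\n\nAssistant:'
```

The prompt ends with the assistant marker so that the model continues the text as the assistant's turn.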

We will now generate the response by providing the model version, the maximum number of tokens to sample, and the prompt. Make sure your prompt follows the Claude 2.x prompt template.

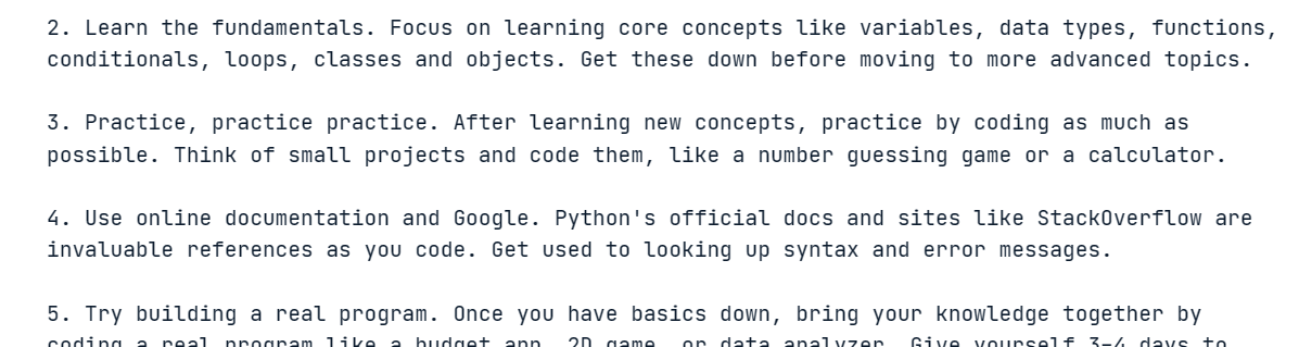

```python
completion = anthropic.completions.create(
    model="claude-2.1",
    max_tokens_to_sample=350,
    prompt=f"{HUMAN_PROMPT} How do I learn Python in a week?{AI_PROMPT}",
)
print(completion.completion)
```

The result is good: Claude 2.1 provides a detailed plan for learning Python in a week.

Anthropic offers support for both synchronous and asynchronous API requests.

Synchronous APIs execute requests sequentially, blocking until a response is received before invoking the next call. On the other hand, asynchronous APIs allow multiple concurrent requests without blocking, handling responses as they complete. This can make asynchronous calls more efficient and scalable when an application issues many requests at once.

To call the API asynchronously, import AsyncAnthropic instead of Anthropic. Define a function using async syntax and use await with each API call.

```python
from anthropic import AsyncAnthropic

anthropic = AsyncAnthropic()


async def main():
    completion = await anthropic.completions.create(
        model="claude-2.1",
        max_tokens_to_sample=300,
        prompt=f"{HUMAN_PROMPT} How many moons does Jupiter have?{AI_PROMPT}",
    )
    print(completion.completion)


# Top-level await works in a Jupyter notebook.
await main()
```

The result is close but not exact. Some sources say 79, but NASA says that Jupiter has 95 moons.

Note: Use `asyncio.run(main())` instead of `await main()` when running the code in a Python .py file instead of a Jupyter Notebook.
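The benefit of the asynchronous client shows up when several requests are in flight at once. The sketch below sends a batch of questions concurrently with `asyncio.gather`. It assumes `client` is an `AsyncAnthropic` instance (or any object exposing the same `completions.create` coroutine); `ask_many` is a hypothetical helper, not an SDK function.

```python
import asyncio


async def ask_many(client, questions, model="claude-2.1", max_tokens=300):
    """Send several questions concurrently and return answers in input order.

    `client` is assumed to behave like an AsyncAnthropic instance.
    """

    async def ask(question):
        completion = await client.completions.create(
            model=model,
            max_tokens_to_sample=max_tokens,
            prompt=f"\n\nHuman: {question}\n\nAssistant:",
        )
        return completion.completion

    # gather() schedules all requests at once instead of awaiting them one by one.
    return await asyncio.gather(*(ask(q) for q in questions))
```

In a notebook this could be called as `answers = await ask_many(AsyncAnthropic(), ["Question one?", "Question two?"])`; in a .py file, wrap the call in `asyncio.run(...)`. Keep your account's rate limits in mind when choosing the batch size.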

LLM Streaming with Claude 2

Streaming has become an essential technique for large language models, offering several benefits over traditional processing methods. Instead of waiting for the entire response, streaming allows for the processing of output as soon as it becomes available. This approach reduces perceived latency by returning the output of the language model token by token, rather than all at once.

To activate streaming, set the `stream` argument to `True` in the `completions.create` call.

```python
anthropic = Anthropic()

stream = anthropic.completions.create(
    prompt=f"{HUMAN_PROMPT} Could you please write a Python code to train a simple classification model?{AI_PROMPT}",
    max_tokens_to_sample=350,
    model="claude-2.1",
    stream=True,
)

# Print each chunk as soon as it arrives.
for completion in stream:
    print(completion.completion, end="", flush=True)
```

The response now arrives piece by piece and starts appearing much sooner, as if Claude 2.1 were typing it out.

Similarly, we can also run streaming in `async` mode. Note that we have just added `await` in front of the completion function and `async` before the for loop.

```python
anthropic = AsyncAnthropic()

stream = await anthropic.completions.create(
    prompt=f"{HUMAN_PROMPT} Please write a blog post on Neural Networks and use Markdown format. Kindly make sure that you proofread your work for any spelling, grammar, or punctuation errors.{AI_PROMPT}",
    max_tokens_to_sample=350,
    model="claude-2.1",
    stream=True,
)

# Iterate over the chunks asynchronously as they arrive.
async for completion in stream:
    print(completion.completion, end="", flush=True)
```

The model does a good job of writing a properly formatted blog post.
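Streaming prints the text as it arrives, but an application often needs the complete response afterwards as well. The sketch below accumulates the streamed chunks while echoing them. It assumes `stream` is the iterable returned by the synchronous `completions.create(..., stream=True)` call, whose items expose a `.completion` string; `collect_stream` is a hypothetical helper.

```python
def collect_stream(stream, echo=True):
    """Print streamed chunks as they arrive and return the full text.

    `stream` is assumed to yield objects with a `.completion` string,
    as the synchronous streaming call in this tutorial does.
    """
    parts = []
    for event in stream:
        if echo:
            print(event.completion, end="", flush=True)
        parts.append(event.completion)
    # Join once at the end rather than concatenating inside the loop.
    return "".join(parts)
```

For the asynchronous client, the same idea would apply with `async def` and `async for`.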

Check out the list of 7 essential Generative AI tools that can help you build fully functional, context-aware chatbot applications.

Advanced Features and Customizations

The Anthropic API provides several advanced features and customization options beyond basic response generation:

- Error handling: Specific exception types like APIConnectionError and APIStatusError allow handling connection issues and HTTP errors cleanly.

- Default headers: The `anthropic-version` header is sent automatically to specify the API version. This can be customized by changing the `default_headers` argument in the `anthropic` client object.

- Logging: Debug logging can be enabled by setting the ANTHROPIC_LOG environment variable. This aids in troubleshooting.

- HTTP client: The HTTPX client powering requests can be configured for proxies, transports, and additional advanced functionality.

- Resource management: You can close the client manually using the `.close()` method or with a context manager that closes automatically upon exiting.

- Prompting techniques: API documentation provides multiple keywords for optimizing your inputs for text, structure documents, code snippets, and RAG results.

- Prompt validation: The API performs basic prompt sanitization and validation to ensure that the prompt is well formed for the Claude 2 model.
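As one illustration of the resource-management point in the list above, the sketch below sends a single request and then closes the client, even if the request raises. It relies on the standard library's `contextlib.closing`, which calls `.close()` on exit, and it assumes `client` behaves like the synchronous `Anthropic` client; `ask_and_close` is a hypothetical helper.

```python
from contextlib import closing


def ask_and_close(client, question, model="claude-2.1", max_tokens=300):
    """Send one question, then release the client's HTTP resources.

    `client` is assumed to expose `completions.create` and `.close()`.
    """
    # closing() calls client.close() on exit, even if the request fails.
    with closing(client):
        completion = client.completions.create(
            model=model,
            max_tokens_to_sample=max_tokens,
            prompt=f"\n\nHuman: {question}\n\nAssistant:",
        )
    return completion.completion
```

This pattern suits short scripts; a long-running application would usually keep one client open and reuse it across requests.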

To learn more about advanced functions, check out Anthropic API documentation.

Practical Applications of Claude 2 API

The Claude 2 API has practical applications across many industries and domains. In content creation and copywriting, it can assist in drafting high-quality articles, marketing materials, and creative writing. It can also help in the following areas:

- Language translation: you can build applications that provide real-time translation leveraging advanced multilingual capabilities.

- Education: it is great at personalized learning. Students can take advantage of it to learn about complex topics faster.

- Customer support: it provides enhanced context-aware responses that are more accurate and human-like.

- Programming assistance: generate code snippets, debug programs, and explain complex concepts to speed up development.

- Creative arts: collaborate with artists to generate content like musical compositions.

- Legal/compliance: draft documents, summarize laws, and ensure regulatory compliance.

Conclusion

The Python API provides a powerful way to access Anthropic's state-of-the-art conversational AI models. We covered the basics of getting set up with access keys, making synchronous and asynchronous API calls, streaming responses, and some advanced features. Additionally, we learned about the practical applications of Claude 2.

While the API is still in limited availability, Anthropic is gradually granting more access over time. As Claude 2 continues to advance, the Python API will enable developers to easily integrate its cutting-edge capabilities into a wide range of products and services.

I hope you found this introduction to the Claude 2 API useful! Feel free to experiment with the code examples to see Claude 2's impressive skills firsthand. Check out the list of 5 Projects You Can Build with Generative AI Models to get inspired to build your next generative AI project using Claude 2.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.