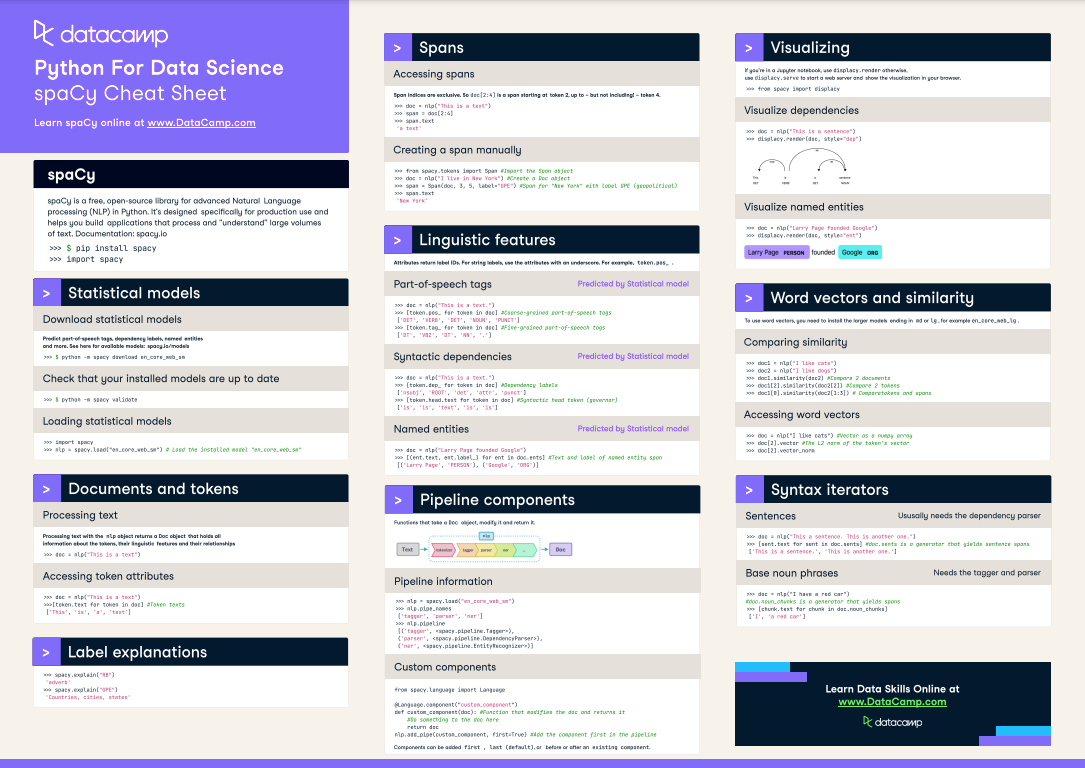

spaCy Cheat Sheet: Advanced NLP in Python

Check out the first official spaCy cheat sheet! A handy two-page reference to the most important concepts and features.

Updated Aug 2021 · 6 min read
