
Language is central to human communication, and automating language tasks can bring immense benefits. Natural language processing (NLP) models struggled for years to capture the nuances of human language effectively, until a breakthrough arrived: the attention mechanism.

The attention mechanism rose to prominence in 2017 with the paper Attention Is All You Need. Unlike traditional methods that treat words in isolation, attention assigns a weight to each word according to its relevance to the current task. This lets the model capture long-range dependencies, analyze local and global context simultaneously, and resolve ambiguities by attending to the informative parts of the sentence.

Consider the following sentence: "Miami, nicknamed the 'Magic City', has beautiful white-sand beaches." Traditional models would process each word in order. The attention mechanism, however, behaves more like our brain: it assigns each word a score based on its relevance to what is currently in focus. Words like "Miami" and "beaches" matter more when the focus is location, so they would receive higher scores.

In this article, we give an intuitive explanation of the attention mechanism. You can also find a more technical approach in this tutorial on how transformers work. Let's dive right in!

Traditional language models

Let's begin our journey toward understanding the attention mechanism by considering the broader context of language models.

The basics of language processing

Language models process language by trying to understand its grammatical structure (syntax) and its meaning (semantics). The goal is to produce output with correct syntax and semantics that is relevant to the input.

Language models rely on a series of techniques to break down and understand text:

- Parsing: This technique analyzes sentence structure, assigning a part of speech (noun, verb, adjective, etc.) to each word and identifying grammatical relationships.

- Tokenization: The model splits sentences into individual words (tokens), creating the building blocks for semantic analysis (you can learn more about tokenization in a separate article).

- Stemming: This step reduces words to their root form (for example, "walking" becomes "walk"). This ensures that the model treats similar words consistently.

- Entity recognition and relation extraction: These techniques work together to identify and categorize specific entities (such as people or places) within the text and to uncover the relationships between them.

- Word embeddings: Finally, the model creates a numerical representation (a vector) for each word that captures its meaning and its connections to other words. This allows the model to process the text and perform tasks such as translation or summarization.
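To make a few of these steps concrete, here is a minimal sketch of tokenization, stemming, and embedding lookup in plain Python. The suffix-stripping rule and the `TOY_EMBEDDINGS` table are hypothetical simplifications made up for illustration. Real pipelines use trained tokenizers, proper stemmers, and learned vectors.

```python
import re

# Hypothetical toy embedding table; real models learn these vectors from data.
TOY_EMBEDDINGS = {
    "walk": [0.9, 0.1],
    "dog": [0.2, 0.8],
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def naive_stem(token):
    """Strip a few common English suffixes (far cruder than a real stemmer)."""
    for suffix in ("ing", "ed", "s"):
        # Only strip when at least three characters remain, so "is" stays "is".
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def embed(token):
    """Look up the vector of the token's stem; unknown stems map to zeros."""
    return TOY_EMBEDDINGS.get(naive_stem(token), [0.0, 0.0])


tokens = tokenize("The dogs walked, and she is walking.")
stems = [naive_stem(t) for t in tokens]
vectors = [embed(t) for t in tokens]
```

Because "walked" and "walking" share the stem "walk", they receive the same vector, which is the consistency that stemming is meant to provide.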

The limitations of traditional models

Although traditional language models paved the way for advances in NLP, they faced challenges in fully grasping the complexities of natural language:

- Limited context: Traditional models often represented text as a set of individual tokens, without capturing the broader context of a sentence. This made it hard to understand how words far apart in a sentence could be related.

- Short context window: The context window these models considered during processing was often small. As a result, they could not capture long-range dependencies, in which words far apart in a sentence influence each other's meaning.

- Word disambiguation problems: Traditional models struggled to disambiguate words with multiple meanings using only the immediately surrounding words. They lacked the ability to consider the broader context to determine the intended meaning.

- Generalization challenges: Because of limitations in network architecture and in the amount of available training data, these models often struggled to adapt to new or unseen situations (out-of-domain data).

What is attention in language models?

Unlike traditional models that treat words in isolation, attention lets language models take context into account. Let's see what it is all about!

Attention Is All You Need

The game changer for the field of NLP came in 2017 with the paper Attention Is All You Need, which put the attention mechanism at the center of model design.

The paper proposes a new architecture called the transformer. Unlike older approaches such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), transformers rely on attention mechanisms rather than recurrence or convolution.

By solving many of the problems of traditional models, transformers (and attention) have become the foundation of many of today's most popular large language models (LLMs), such as OpenAI's GPT-4 and ChatGPT.

How does attention work?

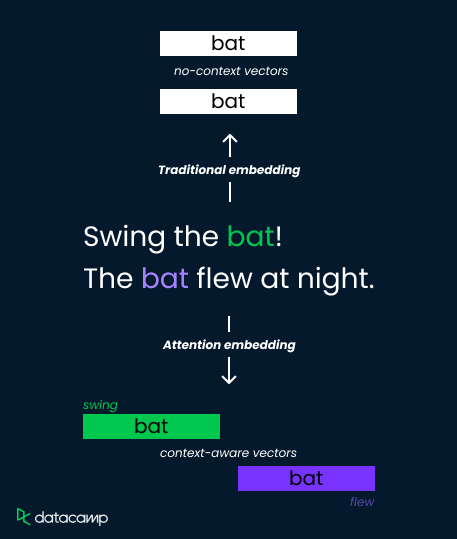

Consider the word "bat" in these two sentences:

- "Swing the bat!"

- "The bat flew at night."

Traditional embedding methods assign a single vector representation to "bat," which limits their ability to distinguish between meanings. Attention mechanisms address this by computing context-dependent weights.

They analyze the surrounding words ("swing" versus "flew") and compute attention scores that determine relevance. These scores are then used to weight the embedding vectors, yielding distinct representations of "bat" as a piece of sports equipment (higher weight on "swing") or as a flying creature (higher weight on "flew").

This allows the model to capture semantic nuances and improves comprehension.
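The "bat" example can be sketched with a few lines of Python. The two-dimensional embeddings below are invented for illustration (the first dimension loosely means "sports", the second "animal"), and the raw embeddings are used directly as queries, keys, and values. A trained model would instead learn its vectors and projections from data.

```python
import math

# Hypothetical 2-D embeddings: [sports-ness, animal-ness]. Values are made up.
EMBEDDINGS = {
    "swing": [1.0, 0.0],
    "flew": [0.0, 1.0],
    "night": [0.1, 0.6],
    "bat": [0.5, 0.5],  # static vector: ambiguous between both senses
    "the": [0.1, 0.1],
    "at": [0.1, 0.1],
}


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def contextual_vector(word, sentence):
    """Re-represent `word` as an attention-weighted mix of the sentence's vectors."""
    query = EMBEDDINGS[word]
    vectors = [EMBEDDINGS[w] for w in sentence]
    # Relevance of each word to the query: a plain dot product.
    scores = [sum(q * k for q, k in zip(query, vec)) for vec in vectors]
    weights = softmax(scores)
    context = [
        sum(w * vec[d] for w, vec in zip(weights, vectors))
        for d in range(len(query))
    ]
    return context, weights


sports_bat, sports_weights = contextual_vector("bat", ["swing", "the", "bat"])
animal_bat, animal_weights = contextual_vector(
    "bat", ["the", "bat", "flew", "at", "night"]
)
```

With these toy numbers, `sports_bat` leans toward the first dimension and `animal_bat` toward the second, even though both start from the same static vector for "bat".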

The importance of attention in LLMs

Let's now build on our intuitive understanding of attention and see how the mechanism goes beyond traditional word embeddings to improve language understanding. We will also look at some real-world applications of attention.

Beyond traditional word embeddings

Traditional word embedding techniques, such as Word2Vec and GloVe, represent words as fixed-dimension vectors in a semantic space, based on co-occurrence statistics in a large text corpus.

Although these embeddings capture some semantic relationships between words, they are not context-sensitive. The same word receives the same embedding regardless of its context within a sentence or document.

This limitation poses challenges in tasks that require a nuanced understanding of language, especially when words carry different meanings in different contexts. The attention mechanism addresses this problem by letting models focus selectively on the relevant parts of the input sequence, which builds context sensitivity into the representation learning process.

Improving language understanding

Attention lets models handle the nuances and ambiguities of language, which makes them more effective at processing complex text. Some of its main advantages are:

- Dynamic weighting: Attention lets models dynamically adjust the importance of particular words according to their relevance in the current context.

- Long-range dependencies: It makes it possible to capture relationships between words that are far apart.

- Contextual understanding: By producing contextualized representations, it helps resolve ambiguities and makes models adaptable to a variety of downstream tasks.

Applications and impact

The impact of attention-based language models has been tremendous. Thousands of people use applications built on top of attention-based models. Some of the most popular applications are:

- Machine translation: Systems like Google Translate use attention to focus on the relevant parts of the source sentence and produce translations that are more accurate in context.

- Text summarization: Attention can locate the important phrases or sentences in a document, enabling more informative and concise summaries.

- Question answering: Attention helps deep learning models align the words of the question with the relevant parts of the context, which makes it possible to extract accurate answers.

- Sentiment analysis: Sentiment analysis models use attention to pick out sentiment-bearing words and their contextual meaning.

- Content generation: Content generation models use attention to produce coherent, contextually relevant text, ensuring that the output stays consistent with the input context.

Advanced attention mechanisms

Now that we have an intuition for how attention works, let's look at self-attention and multi-head attention.

Self-attention and multi-head attention

Self-attention lets a model attend to different positions of its own input sequence in order to compute a representation of that sequence. The model weighs the importance of each word in the sequence relative to the others, capturing the dependencies between the words of the input. The mechanism has three main elements:

- Query: A vector that represents the current focus, or the question the model is asking about a particular word in the sequence. It is like a flashlight the model shines on one word to understand its meaning in context.

- Key: Every word has a label or reference point, and the key vector acts as that label. The model compares the query vector with all the key vectors to see which words are most relevant to answering the question about the word in focus.

- Value: This vector holds the actual information associated with each word. Once the model has identified the relevant words through the key comparisons, it retrieves the corresponding value vectors to obtain the details it needs.

Attention scores can be computed as the dot product between the query and the key vectors. In the transformer, these scores are scaled and normalized with a softmax, and the resulting weights are then multiplied by the value vectors to obtain a weighted sum of values.
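The query, key, and value steps above can be sketched as scaled dot-product attention, following the formulation softmax(QK^T / sqrt(d_k))V from Attention Is All You Need. This is a minimal pure-Python sketch under stated assumptions: the function name and the small `Q`, `K`, `V` matrices are made up for illustration, and real implementations use batched tensor operations and learned projections.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # 1. Score the query against every key, scaled by sqrt(d_k).
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        # 2. Normalize the scores into attention weights.
        weights = softmax(scores)
        # 3. Output is the weighted sum of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Illustrative inputs: each query points strongly at one key.
Q = [[10.0, 0.0], [0.0, 10.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
outputs, weights = scaled_dot_product_attention(Q, K, V)
```

Here the first query matches the first key far better than the second, so nearly all of its weight goes to the first value vector and `outputs[0]` ends up close to `[1.0, 2.0]`.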

Multi-head attention is an extension of the self-attention mechanism. It increases the model's capacity to capture diverse contextual information by attending to different parts of the input sequence simultaneously. It does this by running multiple self-attention operations in parallel, each with its own set of learned query, key, and value transformations.

Multi-head attention leads to finer-grained contextual understanding, greater robustness, and greater expressiveness.
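As a rough sketch of the idea, the code below runs several attention heads in parallel, each with its own query, key, and value projection, then concatenates the head outputs and mixes them with a final projection. The projection matrices are random stand-ins for weights that a real model would learn, and the function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention over lists of row vectors."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        scores = [sum(x * y for x, y in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


def random_matrix(rows, cols, rng):
    """Random stand-in for a learned projection matrix."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def multi_head_attention(x, num_heads, rng):
    d_model = len(x[0])
    d_head = d_model // num_heads
    head_outputs = []
    for _ in range(num_heads):
        # Each head gets its own query, key, and value projections.
        w_q, w_k, w_v = (random_matrix(d_model, d_head, rng) for _ in range(3))
        head_outputs.append(attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)))
    # Concatenate the heads position by position, then mix them.
    concat = [sum((head[i] for head in head_outputs), []) for i in range(len(x))]
    w_o = random_matrix(num_heads * d_head, d_model, rng)
    return matmul(concat, w_o)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
y = multi_head_attention(x, num_heads=2, rng=rng)
```

The output `y` has the same shape as the input (three positions, four dimensions each), so the layer can be stacked. Because each head uses different projections, each can attend to a different pattern in the same sequence.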

Attention: Challenges and solutions

Although the attention mechanism has several advantages, it also brings its own set of challenges, which ongoing research may be able to address.

Computational complexity

Attention mechanisms compute pairwise similarities between all the tokens in the input sequence, which results in quadratic complexity with respect to sequence length. This can be computationally expensive, especially for long sequences.

Several techniques have been proposed to reduce this cost, including sparse attention mechanisms, approximate attention methods, and efficient attention mechanisms such as the locality-sensitive hashing used by the Reformer model.
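To get a feel for the quadratic cost, the sketch below counts how many query-key pairs are scored under full attention and under a simple sliding-window pattern, where each token only attends to neighbors within a fixed distance. The sliding window is just one illustrative form of sparse attention, chosen here for simplicity. It is not the locality-sensitive hashing scheme used by Reformer, and the function names are hypothetical.

```python
def full_attention_pairs(n):
    """Every token attends to every token: n * n scored pairs."""
    return n * n


def local_attention_pairs(n, window):
    """Each token attends only to tokens at most `window` positions away."""
    return sum(
        min(n - 1, i + window) - max(0, i - window) + 1
        for i in range(n)
    )


small_full = full_attention_pairs(8)
small_local = local_attention_pairs(8, window=1)

long_full = full_attention_pairs(1000)
long_local = local_attention_pairs(1000, window=8)
```

For a fixed window, the number of scored pairs grows roughly linearly with sequence length instead of quadratically, at the price of not relating distant tokens directly.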

Overfitting in attention

Attention mechanisms can overfit to noisy or irrelevant information in the input sequence, which leads to suboptimal performance on unseen data.

Regularization techniques such as dropout and layer normalization can help prevent overfitting in attention-based models. In addition, techniques such as attention dropout and attention masking have been proposed to encourage the model to focus on relevant information.
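Here is a minimal sketch of attention masking and attention dropout applied to a single row of attention scores. The function names are hypothetical, the mask is assumed to leave at least one position visible, and the dropout shown is the common "inverted" variant that rescales the surviving weights. Deep learning frameworks implement the same ideas on whole tensors.

```python
import math
import random


def masked_softmax(scores, mask):
    """Softmax over `scores`, ignoring positions where `mask` is False.

    Assumes at least one position is left unmasked.
    """
    masked = [s if keep else float("-inf") for s, keep in zip(scores, mask)]
    peak = max(masked)
    # exp(-inf) evaluates to 0.0, so masked positions get zero weight.
    exps = [math.exp(s - peak) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]


def attention_dropout(weights, rate, rng):
    """Randomly zero attention weights during training (inverted dropout)."""
    keep_prob = 1.0 - rate
    return [w / keep_prob if rng.random() < keep_prob else 0.0 for w in weights]


scores = [2.0, 1.0, 3.0]
mask = [True, True, False]  # e.g. the last position is padding
weights = masked_softmax(scores, mask)

rng = random.Random(0)  # fixed seed so the sketch is reproducible
dropped = attention_dropout(weights, rate=0.5, rng=rng)
```

Masking removes positions the model should never look at, while dropout randomly hides otherwise valid positions during training so the model does not come to depend on any single one.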

Interpretability and explainability

Understanding how attention mechanisms work and interpreting their outputs can be challenging, especially in complex models with multiple layers and attention heads. This raises concerns about the ethics of this new technology. You can learn more about AI ethics in our course, or by listening to this podcast with AI researcher Dr. Joy Buolamwini.

Methods have been developed to visualize attention weights and interpret their meaning, with the aim of making attention-based models more interpretable. In addition, techniques such as attention attribution aim to identify the contributions of individual tokens to the model's predictions, improving explainability.
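As a very simple illustration of visualizing attention weights, the sketch below renders a small attention matrix as text bars. The `example_weights` values are made up for illustration and do not come from a trained model, and `render_attention` is a hypothetical helper. Dedicated tools draw heatmaps across all layers and heads.

```python
def render_attention(tokens, weights, width=20):
    """Render each (attending token, attended token) weight as a text bar."""
    lines = []
    for token, row in zip(tokens, weights):
        for target, w in zip(tokens, row):
            bar = "#" * round(w * width)
            lines.append(f"{token:>6} -> {target:<6} {w:.2f} {bar}")
    return "\n".join(lines)


tokens = ["swing", "the", "bat"]
# Hypothetical attention weights: one row per attending token, rows sum to 1.
example_weights = [
    [0.60, 0.10, 0.30],
    [0.30, 0.40, 0.30],
    [0.50, 0.10, 0.40],
]
report = render_attention(tokens, example_weights)
print(report)
```

Longer bars mark the token pairs with higher weight, which gives a quick, if coarse, view of where the model is looking.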

Scalability and memory constraints

Attention mechanisms consume a great deal of memory and compute, which makes it hard to scale them to larger models and datasets.

Techniques for scaling attention-based models, such as hierarchical attention, memory-efficient attention, and sparse attention, aim to reduce memory consumption and computational overhead while preserving model performance.

Attention: Summary

Let's summarize what we have learned so far by focusing on the differences between traditional and attention-based models:

| Feature | Attention-based models | Traditional NLP models |
|---|---|---|
| Word representation | Context-sensitive embedding vectors (dynamically weighted by attention scores) | Static embedding vectors (a single vector per word, regardless of context) |
| Focus | Considers the meaning of surrounding words (looking at the broader context) | Treats each word independently |
| Strengths | Captures long-range dependencies, resolves ambiguity, understands nuance | Simpler, computationally cheaper |
| Weaknesses | Can be computationally expensive | Limited ability to understand complex language, struggles with context |
| Underlying mechanism | Encoder-decoder networks with attention (various architectures) | Techniques such as parsing, stemming, named entity recognition, and word embeddings |

Conclusion

In this article, we explored the attention mechanism, an innovation that revolutionized NLP. Unlike earlier methods, attention lets language models focus on the crucial parts of a sentence while taking context into account. This allows them to handle complex language, long-range connections, and word ambiguity.

You can keep learning about the attention mechanism by:

- Understanding the broader context by taking our Large Language Models (LLMs) course.

- Building a transformer with PyTorch.