
The AI race in February 2026 has been unusually intense. After Anthropic released Claude Opus 4.6 and Claude Sonnet 4.6 within two weeks of each other, Google countered with Gemini 3.1 Pro.

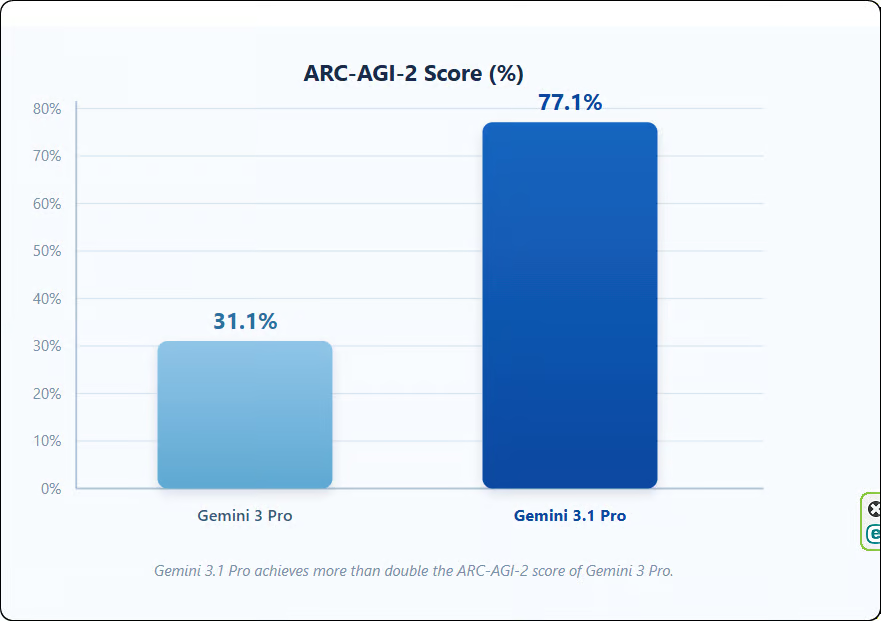

Google presents this as a significant release, mainly because Gemini 3.1 Pro more than doubled Gemini 3 Pro's reasoning performance as measured by the ARC-AGI-2 benchmark, where it achieved a verified score of 77.1%.

ARC-AGI-2 is important because it tests novel pattern recognition rather than memorized knowledge. It’s designed so that models can't simply train their way to a high score in the traditional sense, so a doubling on this test means more than a doubling on, say, MMLU. We'll come back to why this result matters later on, and even test it for ourselves.

To learn more about Google's AI ecosystem, I recommend checking out our NotebookLM guide and our Gemini CLI tutorial.


What Is Gemini 3.1 Pro?

Gemini 3.1 Pro is Google's latest flagship model, released in preview on February 19, 2026. It's the first time Google has used a ".1" version increment (every previous mid-cycle update used ".5"), signaling a focused intelligence upgrade rather than a broad feature expansion. This makes sense because Gemini 3 was already a sweeping release that introduced a new multimodal architecture.

Google's launch post explains that the intelligence powering Deep Think's recent scientific breakthroughs, including disproving a decade-old math conjecture, has now been distilled into 3.1 Pro for everyday use.

Deep Think was technically available before, but only if you had an Ultra subscription. Google would have you believe the goal was always to bring this reasoning to everyday use at scale, but it’s only with Gemini 3.1 Pro that it looks like they're finally making good on that. Perhaps Google discovered that the $249.99/month Ultra subscription was more than people were willing to pay.

What's New With Gemini 3.1 Pro?

Here are the key improvements in this release:

Much stronger reasoning

As I mentioned in the intro, the big change is in abstract and multi-step reasoning. Gemini 3.1 Pro’s performance on ARC-AGI-2 more than doubled relative to Gemini 3 Pro in roughly three months.

Beyond the ARC-AGI-2 improvements, the model hit the highest score ever recorded on GPQA Diamond, a graduate-level science benchmark.

Gemini 3.1 Pro always engages in "dynamic thinking": it automatically adjusts how much chain-of-thought reasoning it applies to the complexity of the task.

The API's thinking_level parameter now offers four settings: low, medium (new in 3.1), high, and max. The new medium setting gives developers a middle ground between speed and depth.

Much better agentic performance

One of the clearer patterns in this release is how much the agentic benchmarks have moved. The model now scores much higher on autonomous web research, long-horizon multi-step tasks, and terminal coding than its predecessor.

For anyone building workflows where the model operates with minimal supervision (debugging, web research, data gathering), these improvements matter in practice.

Agentic performance roughly doubled compared to Gemini 3 Pro in some categories, and the model now leads GPT-5.2 and Claude on most of these benchmarks.

Code-based animated output

This one caught my attention. Google highlighted that Gemini 3.1 Pro can generate animated SVGs and interactive dashboards entirely through code output. Because these are mathematical definitions rather than rendered images, they scale without quality loss and are far smaller than video files.

The examples from the launch are impressive: a portfolio website generated from the themes of Wuthering Heights, a live aerospace dashboard pulling ISS telemetry, and a 3D starling murmuration with hand-tracking and a generative audio score.

These are code outputs, not images, which means they're editable, embeddable, and lightweight.

Output truncation finally fixed

This is less flashy but probably more immediately relevant for anyone who's used Gemini 3 Pro in production. A recurring complaint with the previous model was that it would cut off long responses mid-generation.

User reports after the launch indicate that 3.1 Pro resolves this. One user reported generating a massive response in a single run without any truncation.

JetBrains also reported quality improvements with the new model, noting it delivers "more reliable results" with "fewer output tokens" needed. That efficiency gain, combined with the truncation fix, makes a real difference for long-form generation.
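If you want to confirm this in your own pipeline rather than rely on user reports, the Gemini API records why generation stopped in each candidate's finishReason field, and a value of MAX_TOKENS means the response was cut off at the output limit. Here's a minimal Python check over a REST-style JSON response. The helper name was_truncated and the sample payloads are hypothetical, hand-made for illustration rather than taken from real logs.

```python
def was_truncated(response: dict) -> bool:
    """True if the first candidate stopped because it hit the output-token limit."""
    candidates = response.get("candidates") or []
    return bool(candidates) and candidates[0].get("finishReason") == "MAX_TOKENS"


# Hand-made examples shaped like REST generateContent responses (not real logs).
complete = {"candidates": [{"finishReason": "STOP"}]}
cut_off = {"candidates": [{"finishReason": "MAX_TOKENS"}]}

print(was_truncated(complete), was_truncated(cut_off))  # False True
```

Logging this flag for every long-form request gives you your own truncation rate to compare across model versions.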

Gemini 3.1 Pro Benchmarks

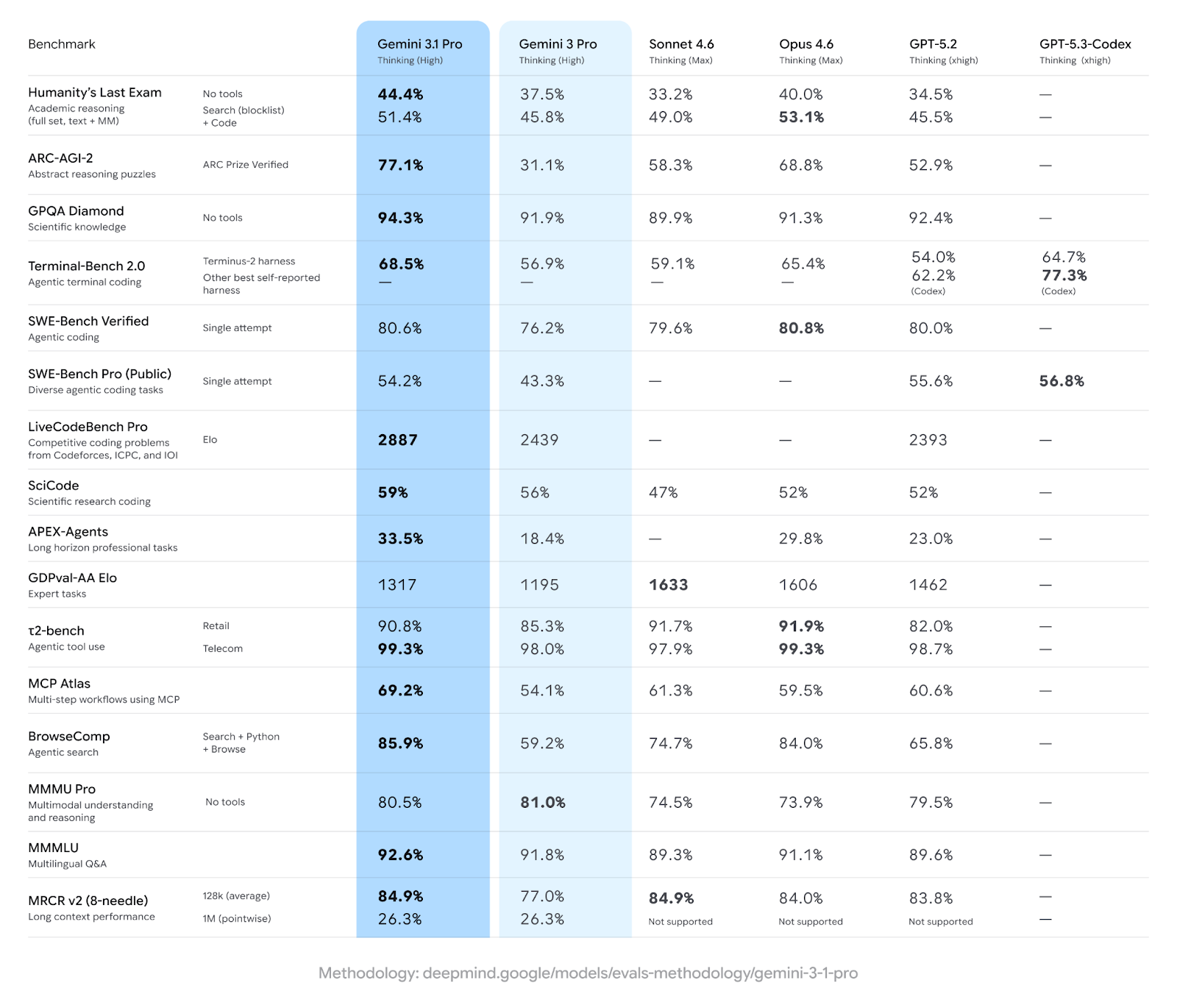

Google's comparison shows Gemini 3.1 Pro leading on 13 of the 16 benchmarks it reports, including tests of abstract reasoning, agentic tasks, and graduate-level science. (Gemini 3 Pro was already leading on a handful of these anyway.)

Here's how the latest model stacks up against the other major releases of February 2026.

As you can see, and as I mentioned earlier, the abstract reasoning result is the most striking. Gemini 3.1 Pro leads by a clear margin over Opus 4.6, which itself leads by a clear margin over GPT-5.2. This represents a real shift from where frontier models stood just a year ago.

Where Claude still holds an edge

I want to be upfront about this because it's easy to get swept up in the big numbers. Claude models really do lead in some important areas:

- Real-world software engineering: Opus 4.6 narrowly takes the win on SWE-bench Verified. (They're nearly tied, but Anthropic gets the flag.)

- Tool-augmented reasoning: Opus 4.6 beats Gemini 3.1 Pro when both models can use external tools, which suggests stronger tool integration.

- Knowledge-intensive work: Sonnet 4.6 leads by a wide margin on GDPval-AA, which measures economically valuable tasks like financial modeling and research. This is a gap worth paying attention to.

- Computer use via GUI: Claude leads clearly here, with no published Gemini equivalent.

The honest picture: Gemini 3.1 Pro is the best model right now for abstract reasoning, scientific knowledge, and multimodal breadth. Claude models remain ahead for knowledge work, tool orchestration, and operating software via a graphical interface.

Testing Gemini 3.1 Pro

To see how these improvements translate to real-world reasoning, I ran three tests designed to probe different aspects of abstract thinking:

Test 1: A symbol sequence puzzle

To see how Gemini 3.1 Pro handles ARC-AGI-2-style reasoning, I used a simple rule-inference puzzle. The model has to infer both a color rule and a shape rule from examples, without being told the rules explicitly.

Here is my prompt:

You are shown these transformations:

- [Red Circle] → [Blue Triangle]

- [Blue Square] → [Red Circle]

- [Red Square] → [Blue Circle]

- [Blue Triangle] → ?

Gemini 3.1 Pro correctly answered [Red Square]. The model identified both rules independently: colors toggle (Red ↔ Blue) and shapes cycle (Square → Circle → Triangle → Square). It then traced through the logic step by step, showing how Blue Triangle becomes Red (color toggle) and Square (next in shape cycle), which is exactly the kind of compositional reasoning this test targets.
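To check the answer independently, here is a minimal Python sketch that encodes the two rules (a Red ↔ Blue color toggle and a Square → Circle → Triangle → Square shape cycle), confirms they reproduce all three example transformations, and then applies them to the query. The rule tables and the transform helper are my own encoding of the puzzle, not something the model produced.

```python
# The two independent rules inferred from the examples.
COLOR_TOGGLE = {"Red": "Blue", "Blue": "Red"}
SHAPE_CYCLE = {"Square": "Circle", "Circle": "Triangle", "Triangle": "Square"}


def transform(color: str, shape: str) -> tuple[str, str]:
    """Apply the color rule and the shape rule independently."""
    return COLOR_TOGGLE[color], SHAPE_CYCLE[shape]


# The three worked examples from the prompt: input -> expected output.
EXAMPLES = [
    (("Red", "Circle"), ("Blue", "Triangle")),
    (("Blue", "Square"), ("Red", "Circle")),
    (("Red", "Square"), ("Blue", "Circle")),
]

# The rules must agree with every example before we trust them on the query.
for (color, shape), expected in EXAMPLES:
    assert transform(color, shape) == expected, (color, shape)

answer = transform("Blue", "Triangle")
print(answer)  # ('Red', 'Square')
```

Keeping the two rules in separate lookup tables mirrors the compositional structure the puzzle tests: each attribute changes on its own, and the answer is just both changes applied together.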

Test 2: The disguised sequence

This test checks hypothesis elimination across multiple layers. I gave the model two sequences and asked it to identify the first one (the partition numbers, from the OEIS) and to work out the two transformations applied to produce the second. Here is the prompt I used:

Here are two sequences. The second was derived from the first in two separate steps.

Identify the named mathematical sequence that Sequence A belongs to, and work out both transformations that were applied to produce Sequence B.

Sequence A: 1, 1, 2, 3, 5, 7, 11, 15, 22, 30, 42, 56, 77

Sequence B: 2, 3, 5, 8, 3, 9, 8, 1, 7, 9, 8, 7

Explain your reasoning step by step.

Gemini 3.1 Pro correctly identified Sequence A as partition numbers (A000041) and explained what partition numbers represent in number theory. It then systematically worked through both transformations: first summing consecutive pairs to generate an intermediate sequence, then calculating the digital root of each result. The model verified each step against Sequence B, showing the complete chain of reasoning from the original sequence to the final output.
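The chain the model described is easy to verify. This Python sketch generates the first 13 partition numbers with a standard dynamic-programming recurrence, sums consecutive pairs, and takes the digital root of each sum. The function names are my own, chosen for illustration.

```python
def partition_numbers(count: int) -> list[int]:
    """Return p(0), ..., p(count - 1), where p(n) counts the ways to write n
    as a sum of positive integers, ignoring order."""
    p = [1] + [0] * (count - 1)
    # Coin-change style DP: allow parts of size 1, then size 2, and so on.
    for part in range(1, count):
        for n in range(part, count):
            p[n] += p[n - part]
    return p


def digital_root(n: int) -> int:
    """Repeatedly sum decimal digits until one digit remains (valid for n > 0)."""
    return 1 + (n - 1) % 9


seq_a = partition_numbers(13)
# Transformation 1: sum each pair of consecutive terms.
pair_sums = [x + y for x, y in zip(seq_a, seq_a[1:])]
# Transformation 2: reduce each sum to its digital root.
seq_b = [digital_root(s) for s in pair_sums]

print(seq_a)      # [1, 1, 2, 3, 5, 7, 11, 15, 22, 30, 42, 56, 77]
print(pair_sums)  # [2, 3, 5, 8, 12, 18, 26, 37, 52, 72, 98, 133]
print(seq_b)      # [2, 3, 5, 8, 3, 9, 8, 1, 7, 9, 8, 7]
```

The last line matches Sequence B term for term. It also shows why the puzzle is "disguised": the pairwise sums still grow, but the digital roots flatten them into single digits that look unrelated to the original sequence.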

Test 3: The broken clock network

This test targets constraint consistency checking. Six clocks are networked, and each applies a fixed offset to the time it receives. One clock is broken. The model has to trace both paths through the network and spot the contradiction.

Here is the prompt I used:

Six clocks (A, B, C, D, E, and F) are connected in a network. Each clock applies a fixed offset to the time it receives. A is the root and shows 12:00. You observe:

- B receives from A and shows 12:20

- C receives from A and shows 11:40

- D receives from B and shows 12:40

- E receives from C and shows 11:00

- F receives from both D and E and shows 13:00

There is exactly one broken clock in the network. Based on the pattern of offsets, identify which clock is broken, and give two possible answers for what it should actually show (one for each path through the network).

Explain your reasoning step by step.

Gemini 3.1 Pro correctly identified F as the broken clock and inferred two possible values for it: 13:00 via D’s path and 10:00 via E’s path. The model treated the A → B → D path as a constant +20-minute offset per hop and the A → C → E path as an arithmetic sequence of −20, −40, then −60 minutes.
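To make the model's arithmetic concrete, the Python sketch below walks both paths from A under the offset pattern the model inferred: +20 minutes per hop on the A → B → D → F path, and −20, −40, −60 on the A → C → E → F path. The first two readings on each path reproduce the observed B, D, C, and E values; the last reading is what F would show on that path. This encodes the model's interpretation of the puzzle, not the only possible one, and the helper names are my own.

```python
def to_minutes(hhmm: str) -> int:
    """Convert 'HH:MM' to minutes after midnight."""
    hours, minutes = hhmm.split(":")
    return int(hours) * 60 + int(minutes)


def to_hhmm(total: int) -> str:
    """Convert minutes after midnight back to 'HH:MM'."""
    return f"{total // 60:02d}:{total % 60:02d}"


def walk(start: str, offsets: list[int]) -> list[str]:
    """Apply each clock's offset in turn and return every clock's reading."""
    readings, current = [], to_minutes(start)
    for offset in offsets:
        current += offset
        readings.append(to_hhmm(current))
    return readings


# A -> B -> D -> F: a constant +20 minutes at every hop.
via_d = walk("12:00", [20, 20, 20])
# A -> C -> E -> F: offsets that grow by -20 at each hop.
via_e = walk("12:00", [-20, -40, -60])

print(via_d)  # ['12:20', '12:40', '13:00']
print(via_e)  # ['11:40', '11:00', '10:00']
```

Because both paths converge on F, a single clock can't satisfy both offset patterns at once, which is the contradiction the test asks the model to find.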

Hands-On With Gemini 3.1 Pro

Beyond abstract reasoning tests, I wanted to see how the model handles practical tasks that showcase its new features.

Animated SVG generation

Google made a big deal of code-based visual output in the launch, so I tested it directly with a simple brief and no template.

Here is the prompt I used:

Create an animated SVG loading spinner with three bouncing dots. Make it smooth, professional, and suitable for embedding on a website. Output only the SVG code.

Gemini 3.1 Pro returned clean SVG code with CSS animations. The output was a working three-dot loader with staggered bounce timing, exactly what was asked for. It rendered correctly in a browser on the first try, with no adjustments needed. The file size was tiny, and because it's vector-based code, it scales cleanly to any size.

This is one of those features that sounds gimmicky in a press release but turns out to be really practical. Generating lightweight, embeddable, infinitely scalable animated graphics from a text prompt is a solid tool for frontend prototyping or quick visual assets.
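The model's exact output isn't reproduced here, but the technique is simple enough to sketch. This hand-written Python helper (bouncing_dots_svg is a hypothetical name, not part of any Google tool) emits the same kind of asset: an SVG with three circles and a CSS @keyframes animation, where a per-dot animation-delay creates the staggered bounce. Because the whole animation is text, it stays a few hundred bytes.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def bouncing_dots_svg(color: str = "#4285F4") -> str:
    """Build a three-dot loading spinner as a self-contained animated SVG string."""
    circles = "".join(
        # Every dot runs the same bounce; only the start time differs (0.15 s apart).
        f'<circle class="dot" cx="{cx}" cy="20" r="5" '
        f'style="animation-delay:{i * 0.15:.2f}s"/>'
        for i, cx in enumerate((15, 30, 45))
    )
    style = (
        f".dot{{fill:{color};animation:bounce 0.6s ease-in-out infinite alternate}}"
        "@keyframes bounce{from{transform:translateY(6px)}"
        "to{transform:translateY(-6px)}}"
    )
    return (
        f'<svg xmlns="{SVG_NS}" width="60" height="40" viewBox="0 0 60 40" '
        f'role="img" aria-label="Loading"><style>{style}</style>{circles}</svg>'
    )


svg = bouncing_dots_svg()
# Parsing confirms the markup is well-formed XML (it raises ParseError otherwise).
root = ET.fromstring(svg)
dots = root.findall(f"{{{SVG_NS}}}circle")
print(len(dots), "dots,", len(svg.encode("utf-8")), "bytes")
```

Save the string to a .svg file or paste it inline into HTML. Since the viewBox defines the geometry, changing only width and height rescales the spinner without any loss of quality.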

How Can I Access Gemini 3.1 Pro?

Gemini 3.1 Pro is currently in preview. Google has said it will reach general availability soon, after addressing feedback and making further improvements to agentic workflows.

Here are the main access options:

Gemini CLI

The Gemini CLI is an open-source terminal agent that gives the model direct access to your local environment. Install it using the following code:

```bash
npm install -g @google/gemini-cli

# Or run it directly without a global install:
npx @google/gemini-cli
```

The CLI uses a ReAct loop, meaning it can write code, run it, read errors, fix issues, and iterate on its own. With 3.1 Pro's improved terminal coding performance, this loop is noticeably more reliable. The free tier gives you 60 requests per minute and 1,000 requests per day.
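To make the write-run-observe-fix cycle concrete, here's a toy Python sketch of that kind of loop. It is not how Gemini CLI is implemented: fake_model is a hypothetical stand-in for the LLM, and exec stands in for the sandboxed tool execution a real agent would use.

```python
import traceback
from typing import Callable, Optional


def react_loop(propose: Callable[[Optional[str]], str], max_steps: int = 5) -> tuple[bool, int]:
    """Toy write-run-observe-fix loop: stop at the first attempt that runs cleanly."""
    feedback: Optional[str] = None
    for step in range(1, max_steps + 1):
        code = propose(feedback)   # model proposes code, given the last error (if any)
        try:
            exec(code, {})         # run it in a fresh namespace (stand-in for a tool call)
            return True, step      # observation: it ran without raising
        except Exception:
            feedback = traceback.format_exc()  # observation: the error goes back to the model
    return False, max_steps


def fake_model(feedback: Optional[str]) -> str:
    """Hypothetical stand-in for the LLM: first draft has a typo, fixed after a NameError."""
    if feedback and "NameError" in feedback:
        return "total = sum(range(10))\nassert total == 45"
    return "total = summ(range(10))"


succeeded, steps = react_loop(fake_model)
print(succeeded, steps)  # True 2
```

The key design point is that the error text is fed back verbatim, so the model reasons from the actual traceback rather than guessing; the max_steps cap keeps a model that never converges from looping forever.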

Gemini API

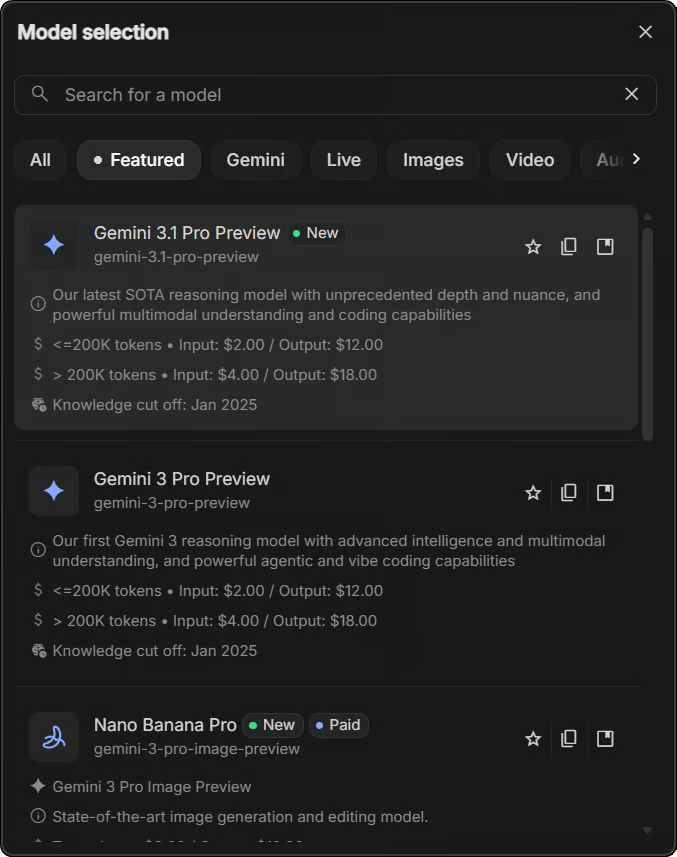

The Gemini API gives developers direct programmatic access to Gemini 3.1 Pro.

The model ID you want is: gemini-3.1-pro-preview

Here's some Python code to help you get started:

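```python
# Requires the Google Gen AI SDK: pip install google-genai
from google import genai

# The client reads your API key from the GEMINI_API_KEY environment variable.
client = genai.Client()

response = client.models.generate_content(
    model="gemini-3.1-pro-preview",
    contents="Your prompt here",
)

print(response.text)
```

The pricing is the same as Gemini 3 Pro Preview.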

| Context size | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| ≤200K tokens | $2.00 | $12.00 |
| >200K tokens | $4.00 | $18.00 |
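To turn the Gemini 3.1 Pro pricing tiers into per-request numbers, here's a small Python sketch. It assumes the tier is chosen by the prompt's token count and that thinking tokens are billed at the output rate, which is how I read Google's Gemini pricing page; estimate_cost_usd is a hypothetical helper, so double-check the official page before budgeting with it.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate one Gemini 3.1 Pro Preview request's cost in USD.

    Assumptions: the pricing tier is selected by prompt (input) size, and
    output_tokens already includes any thinking tokens, billed as output.
    """
    if input_tokens <= 200_000:
        input_rate, output_rate = 2.00, 12.00   # USD per 1M tokens
    else:
        input_rate, output_rate = 4.00, 18.00   # long-context tier
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


small = estimate_cost_usd(100_000, 10_000)   # 0.20 input + 0.12 output
large = estimate_cost_usd(300_000, 10_000)   # 1.20 input + 0.18 output
print(f"${small:.2f}  ${large:.2f}")  # $0.32  $1.38
```

Note how crossing the 200K threshold more than quadruples the cost of this example request: the input volume triples and the rate doubles at the same time.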

The thinking_level parameter accepts low, medium, high, or max. Supported tools include Google Search, URL context, code execution, and file search. I'll cover the context window details in the comparison section below.
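If you prefer raw HTTP over the SDK, the thinking level can be set in the request body. This Python sketch uses only the standard library and builds, without sending, a POST to the generateContent REST endpoint with generationConfig.thinkingConfig.thinkingLevel set. The field names follow the REST shape Google documents for Gemini 3 thinking levels as I understand it, and build_request is a hypothetical helper, so confirm both against the current API reference.

```python
import json
import os
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(prompt: str, thinking_level: str = "medium",
                  model: str = "gemini-3.1-pro-preview") -> urllib.request.Request:
    """Build (but do not send) a generateContent REST call with a thinking level."""
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingLevel": thinking_level}},
    }
    return urllib.request.Request(
        url=f"{API_ROOT}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Read the key from the environment; empty string if it is not set.
            "x-goog-api-key": os.environ.get("GEMINI_API_KEY", ""),
        },
        method="POST",
    )


req = build_request("Summarize ARC-AGI-2 in one sentence.", thinking_level="low")
payload = json.loads(req.data)
print(req.full_url)
print(payload["generationConfig"])
# To actually send it (needs a valid key and network access):
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["candidates"][0]["content"]["parts"][0]["text"])
```

Building the request separately from sending it makes it easy to log or unit-test the exact payload, for example to confirm which thinking level each pipeline stage uses.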

NotebookLM

NotebookLM is now powered by Gemini 3.1 Pro for Google AI Pro and Ultra subscribers. NotebookLM responds only based on documents you upload, making it a really useful research tool when you want the model to stay grounded in specific materials.

Consumer access

Google has started rolling Gemini 3.1 Pro out across its consumer and developer products, but it has not published a simple "plan X = model Y" mapping. Practically, you'll see 3.1 Pro in the Gemini app and API as it rolls out, with AI Ultra providing the broadest access.

| Plan | Monthly price (US) | Gemini features included |
|---|---|---|
| Free | $0 | Gemini 3 Flash in the Gemini app, limited features |
| Google AI Pro | $19.99 | Higher limits and access to Gemini Pro models in the Gemini app |
| Google AI Ultra | $249.99 (often discounted to $124.99 for the first 3 months) | Highest limits, Deep Think mode, and access to Google's latest AI features across products |

Gemini 3.1 Pro vs. Claude Models

The February 2026 releases from Google and Anthropic have created a really interesting set of trade-offs. These aren't situations where one model clearly wins. The right choice depends heavily on what you're building.

The pricing gap is worth dwelling on. Gemini 3.1 Pro is much cheaper on both input and output than Claude Opus 4.6. If you're running high-volume API calls, that's not a small difference.

Choose Gemini 3.1 Pro when:

- Abstract reasoning and scientific analysis are the priority

- You need robust, natively multimodal support for video and audio in the same model

- You want the full 1M context window in its stable, non-beta form

- Cost efficiency matters, especially at scale

Choose Claude Opus 4.6 when:

- You need the full 128K output tokens (Gemini caps at 64K)

- Multi-agent orchestration is central to your workflow (Agent Teams is a real differentiator)

- Computer use via GUI is important

- You're doing knowledge-intensive work where deep research quality matters

Choose Claude Sonnet 4.6 when:

- Knowledge work, document analysis, or financial analysis are the main tasks

- You need near-flagship performance at a lower price point

- You're already using Anthropic tools and Sonnet is your default

Gemini 3.1 Pro Use Cases

Based on the benchmarks and hands-on testing, here are the areas where Gemini 3.1 Pro is particularly well-suited:

- Scientific research and analysis: Strong GPQA Diamond performance plus a 1M context window makes it practical for literature review, hypothesis generation, and synthesizing across multiple papers at once.

- Autonomous research agents: Improved agentic benchmarks translate to real-world multi-step tasks like gathering information from multiple sources, verifying facts, and producing structured reports with minimal supervision.

- Codebase analysis and refactoring: A large context window plus improved reasoning handles tasks like identifying architectural inconsistencies across modules or tracing bugs through multiple files.

- Multimodal content analysis: Native video and audio support enables analyzing recorded meetings, extracting insights from lecture videos, or processing podcasts without preprocessing.

- Cost-sensitive production deployments: At roughly half the cost of Claude Opus 4.6, it makes sense for high-volume inference where reasoning quality matters but budget is a constraint.

- Prototyping and visual assets: Code-based animated output generates loading spinners, animated charts, or interactive dashboards from text prompts that you can embed directly.

Final Thoughts

Gemini 3.1 Pro is a good example of where these models are going. Less focus on new input types, more focus on better reasoning, more reliable agents, and handling longer contexts. Even though it's just a ".1" release, the benchmark improvements and the Deep Think connection make it feel like a bigger step forward in how these systems think.

For teams building real products, there's no single "best" model. Gemini 3.1 Pro works well for scientific reasoning, research agents, and analyzing large codebases, especially when you factor in its price and video support. Claude is still better for knowledge work and operating software through a graphical interface, and GPT-5.3-Codex still wins on some coding tests.

The interesting question is what happens when it leaves preview. Google has said they're working on agent improvements before the full release. If those ship alongside the current reasoning upgrades, the gap between research models like Deep Think and everyday models will get smaller. For now, it's a good time to try different models and build systems that can use what each one does best.

To get started with Google's AI tools, check out our Introduction to Google Gemini course. For working with the API in Python, our Working with the Gemini API tutorial covers the essentials.

I’m a data engineer and community builder who works across data pipelines, cloud, and AI tooling while writing practical, high-impact tutorials for DataCamp and emerging developers.

Gemini 3.1 FAQs

Is Gemini 3.1 Pro free to use?

You can test it for free through Google AI Studio with daily quotas. For production use through the API, you pay per token (see the pricing table in the API section). In the Gemini app, higher limits require a subscription: Google AI Pro is $19.99/month, and Google AI Ultra is $249.99/month (often discounted to $124.99 for the first 3 months). The free Gemini app defaults to Gemini 3 Flash, not 3.1 Pro.

What's the difference between Gemini 3.1 Pro and Deep Think?

Deep Think is the research-lab version: slower and more expensive, but it scores higher on reasoning benchmarks. Gemini 3.1 Pro takes those same intelligence upgrades and makes them fast and affordable enough for everyday use. Think of it as the production-ready version of the same core technology.

Does it actually understand video, or just extract frames?

Gemini 3.1 Pro is a natively multimodal model that can take video as input alongside text, images, and audio. In practice, you can upload a recording and ask questions about both what is said and what is shown on screen. Competing models are still more limited in how broadly they expose video understanding features to end users.

How does the 1M context window compare to Claude's?

As I mentioned in the comparison section, Gemini's 1M window is stable and production-ready, while Claude's is currently in beta. Gemini 3.1 Pro's maximum output per request is 64K tokens, compared with 128K for Claude Opus 4.6.

When does it leave preview?

Google hasn't set a date, but they've said they're working on agentic improvements before the GA release. Based on past patterns, preview periods usually run a few months.