
Mere hours after xAI unveiled Grok 4.1, Google has gone one better and announced Gemini 3. This latest Gemini model is Google’s most intelligent to date, and it’s available immediately in the Gemini app, as well as in AI Studio and Vertex AI.

Gemini 3 will also immediately start powering AI Mode in search, and Google also announced Gemini 3 Deep Think mode and Google Antigravity, a new agentic development platform.

In this article, I’ll cover everything Google announced, explain what it means for the LLM space, and get hands-on with the new Gemini 3 model to see what it’s capable of. You can also work through a hands-on project with our guide to the Gemini 3 API, explore Google Antigravity with our tutorial, and follow our guide to creating a Gemini 3 Flash project.

What Is Gemini 3 Pro?

Gemini 3 Pro is the latest and most advanced large language model from Google. It’s available now through the Gemini app, AI Studio, and Vertex AI. Google hails it as its smartest model yet, with improved performance in reasoning, coding, and multimodal tasks.

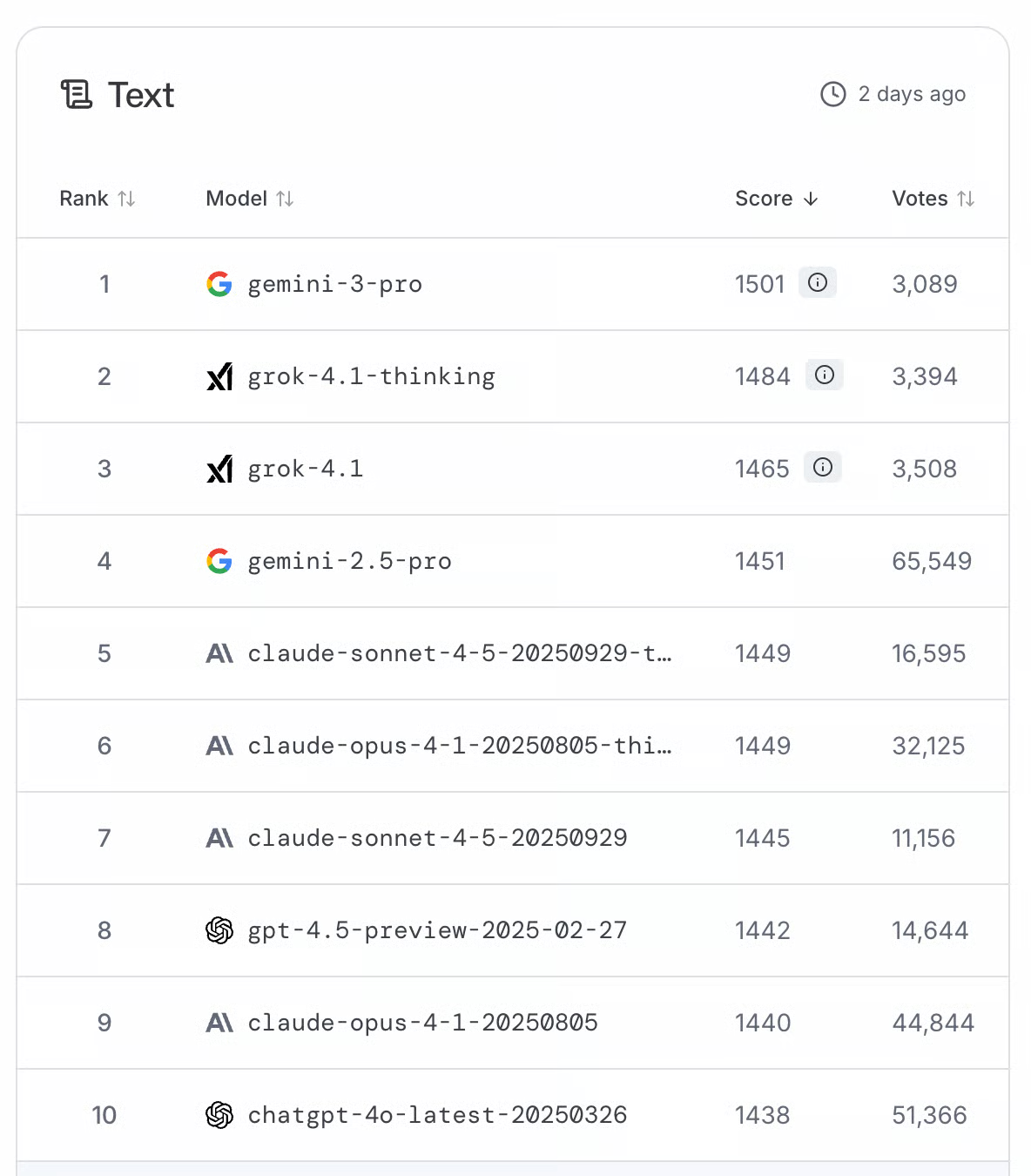

This new model builds on the success of Gemini 2.5 Pro, which was released only seven months earlier. Gemini 3 tops the LMArena leaderboard, replacing Grok 4.1 after only a matter of hours.

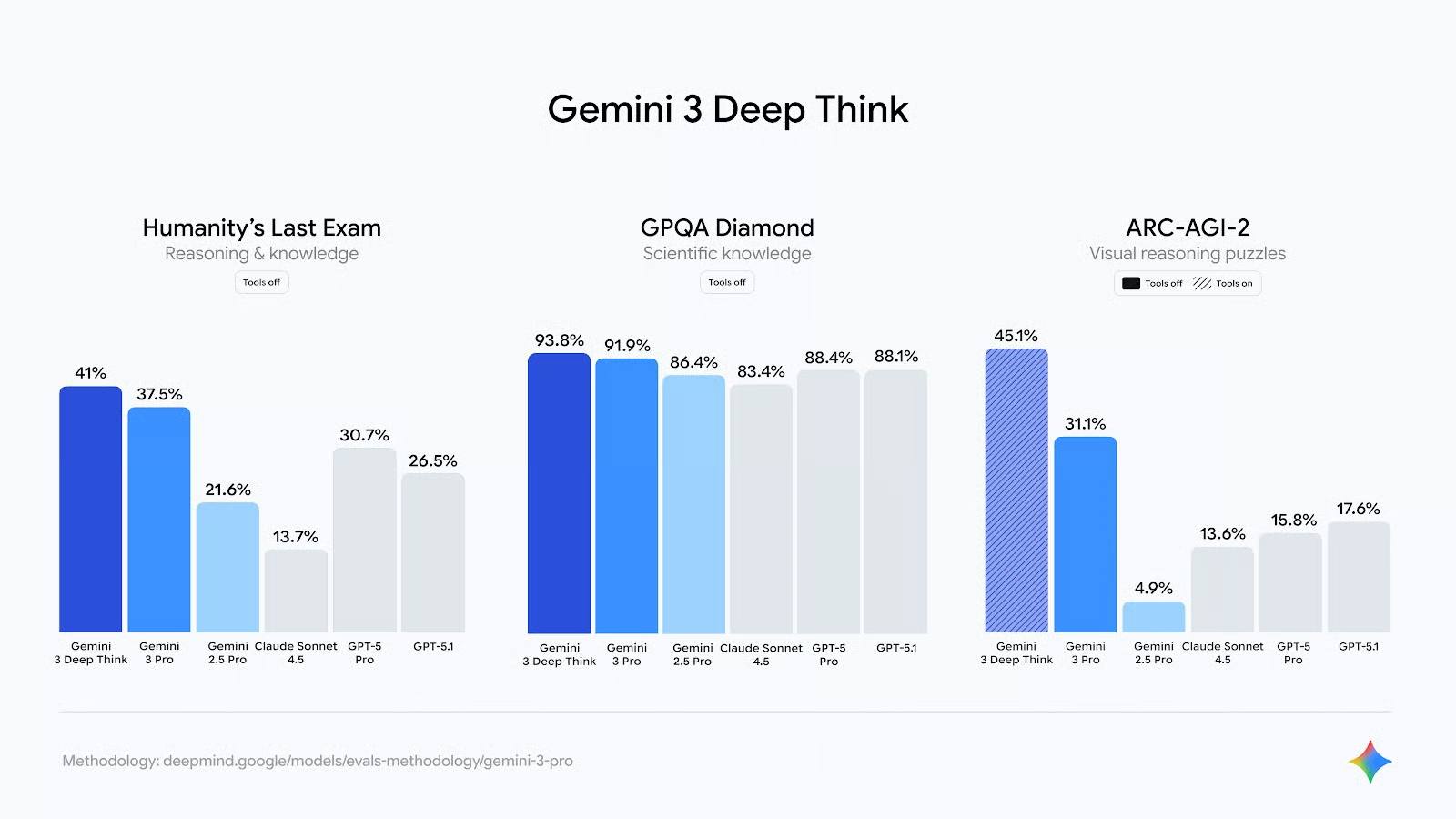

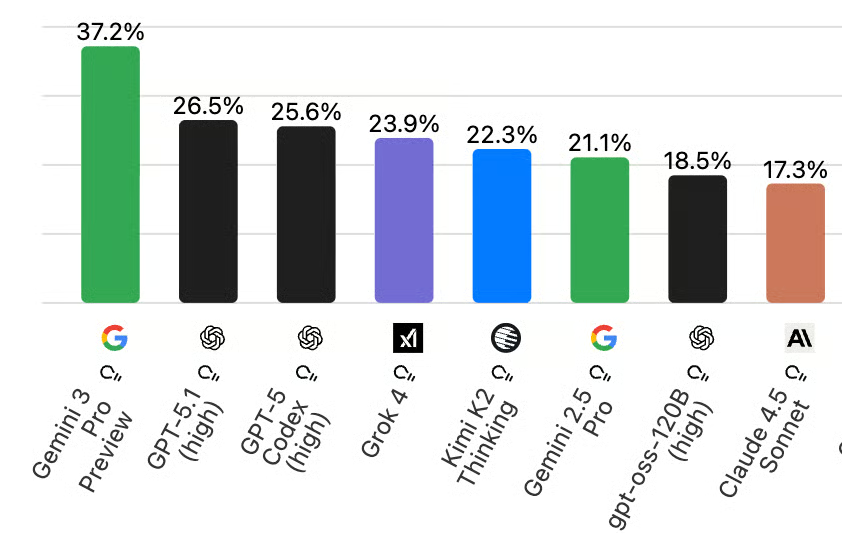

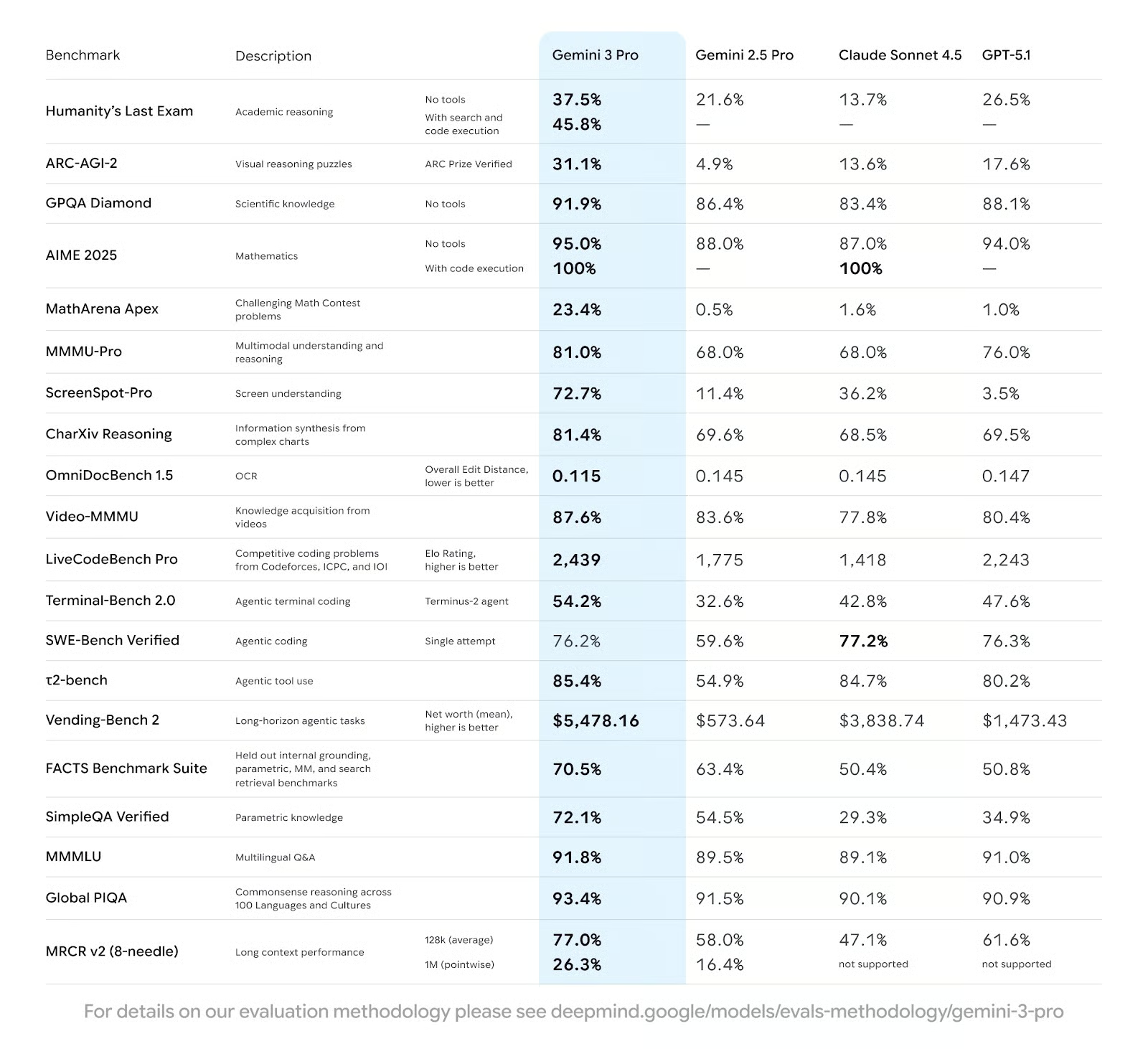

Google claims that Gemini 3 Pro has PhD-level reasoning, and the model boasts new high scores on various AI benchmarking leaderboards, including Humanity’s Last Exam, GPQA Diamond, and MathArena Apex. This impressive set of results means it outperforms models like the new GPT-5.1 from OpenAI and Claude Sonnet 4.5 from Anthropic.

New Features in Gemini 3

So, what does Gemini 3 bring to the table compared to its predecessor and the other raft of new models? As well as a big step up in performance, Google is touting that you can ‘learn, build, and plan anything’ with Gemini 3. Bold claims indeed. Let’s look at the new features to see what this means:

Improved performance

One of the headline features of the new Gemini 3 model is its chart-topping performance. Even without Deep Think mode, this release is the stand-out leader on the Humanity’s Last Exam benchmark, with a score of 37.5% without tools (41.0% with Deep Think) compared to GPT-5.1’s 26.5%.

It’s not just text that Gemini 3 excels with. With a score of 23.4% on the MathArena Apex benchmark, it is the highest-scoring frontier model available for mathematics. Similarly, on the Video-MMMU, which is a multi-modal and multi-disciplinary video benchmark that evaluates LMMs' knowledge acquisition capability from educational videos, it scores 87.6%, again taking it to the top of the chart.

I know it’s sometimes easy to get carried away with benchmark numbers, and perhaps the real test should focus on usability, but Gemini 3 Pro is clearly an impressive step forward in LLM performance.

What is Gemini 3 Deep Think mode?

As we saw in Gemini 2.5 Pro, Gemini 3 Deep Think mode uses parallel thinking and reinforcement learning to significantly improve responses (at the cost of slower replies). Deep Think makes Gemini more detailed, creative, and ‘thoughtful’.

With Gemini 3 Deep Think, we can see improvements on Gemini 3 Pro non-thinking across areas like reasoning, scientific knowledge, and visual reasoning puzzles. This should mean that Deep Think has an improved ability to solve new, unfamiliar challenges.

Deep Think is currently in evaluation mode, meaning that testers are evaluating it before it becomes available to Google AI Ultra subscribers ‘in the coming weeks.’

True multimodality

Gemini 3 introduces a new multimodal architecture that processes text, image, audio, video, and code within a single transformer stack rather than through separate encoders.

This unification enables genuine cross-modal reasoning. Gemini 3 can interpret a sketch and generate working code, or analyze a video and explain its scientific concepts. Its benchmark results on Video-MMMU and MMMU-Pro confirm a big leap in spatial and visual understanding. Like Gemini 2.5 Pro, it also has a one-million-token context window, so it can connect ideas across vast and varied inputs.

Agentic creativity

Gemini 3 moves from passive generation to agentic creation, powered by new advances in tool use, planning, and vibe coding. The model’s architecture supports long-horizon reasoning loops that let it plan, execute, and validate multi-step workflows, like coding a playable game or building an interactive UI, all from a single prompt.

These capabilities are also being deployed through the newly announced Google Antigravity, a development platform where agents have direct access to the editor, terminal, and browser. It’s the first Gemini release built to operate as a co-developer, using structured autonomy and real-time tool integration to turn creative ideas into functional systems.

Learn, build, and plan anything?

Google gives several examples of how the latest model can help you learn, build, and plan.

There is a lot of focus on multimodal improvements, meaning Gemini’s ability to understand and create information across text, images, video, audio, and code.

With vibe coding being a big focus for many these days, Gemini 3 should make it even easier for developers to bring ideas to life. Google claims it’s great at one-shot generation, meaning you can create high-quality results from a single prompt. It can also handle complex instructions, which are needed if you want your projects to feel really alive and responsive.

Gemini 3 is available for developers to start building agentic projects in Google AI Studio, Vertex AI, Gemini CLI, and the newly announced Google Antigravity (more on that in a moment).

One final, fairly impressive claim here is that Gemini 3 Pro can maintain tool usage and decision-making consistently for a simulated year of operation. For users with a Google AI Ultra subscription, you will immediately be able to access the new Gemini Agent experimental feature, which can help you build multi-step agents capable of utilizing these features.

Gemini 3 Pro Examples

Thanks to its 1 million token context window, Gemini 2.5 Pro has long been a favourite of mine, so I’m excited to see what Gemini 3 can do with improved multimodal performance in the same context window. To get started with what it can do, here are a few tests:

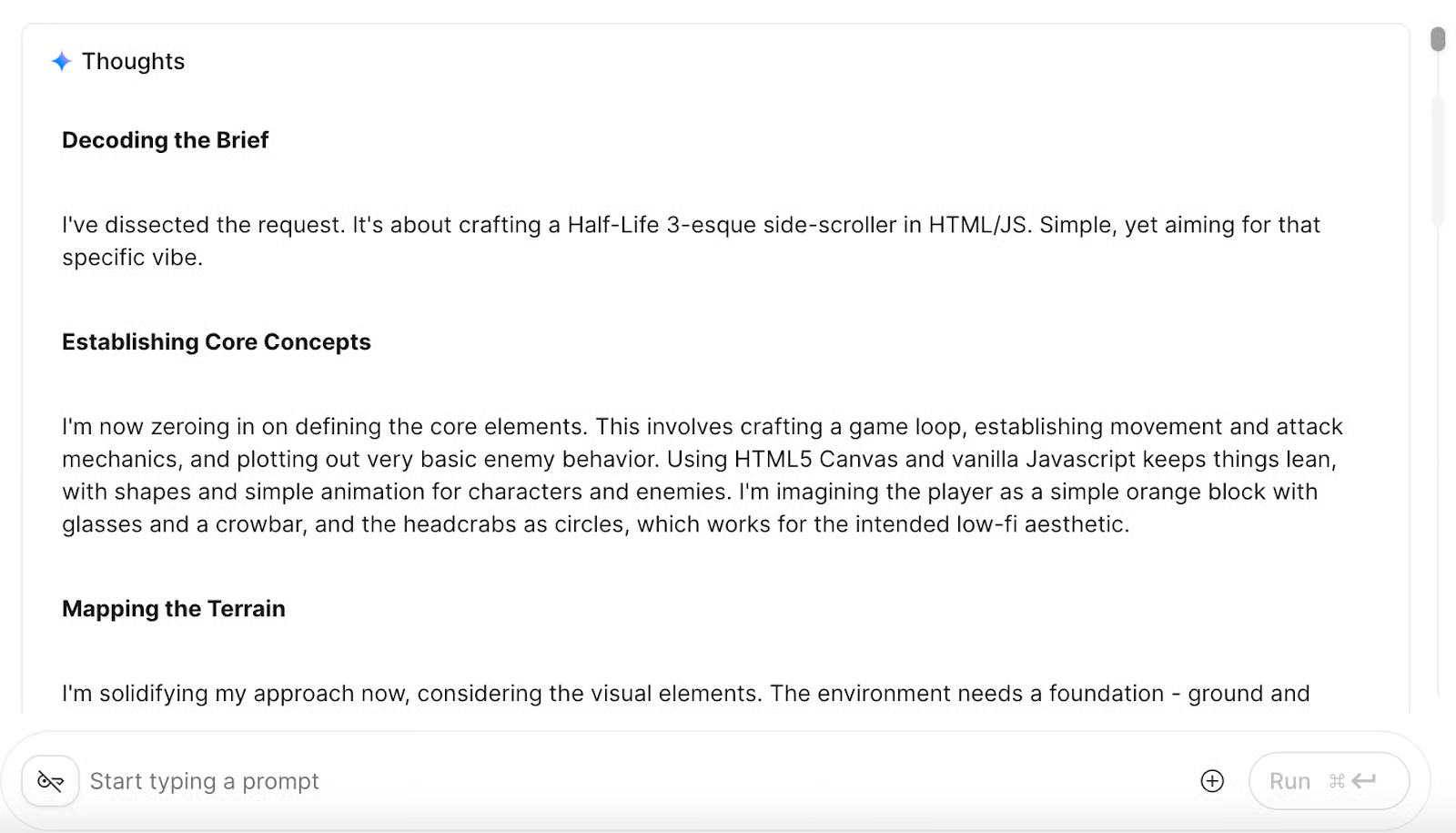

Simple Half-Life 3-inspired game

As we tested with Gemini 2.5, with a prompt of around 10 words, I got Gemini 3 Pro to create a Half-Life 3-inspired side-scrolling game. Here’s the prompt: Make me a simple side-scrolling Half-Life 3-inspired game

With not very much thinking time and around the same coding time, Gemini 3 Pro (through AI Studio) was able to create a fairly solid effort for me, considering the brief prompt. It runs, it’s a side-scroller, and it’s clearly inspired by the source material.

Here’s the video of how it turned out:

After that experiment, I wanted to try something a little more classic. Specifically, I was thinking about the Chrome dinosaur game that comes up when you open the browser without internet access.

Make me a simple side-scrolling game like the Chrome dinosaur that jumps over cactuses when the internet is down.

Enter, Gemini Runner:

The small touches were not lost on me. Gemini Runner had the same starry night sky, which wasn’t even in the prompt. It also said “Extinct” when I lost.

Multimodal capabilities

To test the multimodal capabilities, I uploaded a picture drawn by a six-year-old.

I then asked it to turn the scene into a simple game. Here is the prompt that I used:

Generate a simple browser game using the elements in this image. Base the game on what’s shown.

In this prompt, I was careful not to specify anything in the actual picture because I wanted to see if it could interpret the visual content and generate a game around what it saw.

The result was a small game called Snowy’s Day where you could move the stick figure to collect snow as it fell.

As you can see, the elements of the game were pulled directly from the image I shared. I was impressed by how faithful it was to the spirit of the drawing, but it definitely wasn’t the most fun game in the world. Although maybe it would be a fun game for a six-year-old.

One tidbit: Gemini understood that the drawing showed snow. I felt bad because I thought he drew stars!

Gemini 3 Benchmarks

Google has provided extensive benchmark data for both Gemini 3 Pro and Gemini Deep Think, and the results are impressive across the board.

Gemini 3 Pro benchmarks

When it comes to pure reasoning ability, Gemini 3 Pro demonstrates what Google calls "PhD-level reasoning." Here are the standout results:

Visual reasoning

Visual reasoning takes a massive leap forward.

- On ARC-AGI-2, Gemini 3 Pro scores 31.1%. This is a huge jump from Gemini 2.5 Pro's 4.9% and well ahead of both Claude Sonnet 4.5 and GPT-5.1.

- Similarly, on ScreenSpot-Pro, which tests screen understanding, Gemini 3 Pro achieves 72.7%, absolutely dominating Claude Sonnet 4.5 (36.2%) and GPT-5.1 (3.5%).

These are both huge leaps in how well the model can understand and reason about visual information.

Mathematics

With a score of 23.4% on MathArena Apex, Gemini 3 Pro crushes the competition. To put this in perspective: Gemini 2.5 Pro scored 0.5%, Claude Sonnet 4.5 got 1.6%, and GPT-5.1 managed only 1.0%. This represents a new standard for frontier models in mathematical reasoning.

Long-horizon planning

Long-horizon planning is measured with a benchmark called Vending-Bench 2, which simulates running a vending machine business for a full year. The model has to make ongoing decisions about inventory, pricing, and everything else. It tests whether an AI can maintain consistent, rational decision-making over thousands of sequential choices without losing track of its goals or making erratic decisions.

On this benchmark, Gemini 3 Pro achieves a mean net worth of $5,478.16. Compare that to Claude Sonnet 4.5 ($3,838.74) and GPT-5.1 ($1,473.43). It might sound like an obscure benchmark, but for real-world AI agents that might need to manage tasks over days or weeks, this kind of reliability matters.
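To make the size of these leads easier to compare, here is a small sketch that computes the gap between Gemini 3 Pro and the best competing score for each benchmark discussed so far. It uses only the figures quoted in this article; the `SCORES` table and the `lead_over_runner_up` helper are names I made up for illustration, not part of any Google tooling.

```python
# Scores as quoted in this article: percentages, except Vending-Bench 2,
# which is mean net worth in USD. Only models named in the text are included.
SCORES = {
    "ARC-AGI-2 (%)": {"Gemini 3 Pro": 31.1, "Gemini 2.5 Pro": 4.9},
    "ScreenSpot-Pro (%)": {
        "Gemini 3 Pro": 72.7, "Claude Sonnet 4.5": 36.2, "GPT-5.1": 3.5,
    },
    "MathArena Apex (%)": {
        "Gemini 3 Pro": 23.4, "Gemini 2.5 Pro": 0.5,
        "Claude Sonnet 4.5": 1.6, "GPT-5.1": 1.0,
    },
    "Vending-Bench 2 (USD)": {
        "Gemini 3 Pro": 5478.16, "Claude Sonnet 4.5": 3838.74, "GPT-5.1": 1473.43,
    },
}


def lead_over_runner_up(results, leader="Gemini 3 Pro"):
    """Return (runner_up, absolute_gap, ratio) for the leader vs. the best other model."""
    others = {name: score for name, score in results.items() if name != leader}
    runner_up = max(others, key=others.get)
    gap = results[leader] - others[runner_up]
    ratio = results[leader] / others[runner_up]
    return runner_up, gap, ratio


for benchmark, results in SCORES.items():
    runner_up, gap, ratio = lead_over_runner_up(results)
    print(f"{benchmark}: +{gap:.2f} over {runner_up} ({ratio:.1f}x)")
```

Keep in mind that the ratio looks dramatic when the runner-up is close to zero, as on MathArena Apex, so the absolute gap is often the fairer number to quote.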

Other benchmarks

Gemini 3 Pro shows strong performance on a wide range of additional benchmarks. It leads on multimodal understanding (MMMU-Pro), video comprehension (Video-MMMU), chart reasoning (CharXiv), and competitive coding (LiveCodeBench Pro).

One notable exception: Claude Sonnet 4.5 narrowly edges out Gemini 3 Pro on SWE-Bench Verified, which is a software engineering benchmark.

Gemini 3 Deep Think mode benchmarks

Now, let's look at the benchmarks for Gemini Deep Think, the enhanced reasoning mode that's currently in evaluation with safety testers before becoming available to Google AI Ultra subscribers in the coming weeks.

You might be wondering: if Gemini 3 Pro is already topping leaderboards, why does the Deep Think mode matter? The answer is that some problems will benefit from extended reasoning time. Deep Think uses parallel and prolonged reasoning to spend more computational effort on especially challenging tasks. It’s designed to take longer, but for the hardest reasoning problems, that extra processing time will translate into meaningfully better results, as reflected in these benchmarks:

Novel problem solving

The ARC-AGI-2 result is the standout result for me. Gemini 3 with Deep Think mode achieves 45.1% on ARC-AGI-2. (This is the official verified version of the benchmark associated with the ARC Prize competition.) In this test, the model was allowed to write and run code as a reasoning tool, essentially using programming to help think through problems.

Why does this matter? ARC-AGI-2 specifically tests a model's ability to solve novel, unfamiliar challenges. These are problems it has never seen before and can't just pattern-match from training data. This has historically been one of the hardest things for AI systems to do, so a strong score means we're seeing genuine reasoning capabilities rather than memorization.

Extremely difficult questions

Gemini 3 Pro achieves 37.5% on Humanity's Last Exam without using any tools. With Deep Think mode enabled, this jumps to 41.0%. For reference, GPT-5.1 scored 26.5%.

This benchmark is fascinating because it's designed to test knowledge and reasoning at the limit of human understanding. Think of extremely difficult problems that require deep expertise to solve. The fact that Gemini 3, using Deep Think, can correctly answer over a third of these questions suggests it's not just reciting information, but actually reasoning through very complex and very ambiguous problems.

Graduate-level science

Gemini 3 Pro scores 91.9% on GPQA Diamond, a graduate-level science question benchmark, and Deep Think pushes the score higher still to 93.8%. The 91.9% score is impressive enough on its own, but what's more interesting is that it is a baseline that Deep Think can still improve upon.
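To put the Deep Think gains in context, the sketch below compares the Gemini 3 Pro and Deep Think figures quoted in this article in three ways: percentage points gained, relative gain, and reduction in error rate. The helper names are my own, and the ARC-AGI-2 pair is not a like-for-like comparison, because the Deep Think figure was obtained with code execution.

```python
# (Gemini 3 Pro score, Deep Think score) in percent, as quoted in this article.
PAIRS = {
    "Humanity's Last Exam (no tools)": (37.5, 41.0),
    "GPQA Diamond": (91.9, 93.8),
    # Caveat: the Deep Think figure here was obtained with code execution.
    "ARC-AGI-2": (31.1, 45.1),
}


def uplift(base, deep_think):
    """Return (percentage points gained, relative gain in percent)."""
    points = deep_think - base
    return points, points / base * 100


def error_reduction(base, deep_think):
    """Return the percentage of remaining errors that the higher score removes."""
    base_errors = 100 - base
    return (base_errors - (100 - deep_think)) / base_errors * 100


for benchmark, (base, deep) in PAIRS.items():
    points, relative = uplift(base, deep)
    print(
        f"{benchmark}: +{points:.1f} points, {relative:.1f}% relative gain, "
        f"{error_reduction(base, deep):.1f}% fewer errors"
    )
```

On a benchmark that is already near its ceiling, such as GPQA Diamond, a gain of under two points still removes roughly a quarter of the remaining errors, which is why the error-rate view is worth checking alongside the raw score.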

How Can I Access Gemini 3?

Gemini 3 Pro is available now across multiple platforms. You can use it immediately in the Gemini app. It's also powering AI Mode in Search, so you might be interacting with Gemini 3 without even knowing it.

If you are a developer, you can access Gemini 3 Pro through the Gemini API in Google AI Studio, Vertex AI, and the Gemini CLI. It's also available in the newly announced Google Antigravity platform and in third-party tools like Cursor, GitHub, JetBrains, Manus, and Replit.
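For developers who want to see roughly what a Gemini API call looks like, here is a minimal sketch that builds a `generateContent` REST request using only the Python standard library. Treat the details as assumptions: the model ID `gemini-3-pro-preview` is my guess at the identifier and should be checked against the model list in AI Studio, and the helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# ASSUMPTION: confirm the exact model ID in AI Studio before relying on it.
MODEL_ID = "gemini-3-pro-preview"


def build_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def generate(prompt: str, api_key: str) -> str:
    """Send the request and return the first candidate's text (needs network access)."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as response:
        payload = json.load(response)
    return payload["candidates"][0]["content"]["parts"][0]["text"]
```

With an API key from AI Studio stored in an environment variable, a call would look something like `generate("Make me a simple side-scrolling game", os.environ["GEMINI_API_KEY"])`. In practice, Google's official SDKs are likely the more convenient route, and this sketch is only meant to show the shape of the request.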

Google AI Ultra subscribers get access to Gemini 3 Pro, along with the experimental Gemini Agent feature for building multi-step agents. Deep Think is currently in evaluation with safety testers and will be available to Ultra subscribers in the coming weeks, but only after additional safety evaluations are complete.

For students, Google has introduced a free one-year plan that provides access to Gemini 3 Pro, along with unlimited image uploads, 2 TB of storage, and additional tools such as NotebookLM.

How Google Is Using Gemini 3

Gemini 3 Pro is already out in the wild, and Google is already putting it to work in two key features:

Google Search AI Overviews

So convinced is Google about this new model that they are taking the unusual step of integrating it directly into Google Search. While AI Overviews have been rolling out to more and more pages since April, Gemini 3 Pro will now power these overviews as of launch day.

Gemini 3 and Google Antigravity

Another really exciting part of this announcement is the inclusion of a new agentic development platform, Google Antigravity.

This new tool is powered by Gemini 3’s reasoning, tool use, and agentic coding abilities. Google promises it will feel familiar, like an AI IDE, but agents will be able to directly access the editor, terminal, and browser to help you plan, write, and check complex projects on their own.

Antigravity will connect with Gemini 3 Pro, the Gemini 2.5 Computer Use model for browser control, and Nano Banana (Gemini 2.5 Image) for image editing.

Final Thoughts

Google is clearly making a statement with this release: they're back in the race for the most capable AI model, and they're shipping it fast at scale across their entire product ecosystem. Given the specs, it would be hard not to agree with them; Gemini 3 Pro feels like the best AI model in the world for multimodal understanding.

Google is, as always, well-positioned. Its cloud infrastructure, custom chips, and vertically integrated AI stack are now reinforcing one another to deliver performance that few competitors can match. Because the gains span everything from reasoning and coding to multimodal understanding and long-horizon planning, they strongly suggest that Google has made foundational improvements to Gemini’s core architecture with Gemini 3 Pro. What is more, Google looks like it’s building with specific business use cases in mind, so the ways in which we are going to be using AI are fast becoming more varied and more interesting.

If you need to get up to speed with all things AI, I recommend starting with the AI Fundamentals skill track.

Gemini 3 FAQs

What’s the difference between Gemini 3 Pro and Gemini 3 Deep Think?

Gemini 3 Pro is the main model available across Google’s apps and developer platforms, optimized for everyday use. Deep Think is an optional mode that enables slower but more advanced multi-step reasoning, available to premium users as Google completes safety testing.

What’s the 1-million-token context window useful for?

It lets Gemini 3 process massive inputs, like full research papers, entire codebases, or long video transcripts, without breaking them into chunks, enabling deeper, more coherent reasoning across large datasets.
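As a rough way to check whether a set of documents might fit, the sketch below estimates token counts from character counts. It assumes about four characters per token, which is only a rule of thumb for English text. The function names and the output reserve of 8,192 tokens are illustrative choices of mine, not Google-specified values.

```python
import math

CONTEXT_WINDOW_TOKENS = 1_000_000
# ASSUMPTION: ~4 characters per token is a rough heuristic for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Estimate the token count of a string from its length in characters."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(documents, reserve_for_output=8_192, window=CONTEXT_WINDOW_TOKENS):
    """Return (estimated input tokens, whether input plus output reserve fits)."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total, total + reserve_for_output <= window


# A 400,000-character document is estimated at about 100,000 tokens.
print(fits_in_context(["x" * 400_000]))
```

For anything close to the limit, an estimate like this is not reliable enough, and the API's own token counting should be the source of truth.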

How can I try Gemini 3 Pro for free?

Gemini 3 Pro is available for everyone in the Gemini app, as well as for Google AI Pro and Ultra subscribers. It's also powering AI Mode in Search. Developers can access the API in AI Studio, as well as on the new agentic development platform, Google Antigravity. Students can get a free one-year Gemini Pro plan, which includes access to Gemini 3 Pro, 2 TB of Google Drive storage, and unlimited image uploads.

What is Google Antigravity, and how is it different from AI Studio or Vertex AI?

Antigravity is Google’s new agent-driven development platform, acting like an AI-powered IDE where agents can code, test, and use tools directly. AI Studio and Vertex AI are APIs and platforms for deploying models; Antigravity brings those capabilities into a hands-on, collaborative workspace.

Why do benchmark scores matter for Gemini 3 Pro?

Benchmarks provide measurable evidence of a model’s reasoning, coding, and multimodal performance. They help users assess which model best fits their needs, though real-world testing is still essential since benchmarks can’t capture every use case.

A senior editor in the AI and edtech space. Committed to exploring data and AI trends.

I'm a data science writer and editor with contributions to research articles in scientific journals. I'm especially interested in linear algebra, statistics, R, and the like. I also play a fair amount of chess!