Alon Peleg serves as the Chief Operating Officer (COO) at aiOla, a position he assumed in May 2024. With over two decades of leadership experience at renowned companies like Wix, Cisco, and Intel, he is widely recognized in the tech industry for his expertise, dynamic leadership, and unwavering dedication. At aiOla, Alon plays a key role in driving innovation and strategic growth, contributing to the company’s mission of developing cutting-edge solutions in the tech space. His appointment is regarded as a pivotal step in aiOla’s expansion and continued success.

Gill Hetz is the VP of Research at aiOla, where he leverages his expertise in data integration and modeling. Before that, Gill worked in the oil and gas industry from 2009, holding roles in engineering, research, and data science. From 2018 to 2021, he held key positions at QRI, including Project Manager and SaaS Product Manager.

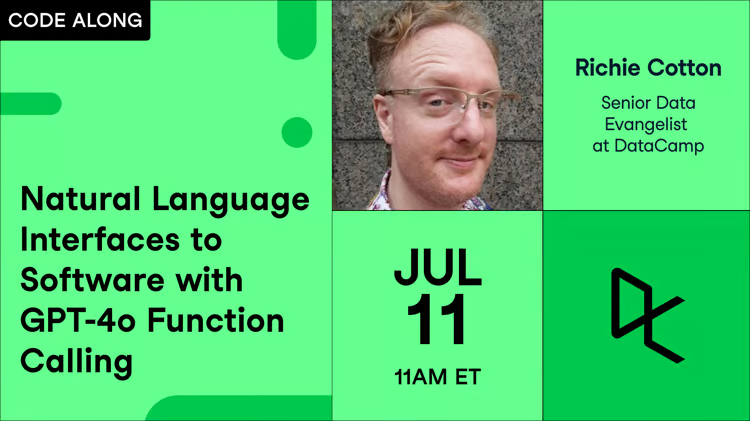

Richie helps individuals and organizations get better at using data and AI. He's been a data scientist since before it was called data science, and has written two books and created many DataCamp courses on the subject. He is a host of the DataFramed podcast, and runs DataCamp's webinar program.

Key Quotes

I believe that every machine interface is going to be voice-interactive. And we are going there, but we are not there yet. And I think that as long as we can integrate the jargon, as long as we can integrate accent, as long as we can handle the background noise, we are on the right path to achieve it.

We don't want to get to a point where we are talking to something and we don't even know if it is a person or a machine, right? So for that, at the same time, we're building models to identify those models, because you can replicate voices, but in the way they are generated you can still see some ways to differentiate between a human and a machine.

Key Takeaways

Understand the three key components of speech AI: ASR (Automatic Speech Recognition), NLU (Natural Language Understanding), and TTS (Text-to-Speech) to effectively implement and utilize speech AI technologies in your projects.

Utilize speech AI for real-time reporting and data capture in environments where hands-free operation is essential, such as manufacturing floors and quality control processes.

Consider the ethical implications and potential for misuse of voice cloning technologies, and implement measures to detect and prevent deepfake audio content to maintain trust and security.

Transcript

Richie Cotton: Hi, Alon. Hi Gill. Welcome to the show!

Gil Hetz: How are you doing?

Alon Peleg: Oh.

Richie Cotton: Life is good. It's really great to have you here. So, just to begin with, can you give me an overview of what speech AI is? Gil, do you want to start?

Gil Hetz: So, you know, everybody's talking about AI, which refers to artificial intelligence. Speech AI is usually the process of taking human speech, understanding it, and generating it. The key components here are usually the ASR model, the NLU, and the TTS. The ASR basically takes the speech itself and converts it into text. The NLU basically understands what you are saying. And then the TTS generates the response for you. So those are basically the three components that build the entire speech AI process.
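As a rough illustration of how the three components Gil lists could be chained, here is a minimal Python sketch. It is not aiOla's implementation: the `SpeechPipeline` class and the `toy_asr`, `toy_nlu`, and `toy_tts` functions are hypothetical stand-ins invented for this example, and they only pass text around so the sketch runs without any speech model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SpeechPipeline:
    """Chains the three stages named above; each stage is a plain callable."""
    asr: Callable[[bytes], str]   # speech audio -> unstructured text
    nlu: Callable[[str], dict]    # unstructured text -> structured meaning
    tts: Callable[[str], bytes]   # response text -> speech audio

    def respond(self, audio: bytes) -> bytes:
        text = self.asr(audio)
        meaning = self.nlu(text)
        reply = f"Recorded {meaning['item']} at {meaning['value']}."
        return self.tts(reply)


# Hypothetical toy stages (assumptions, not real models).
def toy_asr(audio: bytes) -> str:
    # Pretend the "audio" bytes are already the spoken words.
    return audio.decode("utf-8")


def toy_nlu(text: str) -> dict:
    # Take the first word as the item and the first number as the reading.
    words = text.lower().split()
    value = next(float(w) for w in words if w.replace(".", "", 1).isdigit())
    return {"item": words[0], "value": value}


def toy_tts(text: str) -> bytes:
    # Pretend that encoding the reply is the same as synthesizing it.
    return text.encode("utf-8")


pipeline = SpeechPipeline(asr=toy_asr, nlu=toy_nlu, tts=toy_tts)
print(pipeline.respond(b"fridge temperature 3.5"))
```

Keeping each stage as a separate callable mirrors the point made in the conversation: the ASR output is still unstructured text, and it is the NLU step that turns it into something a system can act on.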

Richie Cotton: So you can go in both directions between speech and text, and then you can also just understand what the speech is without necessarily having to switch into text as well. Okay, cool. So maybe we'll cover all three of these things.

Gil Hetz: When we're talking generally about speech to text, a lot of the time you are talking about Siri, which needs to understand what you're saying, Alexa, all the virtual assistants, call centers, healthcare, education. When was the last time that you called a call center and a human really answered? It never happens, right? So you speak to something, it becomes text, and then you can take this text and start working on it, and we're probably going to talk about that in depth in the next sections. But the idea is really taking the sound that I'm actually making and translating it into text. At this point, though, it's unstructured.

Richie Cotton: Okay, yeah. Call centers seem like an incredibly important use case of this. But it seems like anytime you want to transcribe speech, this is where automatic speech recognition, ASR, I think you said the acronym was, is going to come in useful.

Gil Hetz: Speech recognition, yes. In this conversation I would focus on the three main topics that are really the challenging ones. The first is the accent, right? I have my accent, you have your accent, Alon has his accent, and the model needs to understand all of them. Then there is the background noise: what happens when somebody is talking behind you?

And the last one, probably the most important one, which really changed the entire way that we look at and generate results, is the jargon: the specialized English, or any language that you are speaking, that a general ASR does not understand.

Richie Cotton: Okay. Yeah, so certainly a lot of challenges, and I definitely want to get into these in more depth. You mentioned things like accents and background noise. We'll cover those, but before that, what are the cases when you would generate speech from text?

Gil Hetz: So we talked about taking speech: you are talking to me and I'm generating the text. Text to speech is where you are basically taking the text itself and generating a response that sounds like a human. I type in a sentence, give it to the machine, and it basically generates speech.

So, you know, this covers a lot of the customer interactions that we have. It seems as if somebody is always sitting behind the scenes and talking to you, but I mean, the machine is talking to you. Then there is accessibility. Any virtual assistant usually gets the response first as text and then generates it in speech format.

Then there is education, training, e-learning. If you are trying to learn a language, you usually start from text, and then it goes to one of these machines that really pronounces things the way they should be pronounced. And for a lot of the content marketing ideas that people have, they just throw in text and it is spoken in a way that gets people's attention. That's what marketing is all about: getting your attention.

Richie Cotton: Absolutely. I have to say that the education example is really interesting, because if you're generating the speech from text with a machine, then it's going to be more consistent. That seems really important in the educational use case, because you want it to have exactly the same accent every time.

You want it to have the same cadence and so on, whereas humans are going to vary a bit in how they talk over time. Okay, success stories. So, Alon, can you talk us through some business examples?

Alon Peleg: Let's start with the food retail industry. They have a lot of regulations. For example, they need to check the temperature in every fridge at least twice a day, or once a shift. It takes them a lot of time. For one of our customers, it took around one hour. With voice, they just walk, or even run, through the store speaking, and the system captures the temperature of every fridge in the store. If there is a deviation from the regulation, an alert is immediately sent to the store manager so they can take immediate action and make sure they comply. So the time it takes today with a speech AI system like ours is reduced by 55%. For this industry, where the margin is maybe 3%, it's a huge saving, both in terms of the worker's time and in reducing the regulatory risk. And that's one example. The second example is from a different world. It's from a pharmaceutical manufacturer. We have two examples there. One is the technicians. The technicians are very busy, and they have a legacy system that sits on a PC somewhere in the warehouse or on the floor.

They are expected to report every issue they fix and to give as much description as possible. But they're very busy. So, okay, I fixed something, I fixed maybe 10 things in the last hour, and then I need to return to my office and type all the information about what I did.

We saw that reporting a task took them nine minutes, and the average number of words in their description was actually four. Four words, nine minutes to report. With voice, first of all, they report in real time. They don't need to go and type anything. They just speak to an application.

The application records exactly the description of what they did. They can even take a picture in real time of what they fixed. They can also scan a barcode, and then they know whether the part that they fixed is under warranty or not. And we found out that they cut the time to report tasks by half.

And the amount of data they capture is 11 words on average. So from four words to 11 words in 50 percent of the time. It's a huge value to the technician department. In the same factory, they also have quality control departments that do a changeover every time they change the products that are being manufactured.

They have a process. They reduced the changeover process from two hours to 14.5 minutes, and they did it mainly by reducing wait time. When there is an issue, first of all, again, they don't need to go to a corner of the floor to report it. The inspector comes immediately, because in real time they get: hey, there is a problem that was just reported on the production line.

Come and fix it now. So the wait time was reduced dramatically on every shift. The share of issues where they take pictures, which gives a record and visibility of what's going on on the floor, increased from 10% to 40%. So they reduced the time dramatically, by 89, almost 90%, and they increased the amount of data that is being captured.

Another customer is doing quality control for products. It took them 175 seconds to complete a check, and they were reporting an average of 4.5 returned products, meaning products that have an issue and need to be returned so that nothing damaged gets to the stores.

So they have to do very thorough work, complete the inspection, and maximize the number of issues they find in the items. They reduced the inspection time from 175 seconds to 57, and the number of returned items went from 4.5 on average to almost seven.

So the quality perceived by their customers will increase, and they can complete much more in every shift.

So it's a lot of value for this company. Then we can move to fleet management. One customer has about 100,000 cars to check every morning to verify that they will not get stuck on the road, because that is very costly. With paper, it took them 40 minutes to complete every inspection.

With voice, it takes them one minute. And this is the name of the check: it's the one-minute check.

Gil Hetz: Yeah, it's called the 60-second checklist. They have 40 items they need to go through in 60 seconds, and the only way to do that in 60 seconds, as Alon just said, is by using voice. Alon just went through four different cases. We have many more, but each one of them is really what we are doing at aiOla.

We bring speech AI to the fingertips of the people on the ground. That's what is important, and that's what is nice. The only thing you need is your smartphone: you speak to it, collect all the information, and really make it happen. And, you know, you have heard the numbers.

The numbers are crazy. The first time we saw them, we couldn't believe it: such an improvement just by switching the way that you collect the information.
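To put the figures quoted in this part of the conversation side by side, here is a small Python sketch that converts the before and after times into percentage reductions. The `percent_reduction` helper and the `quoted` dictionary are introduced only for this illustration, and the inputs are the numbers as spoken, so small differences from the rounded percentages mentioned by the speakers are to be expected.

```python
def percent_reduction(before: float, after: float) -> float:
    """Share of the original time that was saved, in percent."""
    return (before - after) / before * 100


# (before, after) pairs as quoted in the conversation, converted to seconds.
quoted = {
    "changeover (2 h -> 14.5 min)": (2 * 60 * 60, 14.5 * 60),
    "product inspection (175 s -> 57 s)": (175, 57),
    "fleet check (40 min -> 60 s)": (40 * 60, 60),
}

for name, (before, after) in quoted.items():
    print(f"{name}: {percent_reduction(before, after):.1f}% less time")

# Technician reports: 4 words on average before, 11 words after.
print(f"words per report: {11 / 4:.2f}x")
```

On these inputs the changeover comes out a little under the "almost 90%" mentioned above, which is consistent with a spoken figure being rounded.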

Alon Peleg: Let me finish with two examples, just to give the audience something to think about. Think about the cultural change that you're making in an enterprise when you allow every employee to report a safety issue by voice. You have your own internal application. Say I am an airline employee.

I'm going through my gate and I see some coffee on the floor. I don't need to open a form or write a letter. I just open my internal application and report: hey, I'm in New York, and I see a glass of coffee on the floor at gate 90 D or C.

It's captured and immediately sent. It's actually changing the culture of the company to one where everyone can and should very easily report a safety issue that could put our brand at risk. So let me end with that. I have many more, but let's keep some time for other questions.

Richie Cotton: Yeah, lots of examples there, and I'm sure there are many more. But I think the consistent theme here, it sounds from these examples, is that these are places where you haven't got hands on keyboards. I guess with my day job, I spend a lot of time at a laptop, so I don't really need speech AI so much.

But if you've not got your hands free, if you're in a shop or a factory or some sort of manufacturing place, then yeah, you want to do things without having to go and find somewhere to type.

Alon Peleg: Say I have a big presentation tomorrow for investors, right? I've written all the points I want to cover, and I know that I have a two-hour commute back home, and I cannot open a paper and practice, right? In a few seconds I change the text to speech and use those two hours to just listen to what I want to say tomorrow.

Think how useful it is even for us, who are mostly using our PCs.

Richie Cotton: Yeah, I like that. If you've got a script or something, have it turned into speech, then listen back to it and decide: does this sound right or not? It's much easier to determine whether it's good or not compared to just reading it. Okay, that seems like another cool use case.

Okay. Since we talked about shop-floor use cases, what happens when you've got background noise? How do you deal with that? Is it still a problem?

Gil Hetz: I think, as I mentioned, there are three main challenges, and background noise is definitely one of the things that you need to make sure your model can handle. There are different ways to do that. I'll talk in general about our model for a second, and then we can talk about how other people try to do it. As I said, I think the number one problem that we are actually dealing with is the jargon. The problem is that it's not just the English that we are speaking right now: it's English in a specific industry, at a specific site, in a specific slang. It can differ from one site to another, even within the same company.

And that's the number one problem that we are dealing with, because for general spoken English, off-the-shelf models can do a good job in terms of the word error rate that they produce when they transcribe to text.

Then, when you go into the jargon, you suddenly get to about a 40, maybe 60% error rate, which you cannot live with when you are trying to report on a checklist that is really important. That's something you cannot accept: less than 90% accuracy is not good.

It's zero or one. That's the first thing we are dealing with, and that's why we actually have two models working together. One is the ASR model, which is general, and the second is keyword spotting, where we inject all the jargon into the model. It is zero-shot: it basically looks at the way you generate the word itself, the acoustic signature of the pronunciation.

So even if I use a word that makes no sense in English, as long as I type it in in the right format, I will get it from this model.

The second one is the accent and background noise. When I'm dealing with an area with very high-decibel noise, I really need to include it when I fine-tune the model. The way we deal with it is that we have our own TTS model that can incorporate the background noise. We get a sample of the background noise.

We have the text that we are generating, and we generate the voice with respect to the background noise. If you just take the background noise and what you generate and do the superposition, it's not going to be good enough, because when there is loud background noise behind me, I talk louder, right?

This is the reaction that I have because there is background noise: I increase the decibels that I generate when I speak. So that's what our model does. And then we use that to fine-tune the ASR very quickly. So that's how we deal with the background noise and the jargon.

And the last thing is the accent. Again, for that, we have voice cloning in the TTS. It takes your own voice, includes those specific samples, and generates the response. That's how we deal with the different challenges that we have. We've seen them in different languages, actually, in languages that are not English.

We see a huge improvement because those models, to start with, are not good enough, but when you improve them, you get much better results.
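For readers unfamiliar with the "superposition" Gil mentions, here is a minimal Python sketch of that naive baseline: scaling a noise recording to a chosen signal-to-noise ratio and adding it to clean speech. This is the approach he describes as not good enough on its own, because it ignores that people speak louder in noisy places, and it is not aiOla's method. The `rms` and `mix_at_snr` helpers and the synthetic tones are assumptions made for this example.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Naive superposition: scale the noise to a target SNR, then add it."""
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]


# Synthetic stand-ins: a 220 Hz tone for "speech", a 50 Hz hum for "noise".
rate = 8000
speech = [math.sin(2 * math.pi * 220 * t / rate) for t in range(rate)]
noise = [0.3 * math.sin(2 * math.pi * 50 * t / rate) for t in range(rate)]

mixed = mix_at_snr(speech, noise, snr_db=5)

# Recover the added noise and confirm the mix really sits at the target SNR.
added = [m - s for m, s in zip(mixed, speech)]
achieved_snr_db = 20 * math.log10(rms(speech) / rms(added))
print(f"achieved SNR: {achieved_snr_db:.1f} dB")
```

The speech samples are left untouched here, which is exactly the limitation raised in the conversation: a more realistic augmentation would also change how the speech itself sounds under noise.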

Richie Cotton: Okay, so lots of different challenges there. You talked about background noise.

Maybe let's start with the jargon one, because you said that was the biggest issue. Certainly once you start talking about very technical terms, quite often these off-the-shelf models just don't have a clue what you're talking about. Whenever we transcribe these DataFramed podcast episodes, anytime we have technical terms, it's just absolute nonsense.

If you know what jargon you're going to be talking about, can you just provide a list of words and say, these are the things that we're going to be talking about? Does that improve things? Or what do you need to do to get better results?

Gil Hetz: Exactly. The way we do that is we go to a specific site, we collect the forms that they have, and we extract the keywords that they're using, the jargon itself: the names of the items, the names of the people, sometimes the names of the events that they're reporting on. Then we include them in our keyword-spotting model.

This model is in charge of integrating the jargon itself into the general ASR. We actually have a specific model that is zero-shot. Zero-shot means that you provide me the list, no matter the language, no matter the size of the list, and I'm going to reproduce those words.

I'm going to tell the ASR: in this sentence, I think you used this word. That could be a word that does not make any sense in English or in any language. It's just going to be the acoustic signature of the pronunciation. That goes into the ASR model and increases the probability, because all those models are about probability.

What are the chances that I used this word? This is going to increase the probability for a word that actually came from the jargon that you're speaking.
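The idea of raising the probability of jargon words can be illustrated with a simple rescoring step over candidate transcripts. This Python sketch is only an analogy for what Gil describes, not aiOla's keyword-spotting model: it works on text hypotheses rather than acoustic signatures, and the `rescore` function, the `boost` value, the scores, and the example sentences are all made up for illustration.

```python
def rescore(hypotheses, jargon, boost=2.0):
    """Pick the best transcript after rewarding jargon terms.

    hypotheses: list of (text, log_probability) pairs from a general ASR.
    jargon: set of lowercase site-specific terms supplied as a plain list.
    boost: log-probability bonus added for every jargon term found.
    """
    rescored = []
    for text, log_prob in hypotheses:
        hits = sum(1 for word in text.lower().split() if word in jargon)
        rescored.append((text, log_prob + boost * hits))
    return max(rescored, key=lambda pair: pair[1])[0]


# Hypothetical candidates: the general model slightly prefers the wrong one.
candidates = [
    ("top up the lie call tank", -3.2),
    ("top up the glycol tank", -4.1),
]

print(rescore(candidates, jargon=set()))        # no list supplied
print(rescore(candidates, jargon={"glycol"}))   # site jargon supplied
```

Because the word list is just an input, changing sites or languages only means supplying a different set of terms, which is the property the speakers call zero-shot.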

Alon Peleg: It's important to say that it's not a process of generating such a model.

Gil Hetz: Yeah, it's zero-shot, meaning you provide me the list and you've got your own model. You provide me the list in English, you get it in English. You provide me a list in Spanish, you get it in Spanish.

Richie Cotton: That's very cool. So it's not some kind of extensive process where I need to go and fine-tune my own model. It's just: these are the words.

Gil Hetz: Exactly. I did not touch on it until now, but what people generally do is take examples and fine-tune their own models with some examples from the jargon. Now, this process takes time, and usually you need to get some data.

You need to generate data. And that's why I think our solution, in that sense, is the advantage that we have in dealing with jargon.

Richie Cotton: So it sounds like jargon is a largely solved problem. The next one you mentioned was accents.

There are a lot of cities with very strong accents. Glasgow is maybe the most common example of an accent that foreigners find hard.

Gil Hetz: Yeah. I think English is actually a great example, because there are so many people who speak English, but in so many different accents. What we do is try to collect samples from people, and we don't need long samples. We just need samples of a few seconds from specific people at the site.

Then we can generate any text. Because we have our own TTS model that takes this audio of me speaking together with a text, I can generate many different examples to fine-tune the model for a specific accent.

Alon Peleg: An example is Quebec French, which is difficult. We have evidence that people from France don't understand the French spoken in Quebec. We have customers there, and we are reaching a very, very high percentage of accuracy.

Gil Hetz: Yeah, you can say 98% accuracy. That's pretty much the number, which is phenomenal for a language that is not that common in terms of the accent.

Richie Cotton: So 98 sounds amazing. Is that good enough for every case? Are there some cases where you need close to a hundred percent, or is 98 good enough for most purposes?

Gil Hetz: Usually 98 is good enough. So again, you know, when we are talking about speech AI, we are talking about three different components, or maybe two main components. The first one takes the speech and converts it to unstructured text. This is the ASR model, which is getting 98%. And then you need another model, the NLU model, that processes the information and puts it in a structured form so you can really use it to make decisions.

Now for us, from what we have seen, when we get north of 95% we are usually in a good position to move on to processing the data. It's just like you and me speaking. I'm sure that in this specific conversation I made a couple of mistakes, but you could still understand what I said. You could understand it because you don't need a hundred percent accuracy: you understand the general language and you know the context of the conversation.

That's pretty much the same process that we have when we're talking about models.
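The accuracy and error-rate percentages discussed here are commonly derived from word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the transcript into the reference, divided by the number of reference words. The Python sketch below shows that standard calculation. The speakers do not say exactly how their 98% figure is measured, so treating accuracy as one minus WER is an assumption, and the example sentences are invented.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cur.append(min(
                prev[j] + 1,                           # deletion
                cur[j - 1] + 1,                        # insertion
                prev[j - 1] + (ref_word != hyp_word),  # substitution or match
            ))
        prev = cur
    return prev[-1] / len(ref)


reference = "check the glycol tank on line two"
hypothesis = "check the lie call tank on line two"

wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}, accuracy (1 - WER): {1 - wer:.1%}")
```

A single misheard jargon term costs two errors in a seven-word sentence here, which gives a feel for how quickly error rates climb when a general model meets site-specific vocabulary.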

Richie Cotton: I suppose that's one of the good things about it: you can stutter a bit, say the wrong word sometimes, and it still works.

Gil Hetz: Exactly. I'm.

Richie Cotton: yes. Yeah. I.

Gil Hetz: Everybody is using it, and that's okay. That's another way to get AI to your fingertips. When you're typing something into ChatGPT, sometimes you know you have mistyped something. Do you go back and change it? Not really. I mean, you just send it. It'll understand. I've done it many, many times.

I made a typing mistake and I still got what I needed. So that's what I'm saying.

Richie Cotton: Absolutely. Yeah, I think my ChatGPT instance knows very well how badly I type. So it's good that with all these mechanisms the AI is smart enough to correct mistakes. Wonderful. Alright, so related to accents, I suppose the other thing is how well do different languages work?

I mean, English is spoken so much, and there's so much data available, that it works pretty well. I don't know how well it works for other languages.

Gil Hetz: So with English, of course, the more examples you have, the better accuracy you get. As I said at the beginning, we know how to bring the jargon to a very, very high level of accuracy in different languages, and our ability to run a quick fine-tune on the specific examples that we generate from the field brings us to very good results.

We had that in Chinese. We had that in Portuguese. We had that in Quebec French, which is different from the French spoken in France. So we have seen that. And the fact that we understand the jargon allows us to get very high accuracy in languages that are not English.

Richie Cotton: So most of the languages you mentioned are, I guess, among the top 10 most widely spoken languages in the world. How deep into less widely spoken languages can you get before it stops working?

Gil Hetz: Yeah, yeah. We also had some languages that are really not easy, and we got very, very good results there too. Hebrew is another one.

Richie Cotton: You've got a vested interest.

Gil Hetz: Yeah. As I said, you know, when you are working in a systematic way that includes the jargon, because you know what you're looking for, and that includes the background noise, you can fine-tune to the way that people really pronounce the language itself.

You can get very good results, and that's what we do. I mean, this is the magic that we bring.

Richie Cotton: That's very cool. So it's opening up to a lot more languages. Speech AI is going to become accessible to a lot more people around the world once all the different languages work. And since translation is one of the big use cases, having it work in different languages can be incredibly important.

Actually, do you want to talk me through that? Are there some good use cases around translation here, going from speech in one language to speech in another?

Gil Hetz: First of all, if you're trying to fine-tune your models, you definitely want your TTS to be in very good shape, because you are going to use the synthetic examples that it generates to fine-tune your ASR models. Now, when you get to the process of translation, this usually happens in the middle box, which is the NLU process.

The task you're trying to solve is to take this specific sentence and convert it into the same sentence in a different language, and then run it through the TTS model to generate the translated speech. As Alon just mentioned, we are working with companies all around the world.

At the end of the day, they are collecting information from different countries, and they need to save it in a very systematic, general way. So a lot of the time they need to translate everything into English, even though people are speaking different languages.

And we have this capability, which basically happens in the NLU model. I'm still using the ASR to understand the people on the ground. Then, the same way it happens in your head, I translate it to the other language and process it in the language in which I want to send the information.

Alon Peleg: Take a car manufacturer in Singapore: it will have employees from all over. They can speak natively in their own language, and in the backend the data will be captured in English, or Korean, or whatever they decide.

Richie Cotton: Yeah, this just seems incredibly useful. I was thinking that if I go on vacation somewhere, I just want to be able to say things and have them automatically translated into whatever the local language is, and vice versa, so I can understand what's going on. That's the even more important part.

Gil Hetz: Just to comment on that, this is what is actually happening in real time: it goes through the ASR in your language, the NLU basically translates it to the language that you want, and then it runs through the TTS to generate the voice. And if you have an example of your voice in your TTS, it's even going to pronounce it in your own voice. The accent is probably going to be problematic unless you can provide it.

But in general, it is going to be your voice.

Richie Cotton: Okay, that's very cool. That does sound a lot easier than spending hours on Duolingo trying to learn the other language: you just have it automatically converted. Okay, cool. So on that last note, you mentioned having my own voice there. It does seem like more natural-sounding voices have become a thing in the last year.

Gil Hetz: Yeah, absolutely. I'm going to focus on the last block, which is the TTS. I guess everybody has heard about voice cloning: basically taking a voice that is natural and using it in TTS models. You condition your model, the TTS, on the text that you're providing and on the voice audio that you are providing, and then generate the response.

Again, I'm going back to the call centers that we all talk to when we need some service. If I go back five or 10 years, it was too robotic, you know. It was like, "Hey, how are you doing? Please press one." And now when you talk to them, you can even hear the way they break up the words and pause to think over words when they're speaking. So I think there is a huge improvement there. We spent a lot of time on creating our own model, where you can integrate that specific voice or any voice, including background noise, because we needed it to train the models, but also because in general people want to use that and have it as part of what we are producing.

So we have made huge improvements in the last few years, and I think that very soon we're going to be in a position where it will be hard to differentiate a person from a model when it comes to TTS.

Richie Cotton: Okay, so we're going to get to the point where it is difficult to tell whether it's a real human voice or whether it's AI-generated. So I suppose this leads to a natural problem in that you've got voice cloning and deepfakes, and it's unclear what happens there.

So do you have any concerns around this?

Gil Hetz: We all should have some concerns about that, you know, in the general view, because we don't want to get to a point where we are talking to something and we don't even know if it is a person or a machine, right? So for that, at the same time, we're building models to identify those models, because you can replicate voices, but in the way they are generated you can still see some ways to differentiate between a human and a machine. Think about the emotions, right? Or breathing, or natural flow. We know how to generate models, and we know what kind of patterns to expect from models compared to people. So that's the kind of thing that models can help us with. At the same time that you are producing those models that generate great voices,

we need to build models to make sure that we can identify the voices that were generated by a machine.

Richie Cotton: That's interesting, that you then have to have, I guess, forensics models to determine what generated the thing. So you talked about things like: is there a breathing noise in the background? Okay. Can you do fingerprinting on this? I know with images you can add a fingerprint

to say this was AI-generated, and it's just slight changes to the pixels that humans can't detect but a machine can. So do you do the same with voice? Can you put something in there to say it was generated?

Gil Hetz: That's what I'm trying to say. I mean, at the end of the day, there is a very clear way in which we generate those voices, so we can identify when and where we generated them. To you as a person, it could sound exactly the same, but a model whose only purpose is to identify whether this was generated by a real person or a machine

will probably do better than we do as people. So when you get a call, and you are not sure if this is a person or not, never ever give your credit card.

Richie Cotton: That's a very good idea. You know, I used to work in debt collection on the phone, just asking people for money, and almost everyone would just give out their credit card details, whatever. So yeah, it's tricky stuff. I'm wondering whether there are current limits to how well speech AI can work.

So we talked about it becoming very natural sounding. Are there any areas of speech that are difficult for AI to replicate?

Gil Hetz: We are dealing with many challenges, specifically the three that I talked about. I think background noise is tricky, specifically where there is no clear signature or pattern to the background noise. That's the kind of thing that we were having problems with at the beginning. But if you can build a platform that collects information and learns the same way that we do as people, right?

We try to learn from mistakes and improve ourselves. Then you're probably going to get to a point where the models that you are generating are, I would say, better than what people can understand.

Richie Cotton: That's kind of cool. I mean, it sounds like there are challenges still left to be faced, but most of them sound like they are at least solvable in the near future.

Gil Hetz: For us, I think we're at exactly the right time. The potential of voice is not there yet. At the end of the day, I believe that every machine interface is going to be voice. And we are going there, but we are not there yet. And I think that as long as we can integrate the jargon, as long as we can integrate accents, as long as we can handle the background noise, we are on the right path to achieve it.

Richie Cotton: Interesting. Every machine will have some sort of speech or voice interface. I'm actually slightly concerned about what happens in open plan offices. So, just for context, DataCamp has an open plan office. Joe from sales sits near me, and he is very loud. If he starts talking to his AI all day, I'm gonna murder him. So what do you do in that kind of context, do you think?

Is it going to take over offices as well?

Gil Hetz: I don't think so. I think that we, as people who work in offices, are going to learn how to take advantage of it and improve ourselves. But I don't think it's going to take over what we do. You know, 20 or 30 years from now, it's not about writing the model. It's more about how you are operating your models to make sure that they do the things that you want them to do.

Richie Cotton: So basically, don't just put speech everywhere. Just make sure that it's in a sensible place where it's actually going to benefit you, in cases when you don't want to type stuff and want to communicate by voice instead. Alright. I guess, just to wrap this up, do you have any advice for businesses that want to make more use of speech AI, then?

Alon Peleg: For any change you are making in your organization, you need a champion who can run the change. What does that mean? It means that he or she needs to understand, for example, the use cases to prioritize, where he or she will be able to show value and gain confidence.

This champion will need to pilot first: before he runs, he needs to walk. The champion will need to make sure that there is budget because, hey, you are building a new operation. The champion needs to decide whether to replace existing systems or to integrate voice with them.

There are a lot of decisions that need to be made, and they cannot be random decisions. These are decisions that the organization is taking. You need to involve the relevant employees who will be impacted, and you need to show them why we are making the change. You need to choose the right processes, to allocate budget, and to analyze the ROI and the KPIs.

So, it's a program. To make it successful, you need the right planning and the right champion.

Richie Cotton: Okay, wonderful. So this sounds like it's going to be like other digital transformation programs. You just really need to be mindful about, like, what are your goals? How are you going to measure performance? Who needs to be involved, and all that kind of stuff. That's cool. Alright. Is there anything that you are excited about in the world of speech AI?

Alon Peleg: I'm excited, and the whole company, you see, everyone is excited, because we are making something big. It's the right timing. We see the usage growing dramatically year over year. We see agents coming and joining this big wave, and agents will voice...

We are going to change the way employees are using machines and reporting data. We are going to be part of a change in how unstructured data is handled across industries that, when you speak about AI, are so, so far away. We are going to work with those industries, collect unstructured data, and give real-time insights that they never had before.

We're going to bring voice AI to every employee. It can be an employee in the supermarket, an employee in the airline, an employee in automotive. Every employee will have it.

Maybe we are in the late beginning, but we are feeling the energy in the market. So every employee here in our company is feeling that we are making a big wow and are part of a big story. And yeah.

Richie Cotton: Yeah, it just sounds like exciting times. And Gil, what are you most excited about?

Gil Hetz: For me, this is the way of the future. At the end of the day, as I said, every interaction with a machine is going to be voice first, and we are there for the revolution we are going to have, the AI revolution.

Richie Cotton: Yeah, definitely exciting times, and it seems like there is a big change in progress in the way that humans and machines interact. Wonderful. Thank you for your time, Alon. Thank you for your time, Gil.