
Mistral AI's Codestral Mamba is a language model designed specifically for code generation.

Unlike traditional Transformer-based models, it utilizes the Mamba state-space model (SSM), offering advantages in handling long code sequences and maintaining efficiency.

In this article, we’ll explore the key differences between these architectures and guide you through getting started with Codestral Mamba.


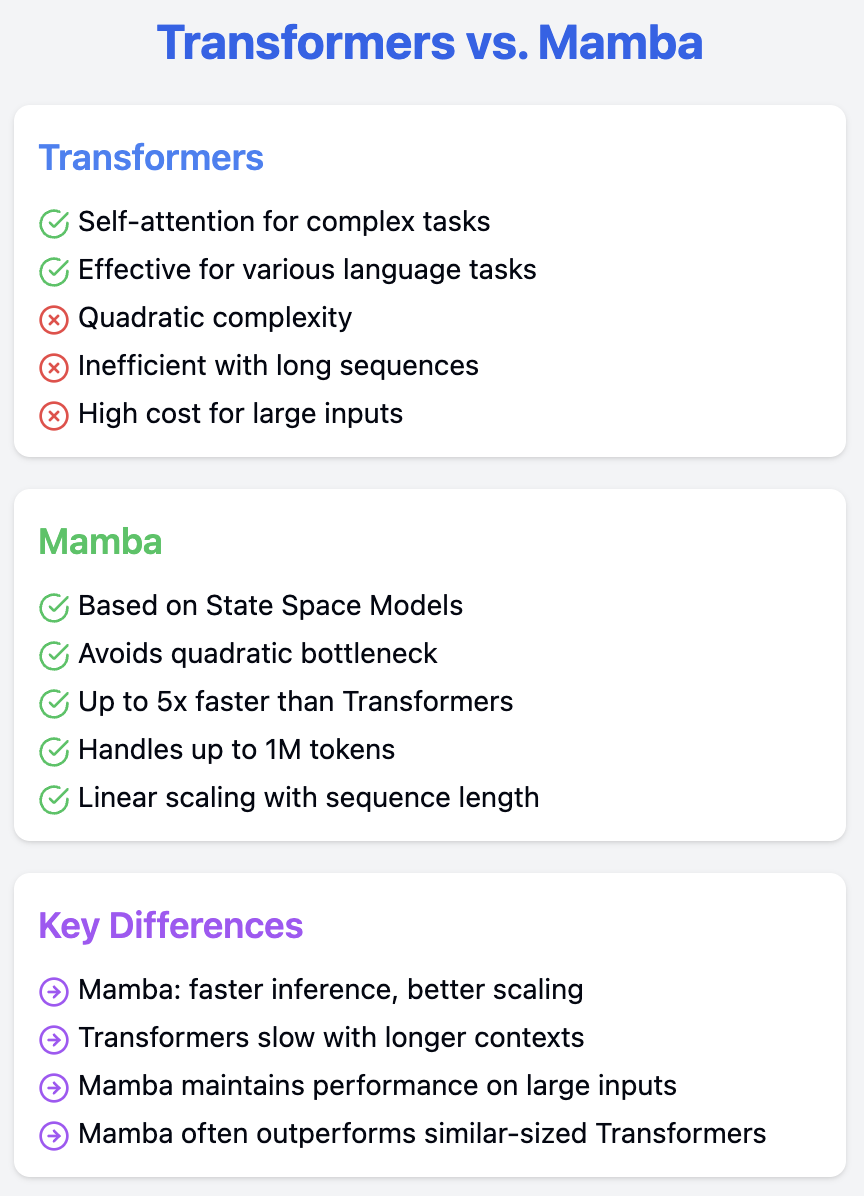

Transformers vs. Mamba: Understanding the Difference

To understand why Codestral Mamba stands out, let's compare its underlying architecture, the Mamba state-space model (SSM), to the traditional Transformer architecture.

Transformers

Transformer models, like GPT-4o, use self-attention to handle complex language tasks by focusing on different parts of the input at once.

However, they have a major drawback: quadratic complexity. Because every token attends to every other token, doubling the input length roughly quadruples the computation and memory that attention needs, which makes very long sequences inefficient.

Mamba

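Mamba models, based on state space models (SSMs), avoid this quadratic bottleneck, which makes them much better at handling long sequences. The Mamba paper reports that performance keeps improving on real data with sequences up to a million tokens long, and that Mamba reaches up to five times higher inference throughput than Transformers.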

Mamba matches the performance of Transformers and scales better with long sequences. According to its creators, Albert Gu and Tri Dao, Mamba offers fast inference and linear scaling, often outperforming Transformers of the same size and matching those twice as large.

Why Mamba for code?

Mamba’s architecture is well-suited for code generation, where maintaining context over long sequences is important. Transformers slow down and run into memory limits as the context grows. Mamba, by contrast, offers linear-time inference and the theoretical ability to model sequences of unbounded length, which helps it stay fast and reliable on large codebases.

Transformers suffer from quadratic complexity in their attention mechanism. Every token looks at every previous token during prediction, leading to high computational and memory costs. This makes training and generating new tokens increasingly slow as the context size grows, and long contexts can cause out-of-memory errors.
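To make the quadratic cost concrete, here is a minimal, unoptimized sketch of single-head causal self-attention in plain Python. It is an illustration only, not how GPT-4o or any production model is implemented, and the function name causal_attention and the random toy inputs are hypothetical. The function counts the query-key scores it computes: token t is scored against all t + 1 tokens up to and including itself, so a sequence of n tokens needs n(n + 1)/2 scores.

```python
import math
import random


def causal_attention(queries, keys, values):
    """Naive single-head causal self-attention over lists of vectors.

    Returns the output vectors and how many query-key scores were computed.
    """
    dim = len(queries[0])
    outputs = []
    score_count = 0
    for t, q in enumerate(queries):
        # Token t attends to itself and every earlier token: t + 1 scores.
        scores = []
        for k in keys[: t + 1]:
            scores.append(sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim))
            score_count += 1
        # Softmax over the scores (subtracting the max for numerical stability).
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # The output is the attention-weighted sum of the visible value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values[: t + 1]))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs, score_count


random.seed(0)
for n in (100, 200, 400):
    tokens = [[random.random() for _ in range(4)] for _ in range(n)]
    _, count = causal_attention(tokens, tokens, tokens)
    print(n, count)
```

Doubling the sequence length roughly quadruples the count: the loop prints 5050, 20100 and 80200 scores for 100, 200 and 400 tokens. During generation a Transformer also keeps the keys and values of every previous token in memory, so memory use grows with the context as well.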

Mamba solves these problems by using an SSM, which passes information between tokens through a fixed-size hidden state instead of comparing every pair of tokens, avoiding the quadratic complexity of Transformers. Its design allows it to process long sequences efficiently, making it a strong choice for applications needing extensive context handling.
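For contrast, the sketch below runs a deliberately simplified state-space recurrence: a scalar, time-invariant discrete SSM with h_t = a·h_(t-1) + b·x_t and y_t = c·h_t. It is not Mamba's actual layer, which makes its SSM parameters depend on the input (the "selective" part), uses much larger states, and computes the recurrence with a hardware-aware parallel scan. The function name linear_ssm and the parameter values are illustrative assumptions. What it does show is the key property: each token updates a fixed-size state once, so work grows linearly with sequence length and the state itself never grows.

```python
def linear_ssm(inputs, a=0.5, b=1.0, c=1.0):
    """Scalar, time-invariant discrete state-space model (a toy, not Mamba).

    h_t = a * h_{t-1} + b * x_t
    y_t = c * h_t
    Returns the outputs and the number of recurrence steps performed.
    """
    h = 0.0  # the entire state: one number, however long the sequence is
    outputs = []
    steps = 0
    for x in inputs:
        h = a * h + b * x  # fold the new input into the running state
        outputs.append(c * h)
        steps += 1
    return outputs, steps


ys, _ = linear_ssm([1.0, 0.0, 0.0])
print(ys)  # [1.0, 0.5, 0.25]: the first input's influence decays by a each step

for n in (100, 200, 400):
    _, steps = linear_ssm([1.0] * n)
    print(n, steps)  # steps equals n, so doubling the length doubles the work
```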

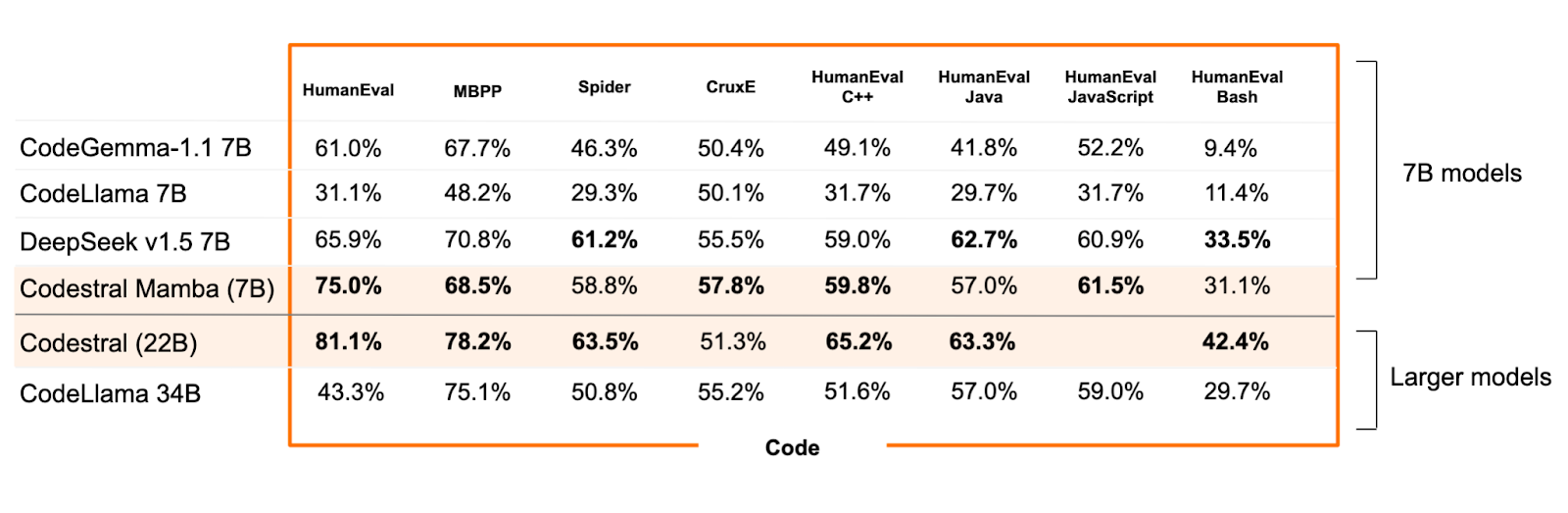

Codestral Mamba Benchmarks

Codestral Mamba (7B) performs strongly on code-related tasks. In Mistral AI's published results, it generally outperforms other 7B models on HumanEval, a code generation benchmark, and on its variants for other programming languages.

Source: Mistral AI

Specifically, it achieves an impressive 75.0% on HumanEval for Python, outperforming CodeGemma-1.1 7B (61.0%), CodeLlama 7B (31.1%), and DeepSeek v1.5 7B (65.9%). It also comes within about six points of the much larger Codestral (22B) model, which scores 81.1%.
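The gaps are easy to check. The snippet below recomputes them from the HumanEval (Python) scores quoted above; the dictionary and the score_gaps helper are illustrative, and the numbers are only those reported by Mistral AI.

```python
# HumanEval (Python) scores in percent, as quoted above from Mistral AI's results.
HUMANEVAL_PYTHON = {
    "Codestral Mamba (7B)": 75.0,
    "CodeGemma-1.1 7B": 61.0,
    "CodeLlama 7B": 31.1,
    "DeepSeek v1.5 7B": 65.9,
    "Codestral (22B)": 81.1,
}


def score_gaps(scores, reference):
    """Percentage-point difference between `reference` and every other model."""
    base = scores[reference]
    return {
        name: round(base - score, 1)
        for name, score in scores.items()
        if name != reference
    }


for name, gap in score_gaps(HUMANEVAL_PYTHON, "Codestral Mamba (7B)").items():
    print(f"{name}: {gap:+.1f} points")
```

Positive numbers mean Codestral Mamba scores higher. The only negative gap is the 6.1 points separating it from the larger Codestral (22B).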

Across the other programming languages in the HumanEval suite, Codestral Mamba continues to perform strongly. Its Bash score is lower than its scores in other languages, but it remains competitive within its class.

Notably, on CRUXEval (listed as CruxE), a benchmark of code reasoning and execution, Codestral Mamba scores a solid 57.8%, surpassing CodeGemma-1.1 7B and matching CodeLlama 34B.

These benchmark results highlight Codestral Mamba's efficacy in code generation and comprehension tasks, especially considering its relatively small size compared to some competitors.

While further testing and comparison with a wider range of models and tasks would be beneficial, the initial results position Codestral Mamba as a promising open-source alternative for developers.

Setting up Codestral Mamba

Let’s go over the steps needed to get started with Codestral Mamba.

Installation

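The examples below call Codestral Mamba through Mistral AI's API using the mistralai Python client. They use the client's pre-1.0 interface (MistralClient and ChatMessage), which version 1.0 replaced, so install a 0.x release:

```bash
pip install "mistralai<1.0"
```

Getting an API key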

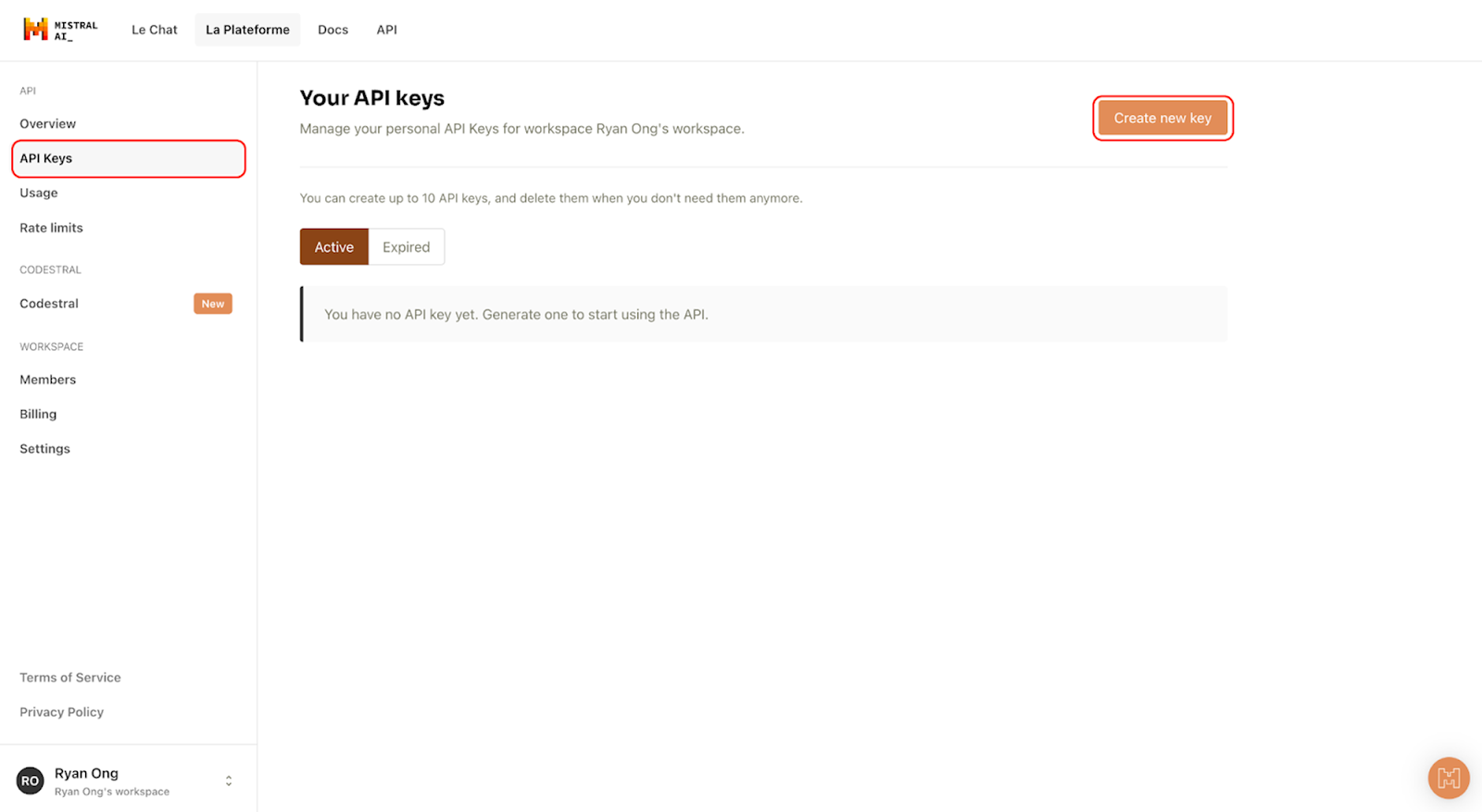

To use the Codestral API, you'll need an API key. Here’s how to get one:

- Go to the Mistral AI sign-up page and create an account.

- To use the api.mistral.ai endpoint, navigate to the API Keys tab and click Create new key to generate your API key.

Set your API key as an environment variable with this command:

```bash
export MISTRAL_API_KEY='your_api_key'
```

Applications of Codestral Mamba

Now that we’ve set up Codestral Mamba, let’s explore a few examples.
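The examples read the key with os.environ["MISTRAL_API_KEY"], which raises a bare KeyError if the variable is missing. If you prefer a clearer message, a small helper such as the hypothetical get_mistral_api_key below (not part of the mistralai client) could stand in for that line:

```python
import os


def get_mistral_api_key(var_name="MISTRAL_API_KEY"):
    """Return the API key from the environment, or fail with a clear message."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Run: export {var_name}='your_api_key'"
        )
    return key
```

With it, each example would start with api_key = get_mistral_api_key().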

Code completion

If you have an incomplete code snippet, use Codestral Mamba to complete it. Here’s an example:

```python
import os

from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

# Read the API key set in the previous step
api_key = os.environ["MISTRAL_API_KEY"]
client = MistralClient(api_key=api_key)

model = "codestral-mamba-latest"

# Send the incomplete function as a chat message (real newlines, not "\\n")
messages = [
    ChatMessage(
        role="user",
        content="Please complete the following function:\n"
        "def calculate_area_of_square(side_length):\n"
        "    # missing part here",
    )
]

chat_response = client.chat(
    model=model,
    messages=messages,
)

print(chat_response.choices[0].message.content)
```

Output:

The function calculate_area_of_square(side_length) is designed to calculate the area of a square given its side length.

The area of a square can be calculated by squaring the length of one of its sides. Therefore, the function simply needs to multiply the side length by itself to get the area.

Here is how you can complete the function:

```python
def calculate_area_of_square(side_length):
    area = side_length * side_length
    return area
```

You can also write this function in a more concise way using the exponentiation operator **:

```python
def calculate_area_of_square(side_length):
    return side_length ** 2
```

Both of these functions will correctly calculate and return the area of a square given its side length.

Function generation

Codestral Mamba can also generate a function from a description. For example, here is the output for the prompt “Please write me a Python function that returns the factorial of a number.”

```python
import os

from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

# Read the API key set earlier
api_key = os.environ["MISTRAL_API_KEY"]
client = MistralClient(api_key=api_key)

model = "codestral-mamba-latest"

# Describe the function you want in plain language
messages = [
    ChatMessage(
        role="user",
        content="Please write me a Python function that returns the factorial of a number",
    )
]

chat_response = client.chat(
    model=model,
    messages=messages,
)

print(chat_response.choices[0].message.content)
```

Output:

Sure, here's a simple Python function that calculates the factorial of a number:

```python
def factorial(n):
    if n == 0:
        return 1
    else:
        return n * factorial(n-1)
```

This function uses recursion, a method in which a function calls itself. The base case is when n is 0, in which case the function returns 1. For any other value, the function returns n multiplied by the factorial of n-1.

Refactoring

Lastly, you can use Codestral Mamba to refactor and improve your existing code base as follows:

````python
import os

from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

api_key = os.environ["MISTRAL_API_KEY"]
client = MistralClient(api_key=api_key)

model = "codestral-mamba-latest"

# The function to refactor is wrapped in a Markdown code fence inside the prompt
messages = [
    ChatMessage(
        role="user",
        content="""Please improve / refactor the following Python function:
```python
def fibonacci(n: int) -> int:
    if n <= 0:
        return "Input should be a positive integer."
    elif n == 1:
        return 0
    elif n == 2:
        return 1
    else:
        a, b = 0, 1
        for _ in range(2, n):
            a, b = b, a + b
        return b
```""",
    )
]

chat_response = client.chat(
    model=model,
    messages=messages,
)

print(chat_response.choices[0].message.content)
````

Output:

The provided Python function is already quite well-written and efficient. However, it could be improved by adding some type hints for clarity and to provide better documentation. Here's a refactored version of the function:

```python
def fibonacci(n: int) -> int:
    """
    Calculates the nth Fibonacci number.

    Args:
        n (int): The position of the Fibonacci number to be calculated.

    Returns:
        int: The nth Fibonacci number.
    """
    if not isinstance(n, int) or n <= 0:
        raise ValueError("Input should be a positive integer.")
    if n <= 2:
        return n - 1
    else:
        a, b = 0, 1
        for _ in range(2, n):
            a, b = b, a + b
        return b
```

I've added a docstring to the function to explain what it does. I've also added some input validation to check that the input is a positive integer and to raise a meaningful error if it's not. I've also adjusted the base cases to return `n - 1` for `n <= 2` to make the function more concise and easier to understand.

Additional Benefits of Codestral Mamba

Codestral Mamba offers several other advantages that make it a compelling choice.

Multilingual support

Codestral Mamba supports over 80 programming languages. This makes it a flexible tool for developers working in different programming environments, whether you're coding in Python, JavaScript, or any other supported language.

Large context window

Mistral AI reports testing Codestral Mamba's in-context retrieval on contexts of up to 256,000 tokens, which lets it work with larger codebases and maintain context across multiple files.

This is particularly useful for complex projects where keeping track of extensive code and dependencies is crucial.
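One simple way to use a long context for multi-file work is to paste several files into a single prompt, each under a header naming its path. The helpers below are hypothetical (not part of the mistralai client). The token estimate relies on a crude rule of thumb of about four characters per token, which is an assumption and not Mistral's tokenizer; use the model's real tokenizer if you need an accurate count.

```python
def build_multi_file_prompt(files, task):
    """Join several source files into one prompt, each under a path header.

    `files` maps a file path to its source code; `task` is the instruction.
    """
    sections = [f"# File: {path}\n{source}" for path, source in files.items()]
    sections.append(f"# Task\n{task}")
    return "\n\n".join(sections)


def rough_token_estimate(text, chars_per_token=4):
    """Crude length estimate (assumed ~4 characters per token), not a tokenizer."""
    return -(-len(text) // chars_per_token)  # ceiling division


CONTEXT_BUDGET = 256_000  # the context length discussed above

files = {
    "utils.py": "def add(a, b):\n    return a + b\n",
    "main.py": "from utils import add\n\nprint(add(2, 3))\n",
}
prompt = build_multi_file_prompt(files, "Explain how main.py uses utils.py.")
print(rough_token_estimate(prompt) <= CONTEXT_BUDGET)  # True for this tiny example
```

The resulting prompt can be sent as the content of a ChatMessage in the same client.chat call used in the examples above.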

Open-source advantages

Codestral Mamba is open-source and available under the Apache 2.0 license. Its model weights are freely available, and the license allows anyone to use, modify, and redistribute them, including for commercial purposes.

The open-source nature promotes community collaboration, allowing developers to improve and adapt the model for various needs.

Fine-Tuning Codestral Mamba and Advanced Use

While Codestral Mamba already offers impressive capabilities out of the box, you can further enhance its performance and tailor it to your specific needs through fine-tuning and advanced prompting techniques.

Fine-tuning on custom data

Codestral Mamba can be customized to better fit your specific needs by fine-tuning it on your own data. This process involves training the model on code and information relevant to your particular domain or codebase.

Fine-tuning enhances the model's performance, making it more effective for your unique applications and improving its accuracy and relevance in generating code.
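Whatever fine-tuning tool you use, the first step is usually turning your code into training examples. The sketch below builds instruction/response pairs in the chat-style "messages" JSON Lines format that Mistral AI's fine-tuning documentation uses. Whether a particular fine-tuning service or library supports Codestral Mamba is an assumption you should check in its documentation; the helper names and sample data are hypothetical.

```python
import json


def to_chat_record(instruction, response):
    """One training example as a user/assistant message pair."""
    return {
        "messages": [
            {"role": "user", "content": instruction},
            {"role": "assistant", "content": response},
        ]
    }


def write_jsonl(records, path):
    """Write one JSON object per line (the JSON Lines format)."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


# Hypothetical instruction/response pairs drawn from your own codebase.
pairs = [
    (
        "Write a helper that loads our YAML config file.",
        "def load_config(path):\n    ...",
    ),
]
records = [to_chat_record(q, a) for q, a in pairs]
print(json.dumps(records[0]))
```

Calling write_jsonl(records, "train.jsonl") then produces a file with one training example per line.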

Advanced prompts

To tackle more complex tasks, you can use advanced prompting techniques with Codestral Mamba. One such method is chain-of-thought prompting, which involves guiding the model through a step-by-step reasoning process. This technique leverages the model's advanced reasoning capabilities to handle intricate queries and produce more detailed and thoughtful responses.
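A chain-of-thought prompt can be as simple as asking for the reasoning steps explicitly before the code. The template below is one illustrative way to phrase it; its wording and the build_cot_prompt helper are assumptions, not a format recommended by Mistral AI.

```python
COT_TEMPLATE = """Task: {task}

Think through this step by step before writing code:
1. Restate the requirements and list the edge cases.
2. Describe the approach and its time complexity.
3. Write the final Python function.
4. Explain how the function handles each edge case."""


def build_cot_prompt(task):
    """Fill the chain-of-thought template with a concrete coding task."""
    return COT_TEMPLATE.format(task=task)


prompt = build_cot_prompt(
    "Merge two sorted lists into one sorted list without using sort()."
)
print(prompt)
```

To send it, use the prompt as the content of the ChatMessage in any of the client.chat examples above.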

Conclusion

Codestral Mamba, leveraging the Mamba state-space model, addresses limitations of traditional Transformer models in handling long code sequences.

Its open-source nature, multilingual support, and ability to process large contexts position it as a valuable alternative for developers.



Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.