Code generation is an increasingly important aspect of modern software development. Mistral AI has recently entered this domain with Codestral, its first-ever code model.

In this article, we’ll provide a comprehensive overview of Codestral, exploring its features and functionality.

I'll also share insights from my hands-on experience with the model, including examples of how I've used it to generate code.

If you want to learn more about Mistral, check out this comprehensive guide to working with the Mistral large model.

What Is Codestral?

Codestral is an open-weight generative AI model specifically engineered for code generation tasks. "Open-weight" means that the model's learned parameters are freely available for research and non-commercial use, allowing for greater accessibility and customization.

Codestral offers developers a flexible way to write and interact with code through a shared instruction and completion API endpoint. This means we can provide Codestral with instructions in either natural language or code snippets, and it can generate corresponding code outputs.

Codestral's unique ability to understand both code and natural language makes it a versatile tool for tasks like code completion, code generation from natural language descriptions, and even answering questions about code snippets. This opens up a wide range of possibilities for creating AI-powered tools that can streamline our development workflow.

Key Features of Codestral

Codestral offers several notable features that enhance its utility for code generation. Let's take a closer look.

Fluency in over 80 programming languages

One of Codestral's most impressive capabilities is its proficiency in over 80 programming languages. This broad spectrum of language support includes not only popular languages like Python, Java, C, C++, and JavaScript but also extends to more specialized languages used in specific domains or for niche tasks (like Swift or Fortran).

This wide-ranging fluency means that Codestral can be a valuable asset for projects that involve multiple languages or for teams where developers work with different languages. Whether we're working on a data science project in Python, building a web application with JavaScript, or tackling a systems programming task in C++, Codestral can adapt to our needs and provide code generation support across a diverse set of languages.

Code generation

Codestral's core function is code generation. It aims to streamline our coding workflow by automating tasks such as completing functions, generating test cases, and filling in missing code segments.

The fill-in-the-middle mechanism is designed to help when working with complex codebases or unfamiliar languages: the model receives the code before and after a gap and generates the missing part. If effective, these features could free up time for higher-level design and problem-solving, leading to faster development cycles and improved code reliability.
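To make the fill-in-the-middle idea concrete, here is a minimal sketch of how such a request might be assembled and how the result could be stitched back together. The `prompt` and `suffix` field names match the request bodies used later in this article. The helper names `build_fim_payload` and `stitch` are hypothetical, and the completion is hard-coded for illustration rather than real model output.

```python
def build_fim_payload(prefix: str, suffix: str, model: str = "codestral-latest") -> dict:
    """Build a fill-in-the-middle request body (hypothetical helper)."""
    return {
        "model": model,
        "prompt": prefix,   # code that comes before the gap
        "suffix": suffix,   # code that comes after the gap
        "temperature": 0,
    }


def stitch(prefix: str, completion: str, suffix: str) -> str:
    """Reassemble the full source once the missing middle is known."""
    return prefix + completion + suffix


prefix = "def is_even(n):\n    "
suffix = "\n\nprint(is_even(4))\n"
payload = build_fim_payload(prefix, suffix)

# A completion the model might plausibly return (hard-coded, not real output).
example_completion = "return n % 2 == 0"
print(stitch(prefix, example_completion, suffix))
```

The point of the design is that the model sees both sides of the gap, so the generated middle has to be consistent with the code that follows it, not only with the code that precedes it.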

Open-weight

A notable aspect of Codestral is its open-weight nature. This means that the model's learned parameters are freely accessible for research and non-commercial use.

This open accessibility fosters a collaborative environment, allowing developers and researchers to experiment with the model, fine-tune it for specific tasks, and contribute to its ongoing development.

This openness not only democratizes access to powerful code generation capabilities but also encourages transparency and innovation within the AI community.

Performance and efficiency

Mistral AI claims Codestral sets a new standard in code generation performance and latency, outperforming other models in certain benchmarks. Its large context window (32k tokens) potentially enhances its ability to handle long-range code completion tasks.

Let’s discuss performance and efficiency in more detail in the next section.

Codestral Comparison With Other Models

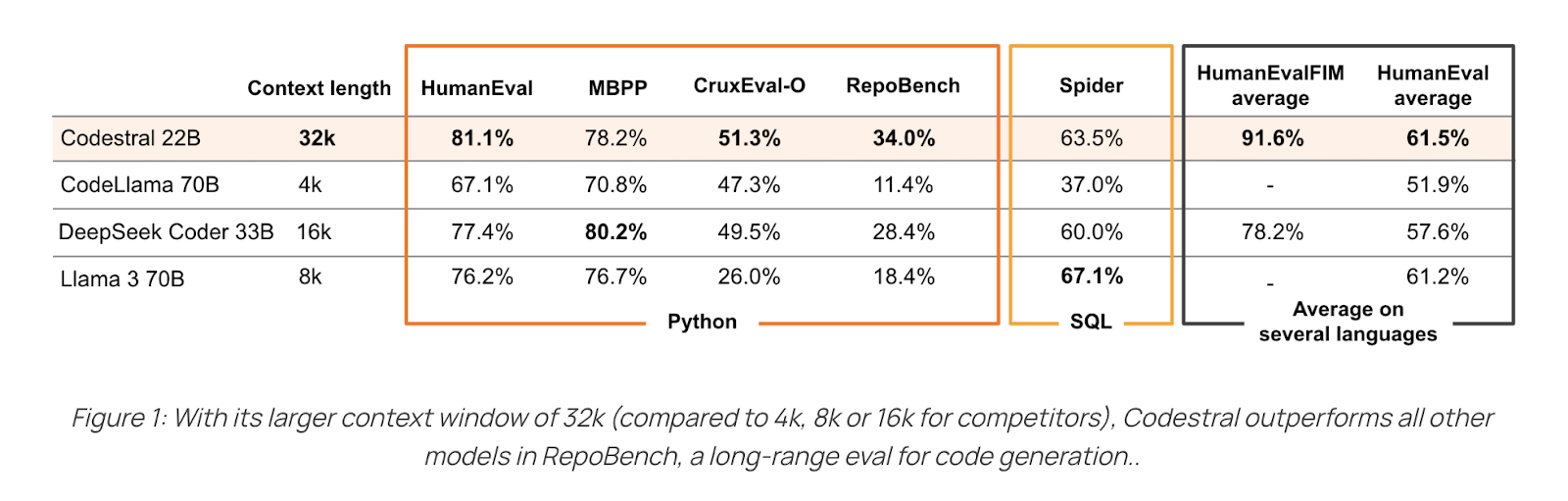

To better understand Codestral's capabilities, let's compare its performance with other prominent code generation models. The following sections will delve into specific benchmark results and highlight key differences.

Context window

Let’s start by looking at these results:

Codestral stands out due to its superior performance in long-range code completion tasks (RepoBench), likely due to its larger context window of 32k tokens. This larger window allows it to consider more surrounding code, leading to better predictions. Codestral also excels in the HumanEval benchmark in Python, demonstrating its ability to generate accurate code.

While Codestral performs strongly in specific areas, other models like DeepSeek Coder excel in different benchmarks (MBPP).

Despite being one of the smaller models in this comparison at 22B parameters, Codestral often matches or outperforms much larger models such as Llama 3 70B in code generation.

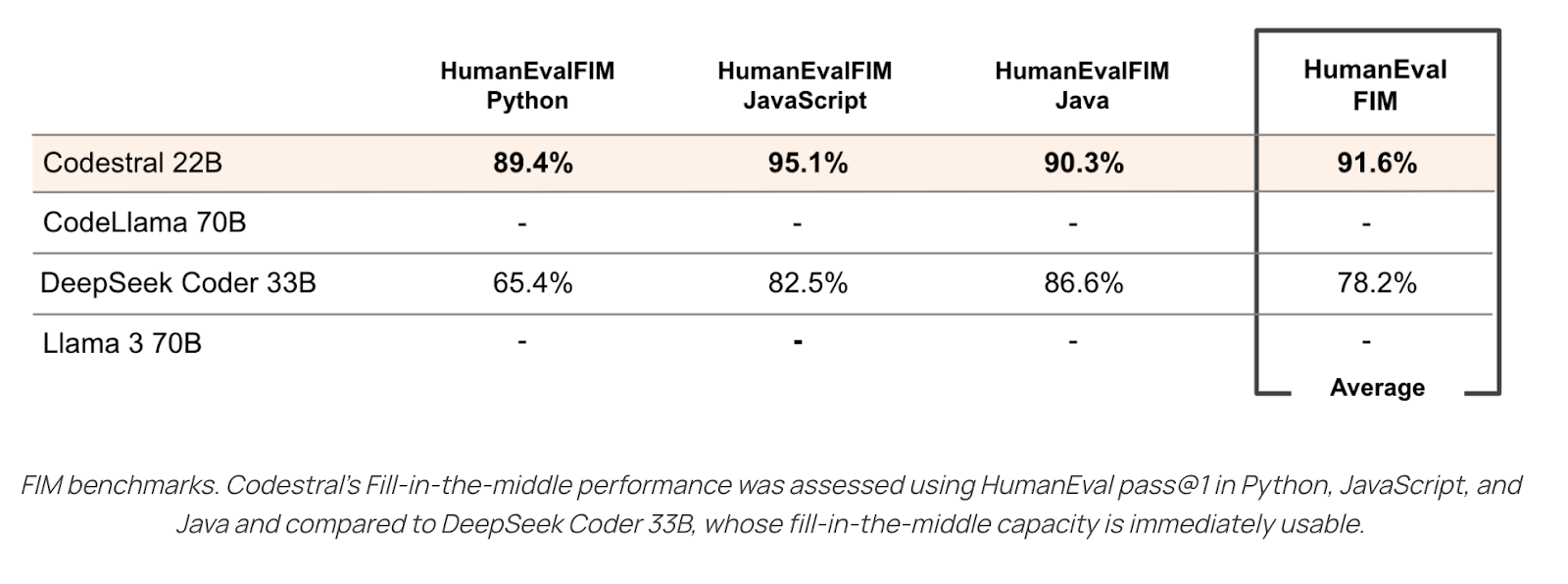

Fill-in-the-middle performance

Let’s now take a look at the fill-in-the-middle (FIM) performance:

Codestral 22B demonstrates significantly higher performance across all three programming languages (Python, JavaScript, and Java) and in the overall FIM average compared to DeepSeek Coder 33B. This suggests Codestral's effectiveness in understanding code context and accurately filling in missing code segments.

However, the benchmark only compares Codestral's performance with DeepSeek Coder 33B and does not include data for CodeLlama 70B or Llama 3 70B. This limits the scope of comparison and conclusions that can be drawn regarding Codestral's performance relative to other models.

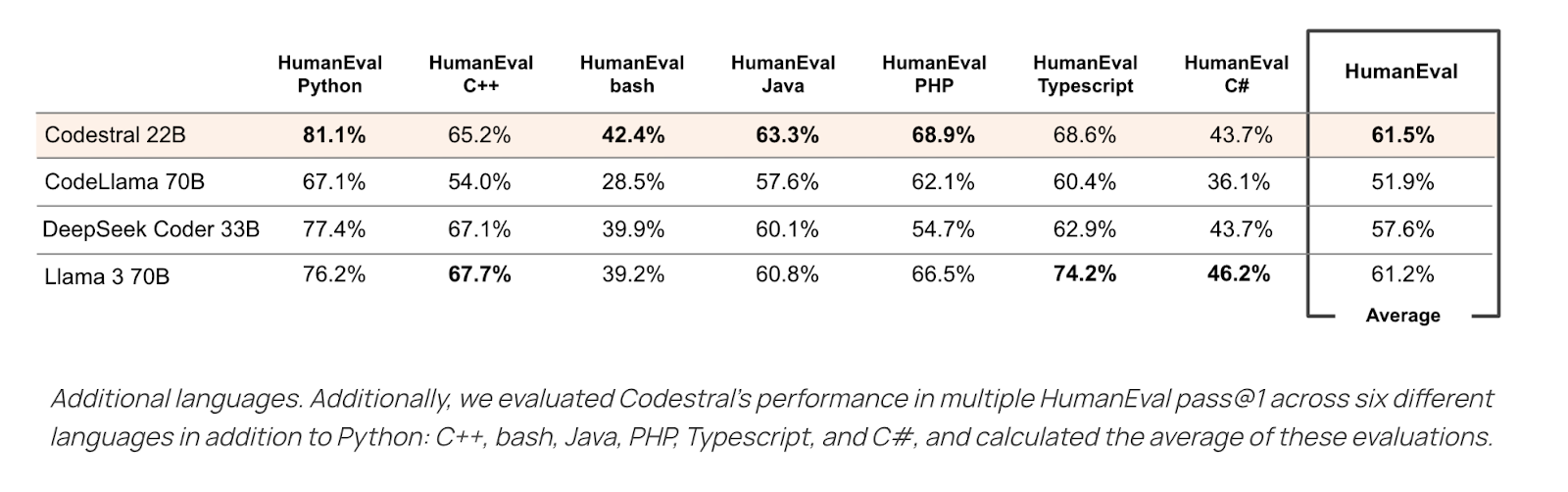

HumanEval

The HumanEval benchmark measures code generation accuracy by testing models' ability to generate code that passes human-written unit tests based on function descriptions. Let’s now see how Codestral compares to other models in the HumanEval benchmark:
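As a rough illustration of this kind of test-based scoring, the sketch below treats a generated solution as correct only if the unit tests run without raising. It is a simplified stand-in, not the actual HumanEval harness: the function names `passes_tests` and `pass_rate` are hypothetical, and a real evaluation would run untrusted code in a sandbox with timeouts.

```python
def passes_tests(candidate_source: str, test_source: str) -> bool:
    """Return True if the candidate code runs the given tests without raising."""
    namespace: dict = {}
    try:
        exec(candidate_source, namespace)  # define the generated function
        exec(test_source, namespace)       # run the human-written assertions
    except Exception:
        return False
    return True


def pass_rate(candidates: list[str], test_source: str) -> float:
    """Fraction of candidate solutions that pass all tests."""
    if not candidates:
        return 0.0
    passed = sum(passes_tests(c, test_source) for c in candidates)
    return passed / len(candidates)


tests = "assert add(2, 3) == 5\nassert add(-1, 1) == 0\n"
good = "def add(a, b):\n    return a + b\n"
bad = "def add(a, b):\n    return a - b\n"

print(pass_rate([good, bad], tests))
```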

Codestral demonstrates the highest performance in Python, Bash, Java, and PHP. While other models excel in different languages, Codestral has the highest overall average, showcasing its strong capability in generating accurate code across multiple languages.

Codestral Use Cases

Codestral's diverse capabilities lend themselves to various practical applications within the software development lifecycle. Let's explore some of the prominent use cases where Codestral can make a significant impact.

Code completion and generation

Codestral excels in code completion and generation, one of its primary use cases. Developers can utilize Codestral to suggest code completions based on the surrounding context, enabling faster and more accurate coding.

Additionally, Codestral can generate entire code snippets from natural language descriptions or instructions, further streamlining the development process and enhancing productivity.

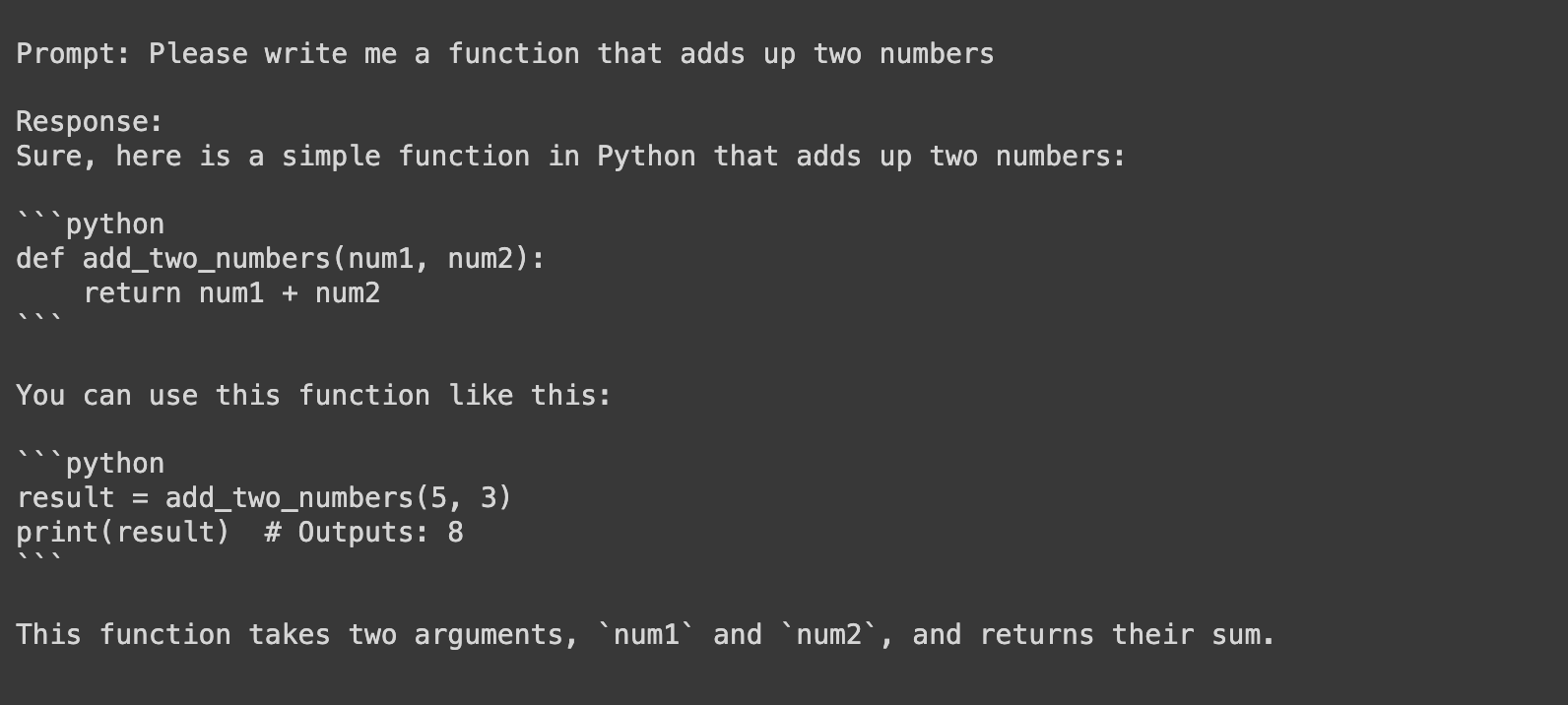

Here’s a quick example of what I asked Codestral to generate:

```python
prompt = "Please write me a function that adds up two numbers"

data = {
    "model": "codestral-latest",
    "messages": [
        {
            "role": "user",
            "content": prompt
        }
    ],
    "temperature": 0
}

response = call_chat_instruct_endpoint(api_key, data)
```

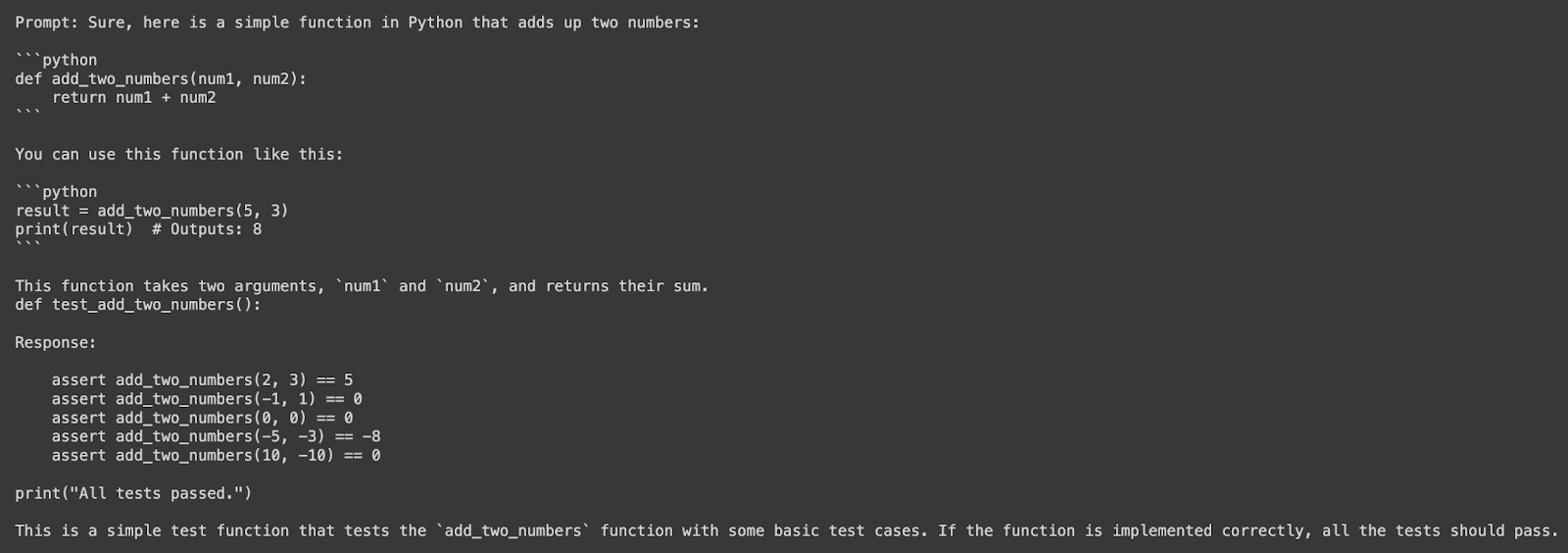
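The example above calls `call_chat_instruct_endpoint` without showing its definition. One way such a helper might look, using only the Python standard library, is sketched below. The URL paths are assumptions based on Mistral's public API conventions and should be checked against the current documentation. `build_request` is a hypothetical helper introduced here so the request can be inspected without sending it, and `call_fim_endpoint` is assumed to differ only in the path it targets.

```python
import json
import urllib.request

# Assumed endpoint URLs; verify against Mistral's API documentation.
CHAT_URL = "https://codestral.mistral.ai/v1/chat/completions"
FIM_URL = "https://codestral.mistral.ai/v1/fim/completions"


def build_request(url: str, api_key: str, data: dict) -> urllib.request.Request:
    """Build an authenticated JSON POST request without sending it."""
    return urllib.request.Request(
        url,
        data=json.dumps(data).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


def call_chat_instruct_endpoint(api_key: str, data: dict, url: str = CHAT_URL) -> dict:
    """Send a chat-style payload and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(url, api_key, data), timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


def call_fim_endpoint(api_key: str, data: dict, url: str = FIM_URL) -> dict:
    """Send a fill-in-the-middle payload and return the decoded JSON response."""
    with urllib.request.urlopen(build_request(url, api_key, data), timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

If the response follows the usual chat-completion shape, the generated text would typically be found at `response["choices"][0]["message"]["content"]`, though that structure is also an assumption here.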

Unit test generation

Codestral also simplifies the generation of unit tests for existing code. This feature saves developers valuable time and effort by automating the test generation process. Consequently, it helps improve code quality and reduces the likelihood of bugs, ensuring that software projects remain robust and maintainable.

I asked Codestral to generate a simple unit test for the function it generated above:

````python
prompt = """
Sure, here is a simple function in Python that adds up two numbers:

```python
def add_two_numbers(num1, num2):
    return num1 + num2
```

You can use this function like this:

```python
result = add_two_numbers(5, 3)
print(result) # Outputs: 8
```

This function takes two arguments, num1 and num2, and returns their sum.

def test_add_two_numbers():
"""

suffix = ""

data = {
    "model": "codestral-latest",
    "prompt": prompt,
    "suffix": suffix,
    "temperature": 0
}

response = call_fim_endpoint(api_key, data)
````

Code translation and refactoring

Codestral's multilingual capabilities go beyond code generation. It can translate code between different programming languages, allowing developers to work with existing codebases written in unfamiliar languages.

Moreover, Codestral can assist in refactoring code for better readability and maintainability, ensuring that projects adhere to best practices and coding standards.

I asked Codestral to translate the Python code above to JavaScript:

````python
prompt = """
Please translate the following Python code to Javascript:

```python
def add_two_numbers(num1, num2):
    return num1 + num2
```

You can use this function like this:

```python
result = add_two_numbers(5, 3)
print(result) # Outputs: 8
```

This function takes two arguments, num1 and num2, and returns their sum.
"""

suffix = ""

data = {
    "model": "codestral-latest",
    "prompt": prompt,
    "suffix": suffix,
    "temperature": 0
}

response = call_fim_endpoint(api_key, data)
````
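Model replies to requests like this often mix prose with fenced code, so a translated snippet usually has to be pulled out before it can be saved or run. The sketch below shows one way to do that with a regular expression. `extract_code_blocks` is a hypothetical helper, and the sample reply is hand-written for illustration, not actual Codestral output.

```python
import re

# Built by multiplication so this example contains no literal fence line.
FENCE = "`" * 3


def extract_code_blocks(reply: str) -> list[tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in a reply."""
    pattern = re.compile(FENCE + r"(\w*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)
    return [(lang, code.strip()) for lang, code in pattern.findall(reply)]


# Hand-written sample reply (an assumption about the general shape of a response).
reply = (
    "Here is the JavaScript version:\n\n"
    + FENCE + "javascript\n"
    + "function addTwoNumbers(num1, num2) {\n"
    + "  return num1 + num2;\n"
    + "}\n"
    + FENCE + "\n"
)

for lang, code in extract_code_blocks(reply):
    print(f"[{lang}]")
    print(code)
```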

Interactive code assistance

Developers can interact with Codestral for debugging assistance, understanding unfamiliar code, and finding optimal solutions to coding problems. This interactive assistance is particularly valuable for developers working on complex projects or those learning new programming languages and frameworks, providing them with support and guidance throughout their development journey.
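An interactive session of this kind amounts to sending the growing conversation history with each request. The sketch below shows how that history might be tracked, reusing the `messages` format from the earlier chat example. The helper names are hypothetical, the `assistant` role is assumed to follow the common chat-API convention, and the assistant reply is a placeholder rather than real model output.

```python
def add_turn(messages: list[dict], role: str, content: str) -> list[dict]:
    """Return a new history with one more message appended (input is not mutated)."""
    return messages + [{"role": role, "content": content}]


def build_chat_payload(messages: list[dict], model: str = "codestral-latest") -> dict:
    """Wrap the conversation history in a chat request body."""
    return {"model": model, "messages": messages, "temperature": 0}


history: list[dict] = []
history = add_turn(
    history, "user",
    "Why does `for i in range(len(xs) + 1): print(xs[i])` crash?",
)
# Placeholder reply; in practice this would come from the model's response.
history = add_turn(
    history, "assistant",
    "The loop runs one index past the end of the list.",
)
history = add_turn(history, "user", "How should I rewrite it?")

payload = build_chat_payload(history)
print(len(payload["messages"]))
```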

How to Get Started With Codestral

There are several ways to access Codestral:

- Le Chat conversational interface: An instructed version of Codestral is accessible through Mistral AI's free conversational interface, Le Chat, allowing us to interact naturally with the model.

- Direct download and testing: We can download the Codestral model from Hugging Face for research and testing purposes under the Mistral AI Non-Production License.

- Dedicated endpoint: A specific endpoint (codestral.mistral.ai) is available, particularly for those of us integrating Codestral into our IDEs. This endpoint has personal API keys and separate rate limits, currently free for a beta period.

- La Plateforme integration: Codestral is integrated into Mistral AI's La Plateforme, where we can build applications and access the model via the standard API endpoint (api.mistral.ai), billed per token. This is suitable for research, batch queries, or third-party application development.

- Integrations with developer tools: Codestral is integrated into various tools for enhanced developer productivity, including LlamaIndex and LangChain for building agentic applications, and Continue.dev and Tabnine for VS Code and JetBrains environments.
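Because the dedicated endpoint and La Plateforme use different hosts (and, per the list above, different API keys and billing), it can help to keep the choice in one place. The sketch below does that with two lookup tables. The hosts are the two named above, while the URL paths and the `endpoint_url` helper are assumptions for illustration.

```python
# Hosts are the two named in the list above. The paths are assumptions based on
# Mistral's public API conventions and should be checked against the docs.
HOSTS = {
    "codestral": "https://codestral.mistral.ai",  # dedicated endpoint, personal keys
    "platform": "https://api.mistral.ai",         # La Plateforme, billed per token
}
PATHS = {
    "chat": "/v1/chat/completions",  # instruction-style requests
    "fim": "/v1/fim/completions",    # fill-in-the-middle requests
}


def endpoint_url(route: str, task: str) -> str:
    """Return the full URL for an access route and task, or raise ValueError."""
    if route not in HOSTS:
        raise ValueError(f"unknown route: {route!r}")
    if task not in PATHS:
        raise ValueError(f"unknown task: {task!r}")
    return HOSTS[route] + PATHS[task]


print(endpoint_url("codestral", "fim"))
print(endpoint_url("platform", "chat"))
```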

Codestral Limitations

While Codestral shows promise in various code generation tasks, it's important for us to acknowledge its limitations:

- Benchmark performance: Although Codestral performs well in certain benchmarks, real-world performance can vary depending on the complexity of the task and the specific programming language. Thorough testing in our own environment is recommended before fully relying on it in production.

- Limited context window (in some cases): While Codestral boasts a 32k token context window for long-range code completion, certain use cases might require an even larger context to fully grasp the intricacies of our codebase.

- Potential bias: As with any AI model trained on existing code, Codestral may inherit biases present in the training data. This could lead to the generation of code that inadvertently perpetuates undesirable patterns or practices.

- Evolving technology: Codestral is still a relatively new model, and its capabilities and limitations are likely to evolve as it undergoes further development and refinement. It's essential for us to stay updated with the latest research and releases to make the most informed decisions about its use.
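For the context-window limitation above, a rough pre-check can flag inputs that are unlikely to fit before a request is sent. The sketch below uses a characters-per-token heuristic, which is an assumption and not Codestral's actual tokenizer, so the result is only an estimate. The 32,000-token budget reflects the 32k figure quoted in this article, and the reserved output allowance is an arbitrary illustrative value.

```python
import math

CONTEXT_WINDOW = 32_000  # the "32k tokens" figure quoted in this article


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; real tokenizers will give different counts."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(
    text: str,
    context_window: int = CONTEXT_WINDOW,
    reserve_for_output: int = 1_000,  # arbitrary allowance for the generated code
) -> bool:
    """Return True if the estimated prompt size leaves room for the output."""
    return estimate_tokens(text) <= context_window - reserve_for_output


small_file = "x = 1\n" * 100        # 600 characters
large_repo = "x = 1\n" * 50_000     # 300,000 characters

print(fits_in_context(small_file))
print(fits_in_context(large_repo))
```

When the check fails, the usual options are to send only the files or functions closest to the edit point, or to summarize the rest.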

Conclusion

Codestral has the potential to free up our time for more complex problem-solving and design work by automating tasks like code completion and test generation.

While its full impact remains to be seen, Codestral is a development worth watching as we explore the future of AI in software development.

If you want to learn more about AI, check out this six-course skill track on AI Fundamentals.

Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.

Codestral FAQs

Is Codestral available for commercial use?

Mistral AI offers enterprise solutions for businesses interested in leveraging Codestral for commercial applications. Contact their sales team for more information.

Can Codestral be fine-tuned for specific tasks or domains?

Yes, Codestral is an open-weight model, meaning its weights are accessible for fine-tuning on custom datasets to adapt it for specific tasks or domains.

How much does Codestral cost?

Codestral offers a free beta version with limitations. Paid plans include per-token billing and enterprise solutions with custom pricing.