
OpenAI announced its latest large language model, GPT-4o, the successor to GPT-4 Turbo. Read on to discover its capabilities, performance, and how you might want to use it.

What Is OpenAI’s GPT-4o?

GPT-4o is OpenAI’s latest LLM. The 'o' in GPT-4o stands for "omni"—Latin for "every"—referring to the fact that this new model can accept prompts that are a mixture of text, audio, images, and video. Previously, the ChatGPT interface used separate models for different content types.

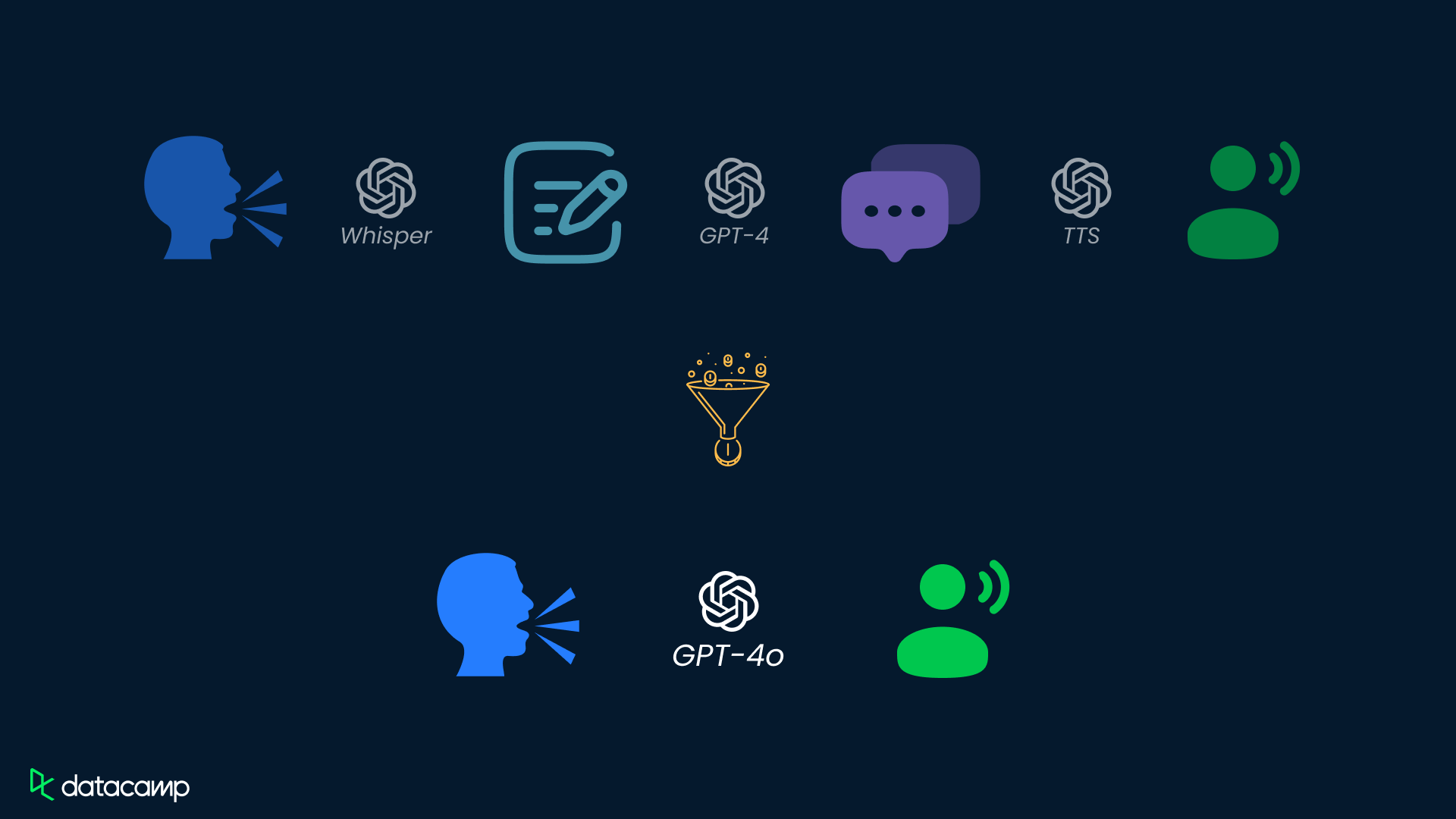

For example, when speaking to ChatGPT via Voice Mode, your speech would be converted to text using Whisper, a text response would be generated using GPT-4 Turbo, and that text response would be converted to speech with TTS.

A comparison of how GPT-4 Turbo and GPT-4o process speech input

Similarly, working with images in ChatGPT involved a mix of GPT-4 Turbo and DALL-E 3.

Having a single model for different content media promises increased speed and quality of results, a simpler interface, and some new use cases.

What is GPT-4o mini?

GPT-4o mini is a leaner, faster version of GPT-4o, designed for tasks where speed and efficiency matter most. It's derived from the larger GPT-4o model through a process called distillation.

While it retains much of the original model’s ability to process multimodal inputs—text, audio, and images—GPT-4o mini is optimized for lightweight applications where faster response times are crucial.

It’s particularly useful for developers needing a cost-effective solution for coding, debugging, and real-time interactions that don’t require the full computational power of GPT-4o.

You can read more details in this article about GPT-4o mini.

What Makes GPT-4o Different to GPT-4 Turbo?

The all-in-one model approach means that GPT-4o overcomes several limitations of the previous voice interaction capabilities.

1. Tone of voice is now considered, facilitating emotional responses

With the previous OpenAI system of combining Whisper, GPT-4 Turbo, and TTS in a pipeline, the reasoning engine, GPT-4 Turbo, only had access to the spoken words. This meant that tone of voice, background noises, and the distinction between multiple speakers were simply discarded. As such, GPT-4 Turbo couldn’t really express responses with different emotions or styles of speech.

By having a single model that can reason about text and audio, this rich audio information can be used to provide higher-quality responses with a greater variety of speaking styles.
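The difference between the two designs can be sketched in a few lines of Python. This is a toy illustration only: `SpokenInput`, `transcribe`, `pipeline_view`, and `single_model_view` are hypothetical names invented here, not part of any OpenAI API, and the real models are of course far more complex.

```python
from dataclasses import dataclass


@dataclass
class SpokenInput:
    """Hypothetical container for what a microphone captures."""
    words: str
    tone: str         # e.g. "sarcastic"
    background: str   # e.g. "office chatter"


def transcribe(audio: SpokenInput) -> str:
    """Stand-in for the speech-to-text step: only the words survive."""
    return audio.words


def pipeline_view(audio: SpokenInput) -> dict:
    """What a text-only reasoning model receives in a three-model pipeline."""
    return {"words": transcribe(audio)}


def single_model_view(audio: SpokenInput) -> dict:
    """What a single audio-native model could, in principle, reason about."""
    return {
        "words": audio.words,
        "tone": audio.tone,
        "background": audio.background,
    }


utterance = SpokenInput(
    words="Oh, great, another meeting.",
    tone="sarcastic",
    background="office chatter",
)
print(pipeline_view(utterance))      # tone and background are gone
print(single_model_view(utterance))  # tone and background are still available
```

In the pipeline view, the sarcasm in the example utterance is lost before the reasoning step ever sees it, which is the limitation described above.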

In the following example provided by OpenAI, GPT-4o provides sarcastic output.

2. Lower latency enables real-time conversations

The existing three-model pipeline meant that there was a noticeable delay ("latency") between speaking to ChatGPT and getting a response.

OpenAI shared that the average latency of Voice Mode is 2.8 seconds for GPT-3.5 and 5.4 seconds for GPT-4. By contrast, the average latency for GPT-4o is 0.32 seconds, nine times faster than GPT-3.5 and 17 times faster than GPT-4.

This decreased latency is close to the average human response times (0.21 seconds) and is important for conversational use cases, where there is a lot of back and forth between the human and AI, and the gaps between responses add up.
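The speed-up figures follow directly from the quoted latencies, and the same numbers show how quickly the gaps add up over a conversation. The short sketch below redoes that arithmetic; the 20-turn conversation length is an arbitrary assumption chosen for illustration.

```python
# Average Voice Mode latencies in seconds, as quoted from OpenAI above.
latency_s = {"GPT-3.5": 2.8, "GPT-4": 5.4, "GPT-4o": 0.32}


def speedup(old_model: str, new_model: str = "GPT-4o") -> float:
    """How many times faster new_model responds than old_model."""
    return latency_s[old_model] / latency_s[new_model]


def total_wait(model: str, turns: int) -> float:
    """Total seconds spent waiting over a conversation with `turns` replies."""
    return latency_s[model] * turns


TURNS = 20  # assumed conversation length, for illustration only

for model in ("GPT-3.5", "GPT-4"):
    print(f"GPT-4o is roughly {speedup(model):.1f}x faster than {model}")

for model in latency_s:
    print(f"{model}: about {total_wait(model, TURNS):.1f} s of waiting over {TURNS} turns")
```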

This feature feels reminiscent of Google launching Instant, its auto-complete for search queries, in 2010. While searching doesn't take a long time, being able to save a couple of seconds every time you use it makes the product experience better.

One use case that becomes more viable with GPT-4o’s decreased latency is real-time translation of speech. OpenAI presented a use case of two colleagues, one an English speaker and the other a Spanish speaker, communicating by having GPT-4o translate their conversation.

3. Integrated vision enables descriptions of a camera feed

In addition to the voice and text integration, GPT-4o has image and video features included. This means that if you give it access to a computer screen, it can describe what is shown onscreen, answer questions about the onscreen image, or act as a co-pilot for your work.

In a video from OpenAI featuring Sal Khan from Khan Academy, GPT-4o assists with Sal's son's math homework.

Beyond working with a screen, if you give GPT-4o access to a camera, perhaps your smartphone, it can describe what it sees.

A longer demo presented by OpenAI combines all these features. Two smartphones running GPT-4o hold a conversation. One GPT has access to the smartphone cameras and describes what it can see to another GPT that cannot see.

The result is a three-way conversation between a human and two AIs. The video also includes a section with the AIs singing, something that was not possible with previous models.

4. Better tokenization for non-Roman alphabets provides greater speed and value for money

One step in the LLM workflow is when the prompt text is converted into tokens. These are units of text that the model can understand.

In English, a token is typically one word or piece of punctuation, although some words can be broken down into multiple tokens. On average, three English words take up about four tokens.

If language can be represented in the model with fewer tokens, fewer calculations need to be made, and the speed of generating text is increased.

Further, since OpenAI charges for its API per token input or output, fewer tokens mean a lower price to the API users.

GPT-4o has an improved tokenization model that results in fewer tokens being needed per text. The improvement is mostly noticeable in languages that don't use the Roman alphabet.

For example, Indian languages, in particular, have benefitted, with Hindi, Marathi, Tamil, Telugu, and Gujarati all showing reductions in tokens by 2.9 to 4.4 times. Arabic showed a 2x token reduction, and East Asian languages like Chinese, Japanese, Korean, and Vietnamese showed token reductions between 1.4x and 1.7x.
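To get a feel for what these reduction factors mean in practice, the sketch below applies them to a prompt of a given size. The factors are the ones quoted above; the 1,000-token starting point is a hypothetical figure, and real savings depend on the actual text.

```python
# Reduction factors quoted above (old token count / new token count).
# Where the article gives a range, both ends are kept.
reduction_range = {
    "Hindi, Marathi, Tamil, Telugu, Gujarati": (2.9, 4.4),
    "Arabic": (2.0, 2.0),
    "Chinese, Japanese, Korean, Vietnamese": (1.4, 1.7),
}


def new_token_count(old_tokens: int, factor: float) -> int:
    """Tokens needed after the tokenizer improvement, rounded to the nearest token."""
    return round(old_tokens / factor)


OLD_TOKENS = 1_000  # hypothetical prompt size under the previous tokenizer

for languages, (low, high) in reduction_range.items():
    most = new_token_count(OLD_TOKENS, low)     # smallest reduction
    fewest = new_token_count(OLD_TOKENS, high)  # largest reduction
    print(f"{languages}: {OLD_TOKENS} tokens -> between {fewest} and {most} tokens")
```

Because API usage is billed per token, the same ratios apply roughly to the cost of a request as well as to its size.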

5. Rollout to the free plan

With OpenAI's existing pricing strategy for ChatGPT, users have to pay to access the best model: GPT-4 Turbo has only been available on the Plus and Enterprise paid plans.

This is changing, with OpenAI promising to make GPT-4o available on the free plan as well. Plus users will get five times as many messages as users on the free plan.

The rollout will be gradual, with red team (testers who try to break the model to find problems) access beginning immediately and further users gaining access over time.

6. Launch of the ChatGPT desktop app

While this isn’t necessarily an update exclusive to GPT-4o, OpenAI also announced the release of the ChatGPT desktop app. The updates in latency and multimodality mentioned above, alongside the release of the app, mean that the way we work with ChatGPT is likely going to change. For example, OpenAI showed a demo of an augmented coding workflow using voice and the ChatGPT desktop app. Scroll down to the use-cases section to see that example in action!

How Does GPT-4o Work?

Many content types, one neural network

Details of how GPT-4o works are still scant. The only detail that OpenAI provided in its announcement is that GPT-4o is a single neural network that was trained on text, vision, and audio input.

This new approach differs from the previous technique of having separate models trained on different data types.

However, GPT-4o isn't the first model to take a multi-modal approach. In 2022, Tencent AI Lab created SkillNet, a model that combined LLM transformer features with computer vision techniques to improve the ability to recognize Chinese characters.

In 2023, a team from ETH Zurich, MIT, and Stanford University created WhisBERT, a variation on the BERT series of large language models. While not the first, GPT-4o is considerably more ambitious and powerful than either of these earlier attempts.

Is GPT-4o a radical change from GPT-4 Turbo?

How radical the changes are to GPT-4o's architecture compared to GPT-4 Turbo depends on whether you ask OpenAI's engineering or marketing teams. In April, a bot named "im-also-a-good-gpt2-chatbot" appeared on LMSYS's Chatbot Arena, a leaderboard for the best generative AIs. That mysterious AI has now been revealed to be GPT-4o.

The "gpt2" part of the name is important. Not to be confused with GPT-2, a predecessor of GPT-3.5 and GPT-4, the "2" suffix was widely regarded to mean a completely new architecture for the GPT series of models.

Evidently, someone in OpenAI's research or engineering team thinks that combining text, vision, and audio content types into a single model is a big enough change to warrant the first version number bump in six years.

On the other hand, the marketing team has opted for a relatively modest naming change, continuing the "GPT-4" convention.

GPT-4o Performance vs Other Models

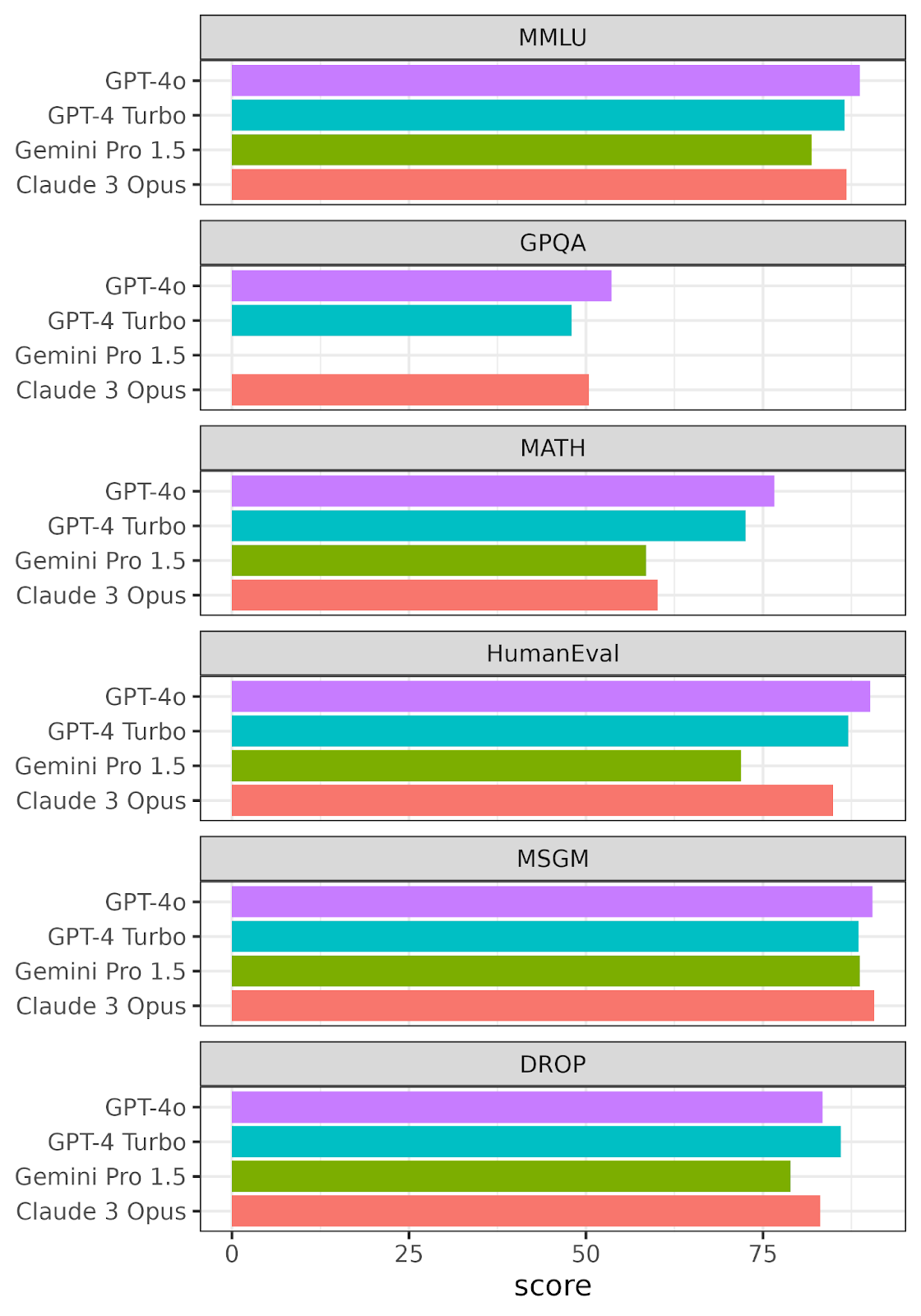

OpenAI released benchmark figures of GPT-4o compared to several other high-end models.

- GPT-4 Turbo

- GPT-4 (initial release)

- Claude 3 Opus

- Gemini Pro 1.5

- Gemini Ultra 1.0

- Llama 3 400B

Of these, only three models really matter for comparison. GPT-4 Turbo, Claude 3 Opus, and Gemini Pro 1.5 have spent the last few months angling for the top spot on the LMSYS Chatbot Arena leaderboard.

Llama 3 400B may be a contender in the future, but it isn't finished yet. We therefore present results only for these three models and GPT-4o.

The results of six benchmarks were used.

- Massive Multitask Language Understanding (MMLU). Tasks on elementary mathematics, US history, computer science, law, and more. To attain high accuracy on this test, models must possess extensive world knowledge and problem-solving ability.

- Graduate-Level Google-Proof Q&A (GPQA). Multiple-choice questions written by domain experts in biology, physics, and chemistry. The questions are high-quality and extremely difficult: experts who have or are pursuing PhDs in the corresponding domains reach 74% accuracy.

- MATH. Middle school and high school mathematics problems.

- HumanEval. A test of the functional correctness of computer code, used for checking code generation.

- Multilingual Grade School Math (MGSM). Grade school mathematics problems, translated into ten languages, including underrepresented languages like Bengali and Swahili.

- Discrete Reasoning Over Paragraphs (DROP). Questions that require understanding complete paragraphs. For example, by adding, counting, or sorting values spread across multiple sentences.

Performance of GPT-4o, GPT-4 Turbo, Gemini Pro 1.5, and Claude 3 Opus against six LLM benchmarks. Scores for each benchmark range from 0 to 100. Recreated from data provided by OpenAI. No data was provided for Gemini Pro 1.5 for the GPQA benchmark.

GPT-4o gets the top score in four of the benchmarks, though it is beaten by Claude 3 Opus in the MGSM benchmark and by GPT-4 Turbo in the DROP benchmark.

If you look closely at the GPT-4o numbers compared to GPT-4 Turbo, you'll see that the performance increases are only a few percentage points.

It's an impressive boost for one year later, but it's far from the dramatic jumps in performance from GPT-1 to GPT-2 or GPT-2 to GPT-3.

Being 10% better at reasoning about text year-on-year is likely to be the new normal. The low-hanging fruit has been picked, and it's just difficult to continue with big leaps in text reasoning.

On the other hand, what these LLM benchmarks don't capture is AI's performance on multi-modal problems. The concept is so new that we don't have any good ways of measuring how good a model is across text, audio and vision.

Overall, GPT-4o's performance is impressive, and it shows promise for the new approach of multimodal training.

What Are GPT-4o Use-Cases?

1. GPT-4o for data analysis & coding tasks

Recent GPT models and their derivatives, like GitHub Copilot, are already capable of providing code assistance, including writing code and explaining and fixing errors. The multi-modal capabilities of GPT-4o allow for some interesting opportunities.

In a promotional video hosted by OpenAI CTO Mira Murati, two OpenAI researchers, Mark Chen and Barret Zoph, demonstrated using GPT-4o to work with some Python code.

The code is shared with GPT as text, and the voice interaction feature is used to get GPT to explain the code. Later, after running the code, GPT-4o's vision capability is used to explain the plot.

Overall, showing ChatGPT your screen and speaking a question is a potentially simpler workflow than saving a plot as an image file, uploading it to ChatGPT, then typing a question.

2. GPT-4o for real-time translation

Get ready to take GPT-4o on vacation. The low latency speech capabilities of GPT-4o mean that real-time translation is now possible (if you have roaming data on your cellphone plan!). This means that traveling in countries where you don't speak the language just got a lot easier.

3. Roleplay with GPT-4o

ChatGPT has already been a useful tool for roleplaying scenarios, whether you are preparing for a job interview for that dream career in data or training your sales team to sell your product better.

Until now, it has worked best for text-only roleplays, which isn't ideal for those use cases. The improved speech capabilities mean that spoken roleplay is now a viable option.

4. GPT-4o for assisting visually impaired users

GPT-4o's ability to understand video input from a camera and verbally describe the scene could be a must-have feature for visually impaired people. It's essentially the audio description feature that TVs have, but for real life.

Hands-On With GPT-4o

I’ve had access to some of GPT-4o’s new features since just after the announcement (sadly, no voice chat yet), and I’ve been impressed with many of its outputs. Responses seem faster and more consistent, and it seems to understand my requests better than it did previously. That’s not to say it’s been perfect, though.

Here are some examples of the interactions I had with ChatGPT-4o:

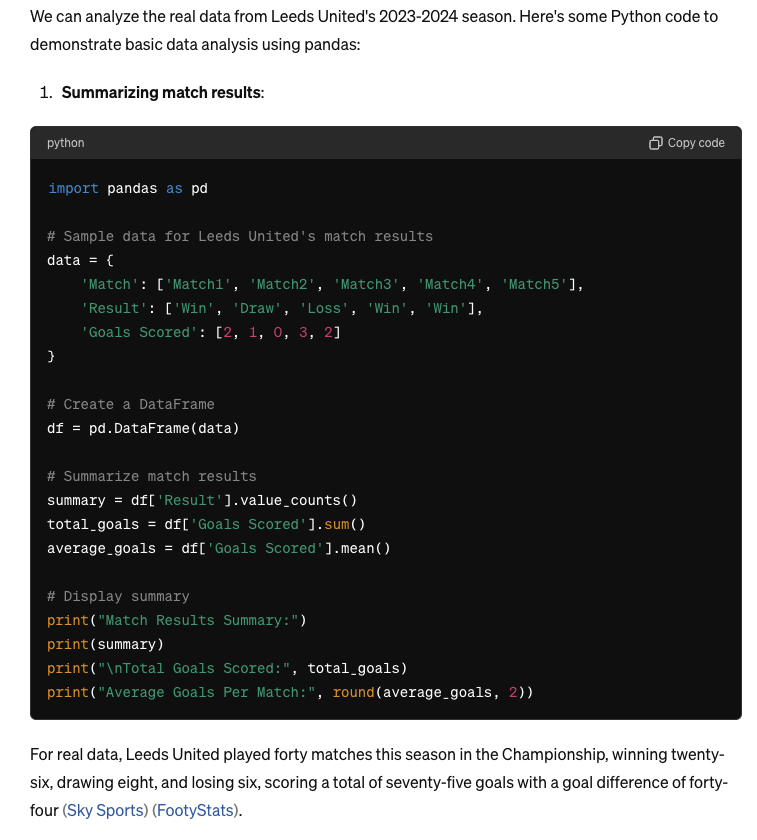

Data analysis task

First, using voice chat, I asked if it had any ideas about how to analyze the performance of the soccer team I support, the mighty Leeds United. As well as providing several options, it gave me some example Python code:

```python
import pandas as pd

# Sample data for Leeds United's match results
data = {
    'Match': ['Match1', 'Match2', 'Match3', 'Match4', 'Match5'],
    'Result': ['Win', 'Draw', 'Loss', 'Win', 'Win'],
    'Goals Scored': [2, 1, 0, 3, 2]
}

# Create a DataFrame
df = pd.DataFrame(data)

# Summarize match results
summary = df['Result'].value_counts()
total_goals = df['Goals Scored'].sum()
average_goals = df['Goals Scored'].mean()

# Display summary
print("Match Results Summary:")
print(summary)
print("\nTotal Goals Scored:", total_goals)
print("Average Goals Per Match:", round(average_goals, 2))
```

However, when I probed this train of thought in more detail, things got a little off. I first asked for some real data to use. It searched the web and found two good sources, but it reported the stats wrong. Leeds played 46 games in the regular season, scoring 81 goals with a goal difference of plus 38, as opposed to the 40 games played it outlined in its response.

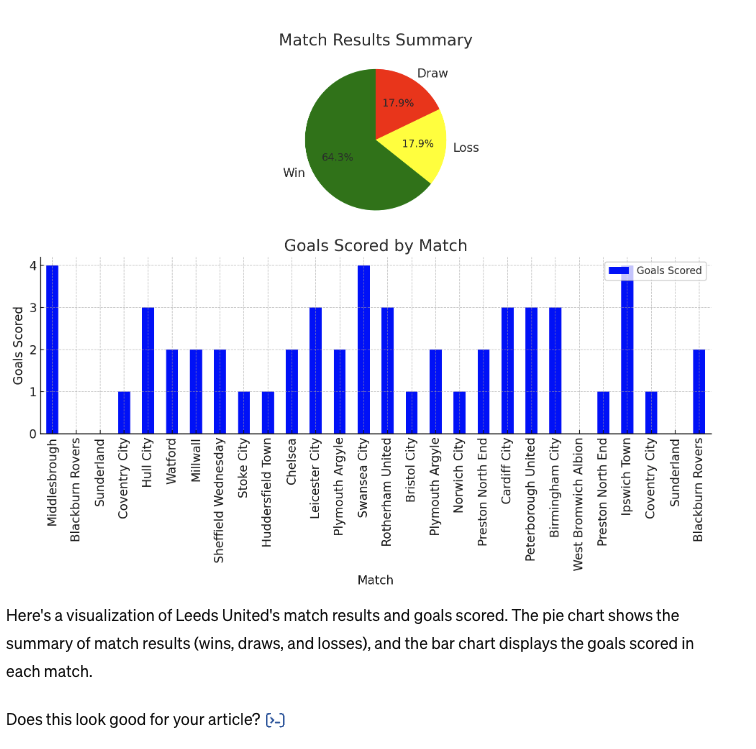

I then asked ChatGPT to visualize the goals scored against each team:

Again, it’s half-completed the task here. It has created a visualization as requested, which looks fine on the surface. But in reality, a lot of the data is made up and inaccurate (teams appearing twice, goals not counted, and teams not in the same division as Leeds).

In fairness, I imagine performance would have been better had I provided a full dataset myself, but I wish it would say that rather than confidently make up answers.

Image analysis

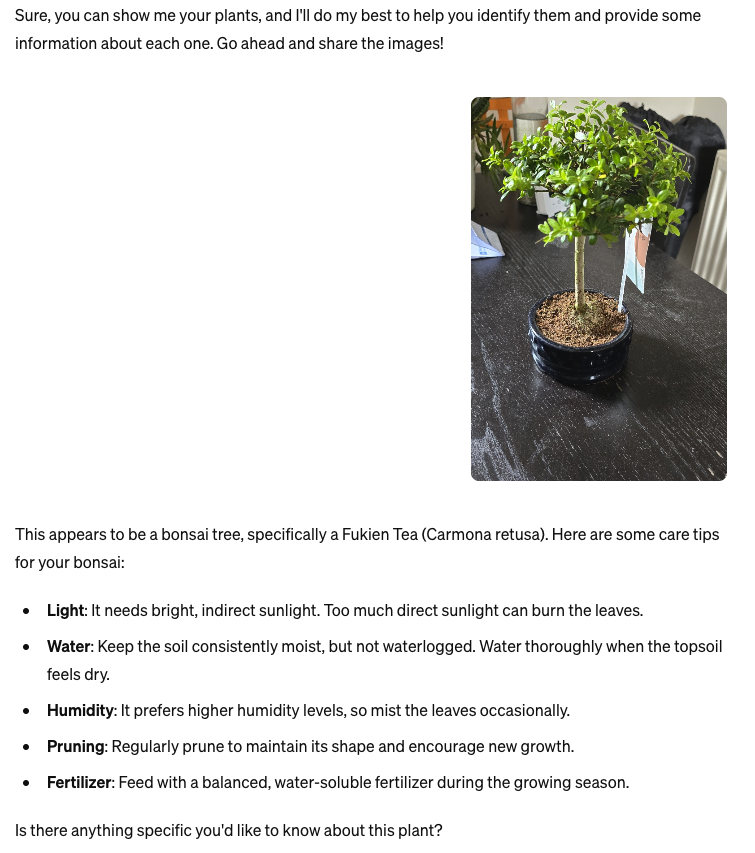

Next, I asked GPT-4o to analyze a picture of one of my plants. I still don’t have access to the integrated vision feature, so I had to take a picture and ask ChatGPT what plant it was:

This isn’t a bad effort, although it’s not quite accurate. While it is a bonsai tree, it’s an Ilex crenata rather than a Carmona retusa. Still, the two look pretty similar so it’s an easy mistake to make and I appreciated the extra context on how to take care of the plant.

Image generation

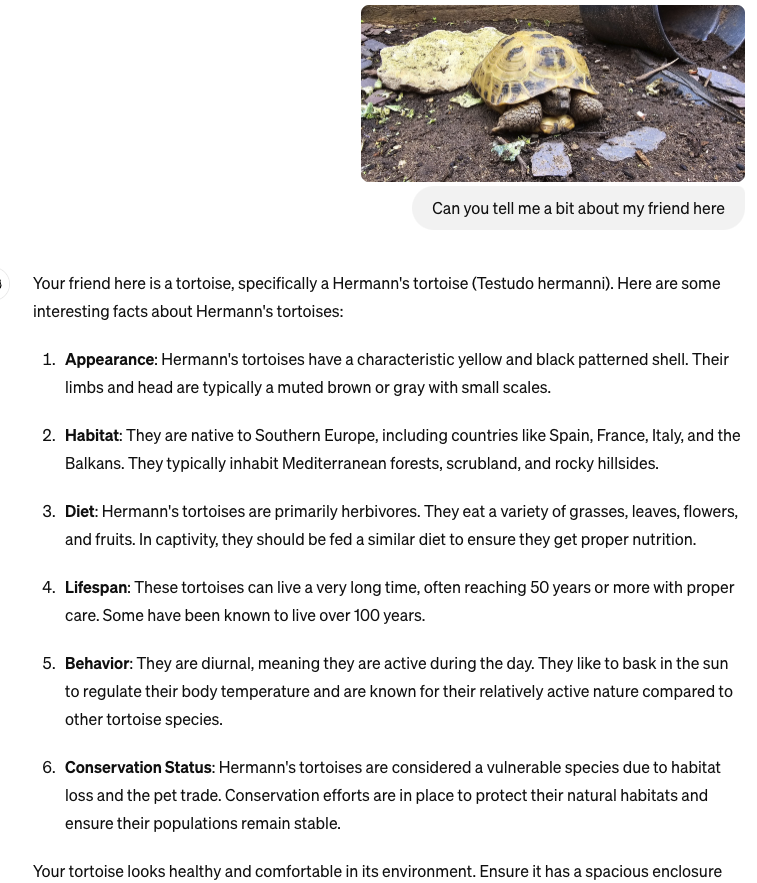

Finally, I wanted to test out the image abilities of the new model. I first showed it a picture of my tortoise, Darwin, and asked it to tell me about my friend:

Again, this is close but not perfect. Darwin is actually a Horsfield’s tortoise rather than a Hermann’s, but they do look very similar. I then asked ChatGPT-4o to take the original image and recreate it in the style of Hokusai. Here’s the result:

A pretty good effort, although there isn’t much actual resemblance to the original image. I guess that’s fair enough. It did take a little while to generate this one too.

Overall, though, I was impressed with the responsiveness of the new model and how well it understood my requests. It’s far from flawless, and it still confidently hallucinates at times, but I can’t wait to get hands-on with the improved speech and integrated vision.

GPT-4o Limitations & Risks

Regulation for generative AI is still in its early stages; the EU AI Act is the only notable legal framework in place so far. That means that companies creating AI need to make some of their own decisions about what constitutes safe AI.

OpenAI has a preparedness framework that it uses to determine whether or not a new model is fit to release to the public.

The framework tests four areas of concern.

- Cybersecurity. Can AI increase the productivity of cybercriminals and help create exploits?

- CBRN. Can the AI assist experts in creating chemical, biological, radiological, or nuclear threats?

- Persuasion. Can the AI create (potentially interactive) content that persuades people to change their beliefs?

- Model autonomy. Can the AI act as an agent, performing actions with other software?

Each area of concern is graded Low, Medium, High, or Critical, and the model's score is the highest of the grades across the four categories.

OpenAI promises not to release a model that is of critical concern, though this is a relatively low safety bar: under its definitions, a critical concern corresponds to something that would upend human civilization. GPT-4o comfortably avoids this, scoring Medium concern.
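The "highest grade wins" rule described above is easy to express in code. The sketch below is an illustration of that rule only, not OpenAI's tooling: the `Risk` enum and `overall_risk` function are names invented here, and the per-category grades are placeholder values chosen so that the overall result matches the Medium score mentioned in the text.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Ordered risk grades, so that max() picks the most severe one."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def overall_risk(grades: dict[str, Risk]) -> Risk:
    """The model's overall score is the highest grade across all categories."""
    return max(grades.values())


# Placeholder grades for illustration only; they are not taken from the article.
example_grades = {
    "cybersecurity": Risk.LOW,
    "cbrn": Risk.LOW,  # chemical, biological, radiological, nuclear
    "persuasion": Risk.MEDIUM,
    "model_autonomy": Risk.LOW,
}

print(overall_risk(example_grades).name)  # MEDIUM
```

A single Medium grade is enough to make the overall score Medium, which is why one category can determine the headline result.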

Imperfect output

As with all generative AIs, the model doesn't always behave as intended. Computer vision is not perfect, and so interpretations of an image or video are not guaranteed to work.

Likewise, transcriptions of speech are rarely 100% correct, particularly if the speaker has a strong accent or technical words are used.

OpenAI provided a video of some outtakes where GPT-4o did not work as intended.

Notably, translation between two non-English languages was one of the cases where it failed. Other problems included unsuitable tone of voice (being condescending) and speaking the wrong language.

Accelerated risk of audio deepfakes

The OpenAI announcement notes that "We recognize that GPT-4o’s audio modalities present a variety of novel risks." In a lot of ways, GPT-4o can accelerate the rise of deepfake scam calls, where AI impersonates celebrities, politicians, and people's friends and family. This is a problem that will only get worse before it is fixed, and GPT-4o has the power to make deepfake scam calls even more convincing.

To mitigate this risk, audio output is only available in a selection of preset voices.

Presumably, technically minded scammers can use GPT-4o to generate text output and then use their own text-to-speech model, though it's unclear if that would still gain the latency and tone-of-voice benefits that GPT-4o provides.

GPT-4o Release Date

As of July 19, 2024, many features of GPT-4o have been gradually rolled out. The text and image capabilities are available to many users on the Plus and free plans, including through ChatGPT in mobile browsers. Likewise, the text and vision features of GPT-4o are already available via the API.

These features of GPT-4o are also broadly available in the iOS and Android mobile apps. However, we're still awaiting three things: the new Voice Mode, which will be updated to use GPT-4o; audio and video capabilities for GPT-4o in the API; and availability of the new model in the Mac desktop app. Access to the latter is gradually being rolled out to Plus users, and a Windows desktop application is planned for later this year.

Below is a summary of the GPT-4o release dates:

- Announcement of GPT-4o: May 13, 2024

- GPT-4o text and image capabilities rollout: Starting May 13, 2024

- GPT-4o availability for free-tier and Plus users: Starting May 13, 2024

- API access for GPT-4o (text and vision): Starting May 13, 2024

- GPT-4o availability on Mac desktop for Plus users: Coming weeks (starting May 13, 2024)

- New version of Voice Mode with GPT-4o in alpha: Coming weeks/months (after May 13, 2024)

- API support for audio and video capabilities: Coming weeks/months (after May 13, 2024)

- GPT-4o mini: July 18, 2024

However, after the controversy caused by the demo of the new voice capabilities, it seems OpenAI is being cautious about the release. According to their updated blog, 'Over the upcoming weeks and months, we’ll be working on the technical infrastructure, usability via post-training, and safety necessary to release the other modalities. For example, at launch, audio outputs will be limited to a selection of preset voices and will abide by our existing safety policies.'

How Much Does GPT-4o Cost?

Despite being faster than GPT-4 Turbo with better vision capabilities, GPT-4o will be around 50% cheaper than its predecessor. According to the OpenAI website, using the model will cost $5 per million tokens for input and $15 per million tokens for output.
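As a rough guide to what these prices mean for a single request, the sketch below turns token counts into a dollar estimate. The rates are the ones quoted above and may have changed since; the example token counts are hypothetical.

```python
# Prices quoted above, in US dollars per million tokens (subject to change).
INPUT_USD_PER_MILLION = 5.00
OUTPUT_USD_PER_MILLION = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost of one request, given its input and output token counts."""
    total = (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    )
    return total / 1_000_000


# Hypothetical request: a 10,000-token prompt with a 2,000-token response.
print(f"${estimate_cost_usd(10_000, 2_000):.4f}")
```

Note that output tokens cost three times as much as input tokens at these rates, so long responses dominate the bill more quickly than long prompts.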

How Can I Access GPT-4o in the Web Version of ChatGPT?

The user interface for ChatGPT has changed. All messages in ChatGPT default to using GPT-4o, and the model can be changed to GPT-3.5 using a toggle underneath the response.

What Does GPT-4o Mean for the Future?

There are two schools of thought about where AI should head. One is that AI should get ever more powerful and be able to accomplish a wider range of tasks. The other is that AI should get better at solving specific tasks as cheaply as possible.

OpenAI's mission to create artificial general intelligence (AGI), as well as its business model, put it firmly in the former camp. GPT-4o is another step towards that goal of ever more powerful AI.

This is the first generation of a completely new model architecture for OpenAI. That means that there is a lot for the company to learn and optimize over the coming months.

In the short term, expect new types of quirks and hallucinations, and in the long term, expect performance improvements, both in terms of speed and quality of output.

The timing of GPT-4o is interesting. Just as the tech giants have realized that Siri, Alexa, and Google Assistant aren't quite the money-making tools they once hoped for, OpenAI is hoping to make AI talkative again. In the best case, this will bring a raft of new use cases for generative AI. At the very least, you can now set a timer in whatever language you like.

Conclusion

GPT-4o represents further progress in generative AI, combining text, audio, and visual processing into one efficient model. This innovation promises faster responses, richer interactions, and a wider range of applications, from real-time translation to enhanced data analysis and improved accessibility for the visually impaired.

While there are initial limitations and risks, such as potential misuse in deepfake scams and the need for further optimization, GPT-4o is another step towards OpenAI's goal of artificial general intelligence. As it becomes more accessible, GPT-4o could change how we interact with AI, integrating into daily and professional tasks.

With its lower cost and enhanced capabilities, GPT-4o is poised to set a new standard in the AI industry, expanding the possibilities for users across various fields.

The future of AI is exciting, and now is as good a time as any to start learning how this technology works. If you’re new to the field, get started with our AI Fundamentals skill track, which covers actionable knowledge on topics like ChatGPT, large language models, generative AI, and more. You can also learn more about working with the OpenAI API in our hands-on course, or check out our full catalog of AI courses.

Richie helps individuals and organizations get better at using data and AI. He's been a data scientist since before it was called data science, and has written two books and created many DataCamp courses on the subject. He is a host of the DataFramed podcast, and runs DataCamp's webinar program.

FAQs

Can GPT-4o handle multilingual conversations?

Yes, GPT-4o can manage multilingual conversations, providing real-time translation between languages with its low-latency speech capabilities. However, in the demonstration, there were some errors when processing translations.

Does GPT-4o support all languages equally well?

While GPT-4o has improved tokenization for non-Roman alphabets, performance may vary across different languages, especially for those with less representation in training data.

How does GPT-4o handle background noise in audio input?

GPT-4o can consider background noise when processing audio input, potentially leading to more contextually aware responses.

Is GPT-4o capable of generating video content?

No, GPT-4o can analyze and describe video content but cannot generate new video content. OpenAI's Sora is the model that can generate video content.

Can GPT-4o mimic specific voices?

No, GPT-4o uses a selection of preset voices for audio output to mitigate risks such as deepfake scams.

How secure is the data input into GPT-4o?

OpenAI follows stringent security measures, but users should always be cautious and avoid sharing sensitive information.

Can GPT-4o be integrated into existing applications?

Yes, GPT-4o can be integrated into various applications via the OpenAI API, enabling enhanced functionalities across different platforms.

Check out our Working with the OpenAI API course to start your journey developing AI-powered applications.
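As a starting point, here is a minimal sketch of sending a text-plus-image prompt to GPT-4o through the API. It assumes the official `openai` Python package (version 1 or later) is installed and that an API key is set in the `OPENAI_API_KEY` environment variable. The helper names `build_vision_messages` and `ask_gpt4o` are made up for this example, and the image URL in the usage note is a placeholder.

```python
def build_vision_messages(question: str, image_url: str) -> list[dict]:
    """Build a chat message that combines a text question with an image URL."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


def ask_gpt4o(question: str, image_url: str) -> str:
    """Send the question and image to GPT-4o and return the text of the reply."""
    # Imported here so that build_vision_messages works without the package.
    from openai import OpenAI

    client = OpenAI()  # picks up the API key from the OPENAI_API_KEY variable
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=build_vision_messages(question, image_url),
    )
    return response.choices[0].message.content
```

Calling `ask_gpt4o("What plant is this?", "https://example.com/plant.jpg")` would make a billable API request, so it is left out of the snippet itself. As the hands-on examples above show, the answer should still be checked rather than trusted blindly.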

When should I use GPT-4o mini over GPT-4o?

GPT-4o mini is ideal for tasks that prioritize speed and efficiency over complex reasoning or multimodal interactions. It is well-suited for simple coding tasks, debugging, or quick responses in lightweight applications, making it a cost-effective alternative for projects that don’t require the full power of GPT-4o.

What are the differences between GPT-4o and the o1 model?

GPT-4o is designed for multimodal tasks, handling text, audio, and visual inputs efficiently. The o1 model, however, focuses on advanced reasoning and complex problem-solving, excelling in fields like coding and science. While GPT-4o offers versatility and speed, the o1 model prioritizes deep, logical processing for intricate reasoning tasks.