
On Wednesday, July 24, 2024, Mistral AI announced Mistral Large 2, the latest generation of their flagship large language model.

Compared to its predecessor, Mistral Large 2 brings significant advancements in code generation, mathematics, reasoning, and multilingual support. This new model aims to bridge the gap between open-source and closed-source LLMs, offering an alternative for various applications.

What makes Mistral Large 2 stand out? How does it perform against other leading models like GPT-4o, Llama 3.1, and Claude 3 Opus? And what new features does it bring to the table?

Read on to discover the capabilities, performance, and potential applications of Mistral Large 2.


What Is Mistral Large 2?

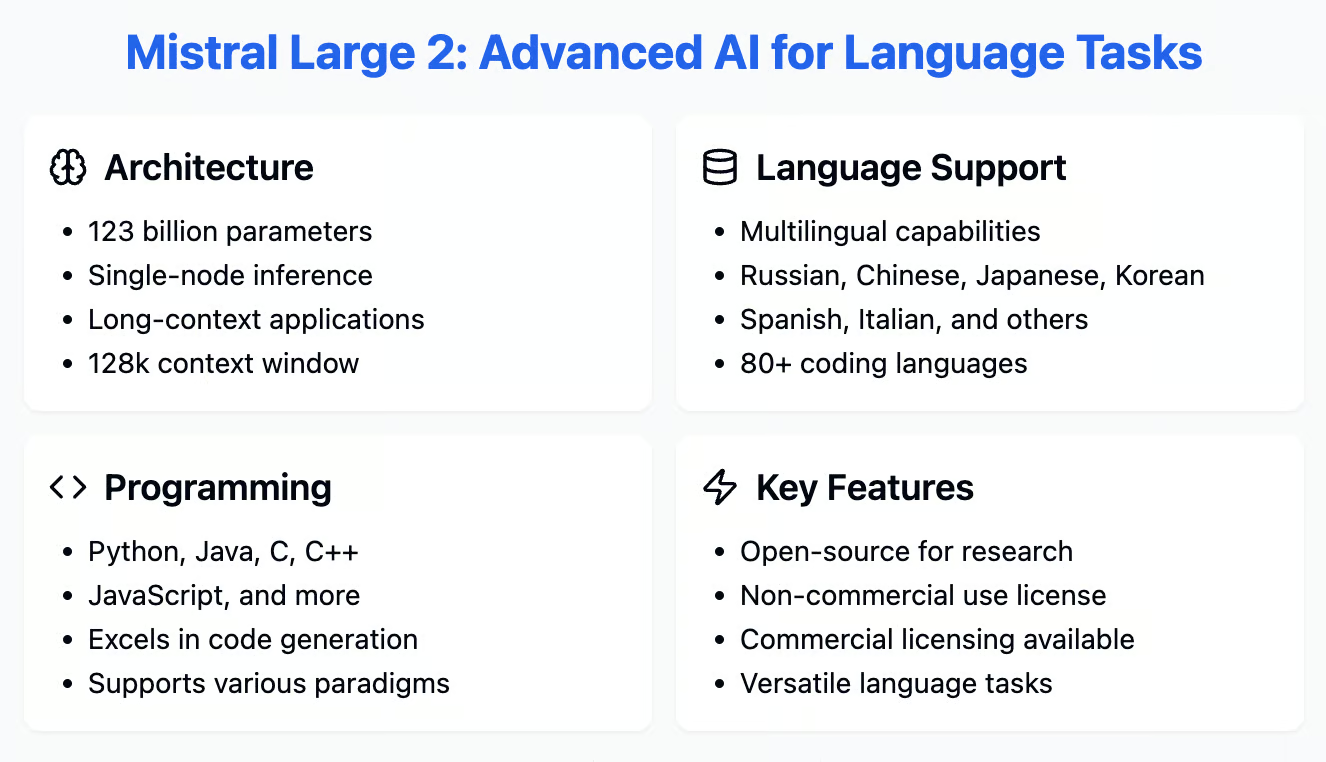

Mistral Large 2 is the newest language model from Mistral AI, designed to excel in areas like code generation, mathematics, and multilingual tasks. Let’s take a look at its key features and capabilities.

123 billion parameters

Mistral Large 2 has 123 billion parameters, which gives it the capacity to understand and generate complex language with high accuracy.

This large size allows the model to handle intricate problems with better precision. The model is also built for single-node inference with long-context applications in mind, so it can be served from one node rather than being spread across several machines.
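To get a feel for what 123 billion parameters means for single-node deployment, a back-of-the-envelope estimate of the memory needed to hold the weights can help. The sketch below is plain arithmetic. The bytes-per-parameter figures are the standard sizes of common numeric formats, the function name is illustrative, and the estimate ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
PARAMS = 123e9  # parameter count stated for Mistral Large 2

# Standard storage sizes of common numeric formats, in bytes per parameter.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


for fmt, size in BYTES_PER_PARAM.items():
    print(f"{fmt}: ~{weight_memory_gb(PARAMS, size):.0f} GB")
```

In 16-bit precision the weights alone come to roughly 246 GB, which is why serving the model calls for a node with several high-memory accelerators.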

128k context window

A 128k-token context window allows Mistral Large 2 to maintain coherence and relevance over long conversations or documents, providing consistent and meaningful outputs throughout extended interactions.
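In practice, a context window is a budget: the prompt and the reply together have to fit inside it. The sketch below shows one rough way to check whether a document is likely to fit and to split it if not. It rests on two assumptions that are not from the announcement: "128k" is taken as 128,000 tokens, and tokens are estimated at about four characters each, a common rule of thumb for English text. A real tokenizer will give different counts, and all function names here are illustrative.

```python
import math

CONTEXT_WINDOW = 128_000  # "128k" taken as 128,000 tokens (assumption)
CHARS_PER_TOKEN = 4.0     # rough heuristic for English text (assumption)


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer will give different counts."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 1_000) -> bool:
    """True if the estimated prompt size leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW


def split_into_chunks(text: str, max_tokens: int) -> list[str]:
    """Split text into pieces whose estimated size stays within max_tokens."""
    max_chars = int(max_tokens * CHARS_PER_TOKEN)
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


report = "word " * 1000  # 5,000 characters of placeholder text
print(estimate_tokens(report), fits_in_context(report))
```

Splitting on raw character offsets can cut a sentence in half, so a real pipeline would more likely split on paragraph or section boundaries.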

Multiple language and programming support

Mistral Large 2 supports a wide range of languages, including Russian, Chinese, Japanese, Korean, Spanish, Italian, and many others.

It also excels in over 80 coding languages, such as Python, Java, C, C++, and JavaScript, making it a versatile tool for users worldwide.

Open-source and accessible

Mistral Large 2 is available under the Mistral Research License, which permits use and modification for research and non-commercial purposes.

This makes it accessible for researchers and developers interested in exploring and improving its capabilities.

Commercial licensing

For commercial use, Mistral Large 2 requires a Mistral Commercial License. Interested parties can contact Mistral to obtain this license.

How Mistral Large 2 Works

So, how does Mistral Large 2 work? It uses a decoder-only Transformer architecture, a popular and effective design for modern language models. This setup allows the model to handle various language tasks efficiently. Here’s a look at two key ways Mistral Large 2 shines in managing a wide range of language and coding tasks.
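Before turning to those two points, the "decoder-only" idea can be made concrete. In a decoder-only Transformer, each token may attend only to itself and to earlier tokens, which is enforced with a causal mask over the attention scores. The sketch below is a generic, pure-Python illustration of that masking step with made-up scores. It is not Mistral's implementation, and the function names are illustrative.

```python
import math


def causal_mask(seq_len: int) -> list[list[bool]]:
    """mask[i][j] is True when position i is allowed to attend to position j."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def masked_softmax(scores: list[float], allowed: list[bool]) -> list[float]:
    """Softmax over the allowed positions only; blocked positions get weight 0."""
    top = max(s for s, ok in zip(scores, allowed) if ok)
    exps = [math.exp(s - top) if ok else 0.0 for s, ok in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]


# Toy attention scores for a 4-token sequence (made-up numbers).
scores = [[0.5, 1.0, -0.3, 2.0] for _ in range(4)]
mask = causal_mask(4)
weights = [masked_softmax(row, allowed) for row, allowed in zip(scores, mask)]

for row in weights:
    print([round(w, 2) for w in row])
```

The first token can only attend to itself, so its row of weights is all zeros except the first entry. Each later row spreads its weight over one more position.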

Training on massive datasets

Mistral Large 2 was trained on a vast amount of text and code across many languages and subjects. This extensive training helps the model understand a broad range of topics and skills, from technical documents to everyday conversations and code snippets.

The diverse dataset also enhances the model’s ability to help with programming tasks like code generation and debugging.

Reducing hallucinations

A common issue with large language models is that they sometimes produce information that sounds right but isn’t accurate. To address this, Mistral AI focused on minimizing these “hallucinations” by carefully fine-tuning the model.

They have added stricter accuracy checks and feedback systems to ensure the model gives reliable information. Mistral Large 2 is also designed to recognize when it doesn’t have enough information to provide a confident answer, reducing the chances of misleading or incorrect responses. This focus on accuracy makes Mistral Large 2 a reliable tool for users who need precise and trustworthy information.

Applications of Mistral Large 2

Like its predecessor, Mistral Large 2 is a versatile tool with a wide range of uses. It’s great for coding tasks, including generating, completing, and debugging code.

It also tackles complex math problems and offers clear explanations, making it useful for students and professionals.

The model’s strong reasoning and logical skills are ideal for answering questions and analyzing text, providing deep insights into written content.

Lastly, Mistral Large 2’s multilingual support aids in translation, language learning, and communication across different cultures.

While it mainly handles text now, future updates might expand its capabilities to work with images or audio.

Benchmarks and Performance

Mistral Large 2 is setting new standards for performance and cost efficiency. Here’s a look at how it performs across various benchmarks.

MMLU

On the Massive Multitask Language Understanding (MMLU) benchmark, Mistral Large 2 achieved an impressive 84.0% accuracy.

This benchmark tests the model’s ability to handle a wide range of tasks, from science and humanities to professional challenges. This high score highlights Mistral Large 2’s strong general knowledge and reasoning abilities.
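MMLU questions are multiple choice, so the headline number is simply the share of questions answered correctly. As a minimal sketch of that calculation, with a made-up answer key and made-up model choices rather than real benchmark data:

```python
def accuracy(predictions: list[str], answers: list[str]) -> float:
    """Fraction of questions where the predicted choice matches the answer key."""
    if not answers or len(predictions) != len(answers):
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


# Made-up example: five multiple-choice questions with options A-D.
answer_key = ["A", "C", "B", "D", "A"]
model_choices = ["A", "C", "D", "D", "A"]
print(f"accuracy: {accuracy(model_choices, answer_key):.1%}")
```

Read this way, a score of 84.0% means the model picked the right option on about 84 of every 100 questions.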

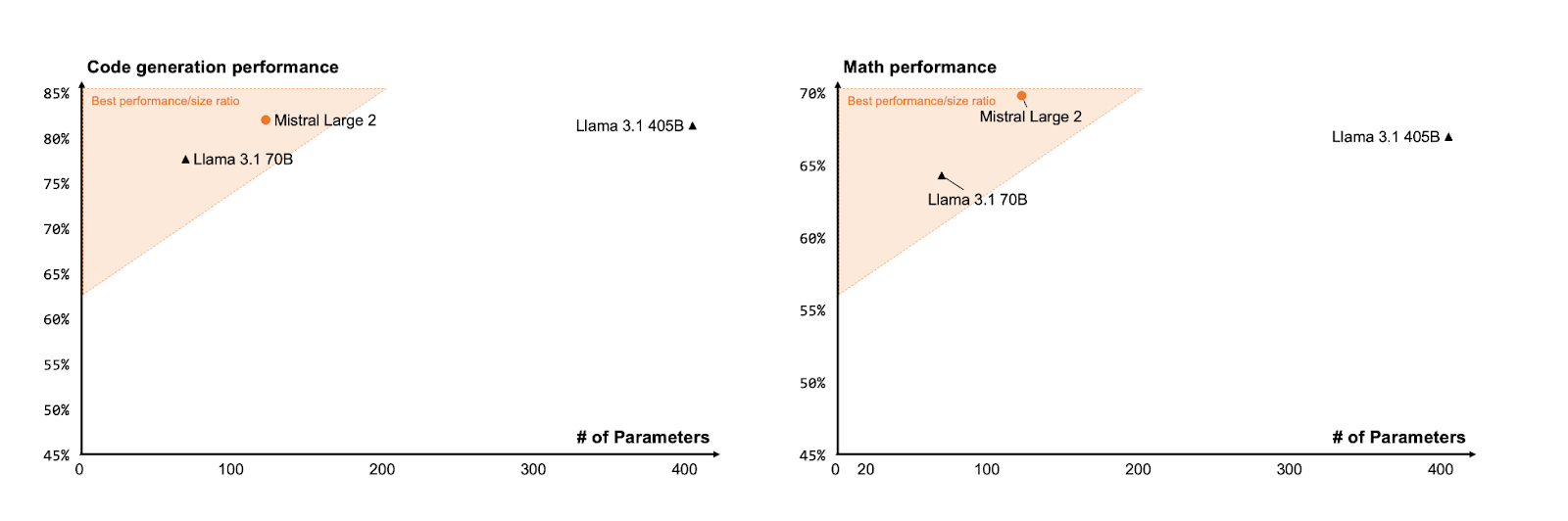

Code generation and math

Mistral Large 2 excels in code generation and math, achieving top scores in these areas while using fewer parameters than larger models like Llama 3.1 405B. Its high performance-to-size ratio makes it stand out, consistently outperforming both larger and smaller Llama 3.1 models.

Source: Mistral AI

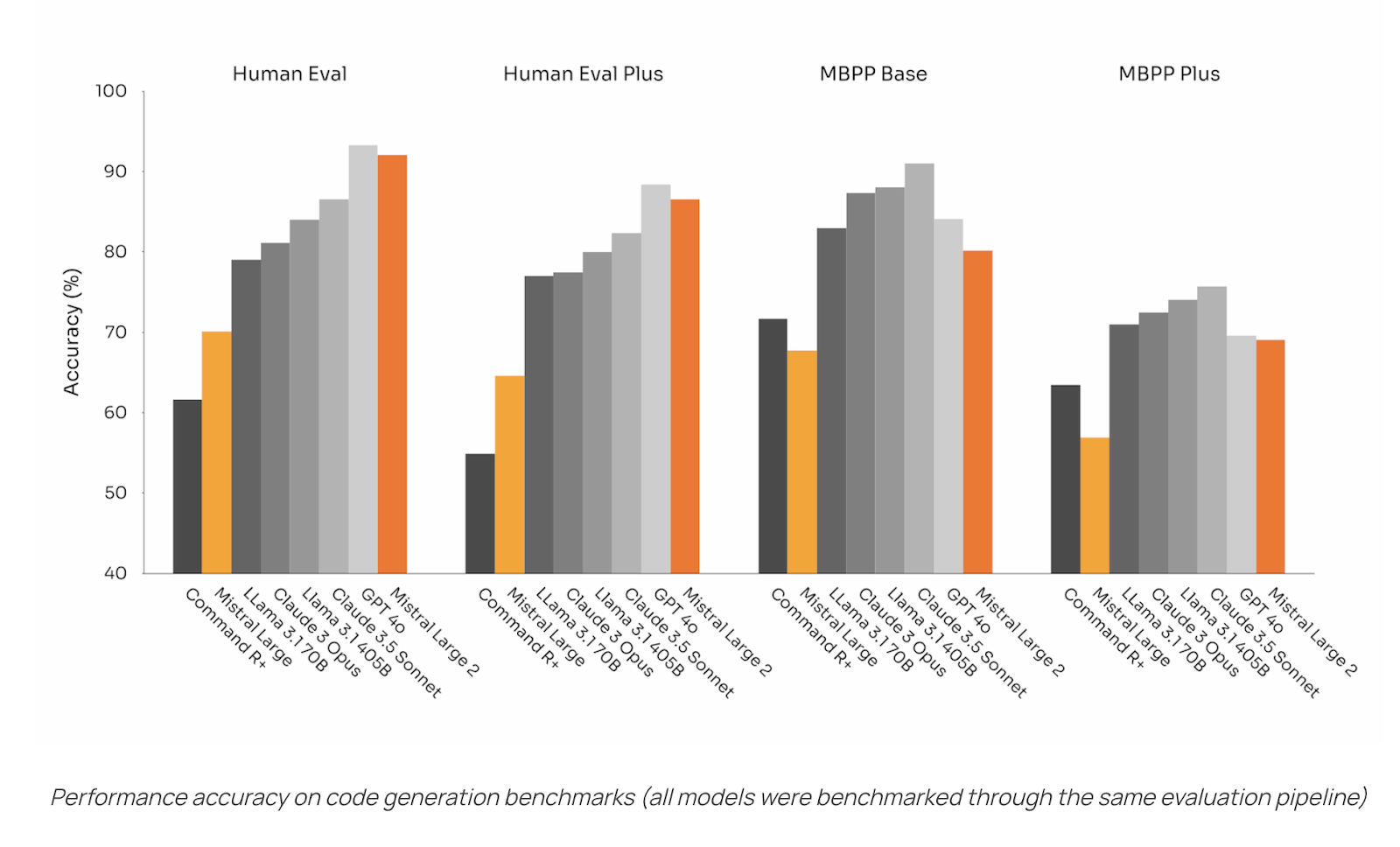

In code generation benchmarks, Mistral Large 2 ranks second only to GPT-4o, with impressive accuracy on HumanEval and HumanEval Plus. Although it ranks sixth on MBPP Base and MBPP Plus, it still performs well compared to other models.

Source: Mistral AI

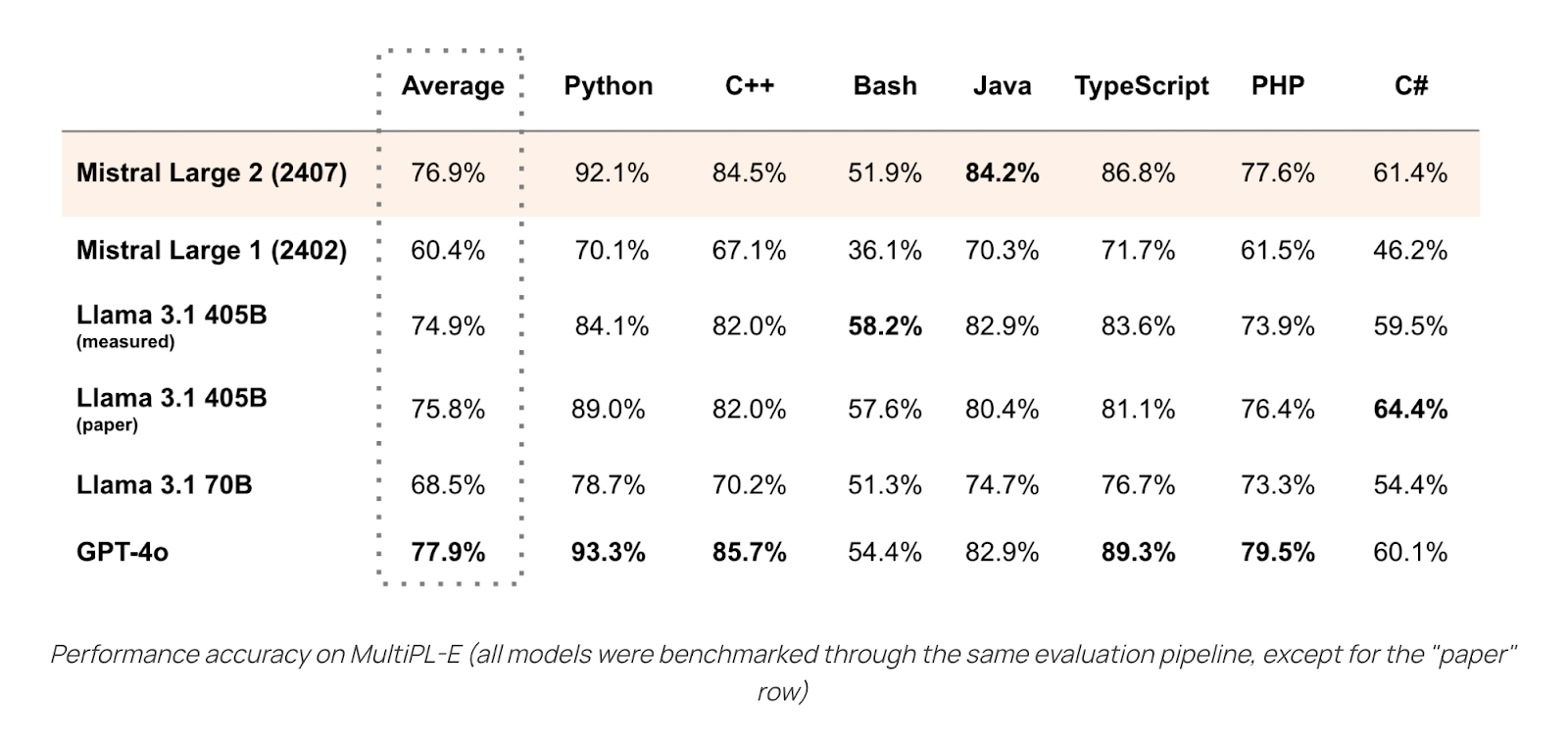

For multi-language code generation, Mistral Large 2 ranks second just behind GPT-4o and shows significant improvement over its predecessor. Overall, it’s highly efficient and versatile, particularly strong in handling code and math tasks.

Source: Mistral AI

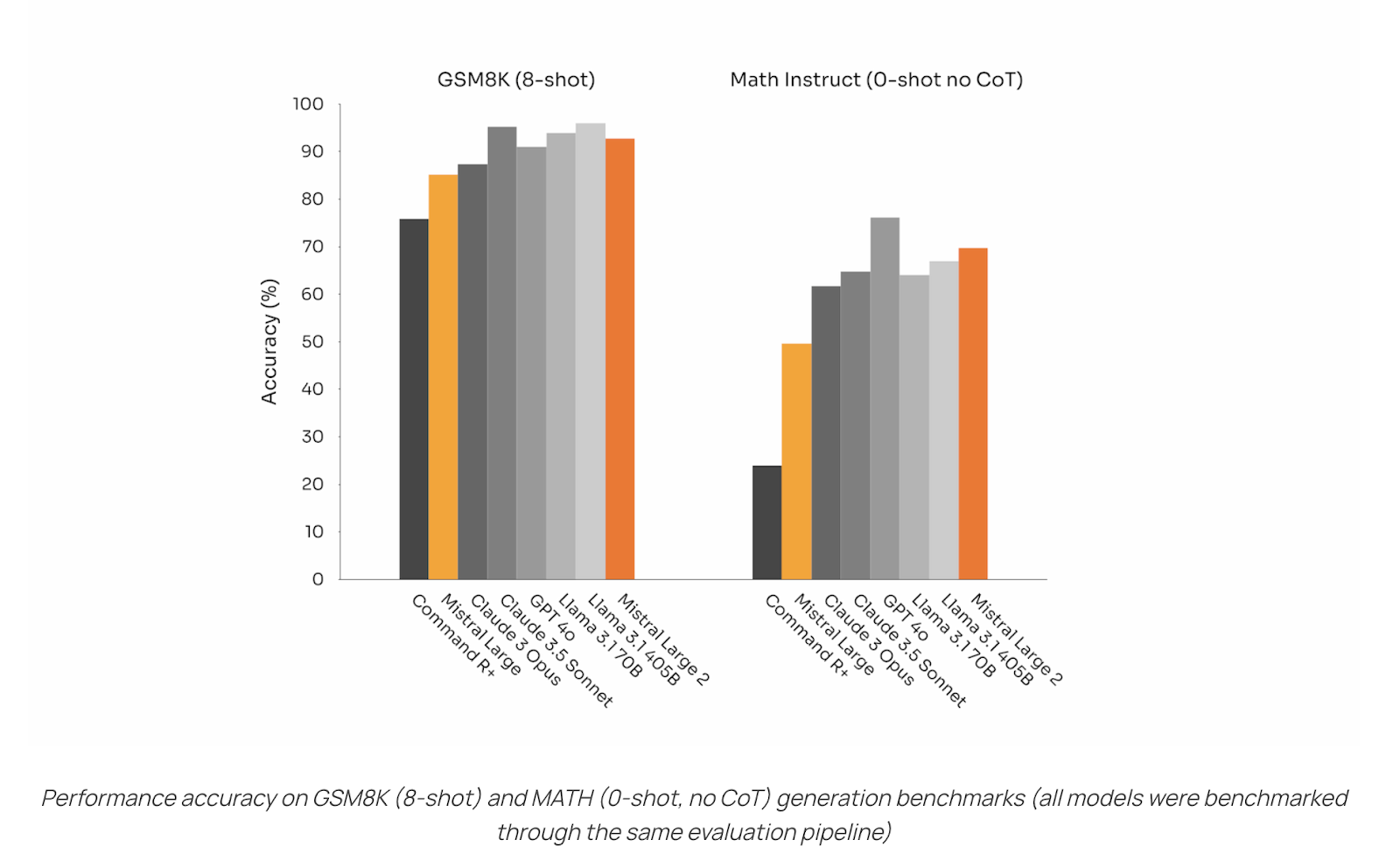

Mistral Large 2 performs well on GSM8K, coming in just behind Llama 3.1 70B. On the tougher Math Instruct benchmark, Mistral Large 2 ranks second only to GPT-4o, showing strong mathematical reasoning skills right out of the box. These results, especially on zero-shot tasks, reflect its advanced math abilities and solid training.

Source: Mistral AI

Overall, Mistral Large 2 excels in code generation and mathematical reasoning, fields that demand precision and reliability. Trained on a substantial corpus of code, it significantly outperforms its predecessor and is competitive with top models like GPT-4o and Llama 3.1 405B. Its performance shows it’s a powerful tool for software development and academic research.

Instruction following & alignment

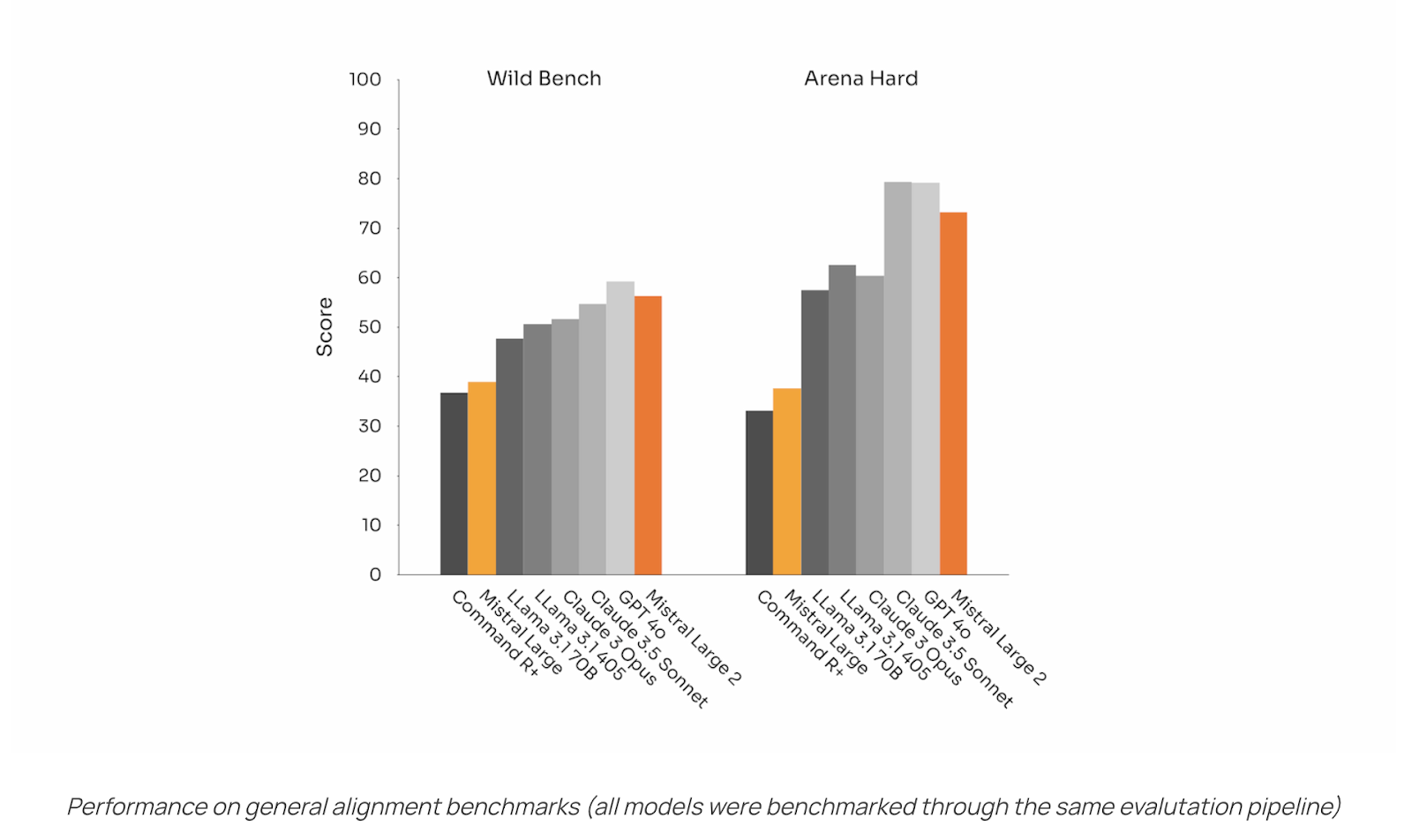

Another key strength of Mistral Large 2 is its improved instruction-following and conversational ability, which makes it better at following precise instructions and handling long conversations.

Mistral Large 2 performs well on WildBench, coming in second only to GPT-4o. On Arena Hard, it ranks third, behind GPT-4o and Claude 3.5 Sonnet.

Source: Mistral AI

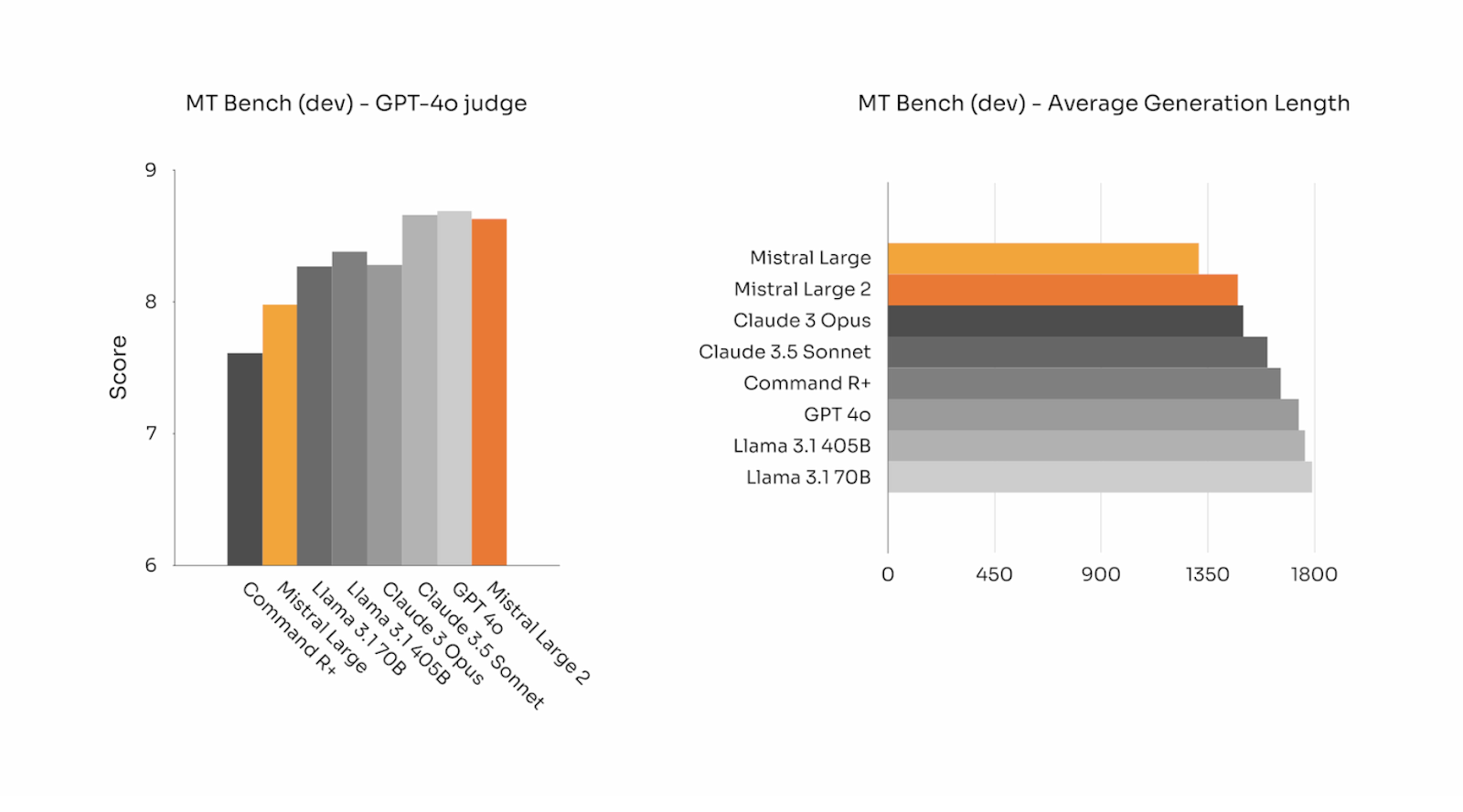

Mistral Large 2 also scores highly on MT Bench with GPT-4o as the judge, ranking third among the large models compared. It ranks second in generation length, just behind the original Mistral Large. This shows that Mistral Large 2 can deliver both detailed and high-quality responses.

Source: Mistral AI

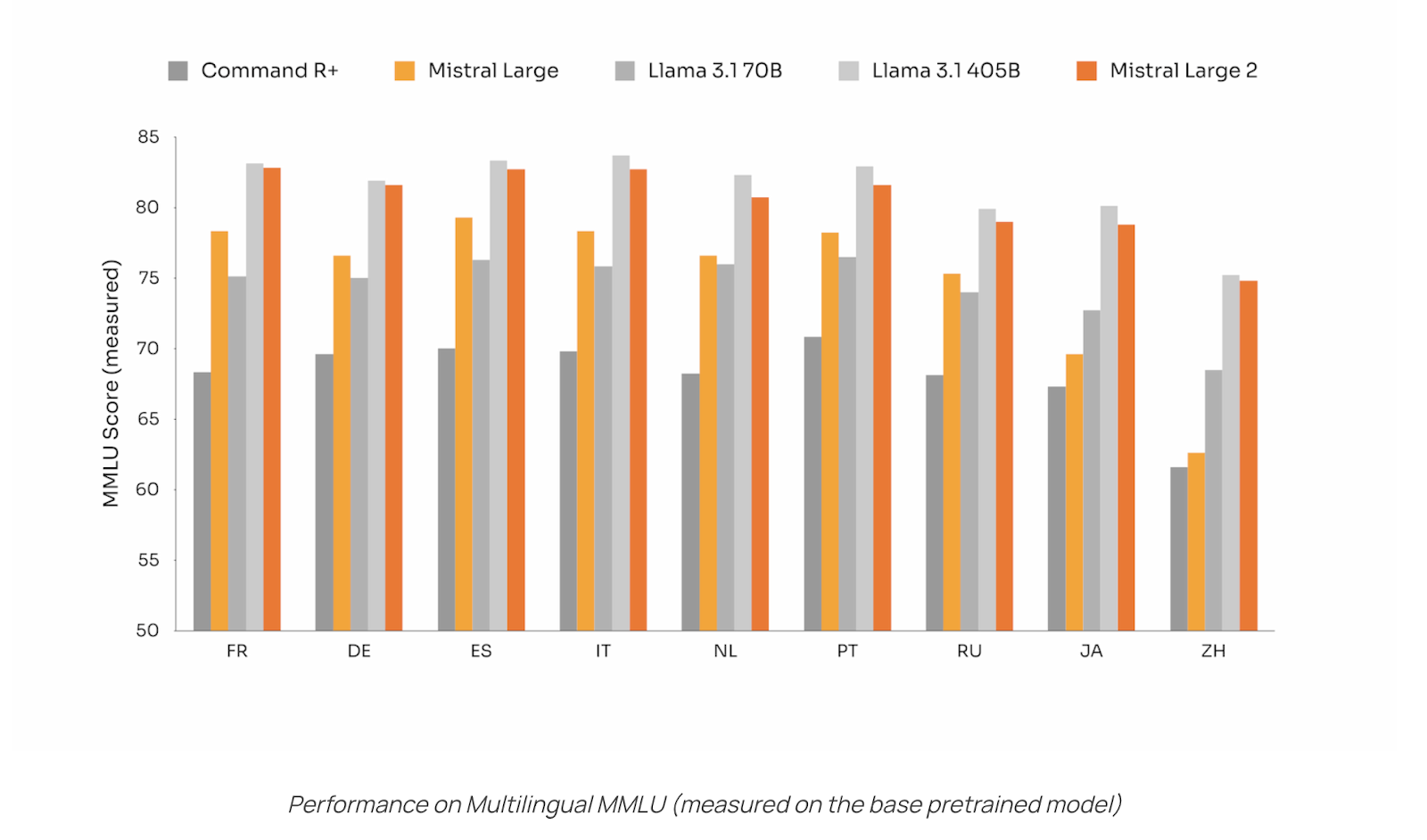

Multilingual MMLU

One of the standout features of Mistral Large 2 is its multilingual capability. On the multilingual MMLU benchmark, which evaluates performance across various languages, Mistral Large 2 delivers strong results in all tested languages, consistently ranking second behind the much larger Llama 3.1 405B model. This demonstrates Mistral Large 2’s strong balance between performance and efficiency.

Source: Mistral AI

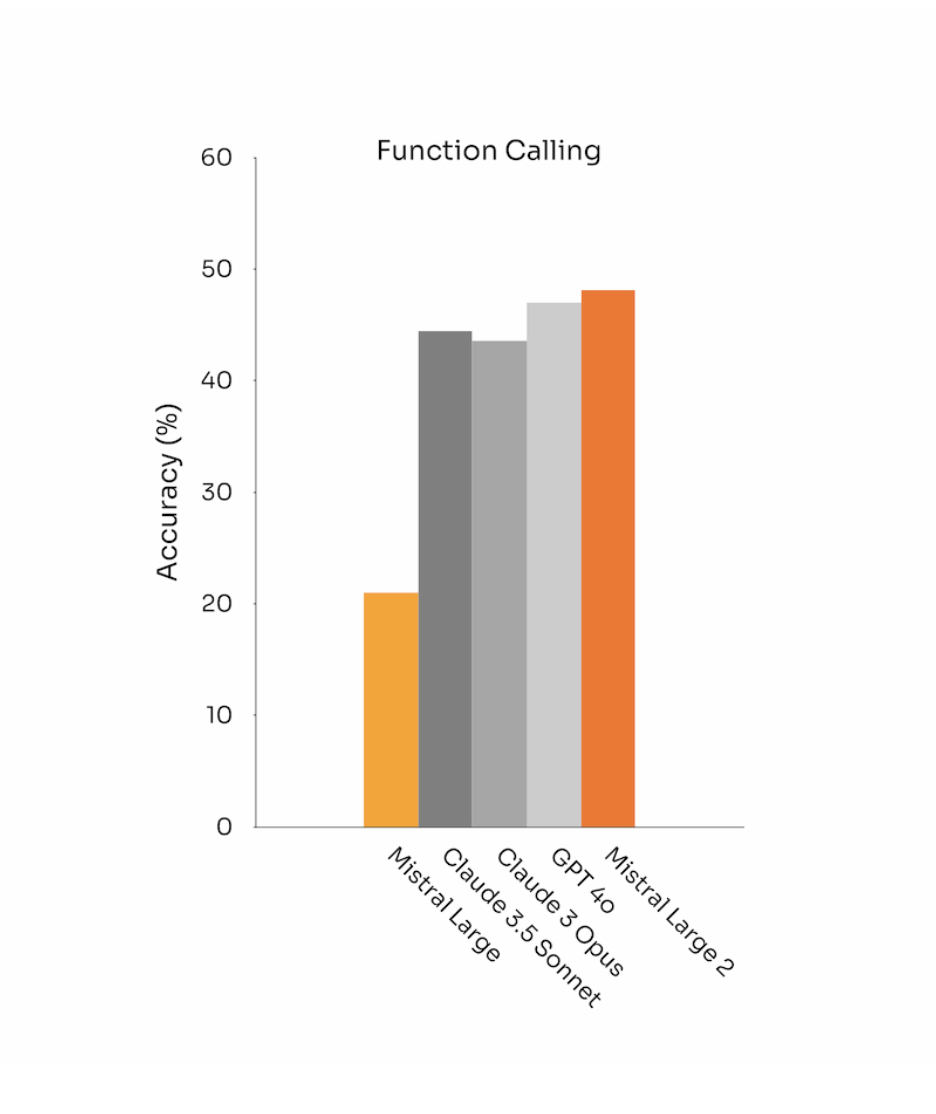

Function calling

Function calling is crucial because it allows the model to execute specific tasks or commands accurately, making it highly effective for practical applications that require precise actions based on user input.

Mistral Large 2 outperformed models such as GPT-4o and Claude 3.5 Sonnet in function calling. This result demonstrates Mistral Large 2's advanced capabilities and sets it apart from previous models and competitors.

Source: Mistral AI
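To make the idea of function calling concrete: the application describes its tools to the model, the model replies with the name of a tool and its arguments, and the application runs the matching function. The sketch below shows only the application side. The tool `get_weather`, the registry, and the hard-coded "model reply" are all hypothetical, and the tool description follows the JSON Schema style that many chat APIs use, which should be checked against the documentation of whichever API you call.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical local tool; a real one would query a weather service."""
    return f"Sunny in {city}"


# Tool description in the JSON Schema style many chat APIs use (assumed shape).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

# Registry mapping tool names to the Python callables that implement them.
REGISTRY = {"get_weather": get_weather}


def dispatch(tool_call: dict) -> str:
    """Run the function the model asked for, with the arguments it supplied."""
    name = tool_call["name"]
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    args = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    return REGISTRY[name](**args)


# Stand-in for what a model might return when asked about the weather in Paris.
model_tool_call = {"name": "get_weather", "arguments": '{"city": "Paris"}'}
print(dispatch(model_tool_call))
```

A benchmark for function calling essentially measures how often the model picks the right tool and fills in valid arguments, which is the part the hard-coded `model_tool_call` stands in for here.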

Performance/cost efficiency

Mistral Large 2 sets a new benchmark on the performance/cost Pareto front, which evaluates the balance between a model’s performance and the cost of serving it. Essentially, it offers great performance without being too expensive, making it an affordable choice for businesses and researchers. This efficiency helps users get impressive results while staying within their budget.
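A Pareto front is the set of options that no other option beats on both axes at once. As a small sketch of the idea, the code below finds the non-dominated entries among a few entirely made-up (cost, score) pairs. The model names and numbers are placeholders, not measurements of any real model.

```python
def pareto_front(models: dict[str, tuple[float, float]]) -> list[str]:
    """Return names of models not dominated on (cost, score).

    A model is dominated if another one is at least as cheap and at least
    as accurate, and strictly better on one of the two.
    """
    front = []
    for name, (cost, score) in models.items():
        dominated = any(
            c <= cost and s >= score and (c < cost or s > score)
            for other, (c, s) in models.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


# Entirely made-up (cost, score) pairs, for illustration only.
candidates = {
    "model_a": (1.0, 70.0),
    "model_b": (3.0, 84.0),
    "model_c": (5.0, 83.0),  # costlier and weaker than model_b: dominated
    "model_d": (8.0, 88.0),
}
print(pareto_front(candidates))
```

Here `model_c` drops out because `model_b` is both cheaper and stronger. Being on the front means that any model that performs better also costs more to serve.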

Accessing Mistral Models

You can access Mistral Large 2 in two main ways: La Plateforme and Cloud Service Providers.

La Plateforme

Mistral Large 2 is available on La Plateforme under the name mistral-large-2407, where you can also test it using le Chat. The model weights are hosted on Hugging Face. Overall, you can access Mistral Nemo, Mistral Large, Codestral, and Embed for different needs on La Plateforme. Fine-tuning options are also now available for Mistral Large, Mistral Nemo, and Codestral.
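As a rough sketch of what a call to the hosted model might look like, the example below builds a chat request for `mistral-large-2407` using only the Python standard library. The endpoint URL, the payload layout, the response layout, and the `MISTRAL_API_KEY` environment variable name are assumptions to confirm against Mistral's API documentation. Nothing is sent unless that variable is set.

```python
import json
import os
import urllib.request

# Assumed endpoint and payload shape; confirm against Mistral's API documentation.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-2407"  # model name given for La Plateforme


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request."""
    payload = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the first reply's text (assumed response shape)."""
    with urllib.request.urlopen(build_chat_request(prompt, api_key)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__" and os.environ.get("MISTRAL_API_KEY"):
    print(ask("Write a haiku about code review.", os.environ["MISTRAL_API_KEY"]))
```

Mistral also publishes an official Python client, which is likely the more convenient route for real projects. The raw request is shown here only to make the moving parts visible.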

Cloud service providers

Alternatively, you can also access Mistral Large 2 through major cloud providers. You can find it on Google Cloud Platform's Vertex AI, Azure AI Studio, Amazon Bedrock, and IBM watsonx.ai.

Safety and Responsibility: A Top Priority

Mistral AI is dedicated to making sure its models are used ethically and responsibly.

Strong safety measures

Mistral Large 2 has been thoroughly tested and fine-tuned to minimize risks of harmful or biased outputs. This includes a focus on reducing incorrect or misleading information generated by the model.

Responsible use

Using Mistral Large 2 responsibly goes beyond just technical safeguards. It also involves the ethical actions of its users. To ensure the model is used properly, users must follow the Mistral Research License for non-commercial research or obtain a Commercial License for business purposes. We encourage users to apply Mistral Large 2 in ways that benefit society and to avoid uses that could be harmful or spread misinformation.

Conclusion

Mistral Large 2 marks a step forward for open-source language models.

Its strong performance, wide range of language support, and emphasis on accuracy and safety make it a powerful tool for developers, researchers, and businesses.


FAQs

How does Mistral Large 2 compare to its predecessor, Mistral Large?

Mistral Large 2 offers significant improvements over its predecessor in areas like code generation, mathematics, reasoning, and multilingual support. It achieves higher scores on various benchmarks and boasts a larger context window, allowing it to handle more complex tasks and maintain coherence over longer texts.

Can Mistral Large 2 be used for commercial applications?

Yes, but it requires a Mistral Commercial License. For non-commercial research and development, it's available under the Mistral Research License.

Does Mistral Large 2 support image or audio processing?

Currently, Mistral Large 2 primarily focuses on text-based tasks. However, Mistral AI has indicated plans to expand its capabilities to handle images and audio in future updates.

How can I access and use Mistral Large 2?

You can access Mistral Large 2 through Mistral AI's platform, La Plateforme, or via managed APIs on major cloud service providers like Google Cloud Platform's Vertex AI, Azure AI Studio, Amazon Bedrock, and IBM watsonx.ai. For commercial use and self-deployment, a Mistral Commercial License is required.


Ryan is a lead data scientist specialising in building AI applications using LLMs. He is a PhD candidate in Natural Language Processing and Knowledge Graphs at Imperial College London, where he also completed his Master’s degree in Computer Science. Outside of data science, he writes a weekly Substack newsletter, The Limitless Playbook, where he shares one actionable idea from the world's top thinkers and occasionally writes about core AI concepts.