
As AI coding assistants such as GitHub Copilot gain popularity among developers, open-source alternatives are also emerging.

One notable example is DeepSeek-Coder-V2, an open-source model designed specifically for code-related tasks. Its reported performance is comparable to GPT-4 in code generation, completion, and comprehension.

In this article, I’ll explain the features and capabilities of DeepSeek-Coder-V2 and guide you on how to get started with this tool.


What Is DeepSeek-Coder-V2?

DeepSeek-Coder-V2 is an open-source Mixture-of-Experts (MoE) code language model that rivals the performance of GPT-4 on code-specific tasks. Designed to aid developers, this model brings several key features to the table:

- Multilingual: Trained on both code and natural language in multiple languages, including English and Chinese, making it versatile for global development teams.

- Versatile: Supports 338 programming languages, catering to diverse coding environments and needs.

- Large scale: Pre-trained on trillions of tokens of code and text data, which enhances its understanding and generation capabilities across various coding scenarios.

- Multiple sizes: The models are available in multiple sizes, allowing developers to choose the model size that best fits their computational resources and project requirements.

You can access the model on DeepSeek’s website, which offers paid API access and a chat interface. The source code is available on GitHub, and the research paper is hosted on arXiv.

The models are hosted on Hugging Face. However, running them locally requires research-grade hardware due to their size.

DeepSeek-Coder-V2 Benchmarks

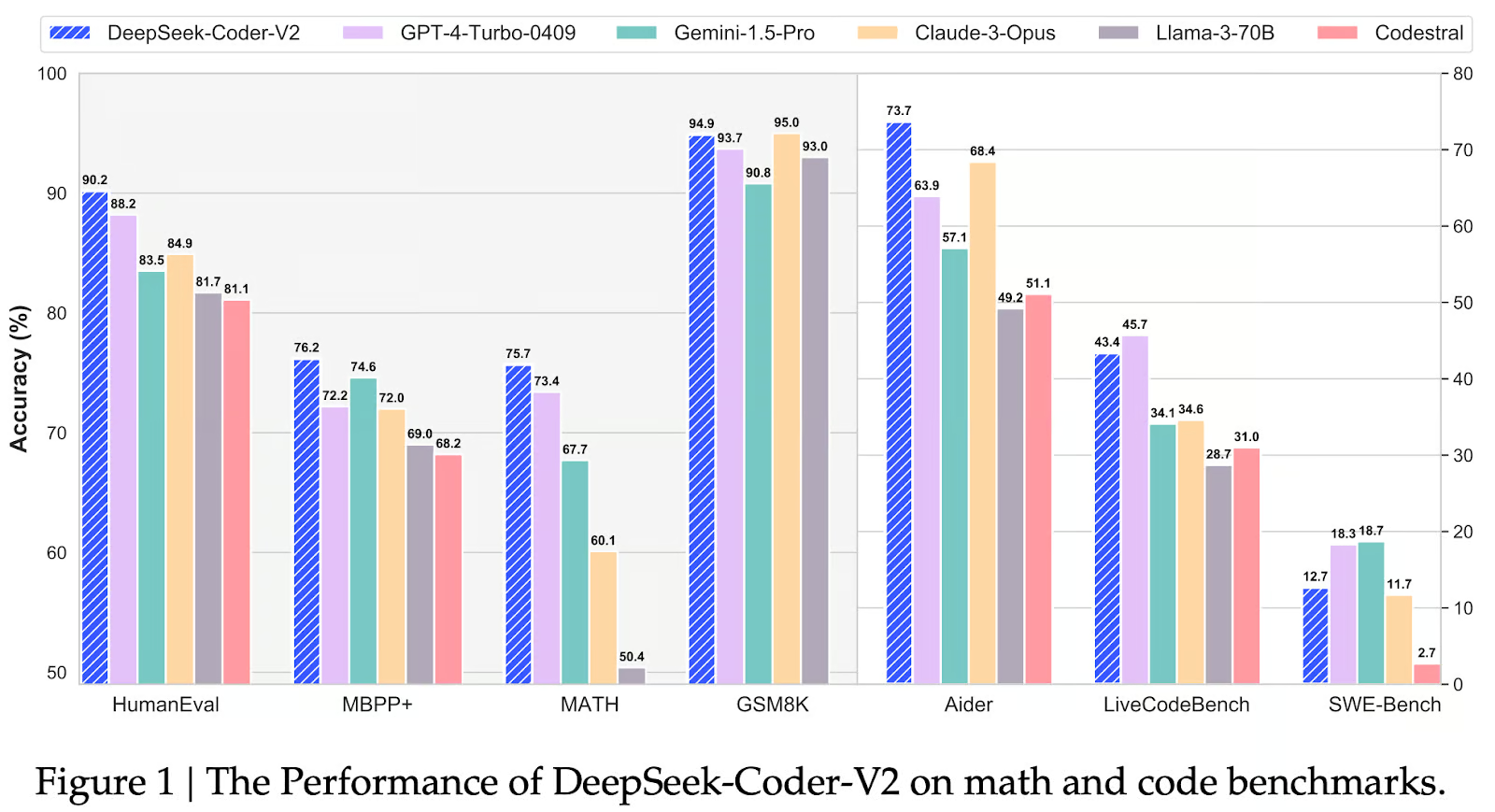

Let’s see how well DeepSeek-Coder-V2 performs across benchmarks and how it compares to models like GPT-4 Turbo, Gemini 1.5 Pro, Claude 3 Opus, LLaMA 3-70B, and Codestral.

Source: Qihao Zhu et al.

HumanEval

The HumanEval benchmark measures code generation proficiency by evaluating whether generated code passes specific unit tests. DeepSeek-Coder-V2 demonstrates exceptional performance on this benchmark, achieving an accuracy of 90.2%. This result underscores the model's ability to generate functional and accurate code snippets, even in complex scenarios.
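To make the "passes specific unit tests" idea concrete, here is a minimal sketch of how a HumanEval-style check could work. It assumes each problem ships a test script that defines `check(candidate)`; the helper names `passes_tests` and `pass_rate` and the toy `add` problem are hypothetical, and a real harness would run untrusted code in a sandbox with timeouts rather than calling `exec` directly.

```python
def passes_tests(candidate_source, test_source, entry_point):
    """Return True if the generated function passes every assertion in the tests."""
    namespace = {}
    try:
        exec(candidate_source, namespace)   # define the generated function
        exec(test_source, namespace)        # define check(candidate)
        namespace["check"](namespace[entry_point])
    except Exception:
        # Any failed assertion or runtime error counts as a failed sample.
        return False
    return True


def pass_rate(results):
    """Fraction of samples that passed their unit tests."""
    return sum(results) / len(results)


# Toy problem: one correct and one incorrect completion of `add`.
good = "def add(a, b):\n    return a + b\n"
bad = "def add(a, b):\n    return a - b\n"
tests = (
    "def check(candidate):\n"
    "    assert candidate(1, 2) == 3\n"
    "    assert candidate(0, 0) == 0\n"
)

results = [passes_tests(good, tests, "add"), passes_tests(bad, tests, "add")]
print(results, pass_rate(results))
```

A reported accuracy such as 90.2% is, roughly speaking, this pass rate computed over the benchmark's full problem set.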

MBPP+

The MBPP+ benchmark is an extended version of MBPP (Mostly Basic Python Problems) with additional test cases, assessing whether a model can generate correct solutions to short Python programming tasks. DeepSeek-Coder-V2 again performs strongly here, achieving an accuracy of 76.2%, which suggests its solutions hold up under stricter testing.

MATH

The MATH benchmark tests a model's mathematical reasoning on competition-level problems. DeepSeek-Coder-V2 maintains its lead with an accuracy of 75.7%, indicating proficiency in the multi-step mathematical reasoning that underlies many programming tasks.

GSM8K

The GSM8K benchmark focuses on solving grade-school math word problems, evaluating a model's broader problem-solving skills beyond pure code generation. DeepSeek-Coder-V2 comes just behind Claude 3 Opus with an accuracy of 94.9%, demonstrating its ability to understand and solve mathematical problems presented in natural language.

Aider

The Aider benchmark evaluates a model's ability to provide code assistance and suggestions. DeepSeek-Coder-V2 leads with an accuracy of 73.7%, suggesting its potential as a valuable tool for developers seeking real-time guidance and support during coding tasks.

LiveCodeBench

The LiveCodeBench benchmark measures code generation performance in real-world scenarios. DeepSeek-Coder-V2 achieves an accuracy of 43.4% (second after GPT-4-Turbo-0409), showcasing its ability to generate functional and usable code in practical contexts.

SWE-Bench

The SWE-Bench benchmark specifically evaluates AI models on their ability to perform software engineering tasks, such as code generation, debugging, and understanding complex programming concepts. In this context, DeepSeek-Coder-V2 achieved a score of 12.7, indicating a solid performance but not leading the pack. It falls behind GPT-4-Turbo-0409 (18.7) and Gemini-1.5-Pro (18.3), which show superior capabilities in these tasks. However, DeepSeek-Coder-V2 still outperformed models like Claude-3-Opus (11.7), Llama-3-70B (2.7), and Codestral, suggesting it is a reliable but not top-tier option for software engineering applications based on this benchmark.

How DeepSeek-Coder-V2 Works

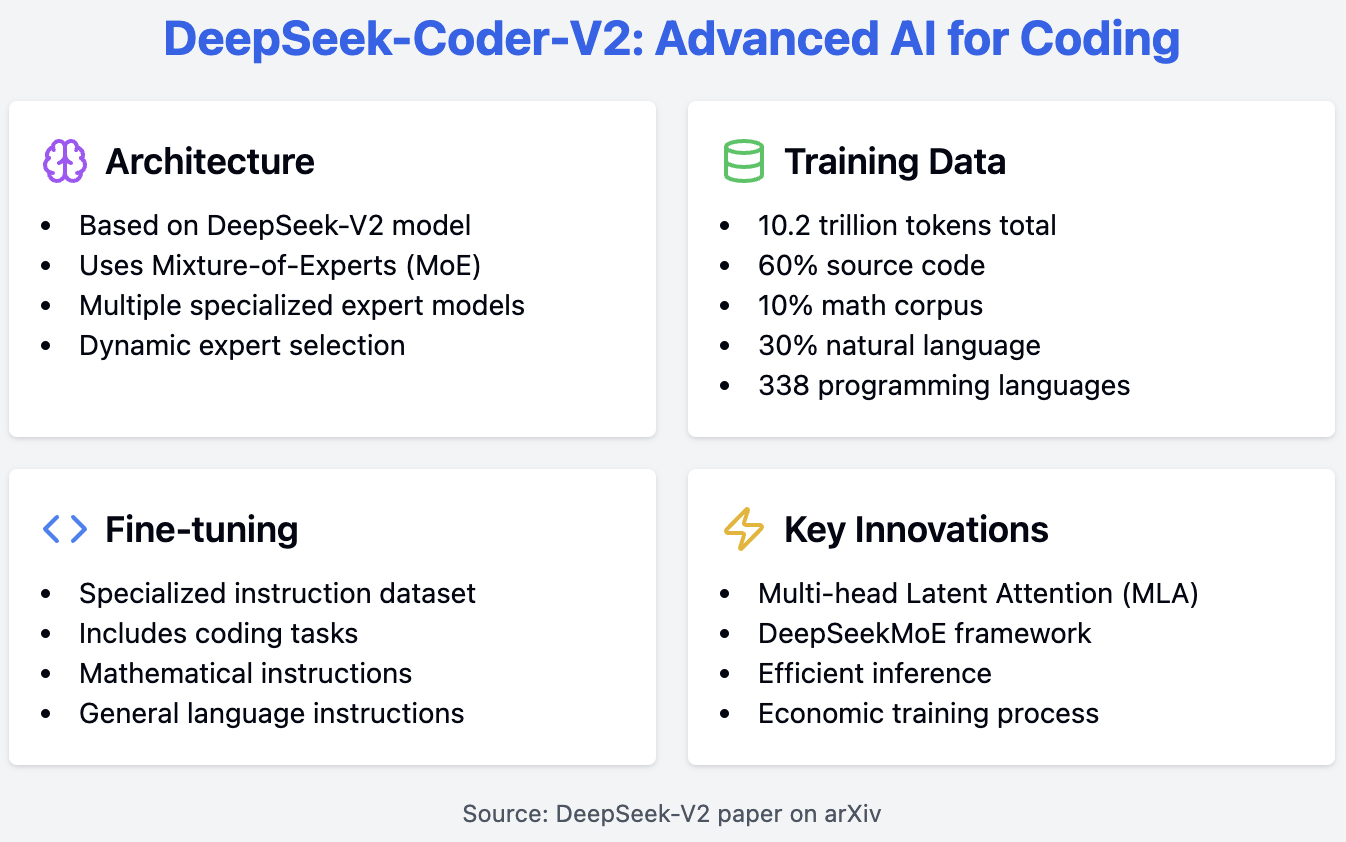

DeepSeek-Coder-V2 builds on the foundation of the DeepSeek-V2 model, using a Mixture-of-Experts (MoE) architecture to achieve high performance in code-specific tasks. In an MoE model, each MoE layer contains many expert sub-networks, and a learned router activates only a small subset of them for each input token. Because only a fraction of the parameters is used per token, the model can be very large overall while keeping inference cost relatively low.
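The routing idea can be illustrated with a small, generic top-k gating sketch. This is not DeepSeekMoE's actual scheme (which the DeepSeek-V2 paper describes in detail); the toy experts, the `router_weights` matrix, and the function names are all assumptions made up for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_layer(token, experts, router_weights, k=2):
    """Route one token vector through its top-k experts and mix their outputs."""
    # One router logit per expert: dot product of the expert's row with the token.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    routes = top_k_route(logits, k)
    out = [0.0] * len(token)
    for idx, weight in routes:
        expert_out = experts[idx](token)  # only the selected experts are evaluated
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out, routes


# Four toy "experts" that simply scale the input by a different constant.
experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, routes = moe_layer([2.0, 1.0], experts, router_weights, k=2)
print(routes)
print(output)
```

Only two of the four experts run for this token, which is the source of the efficiency gain: compute per token scales with `k`, not with the total number of experts.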

The training process of DeepSeek-Coder-V2 involves several critical components. It is further pre-trained from an intermediate DeepSeek-V2 checkpoint on an additional 6 trillion tokens, composed of 60% source code, 10% math corpus, and 30% natural language corpus, bringing the total number of training tokens to 10.2 trillion. This diverse dataset enables the model to effectively understand and generate code. The source code dataset includes 1,170 billion tokens sourced from GitHub and CommonCrawl, covering 338 programming languages, a significant expansion from previous models.

After pre-training, the model undergoes fine-tuning with a specialized instruction dataset that includes coding, mathematical, and general language instructions. This process improves the model's responsiveness to natural language prompts, turning it into a useful assistant.

For those interested in the underlying architecture, the DeepSeek-V2 model, which DeepSeek-Coder-V2 is based on, introduces innovations such as Multi-head Latent Attention (MLA) and the DeepSeekMoE framework. These innovations contribute to efficient inference and economical training, as detailed in the DeepSeek-V2 paper available on arXiv.

Getting Started With DeepSeek-Coder-V2

There are several ways to start using the DeepSeek-Coder-V2 model. You can run the model locally by accessing it through Hugging Face’s transformers library. However, be aware that the models are quite large and require significant computational resources.
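As a rough sketch of the local route, the snippet below loads a checkpoint with the transformers library and generates a reply to a single prompt. The model ID `deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct` (the smaller variant), the `bfloat16` dtype, and the helper names `build_messages` and `generate_code` are assumptions; check the model card for the recommended settings and for the hardware each size needs. The imports sit inside the function so the file can be read and imported without the heavy dependencies installed.

```python
def build_messages(prompt):
    """Wrap a user prompt in the chat-message format used by chat templates."""
    return [{"role": "user", "content": prompt}]


def generate_code(prompt,
                  model_id="deepseek-ai/DeepSeek-Coder-V2-Lite-Instruct",
                  max_new_tokens=512):
    """Download the model (several GB) and return its reply to one prompt."""
    # Imported lazily: requires `pip install torch transformers`.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        trust_remote_code=True,       # the repository ships custom model code
        torch_dtype=torch.bfloat16,   # assumption: hardware with bf16 support
    )
    model.to("cuda" if torch.cuda.is_available() else "cpu")

    # Apply the model's chat template and tokenize in one step.
    inputs = tokenizer.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        return_tensors="pt",
    ).to(model.device)

    outputs = model.generate(inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Decode only the newly generated tokens, not the prompt.
    return tokenizer.decode(outputs[0][inputs.shape[1]:], skip_special_tokens=True)


# Example call (commented out because it triggers a large download):
# print(generate_code("Write a quicksort function in Python."))
```

Argument names can differ between transformers releases, so treat this as a starting point rather than a drop-in script.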

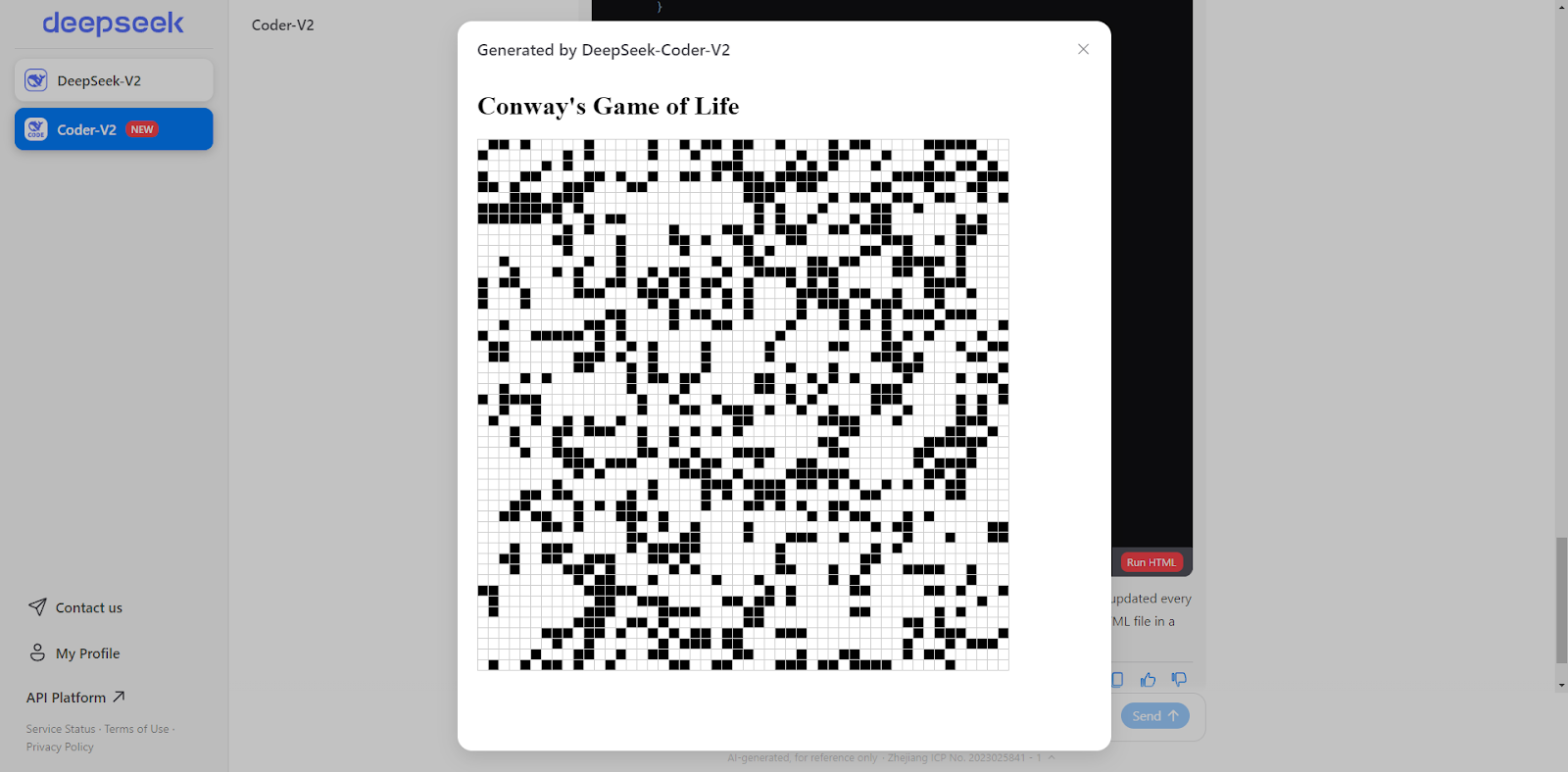

Alternatively, DeepSeek offers a paid API and an online chat interface similar to ChatGPT. DeepSeek’s online chat can also run HTML and JavaScript code directly in the chat window. This feature is quite innovative; I’ve only seen something similar in the Artifacts feature of Claude 3.5 Sonnet. For this article, we will use the chat feature for our examples.
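Although the examples in this article use the chat interface, a call to the paid API might look roughly like the sketch below. It assumes an OpenAI-style chat-completions endpoint at `https://api.deepseek.com/chat/completions`, a model named `deepseek-coder`, and an API key stored in a `DEEPSEEK_API_KEY` environment variable. All three are assumptions that should be checked against DeepSeek's current API documentation, and the helper names are hypothetical.

```python
import json
import os
import urllib.request

# Assumption: endpoint URL and model name; verify both in the official API docs.
API_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(prompt, api_key, model="deepseek-coder"):
    """Build (but do not send) an HTTP POST request for a single-turn chat."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt, api_key):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, api_key)) as response:
        body = json.load(response)
    # Assumes an OpenAI-compatible response shape.
    return body["choices"][0]["message"]["content"]


# Example call (commented out because it needs a valid key and network access):
# print(ask("Can you write Conway's game of life in HTML?", os.environ["DEEPSEEK_API_KEY"]))
```

Separating request construction from sending keeps the payload easy to inspect before any paid call is made.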

DeepSeek-Coder-V2: Example Usage

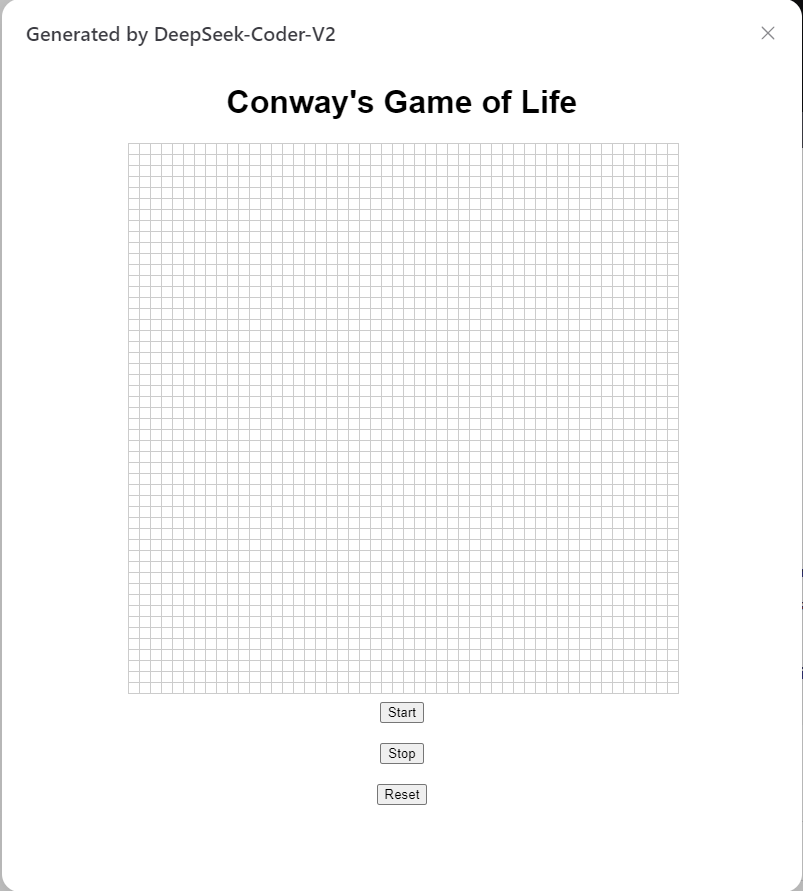

For the first example, I asked the model to program Conway’s Game of Life in HTML and JavaScript, using the following prompt: “Can you write Conway’s game of life in HTML?” Here is the response:

Certainly! Below is a simple implementation of Conway's Game of Life using HTML, CSS, and JavaScript. This version will display the grid in an HTML table and update it every second.

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Conway's Game of Life</title>
    <style>
        table {
            border-collapse: collapse;
        }
        td {
            width: 10px;
            height: 10px;
            border: 1px solid #ccc;
        }
        .alive {
            background-color: black;
        }
        .dead {
            background-color: white;
        }
    </style>
</head>
<body>
    <h1>Conway's Game of Life</h1>
    <table id="grid"></table>
    <script>
        const N = 50; // Grid size
        let grid = createGrid(N);
        let intervalId;

        function createGrid(N) {
            let grid = new Array(N);
            for (let i = 0; i < N; i++) {
                grid[i] = new Array(N);
                for (let j = 0; j < N; j++) {
                    grid[i][j] = Math.random() > 0.7 ? 1 : 0;
                }
            }
            return grid;
        }

        function renderGrid(grid) {
            const table = document.getElementById('grid');
            table.innerHTML = '';
            for (let i = 0; i < N; i++) {
                const row = table.insertRow();
                for (let j = 0; j < N; j++) {
                    const cell = row.insertCell();
                    cell.classList.add(grid[i][j] ? 'alive' : 'dead');
                }
            }
        }

        function updateGrid(grid) {
            const newGrid = grid.map(row => row.slice());
            for (let i = 0; i < N; i++) {
                for (let j = 0; j < N; j++) {
                    const aliveNeighbors = countAliveNeighbors(grid, i, j);
                    if (grid[i][j] === 1) {
                        newGrid[i][j] = aliveNeighbors === 2 || aliveNeighbors === 3 ? 1 : 0;
                    } else {
                        newGrid[i][j] = aliveNeighbors === 3 ? 1 : 0;
                    }
                }
            }
            return newGrid;
        }

        function countAliveNeighbors(grid, x, y) {
            let count = 0;
            for (let i = -1; i <= 1; i++) {
                for (let j = -1; j <= 1; j++) {
                    if (i === 0 && j === 0) continue;
                    const newX = (x + i + N) % N;
                    const newY = (y + j + N) % N;
                    count += grid[newX][newY];
                }
            }
            return count;
        }

        function startGame() {
            intervalId = setInterval(() => {
                grid = updateGrid(grid);
                renderGrid(grid);
            }, 1000);
        }

        renderGrid(grid);
        startGame();
    </script>
</body>
</html>
```

We can also click the “Run HTML” button at the end of the response to get the game of life directly in our chat window.

Screenshot by the author.

Next, I asked the model to craft a dynamic website of the game where I could choose the starting board configuration. This is what the result looks like, and it works flawlessly.

Screenshot by the author.

For our last example, I tested the model on a hard Project Euler problem, the “jumping frog” problem. Here’s the code generated by the model:

```python
import numpy as np

MOD = 10**9

def matrix_power(matrix, n):
    result = np.identity(len(matrix), dtype=int)
    while n > 0:
        if n % 2 == 1:
            result = np.dot(result, matrix) % MOD
        matrix = np.dot(matrix, matrix) % MOD
        n //= 2
    return result

def fib_matrix(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    A = np.array([[1, 1, 1], [1, 0, 0], [0, 1, 0]], dtype=int)
    A_power = matrix_power(A, n - 3)
    initial_vector = np.array([2, 1, 1])
    return np.dot(A_power, initial_vector)[0] % MOD

def S(L):
    result = 0
    for n in range(1, L + 1):
        f_n = fib_matrix(n)
        result = (result + f_n**3) % MOD
    return result

# Compute S(10**14) % 10**9
print(S(10**14))
```

This script would take ages to run, so I asked the model to optimize it and got the following output:

```python
import numpy as np

MOD = 10**9

def matrix_power(matrix, n):
    result = np.identity(len(matrix), dtype=int)
    while n > 0:
        if n % 2 == 1:
            result = np.dot(result, matrix) % MOD
        matrix = np.dot(matrix, matrix) % MOD
        n //= 2
    return result

def fib_matrix(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    A = np.array([[1, 1, 1], [1, 0, 0], [0, 1, 0]], dtype=int)
    A_power = matrix_power(A, n - 3)
    initial_vector = np.array([2, 1, 1])
    return np.dot(A_power, initial_vector)[0] % MOD

def find_pisano_period(m):
    previous, current = 0, 1
    for i in range(m * m):
        previous, current = current, (previous + current) % m
        if previous == 0 and current == 1:
            return i + 1

def S(L):
    pisano_period = find_pisano_period(MOD)
    period_sum = 0
    for n in range(1, pisano_period + 1):
        f_n = fib_matrix(n)
        period_sum = (period_sum + f_n**3) % MOD
    full_periods, remainder = divmod(L, pisano_period)
    result = (full_periods * period_sum) % MOD
    for n in range(1, remainder + 1):
        f_n = fib_matrix(n)
        result = (result + f_n**3) % MOD
    return result

# Compute S(10**14) % 10**9
print(S(10**14))
```

I let the optimized code run for half an hour, but it still didn’t produce a result. While I think the solutions are correct, the challenge lies in optimizing the code. Given that only 328 people have managed to solve this problem, it’s not surprising that the model couldn’t solve it.
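One plausible reason the optimized version still stalls is the size of the period itself. The sketch below computes Pisano periods (the period of the Fibonacci sequence modulo m) for small powers of ten; the function name `pisano_period` and the 6·m search bound are my own choices, not part of the model's output. For moduli of the form 10^k with k ≥ 3, the period is known to be 15·10^(k−1), so for 10^9 the model's `find_pisano_period` loop, and the summation loop that follows it, would each need about 1.5 billion iterations in pure Python.

```python
def pisano_period(m):
    """Period of the Fibonacci sequence modulo m (for m >= 2)."""
    previous, current = 0, 1
    # The Pisano period never exceeds 6 * m, so this search always terminates.
    for i in range(1, 6 * m + 1):
        previous, current = current, (previous + current) % m
        if previous == 0 and current == 1:
            return i
    raise ValueError("no period found; m must be at least 2")


# Periods for small powers of ten.
for k in range(1, 5):
    print(f"pisano_period(10**{k}) = {pisano_period(10**k)}")

# Closed form for 10**k with k >= 3, applied to the modulus used in the article.
estimated_period = 15 * 10 ** (9 - 1)
print(f"Estimated period for 10**9: {estimated_period:,}")
```

Each of those iterations in `S` also performs a NumPy matrix exponentiation, which helps explain why half an hour was not enough.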

Conclusion

DeepSeek-Coder-V2 is a notable addition to the growing field of open-source coding assistants, demonstrating competitive performance with established proprietary models.

To get the most out of DeepSeek-Coder-V2, follow best practices by providing clear, specific prompts. I also encourage you to provide feedback to the developers if you encounter any bugs.

If you want to learn more about AI coding assistants, I recommend listening to this DataFramed podcast: The Future of Programming with Kyle Daigle, COO at GitHub.