
Image by Author

The world of data science has completely changed since the emergence of large language models (LLMs), with tools like OpenAI’s GPT-3 becoming pivotal in driving innovation in the field and unlocking new skills previously thought unachievable.

While OpenAI’s GPT models dominated the market at first, things have changed over time, with the launch of many new models, each with its own set of capabilities and specialized applications.

Among these, Mistral’s models stand out for their advanced reasoning, multilingual support, and code generation skills, marking a significant leap forward in AI.

This tutorial introduces you to Mistral’s latest model, Mistral Large. It covers the model’s functionalities, a comparison with other LLMs, API access for on-demand usage, and practical applications.

Whether you're a seasoned data scientist, a developer looking to expand your toolkit, or an AI enthusiast eager to explore the latest in technology, this tutorial is crafted with you in mind.

What is Mistral AI?

Mistral AI is a French company founded in April 2023 by former employees of Meta Platforms and Google DeepMind. Its main aim is to sell AI products and services while providing society with robust open-source LLMs.

The company revolutionized the market with its Mistral 7B model, launched in September 2023. This 7.3-billion-parameter model beat the most advanced open-source models of the time, including Llama 2, which gave Mistral AI a leading role in the open-source AI domain.

In recent months, Mistral AI has become a pioneering force in the artificial intelligence industry, offering powerful tools designed to solve complex problems and streamline operations.

And this is precisely what leads us to its latest launch, the Mistral Large model.

What is Mistral Large?

Mistral Large is the latest and most powerful large language model from Mistral, launched in February 2024. It positions itself as a flagship model for text generation that rivals even the renowned capabilities of GPT-4.

It is designed to combine strong reasoning and knowledge skills with a solid command of coding (across multiple programming languages) and multilingual understanding. These are precisely the areas where it excels the most:

Reasoning and knowledge

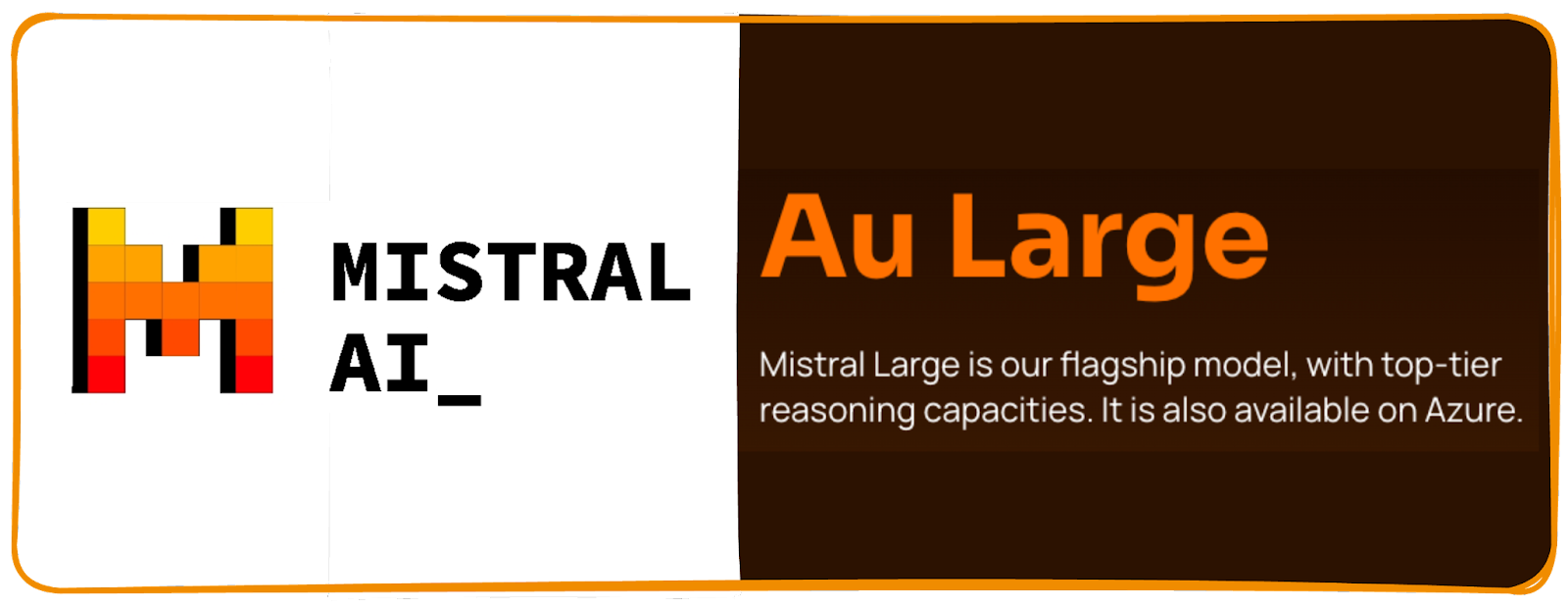

Mistral Large exhibits strong reasoning skills. To evaluate such skills, the performance of LLMs like Mistral Large or GPT-4 is compared using different benchmarks that test their understanding, reasoning, knowledge, and ability to handle different types of data.

Some of the most common are:

- MMLU (Measuring Massive Multitask Language Understanding): Tests the model's understanding across a wide range of subjects, from professional domains to common sense reasoning.

- HellaSwag: Assesses the model's understanding of commonsense scenarios and its ability to predict the most plausible continuation of a given context.

- Arc Challenge: Measures the model's ability to answer complex, grade-school-level science questions that require logical reasoning and understanding of scientific concepts.

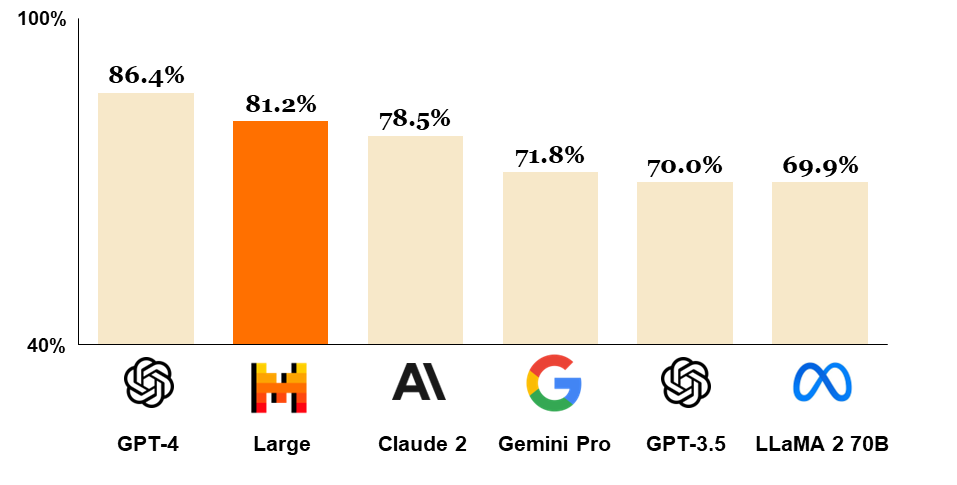

The performance of these pre-trained models on the previous three benchmarks and some more is illustrated in the figure below:

Image by Mistral AI. Performance on widespread common sense, reasoning, and knowledge benchmarks of the top-leading LLM models on the market: MMLU (Measuring Massive Multitask Language Understanding), HellaSwag (10-shot), WinoGrande (5-shot), Arc Challenge (5-shot), Arc Challenge (25-shot), TriviaQA (5-shot) and TruthfulQA.

As you can see, Mistral Large is the best-performing model in ArcC and TruthfulQA while scoring close to GPT-4 in almost all benchmarks.

Multi-lingual capacities

Unlike many models proficient in a single language, Mistral Large boasts native fluency in English, French, Spanish, German, and Italian.

This multilingual capability extends beyond mere translation: it encompasses a deep understanding of grammar and cultural context, making it an ideal choice for global applications that require a native approach to those languages.

Maths and coding

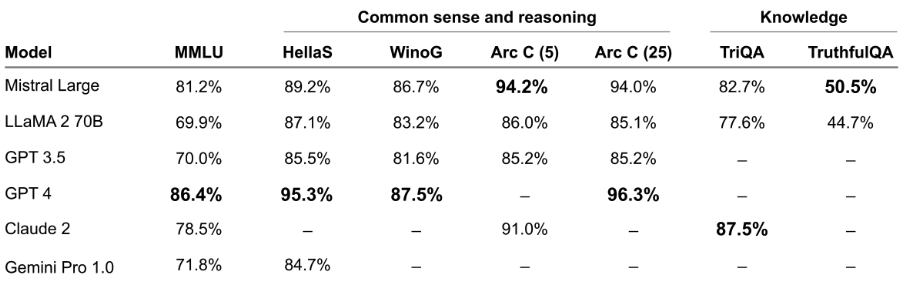

Mistral Large also excels in programming and mathematical tasks, demonstrating superior capabilities. Again, there are some standard benchmarks to compare different LLMs in math and coding expertise. Some of the most common ones are:

- HumanEval: Measures the model's ability to generate correct code solutions for a variety of programming problems.

- MBPP: Tests the model's proficiency in solving Python programming problems.

- GSM8K: Assesses the model's problem-solving skills in grade-school-level mathematics.

The following table provides an overview of its performance across various well-known benchmarks, comparing the coding and mathematical abilities of leading LLMs.

Image by Mistral AI. Performance on popular coding and math benchmarks of the leading LLM models on the market: HumanEval pass@1, MBPP pass@1, Math maj@4, GSM8K maj@8 (8-shot) and GSM8K maj@1 (5-shot).

Just like before, Mistral Large excels in most benchmarks (MBPP, Math maj@4 and GSM8K) while keeping high scores in all of them.

Additionally, Mistral Large comes with some new capabilities and strengths:

- Its 32K token context window enables accurate retrieval of information from extensive documents. To put it simply, Mistral Large can now consider up to 32,000 tokens (pieces of words) of input text at a time when generating responses or analyzing text, compared to the 8K token context window Mistral 7B had.

- Its accuracy in following instructions allows developers to tailor their moderation policies. Mistral AI itself used this capability to set up the system-level moderation of le Chat.

- It natively supports function calling. This means that, when you describe a set of functions to the model, it can recognize that a request requires one of them and respond with the function name and arguments in a structured format. Your application then runs the function and can pass the result back to the model, which uses it to generate its final response.
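To make the function-calling flow more concrete, here is a minimal, library-free sketch of the application side. It assumes the model has replied with a function name and JSON-encoded arguments. The `example_call` dictionary, the `get_order_status` function, and the `TOOLS` registry are hypothetical names invented for illustration; they are not part of Mistral's API, and the real response format may differ.

```python
import json

# Hypothetical local function that the model is allowed to request.
def get_order_status(order_id: str) -> dict:
    # A real application would query a database or service here.
    fake_orders = {"A17": "shipped", "B42": "processing"}
    return {"order_id": order_id, "status": fake_orders.get(order_id, "unknown")}

# Illustrative registry mapping function names to Python callables.
TOOLS = {"get_order_status": get_order_status}

def dispatch_tool_call(tool_call: dict) -> str:
    """Run the function named in a tool call and return its result as JSON."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown function requested: {name}")
    arguments = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    result = TOOLS[name](**arguments)
    return json.dumps(result)

# Assumed shape of a model-produced call (an assumption, not the official schema).
example_call = {"name": "get_order_status", "arguments": '{"order_id": "A17"}'}
tool_result = dispatch_tool_call(example_call)
print(tool_result)
```

The JSON string returned by `dispatch_tool_call` is what you would send back to the model so that it can phrase the final answer.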

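Mistral AI's open-weight models, Mistral 7B and Mixtral 8x7B, are released under the Apache 2.0 license, which allows them to be used without restrictions. Mistral Large, in contrast, is a proprietary model that is accessed through Mistral's platform rather than downloaded. You can learn more about model architecture and performance by reading Mistral’s official website and documentation.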

Mistral Large vs. Other LLMs

When compared with other models in the domain of LLMs, such as ChatGPT and Claude, Mistral Large distinguishes itself primarily through its enhanced affordability.

Standing out in the competitive landscape of AI, Mistral Large has demonstrated impressive results across widely recognized benchmarks, securing its position as the world's second-ranked model available through an API.

This achievement underscores its effectiveness in understanding and generating text, benchmarked against other giants in the field like GPT-4, Claude 2, and LLaMA 2 70B in comprehensive assessments such as the MMLU (Measuring massive multitask language understanding).

Image by Mistral AI. Comparison of GPT-4, Mistral Large (pre-trained), Claude 2, Gemini Pro 1.0, GPT 3.5 and LLaMA 2 70B on MMLU (Measuring massive multitask language understanding).

While ChatGPT revolutionized interactive AI conversations and Claude 2 introduced an improved understanding and generative abilities, Mistral Large pushes the envelope further by integrating deep reasoning within a cost-effective framework, making advanced AI accessible to a broader audience.

Now, it is time to learn how to tame this beast!

Getting Started with Mistral Large

Accessing Mistral AI begins with a straightforward setup process designed to get you up and running in no time.

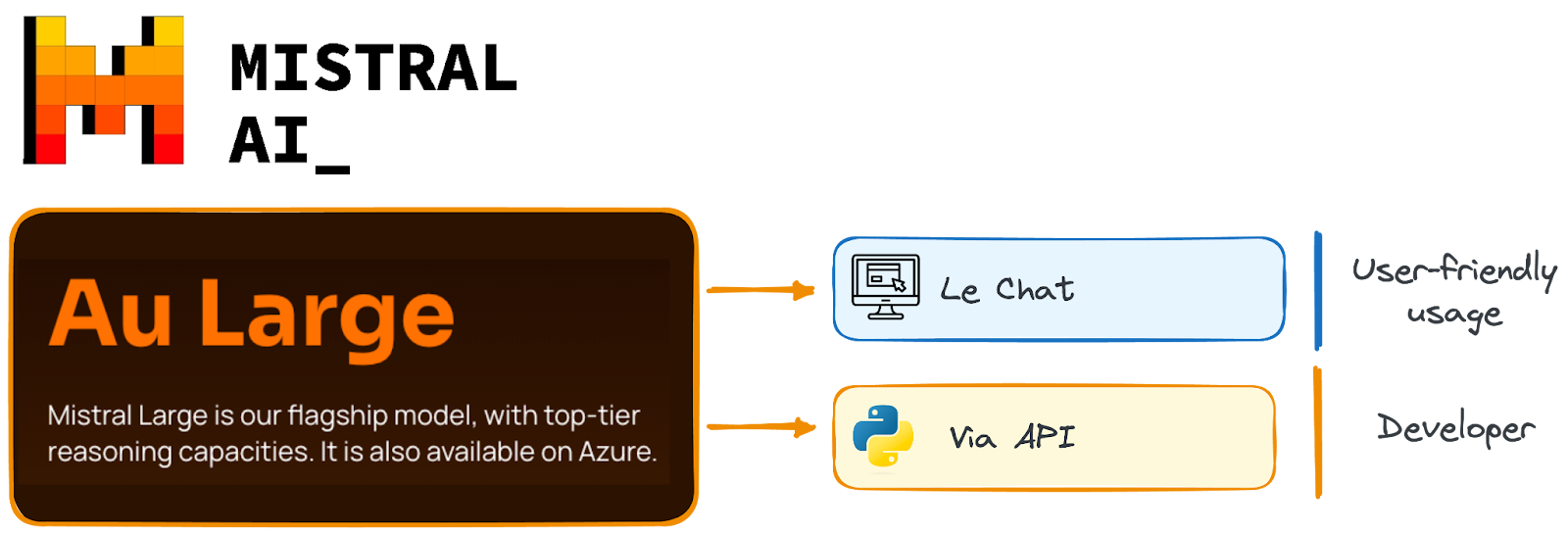

There are two main ways to access the model:

- Using Mistral AI’s ChatGPT-inspired interface, called “Le Chat.”

- Integrating it into your code using Mistral’s API endpoint.

Image by Author.

If you want to start with a simpler model, I strongly recommend following this guide to using and fine-tuning Mistral 7B.

Mistral ChatBot - Le Chat

This interface allows you to have a text-based conversation with Mistral, similar to the chat interfaces that companies like OpenAI or Google already offer. You can ask questions or give it instructions, and Mistral will try its best to respond in an informative way.

Screenshot of Mistral Le Chat interface.

To access this interface, you only need to visit the Mistral Le Chat website and register. It is free to use with no limits or restrictions.

Mistral API

Developers can interact with Mistral through its API, which is similar to the experience with OpenAI’s API system.

This API allows you to send text prompts and receive the model's generated responses. In Python, the mistralai library simplifies interaction with the Mistral API.

So, let’s perform the most basic steps to interact with Mistral Large.

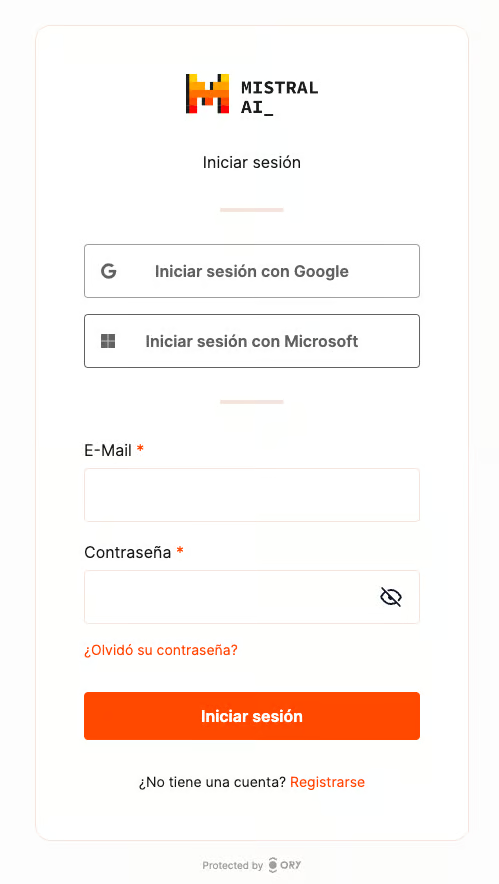

STEP 1 - Creating our own Mistral account

The first step is going to the Mistral website and clicking the “Build Now” button.

Screenshot of MistralAI main website.

You will be required to create a new account or sign in if you already have one.

Screenshot of MistralAI signing up interface.

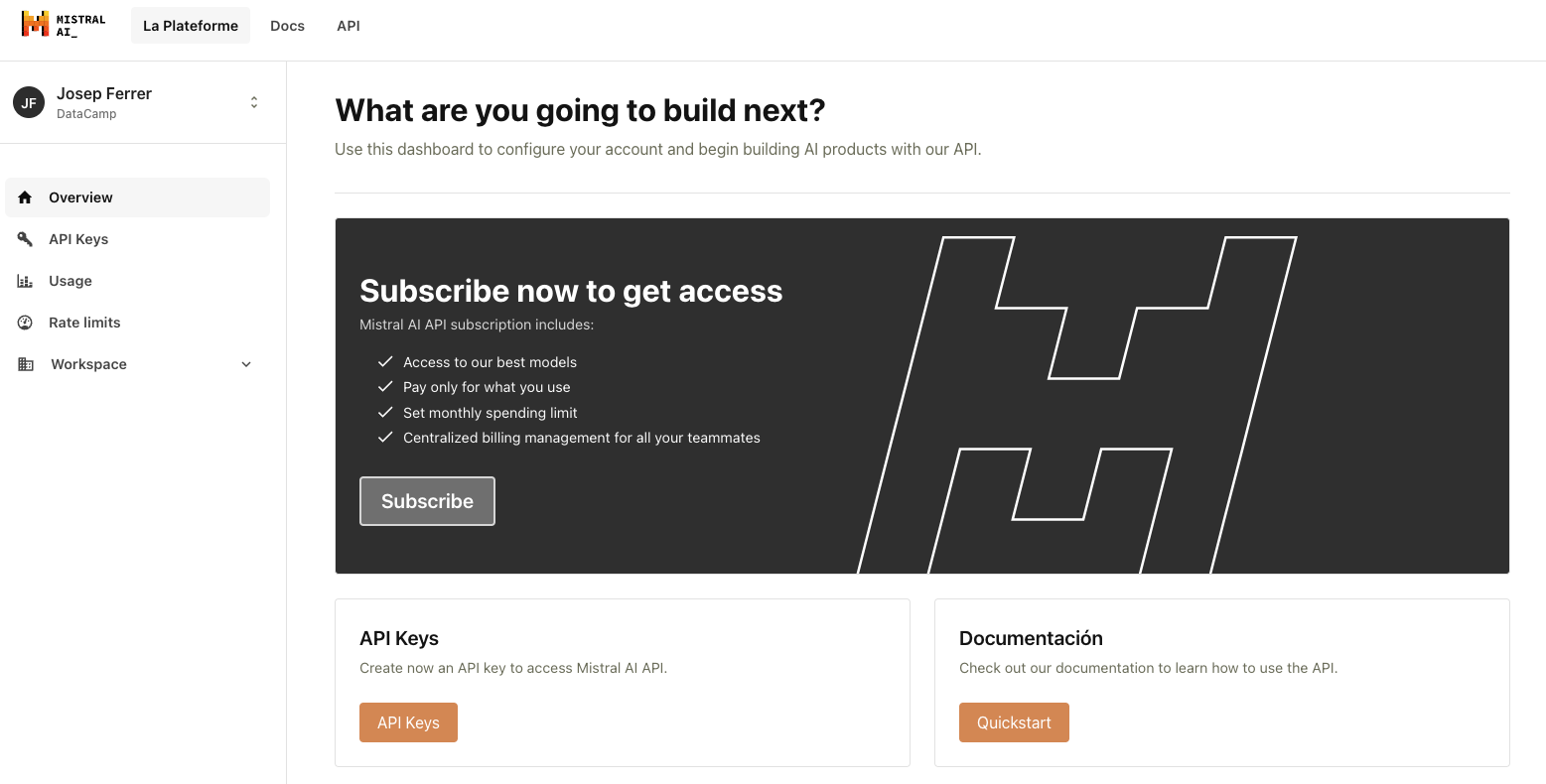

Once you have signed in, you will be required to subscribe to get access to the models via API.

Screenshot of MistralAI account.

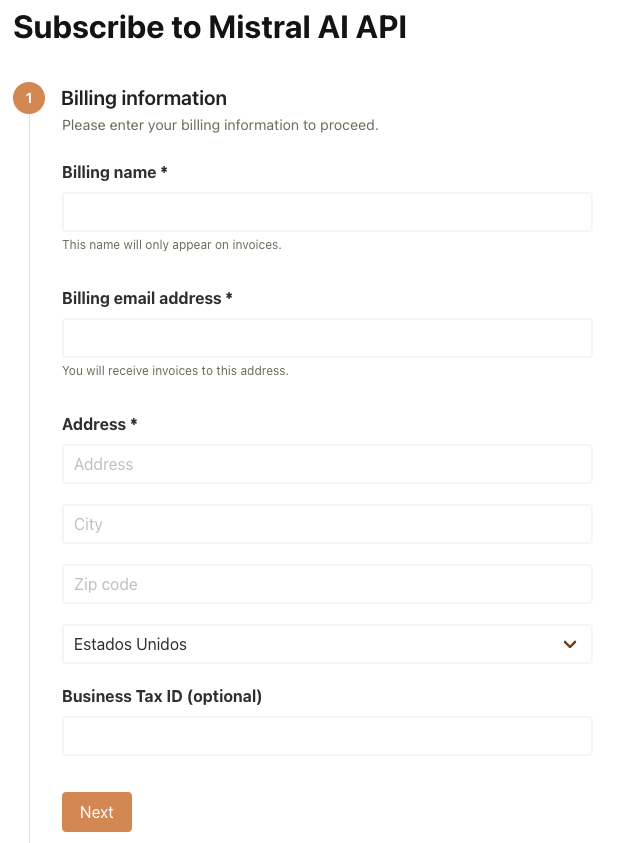

Then, you’ll need to fill out your personal and billing information.

Screenshot of MistralAI signing up process.

And just like that, we have created an account that is ready to be used!

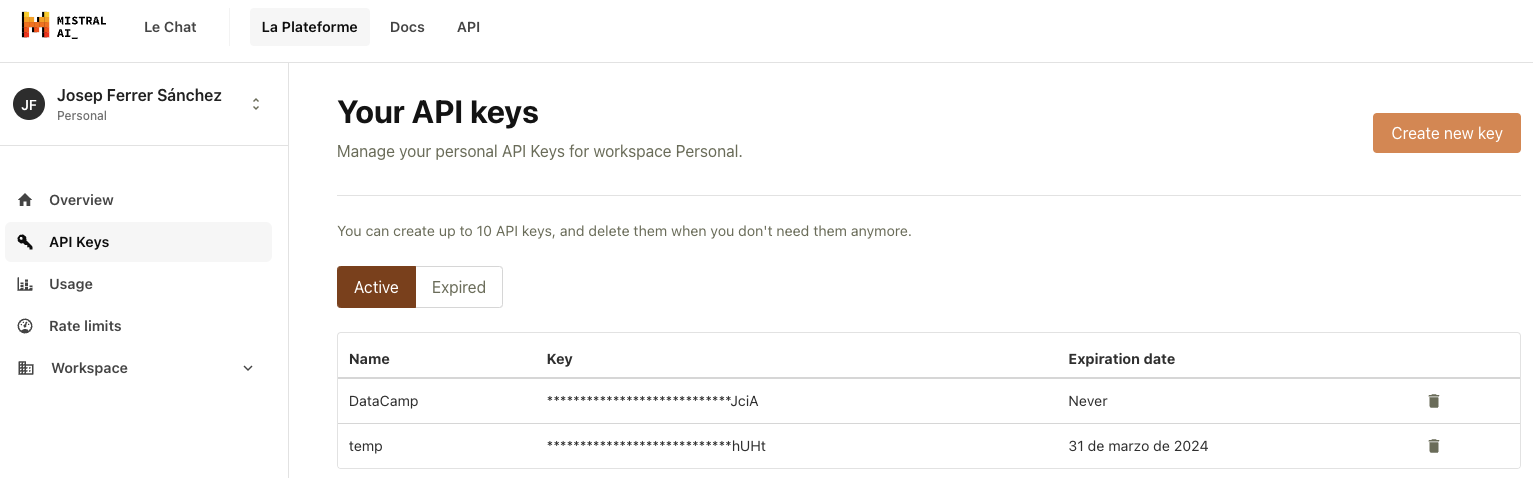

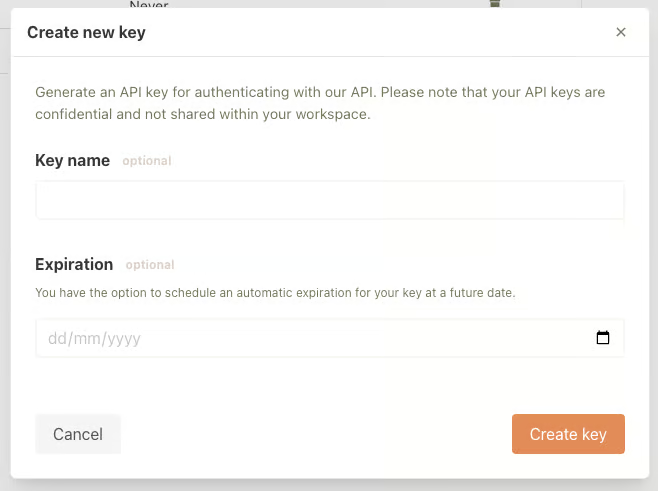

STEP 2 - Creating our API KEY

Once your account is working, you’ll need to generate an API Key to call the Mistral Large model using your Python code. To do so, you need to go to the API Keys section.

Screenshot of MistralAI signing up process.

Once there, you just need to press the “Create new key” button and give the key a name and, optionally, an expiry date.

Screenshot of MistralAI signing up process.

STEP 3 - Setting up your local environment

To call the Mistral model from your Python code, you will be using the mistralai library, which you can easily install using the following pip command:

pip install mistralai

As you will be using an API key, I recommend you take advantage of the dotenv library to store your key. You can easily install it using the following pip command:

pip install python-dotenv

Then, you can define your Mistral API key in a .env file and load it with the following code:

import os
from dotenv import load_dotenv

# Load environment variables from the .env file
load_dotenv()

# Access the API key using os.getenv
api_key = os.getenv("MISTRAL_API_KEY")

Practical Applications of Mistral Large

Mistral Large can be used in various fields, including content creation, customer support automation, programming assistance, data analysis, language translation, and educational tools.

These applications demonstrate Mistral LLMs' versatility in enhancing productivity, creativity, and decision-making across different industries. To start playing with it, you first need to initialize the model.

To do so, you will import the MistralClient class from mistralai.client and the ChatMessage class used to build chat messages. Then, to smooth the process of interacting with the API, you define a chat_mistral function that takes your prompt as input and returns the model's response. Note that these imports correspond to the 0.x releases of the mistralai library; version 1.0 and later expose a different client interface.

from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

model = "mistral-large-latest"
client = MistralClient(api_key=api_key)

def chat_mistral(prompt: str) -> str:
    # Wrap the prompt in a single user message
    messages = [
        ChatMessage(role="user", content=prompt)
    ]

    # No streaming
    chat_response = client.chat(
        model=model,
        messages=messages,
    )

    # Return only the text of the first completion
    return chat_response.choices[0].message.content

Let’s see some examples that Mistral can deal with:

Text Generation and Processing

Mistral Large excels in tasks involving text generation, comprehension, and transformation. From crafting compelling narratives and articles to summarizing complex documents and transforming text styles, its main applications in text processing are the following ones:

Text generation

One of the main applications of Mistral Large, and any LLM, is generating text out of your initial prompt. At the core of this ability is the model’s extensive training on diverse datasets comprising vast amounts of text from various domains.

This extensive training allows the model to grasp different writing styles, topics, and language structures, making it adept at crafting text that matches specific tones or content requirements.

# Starting prompt for a short story
prompt = """Write a short story starting with "Once upon a time" """

print(chat_mistral(prompt))

_________________________________________ #OUTPUT

Once upon a time, in a bustling city filled with towering skyscrapers and endless traffic, there was a small, quaint bookstore named "Whispers of the Past". This wasn't an ordinary bookstore. It was a magical place where every book held a secret story, waiting to be discovered.

The owner of the store, Mr. Benjamin, was an old man with a heart full of kindness and a mind full of tales. He had a peculiar habit of recommending books to his customers, not based on their genre preference, but on their current life situations. His recommendations were always spot on, and people often found solace, guidance, or inspiration from the books he suggested.

One day, a young girl named Lily walked into the store. She was new in town and had a lost look in her eyes. Seeing this, Mr. Benjamin approached her and asked, "What can I help you find today, my dear?"

Lily…

You can learn more about text generation in the article, What is Text Generation?

Sentiment analysis

Mistral Large's sentiment analysis capability is rooted in its deep learning framework, which allows it to comprehend the contexts of language, effectively distinguishing between positive, negative, and neutral sentiments.

By analyzing text input, it can infer the emotional tone behind words, leveraging its extensive training on diverse data sets to ensure accurate sentiment detection across various types of content.

A good use for Mistral would be asking it to distinguish the sentiment of a given text.

# Sample text
text = "This movie was absolutely fantastic! I highly recommend it."

prompt = f"""Analyze the sentiment of the following text: {text}
Generate a JSON-like response with the corresponding sentiment (positive, neutral or negative).
"""

print(chat_mistral(prompt))

_________________________________________ #OUTPUT

{

"Sentiment": "Positive",

"Text": "This movie was absolutely fantastic! I highly recommend it."

}
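Since the model returns its answer as free text, it is usually worth validating the reply before using it downstream. The following is a minimal sketch, assuming the reply contains a single JSON object shaped like the output above. The `parse_sentiment` helper and the `sample_reply` string are hypothetical and only serve to illustrate the idea.

```python
import json
import re

VALID_SENTIMENTS = {"positive", "neutral", "negative"}

def parse_sentiment(response_text: str) -> str:
    """Extract and normalize the sentiment label from a JSON-like model reply."""
    # Grab the span from the first "{" to the last "}" in case the model adds prose.
    match = re.search(r"\{.*\}", response_text, flags=re.DOTALL)
    if match is None:
        raise ValueError("No JSON object found in the response")
    data = json.loads(match.group(0))
    # Look up the key case-insensitively ("Sentiment" vs "sentiment").
    lowered = {key.lower(): value for key, value in data.items()}
    sentiment = str(lowered.get("sentiment", "")).strip().lower()
    if sentiment not in VALID_SENTIMENTS:
        raise ValueError(f"Unexpected sentiment value: {sentiment!r}")
    return sentiment

# Hand-written stand-in for a model reply, so the sketch runs offline.
sample_reply = '''Here is the analysis:
{
  "Sentiment": "Positive",
  "Text": "This movie was absolutely fantastic! I highly recommend it."
}'''
print(parse_sentiment(sample_reply))
```

In practice you would pass the string returned by chat_mistral to `parse_sentiment` and handle the `ValueError` case, for example by retrying the request.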

Text summarization

Mistral Large excels in text summarization by leveraging its advanced natural language processing capabilities to transform long documents into concise, informative summaries. It understands the main themes and key details of a text, identifying and extracting the most critical information to produce a coherent and succinct summary.

This ability is particularly useful for quickly grasping the essence of large volumes of text, making Mistral Large an essential tool for professionals who need to digest and act on information efficiently.

A simple example is asking the model to summarize some text:

# Sample text
text = """The quick brown fox jumps over the lazy dog. The rain in Spain falls mainly on the plain.
This is a longer sentence to show summarization capabilities."""

prompt = f"""Summarize the following {text} in 4 words.
"""

print(chat_mistral(prompt))

_________________________________________ #OUTPUT

Fox jumps, rain falls.
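For documents that do not fit in the context window, a common workaround is to summarize the text in chunks and then summarize the partial summaries. The sketch below illustrates that idea under simplifying assumptions: it splits on words rather than real tokens (the two are not the same), and `split_into_chunks`, `summarize_long_text`, and `fake_summarize` are hypothetical helpers. In real use you would pass a function that calls the model instead of `fake_summarize`.

```python
from typing import Callable, List

def split_into_chunks(text: str, max_words: int = 3000) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def summarize_long_text(text: str, summarize: Callable[[str], str],
                        max_words: int = 3000) -> str:
    """Summarize each chunk, then summarize the concatenated partial summaries."""
    chunks = split_into_chunks(text, max_words)
    partial = [summarize(chunk) for chunk in chunks]
    if len(partial) == 1:
        return partial[0]
    return summarize(" ".join(partial))

# Stand-in summarizer so the sketch runs offline: keep the first three words.
def fake_summarize(chunk: str) -> str:
    return " ".join(chunk.split()[:3])

document = "one two three four five six seven eight nine ten"
chunks = split_into_chunks(document, max_words=4)
final_summary = summarize_long_text(document, fake_summarize, max_words=4)
print(chunks)
print(final_summary)
```

The word limit is a rough proxy; a tokenizer-based count would give a tighter bound on what actually fits in the window.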

Text translation and multilingual tasks

Mistral Large also demonstrates superior capabilities in handling multilingual tasks. It has been specifically trained to understand and generate text in multiple languages, especially French, German, Spanish, and Italian, in addition to English.

Mistral Large can be especially valuable for businesses and users that need to communicate in multiple languages.

A simple example would be using Mistral Large as a translator.

# Sample text
text = """The quick brown fox jumps over the lazy dog. The rain in Spain falls mainly on the plain.
This is a longer sentence to show summarization capabilities."""

language_in = "english"
language_out = "spanish"

prompt = f"""Translate the following {text} that is written in {language_in} to {language_out} and
just output the translated text.
"""

print(chat_mistral(prompt))

_________________________________________ #OUTPUT

The quick brown fox jumps over the lazy dog. The rain in Spain falls mainly on the plain.

Translation to Spanish:

El rápido zorro marrón salta sobre el perro perezoso. La lluvia en España cae principalmente en la llanura.

This is a longer sentence to show summarization capabilities.

Translation to Spanish:

Esta es una oración más larga para mostrar las capacidades de resumen.
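Note that the output above repeats the original sentences even though the prompt asked for the translation only. One thing that may help is separating the instructions from the text with explicit delimiters. The sketch below shows one way to build such a prompt; the `build_translation_prompt` helper and the `<text>` tag convention are assumptions for illustration, and there is no guarantee the model will follow them every time.

```python
def build_translation_prompt(text: str, language_in: str, language_out: str) -> str:
    """Build a translation prompt that keeps instructions and source text apart."""
    return (
        f"Translate the text between the <text> tags from {language_in} to {language_out}.\n"
        "Return only the translation, without the original text or any commentary.\n"
        f"<text>\n{text.strip()}\n</text>"
    )

prompt = build_translation_prompt(
    "The quick brown fox jumps over the lazy dog.", "english", "spanish"
)
print(prompt)
```

The resulting string can be passed to chat_mistral in the same way as the prompts above.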

Code Generation and Mathematical Problem Solving

Beyond text, Mistral Large demonstrates remarkable prowess in generating code and solving mathematical problems. By providing concise problem statements, you can leverage Mistral Large to produce optimized code snippets and work through mathematical challenges, showcasing the model's utility in educational, research, and development contexts.

Code generation

Mistral Large excels in code generation by understanding high-level descriptions and translating them into syntactically correct and efficient code in various programming languages.

It leverages its extensive training on codebases, technical documentation, and developer forums to generate code that meets specified requirements, ensuring functionality and optimization.

A simple example would be asking the model to generate a function that performs a described task.

# Prompt: Generate a Python function that takes a list of numbers and returns the sum of squares.
prompt = """def sum_of_squares(numbers):
    # Your code here
    pass

# Call the generated function with a sample list
numbers = [1, 2, 3]
result = sum_of_squares(numbers)
print(f"Sum of squares: {result}") # Expected output: Sum of squares: 14"""

print(chat_mistral(prompt))

_________________________________________ #OUTPUT

Sure, I can help you define the function `sum_of_squares`. This function should take a list of numbers as an argument, square each number, and then return the sum of those squares. Here's how you could do it:

```python
def sum_of_squares(numbers):
    return sum(num ** 2 for num in numbers)
```

This capability streamlines the development process, aiding programmers in rapid prototyping, debugging, and implementing complex algorithms with ease.
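Generated code should still be checked before you rely on it. As a rough sketch, the snippet below pulls the fenced Python block out of a reply like the one above and runs it against the expected result. The `extract_python_code` helper and the hard-coded `sample_reply` are hypothetical stand-ins for a real model response, and `exec` should only be used on code you have reviewed or inside a sandbox.

```python
import re

FENCE = "`" * 3  # three backticks, built this way so the example nests cleanly

def extract_python_code(response_text: str) -> str:
    """Return the contents of the first fenced Python block in a model reply."""
    pattern = FENCE + r"python\s*\n(.*?)" + FENCE
    match = re.search(pattern, response_text, flags=re.DOTALL)
    if match is None:
        raise ValueError("No fenced Python block found")
    return match.group(1)

# Hand-written stand-in for the model reply shown above.
sample_reply = (
    "Here's how you could do it:\n"
    f"{FENCE}python\n"
    "def sum_of_squares(numbers):\n"
    "    return sum(num ** 2 for num in numbers)\n"
    f"{FENCE}\n"
)

namespace = {}
# exec runs arbitrary code: only do this in a sandbox or after reviewing the snippet.
exec(extract_python_code(sample_reply), namespace)
result = namespace["sum_of_squares"]([1, 2, 3])
print(f"Sum of squares: {result}")
```

Comparing `result` with the value stated in the prompt gives a quick sanity check on the generated function.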

Mathematical problem solving

Mistral Large is adept at solving mathematical problems through its deep understanding of numerical concepts and ability to process and interpret complex mathematical instructions.

It applies algorithms trained on a wide array of mathematical data, enabling it to tackle problems ranging from basic arithmetic to more advanced calculus and algebra.

For instance, you can ask the model to solve a mathematical problem.

# Prompt: Solve a system of linear equations with two variables.
prompt = """We have a system of equations:
x + 2y = 5
3x - y = 1
Solve for x and y.
"""

print(chat_mistral(prompt))

_________________________________________ #OUTPUT

Sure, I'd be happy to help you solve this system of equations. We can use the method of substitution or elimination. I'll use substitution for this example.

First, let's solve the first equation for x:

x = 5 - 2y

Now, we can substitute x in the second equation with the expression we just found:

3(5 - 2y) - y = 1

Expanding and simplifying this gives:

15 - 6y - y = 1

-7y = 1 - 15

-7y = -14

Now, divide both sides by -7 to solve for y:

y = -14 / -7

y = 2

Substitute y = 2 into the first equation:

x + 2(2) = 5

x + 4 = 5

x = 5 - 4

x = 1

So, the solution to the system of equations is x = 1 and y = 2.

This capability allows Mistral Large to serve as a valuable tool for educators, students, and researchers seeking to find solutions to mathematical queries or to verify the correctness of their work.
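Because LLMs can make arithmetic slips, it is good practice to verify a numeric answer independently. The following sketch solves the same system with Cramer's rule using exact fractions; the `solve_2x2` helper is a hypothetical name introduced only for this check.

```python
from fractions import Fraction

def solve_2x2(a1, b1, c1, a2, b2, c2):
    """Solve a1*x + b1*y = c1 and a2*x + b2*y = c2 with Cramer's rule."""
    det = a1 * b2 - a2 * b1
    if det == 0:
        raise ValueError("The system has no unique solution")
    x = Fraction(c1 * b2 - c2 * b1, det)
    y = Fraction(a1 * c2 - a2 * c1, det)
    return x, y

# x + 2y = 5 and 3x - y = 1, the system from the prompt above
x, y = solve_2x2(1, 2, 5, 3, -1, 1)
print(x, y)

# Plug the values back into both equations as a final check
assert x + 2 * y == 5 and 3 * x - y == 1
```

The result matches the model's answer of x = 1 and y = 2.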

Mistral Large Advanced Features and Best Practices

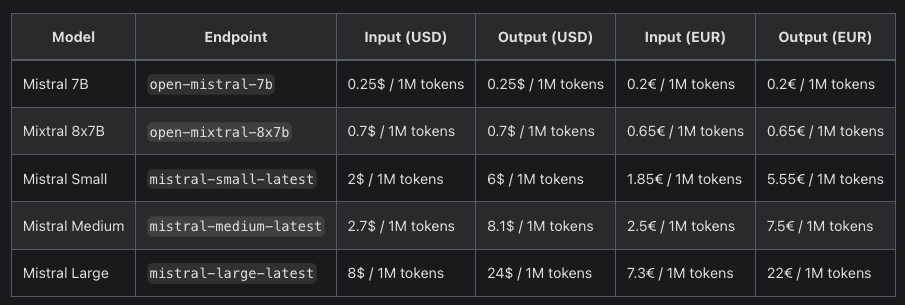

Understanding pricing and access

Mistral AI offers a pay-as-you-go pricing model for its API services, with different rates for the chat completions and embeddings APIs. Prices are quoted per million tokens and vary by model complexity, with options including Mistral 7B, Mixtral 8x7B, and the Mistral Small, Medium, and Large models.

These tiers are designed to meet different project requirements and budget constraints. The lowest prices are for Mistral 7B, at $0.25 per million tokens, rising to $8 per million input tokens and $24 per million output tokens for Mistral Large.

This flexibility allows you to select the most appropriate model version, optimizing both performance and expenditure.

Mistral AI models pricing table.
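To get a feel for what per-million-token pricing means for a single request, here is a small cost estimator. The `estimate_cost` helper is hypothetical, and the rates passed in the example are illustrative placeholders; always take the current figures from the pricing page.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_million: float,
                  output_price_per_million: float) -> float:
    """Estimate the cost of one request from its token counts and per-million rates."""
    total = (input_tokens * input_price_per_million
             + output_tokens * output_price_per_million)
    return total / 1_000_000

# Placeholder rates for illustration only; check the pricing page for real values.
cost = estimate_cost(input_tokens=1_500, output_tokens=500,
                     input_price_per_million=8.0, output_price_per_million=24.0)
print(f"Estimated cost: ${cost:.4f}")
```

Multiplying this per-request estimate by your expected request volume gives a rough monthly budget.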

The platform imposes rate limits of 5 requests per second, 2 million tokens per minute, and 10,000 million tokens per month, with possibilities for adjustments upon request.

For detailed pricing and rate limit information, visit Mistral AI's pricing page.
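When a request is rejected because of a rate limit, a common pattern is to retry with exponential backoff. The sketch below is a generic, library-independent illustration: `call_with_backoff` and `flaky_call` are hypothetical, and the broad `except Exception` is a simplification. Real code should catch only the specific error your client raises for rate limiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def call_with_backoff(func: Callable[[], T], max_retries: int = 4,
                      base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call func, retrying with exponentially growing delays when it raises."""
    for attempt in range(max_retries + 1):
        try:
            return func()
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    raise RuntimeError("unreachable")

# Simulated call that fails twice before succeeding, so the sketch runs offline.
attempts = {"count": 0}

def flaky_call() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("simulated rate-limit error")
    return "ok"

delays = []  # record the delays instead of actually sleeping
result = call_with_backoff(flaky_call, sleep=delays.append)
print(result, delays)
```

With the real API you could wrap a call such as `lambda: chat_mistral(prompt)` and keep the default `time.sleep`.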

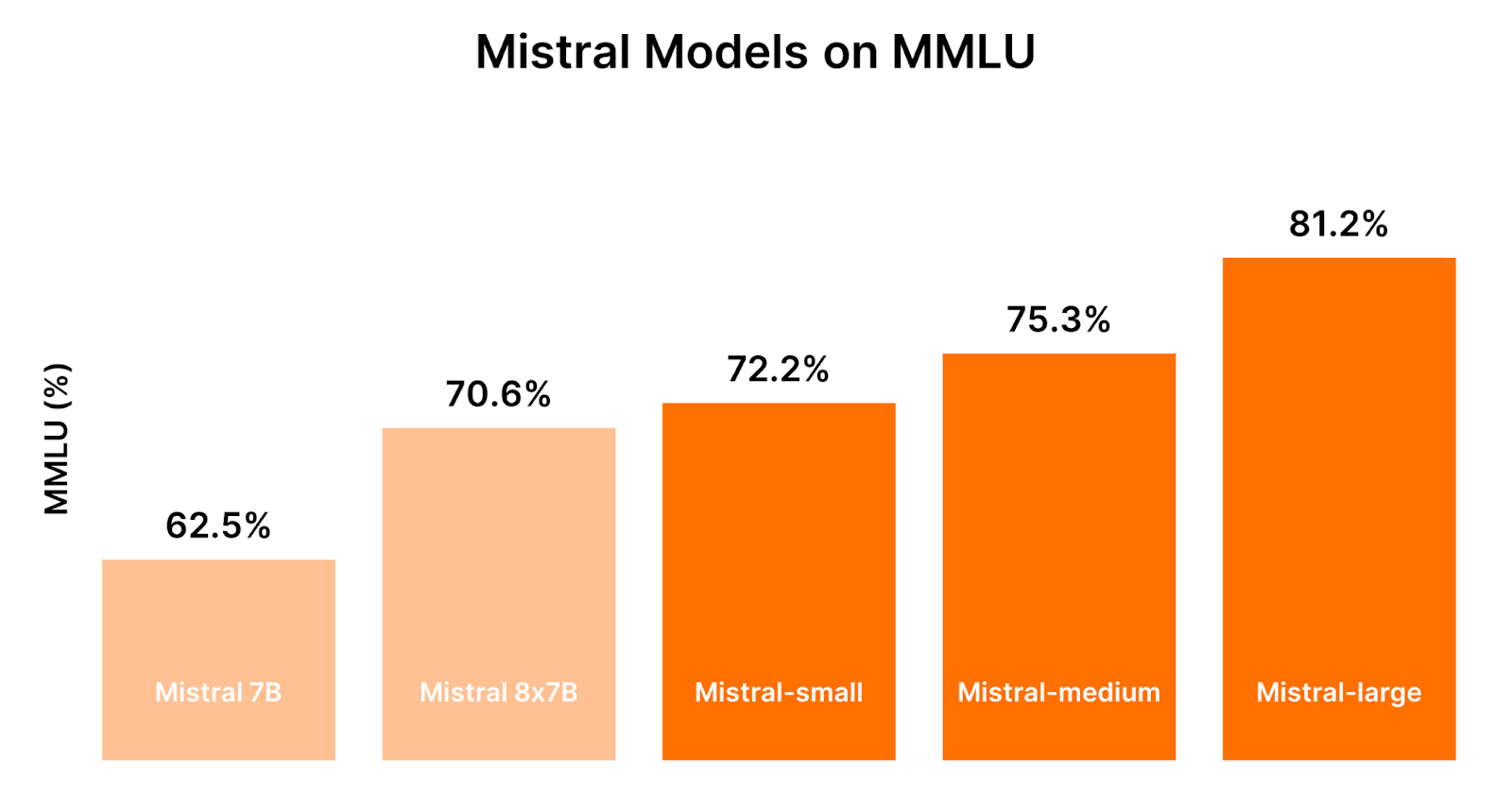

Optimizing performance

Choosing the right Mistral model requires weighing performance against cost. Your needs dictate your choice, which might change as models evolve.

Generally, larger models perform better, with Mistral Large leading in benchmarks like MMLU (the Measuring Massive Multitask Language Understanding benchmark explained above), followed by Mistral Medium, Small, Mixtral 8x7B, and Mistral 7B.

Comparing Mistral Models performance. Image by Mistral AI.

To maximize Mistral Large's efficacy while managing costs, you can refine your queries and select the most suitable model version for your tasks. You can learn more about fine-tuning LLMs in the following Introductory Guide to Fine-Tuning LLMs.

Mistral AI provides five API endpoints featuring five leading Large Language Models:

- open-mistral-7b (aka mistral-tiny-2312)

- open-mixtral-8x7b (aka mistral-small-2312)

- mistral-small-latest (aka mistral-small-2402)

- mistral-medium-latest (aka mistral-medium-2312)

- mistral-large-latest (aka mistral-large-2402)

The latest Mistral models come in three variants, which trade off cost and performance depending on the application you have in mind:

- Mistral Small: Mistral Small is optimized for simple, bulk tasks such as classification, customer support, and basic text generation. It is cost-effective for straightforward applications like spam detection.

- Mistral Medium: Designed for tasks requiring moderate complexity, Mistral Medium excels in data extraction, document summarization, and generating descriptive content. It strikes a balance between capability and efficiency.

- Mistral Large: Mistral Large excels across nearly all benchmarks. Mistral Large is best suited for complex, reasoning-heavy tasks or specialized applications, including synthetic text and code generation. It demonstrates superior performance in advanced reasoning and problem-solving tasks.
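One simple way to apply this guidance in code is to route each request to an endpoint according to how demanding the task is. The mapping below is an illustrative assumption based on the descriptions above: the complexity labels and the `pick_model` helper are hypothetical, while the endpoint names come from the list earlier in this section.

```python
# Illustrative mapping from a task-complexity label to an endpoint name.
MODEL_BY_COMPLEXITY = {
    "simple": "mistral-small-latest",     # classification, basic text generation
    "moderate": "mistral-medium-latest",  # data extraction, summarization
    "complex": "mistral-large-latest",    # reasoning-heavy tasks, code generation
}

def pick_model(task_complexity: str) -> str:
    """Return the endpoint name for a complexity label, or raise if it is unknown."""
    try:
        return MODEL_BY_COMPLEXITY[task_complexity]
    except KeyError:
        raise ValueError(f"Unknown complexity label: {task_complexity!r}") from None

chosen = pick_model("moderate")
print(chosen)
```

Starting with the smallest model that meets your quality bar and moving up only when needed keeps costs under control.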

What's Next for Mistral Large?

As Mistral Large continues to evolve, future developments, community contributions, and enhancements are on the horizon.

Staying abreast of these advancements is crucial for users looking to leverage Mistral Large's full capabilities and integrate the latest features into their projects.

The model's roadmap promises continued innovation, with a focus on improving reasoning, language support, and user accessibility.

Conclusion

Mistral Large represents a monumental step forward in the field of AI and Data Science, offering advanced reasoning with multi-language support while providing coding and mathematical aid to users.

It is an affordable model, built by a company with strong open-source roots, that not only has strong skills but also leads some of the benchmarks, beating commercial models such as OpenAI’s GPT-4 or Google’s Gemini.

This means that by harnessing the power of Mistral Large, you can unlock new possibilities, streamline processes, and create innovative solutions that were once beyond reach, on a low budget and with better performance.

You can check the whole code in the following GitHub repo.

If you want to keep getting better in the LLM field, I strongly encourage you to follow some more advanced tutorials. If you’re totally new to the field, you can start with DataCamp’s LLM Concepts course, which covers many of the key training methodologies and the latest research.

For more advanced users, some other good resources to follow are:

Josep is a freelance Data Scientist specializing in European projects, with expertise in data storage, processing, advanced analytics, and impactful data storytelling.

As an educator, he teaches Big Data in the Master’s program at the University of Navarra and shares insights through articles on platforms like Medium, KDNuggets, and DataCamp. Josep also writes about Data and Tech in his newsletter Databites (databites.tech).

He holds a BS in Engineering Physics from the Polytechnic University of Catalonia and an MS in Intelligent Interactive Systems from Pompeu Fabra University.