
Large language models (LLMs) are revolutionizing a range of industries. From customer-service chatbots to sophisticated data-analysis tools, the capabilities of this powerful technology are reshaping the landscape of digital interaction and automation.

However, practical applications of LLMs can be limited by the need for powerful hardware or for fast response times. These models typically require sophisticated hardware and extensive dependencies, which can make them difficult to deploy in more constrained environments.

This is where Llama.cpp (or LLaMa C++) comes to the rescue, offering a lighter, more portable alternative to heavyweight frameworks.

Llama.cpp logo (source)


What is Llama.cpp?

Llama.cpp was developed by Georgi Gerganov. It implements Meta's LLaMa architecture in efficient C/C++, and it has one of the most dynamic open-source communities around LLM inference, with more than 900 contributors, 69,000+ stars on the official GitHub repository, and 2,600+ releases.

Some key benefits of using Llama.cpp for LLM inference:

- Universal compatibility: Llama.cpp's design as a CPU-first C++ library means less complexity and seamless integration into other programming environments. This broad compatibility has accelerated its adoption across platforms.

- Comprehensive feature integration: Llama.cpp acts as a repository for critical low-level features, mirroring LangChain's approach for high-level features. This streamlines the development process, although it may lead to scalability issues in the future.

- Focused optimization: Llama.cpp concentrates on a single model architecture, which enables precise and effective improvements. Its commitment to Llama models through formats such as GGML and GGUF has led to substantial efficiency gains.

With this understanding of Llama.cpp, the next sections of this tutorial walk through the implementation of a text-generation use case. We start by exploring the fundamentals of Llama.cpp, then look at the overall workflow of the project at hand, and finally analyze some of its applications across industries.

Llama.cpp architecture

The backbone of Llama.cpp is the original Llama family of models, which is based on the transformer architecture. The Llama authors incorporated several improvements that were proposed after the original transformer and used in other models such as PaLM.

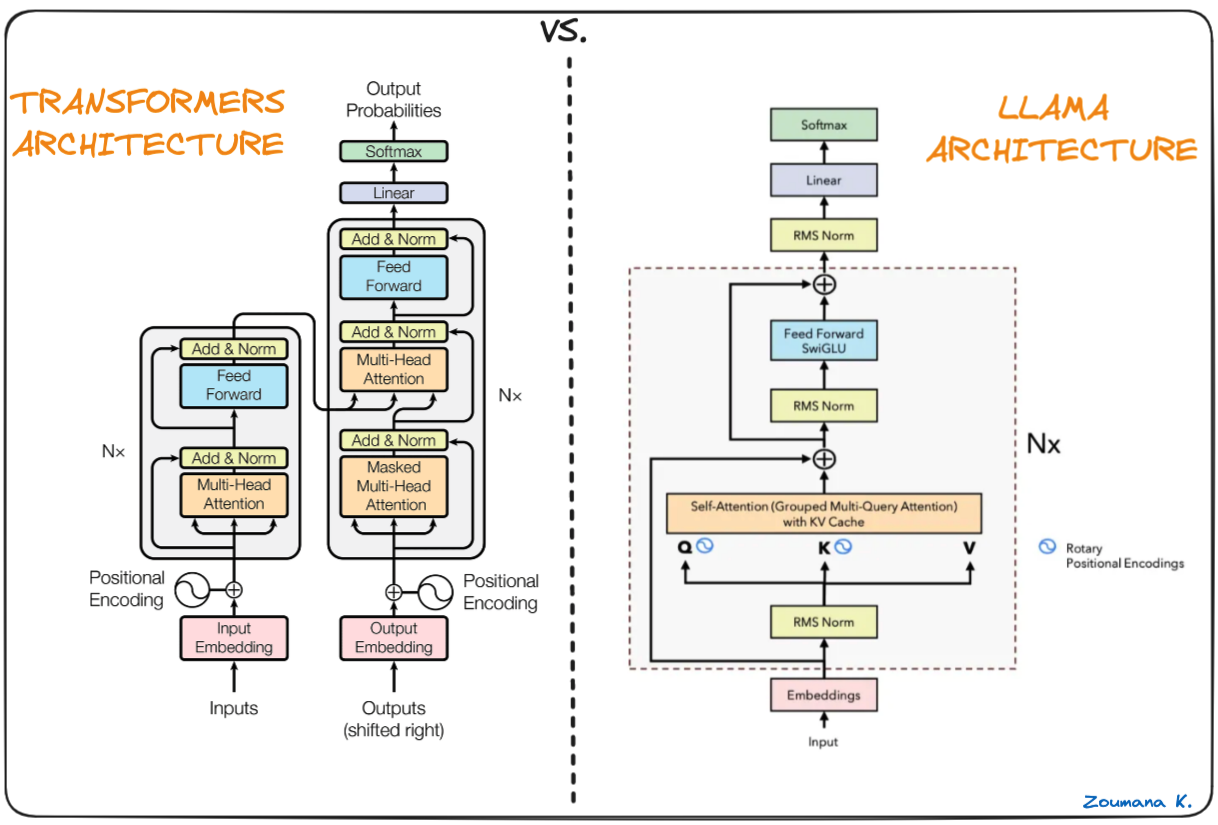

The difference between the transformer and Llama architectures (Llama architecture by Umar Jamil)

The main differences between the LLaMa architecture and the original transformer are:

- Pre-normalization (GPT-3): to improve training stability, the input of each transformer sub-layer is normalized with RMSNorm, instead of normalizing the output.

- SwiGLU activation function (PaLM): the original ReLU non-linearity is replaced by the SwiGLU activation function, which improves performance.

- Rotary embeddings (GPT-Neo): the absolute positional embeddings are removed, and rotary positional embeddings (RoPE) are applied at each layer of the network instead.
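The three changes above can be sketched in plain Python on single vectors. This is a minimal, unoptimized illustration, not llama.cpp's actual C/C++ kernels: the weight matrices are arbitrary inputs, `eps=1e-6` and `base=10000` are common defaults assumed here, and RoPE is shown with the interleaved pairing of dimensions, which varies between implementations. All function names are hypothetical.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    # Pre-normalization with RMSNorm: divide by the root mean square of x
    # (no mean subtraction, no bias), then apply a learned per-dimension gain.
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]


def matvec(W, x):
    # Plain matrix-vector product; W is a list of rows.
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def silu(v):
    # SiLU(v) = v * sigmoid(v), written so that math.exp never overflows.
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    e = math.exp(v)
    return v * e / (1.0 + e)


def swiglu_ffn(x, W1, W3, W2):
    # SwiGLU feed-forward block: W2 @ (SiLU(W1 @ x) * (W3 @ x)).
    gate = [silu(v) for v in matvec(W1, x)]
    up = matvec(W3, x)
    return matvec(W2, [g * u for g, u in zip(gate, up)])


def rope(x, position, base=10000.0):
    # Rotary embedding: rotate each pair (x[2i], x[2i+1]) by position * theta_i,
    # with theta_i = base ** (-2i / d). No absolute position vector is added.
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out = []
    for i in range(d // 2):
        angle = position * base ** (-2 * i / d)
        a, b = x[2 * i], x[2 * i + 1]
        out.append(a * math.cos(angle) - b * math.sin(angle))
        out.append(a * math.sin(angle) + b * math.cos(angle))
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


if __name__ == "__main__":
    q, k = [1.0, 0.5, -0.3, 2.0], [0.2, -1.0, 0.7, 0.4]
    # The query/key score depends only on the relative offset (3 - 1 == 7 - 5).
    print(dot(rope(q, 3), rope(k, 1)), dot(rope(q, 7), rope(k, 5)))
```

The last lines show why RoPE can replace absolute position embeddings: after rotation, the dot product between a query and a key depends only on how far apart their positions are.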

Setting up the environment

The prerequisites for working with Llama.cpp are:

- Python: needed to run pip, the Python package manager

- llama-cpp-python: the Python binding for llama.cpp

Creating a virtual environment

It is recommended to create a virtual environment to avoid installation issues. conda is a good candidate for creating such an environment.

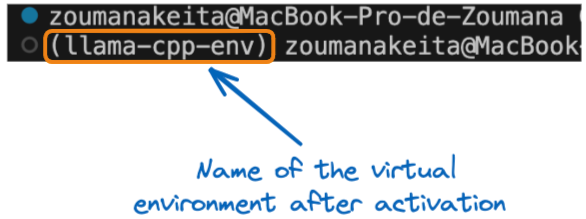

All commands in this section are run from a terminal. With the `conda create` command, we create a virtual environment called llama-cpp-env. Listing `python` installs Python and pip into the new environment; without it, conda creates an empty environment and `pip` could resolve to an installation outside it.

```shell
conda create --name llama-cpp-env python
```

After successfully creating the virtual environment, we activate it with the `conda activate` command:

```shell
conda activate llama-cpp-env
```

The name of the environment should now appear in parentheses at the beginning of the terminal prompt:

Name of the virtual environment after activation

Now we can install the llama-cpp-python package:

```shell
pip install llama-cpp-python
```

or pin a specific version, for example:

```shell
pip install llama-cpp-python==0.1.48
```

Note that very old releases such as 0.1.48 predate the GGUF format and cannot load the GGUF model used later in this tutorial; if you pin a version, choose one recent enough to support GGUF.

To make sure the installation succeeded, we create a small script containing the import statement and run it. First, add the following line to the file `llama_cpp_script.py`:

```python
from llama_cpp import Llama
```

Then run the script:

```shell
python llama_cpp_script.py
```

If the script runs without errors, the library is installed correctly. If the library cannot be imported, an error is raised, and the installation needs further diagnosis.
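Besides importing the module, it can help to know which version of llama-cpp-python is installed, for example to confirm that it is recent enough to read GGUF files. A minimal sketch using only the standard library; the helper name `installed_version` is hypothetical, and note that `find_spec` only locates the module without importing it, so it complements rather than replaces the import check above.

```python
import importlib.metadata
import importlib.util
from typing import Optional


def installed_version(import_name: str, dist_name: str) -> Optional[str]:
    """Return the installed version of a package, or None if the module is missing."""
    if importlib.util.find_spec(import_name) is None:
        return None  # module not found on the current Python path
    try:
        # The distribution name on PyPI can differ from the import name.
        return importlib.metadata.version(dist_name)
    except importlib.metadata.PackageNotFoundError:
        return "unknown"  # importable, but no installed distribution metadata


if __name__ == "__main__":
    # llama-cpp-python is imported as llama_cpp
    print(installed_version("llama_cpp", "llama-cpp-python"))
```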

Understanding Llama.cpp basics

At this stage, the installation should have succeeded. Let's explore the basics of Llama.cpp.

The `Llama` class imported above is the main entry point for using Llama.cpp from Python: its constructor loads the model, and the resulting object is then called with a prompt and generation parameters. The parameters below are the most important ones, not an exhaustive list; the full list is in the official documentation:

- `model_path`: the path to the Llama model file to load (a constructor argument).

- `prompt`: the input prompt for the model. This text is tokenized and passed to the model.

- Hardware (CPU or GPU): the `Llama` constructor has no `device` argument. The model runs on the CPU by default; with a GPU-enabled build, the `n_gpu_layers` constructor argument sets how many layers are offloaded to the GPU.

- `max_tokens`: the maximum number of tokens generated in the model's response.

- `stop`: a list of strings; generation stops as soon as the model produces one of them.

- `temperature`: usually set between 0 and 1. The lower the value, the more deterministic the output; higher values add randomness and therefore produce more diverse, creative output.

- `top_p`: controls the diversity of predictions through nucleus sampling. Sampling is restricted to the smallest set of most probable tokens whose cumulative probability reaches the threshold `top_p`; lower values make the output more focused, while higher values admit more candidate tokens and hence more diversity.

- `echo`: a Boolean that determines whether the model's output starts with the original prompt (True) or not (False).
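To see what `temperature` and `top_p` do to a single next-token choice, here is a minimal pure-Python sketch of temperature scaling followed by nucleus (top-p) sampling. It is a conceptual illustration, not llama.cpp's sampler: llama.cpp chains further samplers such as top-k, and their order and details differ. The function name `sample_next_token` and the example logits are hypothetical.

```python
import math
import random


def sample_next_token(logits, temperature=0.3, top_p=0.1, rng=random):
    """Pick a token index from raw logits with temperature and top-p sampling."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: always take the top token.
        return max(range(len(logits)), key=lambda i: logits[i])
    # 1. Temperature: divide logits before the softmax; small values sharpen it.
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]  # subtract max for stability
    total = sum(exps)
    probs = [e / total for e in exps]
    # 2. Top-p: keep the smallest set of most likely tokens whose
    #    cumulative probability reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # 3. Draw one of the kept tokens, weighted by its probability
    #    (random.choices renormalizes the weights implicitly).
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
    print([sample_next_token(logits) for _ in range(5)])
    print([sample_next_token(logits, temperature=1.5, top_p=0.95) for _ in range(5)])
```

With the template's values (`temperature=0.3`, `top_p=0.1`), usually only the most probable token survives the top-p cut, which is why the output is close to deterministic.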

For instance, suppose we want to use a large language model called `MY_AWESOME_MODEL`, stored in the current working directory. Instantiating and calling it looks like this:

```python
from llama_cpp import Llama

# Instantiate the model
my_awesome_llama_model = Llama(model_path="./MY_AWESOME_MODEL")

# Define the generation parameters
prompt = "This is a prompt"
max_tokens = 100
temperature = 0.3
top_p = 0.1
echo = True
stop = ["Q", "\n"]

# Run the model on the prompt
model_output = my_awesome_llama_model(
    prompt,
    max_tokens=max_tokens,
    temperature=temperature,
    top_p=top_p,
    echo=echo,
    stop=stop,
)

# Extract the generated text from the output dictionary
final_result = model_output["choices"][0]["text"].strip()
```

The code is self-explanatory and can be understood from the parameter list above, which explains the meaning of each parameter.

The model's output is a dictionary containing the generated response together with some additional metadata. Its format is explored in the following sections of the article.
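The stop list `["Q", "\n"]` used in the template ends generation at the first newline or at the first capital "Q", for example when the model starts writing a follow-up "Q:" line. The sketch below reproduces that effect on a finished string; llama-cpp-python checks stop strings while it generates, so this is only a conceptual illustration, and `apply_stop` is a hypothetical helper.

```python
def apply_stop(text, stop):
    """Cut text at the earliest occurrence of any stop string (the stop string is dropped)."""
    cut = len(text)
    for s in stop:
        if not s:
            continue  # an empty stop string would match everywhere; ignore it
        idx = text.find(s)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


if __name__ == "__main__":
    print(repr(apply_stop("Inclusion matters.\nQ: What else?", ["Q", "\n"])))
    # A capital Q anywhere also triggers the stop, which can cut answers short.
    print(repr(apply_stop("Quality matters.", ["Q", "\n"])))
```

The second call shows a pitfall of a single-letter stop string such as "Q": any word starting with a capital Q ends the answer, so a longer marker such as "Q:" is usually a safer choice.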

Your first Llama.cpp project

Now it's time to implement the text-generation project. Starting a new Llama.cpp project requires nothing more than following the Python code template above, which covers every step from loading the large language model of interest to generating the final response.

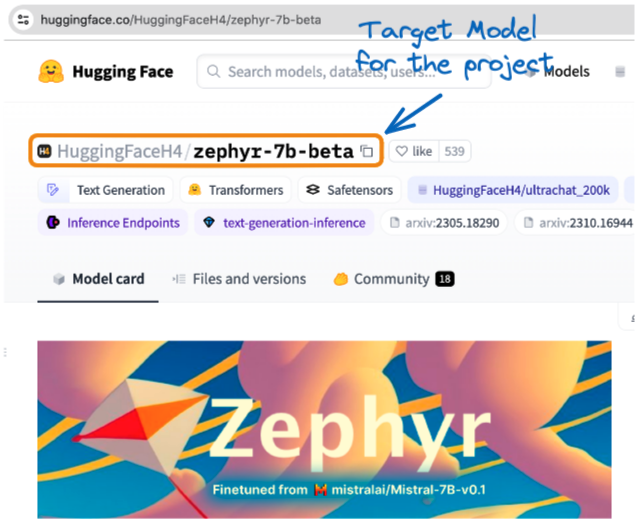

The project uses the GGUF version of Zephyr-7B-Beta from Hugging Face. It is a fine-tuned version of mistralai/Mistral-7B-v0.1, trained on a mix of publicly available, synthetic datasets using Direct Preference Optimization (DPO).

Our introduction to using Transformers and Hugging Face gives a better understanding of Transformers and how to harness their power to solve real-life problems. We also have a Mistral 7B tutorial.

Zephyr model from Hugging Face (source)

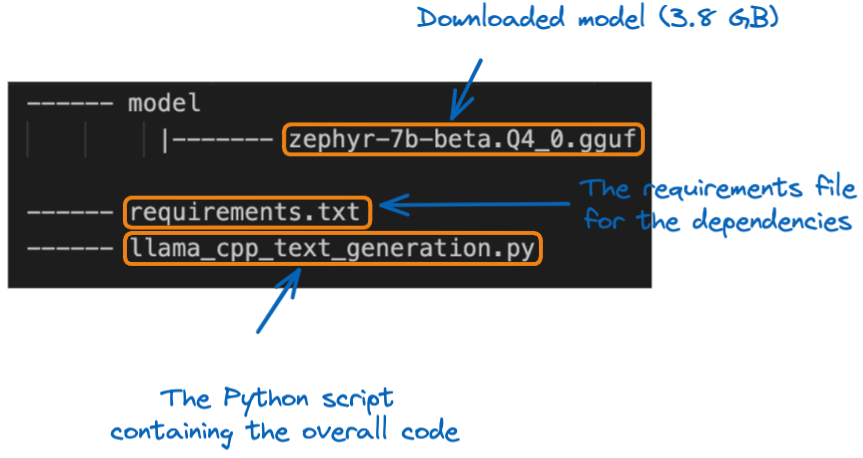

Once the model has been downloaded locally, we can move it into the project's `model` folder. Before diving into the implementation, let's understand the project structure:

The structure of the project

The first step is to load the model with the `Llama` constructor. Since this is a large model, it is important to specify the maximum context size (`n_ctx`) of the model being loaded. In this project we use 512 tokens.

```python
from llama_cpp import Llama

# GLOBAL VARIABLES
my_model_path = "./model/zephyr-7b-beta.Q4_0.gguf"
CONTEXT_SIZE = 512

# LOAD THE MODEL
zephyr_model = Llama(model_path=my_model_path,
                     n_ctx=CONTEXT_SIZE)
```

Once the model is loaded, the next step is text generation. We use the original code template, but wrap it in a helper function called `generate_text_from_prompt`.

```python
def generate_text_from_prompt(user_prompt,
                              max_tokens=100,
                              temperature=0.3,
                              top_p=0.1,
                              echo=True,
                              stop=["Q", "\n"]):
    # Run the loaded model with the given generation parameters
    model_output = zephyr_model(
        user_prompt,
        max_tokens=max_tokens,
        temperature=temperature,
        top_p=top_p,
        echo=echo,
        stop=stop,
    )
    return model_output
```

In the `__main__` clause, the function can then be run with a specific prompt.

if __name__ == "__main__":

my_prompt = "What do you think about the inclusion policies in Tech companies?"

zephyr_model_response = generate_text_from_prompt(my_prompt)

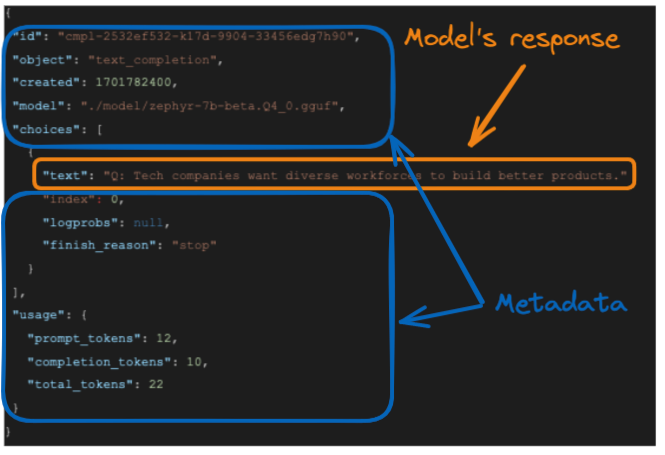

print(zephyr_model_response)Die Antwort des Modells findest du weiter unten:

The model's response

The output contains the model's generated response, with the exact answer highlighted in the orange box. It also reports the token usage:

- The original prompt has 12 tokens,

- the response (completion) has 10 tokens, and

- the total number of tokens is the sum of the two, i.e. 22.
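These counts come from the `usage` entry of the returned dictionary. Since prompt and completion tokens share the context window set by `n_ctx` (512 in this project), the unused part of the window is `n_ctx` minus `total_tokens`. A minimal sketch: `summarize_completion` is a hypothetical helper, and `example_output` is a hand-built dictionary that only reproduces the fields used here and the counts reported above, not a full llama-cpp-python response.

```python
CONTEXT_SIZE = 512  # n_ctx used when loading the Zephyr model in this project


def summarize_completion(output, n_ctx=CONTEXT_SIZE):
    """Extract the answer text and token bookkeeping from a completion dict."""
    text = output["choices"][0]["text"].strip()
    usage = output["usage"]
    prompt_tokens = usage["prompt_tokens"]
    completion_tokens = usage["completion_tokens"]
    total = usage["total_tokens"]
    # The total is the sum of prompt and completion tokens.
    if total != prompt_tokens + completion_tokens:
        raise ValueError("inconsistent token counts")
    return {
        "text": text,
        "prompt_tokens": prompt_tokens,
        "completion_tokens": completion_tokens,
        "total_tokens": total,
        # Prompt and completion share one context window of n_ctx tokens.
        "tokens_left_in_context": n_ctx - total,
    }


# Hand-built example: only the fields used above, with the counts reported in the text.
example_output = {
    "choices": [{"text": "Tech companies want diverse workforces to build better products."}],
    "usage": {"prompt_tokens": 12, "completion_tokens": 10, "total_tokens": 22},
}

if __name__ == "__main__":
    print(summarize_completion(example_output))
```

For this run, 12 + 10 = 22 tokens are used, leaving 512 - 22 = 490 tokens of the context window free; a longer prompt reduces how much the model can generate.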

While this full output can be useful for further processing, we may only be interested in the model's textual answer. We can extract it by selecting the "text" field of the first element of "choices":

```python
final_result = model_output["choices"][0]["text"].strip()
```

The `strip()` method removes all leading and trailing whitespace from a string, and the result is:

Tech companies want diverse workforces to build better products.

Llama.cpp: real-world applications

This section walks through a real-world application of Llama.cpp, presenting the underlying problem, a possible solution, and the benefits of using Llama.cpp.

Problem

Imagine that ETP4Africa, a tech startup, needs a language model for its educational app that runs efficiently on a variety of devices without introducing lag.

Solution with Llama.cpp

They implement Llama.cpp, taking advantage of its CPU-optimized performance and its ability to interface with their Go-based backend.

Benefits

- Portability and speed: Llama.cpp's lightweight design ensures fast responses and compatibility with many devices.

- Customization: tailored low-level features allow the app to provide effective real-time coding assistance.

By integrating Llama.cpp, the ETP4Africa app offers instant, interactive programming guidance, improving user experience and engagement.

Data engineering is a key component of every data science and AI project. Our tutorial Introduction to LangChain for Data Engineering & Data Applications provides a complete guide to integrating AI from large language models into data pipelines and applications.

Conclusion

In summary, this article has provided a comprehensive overview of setting up and using large language models with Llama.cpp.

Step-by-step instructions walked you through understanding the basics of Llama.cpp, setting up the working environment, installing the required library, and implementing a text-generation (question-answering) use case.

Finally, the article gave practical insights into a real-world application, showing how Llama.cpp can be used to solve the underlying problem efficiently.

Ready to dive deeper into the world of large language models? Strengthen your skills with LangChain and PyTorch, two powerful frameworks used by AI professionals, through our tutorials How to Build LLM Applications with LangChain and How to Train a LLM with PyTorch.


FAQs

How does Llama.cpp differ from other lightweight LLM frameworks?

Llama.cpp is specifically optimized for CPU inference (although it can also offload work to GPUs), which sets it apart from frameworks that rely heavily on GPU acceleration. This makes it well suited to environments with limited hardware resources.

What are the system requirements for running Llama.cpp efficiently?

Although Llama.cpp is lightweight, it still benefits from a modern multi-core CPU and enough RAM to handle larger models. The exact requirements depend on the size and quantization of the model you work with.
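To make "depends on the model size" concrete, here is a back-of-the-envelope memory estimate. It assumes the Q4_0 layout (blocks of 32 weights stored as 4-bit values plus one fp16 scale) and a Mistral-7B-like shape (assumed: about 7.24 billion parameters, 32 layers, 8 key/value heads of dimension 128, fp16 KV cache). It ignores compute buffers and runtime overhead, and real files differ slightly because some tensors are stored at other precisions. All function names are hypothetical.

```python
def q4_0_bits_per_weight():
    # Q4_0 block: 32 weights as 4-bit integers (16 bytes) plus one fp16 scale (2 bytes).
    block_bytes = 32 * 4 // 8 + 2
    return block_bytes * 8 / 32  # bits per weight


def weights_gib(n_params, bits_per_weight):
    # Memory for the model weights alone, in GiB.
    return n_params * bits_per_weight / 8 / 2**30


def kv_cache_gib(n_layers, n_ctx, n_kv_heads, head_dim, bytes_per_value=2):
    # One key and one value vector per layer, per KV head, per context position.
    return 2 * n_layers * n_ctx * n_kv_heads * head_dim * bytes_per_value / 2**30


if __name__ == "__main__":
    # Assumed Mistral-7B-like shape (see the lead-in); n_ctx=512 as in this project.
    bpw = q4_0_bits_per_weight()
    print(f"Q4_0: {bpw} bits per weight")
    print(f"weights  ~ {weights_gib(7.24e9, bpw):.2f} GiB")
    print(f"KV cache ~ {kv_cache_gib(32, 512, 8, 128):.4f} GiB at n_ctx=512")
```

Under these assumptions, the weights dominate (roughly 3.8 GiB), while the KV cache for a 512-token context adds only 64 MiB; the cache grows linearly with `n_ctx`, so long contexts matter more on small machines.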

Can Llama.cpp be integrated with programming languages other than Python?

Yes. Because Llama.cpp is designed as a C/C++ library, it can be integrated into programming environments other than Python, although each language needs its own bindings.

What are the GGML and GGUF formats mentioned in connection with Llama models?

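GGML is the tensor library Llama.cpp is built on (the "GG" comes from Georgi Gerganov's initials) and also the name of the original file format for its models. GGUF is the successor format, which stores the weights together with metadata such as hyperparameters and tokenizer information in a single file. Both are designed to store Llama models efficiently, reducing file size and speeding up loading.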
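As a small illustration of the GGUF layout, the sketch below parses only the fixed-size header at the start of a file, following the published GGUF layout for versions 2 and 3: the magic bytes `GGUF`, a uint32 version, a uint64 tensor count, and a uint64 count of metadata key/value pairs, assumed to be little-endian (the default byte order). The helper `read_gguf_header` is hypothetical and does not parse the metadata or tensors themselves.

```python
import struct


def read_gguf_header(data: bytes) -> dict:
    """Parse the first 24 bytes of a GGUF file (versions 2 and 3)."""
    if data[:4] != b"GGUF":
        raise ValueError("not a GGUF file (magic bytes missing)")
    if len(data) < 24:
        raise ValueError("need at least 24 bytes")
    # Little-endian, no padding: uint32 version, uint64 tensor count, uint64 key/value count.
    version, n_tensors, n_kv = struct.unpack_from("<IQQ", data, 4)
    if version < 2:
        raise ValueError(f"GGUF version {version} uses 32-bit counts; not handled here")
    return {"version": version, "tensor_count": n_tensors, "metadata_kv_count": n_kv}
```

For the model used in this tutorial, something like `with open(my_model_path, "rb") as f: print(read_gguf_header(f.read(24)))` would print these header fields without loading the weights.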

How does Llama.cpp handle updates and improvements to LLaMa models?

The open-source community actively maintains Llama.cpp and makes sure it incorporates the latest advances and optimizations for LLaMa models, which contributes to its efficiency and performance gains.

Are there any limitations or known issues when using Llama.cpp?

Like any software, Llama.cpp has its limitations, especially regarding scalability with very large models or complex workflows. In addition, a CPU-optimized solution may not match the performance of GPU-based solutions on certain tasks.

How does the temperature parameter affect Llama.cpp's output?

The `temperature` parameter controls the randomness of the model's responses. Lower values make the output more deterministic, while higher values add variability, which can be useful for creative applications.

Zoumana is a versatile data scientist who enjoys sharing his knowledge and giving back to others. He creates content on YouTube and writes on Medium, and he enjoys speaking, coding, and teaching. Zoumana holds two master's degrees: the first in computer science with a focus on machine learning, obtained in Paris, France, and the second in data science from Texas Tech University in the US. His career began as a software developer at Groupe OPEN in France, before he moved to IBM as a machine learning consultant, where he developed end-to-end AI solutions for insurance companies. He then joined Axionable, the first sustainable AI startup, based in Paris and Montreal, where he worked as a data scientist and implemented AI products, mostly NLP use cases, for clients in France, Montreal, Singapore, and Switzerland. He also dedicated 5% of his time to research and development. He currently works as a Senior Data Scientist at IFC, part of the World Bank Group.