
In this tutorial, I will walk you through a detailed comparison of three leading multi-agent AI frameworks: CrewAI, LangGraph, and AutoGen. Over the past year, the conversation around multi-agent systems has grown rapidly, and for good reason. As AI matures, building applications with a single intelligent agent often falls short. Instead, developers are discovering that orchestrating multiple agents, each with specific roles and responsibilities, leads to more adaptive and reliable solutions.

The significance of this comparison lies in how each framework approaches the challenge of multi-agent coordination. CrewAI adopts a role-based model inspired by real-world organizational structures, LangGraph embraces a graph-based workflow approach, and AutoGen focuses on conversational collaboration. Each framework offers unique design philosophies, strengths, and trade-offs.

My goal in this tutorial is to highlight these differences through hands-on explanations and concise examples, so you can make an informed decision when choosing one for your project.

If you are new to AI applications, consider taking one of our courses, such as AI Fundamentals, Developing AI Applications, or Retrieval Augmented Generation (RAG) with LangChain.

What Is an AI Agent?

Before diving into the frameworks, let me set the stage by explaining what an agent means in this context. In multi-agent AI frameworks, an agent is more than just a prompt wrapper around a large language model. It is an autonomous entity with a defined role, tools it can use, memory it can access, and behaviors it follows. Agents can work independently or collaborate with other agents to solve problems that would otherwise be too complex for a single agent.

I think of an agent as both a decision-maker and a collaborator. It takes input, reasons about it, performs actions (sometimes through external tools), and communicates with other agents when necessary.

For example, one agent may be responsible for gathering data, another for analyzing it, and another for reporting results. Together, they form a collaborative intelligence system.
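To make the gather, analyze, report split concrete, here is a minimal, framework-independent sketch in plain Python. The `SimpleAgent` class, its `act` method, and the lambda "tools" are hypothetical stand-ins invented for illustration; no LLM is called, and none of the three frameworks defines agents exactly this way.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical minimal agent: a role, named tools, and a memory list.
# No LLM is involved; each "tool" is just a plain function.
@dataclass
class SimpleAgent:
    role: str
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)
    memory: list[str] = field(default_factory=list)

    def act(self, tool_name: str, payload: str) -> str:
        """Run one tool on the payload and remember the result."""
        result = self.tools[tool_name](payload)
        self.memory.append(f"{tool_name}: {result}")
        return result


def run_pipeline(topic: str) -> str:
    """Chain three specialized agents: gather -> analyze -> report."""
    gatherer = SimpleAgent("gatherer", {"gather": lambda t: f"raw notes on {t}"})
    analyst = SimpleAgent("analyst", {"analyze": lambda d: f"key points from {d}"})
    reporter = SimpleAgent("reporter", {"report": lambda a: f"REPORT: {a}"})

    data = gatherer.act("gather", topic)
    insights = analyst.act("analyze", data)
    return reporter.act("report", insights)


print(run_pipeline("multi-agent frameworks"))
```

Each agent only knows its own tool and its own memory; the pipeline function plays the role of the orchestration layer that the frameworks below provide for you.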

However, agents are not always the best choice. If your workflow is straightforward, such as fetching data from a single API and displaying results, a simple script or single-agent orchestration may be more efficient. Multi-agent systems shine when tasks require coordination, specialization, or dynamic adaptation.

Understanding Multi-Agent AI Frameworks

Multi-agent frameworks extend the single-agent paradigm into systems that support collaboration, delegation, and adaptive workflows. They bring to life the idea of collaborative intelligence, where different agents specialize in parts of a problem and communicate to achieve an overall goal.

This approach mirrors how teams operate in organizations, with specialists working together under some orchestration layer.

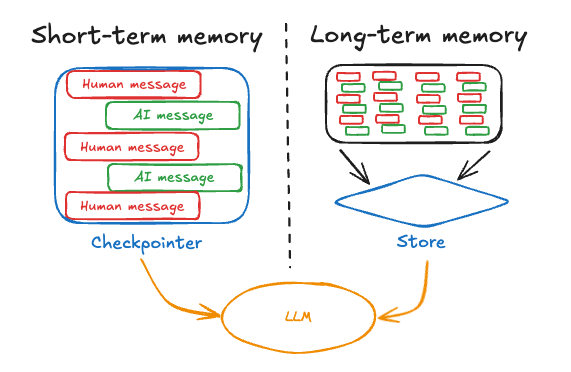

The key components of these frameworks often include state management, communication protocols, and memory systems. Memory deserves particular attention because it allows agents to recall past interactions and make informed decisions.

Short-term memory allows agents to maintain context during immediate interactions. Long-term memory enables learning from past experiences and building knowledge bases. Persistent memory ensures important information survives system restarts and can be accessed across sessions.
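The three memory layers can be sketched without any framework. The example below is an illustration under simple assumptions: `AgentMemory` and its method names are hypothetical, short-term memory is a bounded queue, long-term memory is a dictionary, and persistence is a JSON file in a temporary directory.

```python
import json
import tempfile
from collections import deque
from pathlib import Path


class AgentMemory:
    """Illustrative three-layer memory; class and method names are hypothetical."""

    def __init__(self, store_path: Path, short_term_size: int = 3):
        # Short-term: only the most recent messages of the current interaction.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: facts accumulated over time.
        self.long_term: dict[str, str] = {}
        # Persistent: a JSON file that survives restarts.
        self.store_path = store_path
        if store_path.exists():
            self.long_term = json.loads(store_path.read_text())

    def observe(self, message: str) -> None:
        self.short_term.append(message)

    def learn(self, key: str, fact: str) -> None:
        self.long_term[key] = fact

    def persist(self) -> None:
        self.store_path.write_text(json.dumps(self.long_term))


path = Path(tempfile.mkdtemp()) / "memory.json"
memory = AgentMemory(path)
for msg in ["hello", "compare frameworks", "focus on memory", "add examples"]:
    memory.observe(msg)
memory.learn("preferred_format", "markdown")
memory.persist()

# Simulate a restart: a fresh object reloads only what was persisted.
restored = AgentMemory(path)
print(list(memory.short_term))    # the oldest message has been dropped
print(restored.long_term)         # long-term facts were reloaded from disk
print(list(restored.short_term))  # short-term context did not survive
```

Real frameworks typically back these layers with vector stores or databases rather than a JSON file, but the division of responsibilities is the same.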

By enabling these components, multi-agent frameworks support flexible architectures that can tackle problems ranging from workflow automation to research analysis. The choice of framework depends on how much control, flexibility, and scalability your project requires.

Overview of CrewAI, LangGraph, and AutoGen

Now that we have a shared understanding of agents and multi-agent frameworks, let us briefly introduce our three subjects of comparison.

CrewAI emphasizes role-based collaboration with a workplace-inspired metaphor. Each agent has a defined role, responsibilities, and access to tools, making the system intuitive for team-based workflows.

The framework excels at task-oriented collaboration where clear roles and responsibilities drive efficient execution. CrewAI's strength lies in its intuitive approach to agent coordination and built-in support for common business workflow patterns.

LangGraph adopts a graph-based workflow design that treats agent interactions as nodes in a directed graph. This architectural approach provides exceptional flexibility for complex decision-making pipelines with conditional logic, branching workflows, and dynamic adaptation.

LangGraph shines in scenarios requiring sophisticated orchestration with multiple decision points and parallel processing capabilities.

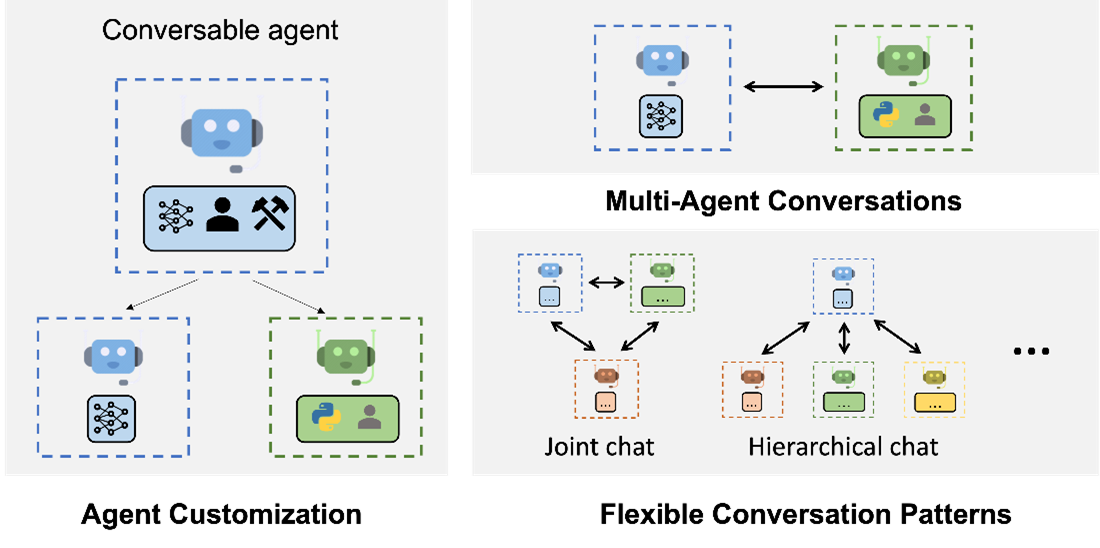

AutoGen focuses on conversational agent architecture, emphasizing natural language interactions and dynamic role-playing. The framework excels at creating flexible, conversation-driven workflows where agents can adapt their roles based on context. AutoGen's strength lies in rapid prototyping and human-in-the-loop scenarios where natural language interaction is paramount.


In-Depth Comparison: CrewAI vs LangGraph vs AutoGen

Each framework approaches multi-agent orchestration from a unique angle. CrewAI emphasizes role assignment, LangGraph emphasizes workflow structure, and AutoGen emphasizes conversation.

These differences affect how developers design, manage, and scale their systems, and understanding them is essential before making a choice.

Let’s break down these differences across several important dimensions, starting with architecture.

Architectural differences

Architecture is the foundation of each framework. CrewAI follows a role-based model where agents behave like employees with specific responsibilities. This makes it easy to visualize workflows in terms of teamwork.

LangGraph, by contrast, focuses on graph-based orchestration, where workflows are represented as nodes and edges, enabling highly modular and conditional execution.

AutoGen takes a different route, modeling interactions as conversations between agents or between agents and humans, creating a natural dialogue-driven flow.

Ease of use

When it comes to ease of use, CrewAI feels intuitive for anyone thinking in terms of roles and tasks. You simply define agents with goals and let them collaborate.

LangGraph requires a deeper understanding of graph design, which can add a learning curve but pays off with more control over workflow logic.

AutoGen is conversational at its core, making it straightforward to start small projects while remaining flexible for iterative development.

Supported tools and integrations

All three frameworks integrate with external tools and APIs, though each takes a different route. CrewAI provides built-in integrations for common cloud services and tools.

LangGraph benefits from the entire LangChain ecosystem, offering wide-ranging integrations with APIs and external systems.

AutoGen, meanwhile, prioritizes tool usage within conversations and allows humans to participate directly, which enhances flexibility in iterative or review-heavy workflows.

Memory support and types

Memory support varies significantly across frameworks. CrewAI uses structured, role-based memory with RAG support for contextual agent behavior.

LangGraph provides state-based memory with checkpointing for workflow continuity.

AutoGen focuses on conversation-based memory, maintaining dialogue history for multi-turn interactions. Each approach aligns with the framework's core philosophy.

Structured output

Handling structured output is another area of contrast. CrewAI enforces structure through its role logic: agents produce outputs aligned with their defined responsibilities.

LangGraph excels here, thanks to its state graphs, which can enforce strict output formats and transitions.

AutoGen, being more conversation-driven, produces flexible outputs that may vary in consistency, depending on how the conversation is orchestrated.
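Whichever framework you choose, a common safeguard is to validate an agent's reply against a schema before passing it downstream. The following is a framework-independent sketch; the `FrameworkSummary` schema, the `parse_summary` helper, and the sample replies are assumptions made up for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class FrameworkSummary:
    name: str
    architecture: str
    strengths: list[str]


def parse_summary(raw_reply: str) -> FrameworkSummary:
    """Parse a JSON reply and reject anything that does not match the schema."""
    data = json.loads(raw_reply)
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    missing = {"name", "architecture", "strengths"} - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if not isinstance(data["strengths"], list):
        raise ValueError("strengths must be a list")
    return FrameworkSummary(data["name"], data["architecture"], list(data["strengths"]))


# A well-formed reply is converted into a typed object.
good = '{"name": "LangGraph", "architecture": "graph", "strengths": ["branching"]}'
print(parse_summary(good))

# An incomplete reply is rejected instead of silently flowing downstream.
bad = '{"name": "AutoGen"}'
try:
    parse_summary(bad)
except ValueError as err:
    print(f"rejected: {err}")
```

In a conversation-driven setup, a rejected reply could be sent back to the agent with the error message so it can try again.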

Multi-Agent Support

All three frameworks offer multi-agent capabilities, but the collaboration models differ.

CrewAI focuses on role assignment, where each agent has a clearly defined responsibility, making coordination feel like a structured team environment.

LangGraph enables workflow-level collaboration by treating each agent or function as a node in a graph, allowing them to interact through structured state transitions and conditional branches.

AutoGen emphasizes group chat models, where agents converse with each other and with humans in natural language, making collaboration dynamic and less rigid but also less predictable.

Human-in-the-loop features

Human oversight is a core differentiator across these frameworks. CrewAI integrates human checkpoints directly into task execution, allowing supervisors to review or refine outputs before tasks proceed.

LangGraph provides human-in-the-loop hooks within its workflow graphs, letting developers pause execution, gather user input, and resume from the same state.

AutoGen makes human involvement part of the conversational flow, where a user proxy agent can step in at any point to guide or redirect the dialogue.

This flexibility makes AutoGen particularly strong for interactive or review-driven workflows, while CrewAI and LangGraph offer more structured mechanisms for intervention.

Caching and replay

For caching and replay, CrewAI caches tool results so that repeated calls with the same inputs can reuse earlier output, and it includes built-in error handling to keep tasks running smoothly.

LangGraph supports node-level caching with backends like memory or SQLite and provides replay and debugging through LangGraph Studio.

AutoGen focuses on LLM caching with backends such as disk or Redis, enabling shared caches across agents for cost savings and reproducibility.
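The idea behind LLM caching is the same regardless of backend: key each response by the model and prompt, and skip the model call on a repeat. Below is a minimal in-memory sketch; `PromptCache`, `get_or_call`, and `fake_llm` are hypothetical names, and a real setup would swap the dictionary for disk, SQLite, or Redis.

```python
import hashlib
import json


class PromptCache:
    """In-memory cache keyed by a hash of (model, prompt). Hypothetical helper."""

    def __init__(self):
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, model, prompt, call):
        """Return the cached reply if present; otherwise call the model and store it."""
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        self._store[key] = call(prompt)
        return self._store[key]


def fake_llm(prompt: str) -> str:
    # Stand-in for a real (slow, billable) model call.
    return prompt.upper()


cache = PromptCache()
first = cache.get_or_call("demo-model", "compare frameworks", fake_llm)
second = cache.get_or_call("demo-model", "compare frameworks", fake_llm)
print(first == second, cache.hits, cache.misses)
```

Because the cache object is independent of any single agent, several agents can share it, which is where the cost savings come from.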

Code execution

In terms of code execution, CrewAI enables execution through assigned tools (CodeInterpreterTool), maintaining its role-based philosophy.

LangGraph allows both native and external code execution within its graph nodes, offering more flexibility for computational workflows.

AutoGen integrates execution directly into its conversations, where agents (CodeExecutorAgent) can run and evaluate snippets as part of their dialogue.

Customizability

Looking at customizability, all three frameworks are strong, though in different ways. CrewAI is highly customizable but within its role-centric paradigm.

LangGraph provides the most modularity, letting developers design highly specialized workflows with conditional logic.

AutoGen offers conversational flexibility, allowing creative multi-agent dialogues, though it is less formal in structure.

Scalability

Scalability also varies. CrewAI supports scalability through parallel task execution and horizontal replication of agents within defined roles.

LangGraph is designed for scalability from the start, as graph-based workflows can be expanded into large distributed systems.

AutoGen scales conversationally, allowing multiple agents to collaborate in larger groups, though it has limitations for large-scale applications.

Documentation and community

Finally, the documentation and community play an important role in the long-term success of any framework.

CrewAI is steadily building a developer base, with more role-driven projects emerging and early adopters sharing practical use cases.

LangGraph benefits from being part of the broader LangChain ecosystem, which brings extensive documentation, tutorials, and active community support.

AutoGen, while less mature than the others, provides clear documentation, an active community, and an extensive list of tutorials.

Comparison Table

The following table summarizes the key differences between CrewAI, LangGraph, and AutoGen across all major dimensions to help guide your framework selection decision.

| Feature | CrewAI | LangGraph | AutoGen |
| --- | --- | --- | --- |
| Architecture | Role-based organizational structure | Graph-based workflows with nodes and edges | Conversational multi-agent interactions |
| Ease of Use | Intuitive role assignment | Moderate learning curve (graph design) | Simple conversational setup |
| Memory Support | Role-based memory; short-/long-term, entity, contextual | State-based; short-/long-term; checkpointing | Message-based; short-term; conversation history |
| Integrations | Cloud tools; business workflows | LangChain ecosystem; Platform/Studio | Tool integrations |
| Multi-Agent Support | Yes | Yes | Yes |
| Structured Output | Role-enforced | Strong, state-based; strict format | Flexible |
| Caching & Replay | Tool caching | Node-level caching | LLM caching |
| Code Execution | Tool-based | Native/external | Integrated |
| Human-in-the-loop | Yes | Yes | Yes |
| Customizability | High within role paradigm | Maximum modularity; conditional logic design | Conversational flexibility |
| Scalability | Task parallelization | Distributed graph execution | Limited large-scale support |
| Documentation & Community | Growing developer base | Well-established LangChain ecosystem | Clear docs; steadily growing |

Comparison Table: CrewAI vs. LangGraph vs. AutoGen

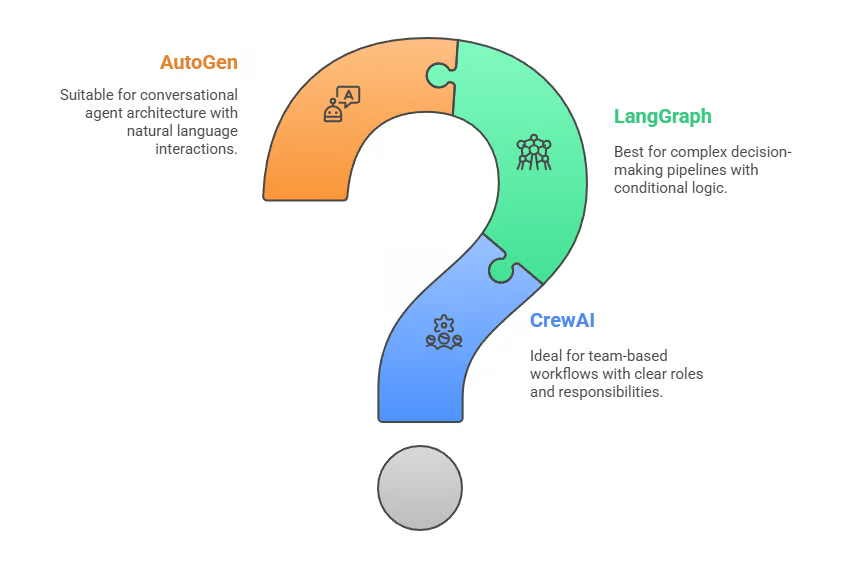

When to choose each

- Choose CrewAI if your project maps naturally onto roles and responsibilities (e.g., a researcher handing work to a writer). It’s intuitive for team-like workflows, supports structured memory, and makes human checkpoints easy to insert.

- Choose LangGraph if you need complex orchestration with branching logic. Its graph-based design is ideal for adaptive workflows, conditional execution, and projects that may need to scale into distributed systems.

- Choose AutoGen if you want conversation-driven collaboration with flexible, human-in-the-loop oversight. It excels at iterative tasks, brainstorming, or review-heavy workflows where natural language is the organizing principle.

Now that we've compared the frameworks at a high level, let's examine each one in detail, starting with CrewAI's role-based approach.

CrewAI Framework Deep Dive

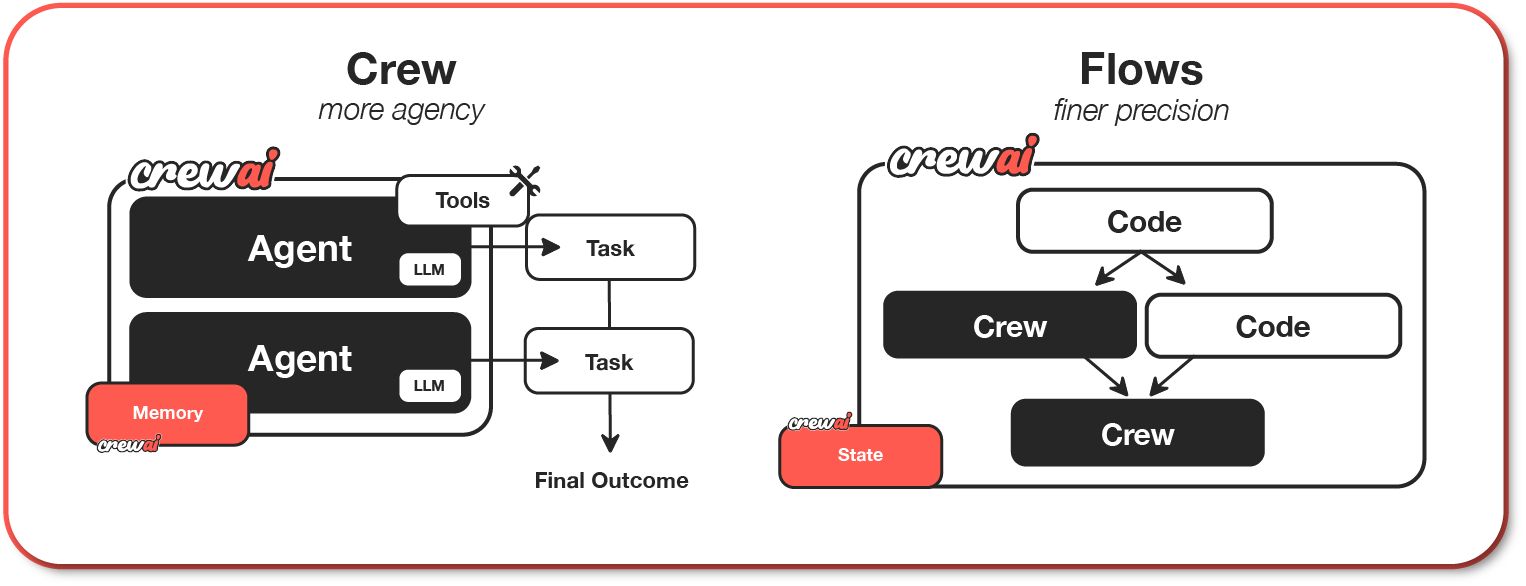

CrewAI is built around the metaphor of an organization. Agents are treated like employees, each with a role and set of responsibilities.

For instance, one agent may act as a “researcher,” gathering data, while another acts as a “writer,” preparing reports. This division of labor makes CrewAI particularly intuitive for scenarios where roles and responsibilities naturally exist.

CrewAI Architecture

CrewAI's memory system reflects this organizational structure. Each agent maintains role-specific memory that includes task context, entity relationships, and accumulated knowledge.

The framework supports multiple memory layers: short-term memory for immediate task context, long-term memory for insights across sessions, entity memory for tracking people and concepts, and contextual memory that ties everything together.

This structured approach means a researcher agent can build knowledge over time while a writer agent maintains its own stylistic preferences and writing history. Human-in-the-loop support is also built in, enabling supervisors to approve or modify agent outputs before tasks proceed.

Here is a simple CrewAI snippet where I define a researcher agent and a writer agent, give each one a task, and run them together as a crew:

```python
import os

from crewai import Agent, Task, Crew

# Environment variables (replace the placeholders with your own values)
os.environ["OPENAI_API_KEY"] = "your-api-key"
os.environ["OPENAI_API_BASE"] = "https://api.your-provider.com/v1"
os.environ["OPENAI_MODEL_NAME"] = "your-model-name"

# Define agents
researcher = Agent(
    role="Researcher",
    goal="Gather information on AI frameworks",
    backstory="Expert in technical research and summarization",
)

writer = Agent(
    role="Writer",
    goal="Prepare well-structured reports",
    backstory="Skilled technical writer who explains concepts clearly",
)

# Define tasks; human_input=True asks for human review before a task completes
research_task = Task(
    description="Find details on CrewAI, LangGraph, and AutoGen",
    expected_output="A structured summary of each framework, including use cases and differences.",
    agent=researcher,
    output_file="output/research_notes.md",
    human_input=True,
)

writing_task = Task(
    description="Turn the research notes into a polished article section",
    expected_output="A readable, clear article comparing the three frameworks.",
    agent=writer,
    output_file="output/final_article.md",
    human_input=True,
)

# Create a crew with both agents and tasks
crew = Crew(
    agents=[researcher, writer],
    tasks=[research_task, writing_task],
)

# Run the crew
result = crew.kickoff()
print(result)
```

In this workflow, the researcher agent gathers information and produces structured notes, which are saved to a file. A human can step in to review or refine the notes before moving forward. Next, the writer agent transforms those notes into a polished article section, again allowing for optional human review before finalization.

CrewAI coordinates the process, ensuring the agents stay aligned with their roles while humans maintain control at key checkpoints. This feels like a small editorial team working together, which is exactly what CrewAI is designed to model.

CrewAI also introduces Flows, which complement Crews to provide fine-grained workflow control. While Crews represent autonomous teams of agents working together, Flows are event-driven, production-ready pipelines that manage execution paths, state, and branching logic.

This means you can combine the flexibility of autonomous decision-making in Crews with the precision of structured orchestration in Flows.

While CrewAI frames collaboration in terms of roles and responsibilities, LangGraph instead emphasizes structure and logic, treating workflows as interconnected nodes in a graph.

LangGraph Framework Deep Dive

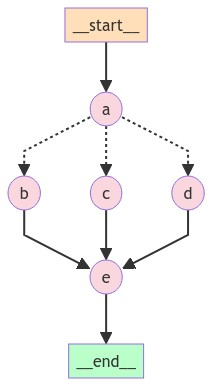

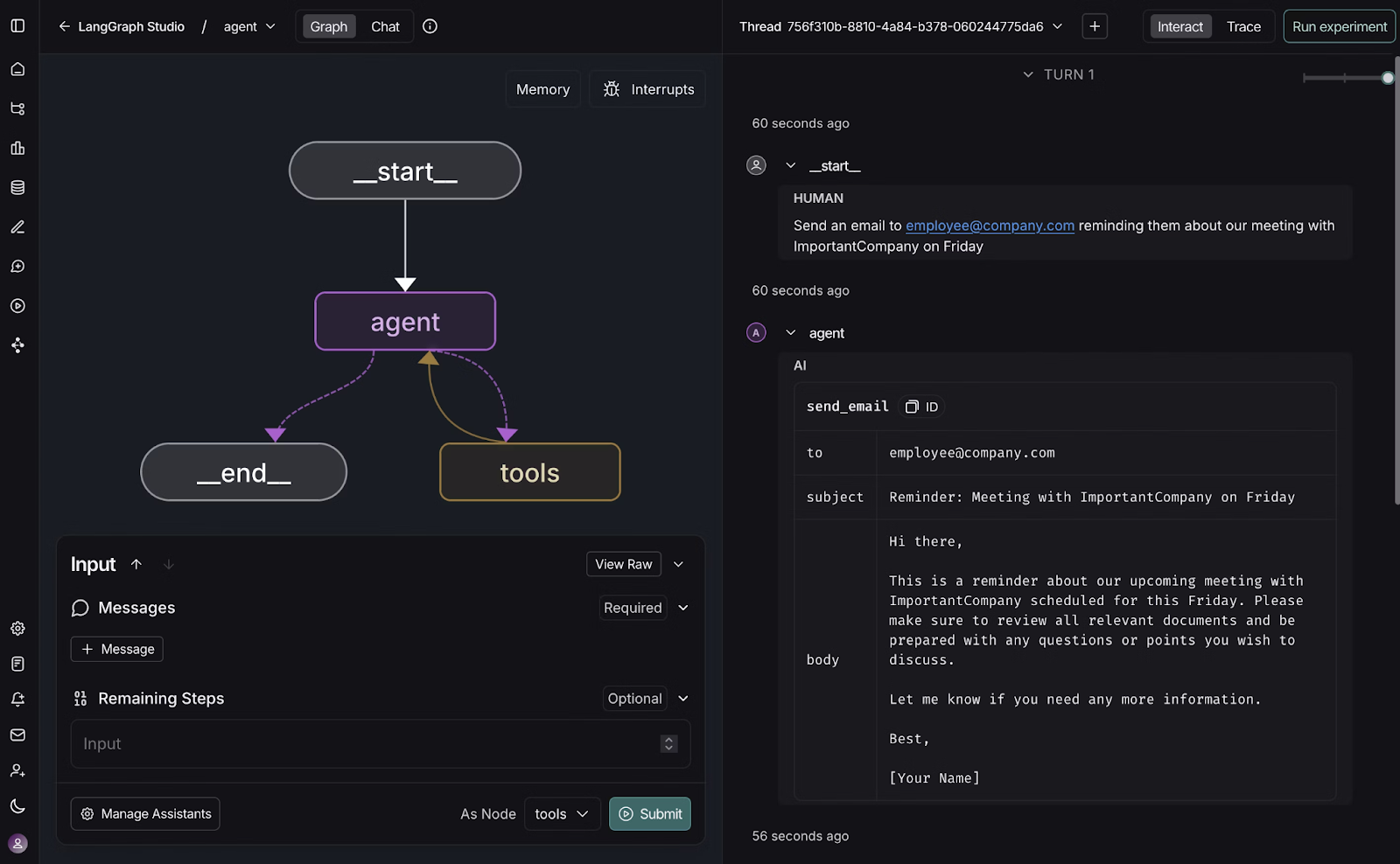

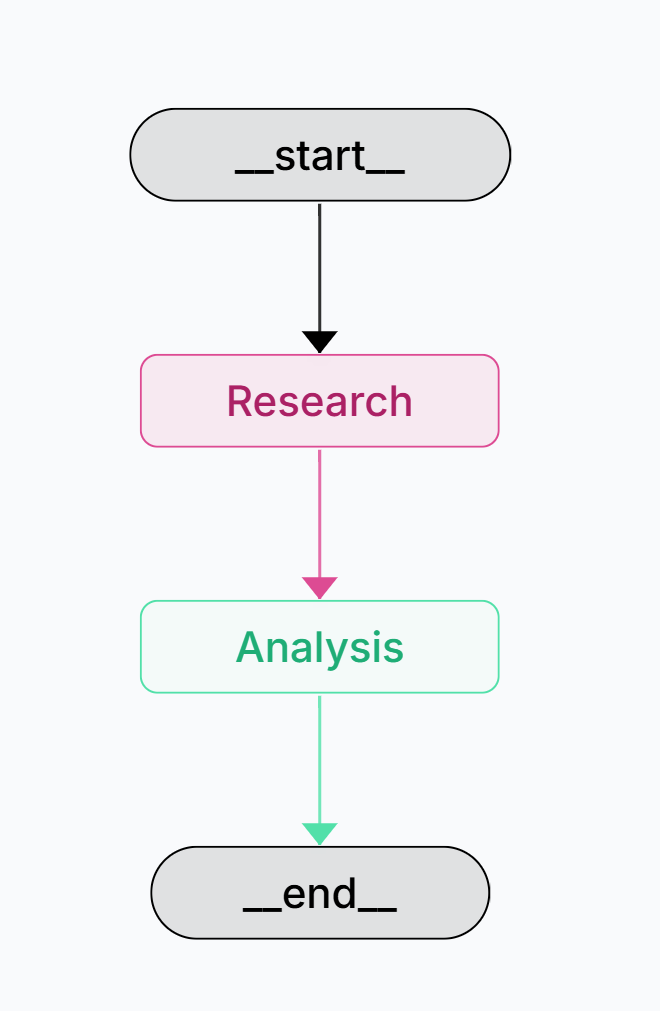

LangGraph takes a different approach by representing workflows as graphs of nodes. Each node can be an agent, a function, or a decision point. The system supports conditional branching, enabling dynamic adaptation based on results. This makes LangGraph powerful for orchestrating complex decision-making pipelines where tasks depend on prior outcomes.

LangGraph Branching Architecture

LangGraph also provides strong memory capabilities, which operate as part of the graph state. Short-term memory persists within active threads and can be checkpointed at any node, allowing workflows to pause and resume.

Long-term memory stores user-specific or application-level data that persists across different workflow executions. This state-based approach makes it particularly powerful for complex workflows where decisions at one node influence behavior at later nodes, even across different sessions.
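The pause-and-resume behavior described above can be illustrated with a small, framework-independent sketch. This is not LangGraph's checkpointer API: the `run` function, the `WORKFLOW_NODES` list, and the in-memory `checkpoints` dictionary are hypothetical, and they only show the idea of saving state after each node under a thread ID.

```python
import copy


# Each node takes the state dict and returns an updated copy.
def research(state):
    return {**state, "notes": f"notes on {state['query']}"}


def analyze(state):
    return {**state, "insights": f"insights from {state['notes']}"}


WORKFLOW_NODES = [("research", research), ("analyze", analyze)]
checkpoints = {}  # thread_id -> (index of the next node to run, saved state)


def run(thread_id, initial_state=None, pause_before=None):
    """Run nodes in order, checkpointing after each; resume if a checkpoint exists."""
    if thread_id not in checkpoints:
        checkpoints[thread_id] = (0, copy.deepcopy(initial_state or {}))
    next_index, state = checkpoints[thread_id]
    for index in range(next_index, len(WORKFLOW_NODES)):
        name, node = WORKFLOW_NODES[index]
        if name == pause_before:
            return state  # paused; the checkpoint already points at this node
        state = node(state)
        checkpoints[thread_id] = (index + 1, copy.deepcopy(state))
    return state


# First call stops before the analysis step, for example to wait for a human.
paused = run("thread-1", {"query": "agent frameworks"}, pause_before="analyze")
print(paused)

# Second call with the same thread ID picks up where the first one stopped.
resumed = run("thread-1")
print(resumed)
```

Swapping the dictionary for a database-backed store is what turns this short-term, per-thread state into something that survives restarts.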

LangGraph Studio, its visual development environment, further simplifies building and debugging these workflows.

Here is a LangGraph snippet that sets up a workflow with two agents: a researcher node that gathers information and an analysis node that interprets the results.

```python
import os
from typing import Annotated

from typing_extensions import TypedDict
from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages

# Environment variables
os.environ["OPENAI_API_KEY"] = "your-api-key"

llm = ChatOpenAI(
    model="gpt-4o-mini",
)


# Define the graph state; add_messages appends new messages instead of overwriting
class State(TypedDict):
    messages: Annotated[list, add_messages]


# Define nodes powered by the LLM
def research_node(state: State):
    """Research agent: collects information using the LLM."""
    prompt = "You are a researcher. Collect detailed information.\nUser query: "
    user_query = state["messages"][-1].content
    response = llm.invoke(prompt + user_query)
    return {"messages": [response]}


def analysis_node(state: State):
    """Analysis agent: analyzes research results with the LLM."""
    research_output = state["messages"][-1].content
    prompt = "You are an analyst. Provide insights based on this research:\n"
    response = llm.invoke(prompt + research_output)
    return {"messages": [response]}


# Build the graph
graph_builder = StateGraph(State)

# Add nodes
graph_builder.add_node("Research", research_node)
graph_builder.add_node("Analysis", analysis_node)

# Add edges (workflow order)
graph_builder.add_edge(START, "Research")
graph_builder.add_edge("Research", "Analysis")
graph_builder.add_edge("Analysis", END)

# Compile the graph
graph = graph_builder.compile()

# Run the workflow
if __name__ == "__main__":
    initial_messages = [HumanMessage(content="Please draft a comparison of CrewAI, LangGraph, and AutoGen.")]
    result = graph.invoke({"messages": initial_messages})

    print(f"Research and Analysis Complete. {result}")
    print("\nFinal Report:")
    for msg in result["messages"]:
        print(f"{type(msg).__name__}: {msg.content}")
```

Research-Analysis Workflow

In this workflow, the research node collects raw information about the user’s query, and the analysis node builds insights based on those results. The edges define the order of execution, so once research is complete, the graph automatically routes the output to the analyst.

This illustrates how LangGraph formalizes workflows as modular graphs, making it easier to design pipelines where each step depends on the outcome of the previous one. Unlike CrewAI’s role metaphor or AutoGen’s free-flowing conversations, LangGraph gives you fine-grained structural control over how agents collaborate.

While LangGraph emphasizes structural workflows, AutoGen instead focuses on conversation as the organizing principle.

AutoGen Framework Deep Dive

AutoGen emphasizes conversation. It models workflows as dialogues between agents, and sometimes between agents and humans. This conversational approach is particularly useful for tasks that require iterative reasoning, negotiation, or oversight. For example, one agent may propose a solution, another may critique it, and a human supervisor may step in to guide the discussion.

AutoGen's memory is conversation-centric, storing the full dialogue history to maintain context across multi-turn interactions. This message-based memory allows agents to reference earlier parts of the conversation and build upon previous exchanges.

While simpler than the structured memory of other frameworks, this approach excels in scenarios where the natural flow of conversation drives the workflow, and agents need to maintain awareness of the entire dialogue context.

The RoundRobinGroupChat capability is especially powerful. It allows multiple agents to collaborate by taking turns, each response broadcast to all participants so that the entire team maintains a consistent context. This design makes it easy to implement the reflection pattern, where one agent creates a draft and another evaluates or critiques it.

Here is an AutoGen snippet that sets up a group chat with a writer, a reviewer, and a user proxy:

```python
import asyncio

from autogen_agentchat.agents import AssistantAgent, UserProxyAgent
from autogen_agentchat.conditions import MaxMessageTermination, TextMentionTermination
from autogen_agentchat.messages import TextMessage
from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_agentchat.ui import Console
from autogen_ext.models.openai import OpenAIChatCompletionClient


async def simple_user_agent():
    # Model client
    model_client = OpenAIChatCompletionClient(model="gpt-4o")

    # Writer agent
    writer = AssistantAgent(
        "Writer",
        model_client=model_client,
        system_message=(
            "You are a professional writer. "
            "Always respond with a detailed draft when asked. "
        ),
    )

    # Reviewer agent
    reviewer = AssistantAgent(
        "Reviewer",
        model_client=model_client,
        system_message=(
            "You are a reviewer who critiques drafts and suggests improvements. "
        ),
    )

    # User proxy agent (prompts the human for input on its turn)
    user_proxy = UserProxyAgent("User")

    # Termination conditions: stop after 8 messages or when "TERMINATE" appears
    termination = MaxMessageTermination(max_messages=8)
    text_termination = TextMentionTermination("TERMINATE")

    # Group chat with Writer, Reviewer, and User
    team = RoundRobinGroupChat(
        [writer, reviewer, user_proxy],
        termination_condition=termination | text_termination,
        max_turns=8,
    )

    # Run the group chat and stream messages to the console
    await Console(
        team.run_stream(
            task=TextMessage(
                source=user_proxy.name,
                content="Please draft a comparison of CrewAI, LangGraph, and AutoGen.",
            ),
        )
    )


if __name__ == "__main__":
    asyncio.run(simple_user_agent())
```

In this workflow, the writer agent generates a draft, the reviewer agent evaluates the draft and provides feedback, and the user proxy agent represents the human participant.

The RoundRobinGroupChat ensures that each participant takes turns in order, keeping the discussion structured. Termination conditions, such as a message limit or a specific keyword, give you control over when the chat ends. This setup illustrates AutoGen’s conversational strength: agents collaborate dynamically, while humans can step in at any stage for oversight or guidance.
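To see the turn-taking and termination logic in isolation, here is a framework-independent sketch of a round-robin chat. It does not use AutoGen; `round_robin_chat`, the scripted `writer` and `reviewer` functions, and the approval rule are hypothetical and exist only to make the control flow visible without calling a model.

```python
from itertools import cycle


def writer(history):
    # Produce a new numbered draft each time the writer speaks.
    drafts = sum(1 for speaker, _ in history if speaker == "Writer")
    return f"Draft v{drafts + 1}"


def reviewer(history):
    # Approve the second draft; otherwise ask for a revision.
    last_draft = history[-1][1]
    if last_draft == "Draft v2":
        return "Looks good. TERMINATE"
    return f"Please revise {last_draft}"


def round_robin_chat(agents, task, max_messages=8, stop_word="TERMINATE"):
    """Agents take turns in a fixed order; every reply is appended to a shared history."""
    history = [("User", task)]
    for name, respond in cycle(agents):
        if len(history) >= max_messages:
            break  # message limit reached
        reply = respond(history)
        history.append((name, reply))
        if stop_word in reply:
            break  # keyword termination
    return history


chat = round_robin_chat([("Writer", writer), ("Reviewer", reviewer)], "Draft a comparison.")
for speaker, text in chat:
    print(f"{speaker}: {text}")
```

Because every agent reads the same shared history, the reviewer always sees the latest draft, which is the essence of the reflection pattern.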

Use Case Scenarios and Applications

When thinking about where each framework fits best, I often map them to the nature of the problem. If I need simple workflow automation where roles are clear, for example, a “data fetcher” agent handing results to a “writer” agent, CrewAI is the most natural fit. Its organizational metaphor aligns perfectly with such scenarios.

For complex decision-making pipelines that require branching logic, LangGraph excels. Imagine orchestrating a multi-step customer support system where the path depends on conditions such as issue type or escalation level. LangGraph’s graph-based design is ideal for these adaptive workflows.
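The customer support scenario can be sketched as a tiny graph with conditional routing. This is a plain-Python illustration rather than LangGraph code: the node functions, the `SUPPORT_ROUTES` table, the keyword rule in `classify`, and the `failed_attempts` threshold are all assumptions chosen for the example.

```python
def classify(state):
    # Naive keyword rule standing in for an LLM classifier.
    text = state["message"].lower()
    issue = "billing" if "refund" in text or "invoice" in text else "technical"
    return {**state, "issue_type": issue}


def billing(state):
    return {**state, "reply": "Routing to billing team."}


def technical(state):
    escalate = state.get("failed_attempts", 0) >= 2
    return {**state, "reply": "Trying automated fix.", "escalate": escalate}


def human_escalation(state):
    return {**state, "reply": "Escalated to a human engineer."}


SUPPORT_NODES = {
    "classify": classify,
    "billing": billing,
    "technical": technical,
    "human_escalation": human_escalation,
}

# Each route inspects the updated state and names the next node (None ends the run).
SUPPORT_ROUTES = {
    "classify": lambda s: s["issue_type"],
    "billing": lambda s: None,
    "technical": lambda s: "human_escalation" if s["escalate"] else None,
    "human_escalation": lambda s: None,
}


def run_graph(state, entry="classify"):
    """Walk the graph from the entry node, following conditional routes."""
    current, path = entry, []
    while current is not None:
        path.append(current)
        state = SUPPORT_NODES[current](state)
        current = SUPPORT_ROUTES[current](state)
    return state, path


final_state, path = run_graph({"message": "App crashes on login", "failed_attempts": 3})
print(path)
print(final_state["reply"])
```

The path taken depends entirely on the state produced by earlier nodes, which is the property that makes graph-based orchestration a good fit for this kind of workflow.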

Finally, for human-in-the-loop systems, AutoGen shines. If I am building a collaborative research assistant where agents brainstorm together and a human supervises, the conversational architecture of AutoGen feels natural and effective.

Technical Implementation Considerations

From an implementation perspective, performance, scalability, and integration are crucial. CrewAI scales through horizontal agent replication and task parallelization within role hierarchies. LangGraph scales through distributed graph execution and parallel node processing. AutoGen scales through conversation sharding and distributed chat management, though this presents unique challenges for maintaining conversation context.

Integration is another factor. LangGraph benefits from the broader LangChain ecosystem, while AutoGen focuses on conversation interfaces and may require additional abstraction layers for traditional API integration. CrewAI integrates well with existing business systems through its structured approach to role-based workflows.

Enterprise and Production Readiness

In enterprise contexts, licensing and compliance cannot be ignored. CrewAI provides commercial licensing with enterprise support options. LangGraph, backed by LangChain, offers enterprise-grade support and consulting services. AutoGen provides Microsoft-backed support through its integration with Azure AI services.

Equally important is deployment flexibility. CrewAI can be deployed on-premises or in the cloud, making it suitable for organizations with strict data governance requirements.

LangGraph’s architecture integrates smoothly with existing enterprise systems and APIs, and it provides two complementary services: LangGraph Studio for workflow design and debugging, and the LangGraph Platform for managing deployments at scale.

AutoGen benefits from its native ties to Microsoft, making it a natural choice for teams already invested in the Azure ecosystem. It also offers AutoGen Studio, a low-code interface for rapid prototyping that is still under development.

Ultimately, each framework provides a different deployment pathway, and the right option depends on whether an organization prioritizes local control, modular integration, or seamless cloud adoption.

With these enterprise considerations in mind, let's summarize our findings and provide guidance for framework selection.

Conclusion

Throughout this tutorial, I walked you through the foundations of multi-agent AI frameworks and explored three distinct approaches: CrewAI, LangGraph, and AutoGen. CrewAI stands out for role-based collaboration, LangGraph shines in graph-driven orchestration, and AutoGen thrives in conversational, human-in-the-loop systems.

The key takeaway is that no single framework is universally better. The choice depends on your project’s needs. If you value structured roles, CrewAI may be the right fit. If your workflow requires adaptive branching, LangGraph will likely serve you well. If you want agents to collaborate conversationally, AutoGen provides a flexible environment.

I encourage you to experiment with each framework. The best way to learn their strengths and weaknesses is to build small prototypes and see how they behave with your specific tasks. By doing so, you will discover which framework feels most natural for your workflows and which aligns best with your long-term goals.


CrewAI vs LangGraph vs AutoGen FAQs

How does CrewAI handle task delegation compared to LangGraph and AutoGen?

CrewAI uses role-based task delegation, mapping agents to responsibilities. LangGraph uses workflow graphs, while AutoGen relies on conversational turns.

What are the main differences in agent collaboration between CrewAI and LangGraph?

CrewAI collaborates via role assignments, while LangGraph connects agents as nodes in a workflow graph with conditional branching and structured state.

How does AutoGen's approach to agent-to-agent chat differ from CrewAI's role-based design?

AutoGen emphasizes conversational group chats where agents critique and build on each other’s responses. CrewAI enforces structured, role-based exchanges.

Which framework offers the best support for human-in-the-loop systems?

All three frameworks support human involvement, but CrewAI integrates checkpoints in workflows, LangGraph provides pause/resume hooks, and AutoGen embeds humans directly in conversations.

How do the memory management capabilities of LangGraph compare to those of CrewAI and AutoGen?

LangGraph provides state-based memory with checkpointing and persistence. CrewAI uses structured, role-based memory with RAG, while AutoGen stores conversational history.

As the Founder of Martin Data Solutions and a Freelance Data Scientist, ML and AI Engineer, I bring a diverse portfolio in Regression, Classification, NLP, LLM, RAG, Neural Networks, Ensemble Methods, and Computer Vision.

- Successfully developed several end-to-end ML projects, including data cleaning, analytics, modeling, and deployment on AWS and GCP, delivering impactful and scalable solutions.

- Built interactive and scalable web applications using Streamlit and Gradio for diverse industry use cases.

- Taught and mentored students in data science and analytics, fostering their professional growth through personalized learning approaches.

- Designed course content for retrieval-augmented generation (RAG) applications tailored to enterprise requirements.

- Authored high-impact AI & ML technical blogs, covering topics like MLOps, vector databases, and LLMs, achieving significant engagement.

In each project I take on, I make sure to apply up-to-date practices in software engineering and DevOps, like CI/CD, code linting, formatting, model monitoring, experiment tracking, and robust error handling. I’m committed to delivering complete solutions, turning data insights into practical strategies that help businesses grow and make the most out of data science, machine learning, and AI.