
A practical way to get the most out of the Gemini model is to combine the Google Gen AI API with the Gemini Fullstack LangGraph Quickstart, a full-stack AI research assistant with a React frontend and a LangGraph backend.

In this tutorial, you will learn how to set up the application from scratch: you will install both the frontend and backend, run them together with a single command, and test the application to ensure everything works smoothly.

Afterward, we will deploy the application with Docker, a more beginner-friendly way to run the app and see the detailed, well-sourced responses the Gemini model can generate.

If you’re looking for other Gemini- or LangGraph-based projects, be sure to read our tutorials Gemini 2.5 Pro API: A Guide With Demo Project and LangGraph Tutorial: What Is LangGraph and How to Use It?.

1. Setting Up

If you are using Windows, it is highly recommended to use the Windows Subsystem for Linux (WSL) for a smoother development experience. WSL allows you to run a full Linux environment directly on Windows, making it easy to use Linux tools.

1. Open PowerShell as Administrator and run the command wsl --install. This installs Ubuntu by default and may require a system restart.

2. After restarting, launch Ubuntu from the Start menu or by typing wsl in PowerShell.

3. Install Node.js 18 using NVM (Node Version Manager).

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.7/install.sh | bash

source ~/.bashrc

nvm install 18

nvm use 18

4. Check your installed Node.js version and ensure it is 18 or higher.

node --version

npm --version

The output should look similar to this:

v18.20.8

10.8.2

2. Installing Frontend and Backend

1. Clone the Gemini Fullstack LangGraph Quickstart repository and navigate into the project directory:

git clone https://github.com/google-gemini/gemini-fullstack-langgraph-quickstart.git

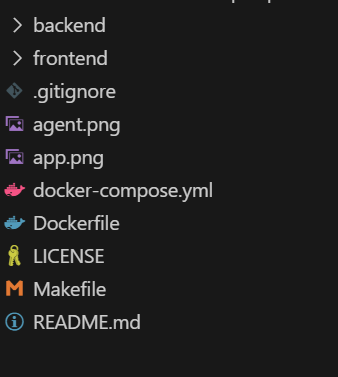

cd gemini-fullstack-langgraph-quickstart

Your directory structure should now look like this:

2. Go to Google AI Studio and generate a Gemini API key.

Source: Get API key | Google AI Studio

3. Navigate to the backend directory and create the .env file with the Gemini API key. Replace “YOUR_ACTUAL_API_KEY” with the key you generated.

4. Install the backend's Python packages with pip.

cd backend

echo 'GEMINI_API_KEY="YOUR_ACTUAL_API_KEY"' > .env

pip install .

5. Return to the project root and navigate to the frontend directory. Install the frontend dependencies with npm.

cd ..

cd frontend

npm install

3. Running the Application

Once you have installed all dependencies, you can start both the frontend and backend servers in development mode from the project root.

1. From the root directory of your project, run:

make dev

This command launches both the frontend (using Vite) and the backend (using LangGraph/FastAPI) in development mode.

2. The frontend is served by Vite, a modern build tool with a fast development server. Its startup output looks like this:

> frontend@0.0.0 dev

> vite

VITE v6.3.4 ready in 392 ms

➜ Local: http://localhost:5173/app/

➜ Network: use --host to expose

3. The backend server will start and display information like:

INFO:langgraph_api.cli:

Welcome to

╦ ┌─┐┌┐┌┌─┐╔═╗┬─┐┌─┐┌─┐┬ ┬

║ ├─┤││││ ┬║ ╦├┬┘├─┤├─┘├─┤

╩═╝┴ ┴┘└┘└─┘╚═╝┴└─┴ ┴┴ ┴ ┴

- 🚀 API: http://127.0.0.1:2024

- 🎨 Studio UI: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024

- 📚 API Docs: http://127.0.0.1:2024/docs

This in-memory server is designed for development and testing.

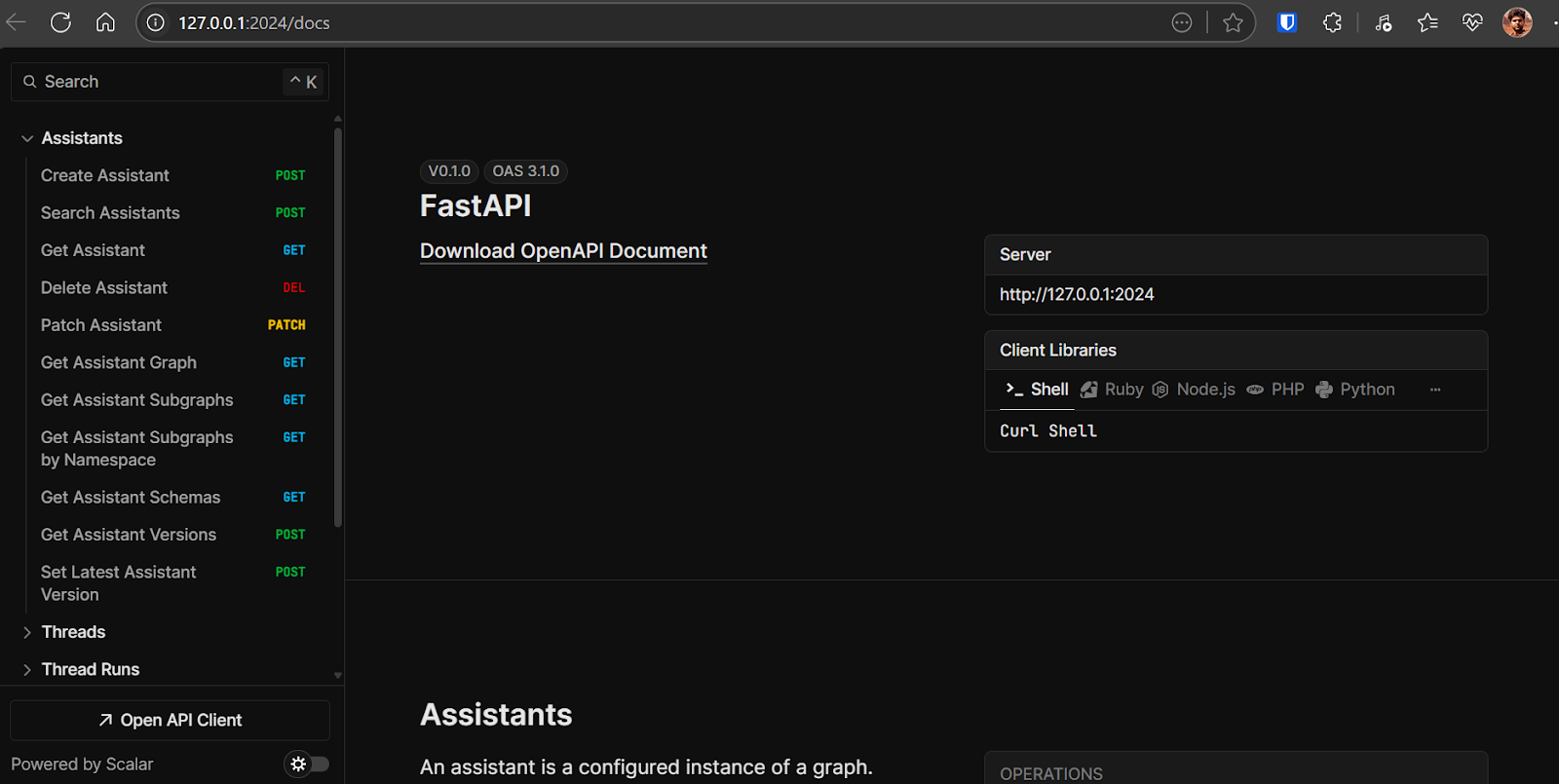

For production use, please use LangGraph Cloud.- Backend API: http://127.0.0.1:2024

- API Documentation: http://127.0.0.1:2024/docsYou can use this OpenAPI documentation to test backend endpoints directly from your browser.

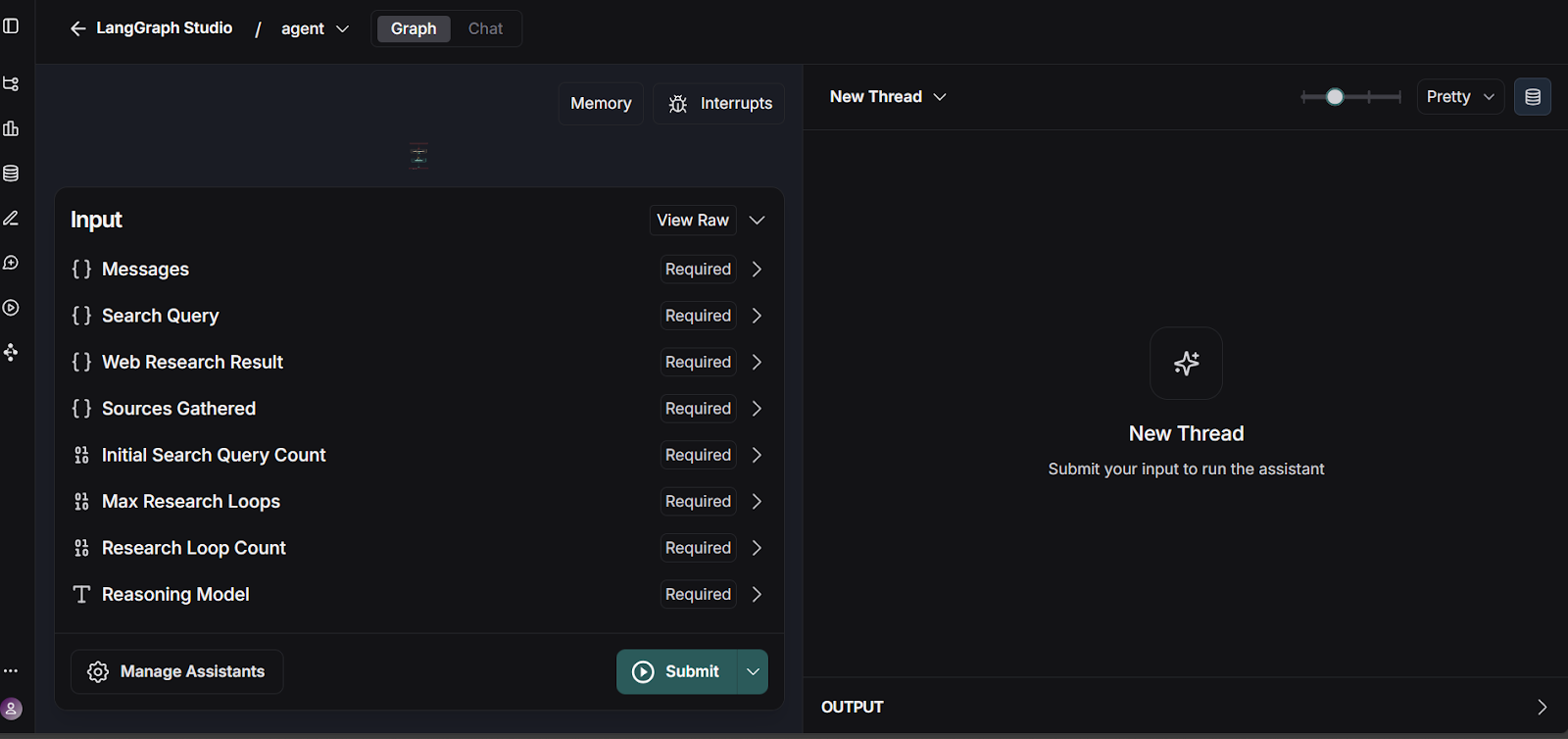

- LangGraph Studio UI: https://smith.langchain.com/studio/?baseUrl=http://127.0.0.1:2024

This dashboard allows you to visualize and test your LangGraph workflows.

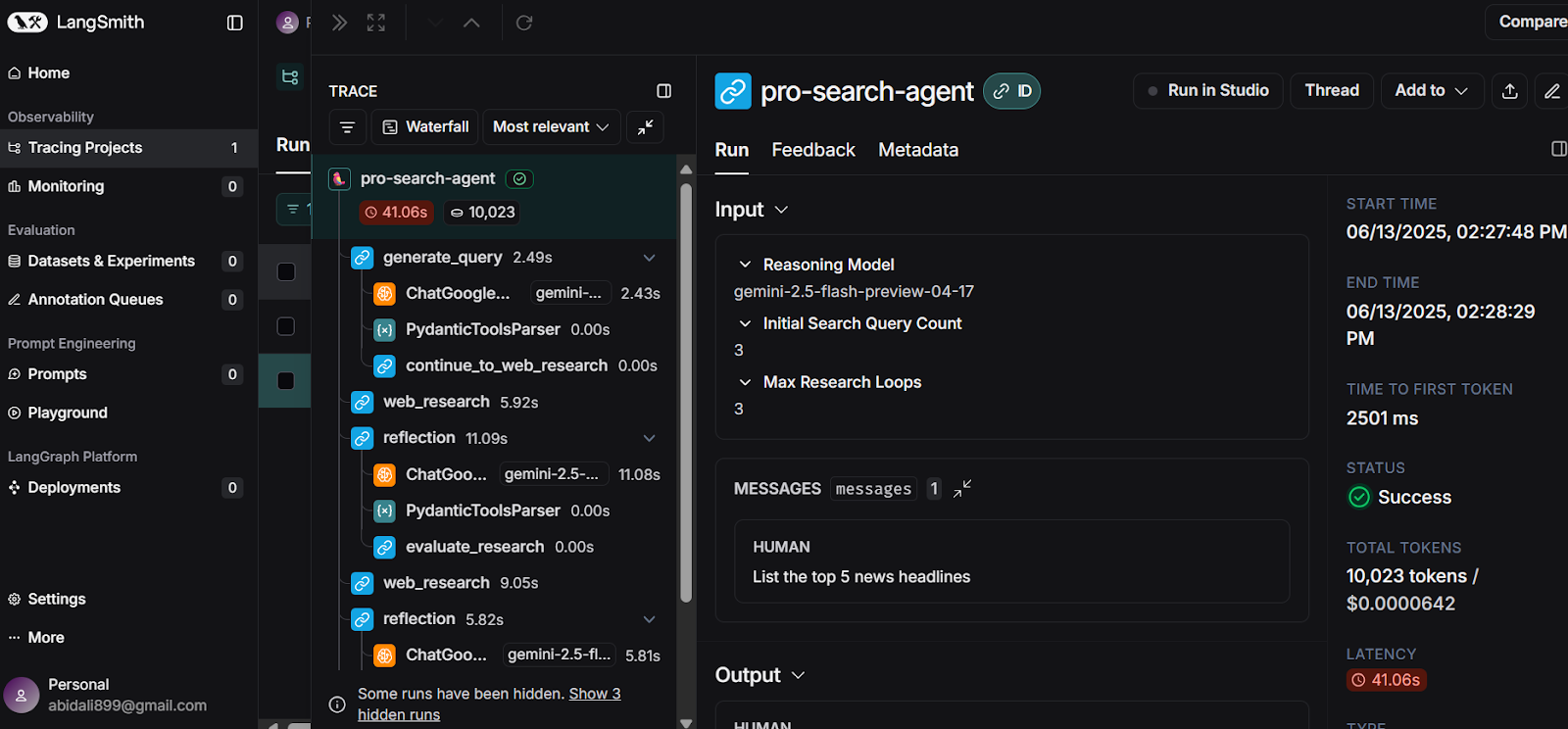

4. You can test the LangGraph workflow by going to the LangGraph Studio UI.

Source: smith.langchain.com

The backend API is located at: http://127.0.0.1:2024. API documentation can be found at: http://127.0.0.1:2024/docs. You can test each backend endpoint using the OpenAPI documentation.

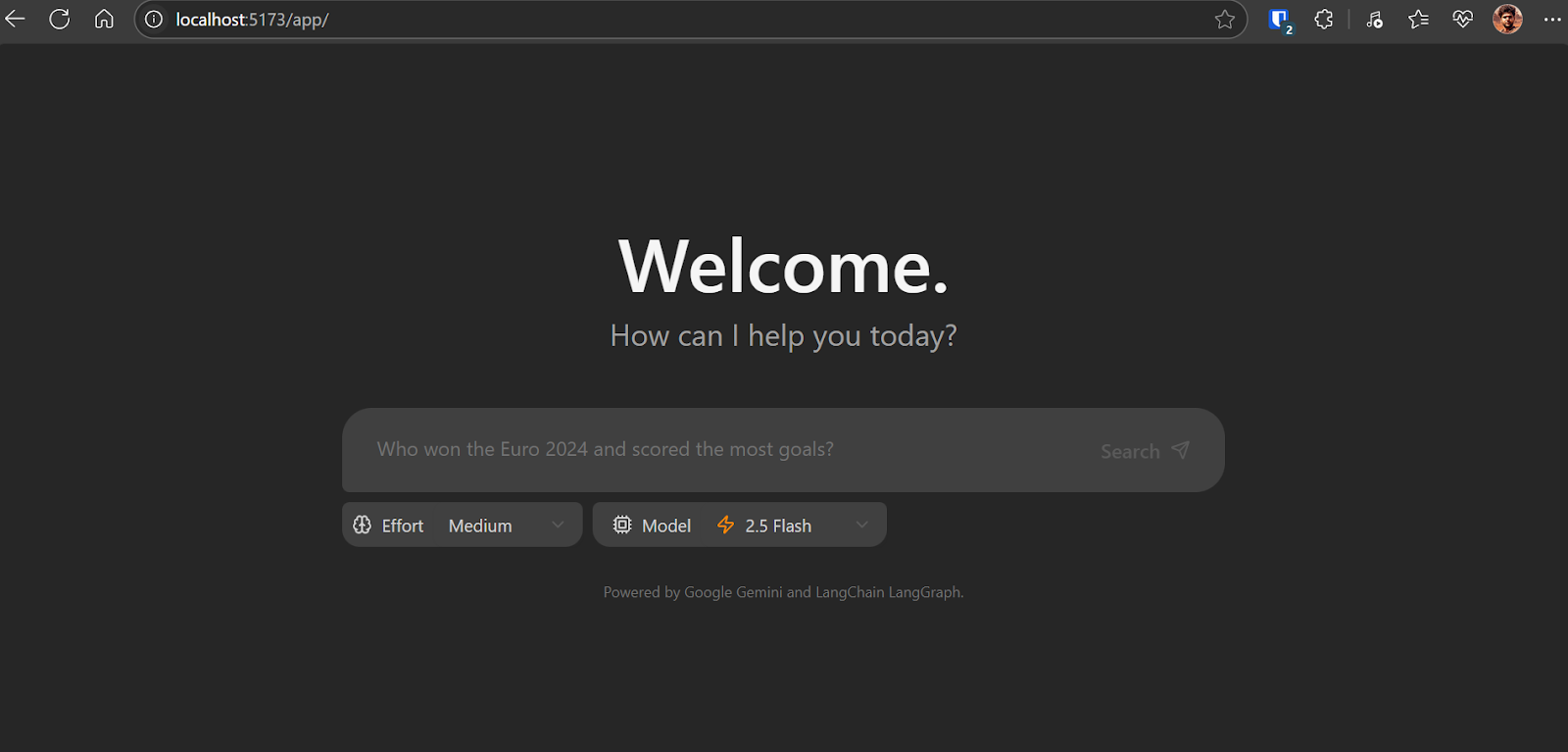

Open http://localhost:5173/app/ in your browser to access the chat interface. It works much like ChatGPT: type a question and the agent starts researching it.
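Before opening the browser, you can confirm that both dev servers are actually listening. The Python sketch below uses only the standard library and assumes the default URLs printed in the startup logs (Vite on port 5173, the LangGraph API on port 2024). It is a convenience check of our own, not part of the quickstart repository:

```python
"""Reachability check for the local dev servers started by `make dev`.

Assumption: the URLs below are the defaults from the startup logs
(Vite on 5173, LangGraph API on 2024). Adjust them if yours differ.
"""
import urllib.error
import urllib.request

# Default URLs taken from the startup output (assumed unchanged).
ENDPOINTS = {
    "frontend": "http://localhost:5173/app/",
    "backend API docs": "http://127.0.0.1:2024/docs",
}


def is_up(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP GET to `url` completes with a 2xx/3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status (HTTPError).
        return False


if __name__ == "__main__":
    for name, url in ENDPOINTS.items():
        status = "OK" if is_up(url) else "NOT REACHABLE"
        print(f"{name:18} {url:40} {status}")
```

If a line reports NOT REACHABLE, check the make dev terminal for errors before troubleshooting the browser.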

How the chat application works in the backend:

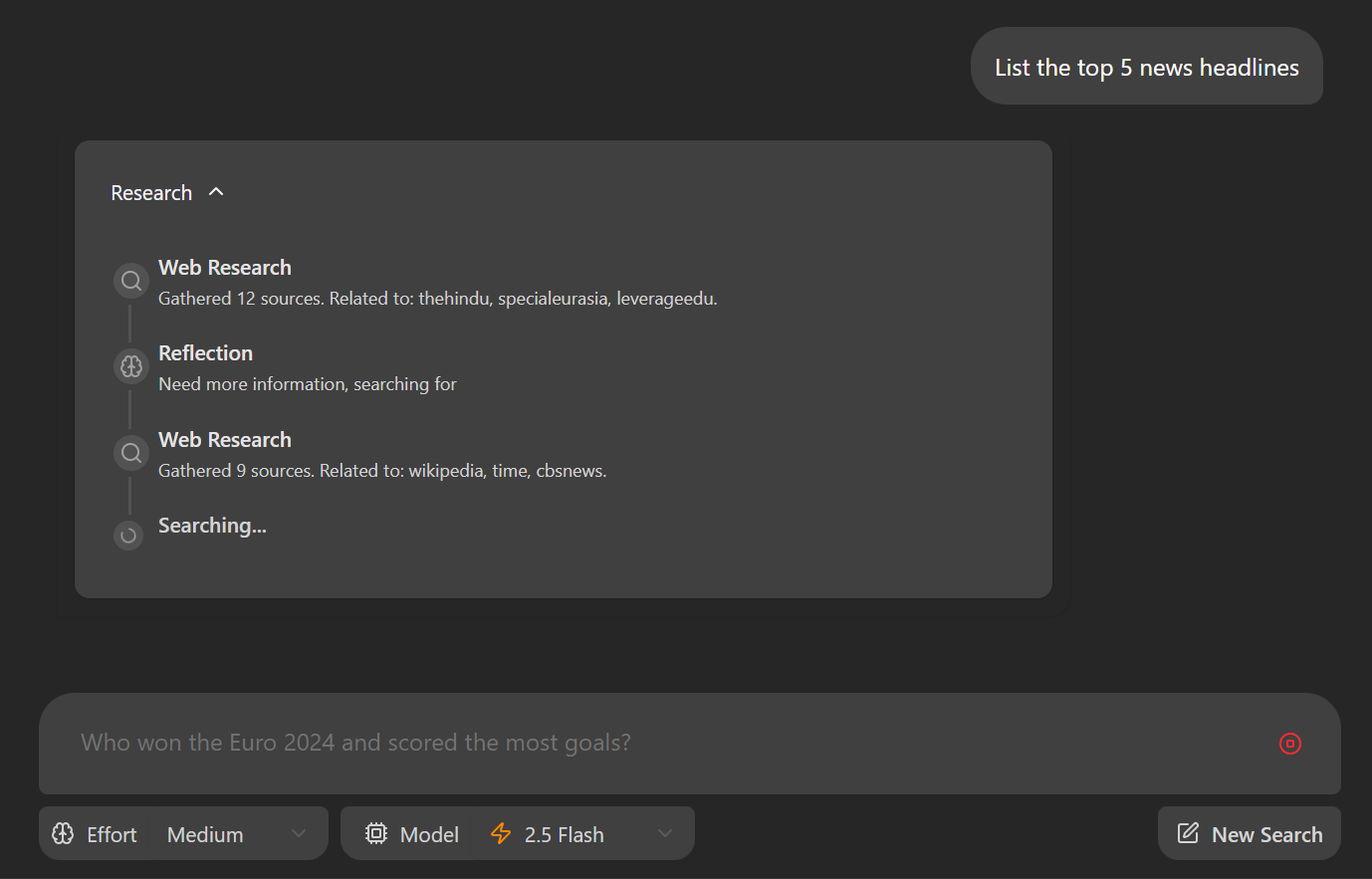

- Based on the user's input, it generates a set of initial search queries using a Gemini model.

- For each query, it uses the Gemini model with the Google Search API to find relevant web pages.

- The agent analyzes search results to identify knowledge gaps, using the Gemini model for reflection.

- If gaps are found, it generates follow-up queries and repeats the research and reflection steps (up to a set limit).

- Once sufficient information is gathered, the agent synthesizes a coherent answer with citations.
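To make that control flow concrete, here is a simplified Python sketch of the loop. It is not the project's actual LangGraph code: the callables passed in (generate_queries, web_search, reflect, synthesize) and the Reflection class are hypothetical stand-ins for the Gemini and Google Search calls, and max_loops plays the role of the "set limit" on research rounds:

```python
"""Toy model of the research loop: queries -> search -> reflect -> answer.

All callables are hypothetical stand-ins; the real backend implements
these steps as LangGraph nodes that call Gemini and Google Search.
"""
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Reflection:
    """Hypothetical result of the reflection step."""
    is_sufficient: bool
    follow_up_queries: list[str] = field(default_factory=list)


def research(
    question: str,
    generate_queries: Callable[[str], list[str]],
    web_search: Callable[[str], list[str]],
    reflect: Callable[[str, list[str]], Reflection],
    synthesize: Callable[[str, list[str]], str],
    max_loops: int = 2,
) -> str:
    queries = generate_queries(question)            # 1. initial search queries
    findings: list[str] = []
    for loop in range(1, max_loops + 1):
        for query in queries:                       # 2. search each query
            findings.extend(web_search(query))
        reflection = reflect(question, findings)    # 3. reflect: any gaps left?
        if reflection.is_sufficient or loop == max_loops:
            break                                   # enough info, or limit hit
        queries = reflection.follow_up_queries      # 4. research the gaps next
        if not queries:
            break
    return synthesize(question, findings)           # 5. final answer


if __name__ == "__main__":
    answer = research(
        "What is LangGraph?",
        generate_queries=lambda q: [f"{q} overview"],
        web_search=lambda q: [f"result for '{q}' [source: example.com]"],
        reflect=lambda q, found: Reflection(
            is_sufficient=len(found) >= 2, follow_up_queries=["LangGraph docs"]
        ),
        synthesize=lambda q, found: f"{q}: " + " | ".join(found),
    )
    print(answer)
```

Passing the steps in as functions keeps the loop logic testable without an API key; in the real application each step is a graph node and the state is carried between them by LangGraph.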

As this application is still in its early stages, you may encounter some bugs and inconsistencies, particularly in the frontend interface. One frequent issue is inconsistent frontend rendering.

To resolve it, update line 57 in frontend/src/App.tsx so that it falls back to an empty array when follow_up_queries is missing:

- : `Need more information, searching for ${event.reflection.follow_up_queries.join(

+ : `Need more information, searching for ${(event.reflection.follow_up_queries || []).join(

4. Deploying the Application Using Docker

Deploying your application with Docker is straightforward and efficient. Docker allows you to package your app and its dependencies into a single container.

1. Download and install Docker Desktop for your operating system (Windows, macOS, or Linux). You can find the installer and detailed instructions on the official Docker website. Once installed, launch Docker Desktop to ensure it’s running properly.

2. Navigate to the project root directory and run the following command in your terminal to build the Docker image:

docker build -t gemini-fullstack-langgraph -f Dockerfile .

3. To run the application, you will need two API keys:

- Gemini API Key: Obtain this from Google AI Studio.

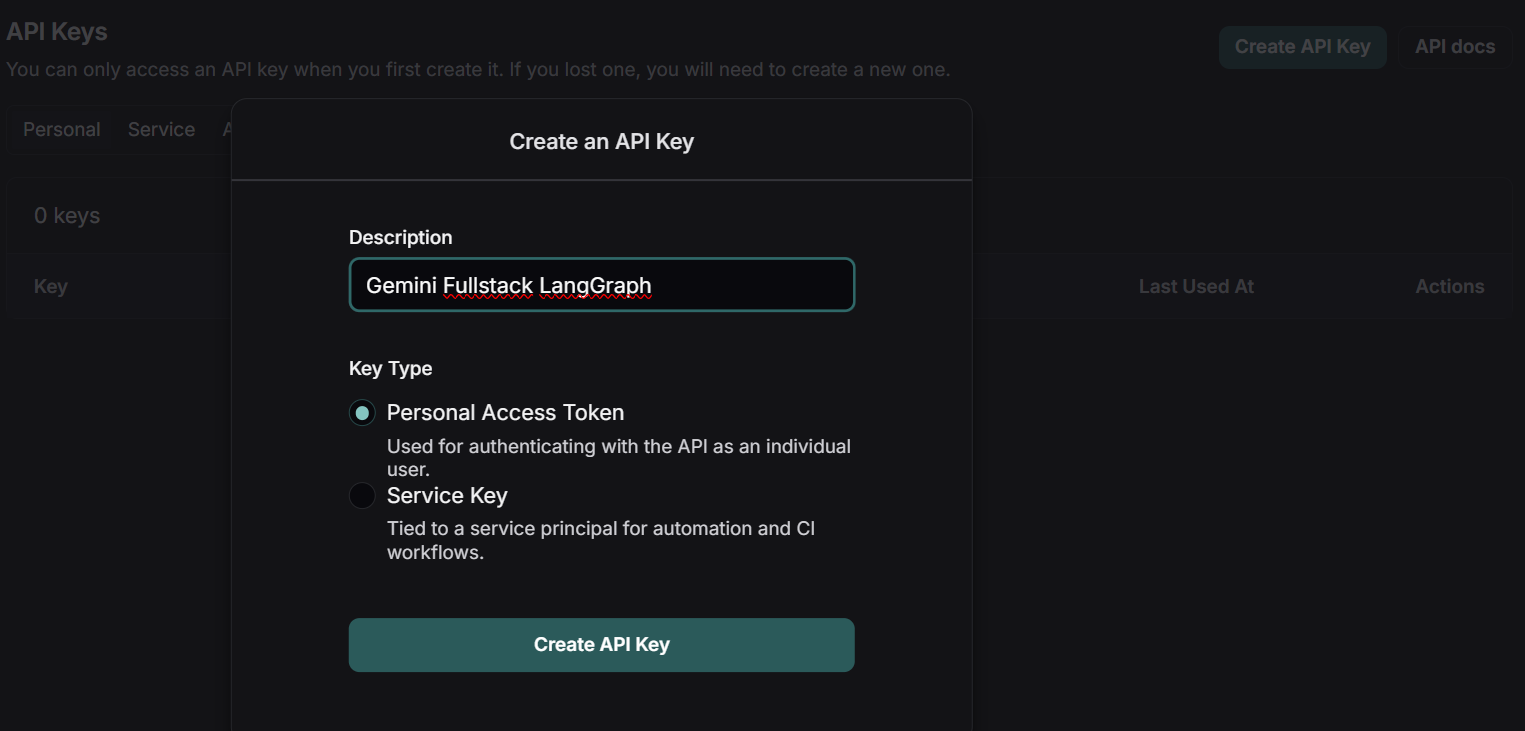

- LangSmith API Key: Visit the official LangSmith website, sign up for a free account, and generate your API key.

Source: LangSmith

4. Start the application using Docker Compose, replacing the placeholders with your actual API keys:

GEMINI_API_KEY=<your_gemini_api_key> LANGSMITH_API_KEY=<your_langsmith_api_key> docker-compose up

5. Access the application:

- Frontend: http://localhost:8123/app/

- API Documentation: http://localhost:8123/docs
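If you start the stack often, a small wrapper can catch a missing key before Compose starts. The Python sketch below is hypothetical (it is not part of the quickstart repository) and assumes the two variable names used in the docker-compose command above; it expects you to have exported both keys in your shell first:

```python
"""Pre-flight check before `docker-compose up` (a hypothetical helper).

Assumption: the required variable names match the docker-compose
command in this tutorial. Nothing is started if either key is blank.
"""
import os
import subprocess
import sys
from collections.abc import Mapping

REQUIRED_KEYS = ("GEMINI_API_KEY", "LANGSMITH_API_KEY")


def missing_keys(env: Mapping[str, str]) -> list[str]:
    """Return the required keys that are absent or blank in `env`."""
    return [key for key in REQUIRED_KEYS if not env.get(key, "").strip()]


def launch() -> int:
    """Run `docker-compose up` if both keys are set; return an exit status."""
    missing = missing_keys(os.environ)
    if missing:
        print("Missing API keys: " + ", ".join(missing), file=sys.stderr)
        return 1
    try:
        # Same command as above; the keys are inherited from this environment.
        return subprocess.run(["docker-compose", "up"]).returncode
    except FileNotFoundError:
        print("docker-compose was not found on PATH", file=sys.stderr)
        return 127


if __name__ == "__main__":
    # Report the outcome; wrap launch() in sys.exit(...) for a shell exit code.
    print(f"launch() returned {launch()}")
```

Checking for blank values as well as missing ones catches the common mistake of exporting a variable with an empty placeholder.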

5. Testing the Application

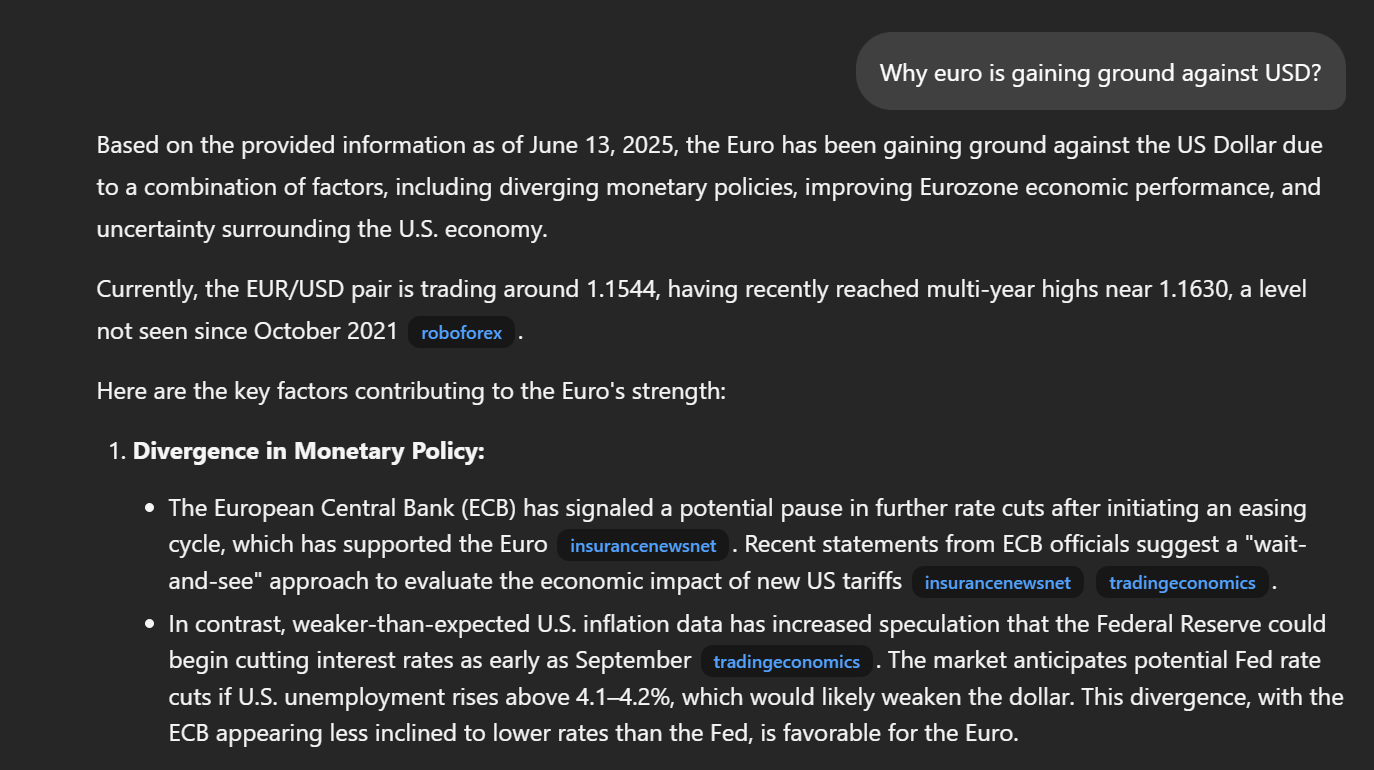

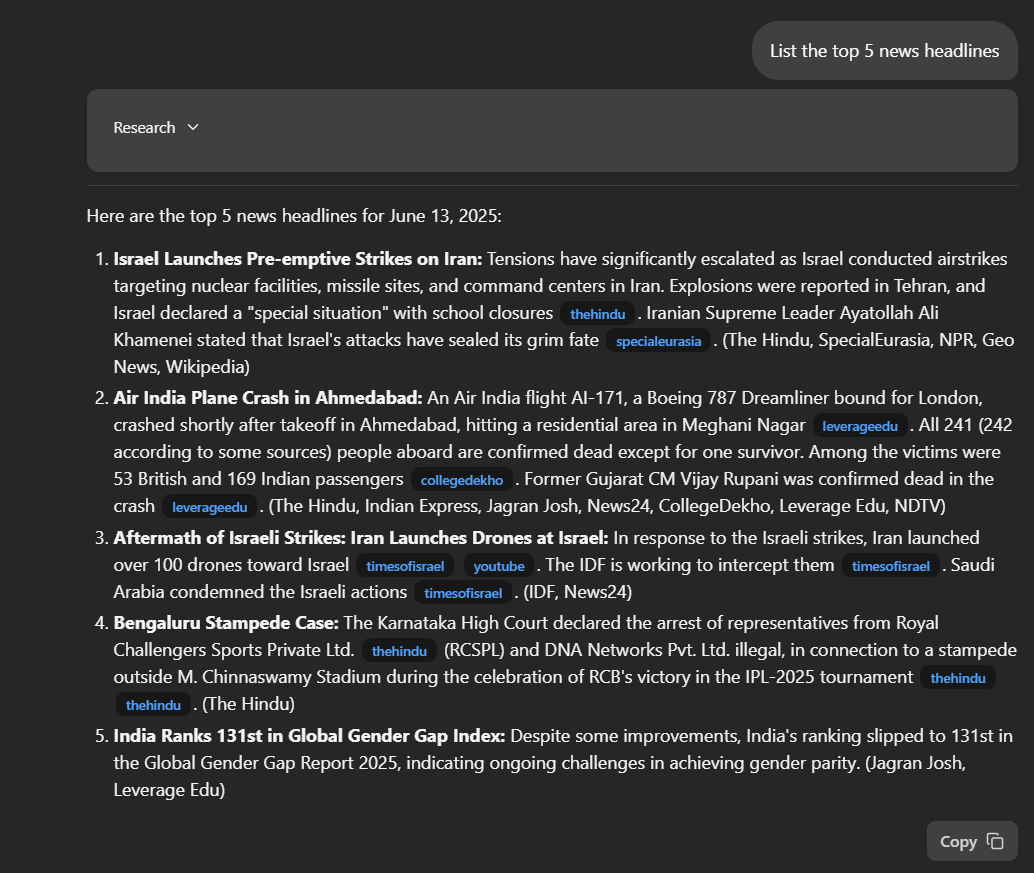

Navigate to http://localhost:8123/app/ in your web browser. Type a question into the input box, for example, “List the top 5 news headlines.”

Once you submit your question, the application will begin its research process. You will see a step-by-step breakdown of how the agent is gathering and analyzing information to answer your query.

The application will provide a detailed response, including direct links to the relevant sources. In our experience, the depth of the answers compares favorably with other deep research tools, and the citations add transparency and context to the results.

For even deeper insight, visit your LangSmith Dashboard. Here, you can review a comprehensive log of every step the agent took to generate your response. This detailed trace helps you understand the agent’s reasoning process and ensures transparency in how answers are constructed.

Source: LangSmith

Conclusion

In less than 10 minutes, you can build the Docker image and launch your own deep research AI application locally, using the free tiers of the Google Gemini and LangSmith APIs.

This guide walked you through a straightforward implementation that shows how capable the Gemini model has become at choosing the right tools and generating detailed, accurate, and up-to-date research responses.

You have learned how to set up the application, install both the backend and frontend, and run everything locally. Additionally, we covered an alternative deployment method using Docker, making it even easier to get started and experience the power of deep research AI.

If you’re looking for your next project, check out our guide on Gemini Diffusion: A Guide With 8 Practical Examples.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.