
Since its launch in November 2022, ChatGPT has captivated global attention. This groundbreaking AI chatbot is adept at interpreting natural language instructions and producing responses that closely resemble human conversation across a vast array of subjects.

The advent of large language models like GPT-4 has opened up new possibilities in the field of natural language processing. With the release of the ChatGPT API by OpenAI, we can now easily integrate conversational AI capabilities into our applications. In this beginner's guide, we will explore what the ChatGPT API offers and how to get started with it using a Python client.


What is GPT?

GPT, short for Generative Pre-trained Transformer, is a series of language models developed by OpenAI. These models, evolving from GPT-1 to GPT-4, are trained on vast text data and can be further refined for specific language tasks. They excel at generating coherent text by predicting subsequent words. ChatGPT, a conversational AI based on these models, interacts in natural language and is trained to be safe, reliable, and informative. At the time of writing, its knowledge extends to March 2023.

What is the ChatGPT API?

An API (Application Programming Interface) allows two software programs to communicate with each other. APIs expose certain functions and data from an application to other applications. For example, the Twitter API allows developers to access user profiles, tweets, trends, etc, from Twitter and build their own applications using that data.

The ChatGPT API provides access to OpenAI’s conversational AI models such as GPT-4, GPT-4 Turbo, and GPT-3.5 Turbo. It lets us use these language models in our own applications through simple API calls, which opens up features that are useful to users. Use cases include:

- Building chatbots and virtual assistants

- Automating customer support workflows

- Generating content like emails, reports, and more

- Answering domain-specific questions
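Under the hood, each of these use cases comes down to an HTTP request sent to OpenAI's REST API. The sketch below builds (but deliberately does not send) such a request with Python's standard library, to show what "communicating through an API" looks like in practice. The helper name `build_chat_request` and the placeholder key are assumptions made for illustration; in real code the official Python client introduced later in this guide handles these details.

```python
import json
import urllib.request

# Chat Completions endpoint of OpenAI's REST API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, user_text: str, model: str = "gpt-4") -> urllib.request.Request:
    """Build (but do not send) the HTTP request behind a chat completion."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# "sk-placeholder" is a dummy value, not a real key.
request = build_chat_request("sk-placeholder", "Draft a polite reply to a refund request.")
print(request.get_method(), request.full_url)
print(json.loads(request.data)["messages"])
# Sending it would be urllib.request.urlopen(request), which needs a valid key.
```

The request body is plain JSON: a model ID plus a list of messages. The same two pieces appear in every example that follows.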

Key Features of the ChatGPT API

Let’s look at some of the reasons you might choose to use the ChatGPT API for your project:

Natural Language Understanding

ChatGPT exhibits strong capabilities in understanding natural language. It is built on OpenAI's GPT-3.5 and GPT-4 model families, which enable it to interpret and process a wide range of natural language inputs, including questions, commands, and statements.

This understanding is facilitated by its training on a vast corpus of text data, making it adept at recognizing various linguistic nuances and generating responses that are accurate and contextually relevant.

Contextual Response Generation

The API excels in generating text that is not only coherent but also contextually relevant. This means that ChatGPT can provide responses that seamlessly align with the flow of conversation, maintaining relevance to the previously provided context.

Its ability to handle long sequences of text allows it to understand the dependencies within a conversation, thus ensuring that the responses are not just accurate but also meaningful within the given context.

Some key capabilities offered by the API include:

- Natural language understanding

- Contextual response generation

- Ability to answer follow-up questions

- Support for conversational workflows
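Follow-up questions work because the application sends the earlier turns along with each new request; the Chat Completions endpoint does not remember previous calls on its own. The following is a minimal sketch of that bookkeeping. The helper names `add_turn` and `ask` are hypothetical, the assistant reply in the demo is a placeholder, and `ask` assumes it is given a client created with the OpenAI Python library described later in this guide.

```python
def add_turn(history, role, content):
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


def ask(client, history, question, model="gpt-4"):
    """Send the full history plus a new question; return (reply, new_history).

    `client` is expected to be an openai.OpenAI instance (not created here).
    """
    history = add_turn(history, "user", question)
    completion = client.chat.completions.create(model=model, messages=history)
    reply = completion.choices[0].message.content
    return reply, add_turn(history, "assistant", reply)


# Building the history by hand shows what the model receives on a follow-up.
history = add_turn([], "user", "What is Machine Learning?")
history = add_turn(history, "assistant", "Machine learning is ...")  # placeholder reply
history = add_turn(history, "user", "Can you give an example of it?")
print([message["role"] for message in history])
```

Because the second question only says "it", the model can resolve the reference only if the first exchange is included in the request.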

How to use the ChatGPT API

The OpenAI Python API library offers a simple and efficient way to interact with OpenAI's REST API from any Python 3.7+ application. This detailed guide aims to help you understand how to use the library effectively.

Installation

To start using the library, install it using pip (the leading ! runs the command from a notebook cell; omit it in a regular terminal):

!pip install openai

Usage

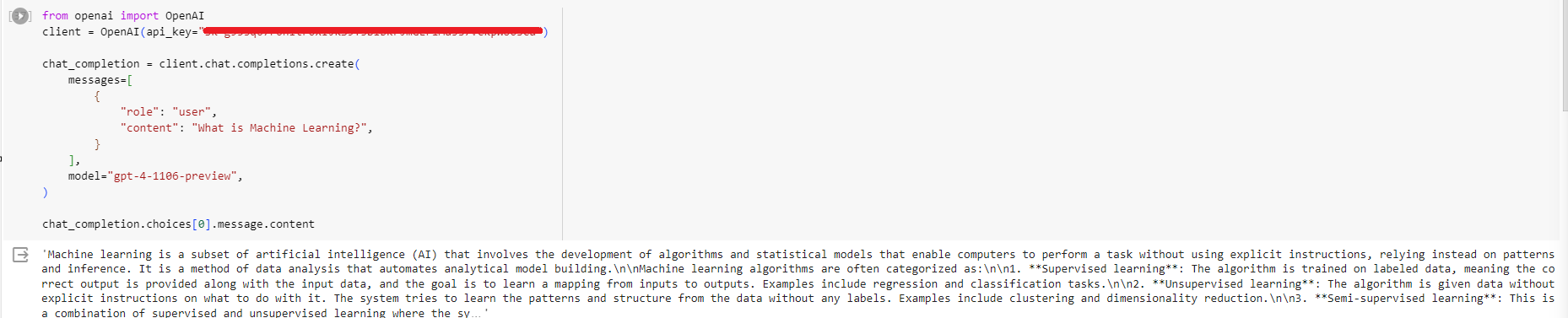

To use the library, you'll need to import it and create an OpenAI client:

from openai import OpenAI

client = OpenAI(api_key="...")

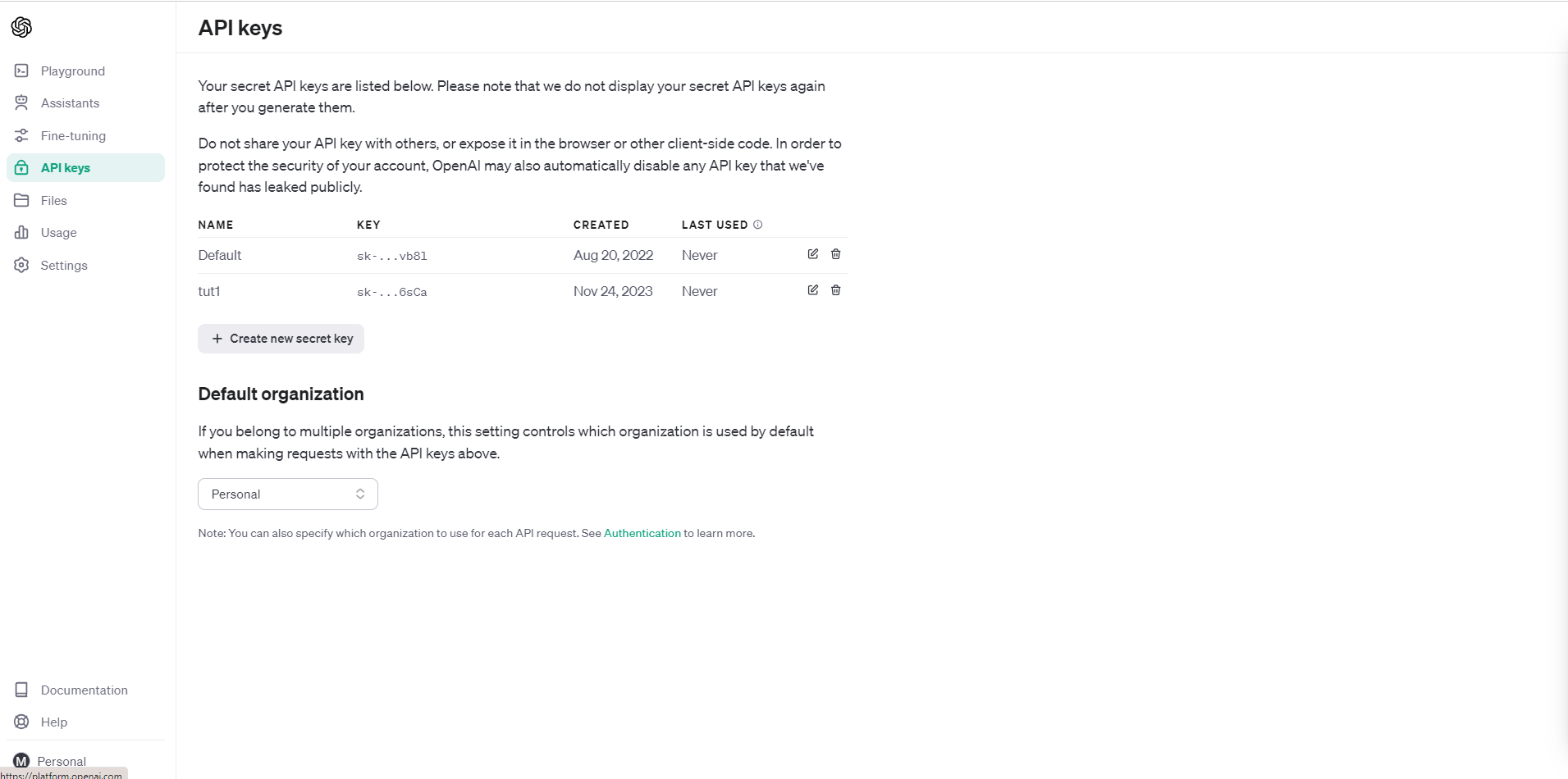

You can generate a key by signing in to platform.openai.com.
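Hard-coding the key in source code makes it easy to leak, so a common alternative is to read it from an environment variable. The sketch below assumes the key is stored in `OPENAI_API_KEY`, which is also the variable the OpenAI client looks up when no `api_key` argument is passed. The helper `load_api_key` is a hypothetical name, and the demo uses an in-memory mapping with a placeholder value rather than a real key.

```python
import os


def load_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Return the API key stored in `env`, or raise a clear error if it is missing."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable before creating the client.")
    return key


# Demonstration with an in-memory mapping instead of the real environment.
demo_key = load_api_key({"OPENAI_API_KEY": "sk-placeholder"})
print(demo_key)

# With the real environment this would become:
#   from openai import OpenAI
#   client = OpenAI(api_key=load_api_key())
```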

Once you have the key, you can then make API calls, such as creating chat completions:

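chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "What is Machine Learning?",
        }
    ],
    model="gpt-4-1106-preview",
)

# The reply text is stored in the first choice's message.
print(chat_completion.choices[0].message.content)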

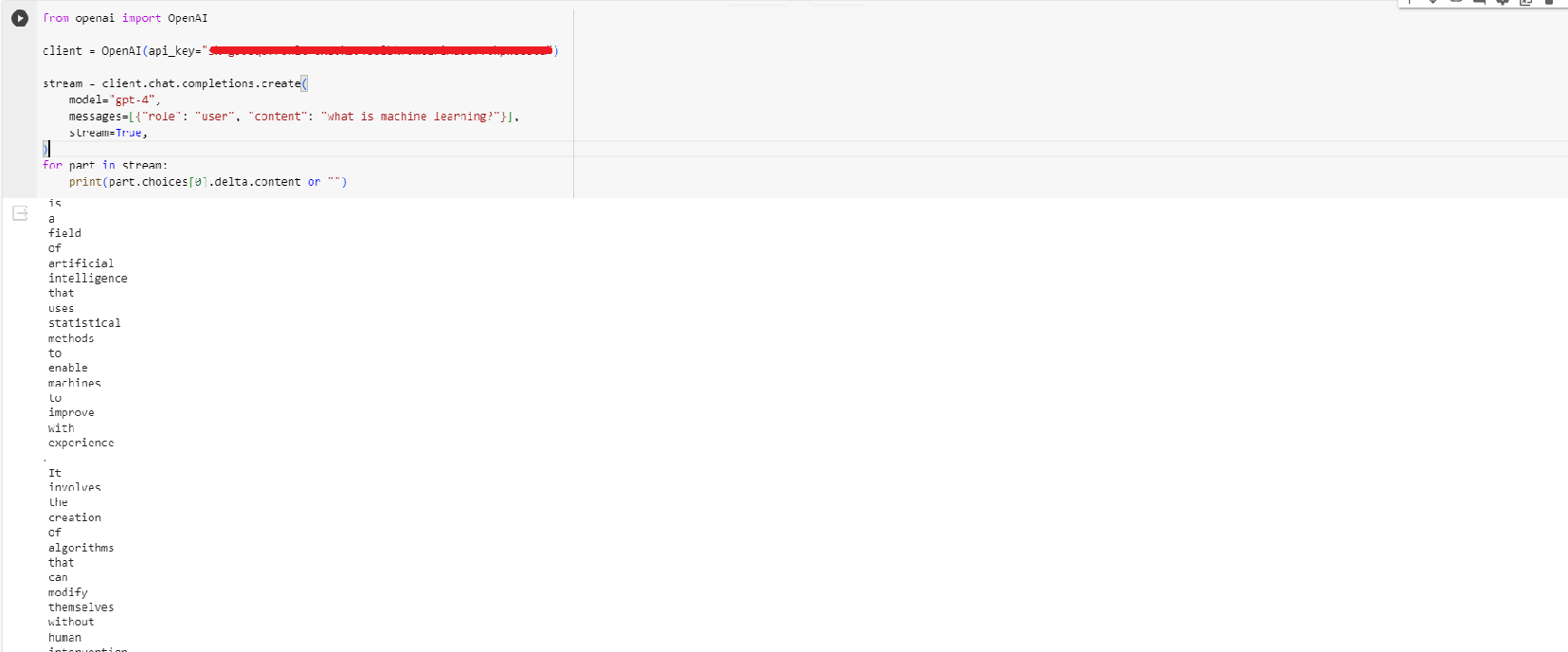
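The object returned by a chat completion call carries more than the reply text: each choice has a finish reason, and the response reports token usage, which is what billing is based on. The sketch below shows one way to pull these fields out. It runs offline against a stand-in object that mimics the response shape; `summarize_completion` is a hypothetical helper and the numbers in the stand-in are made up.

```python
from types import SimpleNamespace


def summarize_completion(completion):
    """Pull the reply text, finish reason, and token count out of a chat completion."""
    choice = completion.choices[0]
    return {
        "text": choice.message.content,
        "finish_reason": choice.finish_reason,
        "total_tokens": completion.usage.total_tokens,
    }


# Stand-in object mimicking the shape of a real response, so this runs offline.
fake_completion = SimpleNamespace(
    choices=[
        SimpleNamespace(
            message=SimpleNamespace(role="assistant", content="Machine learning is ..."),
            finish_reason="stop",
        )
    ],
    usage=SimpleNamespace(prompt_tokens=12, completion_tokens=30, total_tokens=42),
)

print(summarize_completion(fake_completion))
```

With a real client, the same function can be applied to the value returned by `client.chat.completions.create(...)`.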

The library also supports streaming responses using Server-Sent Events (SSE). Here's an example of how to stream responses:

from openai import OpenAI

client = OpenAI(api_key="...")

stream = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": "what is machine learning?"}],
    stream=True,
)

# Print each piece as it arrives; end="" keeps the text on one line.
for part in stream:
    print(part.choices[0].delta.content or "", end="")
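In practice an application often needs the complete reply as well as the live output, for example to store it in the conversation history. The sketch below collects the streamed pieces into one string. It is tested here against stand-in chunk objects rather than a live stream; `collect_stream` and `fake_chunk` are hypothetical helpers introduced for illustration.

```python
from types import SimpleNamespace


def collect_stream(stream):
    """Print streamed text as it arrives and return the full reply as one string."""
    pieces = []
    for chunk in stream:
        if not chunk.choices:  # skip chunks that carry no choices
            continue
        text = chunk.choices[0].delta.content
        if text:  # content can be None, for example on the final chunk
            print(text, end="", flush=True)
            pieces.append(text)
    print()
    return "".join(pieces)


def fake_chunk(text):
    """Hypothetical helper: build an object shaped like one streamed chunk."""
    return SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=text))])


full_reply = collect_stream([fake_chunk("Machine "), fake_chunk("learning"), fake_chunk(None)])
```

With a real client, `collect_stream` would be passed the object returned by `client.chat.completions.create(..., stream=True)`.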

OpenAI Models and Pricing

OpenAI offers a range of different AI models that users can leverage through their API. The models differ in their capabilities, pricing, and intended use cases.

The GPT-4 model is highly capable and the most expensive, starting at $0.03 per 1,000 tokens for input and $0.06 per 1,000 tokens for output at the time of writing. GPT-4 represents the state of the art in natural language processing, with the ability to understand and generate human-like text. The base GPT-4 model supports 8,192 tokens of context, and the family also includes GPT-4-32k, which extends this to about 32,000 tokens.

The newly released GPT-4 Turbo model offers a 128k context length and vision support, and is more powerful than GPT-4. It is also cheaper, at $0.01 per 1,000 tokens for input and $0.03 per 1,000 tokens for output.

For more cost-effective natural language processing, OpenAI offers the GPT-3.5 family of models. GPT-3.5 Turbo is optimized for conversational applications with 16,000 tokens of context, priced at $0.0010 per 1,000 input tokens and $0.0020 per 1,000 output tokens. GPT-3.5 Turbo Instruct is an instruct model with 4,000 tokens of context, priced slightly higher at $0.0015 per 1,000 input tokens and $0.0020 per 1,000 output tokens.
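To make the per-token prices concrete, the sketch below estimates what a single request would cost under each model. The prices are copied from the figures quoted in this article and will go out of date, so treat them as assumptions and check the official pricing page before relying on them. The dictionary keys are labels chosen for this sketch and the function name `estimate_cost` is hypothetical.

```python
# Prices in USD per 1,000 tokens, copied from the figures quoted in this article.
# They are a snapshot, not a live price list.
PRICES_PER_1K = {
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
    "gpt-3.5-turbo": {"input": 0.0010, "output": 0.0020},
    "gpt-3.5-turbo-instruct": {"input": 0.0015, "output": 0.0020},
}


def estimate_cost(model, input_tokens, output_tokens):
    """Estimate the cost in USD of one request with the given token counts."""
    price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * price["input"] + (output_tokens / 1000) * price["output"]


# Compare the models for a request with 2,000 input and 500 output tokens.
for name in PRICES_PER_1K:
    print(f"{name}: ${estimate_cost(name, 2000, 500):.4f}")
```

Input and output tokens are priced separately, so a long prompt with a short answer costs less than a short prompt with a long answer of the same total length.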

In addition to the core language models, OpenAI provides other capabilities through their API. The Assistants API makes it easy to build AI assistants by providing tools like retrieval and code interpretation. Image models can generate and edit images. Embedding models can represent text as numerical vectors. There are also options to fine-tune models for specific applications.

OpenAI offers a range of powerful AI models that developers can leverage through a simple pay-as-you-go API. The choice of model depends on the specific application needs and budget. GPT-4 provides cutting-edge capabilities at a premium price point, while models like GPT-3.5 balance performance and cost for many applications.

You can check out the official documentation to learn more about all available models and their API pricing.

Flexibility and Customization

The API provides many parameters to customize the model's behavior as per your application's needs:

Authentication

- api_key (str): Your API key for authenticating requests. Required.

Models

- model (str): The ID of the model that should generate the completion, for example gpt-4.

Input

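- messages (list): The conversation so far, as a list of dictionaries with a role and content. This is the input used by the Chat Completions endpoint.

- prompt (str): The prompt(s) to generate completions for. Typically text. Used by the legacy Completions endpoint rather than by chat completions.

- suffix (str): The text that comes after the generated completion. Also specific to the legacy Completions endpoint.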

Output

- max_tokens (int): The maximum number of tokens to generate in the completion. The upper limit depends on the model; 4096 is a common cap on output tokens.

- stop (str or list): Up to 4 sequences where the API will stop generating further tokens.

- temperature (float): Controls randomness. Values range from 0.0 to 2.0. Higher values make the output more random, while lower values make it more focused and deterministic.

- top_p (float): An alternative to sampling with temperature, called nucleus sampling. Values range from 0.0 to 1.0. The model considers only the tokens that make up the top_p probability mass, so lower values restrict it to the most likely tokens.

- n (int): How many completions to generate for each request.

- stream (bool): Whether to stream back partial progress. If set, tokens will be sent as data-only server-sent events as they become available.
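As a rough illustration of how these parameters fit together, the sketch below validates a few of them against the ranges listed above and returns a dictionary of keyword arguments for a chat completion call. The function name `build_chat_params` and the default of 256 for `max_tokens` are assumptions made for this example, not values prescribed by the API.

```python
def build_chat_params(messages, model="gpt-4", temperature=1.0, top_p=1.0,
                      max_tokens=256, n=1, stop=None, stream=False):
    """Validate common settings and return keyword arguments that could be
    passed as client.chat.completions.create(**params)."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0.0 and 2.0")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0.0 and 1.0")
    if max_tokens < 1 or n < 1:
        raise ValueError("max_tokens and n must be at least 1")
    if isinstance(stop, str):
        stop = [stop]  # a single stop sequence may be given as a plain string
    if stop is not None and len(stop) > 4:
        raise ValueError("at most 4 stop sequences are allowed")

    params = {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
        "n": n,
        "stream": stream,
    }
    if stop:
        params["stop"] = stop
    return params


# A low temperature and a short token budget for a terse, repeatable answer.
params = build_chat_params(
    [{"role": "user", "content": "Summarize machine learning in one sentence."}],
    temperature=0.2,
    max_tokens=60,
    stop="\n\n",
)
print(params)
```

It is usually advisable to adjust either `temperature` or `top_p`, not both, since they control the same kind of randomness in different ways.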

Shaping ChatGPT API Behavior

The three main message types that shape a chatbot's behavior are 'system', 'user', and 'assistant' messages. System messages carry the developer's instructions to the model, user messages are the inputs from humans, and assistant messages are the chatbot's responses.

A system message sets the ground rules for the conversation: the persona the chatbot should adopt, the tone it should use, and any constraints on what it should or should not answer. For example, a system message may tell the model to act as a concise support agent for a particular product, or to answer only in formal English. The model does not store conversation state on its own, so the application keeps context by sending the relevant earlier messages with each request.

User messages provide the raw conversational input that the chatbot must analyze and react to. The chatbot uses natural language processing to extract meaning from these messages and determine intent. Different user message phrasing, length, punctuation, and content will elicit different responses from the chatbot.

Finally, assistant messages hold the chatbot's responses, generated from the system instructions and the user messages that precede them. Earlier assistant messages can also be sent back in later requests, either as conversation history or as hand-written examples of the desired style. The tone, personality, and information content of these messages ultimately determine the user experience, so careful design of the system message and examples is key to creating engaging, helpful dialog.
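In a request, each of these message types is a dictionary with a `role` and a `content` field. The sketch below assembles one possible messages list: a system message, an optional pair of example exchanges, and the new user input. The helper name `make_messages` and the sample texts are made up for illustration.

```python
def make_messages(system_prompt, user_input, examples=()):
    """Assemble a messages list: system instructions, optional example
    exchanges (user question, assistant answer), then the new user input."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_question, example_answer in examples:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_answer})
    messages.append({"role": "user", "content": user_input})
    return messages


messages = make_messages(
    system_prompt="You are a friendly tutor. Answer in at most two sentences.",
    user_input="What is overfitting?",
    examples=[("What is a feature?", "A feature is an input variable a model learns from.")],
)
for message in messages:
    print(f'{message["role"]:>9}: {message["content"]}')
```

The resulting list can be passed as the `messages` argument of `client.chat.completions.create`. Changing only the system message is often enough to shift the style of every reply.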

Conclusion

The ChatGPT API represents a significant step forward in the realm of conversational AI, offering a versatile and powerful tool for developers and innovators. Its ability to understand and generate natural language, combined with the flexibility to be integrated into various applications, makes it an invaluable asset for creating sophisticated AI-driven solutions.

Whether it's for building advanced chatbots, automating customer support, generating creative content, or answering specific domain questions, the ChatGPT API provides the necessary tools and capabilities to bring these ideas to life.

The wide array of models offered by OpenAI, each tailored for different use cases and budget considerations, ensures that developers can select the most appropriate tool for their needs. From the state-of-the-art GPT-4 to the more cost-effective GPT-3.5 variants, the choice of model can be finely tuned to the application's specific requirements.

This guide is intended as a starting point for anyone looking to build on this technology.

Interested in learning more about this topic? Check out this Hands-On Tutorial and Best Practices for OpenAI API, or you can also sign up for a free course on Working with the OpenAI API.