
With the release of ChatGPT, there has been a lot of hype about artificial intelligence and its applications around the world, especially among non-technical senior business stakeholders. Businesses want to apply the technology they experienced in ChatGPT to their own industry and growth goals.

But how can we achieve that?

The answer is straightforward: businesses can use the rapidly evolving OpenAI API, built and made available by the makers of ChatGPT, to create customized solutions tailored to the business’s needs.

As beginner data scientists and machine learning practitioners, we know that OpenAI has been around for a while, even before the ChatGPT hype, but most of us weren’t using its models to solve business problems in the industry. With all the recent progress, it’s more important than ever to understand the API comprehensively.

In this tutorial, we will do just that. To give you a comprehensive overview of the OpenAI API, we introduce OpenAI and its API, go hands-on from creating an OpenAI account to using the API to get responses, and discuss best practices for using the API.

Interested in seeing the OpenAI API in action? Check out our code-along on Building Chatbots with OpenAI API and Pinecone.

An Overview of OpenAI and its API

OpenAI is an AI research and deployment company working on the mission of ensuring that artificial general intelligence benefits all of humanity.

The company was founded as a non-profit in 2015, with the founding team including the likes of Elon Musk and Sam Altman. Through the years, OpenAI has transitioned from its non-profit beginnings towards a more diversified model to fulfill its mission, with partnerships with tech giants such as Microsoft.

What is the OpenAI API?

The OpenAI API (Application Programming Interface) serves as a bridge to OpenAI’s powerful machine learning models, allowing you to integrate cutting-edge AI capabilities into your projects with relative ease.

In simpler terms, the API is like a helper that lets you use OpenAI’s smart programs in your projects. For example, you can add cool features like understanding and creating text without having to know all the nitty-gritty details of the underlying models.


OpenAI API Features

Here are some of the key features that make the OpenAI API a valuable tool for anyone looking to incorporate AI into their business:

1. Pre-trained AI models

Pre-trained models are machine learning models that have already been trained on a large dataset, often on a general task, before being used for a specific task. The OpenAI team has trained and released these models in API form, meaning “they train once, we use many times,” which can be a huge time and resource saver.

Some of the models released are:

- GPT-4: Improved versions of GPT-3.5 models, capable of understanding and creating both text and code.

- GPT-3.5: Enhanced versions of GPT-3 models, able to comprehend and generate text or code.

- GPT Base: Models that can process and generate text or code, but lack instruction following capability.

- DALL·E: A model that creates and modifies images based on text prompts.

- Whisper: Transforms audio input into written text.

- Embeddings: Models that turn text into numerical vectors, so that texts with similar meanings end up close together.

- Moderation: A fine-tuned model that identifies potentially sensitive or unsafe text.

These models have been trained on huge amounts of data using significant computing power, which is often unavailable or unaffordable for individuals and even many organizations. Through the API, data scientists and businesses can now use these pre-trained models directly.
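To make the Embeddings entry in the list above more concrete: an embedding model returns a list of floats for each input text, and texts with similar meanings get vectors that point in similar directions. The sketch below is an illustration, not code from this tutorial; it assumes the v1 `openai` Python package, an `OPENAI_API_KEY` environment variable, and the `text-embedding-3-small` model, and it compares vectors with cosine similarity. The helper names `embed` and `cosine_similarity` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def embed(texts, model="text-embedding-3-small"):
    """Return one embedding vector per input text (needs network access and an API key)."""
    from openai import OpenAI  # imported lazily so cosine_similarity works without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.embeddings.create(model=model, input=texts)
    return [item.embedding for item in response.data]


# Example usage (needs network access and an API key):
# vectors = embed(["I love this product", "This product is great", "The invoice is overdue"])
# print(cosine_similarity(vectors[0], vectors[1]))  # similar meaning
# print(cosine_similarity(vectors[0], vectors[2]))  # unrelated meaning
```

You would typically expect the first pair to score higher than the second, which is the basis for semantic search and clustering with embeddings.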

2. Customizable AI models

Customizing models in the OpenAI API primarily involves a process known as fine-tuning, which allows users to adapt pre-trained models to better suit their specific use cases.

The OpenAI API lets us take a pre-trained model, train it further on our own data, and then use the fine-tuned model for our use cases. You can read more about the process of fine-tuning a GPT-3 model in our dedicated tutorial. Because a fine-tuned model needs shorter prompts to produce the desired behavior, fine-tuning can save costs and enable lower-latency requests for downstream applications.
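Fine-tuning starts with training examples. For chat models, OpenAI expects a JSONL file in which each line is one example conversation stored under a `messages` key. The sketch below is an illustration under assumptions, not the exact process from the fine-tuning tutorial: it writes such a file and, in a separate function, uploads it and starts a job with the v1 `openai` package (`client.files.create` and `client.fine_tuning.jobs.create`). The helper names, example texts, file name, and base model are placeholders; check which models currently support fine-tuning.

```python
import json


def write_chat_training_file(examples, path, system_prompt):
    """Write (user_text, ideal_reply) pairs as chat-format JSONL, one conversation per line."""
    with open(path, "w", encoding="utf-8") as f:
        for user_text, ideal_reply in examples:
            record = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": user_text},
                    {"role": "assistant", "content": ideal_reply},
                ]
            }
            f.write(json.dumps(record) + "\n")


def start_fine_tuning_job(path, base_model="gpt-3.5-turbo"):
    """Upload the training file and start a fine-tuning job (needs network access and an API key)."""
    from openai import OpenAI  # imported lazily so the file-writing step works offline

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as f:
        training_file = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=training_file.id, model=base_model)
    return job.id


# Example usage with hypothetical data:
# examples = [("Where is my order?", "You can track it under Account > Orders."), ...]
# write_chat_training_file(examples, "support_train.jsonl", "You are a concise support agent.")
# job_id = start_fine_tuning_job("support_train.jsonl")
```

OpenAI's fine-tuning guide requires at least 10 training examples, so a real file would contain many more lines than the two shown in a quick test.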

3. Simple API interface

The OpenAI API platform is intuitive and simple for beginners to use. Thanks to the comprehensive documentation and quick-start examples, you can start using the API in your own use cases with just a few lines of code, without a steep learning curve.

This simplicity is helpful for individuals who are early in their data careers, making the step into AI less intimidating and more engaging.

4. Scalable infrastructure

OpenAI has scaled Kubernetes clusters to 7,500 nodes to provide a scalable infrastructure for large models like GPT-3, CLIP, and DALL·E, as well as for rapid small-scale iterative research. Azure OpenAI Service runs on the Azure global infrastructure to meet production needs such as critical enterprise security, compliance, and regional availability, indicating a scalable infrastructure to support OpenAI API usage.

As your projects become larger and more complex, you need an infrastructure that can grow with them. OpenAI API’s ability to scale when usage increases is especially useful when you move from small projects to larger, more demanding ones, making the OpenAI API a dependable tool for your projects.

Industry Use-Cases of OpenAI API

As of 2021, over 300 applications were already using GPT-3 via the OpenAI API, a sign of the wide range of creative and unique applications being developed across the globe. Here are some of the common industry applications of the OpenAI API:

1. Chatbots and virtual assistants

The OpenAI API’s ability to comprehend and generate human-like text makes it a prime candidate for building intelligent chatbots and virtual assistants. Pre-trained models such as GPT-4 and GPT-3.5 Turbo (the model family that originally powered ChatGPT) can drive conversational agents that interact with users naturally and intuitively. These agents can be deployed on websites, in applications, or on customer service platforms to enhance user engagement and provide automated support.
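What turns single API calls into a chatbot is conversation memory: the Chat Completions endpoint does not remember earlier turns, so the application resends the running list of messages each time. A minimal sketch of that loop, assuming the v1 `openai` package; the `Chatbot` class and its `complete` parameter are hypothetical names introduced here so the model call can be swapped out, for example with a stub in tests.

```python
class Chatbot:
    """Keeps the running conversation and resends it to the model on every turn."""

    def __init__(self, system_prompt, complete=None, model="gpt-3.5-turbo"):
        self.messages = [{"role": "system", "content": system_prompt}]
        self.model = model
        self._client = None
        # `complete` takes the message list and returns the assistant's reply text;
        # by default it calls the OpenAI API.
        self.complete = complete or self._openai_complete

    def _openai_complete(self, messages):
        if self._client is None:
            from openai import OpenAI  # imported lazily; only needed for real API calls

            self._client = OpenAI()  # reads OPENAI_API_KEY from the environment
        response = self._client.chat.completions.create(model=self.model, messages=messages)
        return response.choices[0].message.content

    def ask(self, user_text):
        """Add the user's turn, get the model's reply, and remember both."""
        self.messages.append({"role": "user", "content": user_text})
        reply = self.complete(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


# Example usage (needs network access and an API key):
# bot = Chatbot("You are a friendly support agent for an online store.")
# print(bot.ask("Hi, where is my order?"))
# print(bot.ask("Can I change the delivery address?"))  # the model sees the first turn too
```

In a real deployment you would also cap or summarize the history, because every resent message counts toward the model's context window and your token usage.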

2. Sentiment analysis

Sentiment analysis is about understanding the sentiment behind textual data. With the OpenAI API, you can analyze customer reviews, social media comments, or any other text to gauge public opinion or customer satisfaction. By using models like GPT-4 or GPT-3.5, you can automate sentiment analysis and derive insights that can be pivotal for business strategy.
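One way to automate sentiment analysis with a chat model is to ask for a single label and validate the reply before using it. In this sketch the prompt wording, the three-label scheme, and the helper names are assumptions rather than a prescribed format; it uses the v1 `openai` package, and `temperature=0` keeps the labels as consistent as possible.

```python
LABELS = ("positive", "negative", "neutral")


def build_sentiment_messages(review):
    """Chat messages asking the model to answer with exactly one sentiment label."""
    instructions = (
        "Classify the sentiment of the customer review. "
        "Answer with exactly one word: positive, negative, or neutral."
    )
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": review},
    ]


def parse_sentiment(reply):
    """Normalize the model's reply to one of LABELS, or return None if it is unusable."""
    word = reply.strip().lower().rstrip(".!")
    return word if word in LABELS else None


def classify_review(review, model="gpt-3.5-turbo"):
    """Classify one review via the API (needs network access and an API key)."""
    from openai import OpenAI  # imported lazily so the helpers above work offline

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_sentiment_messages(review),
        temperature=0,
    )
    return parse_sentiment(response.choices[0].message.content)


# Example usage (needs network access and an API key):
# print(classify_review("Delivery was late and the box was damaged."))
```

Returning `None` for unexpected replies lets you count or retry the failures instead of silently storing a wrong label.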

3. Image recognition

Although OpenAI is often associated with text processing, it has also ventured into image recognition with models like CLIP, which learns visual concepts from natural language descriptions and can classify images into categories it was never explicitly trained on. CLIP is released as an open-source model rather than offered through the OpenAI API, but the API accepts image inputs through vision-capable GPT-4 models, which can be used for tasks such as image classification and description. This opens up possibilities in various fields, including healthcare, where image recognition can help identify medical conditions from imaging data.
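Within the API itself, image inputs are handled by vision-capable chat models. The sketch below assumes the v1 `openai` package and the `gpt-4o` model, and uses the Chat Completions content format that mixes `text` and `image_url` parts in one user message. The image URL, the candidate labels, and the helper names are placeholders for illustration.

```python
def build_image_classification_messages(image_url, labels):
    """Chat messages asking a vision-capable model to pick one label for an image."""
    question = (
        "Which one of these labels best describes the image? "
        "Answer with the label only: " + ", ".join(labels)
    )
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


def classify_image(image_url, labels, model="gpt-4o"):
    """Send the image to the model and return its answer (needs network access and an API key)."""
    from openai import OpenAI  # imported lazily so the message builder works offline

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_image_classification_messages(image_url, labels),
        temperature=0,
    )
    return response.choices[0].message.content.strip()


# Example usage (hypothetical URL; needs network access and an API key):
# print(classify_image("https://example.com/photo.jpg", ["cat", "dog", "bird"]))
```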

4. Gaming and reinforcement learning

The OpenAI API also finds its application in the gaming industry and reinforcement learning environments. Models can be trained or fine-tuned to interact with gaming environments, making decisions that could either play the game autonomously or assist players in gameplay.

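OpenAI has showcased these ideas in its research projects, rather than as API products, with models like Dactyl, a robotic hand that used reinforcement learning to learn the dexterous manipulation needed to solve a Rubik’s Cube, and OpenAI Five, which competed against human players in the game of Dota 2.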

Now that we have understood the OpenAI API and its use cases, let’s go hands-on and start using it.

Hands-On: Getting Started with OpenAI API

Let’s assume you’re an absolute beginner with the OpenAI API and walk step by step through making your first API call.

Step 1: Create an OpenAI platform account

Before anything else, you’ll need an account in the OpenAI platform.

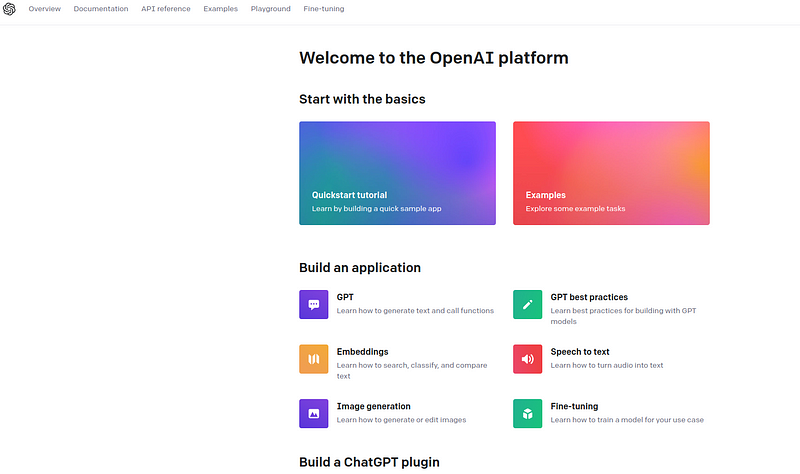

Head over to the OpenAI’s platform and follow the prompts to create an account. You should be seeing something like this once signed up:

Step 2: Get your API key

Once your account is set up, you’ll need to retrieve your API key, which is essential for interacting with the API.

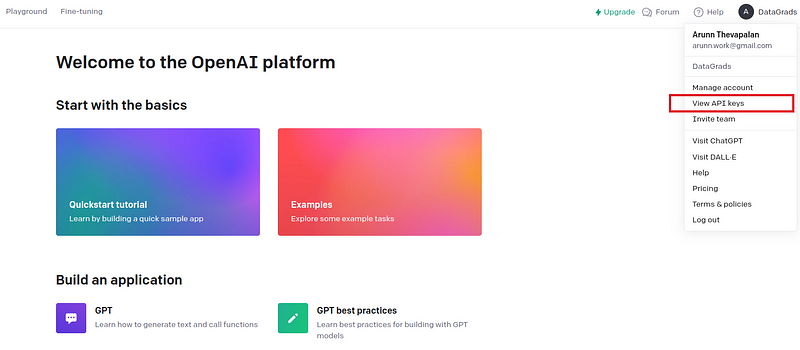

Navigate to the API keys page on your OpenAI account, as shown in the diagram below.

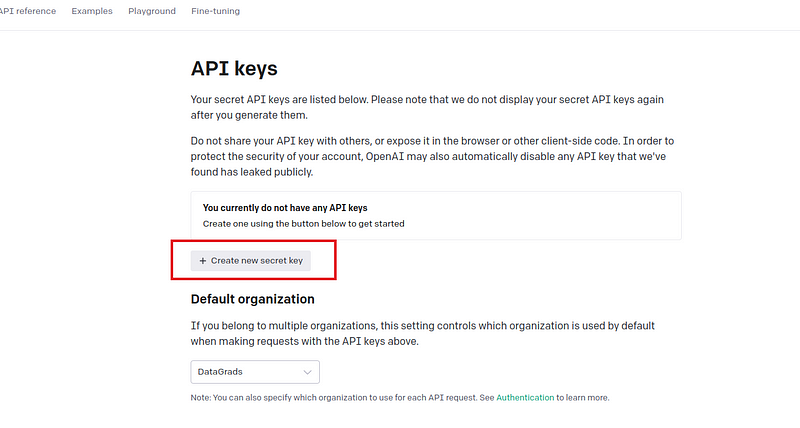

You can now create an API key. Copy it and keep it safe, as you won’t be able to view it again; if you lose it or need a new one, you can always generate another.

Step 3: Install the OpenAI Python library

Now that the account setup and the API keys are ready, the next step is to work on our local machine setup. We can access the OpenAI API from our local machine through OpenAI’s Python library.

You can install it with pip using the command below:

```bash
pip install openai
```

Step 4: Making your first API call

Now that you have your API key and the OpenAI library installed, it’s time to make your first API call.

Here’s the code to do so:

def get_chat_completion(prompt, model="gpt-3.5-turbo"):

# Creating a message as required by the API

messages = [{"role": "user", "content": prompt}]

# Calling the ChatCompletion API

response = openai.ChatCompletion.create(

model=model,

messages=messages,

temperature=0,

)

# Returning the extracted response

return response.choices[0].message["content"]

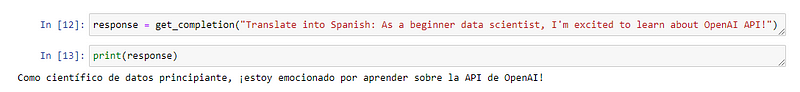

response = get_chat_completion("Translate into Spanish: As a beginner data scientist, I'm excited to learn about OpenAI API!")

print(response)

We write a helper function to use the ChatCompletion API by selecting the “gpt-3.5-turbo” model, which accepts the user-generated prompt and returns the response.

Here’s the response (please feel free to validate it if you know a bit of Spanish!):

Step 5: Exploring further

With your first API call under your belt, you’re well on your way! Here are a few next steps to consider:

- Explore different models and find what works best for your use case.

- Experiment with different prompts and parameters to see how the API responds.

- Dive into the OpenAI documentation to learn more about what you can do with the API.

From here, the idea is to brainstorm how you can apply the API to the business problem at hand (more resources are provided at the end of this tutorial).
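A simple way to act on the first two suggestions above is to run the same prompt across a few models and temperature values and compare the outputs side by side. This sketch assumes the v1 `openai` package; the model names are examples, and `run_sweep`, `openai_complete`, and the `complete` parameter are hypothetical helpers introduced here.

```python
from itertools import product


def openai_complete(prompt, model, temperature):
    """One Chat Completions call (needs network access and an API key)."""
    from openai import OpenAI  # imported lazily so run_sweep can be tried with a stub

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,
    )
    return response.choices[0].message.content


def run_sweep(prompt, models, temperatures, complete=openai_complete):
    """Return {(model, temperature): reply} for every model/temperature combination."""
    return {
        (model, temperature): complete(prompt, model, temperature)
        for model, temperature in product(models, temperatures)
    }


# Example usage (needs network access and an API key):
# results = run_sweep("Summarize our refund policy in one sentence.",
#                     models=["gpt-3.5-turbo", "gpt-4"], temperatures=[0, 0.7])
# for (model, temperature), reply in results.items():
#     print(f"{model} @ {temperature}: {reply}")
```

Each combination is a separate billed request, so keep the grid small while exploring.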

Best Practices When Using OpenAI API

Now that you’ve had some hands-on practice with the API, here are some best practices to keep in mind before using it in your projects:

1. Understanding the pricing of the API

The API provides a range of models, each with different capabilities and price points, on a pay-for-what-you-use basis. Costs are charged per token (the pricing page quotes rates per 1,000 or, more recently, per 1 million tokens), where tokens are essentially pieces of words; as a rule of thumb, 1,000 tokens equate to about 750 English words. Input and output tokens are typically priced separately. You can find the up-to-date pricing for all models on the OpenAI pricing page.

At the time of writing, new accounts started with $5 in free credit that could be used during the first three months, enough to try out this tutorial and understand the API better before deciding to pay. Check the pricing page for current terms, as this offer can change.
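To turn the token rule of thumb from this section into a quick budget check, the sketch below estimates tokens from a word count (about 1,000 tokens per 750 words) and multiplies input and output tokens by their prices per 1,000 tokens. The prices you pass in are your own inputs; look up the current rates on the pricing page, and use OpenAI's `tiktoken` library if you need exact token counts rather than an estimate.

```python
import math


def estimate_tokens(word_count):
    """Rough token estimate using the ~750 words per 1,000 tokens rule of thumb."""
    return math.ceil(word_count * 1000 / 750)


def estimate_cost(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """Cost in dollars when input and output tokens are priced separately per 1,000 tokens."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


# Example with made-up prices (not OpenAI's rates): a 1,500-word prompt and a 500-token answer
# tokens_in = estimate_tokens(1500)
# print(estimate_cost(tokens_in, 500, input_price_per_1k=0.001, output_price_per_1k=0.002))
```

With those placeholder prices, a 1,500-word prompt (about 2,000 tokens) plus a 500-token answer comes to about $0.003; multiply by your expected request volume to budget a project.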

2. Securing your API key

Instead of hardcoding your API key directly in your application’s source code, use environment variables to store and retrieve the API key, which is a safer practice.

It’s also challenging to manage hardcoded API keys, especially in a team environment. If the key needs to be rotated or changed, every instance of the hardcoded key in the source code needs to be found and updated.
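A minimal sketch of the environment-variable approach: set `OPENAI_API_KEY` in your shell (for example, `export OPENAI_API_KEY=...` on macOS or Linux) and read it at runtime. The v1 `openai` client also picks up this variable automatically when you call `OpenAI()` without arguments; the `load_api_key` helper below is a hypothetical name that simply fails early with a clear message when the key is missing.

```python
import os


def load_api_key(var_name="OPENAI_API_KEY"):
    """Read the API key from the environment instead of hardcoding it in source code."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running this script.")
    return key


# Example usage:
# from openai import OpenAI
# client = OpenAI(api_key=load_api_key())
```

Rotating the key then only means updating the environment variable, with no change to the source code.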

3. Using the latest models

OpenAI recommends using the latest models for the problem you want to solve when multiple versions are available. Newer models are generally more capable, but they can also cost more. In general, it’s worth experimenting with a few available models and choosing the one that best fits your use case, weighing cost against performance.

4. Utilizing token limits efficiently through Batching

Batching is a technique that allows you to group multiple tasks into a single API request to efficiently process them together rather than sending individual requests for each task.

The OpenAI API imposes separate limits for the number of requests per minute and the number of tokens per minute you can process. If you’re hitting the requests per minute limit but have unused capacity in the tokens per minute limit, batching can help utilize this capacity efficiently.

By grouping multiple smaller tasks into a single request, you send fewer requests while processing more tokens per request, which helps you stay under the requests-per-minute limit. Because shared instructions are sent once instead of being repeated for every task, batching can also trim the number of tokens you pay for.
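For chat models, one way to batch is to pack several small tasks into a single prompt, ask for the answers as a JSON array in the same order, and split the reply back out. This is a sketch under assumptions: the prompt wording and helper names are hypothetical, and the model may occasionally return malformed JSON, so the parser checks the result and you should fall back to individual requests when it fails.

```python
import json


def build_batched_prompt(instruction, items):
    """Pack several inputs into one prompt; the shared instruction is sent only once."""
    numbered = "\n".join(f"{i}. {item}" for i, item in enumerate(items, start=1))
    return (
        f"{instruction}\n"
        f"Return only a JSON array of {len(items)} strings, one answer per item, in order.\n\n"
        f"{numbered}"
    )


def parse_batched_reply(reply, expected_count):
    """Split the model's JSON array back into individual answers, or raise ValueError."""
    answers = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(answers, list) or len(answers) != expected_count:
        raise ValueError(f"expected a JSON array of {expected_count} answers")
    return answers


# Example usage (needs the openai package, network access, and an API key):
# from openai import OpenAI
# client = OpenAI()
# reviews = ["Fast delivery!", "Broken on arrival.", "It's okay."]
# prompt = build_batched_prompt("Classify the sentiment of each review as positive, negative, or neutral.", reviews)
# reply = client.chat.completions.create(
#     model="gpt-3.5-turbo", messages=[{"role": "user", "content": prompt}]
# ).choices[0].message.content
# labels = parse_batched_reply(reply, len(reviews))
```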

Conclusion

If you’re a relative newcomer to data science, you may not have dabbled with APIs much. While that was normal in the past, with the rapid progress and capabilities in AI by companies like OpenAI, knowing how to effectively use their pre-trained models (and fine-tune them) from their API has become an essential skill for every data scientist to save time, costs, and other resources.

This tutorial introduced OpenAI, its API, and some common industry-specific use cases for it. It also took you through getting hands-on with the OpenAI API alongside best practices to follow.

The next step would be to broaden your knowledge of OpenAI API using tutorials such as:

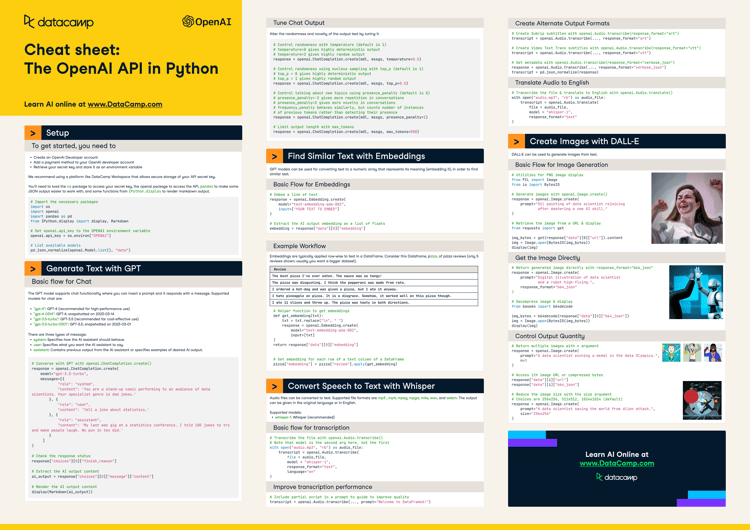

- OpenAI API cheatsheet in Python

- Using GPT-3.5 and GPT-4 via the OpenAI API in Python

- Introduction to Text Embeddings with the OpenAI API

- OpenAI Function Calling Tutorial

If you prefer a guided approach that gives you hands-on experience to complement this tutorial, check out our own Working with the OpenAI API course.


As a senior data scientist, I design, develop, and deploy large-scale machine-learning solutions to help businesses make better data-driven decisions. As a data science writer, I share learnings, career advice, and in-depth hands-on tutorials.