
Until a few years ago, could you imagine a machine that could create art, craft stories, or even understand complex data such as medical and legal documents?

Likely not. For most of us, such machines belonged to science fiction and seemed far-fetched.

But the emergence of the Generative AI era has redefined our understanding of what machines can do.

What is Generative AI?

Generative AI is a sophisticated branch of AI that enables machines to generate new content, such as text, audio, video, and even code, by learning from existing content.

Gartner lists it as “one of the most impactful and rapidly evolving technologies that can bring productivity revolution”.

It is fast becoming a reality, giving rise to innovative applications that are transforming industries: accelerating and amplifying creativity in media, enhancing marketing in retail, delivering better and more personalized healthcare treatment, and enabling adaptive learning in education.

The Ethical Concerns of Generative AI

The pace at which such applications are reaching the marketplace is compelling.

But when Sam Altman, the CEO of OpenAI, warns about the dangers of language models, the risk is real. It is incumbent on us to balance the benefits with caution and to use this potent technology responsibly.

Image source: Business Insider

Let us understand some of the key ethical concerns of using Generative AI.

Distribution of harmful content

While the ability of Generative AI systems to generate human-like content can enhance business productivity, it can also produce harmful or offensive content. The most concerning harm stems from tools such as deepfakes, which can create false images, videos, text, or speech that may be agenda-driven or used to spread hate speech.

Recently, a scammer cloned the voice of a young girl to demand ransom from her mother by faking a kidnapping. These tools have become so sophisticated that it is hard to distinguish fake voices from real ones.

Further, the automatically generated content can perpetuate or amplify the biases learned from the training data, resulting in biased, explicit, or violent language. Such harmful content calls for human intervention to align it with the business ethics of the organization leveraging this technology.

Copyright and legal exposure

Like most AI models, Generative AI models are trained on data, lots and lots of it. Because that data can include copyrighted works, the models may infringe on the copyrights and intellectual property rights of others. This creates legal, reputational, and financial risk for companies using pre-trained models and can harm creators and copyright holders.

Data privacy violations

The underlying training data may contain sensitive information, including personally identifiable information (PII). PII, as defined by the US Department of Labor, is the data “that directly identifies an individual, e.g., name, address, social security number or other identifying number or code, telephone number, and email address.”

A breach of users' privacy can lead to identity theft, and the exposed data can be misused for discrimination or manipulation.

Hence, it is imperative for both the developers of pre-trained models and the companies that fine-tune these models for specific tasks to follow data privacy guidelines and ensure that PII is removed from the training data.
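As an illustration of what removing PII from training data can involve, here is a minimal Python sketch that uses regular expressions to redact a few of the identifier types in the Department of Labor definition: email addresses, US-style phone numbers, and Social Security numbers. The pattern set and the names `PII_PATTERNS`, `redact_pii`, and `clean_corpus` are hypothetical. Real pipelines need much broader coverage, including names and addresses, which simple patterns cannot reliably catch.

```python
import re

# Hypothetical, deliberately small set of patterns; real PII coverage must be much broader.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),             # US Social Security number
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),  # e.g. 555-123-4567
}


def redact_pii(text: str) -> str:
    """Replace every PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def clean_corpus(records: list[str]) -> list[str]:
    """Redact PII from each record before it is used for training or fine-tuning."""
    return [redact_pii(record) for record in records]


if __name__ == "__main__":
    sample = ["Contact jane.doe@example.com or 555-123-4567; SSN 123-45-6789."]
    print(clean_corpus(sample))  # ['Contact [EMAIL] or [PHONE]; SSN [SSN].']
```

Replacing matches with a typed placeholder, rather than deleting them, keeps the surrounding sentence structure intact, so the cleaned text remains usable for training.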

We have a webinar explaining the data privacy regulations, GDPR in particular, to ensure the ethical use of data.

Sensitive information disclosure

Beyond the careful handling of user data, organizations must consider the risk of inadvertently disclosing sensitive information, a concern amplified by the rapid democratization of AI capabilities. Curiosity about generative AI tools, ChatGPT in particular, and their convenience can tempt users to overlook data security while exploring these new platforms.

The risk rises sharply when an employee uploads sensitive material such as a legal contract, the source code of a software product, or other proprietary information.

The consequences for an organization can be severe, whether financial, reputational, or legal, which makes a clear data security policy essential.

Amplification of existing bias

AI models are only as good (or bad) as the data they are trained on. If the training data reflects biases prevalent in society, the model will reflect them too, or so we thought. A recent Bloomberg analysis found that these models can be even more biased than the real-world data they're trained on.

The study surfaces a harsh reality: "the world according to Stable Diffusion is run by white male CEOs. Women are rarely doctors, lawyers, or judges. Men with dark skin commit crimes, while women with dark skin flip burgers."

To build toward an equitable world, the data must reflect one, which requires including diverse teams and perspectives throughout the model development lifecycle.
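One simple way to quantify the kind of amplification the Bloomberg analysis describes is to compare how often a group appears in a model's outputs with how often it appears in real-world reference data. The Python sketch below assumes you already have group labels for a batch of generated outputs. The function names, the ratio metric, the 0.8 threshold, and all sample values are illustrative assumptions, not the study's methodology or data.

```python
# Hypothetical helpers for comparing a group's representation in model outputs
# against real-world reference data. Metric and threshold are assumptions.


def share(labels: list[str], group: str) -> float:
    """Fraction of labels equal to `group`."""
    if not labels:
        raise ValueError("labels must not be empty")
    return labels.count(group) / len(labels)


def representation_ratio(generated_share: float, reference_share: float) -> float:
    """Group's share in outputs divided by its share in reference data.

    1.0 means the outputs mirror the data; below 1.0 means the group is
    under-represented even more than the data shows.
    """
    if reference_share <= 0:
        raise ValueError("reference_share must be positive")
    return generated_share / reference_share


def flag_amplified(rows: list[tuple[str, float, float]], threshold: float = 0.8) -> list[str]:
    """Return categories whose ratio falls below `threshold`.

    Each row is (category, generated_share, reference_share).
    """
    return [cat for cat, gen, ref in rows if representation_ratio(gen, ref) < threshold]


if __name__ == "__main__":
    # Toy labels for outputs of one hypothetical prompt; not real measurements.
    outputs = ["group_a", "group_a", "group_a", "group_b"]
    gen = share(outputs, "group_b")  # 0.25
    rows = [("role_a", gen, 0.5), ("role_b", 0.45, 0.5)]  # placeholder reference shares
    print(flag_amplified(rows))  # ['role_a']
```

A ratio rather than a raw difference keeps small groups visible: a drop from 10% to 5% halves representation even though the absolute gap is only five points.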

Workforce roles and morale

On one hand, Generative AI is seen as the next wave of productivity; on the other, it implies job losses, as fewer people are needed to do the same amount of work.

The latest McKinsey report echoes these concerns about the displacement of current skills: "Current generative AI and other technologies have the potential to automate work activities that absorb 60 to 70 percent of employees' time today."

The risk to many of today's jobs is not a reason to halt the transformation that Generative AI brings, but it does call for measures to upskill or reskill workers for the new roles created in the advanced AI era.

Data provenance

Most concerns surrounding the use of AI tools relate to data, from user privacy to the generation of synthetic multimodal data such as text, images, video, or audio.

Such systems require maintaining data assurance and integrity to avoid using biased data or data of questionable origin.
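As a sketch of what maintaining data assurance and integrity might look like in practice, the Python below records a dataset's source and license alongside a SHA-256 content hash, then checks later that the data has not changed. The record fields, the function names `provenance_record` and `verify_integrity`, and the example URL and license are hypothetical. Fuller provenance tracking would usually also capture collection method, consent, and processing history.

```python
import hashlib
import json
from datetime import datetime, timezone


def provenance_record(data: bytes, source: str, license_name: str) -> dict:
    """Build a minimal provenance entry: origin, usage terms, and a content hash."""
    return {
        "source": source,                            # where the data came from
        "license": license_name,                     # terms under which it may be used
        "sha256": hashlib.sha256(data).hexdigest(),  # fingerprint of the exact bytes
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


def verify_integrity(data: bytes, record: dict) -> bool:
    """True if `data` still matches the hash captured in `record`."""
    return hashlib.sha256(data).hexdigest() == record["sha256"]


if __name__ == "__main__":
    raw = b"example training text"
    # Hypothetical source and license values, for illustration only.
    record = provenance_record(raw, source="https://example.com/dataset", license_name="CC-BY-4.0")
    print(json.dumps(record, indent=2))
    print(verify_integrity(raw, record))                 # True
    print(verify_integrity(raw + b" (edited)", record))  # False
```

A hash only proves that the bytes are unchanged since the record was made; judging whether the original source was trustworthy or unbiased still requires human review.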

Lack of transparency

Many AI systems are black boxes: it is difficult to understand how they arrived at a particular response or which factors drove their decisions.

The emergent capabilities of large models widen this gap further, as even their developers are surprised by what the models can do. As one account puts it, "researchers are racing not only to identify additional emergent abilities but also to figure out why and how they occur at all to try to predict unpredictability."

Examples of AI-Generated Misinformation

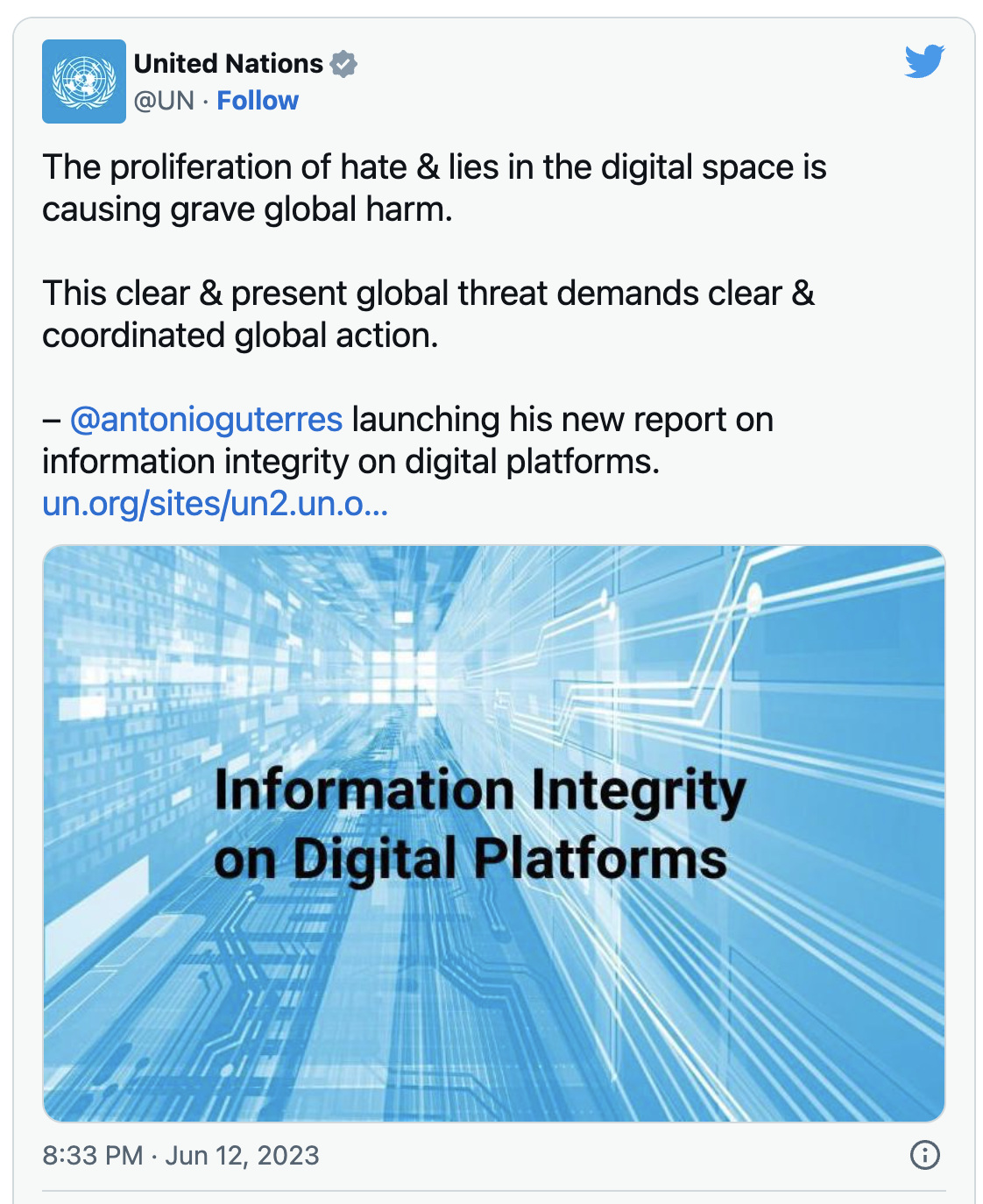

A recent study assessed whether participants could tell AI-generated content apart from content written by humans. Unsurprisingly, people had a hard time identifying text produced by language models, which highlights how these models could spread misinformation at scale.

An unprecedented example of such misinformation surfaced recently in a US court, when a lawyer cited a nonexistent legal case after relying on a response generated by ChatGPT.

The UN has also raised concerns that AI-generated misinformation can lead to conflicts and crimes.

Ethical Best Practices for Generative AI

The technological advances brought by Generative AI models have greater potential to benefit society when used in a controlled and regulated manner. This calls for ethical best practices that minimize the potential harms accompanying its advantages.

Stay informed and take action

Immerse yourself in the current and future landscape of data ethics, and learn the guidelines for applying them, whether you're an individual contributor or an organizational leader. You can read more about both in a separate article.

Align with global standards

It’s also worth familiarizing yourself with the UNESCO AI ethics guidelines, which were adopted by all 193 Member States in November 2021.

The guidelines emphasize four core values:

- Human rights and dignity

- Peaceful and just societies

- Diversity and inclusiveness

- Environmental flourishing

They also outline ten principles, including proportionality, safety, privacy, multi-stakeholder governance, responsibility, transparency, human oversight, sustainability, awareness, and fairness.

The guidelines also provide policy action areas for data governance, environment, gender, education, research, health, and social wellbeing.

Engage with ethical AI communities

Other groups, such as the AI Ethics Lab and the Montreal AI Ethics Institute, work on ethical literacy. The principles they commonly emphasize, including transparency, accountability, data privacy, and robustness, focus on technology providers.

Keeping informed of developments in these areas and engaging with the discourse around AI ethics is an essential part of making sure generative AI remains safe for all users.

Cultivate awareness and learn

It is incumbent on us, as users, to dismiss the idea that everything available in the digital world can be trusted at face value.

Instead, many problems can be avoided when we question the information available to us, fact-checking it and verifying its authenticity and source before putting that knowledge to use.

Awareness is the key to getting generative AI right, and raising it is the intent behind this post.

Final Thoughts

Generative AI holds immense potential to revolutionize various sectors, from healthcare to education, by creating new content and enhancing productivity.

However, this powerful technology also brings with it significant ethical concerns, including the distribution of harmful content, copyright infringements, data privacy violations, and the amplification of existing biases.

As we continue to harness the capabilities of Generative AI, it is crucial to adopt ethical best practices.

You can start your journey with our Generative AI Concepts course and explore generative AI projects you can begin today.

I am an AI Strategist and Ethicist working at the intersection of data science, product, and engineering to build scalable machine learning systems. Listed as one of the "Top 200 Business and Technology Innovators" in the world, I am on a mission to democratize machine learning and break down the jargon so everyone can be part of this transformation.