
In this post, we'll explore five generative AI projects that will help you build and strengthen your machine learning and data science portfolio. These projects are designed to enhance your understanding of cutting-edge technologies like Stable Diffusion, Segment Anything, LangChain, Alpaca-LoRA, and more.

Whether you’re new to generative AI or have some experience, these projects will equip you with valuable skills that can make you stand out in job applications and interviews.

You can learn about the GPT series, including GPT-1, GPT-2, GPT-3, and GPT-4, by reviewing the article 'What is GPT-4 and Why Does it Matter?' You can also view some other AI projects in a separate post.

1. StableSAM: Stable Diffusion Inpainting with Segment Anything

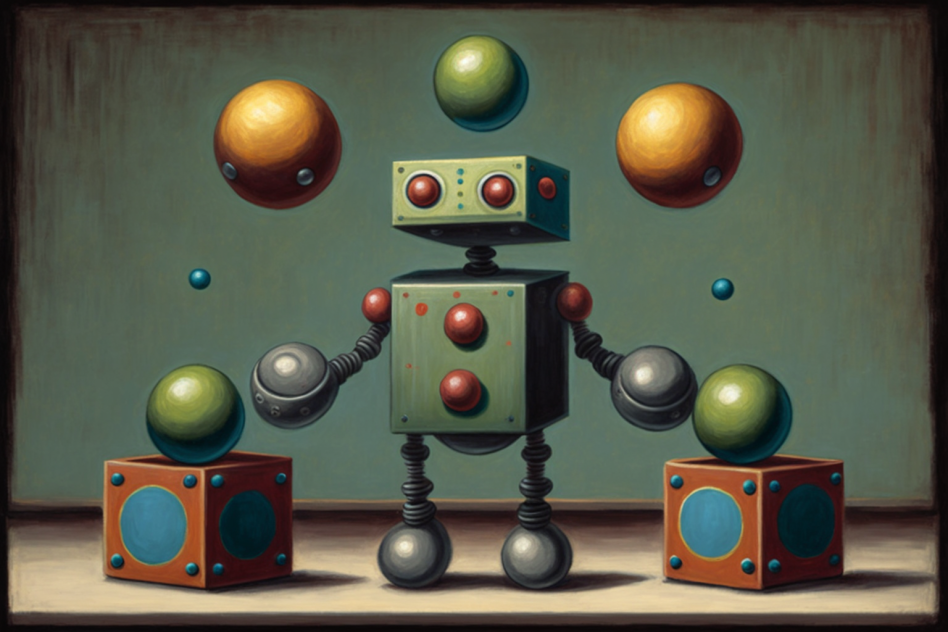

In this project, you will use Meta’s segment-anything, Hugging Face diffusers, and Gradio to create an app that can change the background, face, clothes, or anything else you select. The app needs only an image, a selected area, and a prompt.

Use case:

StableSAM is perfect for creative professionals in the digital marketing, design, and entertainment industries. It allows users to edit specific parts of images with precision, making it ideal for tasks such as changing backgrounds, altering outfits, or modifying objects in promotional materials. For hobbyists, it’s a fantastic tool for image customization without the need for advanced design skills.

Skills you'll learn:

- Implementing an image inpainting pipeline using Stable Diffusion

- Working with Meta’s Segment Anything Model (SAM) for image segmentation

- Creating an interactive web application with Gradio

- Using Hugging Face diffusers for model deployment

Create Stable Diffusion Inpainting Pipeline

We will create a Stable Diffusion inpainting pipeline using diffusers and the model weights available at stabilityai/stable-diffusion-2-inpainting on Hugging Face. After that, we will move it to CUDA for GPU acceleration.
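The loading step can be sketched as follows. This is a minimal sketch rather than the project's actual code: the helper names `pick_device_and_dtype` and `load_inpaint_pipeline` are made up for illustration, and the choice of half precision on GPU is an assumption that is common practice but not stated in the original.

```python
def pick_device_and_dtype(cuda_available: bool) -> tuple[str, str]:
    """Half precision on GPU to save memory; full precision on CPU (assumed policy)."""
    return ("cuda", "float16") if cuda_available else ("cpu", "float32")


def load_inpaint_pipeline(model_id: str = "stabilityai/stable-diffusion-2-inpainting"):
    """Download the inpainting weights and move the pipeline to the best device."""
    # Heavy imports live inside the function so that importing this file stays cheap.
    import torch
    from diffusers import StableDiffusionInpaintPipeline

    device, dtype_name = pick_device_and_dtype(torch.cuda.is_available())
    pipe = StableDiffusionInpaintPipeline.from_pretrained(
        model_id, torch_dtype=getattr(torch, dtype_name)
    )
    return pipe.to(device)
```

Calling `load_inpaint_pipeline()` once at app start-up and reusing the returned object avoids reloading the weights on every request.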

Defining Image Mask and Inpainting Function

The image mask function is created using the SAM predictor. It takes an image, the selected image section, and an is_backgroud boolean value, and returns a masked image and a segmentation.

After that, the inpainting function uses the Stable Diffusion inpainting pipeline to change the selected parts of the image. The pipeline requires the input image, masked image, segmented image, prompt text, and negative prompt text.
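The two functions described above might look roughly like this. It is a sketch under assumptions: `predictor` is taken to be a `segment_anything.SamPredictor`, `pipe` a diffusers inpainting pipeline, and the background flag is assumed to invert the mask. The function names are hypothetical, and the segmented image (used by the ControlNet variant) is left out for brevity.

```python
def segment_at_point(predictor, image, x, y, is_background=False):
    """Return a boolean mask for the object under pixel (x, y).

    `predictor` is assumed to be a segment_anything.SamPredictor and
    `image` an RGB uint8 array of shape (H, W, 3).
    """
    import numpy as np

    predictor.set_image(image)
    masks, _scores, _logits = predictor.predict(
        point_coords=np.array([[x, y]]),
        point_labels=np.array([1]),  # 1 marks a foreground click
        multimask_output=False,
    )
    mask = masks[0]
    # Assumption: the background flag flips the mask so that everything
    # except the clicked object is repainted.
    return np.logical_not(mask) if is_background else mask


def inpaint(pipe, image, mask_image, prompt, negative_prompt=""):
    """Repaint the white region of `mask_image` according to `prompt`."""
    result = pipe(
        prompt=prompt,
        negative_prompt=negative_prompt,
        image=image,
        mask_image=mask_image,
    )
    return result.images[0]
```

The boolean mask has to be converted to an image (for example with PIL) before it is passed as `mask_image`.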

Creating Gradio UI

You will create a row and add three image blocks. For a minimum viable product, you have to add another row with a submit button. After that, you have to modify the input image object so that it can select pixels and generate the mask and segmentation, and then attach an action to the submit button that runs the inpainting function.
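A layout along these lines could be wired up as below. Treat it as a sketch: `record_click`, `build_demo`, `mask_fn`, and `inpaint_fn` are hypothetical names, the prompt textbox is an addition for illustration, and the event API shown (`.select` with `gr.SelectData`) assumes a reasonably recent Gradio release.

```python
def record_click(points, index):
    """Return a new list with the clicked (x, y) pixel appended."""
    return points + [(int(index[0]), int(index[1]))]


def build_demo(mask_fn, inpaint_fn):
    """Assemble the UI; mask_fn and inpaint_fn are supplied by the caller."""
    import gradio as gr  # imported lazily so the helper above has no dependencies

    with gr.Blocks() as demo:
        points = gr.State([])
        with gr.Row():  # three image blocks side by side
            input_img = gr.Image(label="Input")
            mask_img = gr.Image(label="Mask")
            output_img = gr.Image(label="Output")
        with gr.Row():  # prompt and submit button
            prompt = gr.Textbox(label="Prompt")
            submit = gr.Button("Submit")

        def on_select(image, pts, evt: gr.SelectData):
            # evt.index holds the clicked pixel coordinates for image components
            pts = record_click(pts, evt.index)
            return mask_fn(image, pts), pts

        input_img.select(on_select, [input_img, points], [mask_img, points])
        submit.click(inpaint_fn, [input_img, mask_img, prompt], output_img)
    return demo
```

Calling `build_demo(my_mask_fn, my_inpaint_fn).launch()` would start the app locally.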

Gradio is quite easy to learn. You can learn everything by reading the Gradio Docs.

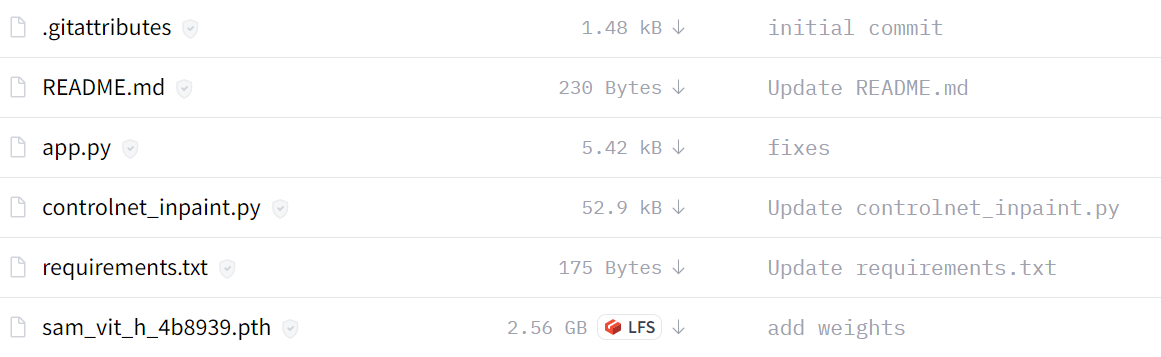

Improved Version of StableSAM

The improved version of StableSAM is available on Hugging Face. It includes a customized inpainting pipeline that uses ControlNet, and it uses the Runway ML Stable Diffusion inpainting model instead of the Stability AI one.

As you can see, the final version app looks clean with a segmentation block, clean button, background option, and negative prompt.

Resources:

- Hugging Face Demo: StableSAM

- Youtube Tutorial: Stable Diffusion Inpainting with Segment Anything Model (SAM)

2. Alpaca-LoRA: Build ChatGPT-like Chatbot with Minimal Resources

Alpaca-LoRA provides all of the necessary components for you to create your own specialized ChatGPT-like chatbot using a single GPU. In this project, we will look at the initial setup, training, the inference script, and a native client for running inference on the CPU.

Use case:

This project is excellent for those interested in building customized conversational AI systems. Whether you’re creating a customer service chatbot for a small business or developing a personal assistant, Alpaca-LoRA provides an efficient way to train a specialized chatbot using limited computational resources. This project is ideal for experimenting with language model fine-tuning on a budget.

Skills you'll learn:

- Fine-tuning language models with LoRA on a single GPU

- Setting up and using the Stanford Alpaca dataset

- Running inference scripts on low-resource environments (e.g., Raspberry Pi)

- Deploying a Gradio-based chatbot interface

- Understanding how large language models like LLaMA are trained and optimized

Local Setup

- Clone repository: tloen/alpaca-lora

- Install dependencies using pip install -r requirements.txt

- If bitsandbytes doesn't work, install it from the source.

Training

In this part, we will look at the fine-tuning script that you can run on the LLaMA model using the cleaned Stanford Alpaca dataset.

You can look at the repository to tweak the hyperparameters for better performance.
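To get a feel for why LoRA fine-tuning fits on a single GPU, it helps to count how many parameters are actually trained. The arithmetic below is a back-of-the-envelope sketch: the rank of 8 and the choice to adapt only the query and value projections are assumptions for illustration, so check the repository's finetune.py for the values it really uses.

```python
def lora_trainable_params(d_in, d_out, rank, n_matrices):
    """Each adapted d_out x d_in weight gains two low-rank factors:
    A (rank x d_in) and B (d_out x rank)."""
    return n_matrices * rank * (d_in + d_out)


hidden = 4096          # LLaMA-7B hidden size
layers = 32            # LLaMA-7B transformer blocks
rank = 8               # assumed LoRA rank
adapted_per_layer = 2  # assumed: query and value projections only

trainable = lora_trainable_params(hidden, hidden, rank, layers * adapted_per_layer)
print(f"{trainable:,} trainable parameters")  # 4,194,304 trainable parameters
```

Under these assumptions, roughly 4.2 million weights are updated, which is well under 0.1% of the 7B base model. The frozen base weights still have to fit in memory, which is why 8-bit loading matters.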

python finetune.py \

--base_model 'decapoda-research/llama-7b-hf' \

--data_path 'yahma/alpaca-cleaned' \

--output_dir './lora-alpaca'

Inference

The inference script reads the foundation LLaMA model from Hugging Face and loads LoRA weights to run a Gradio interface.

python generate.py \

--load_8bit \

--base_model 'decapoda-research/llama-7b-hf' \

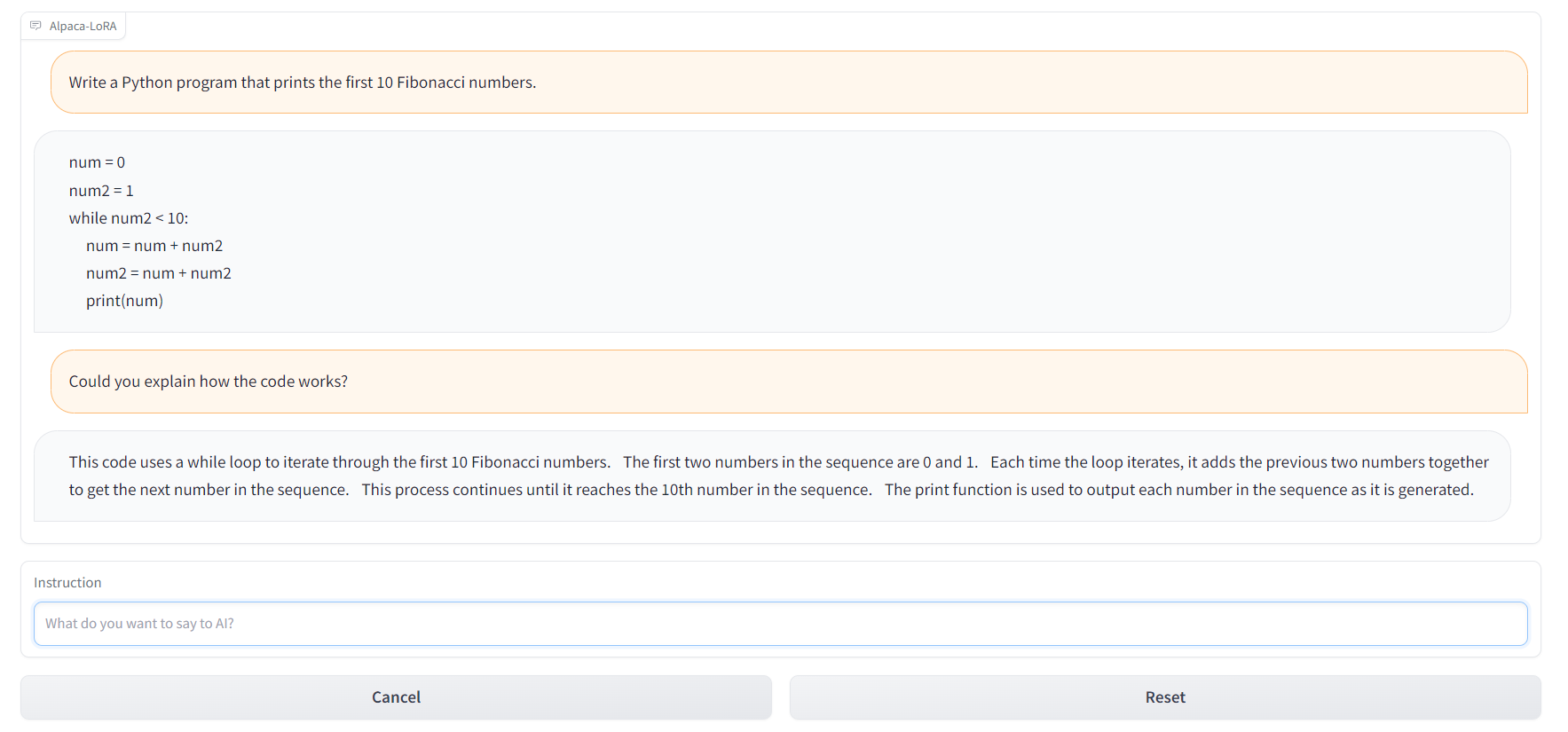

--lora_weights 'tloen/alpaca-lora-7b'You can also use alpaca.cpp for running alpaca models on CPU or 4GB RAM Raspberry Pi 4. Furthermore, you can use Alpaca-LoRA-Serve to create a ChatGPT-style interface, as shown below.
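For readers who want to see what the inference step involves, here is a rough sketch of loading the base model with the LoRA weights and building an Alpaca-style prompt. The function names are hypothetical, and the `load_in_8bit` keyword reflects older transformers releases (newer ones prefer a quantization config), so treat the loader as an assumption about your installed versions.

```python
ALPACA_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)
ALPACA_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def build_prompt(instruction, input_text=None):
    """Format a request in the Alpaca instruction template."""
    if input_text:
        return ALPACA_WITH_INPUT.format(instruction=instruction, input=input_text)
    return ALPACA_NO_INPUT.format(instruction=instruction)


def load_model(base_model, lora_weights, load_8bit=True):
    """Load the frozen base model, then attach the LoRA adapter on top."""
    import torch
    from peft import PeftModel
    from transformers import LlamaForCausalLM, LlamaTokenizer

    tokenizer = LlamaTokenizer.from_pretrained(base_model)
    model = LlamaForCausalLM.from_pretrained(
        base_model, load_in_8bit=load_8bit, torch_dtype=torch.float16, device_map="auto"
    )
    model = PeftModel.from_pretrained(model, lora_weights, torch_dtype=torch.float16)
    return tokenizer, model.eval()
```

The prompt returned by `build_prompt("Tell me about alpacas.")` is what gets tokenized and passed to the model's `generate` method.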

Resources:

- GitHub: tloen/alpaca-lora

- Model Card: tloen/alpaca-lora-7b

- Hugging Face Demo: Alpaca-LoRA-Serve

- ChatGPT-style interface: Alpaca-LoRA-Serve

- Alpaca Dataset: AlpacaDataCleaned

3. Automating PDF Interaction with LangChain and ChatGPT

Create your own ChatPDF clone using LangChain’s PDF loader, OpenAI Embeddings, and GPT-3.5. You will create a chatbot that can communicate with your book, legal documentation, and other important PDF documents.

Use case:

This project is useful for automating document-based workflows, such as analyzing research papers, legal contracts, or financial reports. You can build a chatbot that answers specific questions about PDF documents, which can be a powerful tool in industries like law, academia, or business intelligence, where processing large amounts of text is essential.

Skills you'll learn:

- Loading and processing PDF documents using LangChain’s PyPDFLoader

- Creating embeddings and vectorizing document data with OpenAIEmbeddings

- Building a vector database for document querying with Chroma

- Integrating ChatGPT with vectorized data for question-answering

- Developing a simple PDF chatbot interface using Gradio

Loading the document

We will use the LangChain document loader to load PDFs and read the contents.

from langchain.document_loaders import PyPDFLoader

pdf_path = "./paper.pdf"

loader = PyPDFLoader(pdf_path)

pages = loader.load_and_split()

print(pages[0].page_content)

Creating embeddings and Vectorization

We will create embeddings using OpenAIEmbeddings class from LangChain API. After that, pass these embeddings to the Chroma class to create a vector database for the PDF document.
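Conceptually, a vector database stores one embedding per chunk of text and returns the chunks whose embeddings are closest to the query embedding. The toy example below illustrates that idea with hand-written three-dimensional vectors and cosine similarity. It is not how Chroma is implemented, and the texts and vectors are invented for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=2):
    """Return the k texts whose vectors are most similar to the query."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _vec in ranked[:k]]


# Made-up "embeddings"; real ones have hundreds or thousands of dimensions.
store = [
    ("page about video models", [0.9, 0.1, 0.0]),
    ("page about datasets", [0.1, 0.9, 0.0]),
    ("acknowledgements", [0.0, 0.1, 0.9]),
]
print(top_k([1.0, 0.2, 0.0], store, k=1))  # ['page about video models']
```

In the real pipeline, OpenAIEmbeddings produces the vectors and Chroma handles storage and search.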

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import Chroma

embeddings = OpenAIEmbeddings()

vectordb = Chroma.from_documents(pages, embedding=embeddings, persist_directory=".")

vectordb.persist()

Querying the PDF

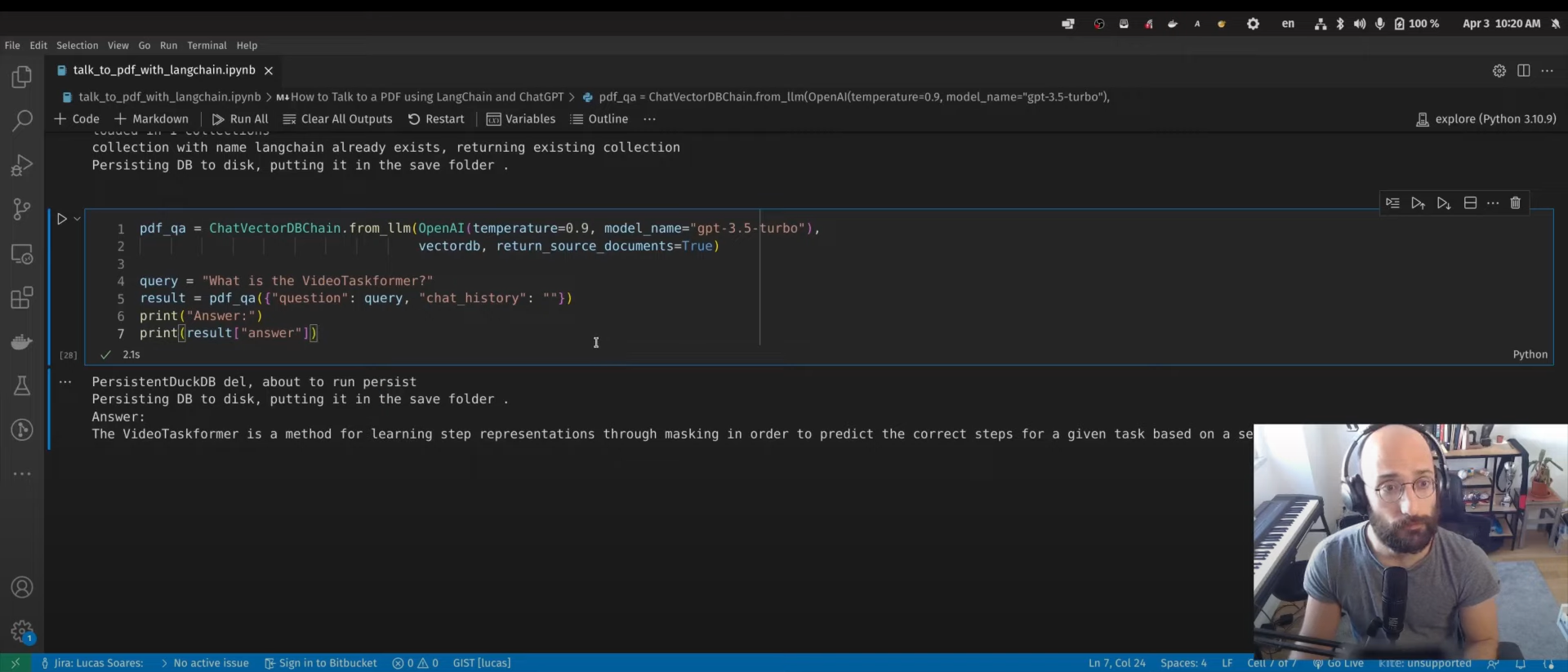

We will use the ChatVectorDBChain class to interact with ChatGPT using a generated vector database.

from langchain.chains import ChatVectorDBChain

from langchain.llms import OpenAI

pdf_qa = ChatVectorDBChain.from_llm(

OpenAI(temperature=0.9, model_name="gpt-3.5-turbo"),

vectordb,

return_source_documents=True,

)

query = "What is the VideoTaskformer?"

result = pdf_qa({"question": query, "chat_history": []})

print("Answer:")

print(result["answer"])

The next step is not mentioned in the video tutorial: you can use the Gradio framework to create a web app and share it with your colleagues and friends.
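A Gradio front end for the chain could be sketched as follows. This is one possible layout, not code from the tutorial: `answer` and `build_demo` are hypothetical names, `qa_chain` is assumed to behave like the `pdf_qa` object above, and the chatbot history format shown (question and answer pairs) depends on your Gradio version.

```python
def answer(qa_chain, question, history):
    """Ask the chain a question and return the extended chat history."""
    result = qa_chain({"question": question, "chat_history": history})
    return history + [(question, result["answer"])]


def build_demo(qa_chain):
    """Wrap the question-answering chain in a simple chat interface."""
    import gradio as gr  # imported lazily; only needed when the UI is built

    with gr.Blocks() as demo:
        chatbot = gr.Chatbot()
        box = gr.Textbox(placeholder="Ask a question about the PDF")

        def on_submit(question, history):
            # Clear the textbox and show the updated conversation.
            return "", answer(qa_chain, question, history or [])

        box.submit(on_submit, [box, chatbot], [box, chatbot])
    return demo
```

Running `build_demo(pdf_qa).launch(share=True)` would produce a temporary public link that you can send to others.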

Resources:

- Youtube Tutorial: How to Talk to a PDF using LangChain and ChatGPT

- Blog with Code Source: Automating PDF Interaction with LangChain and ChatGPT

- Also, check out: Talk to your CSV & Excel with LangChain

4. Bing-GPT Voice Assistant

Build your own AI-powered personal assistant, just like J.A.R.V.I.S. For that, you will need the OpenAI API, a text-to-speech library, a speech recognition library, and a generative AI model.

Use case:

The Bing-GPT Voice Assistant is ideal for building voice-controlled AI personal assistants that can handle tasks like setting reminders, answering questions, or controlling smart home devices. This project can also be expanded to automate businesses, create voice-based customer service solutions, or build accessible AI tools for people with disabilities.

Skills you'll learn:

- Using OpenAI’s API for generating chatbot responses

- Implementing speech synthesis and recognition using Whisper and Polly

- Setting up wake word detection for voice-based interactions

- Building a hybrid AI assistant using EdgeGPT and ChatGPT

- Integrating text-to-speech and voice recognition technologies in Python

After loading the required libraries, you have to provide the OpenAI API key:

openai.api_key = "[paste your OpenAI API key here]"

Wake word

Create a wake word function to activate the AI. In this case, the developer is using “bing” or “gpt”.
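A wake word check can be as simple as looking for the keyword in the transcribed phrase. The version below is a minimal sketch with a hypothetical function name. Matching on whole words, so that "bingo" does not trigger "bing", is a design choice made here rather than something the original specifies.

```python
import re

WAKE_WORDS = ("bing", "gpt")


def get_wake_word(phrase):
    """Return the wake word found in the phrase, or None if there is none.

    If both are present, the one listed first in WAKE_WORDS wins.
    """
    words = re.findall(r"[a-z]+", phrase.lower())
    for wake in WAKE_WORDS:
        if wake in words:
            return wake
    return None


print(get_wake_word("Hey Bing, what's the weather?"))  # bing
print(get_wake_word("Just talking to myself"))         # None
```

The returned value can then decide which backend handles the rest of the conversation.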

Speech Synthesis

The speech synthesis function provides text-to-speech inference. You can access Amazon Polly (text-to-speech) through boto3 and play the audio using pydub.
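The synthesis step might be sketched like this. It assumes AWS credentials and a region are already configured for boto3, that an audio backend for pydub playback is installed, and that the "Joanna" voice is acceptable. The function names are hypothetical.

```python
import io


def synthesize(polly_client, text, voice_id="Joanna"):
    """Ask Polly for MP3 audio of `text` and return the raw bytes."""
    response = polly_client.synthesize_speech(
        Text=text, OutputFormat="mp3", VoiceId=voice_id
    )
    return response["AudioStream"].read()


def speak(text):
    """Synthesize `text` with Polly and play it through the speakers."""
    import boto3
    from pydub import AudioSegment
    from pydub.playback import play

    audio_bytes = synthesize(boto3.client("polly"), text)
    play(AudioSegment.from_file(io.BytesIO(audio_bytes), format="mp3"))
```

Separating `synthesize` from `speak` keeps the network call testable without audio hardware.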

Transcription with Whisper

The speech recognition is done by openai/whisper. You just have to figure out the API to add it to your application.
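With the open-source whisper package, transcription comes down to two calls. The sketch below assumes the package is installed locally and that the audio has already been saved to a file. The function names and the "base" model size are illustrative choices.

```python
def load_whisper(size="base"):
    """Load a Whisper checkpoint; smaller sizes are faster but less accurate."""
    import whisper  # imported lazily; pulls in PyTorch

    return whisper.load_model(size)


def transcribe(model, audio_path):
    """Return the recognized text for an audio file.

    `model` is assumed to be the object returned by whisper.load_model().
    """
    result = model.transcribe(audio_path)
    return result["text"].strip()
```

A typical loop records a short clip from the microphone, saves it to disk, and passes the path to `transcribe(load_whisper(), path)`.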

ChatBot with EdgeGPT

Finally, you will use acheong08/EdgeGPT and the OpenAI API to create a chatbot. If the user says the wake word “bing”, the assistant uses the EdgeGPT model; otherwise, it uses the ChatGPT model.

If you are looking for a much simpler implementation of Voice Assistant, check out OpenAI Whisper, ChatGPT, TTS, and Gradio Web UI.

Resources:

- Youtube Tutorial: Create a ChatGPT & Bing Voice Assistant in 7 Minutes

- GitHub: Ai-Austin/Bing-GPT-Voice-Assistant

- Alternative Solution: youtube-stuffs/voiceGPT.ipynb

5. An End-to-End Data Science Project

In this project, we will use ChatGPT to build an end-to-end loan approval classifier application. All we need is access to the ChatGPT interface and a personal machine to run the code.

Use case:

This project is designed for aspiring data scientists who want to build a fully functional machine learning pipeline using AI. You’ll learn how to use ChatGPT to plan, execute, and deploy a machine learning model, making it an excellent addition to any data science portfolio. Use this project to predict loan approvals, churn prediction, or fraud detection.

Skills you'll learn:

- Using ChatGPT to assist with exploratory data analysis (EDA)

- Automating feature engineering and data preprocessing tasks

- Building and tuning machine learning models with ChatGPT’s assistance

- Creating a machine learning web app using Gradio

Project Planning

In the planning phase, we will describe the dataset and what we want from it. Sometimes the answers are not perfect, but you can tweak the response by providing follow-up prompts.

After that, we will start following the brief plan.

Exploratory Data Analysis (EDA)

We will ask ChatGPT to generate Python code that will load the dataset and perform exploratory data analysis with various visualization techniques. You can even ask it to interpret the results.

Feature Engineering

We asked ChatGPT to write feature engineering code, and it created two new features from existing ones, which suggests that it picked up on the structure of the dataset.

Preprocessing and Balancing the Data

We have an imbalanced dataset, and in this part, we will use ChatGPT to generate preprocessing and class balancing code.
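The original does not say which balancing technique ChatGPT chose, so the example below shows random oversampling, one common option, in plain Python. The function name and the toy data are made up for illustration. In practice, a library such as imbalanced-learn would usually be used instead.

```python
import random
from collections import Counter


def random_oversample(rows, labels, seed=0):
    """Duplicate minority-class rows at random until every class
    has as many rows as the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for label, count in counts.items():
        pool = [r for r, l in zip(rows, labels) if l == label]
        for _ in range(target - count):
            out_rows.append(rng.choice(pool))
            out_labels.append(label)
    return out_rows, out_labels


# Toy data: four rejected loans (0) and one approved loan (1).
rows = [[1], [2], [3], [4], [5]]
labels = [0, 0, 0, 0, 1]
balanced_rows, balanced_labels = random_oversample(rows, labels)
print(Counter(balanced_labels))
```

Balancing should be applied only to the training split, so that the test set keeps the real class distribution.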

Model Selection

We have just asked ChatGPT to write the model selection code by specifying the machine-learning models. After running the code, we will select the best-performing model.
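Model selection code of this kind usually scores each candidate with cross-validation and keeps the winner. The sketch below assumes scikit-learn is installed. The two candidate models, the macro F1 metric, and the function names are illustrative choices, not the ones from the original project.

```python
def best_model(scores):
    """Return the name with the highest score; `scores` maps name -> mean CV score."""
    return max(scores, key=scores.get)


def compare_models(X, y, cv=5):
    """Cross-validate a few candidate classifiers and return their mean scores."""
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.linear_model import LogisticRegression
    from sklearn.model_selection import cross_val_score

    candidates = {
        "logistic_regression": LogisticRegression(max_iter=1000),
        "random_forest": RandomForestClassifier(random_state=0),
    }
    return {
        name: cross_val_score(model, X, y, cv=cv, scoring="f1_macro").mean()
        for name, model in candidates.items()
    }
```

With the training features `X` and labels `y` in hand, `best_model(compare_models(X, y))` gives the name of the candidate to carry forward.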

Hyperparameter Tuning and Model Evaluation

To improve the performance, we will ask ChatGPT to write Python code for hyperparameter tuning and model evaluation, and save the best-performing model.

Creating a Web App using Gradio

We will ask ChatGPT to write Gradio app code using the saved model and preprocessing. The AI understood the input features and output results. As a result, we got a fully functioning web app.

Deploying the Web App on Spaces

In the final step, we asked ChatGPT how to deploy the web app on Hugging Face Spaces. It provided a few steps that we can follow to deploy the app in a few minutes.

This project will also teach tips on writing effective prompts, which is becoming essential for every field.

Resources:

- DataCamp Tutorial: A Guide to Using ChatGPT For Data Science Projects

- Hugging Face Demo: Loan Classifier

Conclusion

This is just the start of what’s to come with generative AI models. The open-source community is working hard to develop tools that will help you build many kinds of AI applications. Some projects are even experimenting with autonomous agents as a step toward AGI (Artificial General Intelligence); check out Auto-GPT (an experimental open-source attempt to make GPT-4 fully autonomous) and babyagi (an AI-powered task management system).

In this post, we have covered the projects that can be understood by all levels and require fewer resources to get started. They all use open-source tools, models, datasets, and packages that are available for anyone to use.

If you're new to ChatGPT, consider taking an Introduction to ChatGPT course. Alternatively, if you're already familiar with generative AI, you can improve your prompting skills by reviewing the comprehensive ChatGPT Cheat Sheet for Data Science, or by checking out the following resources:

- Get started with ChatGPT in our Introduction to ChatGPT course.

- Download this handy reference cheat sheet of ChatGPT prompts for data science.

- Listen to this podcast episode on How ChatGPT and GPT-3 Are Augmenting Workflows to understand how ChatGPT can benefit your business.

- Tutorial on the Whisper API

- Start learning AI

FAQs

Can I complete these generative AI projects if I’m new to machine learning and AI?

Absolutely! While some of the projects may involve advanced tools and techniques, the step-by-step instructions provided in the blog post will guide you through the process. You don’t need to be an expert to get started, and many tools like Gradio, Hugging Face, and LangChain are designed to be beginner-friendly. Plus, these projects will help you build a strong foundation in AI, making it easier to tackle more complex topics in the future.

Do I need a high-end GPU to run these generative AI projects?

Not necessarily. Some projects, like Alpaca-LoRA, are designed to run on a single GPU or even a CPU, meaning you don’t need a high-end machine to complete them. For projects requiring more computational power, you can use cloud-based services like Google Colab, AWS, or Hugging Face Spaces, which provide access to GPUs for free or at low cost.

How can I showcase these AI projects in my portfolio to stand out in job applications?

These projects are perfect for showcasing in your portfolio as they highlight your ability to work with cutting-edge AI tools. When adding them to your portfolio, be sure to:

- Include detailed explanations of the projects, describing the problem you solved, the tools you used, and the final outcome.

- Share links to the live demos or GitHub repositories so potential employers can see your work in action.

- Emphasize any unique challenges you encountered and how you solved them, which demonstrates problem-solving and critical thinking skills.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.