
In machine learning, finding optimal hyperparameters during model training is a common obstacle, particularly when working with vast and complex datasets.

Conventional methods such as random search and grid search can be time-consuming and inefficient, especially when dealing with complex model architectures or large-scale data.

This is where a more intelligent and adaptive approach to hyperparameter optimization, such as Bayesian optimization, becomes critical.

The first goal of this article is to explain what Bayesian optimization is, providing the reader with a strong theoretical foundation. However, before diving into the Bayesian optimization concept, it is important to understand the different hyperparameter optimization methods at our disposal.

The second part of the article covers a more practical implementation of the key concepts using the Python programming language. In the last section, the reader will learn how to leverage Bayesian optimization for the hyperparameter tuning of a machine learning model.

What is Bayesian Optimization?

Before diving into the definition of Bayesian optimization, let’s have an overview of the four main hyperparameter optimization methods using simple examples:

- Manual search

- Random search

- Grid search, and

- Bayesian optimization.

Manual search

This is the most basic method based on experience, intuition, or trial and error. It is often a time-consuming approach and relies heavily on the expertise of the practitioner.

For instance, an experienced baker sets the oven temperature and baking time based on past experience and intuition.

A typical starting point might be a temperature of 350°F and a baking time of 30 minutes, reflecting commonly successful settings. Adjustments to these settings are made based on the outcome of each baking attempt.

Random search

This approach involves randomly selecting combinations of temperature and time within a specified range. For example, temperatures may vary between 325°F and 375°F and times between 25 and 35 minutes.

The selection process might begin at 330°F for 27 minutes, followed by 370°F for 31 minutes, continuing randomly until the most effective combination is identified.
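The baking analogy translates directly into code. The sketch below is a minimal illustration of random search, assuming a hypothetical `cake_quality` function that stands in for actually baking and tasting a cake (it simply peaks at 350°F and 30 minutes); the 20-trial budget and the seed are arbitrary choices.

```python
import random


def cake_quality(temp_f, minutes):
    """Hypothetical score (higher is better) that peaks at 350°F for 30 minutes."""
    return -((temp_f - 350) / 25) ** 2 - ((minutes - 30) / 5) ** 2


def random_search(n_trials=20, seed=0):
    """Try random (temperature, time) pairs and keep the best-scoring one."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        temp = rng.randint(325, 375)   # °F, inclusive range
        minutes = rng.randint(25, 35)  # minutes, inclusive range
        score = cake_quality(temp, minutes)
        if best is None or score > best[0]:
            best = (score, temp, minutes)
    return best


score, temp, minutes = random_search()
print(f"Best bake found: {temp}°F for {minutes} min (score {score:.3f})")
```

Each trial is independent of the previous ones: nothing learned from earlier bakes influences where the next sample lands.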

Grid search

This method involves systematically exploring multiple combinations of hyperparameter values.

Continuing with the same baking example, the baker creates a structured grid of temperature and time combinations on the wall.

The grid might include every 5°F increment between 325°F and 375°F and every 2 minutes from 25 to 35 minutes. The cake would then be baked at every single combination in this grid (325°F for 25 minutes, 325°F for 27 minutes, ... , 375°F for 35 minutes) to find the best combination.
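The same grid can be written down as a short sketch. It reuses a hypothetical `cake_quality` scoring function (an assumption standing in for a real bake) and enumerates every combination with `itertools.product`, exactly as the baker would.

```python
from itertools import product


def cake_quality(temp_f, minutes):
    """Hypothetical score (higher is better) that peaks at 350°F for 30 minutes."""
    return -((temp_f - 350) / 25) ** 2 - ((minutes - 30) / 5) ** 2


temperatures = range(325, 376, 5)  # 325, 330, ..., 375 °F
times = range(25, 36, 2)           # 25, 27, ..., 35 minutes

# Grid search: bake (i.e. evaluate) every single combination
grid = list(product(temperatures, times))
best_temp, best_time = max(grid, key=lambda combo: cake_quality(*combo))

print(f"Combinations baked: {len(grid)}")  # 11 temperatures x 6 times = 66
print(f"Best: {best_temp}°F for {best_time} min")
```

Even this small two-parameter grid requires 66 bakes, and the count multiplies with every additional hyperparameter.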

Bayesian optimization

Bayesian Optimization can be seen as a more intelligent approach to finding the best baking temperature and time for a cake, compared to random search.

Random Search might involve the baker trying a wide variety of temperature and time combinations at random, many of which may result in undercooked or overcooked cakes.

In Bayesian optimization, the baker's search for the optimal baking conditions is guided and informed by previous results.

For instance, if the baker finds that cakes baked at around 350°F for 30 minutes tend to be more successful, the baker will then focus more on temperature and time combinations close to these values in future baking attempts.

This method reduces the number of trials needed because it 'learns' which combinations are more likely to provide a perfectly baked cake based on the outcomes of previous baking sessions.

Overall, Bayesian optimization is a strategy, grounded in Bayes' theorem, for optimizing objective functions that are expensive to evaluate. It is particularly effective when sampling is costly and the objective function is unknown (a "black box") but can be sampled.

Bayesian optimization typically employs a probabilistic model, like a Gaussian Process, to estimate the objective function and then uses an acquisition function to decide where to sample next.
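To make these two ingredients concrete, here is a minimal one-dimensional sketch of the loop (oven temperature only). It assumes scikit-learn's `GaussianProcessRegressor` as the surrogate and Expected Improvement as the acquisition function; the `objective` is a hypothetical stand-in for an expensive experiment, and the kernel, the 3 + 10 evaluation budget, and the candidate grid are arbitrary choices. It illustrates the idea and is not the GPyOpt implementation used later in this article.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern


def objective(temp_f):
    """Hypothetical 'expensive' cake score to maximize; peaks at 352°F."""
    return -((temp_f - 352.0) / 10.0) ** 2


def expected_improvement(mu, sigma, best_y, xi=0.01):
    """Expected Improvement (maximization) from the surrogate's mean and std."""
    sigma = np.maximum(sigma, 1e-9)  # guard against division by zero
    z = (mu - best_y - xi) / sigma
    return (mu - best_y - xi) * norm.cdf(z) + sigma * norm.pdf(z)


rng = np.random.default_rng(0)
candidates = np.linspace(325, 375, 501).reshape(-1, 1)  # search space, °F

# Start from a few random evaluations of the objective
X = rng.uniform(325, 375, size=(3, 1))
y = objective(X).ravel()

for _ in range(10):
    # 1. Fit the probabilistic surrogate (a Gaussian Process) to all data so far
    gp = GaussianProcessRegressor(kernel=Matern(length_scale=10.0, nu=2.5),
                                  alpha=1e-6, normalize_y=True)
    gp.fit(X, y)
    # 2. Let the acquisition function score every candidate
    mu, sigma = gp.predict(candidates, return_std=True)
    ei = expected_improvement(mu, sigma, y.max())
    # 3. Spend one expensive evaluation on the most promising candidate
    x_next = candidates[np.argmax(ei)].reshape(1, 1)
    X = np.vstack([X, x_next])
    y = np.append(y, objective(x_next).ravel())

best = X[np.argmax(y), 0]
print(f"Best temperature found: {best:.1f}°F after {len(y)} evaluations")
```

The surrogate is cheap to query at all 501 candidates, so the only expensive calls are the 13 evaluations of `objective`; that trade-off is what makes the approach attractive when each evaluation costs a real bake or a full model training run.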

Challenges of Bayesian Optimization

This method has been successfully applied in multiple domains. However, it also presents some challenges; the main ones are described below:

Batch / Parallel Evaluation

One challenge is efficiently conducting batch or parallel evaluations. Bayesian optimization traditionally operates in a sequential manner, selecting one point at a time to evaluate. Extending this to batch settings, where multiple points are evaluated simultaneously, is non-trivial and requires careful balancing to ensure diversity and efficiency in the chosen points.

Non-myopic Optimization

This refers to the challenge of making long-term, strategic decisions about which points to evaluate. Non-myopic methods consider the future impact of current evaluations, which can be computationally intensive and complex to model.

The Dimensionality of Space

As the dimensionality of the search space increases, Bayesian optimization can become less effective. This is due to the 'curse of dimensionality', where the volume of the space increases exponentially, making it harder to find optima with a limited number of evaluations.
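A quick calculation makes the "curse of dimensionality" concrete. Assuming 10 candidate values per hyperparameter, a full grid needs 10^d points, while a budget of expensive evaluations (50 here, an arbitrary assumption) stays fixed. The six-dimension row matches the number of XGBoost hyperparameters tuned later in this article.

```python
def grid_points(n_dims, values_per_dim=10):
    """Number of points in a full grid with values_per_dim values per dimension."""
    return values_per_dim ** n_dims


budget = 50  # hypothetical number of affordable evaluations
for d in (1, 2, 3, 6, 10):
    n = grid_points(d)
    coverage = min(1.0, budget / n)
    print(f"{d:>2} dims: {n:>14,} grid points, budget covers {coverage:.1e} of them")
```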

Structured Search Space

In many real-world problems, the search space is not a simple, continuous n-dimensional space but has a complex structure, such as dependencies between variables or constraints. Handling these structured spaces effectively is a significant challenge.

Multi-task/Objective Search, Multi-fidelity Search

Extending Bayesian optimization to handle multiple objectives simultaneously or to integrate information from evaluations of varying fidelity (accuracy) is complex. These scenarios require balancing trade-offs between different objectives or different levels of detail in the evaluations.

Warm-start Search

This involves utilizing prior knowledge or data to accelerate the optimization process. Effectively incorporating this information into the Bayesian framework without biasing the results is challenging.

Large Number of Evaluations

While Bayesian optimization is designed for scenarios where evaluations are expensive, some problems still require a large number of evaluations. This strains the method itself: fitting a standard Gaussian Process surrogate scales cubically with the number of observations, so each iteration becomes slower as data accumulates, increasing both computation time and resource requirements.

Nasty Objective Functions

Dealing with objective functions that have many local optima, discontinuities, or non-stationarity (where the function changes over time) is difficult. These functions can mislead the optimization process and make finding a global optimum challenging.

Indirect Observation

In some scenarios, the objective function cannot be observed directly, but only through indirect measurements. This adds a layer of complexity as the optimization algorithm must infer the actual objective function from these indirect observations.

Acquisition Functions and Decision-Making

In Bayesian optimization, acquisition functions are used to guide the search for the optimum values.

Acquisition functions and surrogate models are interdependent in Bayesian optimization.

The surrogate model provides a probabilistic prediction of the objective function, which the acquisition function then uses to determine the most promising points to evaluate next. Also, surrogate models like Gaussian Process Regression are particularly useful when the objective function is complex or expensive to evaluate directly.

Furthermore, by continuously updating the surrogate model with new data points, the acquisition function can be more accurately directed toward the areas of the search space that are most likely to yield the best results.

Several acquisition functions can be used in Bayesian optimization, including:

- Thompson Sampling: this function draws a random sample of the objective function from the surrogate model's posterior distribution and selects the point that optimizes that sample. Because the sample reflects the model's uncertainty, the approach naturally balances exploration and exploitation.

- Upper Confidence Bound (UCB): this function selects the point with the highest optimistic estimate of the objective, computed as the predicted mean plus a multiple of the predicted standard deviation (for maximization). The multiplier controls the trade-off between exploiting promising regions and exploring uncertain ones.

- Probability of Improvement (PI): used to select the point that has the highest probability of improving on the best-observed value so far.

- Expected Improvement (EI): used to select the point with the highest expected improvement over the current best-observed value.

There is no best or worst function. Each of these acquisition functions has its strengths and is chosen based on the specific requirements of the optimization problem at hand.
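The four acquisition functions can be compared side by side in a short sketch. It assumes a maximization problem, uses common default values for the exploration parameters `xi` and `kappa`, and feeds in made-up surrogate predictions for three candidate points; the Thompson sample uses a diagonal covariance as a simplification of a real GP posterior.

```python
import numpy as np
from scipy.stats import norm


def probability_of_improvement(mu, sigma, best_y, xi=0.01):
    """PI (maximization): probability that f(x) exceeds best_y + xi."""
    sigma = np.maximum(sigma, 1e-12)
    return norm.cdf((mu - best_y - xi) / sigma)


def expected_improvement(mu, sigma, best_y, xi=0.01):
    """EI (maximization): E[max(f(x) - best_y - xi, 0)] under the surrogate."""
    sigma = np.maximum(sigma, 1e-12)
    z = (mu - best_y - xi) / sigma
    return (mu - best_y - xi) * norm.cdf(z) + sigma * norm.pdf(z)


def upper_confidence_bound(mu, sigma, kappa=2.0):
    """UCB (maximization): optimistic estimate, mean plus kappa standard deviations."""
    return mu + kappa * sigma


def thompson_sample(mu, cov, rng):
    """Thompson sampling: draw one plausible objective from the posterior."""
    return rng.multivariate_normal(mu, cov)


# Hypothetical surrogate predictions at three candidate points
mu = np.array([0.80, 0.70, 0.50])     # predicted mean
sigma = np.array([0.01, 0.10, 0.40])  # predicted standard deviation
best_y = 0.78                         # best value observed so far

pi = probability_of_improvement(mu, sigma, best_y)
ei = expected_improvement(mu, sigma, best_y)
ucb = upper_confidence_bound(mu, sigma)
# Simplification: a diagonal covariance ignores correlations between points
sample = thompson_sample(mu, np.diag(sigma ** 2), np.random.default_rng(0))

for name, scores in [("PI", pi), ("EI", ei), ("UCB", ucb), ("Thompson", sample)]:
    print(f"{name:>8}: picks candidate {np.argmax(scores)}, scores {np.round(scores, 3)}")
```

With these numbers, PI favors candidate 0, whose mean is just above the incumbent but whose uncertainty is tiny, while EI and UCB both favor candidate 2, whose large uncertainty leaves room for a much bigger improvement. This is why PI is often described as the more exploitative choice.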

Applications of Bayesian Optimization

Bayesian optimization has been successfully applied in many industries, and this section focuses on its illustration in four use cases:

- Natural Language Processing

- Machine Learning and hyperparameter tuning

- A/B testing, and

- Reinforcement learning and robotics

These examples are particularly relevant and informative for Data Scientists.

Natural Language Processing

When applying Machine Learning to Natural Language Processing problems, there are multiple choices to make about the representation of input texts, and these choices can affect the end result of the underlying model.

Bayesian optimization is used to automate the process of choosing the best way to represent text in NLP models, thereby simplifying the model development process and making it more efficient, while maintaining or even improving the overall performance of these models.

To learn more about embeddings, our course Introduction to Embeddings with OpenAI API provides a complete guide to using OpenAI API to create embeddings.

Machine Learning and hyperparameter tuning

Bayesian optimization in automatic machine learning (AutoML) automates the selection of the best model and its hyperparameters for a given problem on a given dataset.

Recent applications of Bayesian optimization have shown its effectiveness in tuning complex models like deep belief networks, convolutional neural networks, and Markov chain Monte Carlo methods.

Furthermore, it has been beneficial in automating the selection process among models offered by popular machine learning libraries like WEKA and scikit-learn.

A/B Testing

In A/B Testing, Bayesian Optimization is used to enhance the efficiency of testing different product configurations, like ads, apps, games, or websites.

By presenting two options (A and B) to a small subset of users, developers collect feedback to optimize various metrics such as user engagement or click-through rates. The key challenge is to identify the best product configuration within a limited budget of user queries.

Bayesian Optimization facilitates this process by using feedback from earlier tests to make informed decisions about which user subsets to query next, aiming to optimize the overall performance of the product while minimizing opportunity costs.

Reinforcement Learning and Robotics

Bayesian Optimization has been effectively applied in both Robotics and Reinforcement Learning.

In robotics, it has been used to optimize a robot's gait for specific objectives like velocity or smoothness, as demonstrated with the Sony AIBO ERS-7 robot. It has also been employed in navigational tasks, helping robots minimize uncertainty about their location and map estimates while moving through environments.

Bayesian optimization has been applied in hierarchical reinforcement learning to automatically tune parameters of neural network policies and learn value functions at various levels of a hierarchical system.

Additionally, the technique has been applied to develop attention policies in image tracking using deep networks, showcasing its versatility in adapting to different aspects of learning and decision-making in complex environments.

A Step-by-Step Guide To Implementing Bayesian Optimization

The previous sections provided a comprehensive understanding of Bayesian optimization. This section demonstrates how to optimize the hyperparameters of an XGBRegressor using the GPyOpt library.

PyMC3 is another powerful Python library for Bayesian methods, although it focuses on Bayesian modeling and inference rather than Bayesian optimization; our course Bayesian Data Analysis in Python provides a complete guide along with some real-world examples.

For those interested in applying Bayesian methods using the R programming language, our course Fundamentals of Bayesian Data Analysis in R is the right fit.

Two methods are applied: Random search and Bayesian optimization. The goal is to identify which one provides the optimal hyperparameters, leading to a better model. The complete source code is available in this DataLab workbook; you can create your own workbook copy to edit and run the code in the browser without installing anything on your computer.

Prerequisites

The following libraries are necessary to successfully execute both techniques.

- numpy: a library for numerical computing in Python.

- scipy: a library for scientific computing in Python.

- sklearn: a library for machine learning in Python.

- GPyOpt: a library for Bayesian optimization in Python.

- xgboost: a library for gradient boosting in Python, providing the XGBRegressor model.

These libraries can be installed from a Jupyter notebook using the pip command as follows:

!pip -q install xgboost scikit-learn GPyOpt numpy scipy

After successfully running the previous code, the imports are performed using the import statement:

import xgboost as xgb

from sklearn.datasets import fetch_california_housing as fch

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import RandomizedSearchCV

from GPyOpt.methods import BayesianOptimization

from sklearn.model_selection import cross_val_score

import numpy as np

Dataset preparation

The benchmarking analysis is based on the California housing dataset.

- 70% of the data is used for training the XGBoost model

- The remaining 30% is used for testing

The training and testing split is described below:

# Load dataset

california_housing = fch()

X = california_housing.data

y = california_housing.target

# Split the dataset into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=2024)

After loading the training and testing data, we can check their sizes using the .shape attribute.

print(f"Training Shape: {X_train.shape}")

print(f"Testing Shape: {X_test.shape}")

Hyperparameter tuning with Random Search

This section explains how to set up and execute hyperparameter tuning with the random search approach for an XGBoost regression model, using the RandomizedSearchCV class from scikit-learn.

Set up the parameter grid

A dictionary named param_dist is created with different hyperparameters as keys and lists of their possible values as corresponding values. These hyperparameters include:

- max_depth: Maximum depth of a tree. Values: 3, 10, 5, 15.

- min_child_weight: Minimum sum of instance weight (hessian) needed in a child. Values: 1, 5, 10.

- subsample: Subsample ratio of the training instances. Values: 0.5, 0.7, 1.0.

- colsample_bytree: Subsample ratio of columns when constructing each tree. Values: 0.5, 0.7, 1.0.

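- n_estimators: Number of gradient boosted trees, equivalent to the number of boosting rounds. Values: 100, 200, 300, 400.

- learning_rate: Step size shrinkage applied to each new tree's contribution. Values: 0.01, 0.05, 0.1, 0.15, 0.2.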

param_dist = {

'max_depth': [3, 10, 5, 15],

'min_child_weight': [1, 5, 10],

'subsample': [0.5, 0.7, 1.0],

'colsample_bytree': [0.5, 0.7, 1.0],

'n_estimators': [100, 200, 300, 400],

'learning_rate': [0.01, 0.05, 0.1, 0.15, 0.2]

}

Initialize XGBoost Regressor

An instance of the XGBRegressor class from the XGBoost library is created:

xgb_reg = xgb.XGBRegressor()

Configure the RandomizedSearchCV

RandomizedSearchCV is set up with the following parameters:

- The estimator (xgb_reg) is the XGBoost regressor.

- param_distributions=param_dist specifies the dictionary of parameters to sample.

- n_iter=25 indicates that 25 different combinations will be tried.

- scoring='neg_mean_squared_error' sets the scoring metric to the negative mean squared error.

- cv=3 sets the cross-validation splitting strategy to 3 folds.

- verbose=1 enables verbose output.

- random_state=2024 ensures reproducibility.

random_search = RandomizedSearchCV(

xgb_reg, param_distributions=param_dist, n_iter=25,

scoring='neg_mean_squared_error', cv=3, verbose=1, random_state=2024

)

Fitting the model and accessing the parameters

The fit method is used to run the randomized search on the provided training data (X_train and y_train):

random_search.fit(X_train, y_train)

The best_params_ attribute of the fitted search object contains the hyperparameters that achieved the best cross-validation score.

Those parameters can be acquired as follows:

print("Random Search Best Parameters:", random_search.best_params_)

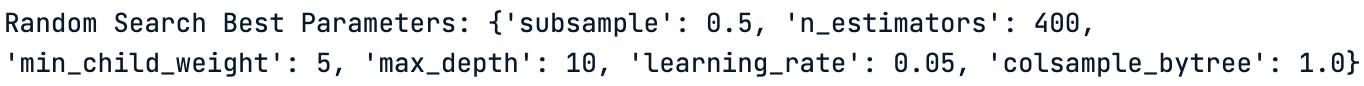

Random search best parameters

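Out of all the sampled combinations, the best hyperparameters for the XGBoost model found by random search are given below and stored in params_random_search:

# Values printed by random_search.best_params_ in the run above

params_random_search = {

'subsample': 0.5,

'n_estimators': 400,

'min_child_weight': 5,

'max_depth': 10,

'learning_rate': 0.05,

'colsample_bytree': 1.0

}

Evaluate the model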

Using these values, we can evaluate the model and retrieve its performance as follows:

First, we initialize the model using the best hyperparameters before training.

# Initialize and train the model

model_random_search = xgb.XGBRegressor(**params_random_search)

model_random_search.fit(X_train, y_train)

Then, the model is evaluated on the test data:

predictions_random_search = model_random_search.predict(X_test)

mse_random_search = mean_squared_error(y_test, predictions_random_search)

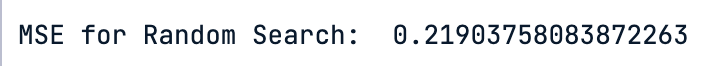

print("MSE for Random Search: ", mse_random_search)The successful execution of the above codes generate the following mean square error value:

MSE for XGBosst using Random search hyperparameters

Hyperparameter tuning with Bayesian Optimization

Similarly to the random search, we apply a step-by-step process to generate the best hyperparameters using Bayesian optimization.

Set up the parameter bounds

The bounds for the hyperparameters are defined in a list baysian_opt_bounds. Each hyperparameter is represented as a dictionary with keys: name, type, and domain.

- max_depth, min_child_weight, n_estimators are discrete variables.

- subsample, colsample_bytree, learning_rate are continuous variables.

- The domain specifies the range of values for each hyperparameter.

baysian_opt_bounds = [

{'name': 'max_depth', 'type': 'discrete', 'domain': (3, 10, 5, 15)},

{'name': 'min_child_weight', 'type': 'discrete', 'domain': (1, 5, 10)},

{'name': 'subsample', 'type': 'continuous', 'domain': (0.5, 1.0)},

{'name': 'colsample_bytree', 'type': 'continuous', 'domain': (0.5, 1.0)},

{'name': 'n_estimators', 'type': 'discrete', 'domain': (100, 200, 300, 400)},

{'name': 'learning_rate', 'type': 'continuous', 'domain': (0.01, 0.2)}

]

Define the objective function

An objective function xgb_cv_score is defined to calculate the cross-validated mean squared error (MSE) of an XGBoost model.

- GPyOpt passes each candidate point as a 2D array with one row, so the function takes that row and casts the discrete hyperparameters to integers.

- cross_val_score is used to evaluate the XGBoost model using the specified parameters.

- cross_val_score returns negative MSE values, so the mean of the scores is negated to obtain a positive MSE, which is returned as the objective to minimize.

def xgb_cv_score(parameters):
    # GPyOpt passes a 2D array with one row per candidate; take the first row
    parameters = parameters[0]
    # Negate the mean of the negative-MSE scores to get a positive MSE to minimize
    score = -cross_val_score(
        xgb.XGBRegressor(
            max_depth=int(parameters[0]),
            min_child_weight=int(parameters[1]),
            subsample=parameters[2],
            colsample_bytree=parameters[3],
            n_estimators=int(parameters[4]),
            learning_rate=parameters[5]),
        X_train, y_train, scoring='neg_mean_squared_error', cv=3).mean()
    return score

Initialize and run the Bayesian model

Bayesian optimization is instantiated with the following parameters:

- f=xgb_cv_score: The objective function to minimize.

- domain=baysian_opt_bounds: The hyperparameter bounds.

- model_type='GP': Specifies the use of Gaussian Processes.

- acquisition_type='EI': Uses Expected Improvement as the acquisition function.

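- max_iter=25: The number of iterations for the optimization process. GPyOpt takes this budget as an argument of run_optimization() rather than of the constructor. Before these iterations, it also evaluates a small random initial design (five points by default, set by initial_design_numdata).

The optimization process is started with optimizer.run_optimization(max_iter=25):

optimizer = BayesianOptimization(

f=xgb_cv_score, domain=baysian_opt_bounds, model_type='GP',

acquisition_type='EI'

)

optimizer.run_optimization(max_iter=25)

Access the best parameters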

After optimization, the best parameters are extracted and formatted:

- Parameters are retrieved from optimizer.x_opt.

- Discrete parameters (max_depth, min_child_weight, n_estimators) are cast to integers.

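The best parameters are then assembled into a dictionary and printed out:

best_params_bayesian = {bound['name']: int(value) if bound['type'] == 'discrete' else value for bound, value in zip(baysian_opt_bounds, optimizer.x_opt)}

print("Bayesian Optimization Best Parameters:", best_params_bayesian)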

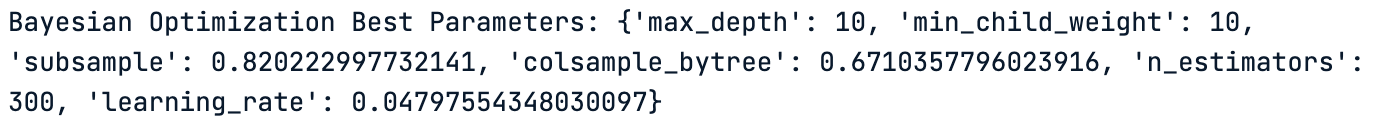

Bayesian optimization best parameters

Evaluate the model

The initialization and fitting process is the same as before. But this time, we use the parameters from the Bayesian optimization:

params_bayesian_opt = {

'max_depth': 10,

'min_child_weight': 10,

'subsample': 0.820222997732141,

'colsample_bytree': 0.6710357796023916,

'n_estimators': 300,

'learning_rate': 0.04797554348030097

}

# Initialize and train the model

model_bayesian_opt = xgb.XGBRegressor(**params_bayesian_opt)

model_bayesian_opt.fit(X_train, y_train)

# Make predictions and evaluate

predictions_bayesian_opt = model_bayesian_opt.predict(X_test)

mse_bayesian_opt = mean_squared_error(y_test, predictions_bayesian_opt)

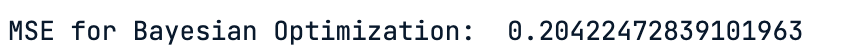

print("MSE for Bayesian Optimization: ", mse_bayesian_opt)The model performance is given after successfully executing the above code, and the result is given below:

MSE for XGBosst using Bayesian optimization hyperparameters

Result interpretation

From the two experiments, we observe that:

- Lower MSE in Bayesian Optimization: The model trained with hyperparameters from Bayesian Optimization has a lower MSE (0.204) than the one from Random Search (0.219). This lower value means that its predictions are, on average, closer to the true values.

- Model Performance: The lower MSE indicates that the Bayesian Optimization method was more effective at tuning the hyperparameters for the XGBoost model in this experiment. It suggests that the model tuned by Bayesian Optimization generalizes better from the training data to unseen data (in this case, the test set).

- Efficiency of Bayesian optimization: Bayesian optimization can lead to better performance in hyperparameter tuning because it uses a probabilistic model to guide the search for the best parameters. It learns from previous evaluations and directs the search toward the most promising hyperparameters, which often leads to better values than Random Search with a comparable number of evaluations.
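To put the gap between the two reported values in perspective, the short calculation below converts them into a relative reduction and into RMSE. It uses only the two rounded MSE values reported above; the California housing target is expressed in units of $100,000, so the RMSE can be read in dollars.

```python
import math

# MSE values reported above (rounded to three decimals)
reported_mse_random = 0.219
reported_mse_bayes = 0.204

relative_reduction = (reported_mse_random - reported_mse_bayes) / reported_mse_random
rmse_random = math.sqrt(reported_mse_random)
rmse_bayes = math.sqrt(reported_mse_bayes)

print(f"Relative MSE reduction: {relative_reduction:.1%}")
# The target is in units of $100,000, so scale RMSE to dollars
print(f"RMSE, Random Search: ${rmse_random * 100_000:,.0f}")
print(f"RMSE, Bayesian optimization: ${rmse_bayes * 100_000:,.0f}")
```

With these values, the reduction is just under 7% in MSE, or roughly $1,600 in typical prediction error per district.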

In this specific case, the Bayesian Optimization method provided better results as evidenced by the lower MSE. This suggests that for this particular dataset and model (XGBoost), Bayesian Optimization was more effective in identifying the set of hyperparameters that minimized prediction error on the test set.

It's important to note that while Bayesian Optimization has shown better performance in this instance, the choice between Random Search and Bayesian Optimization can depend on various factors like the complexity of the model, the nature of the data, and computational resources.

However, in scenarios where precision in hyperparameter tuning is crucial and computational resources allow, Bayesian Optimization often proves to be a superior choice.

Conclusion

This article has provided a thorough exploration of Bayesian Optimization, a key technique in optimizing complex functions.

We began by defining what Bayesian optimization is and comparing it with other hyperparameter search methods, before addressing its main challenges, the role of acquisition functions, and its applications.

Through a practical guide, we dived into the implementation aspects, focusing on Gaussian Process Regression and acquisition functions. A significant part of the discussion centered on applying Bayesian Optimization for hyperparameter tuning in machine learning models, showcasing its real-world utility.

Overall, this article serves as both an introductory overview and a practical guide, highlighting Bayesian Optimization's critical role in machine learning and computational optimization.

It offers valuable insights for practitioners, researchers, and enthusiasts in these fields, emphasizing the method's applicability and impact in modern computational challenges.

For those looking to deepen their engagement with Bayesian optimization and its implementation in Python, our course Hyperparameter Tuning in Python provides practical experience in using some common methodologies for automated hyperparameter tuning with the scikit-learn library. Some of the advanced methodologies include Bayesian optimization and genetic algorithms.

A multi-talented data scientist who enjoys sharing his knowledge and giving back to others, Zoumana is a YouTube content creator and a top tech writer on Medium. He finds joy in speaking, coding, and teaching. Zoumana holds two master’s degrees: the first in computer science with a focus on Machine Learning from Paris, France, and the second in Data Science from Texas Tech University in the US. His career path started as a Software Developer at Groupe OPEN in France, before moving on to IBM as a Machine Learning Consultant, where he developed end-to-end AI solutions for insurance companies. Zoumana then joined Axionable, the first Sustainable AI startup based in Paris and Montreal. There, he served as a Data Scientist and implemented AI products, mostly NLP use cases, for clients from France, Montreal, Singapore, and Switzerland. Additionally, 5% of his time was dedicated to Research and Development. He is currently working as a Senior Data Scientist at IFC-the World Bank Group.