
The OpenAI Dev Day marked a significant milestone in artificial intelligence, unveiling a suite of groundbreaking AI services, including GPTs, the GPTs App Store, GPT-4 Turbo, and the innovative Assistants API.

This API empowers developers with advanced AI tools, enabling the creation of custom AI assistants adept at handling diverse tasks.

In this tutorial, we delve into the Assistants API, providing a detailed exploration of its capabilities, practical applications across industries, and a step-by-step guide to implementing it using Python. Join us as we navigate the intricacies of building your own personalized AI assistant.

An Overview of the Assistants API

At the time of writing, the Assistants API is in beta, and the OpenAI developer team is actively working on adding more features. Before those arrive, let’s explore what the current version has to offer!

This section provides more insights into how the Assistants work and the interaction between the underlying components.

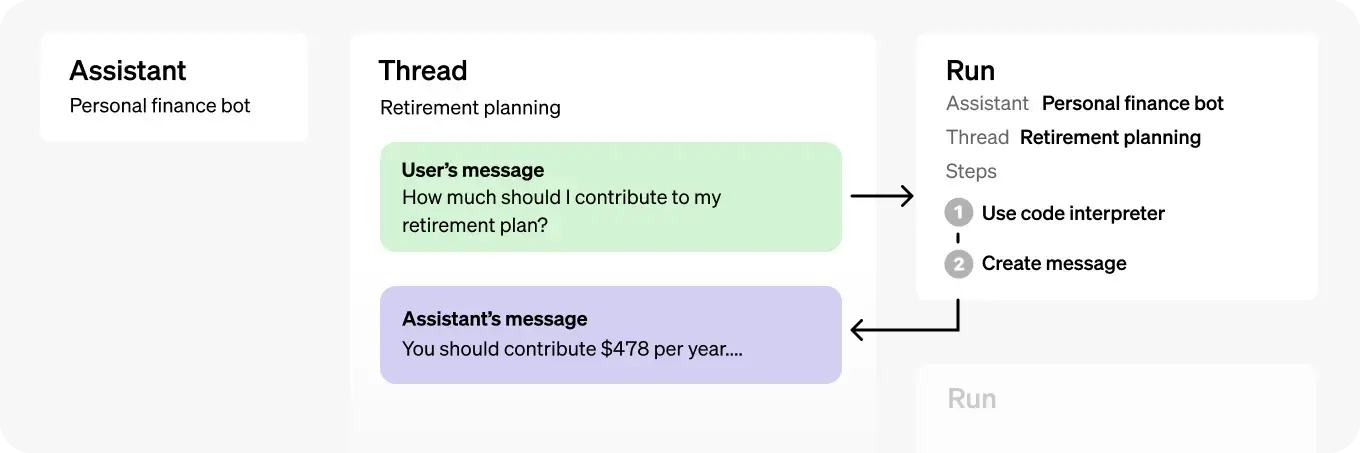

How does the Assistants API work?

The two main backbones of the Assistants are OpenAI’s models and tools.

- There is a diverse set of models including but not limited to: GPT-4, GPT-4 Turbo, GPT-3.5, GPT-3, DALL-E, TTS, Whisper, Embeddings, Moderation. These models are used by the Assistants to perform a specific intelligent task.

- The tools, on the other hand, give the Assistants access to OpenAI’s hosted tools such as Code Interpreter and Knowledge Retrieval. Users can even build their own tools by using the Function Calling feature.
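To make the Function Calling idea more concrete, here is a minimal sketch of how a custom tool might be described. The function name `get_order_status` and its `order_id` parameter are hypothetical and exist only for illustration; the outer `{"type": "function", ...}` shape, with parameters written as JSON Schema, follows the tool format used by the beta API this article targets.

```python
import json

# Hypothetical tool: the name, description, and parameters are illustrative only.
order_status_tool = {
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of a customer order.",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {
                    "type": "string",
                    "description": "Identifier of the order to look up.",
                },
            },
            "required": ["order_id"],
        },
    },
}

# The definition is plain JSON-serializable data that would be passed in the
# `tools` list when creating an Assistant.
print(json.dumps(order_status_tool, indent=2))
```

The model never runs this function itself. It only returns the function name and arguments it wants, and the calling application decides how to execute them.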

Now, let’s explore the four main steps involved in the implementation of an Assistant.

- Create and describe an Assistant: An Assistant is a specialized AI developed using models, guidelines, and various tools.

- Initiate a Thread: A Thread represents a user-initiated dialogue sequence to which Messages can be added, forming the conversation.

- Add Messages: Messages contain the user's inputs and may include text, files, and images.

- Trigger the Assistant: The Assistant is activated to analyze the Thread, utilize specific tools as required, and produce a fitting response.
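The steps above can be sketched as a single function. This is a condensed illustration rather than the full implementation developed later in the article: `client` is assumed to be an `OpenAI()` instance from the `openai` package passed in by the caller, and the function name `ask_assistant_once` and the assistant name are hypothetical.

```python
def ask_assistant_once(client, instructions, question, model="gpt-4-1106-preview"):
    """Walk through the steps above with an OpenAI client passed in by the caller."""
    # 1. Create and describe the Assistant
    assistant = client.beta.assistants.create(
        name="Demo Assistant",  # hypothetical name
        instructions=instructions,
        model=model,
    )
    # 2. Initiate a Thread
    thread = client.beta.threads.create()
    # 3. Add a Message to the Thread
    client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=question
    )
    # 4. Trigger the Assistant by creating a Run on the Thread
    run = client.beta.threads.runs.create(
        thread_id=thread.id, assistant_id=assistant.id
    )
    return assistant, thread, run
```

A Run is processed asynchronously, so the Assistant's reply only appears in the Thread once the Run's status becomes `completed`.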

Industry Use-Cases of Assistants API

Leveraging the Assistants API can be a powerful way to integrate AI into various business processes, allowing organizations to enhance efficiency, automate repetitive tasks, and provide faster and more accurate responses to users’ queries.

Below are five compelling industry use cases for the Assistants API.

Development support

The Assistant can serve as an on-demand coding assistant. Utilizing the code interpreter, it can translate snippets of code from one programming language to another, aiding in software development and easing the learning curve for new languages. This feature is especially useful in teams working with multiple programming languages or in educational settings for teaching coding.

Enterprise knowledge management

The retrieval feature can be leveraged for knowledge management within organizations. By uploading and processing internal documents, reports, and manuals, the Assistant becomes a centralized knowledge repository. Employees can query the Assistant for specific information, improving efficiency and reducing the time spent searching for information in large document sets.

Customer support automation

The Assistant can be integrated into customer support systems. Using function calling, it can answer customer queries by fetching information from external APIs or databases, effectively automating responses to common questions. This integration can enhance customer experience by providing quick, accurate responses and reducing the load on human support staff.
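As a rough sketch of the application side of function calling, the snippet below routes a function request (a name plus a JSON string of arguments, which is how the model supplies them) to a local Python function. Everything here is hypothetical: `get_order_status`, the in-memory order table, and the `execute_function_call` helper stand in for a real database or external API.

```python
import json

def get_order_status(order_id):
    # Hypothetical stand-in for a real database or API lookup.
    fake_orders = {"A100": "shipped", "A200": "processing"}
    return {"order_id": order_id, "status": fake_orders.get(order_id, "unknown")}

# Maps the function names exposed to the Assistant onto local Python callables.
AVAILABLE_FUNCTIONS = {"get_order_status": get_order_status}

def execute_function_call(name, arguments_json):
    """Run the requested local function and return its result as a JSON string."""
    if name not in AVAILABLE_FUNCTIONS:
        return json.dumps({"error": f"unknown function: {name}"})
    arguments = json.loads(arguments_json)  # arguments arrive as a JSON string
    result = AVAILABLE_FUNCTIONS[name](**arguments)
    return json.dumps(result)

print(execute_function_call("get_order_status", '{"order_id": "A100"}'))
```

In a real integration, the returned string would be sent back to the run as the tool output so that the Assistant can phrase the final answer for the customer.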

Data analysis

By describing functions for data analysis, users can ask the Assistant to perform complex data manipulations and generate reports, translating natural language queries into structured data analysis tasks.

IT operation automation

IT teams can use the Assistant to automate routine operations. By defining functions that correspond to common IT tasks, such as system diagnostics, network checks, or software updates, the Assistant can execute these tasks in response to user commands. This capability can significantly reduce the time IT professionals spend on routine maintenance, allowing them to focus on more complex issues.

Hands-On: Getting Started with OpenAI Assistants API

With this understanding of the Assistants API, let’s get into a more practical use case. This section guides you through the process of creating an assistant that retrieves knowledge from PDF files.

The complete end-to-end notebook is available on DataLab.

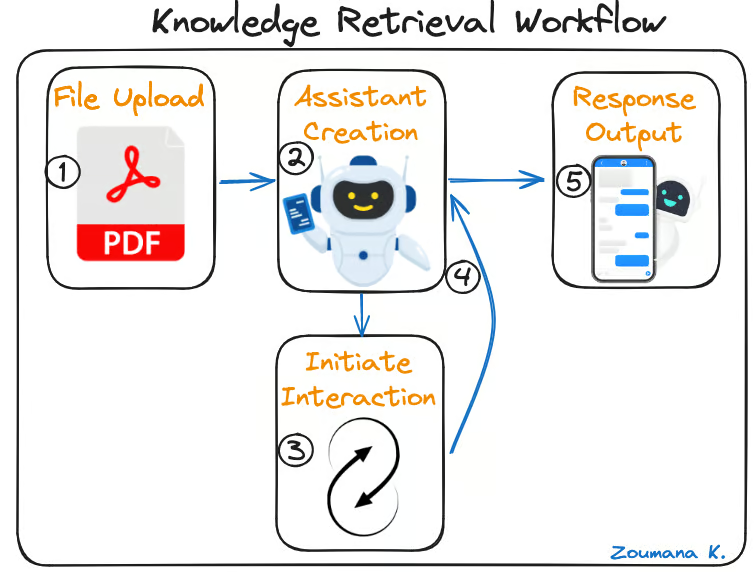

Knowledge retrieval workflow

For any technical implementation, it is better to provide a visual workflow illustrating the interaction between the main components of the overall application being developed.

This workflow highlights the five main steps involved in the implementation of the assistant, from file upload to generating the final response.

Five main steps of the knowledge retrieval workflow

- File upload: the process starts with the upload of the PDF files users want to interact with.

- Assistant creation: the assistant is specifically designed to process and understand the information from the uploaded PDF files.

- Initiate interaction: this is the starting point of the conversation thread, where users provide input messages to be processed by the assistant.

- Trigger the assistant: now, the assistant processes the input messages to generate a relevant response.

- Response output: this is the final phase, where the assistant displays the responses to the users’ message.

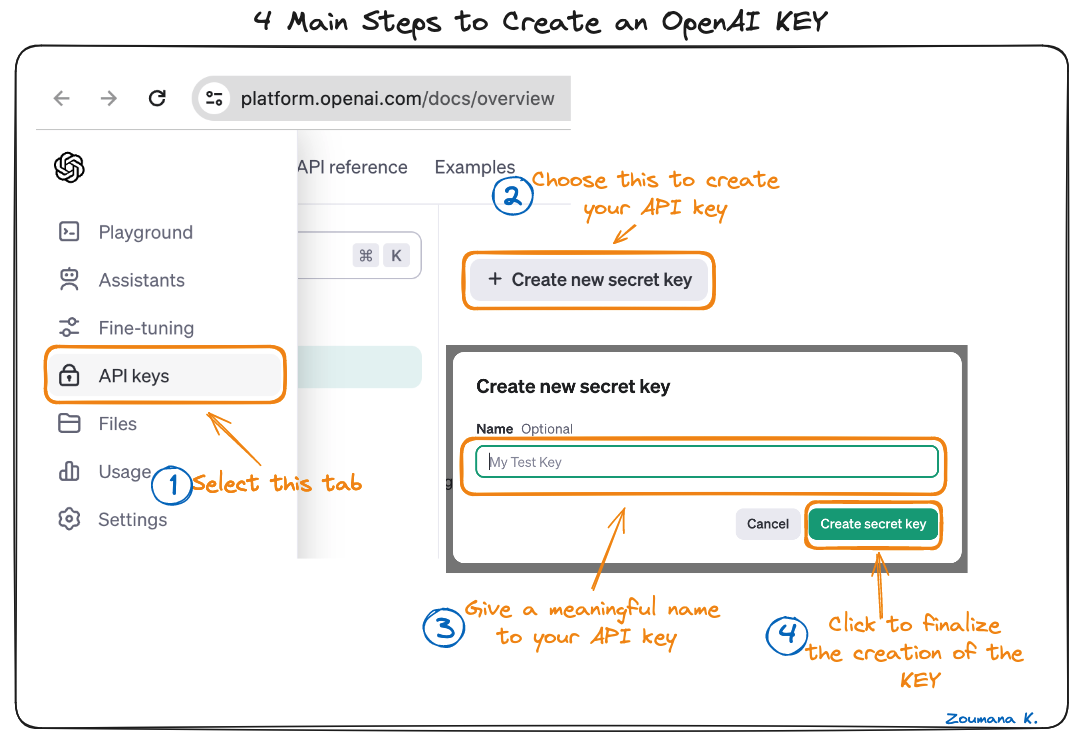

Set Up the OpenAI KEY

The main tools required to successfully reproduce the results of this tutorial are:

- Python: this is the main programming language we’ll use for this tutorial. An alternative option is NodeJS.

- OpenAI: the package to interact with OpenAI services. Our OpenAI API in Python cheatsheet provides a complete overview to master the basics of how to leverage the OpenAI API.

- OS: the operating system package to configure the environment variable.

The first step is to acquire the OpenAI KEY, which is required to access the Assistants API. The main steps are illustrated below:

Four main steps to create an OpenAI KEY


The four steps above are self-explanatory. Note, however, that you first need to create an account on the official OpenAI website.

You can further explore the OpenAI API from Our Beginner's Guide to The OpenAI API: Hands-On Tutorial and Best Practices. It introduces you to the OpenAI API, its use cases, a hands-on approach to using the API, and the best practices to follow.
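Once a key exists, a common way to keep it out of notebooks and source files is to export it in the shell and read it back in Python. The sketch below assumes the key is stored in an environment variable named `OPENAI_API_KEY`; the helper names `load_openai_key` and `mask_key` are hypothetical.

```python
import os

def load_openai_key(env=os.environ, var_name="OPENAI_API_KEY"):
    """Return the API key stored in the environment, failing early if it is absent."""
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it in your shell or notebook secrets first."
        )
    return key

def mask_key(key, visible=4):
    """Show only the last few characters so the key can be logged safely."""
    return "*" * max(len(key) - visible, 0) + key[-visible:]
```

The `OpenAI()` client reads `OPENAI_API_KEY` from the environment by default, so a check like this mainly serves to surface a missing key with a clear message before any request is made.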

Start building the assistant

Once you’ve acquired the KEY, make sure not to share it with anyone. The key should remain private. Next, store the key in an environment variable and create the client used in the rest of this tutorial:

    import os
    from openai import OpenAI

    OPENAI_API_KEY = "<YOUR PRIVATE KEY>"
    os.environ["OPENAI_API_KEY"] = OPENAI_API_KEY

    # The client picks up OPENAI_API_KEY from the environment
    client = OpenAI()

File Upload

The following helper function leverages the “create” function to upload the file users want to interact with to their OpenAI account.

The “create” function has two main parameters:

- The file to upload, opened in binary mode from its path

- The purpose of use of that file, which in this case is “assistants”

def upload_file(file_path):

# Upload a file with an "assistants" purpose

file_to_upload = client.files.create(

file=open(file_path, "rb"),

purpose='assistants'

)

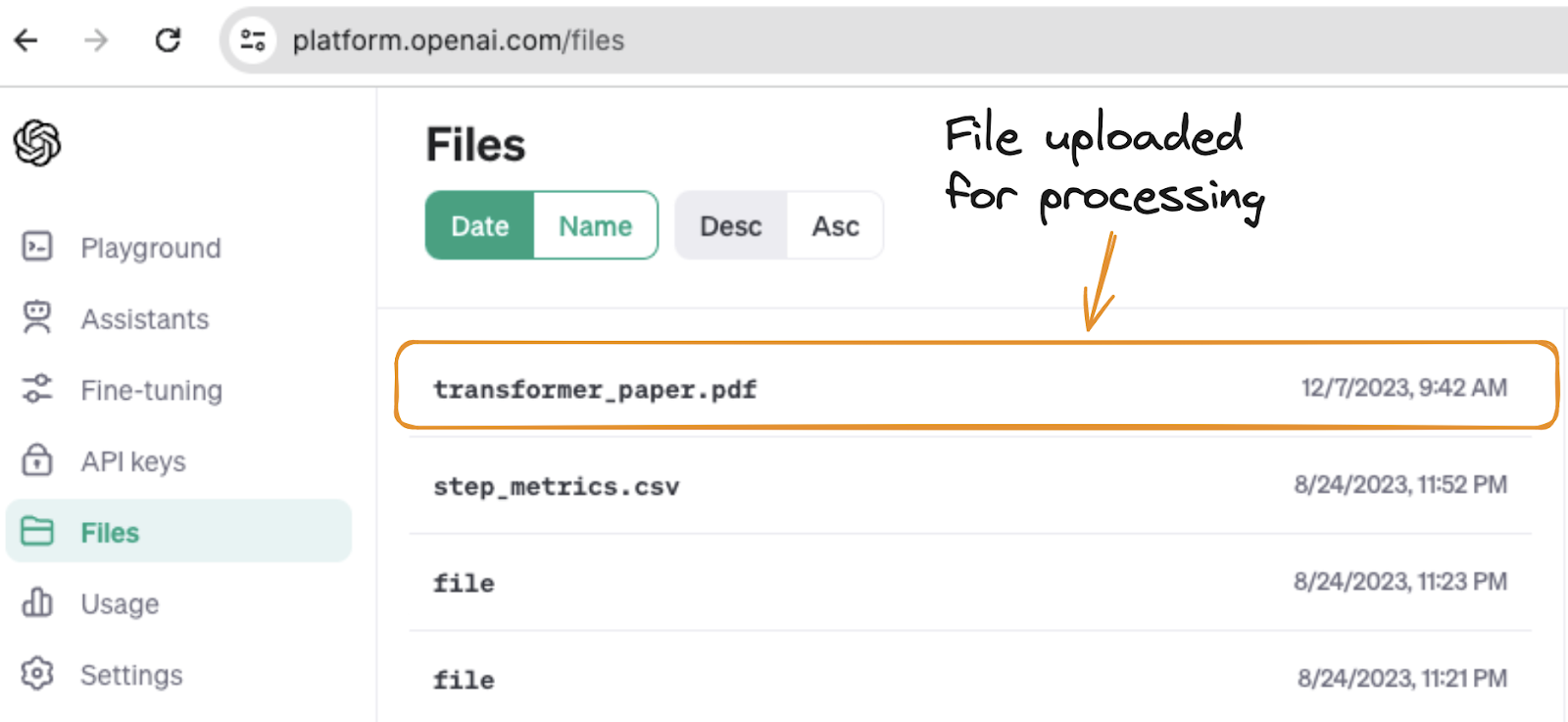

return file_to_uploadThe helper function is finally used to trigger the upload process of the transformers paper located in the data folder.

    transformer_paper_path = "./data/transformer_paper.pdf"
    uploaded_file = upload_file(transformer_paper_path)

After successful execution of the function, transformer_paper.pdf is uploaded and visible from the Files tab, as shown below.

Transformer paper uploaded to OpenAI account
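Before calling the upload helper, it can be useful to validate the local file so that problems surface with a clear message rather than as an API error. The following is a minimal sketch: the function name `check_file_before_upload` is hypothetical, and the allowed extensions and the 512 MB default are assumptions that should be checked against the current API documentation.

```python
from pathlib import Path

def check_file_before_upload(file_path, allowed_suffixes=(".pdf",),
                             max_bytes=512 * 1024 * 1024):
    """Validate a local file and return its Path, raising a clear error otherwise."""
    path = Path(file_path)
    if not path.is_file():
        raise FileNotFoundError(f"No such file: {path}")
    if path.suffix.lower() not in allowed_suffixes:
        raise ValueError(f"Unsupported file type: {path.suffix or '(none)'}")
    size = path.stat().st_size
    if size == 0:
        raise ValueError(f"File is empty: {path}")
    if size > max_bytes:  # assumed limit, not taken from this article
        raise ValueError(f"File is {size} bytes, above the {max_bytes}-byte limit")
    return path
```

A call such as `check_file_before_upload("./data/transformer_paper.pdf")` would then run just before the upload helper.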

Assistant creation

The assistant creation is done using the create function from the client.beta.assistants module, and it takes five main parameters:

- Name: this is the name given to the assistant by its creator. Let’s call ours Scientific Paper Assistant.

- Instructions: describes how the assistant should behave or answer questions. In this use case, the assistant applies the following instruction: “You are a polite and expert knowledge retrieval assistant. Use the documents provided as a knowledge base to answer questions.”

- Model: the underlying model to be used for the knowledge retrieval. GPT-3.5 or GPT-4 models can be used. This article uses gpt-4-1106-preview, the latest model at the time of writing.

- Tools: the type of tool to be used. The two hosted choices are code interpreter and retrieval. The focus of this article is retrieval, hence we ignore the code interpreter.

- File_ids: a list of unique identifiers of each file in the knowledge base.
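To illustrate the Tools parameter, the sketch below builds the list of tool descriptors from two switches. The helper `build_tools` is hypothetical; the `"retrieval"` and `"code_interpreter"` type strings are the ones used by the beta version of the API that this article targets.

```python
def build_tools(retrieval=True, code_interpreter=False):
    """Return the `tools` list for an Assistant from two boolean switches."""
    tools = []
    if retrieval:
        tools.append({"type": "retrieval"})
    if code_interpreter:
        tools.append({"type": "code_interpreter"})
    return tools

print(build_tools())                       # retrieval only, as in this tutorial
print(build_tools(code_interpreter=True))  # both hosted tools enabled
```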

The helper function create_assistant combines all this information to create the assistant as illustrated below:

    def create_assistant(assistant_name,
                         my_instruction,
                         uploaded_file,
                         model="gpt-4-1106-preview"):
        my_assistant = client.beta.assistants.create(
            name=assistant_name,
            instructions=my_instruction,
            model=model,  # use the model passed by the caller
            tools=[{"type": "retrieval"}],
            file_ids=[uploaded_file.id]
        )
        return my_assistant

Now we can run the function to create the assistant using the instruction and assistant name:

    inst = "You are a polite and expert knowledge retrieval assistant. Use the documents provided as a knowledge base to answer questions"
    assistant_name = "Scientific Paper Assistant"
    my_assistant = create_assistant(assistant_name, inst, uploaded_file)

Initiate interaction

Once the assistant is created, the natural next step is to initiate an interaction thread using the users’ message or request.

The thread and the message are created using the create functions from client.beta.threads and client.beta.threads.messages, respectively.

These two calls are combined in the initiate_interaction helper function.

    def initiate_interaction(user_message, uploaded_file):
        my_thread = client.beta.threads.create()
        message = client.beta.threads.messages.create(
            thread_id=my_thread.id,
            role="user",
            content=user_message,
            file_ids=[uploaded_file.id]
        )
        return my_thread

An interaction can be initiated from the user_message variable:

    user_message = "Why do authors use the self-attention strategy in the paper?"
    my_thread = initiate_interaction(user_message, uploaded_file)

Trigger the assistant and generate a response

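From the above thread, the assistant can be triggered to provide a response to the user’s message. The trigger action is defined in the helper function trigger_assistant, which also waits for the run to finish, and the response is given by the response variable.

    import time

    def trigger_assistant():
        # Start a run of the assistant on the thread
        run = client.beta.threads.runs.create(
            thread_id=my_thread.id,
            assistant_id=my_assistant.id,
        )
        # A run is asynchronous: poll until it is no longer queued or in progress
        while run.status in ("queued", "in_progress"):
            time.sleep(1)
            run = client.beta.threads.runs.retrieve(
                thread_id=my_thread.id,
                run_id=run.id,
            )
        return run

    run = trigger_assistant()

    # Messages are listed newest first, so index 0 is the assistant's reply
    messages = client.beta.threads.messages.list(thread_id=my_thread.id)
    response = messages.data[0].content[0].text.value
    response

The following response is generated after a successful execution of the above code.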

Assistant’s response to the user’s message

This response matches what the authors state on page 6 of the paper in answer to the question.

Passage mentioning the response from the transformer paper
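The indexing `messages.data[0].content[0].text.value` used above assumes that the newest message is a single text reply from the assistant. A slightly more defensive sketch is shown below. The helper name `latest_assistant_text` is hypothetical, and the objects in the demo are fakes built with `SimpleNamespace` that only mimic the `role`, `content`, `type`, and `text.value` fields of the API's message objects.

```python
from types import SimpleNamespace  # only used to fake API objects in the demo

def latest_assistant_text(messages):
    """Return the text of the newest assistant message, or None if there is none.

    `messages` is expected to be ordered newest first, each item having a
    `role` and a list of `content` parts.
    """
    for message in messages:
        if message.role != "assistant":
            continue
        # Keep text parts only; other part types (such as images) are skipped
        parts = [part.text.value for part in message.content if part.type == "text"]
        return "\n".join(parts)
    return None

def _text(value):
    return SimpleNamespace(type="text", text=SimpleNamespace(value=value))

# Fake thread contents, newest first (illustrative data, not real API output)
fake_messages = [
    SimpleNamespace(role="assistant", content=[_text("Example reply.")]),
    SimpleNamespace(role="user", content=[_text("Example question?")]),
]
print(latest_assistant_text(fake_messages))
```

With real data, the call would be `latest_assistant_text(messages.data)`.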

Best Practices When Using OpenAI Assistants API

When integrating the Assistants API into a project, it is important to adhere to best practices to ensure optimal performance and user experience. Below are some key points to consider.

- Define clear objectives: establish specific goals for the Assistant to ensure it meets the intended purpose and user needs effectively.

- Optimize data input: the quality and relevance of the data used, such as uploaded files, are crucial for the accuracy and efficiency of the Assistant's responses.

- Prioritize user privacy: implement robust data privacy protocols to protect user information and comply with relevant data protection laws.

- Test and iterate: regularly test the Assistant in real-world scenarios and iterate based on feedback to improve performance and user experience.

- Provide clear documentation: offer comprehensive guidance on using the Assistant, helping users understand its functionalities and limitations.
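For the privacy point in particular, one concrete habit is to remove uploaded documents and test assistants once they are no longer needed. The sketch below assumes `client` is an `OpenAI()` instance passed in by the caller; the helper name `delete_tutorial_resources` is hypothetical.

```python
def delete_tutorial_resources(client, assistant_id=None, file_ids=()):
    """Delete the Assistant and uploaded files created while experimenting."""
    deleted = []
    if assistant_id is not None:
        client.beta.assistants.delete(assistant_id)
        deleted.append(assistant_id)
    for file_id in file_ids:
        client.files.delete(file_id)
        deleted.append(file_id)
    return deleted
```

With the objects created in this tutorial, the call would look like `delete_tutorial_resources(client, my_assistant.id, [uploaded_file.id])`.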

Conclusion

In summary, this article has explored the Assistants API, highlighting how it works and how it can be used in different industries. We began by introducing the API and its key functions, setting the stage for a deeper understanding.

Next, we looked into the specific features of the Assistants API and discussed how these can be applied in various business sectors, showing its wide-ranging usefulness.

We also provided a step-by-step guide on how to start using the Assistants API, making it easier for you to set it up for your needs.

Moreover, we shared some important best practices to keep in mind while using the API, ensuring you get the most out of it.

In short, the Assistants API is a versatile and valuable tool that can be adapted for many uses, offering significant benefits for those who learn to use it effectively.

Are you eager to elevate your experience with OpenAI’s APIs and embrace a more creative approach? Our Comprehensive Guide to the DALL-E 3 API will help you discover the transformative power of DALL-E 3 API, covering its key features, industry applications, and tips for users to unlock their creative potential.

If you’re a total newcomer to the subject, why not start with our Working with the OpenAI API course?

A multi-talented data scientist who enjoys sharing his knowledge and giving back to others, Zoumana is a YouTube content creator and a top tech writer on Medium. He finds joy in speaking, coding, and teaching. Zoumana holds two master’s degrees: the first in computer science with a focus on Machine Learning from Paris, France, and the second in Data Science from Texas Tech University in the US. His career path started as a Software Developer at Groupe OPEN in France, before moving on to IBM as a Machine Learning Consultant, where he developed end-to-end AI solutions for insurance companies. Zoumana joined Axionable, the first Sustainable AI startup based in Paris and Montreal. There, he served as a Data Scientist and implemented AI products, mostly NLP use cases, for clients from France, Montreal, Singapore, and Switzerland. Additionally, 5% of his time was dedicated to Research and Development. As of now, he is working as a Senior Data Scientist at IFC - the World Bank Group.