
This guide aims to make the AWS interview process easier to understand by offering a carefully selected list of interview questions and answers. The questions range from the basic principles that form the foundation of AWS's extensive ecosystem to detailed, scenario-based questions that test your deep understanding and practical use of AWS services.

Whether you're at the beginning of your data career or are an experienced professional, this article provides the knowledge and confidence needed to tackle AWS interview questions. It explores basic, intermediate, and advanced questions, along with questions based on real-world situations, to cover all the important areas and support a well-rounded preparation strategy.


Why AWS?

Before exploring the questions and answers, it is important to understand why it is worth considering the AWS Cloud as the go-to platform.

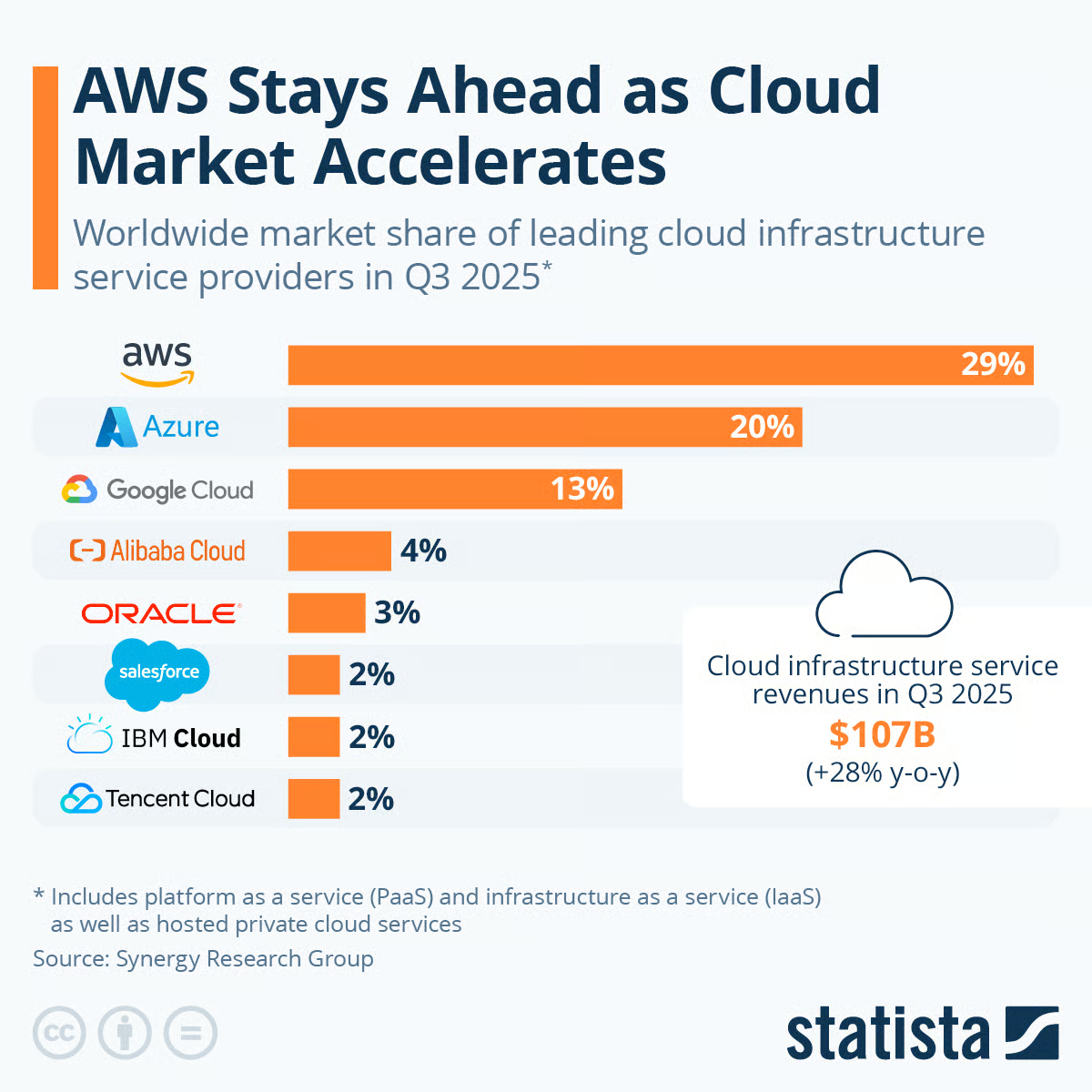

The graphic above shows the worldwide market share of leading cloud infrastructure service providers for the third quarter (Q3) of 2025. Below is a breakdown of the market shares depicted:

- Amazon Web Services (AWS) has the largest market share at 29%.

- Microsoft Azure follows with 20%.

- Google Cloud holds 13% of the market.

- Alibaba Cloud has a 4% share.

- Oracle has been growing to reach 3%.

- Salesforce, IBM Cloud, and Tencent Cloud are at the bottom, with 2% each.

Source: Statista

The graphic also notes that the data includes platform as a service (PaaS), infrastructure as a service (IaaS), and hosted private cloud services. It also reports that cloud infrastructure service revenues reached $107 billion in Q3 2025, up from $84 billion in Q3 2024, an increase of roughly 27% year over year.

Amazon Web Services (AWS) continues to be the dominant player in the cloud market as of Q3 2025, holding a significant lead over its closest competitor, Microsoft Azure.

AWS's market leadership makes it especially worth learning: because the platform is so widely adopted, AWS skills are highly valued in the tech industry and offer significant career advantages.

Our cheat sheet AWS, Azure and GCP Service comparison for Data Science & AI compares the main services needed for data and AI work, from data engineering and data analysis to data science and building data applications.

Basic AWS Interview Questions

Starting with the fundamentals, this section introduces basic AWS interview questions essential for building a foundational understanding. It's tailored for those new to AWS or needing a refresher, setting the stage for more detailed exploration later.

What is cloud computing?

Cloud computing provides on-demand access to IT resources like compute, storage, and databases over the internet. Users pay only for what they use instead of owning physical infrastructure.

The cloud lets you use technology services flexibly, as needed, without large upfront investments. Leading providers like AWS offer a wide range of cloud services through a pay-as-you-go consumption model. Our AWS Cloud Concepts course covers many of these basics.

What is the problem with the traditional IT approach compared to using the Cloud?

Many industries are moving away from traditional IT to cloud infrastructure. Compared with traditional IT, the cloud provides greater business agility, faster innovation, flexible scaling, and a lower total cost of ownership. Below are some of the characteristics that differentiate them:

| Traditional IT | Cloud computing |
|---|---|

How many types of deployment models exist in the cloud?

There are three types of cloud deployment models, described below:

- Private cloud: this type of cloud is used by a single organization and is not exposed to the public. It suits organizations running sensitive applications.

- Public cloud: these cloud resources are owned and operated by third-party cloud providers such as Amazon Web Services, Microsoft Azure, and the other providers mentioned in the AWS market share section, and are shared by many customers over the internet.

- Hybrid cloud: this is a combination of private and public clouds. It keeps some servers on-premises while extending the remaining capabilities to the cloud, combining the control of a private environment with the flexibility and cost-effectiveness of the public cloud.

What are the five characteristics of cloud computing?

Cloud computing has five main characteristics, described below:

- On-demand self-service: Users can provision cloud services as needed without human interaction with the service provider.

- Broad network access: Services are available over the network and accessed through standard mechanisms from a wide range of client devices, such as mobile phones, laptops, and tablets.

- Multi-tenancy and resource pooling: Resources are pooled to serve multiple customers, with different virtual and physical resources dynamically assigned based on demand.

- Rapid elasticity and scalability: Capabilities can be elastically provisioned and scaled up or down quickly and automatically to match capacity with demand.

- Measured service: Resource usage is monitored, controlled, reported, and billed based on actual utilization, providing transparency for both the provider and the consumer.

What are the main types of Cloud Computing?

There are three main types of cloud computing: IaaS, PaaS, and SaaS.

- Infrastructure as a Service (IaaS): Provides the basic building blocks for cloud IT, such as compute, storage, and networking, that users can access on demand without managing the underlying physical hardware. Examples: Amazon EC2, Amazon S3, Amazon VPC.

- Platform as a Service (PaaS): Provides a managed platform or environment for developing, deploying, and managing cloud-based apps without needing to build the underlying infrastructure. Examples: AWS Elastic Beanstalk, Heroku.

- Software as a Service (SaaS): Provides access to complete end-user applications running in the cloud that users can use over the internet. Users don't manage infrastructure or platforms. Examples: Amazon Simple Email Service, Google Docs, Salesforce CRM.

You can explore these in more detail in our Understanding Cloud Computing course.

What is Amazon EC2, and what are its main uses?

Amazon EC2 (Elastic Compute Cloud) provides scalable virtual servers, called instances, in the AWS Cloud. It is used to run a variety of workloads flexibly and cost-effectively. Some of its main uses are listed below:

- Host websites and web applications

- Run backend processes and batch jobs

- Implement hybrid cloud solutions

- Achieve high availability and scalability

- Reduce time to market for new use cases

What is Amazon S3, and why is it important?

Amazon Simple Storage Service (S3) is a versatile, scalable, and secure object storage service. It serves as the foundation for many cloud-based applications and workloads. Below are a few features highlighting its importance:

- Designed for 99.999999999% (11 nines) durability and 99.99% availability (S3 Standard), making it suitable for critical data.

- Supports robust security features such as access policies, encryption, and VPC endpoints.

- Integrates seamlessly with other AWS services like Lambda, EC2, EBS, just to name a few.

- Low latency and high throughput make it ideal for big data analytics, mobile applications, media storage and delivery.

- Flexible management features for monitoring, access logs, replication, versioning, lifecycle policies.

- Backed by the AWS global infrastructure for low latency access worldwide.
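To make the "11 nines" durability figure concrete, here is the arithmetic as a short Python sketch. It assumes the figure can be read as an independent annual loss probability of 10^-11 per object. Under that assumption, storing 10,000,000 objects means losing one object on average every 10,000 years, which matches the illustration in the Amazon S3 FAQs. Exact fractions avoid floating-point rounding.

```python
from fractions import Fraction

# Designed durability of 99.999999999% ("11 nines"), kept as an exact fraction
DURABILITY = Fraction(99_999_999_999, 100_000_000_000)


def expected_annual_losses(num_objects: int) -> Fraction:
    """Expected objects lost per year, assuming each object independently
    has a (1 - DURABILITY) chance of being lost in a given year."""
    return num_objects * (1 - DURABILITY)


def years_per_lost_object(num_objects: int) -> Fraction:
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(num_objects)


if __name__ == "__main__":
    n = 10_000_000
    print(f"Expected losses per year: {float(expected_annual_losses(n))}")  # 0.0001
    print(f"One object lost every {years_per_lost_object(n)} years on average")  # 10000
```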

Explain the concept of ‘Regions’ and ‘Availability Zones’ in AWS

- AWS Regions are separate geographic locations where AWS resources are hosted. Businesses choose Regions close to their customers to reduce latency, and cross-region replication provides better disaster recovery.

- Availability Zones (AZs) are isolated locations within a Region, each consisting of one or more discrete data centers with redundant power, networking, and connectivity. Deploying resources across multiple AZs makes them more fault-tolerant.
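The fault-tolerance benefit of multiple AZs can be shown with a toy model. This is plain Python, not an AWS API. It spreads instances round-robin across AZs and measures how much capacity survives when one AZ fails. The AZ names follow AWS's naming pattern but are only examples.

```python
from collections import Counter

# Example AZ names following AWS's "<region><letter>" naming pattern
AZS = ["us-east-1a", "us-east-1b", "us-east-1c"]


def spread_across_azs(num_instances: int, azs: list[str]) -> dict[str, int]:
    """Assign instances to Availability Zones round-robin and count per AZ."""
    return dict(Counter(azs[i % len(azs)] for i in range(num_instances)))


def capacity_after_az_failure(placement: dict[str, int], failed_az: str) -> float:
    """Fraction of instances still running if one AZ becomes unavailable."""
    total = sum(placement.values())
    return (total - placement.get(failed_az, 0)) / total


if __name__ == "__main__":
    multi_az = spread_across_azs(6, AZS)
    single_az = spread_across_azs(6, AZS[:1])
    print(multi_az)  # {'us-east-1a': 2, 'us-east-1b': 2, 'us-east-1c': 2}
    print(capacity_after_az_failure(multi_az, "us-east-1a"))   # 4 of 6 instances survive
    print(capacity_after_az_failure(single_az, "us-east-1a"))  # 0.0
```

With all six instances in one AZ, a single zone outage takes the whole application down. Spread across three AZs, two thirds of the capacity keeps serving traffic.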

Our course AWS Cloud Concepts provides readers with a complete guide to learning about AWS’s main core services, best practices for designing AWS applications, and the benefits of using AWS for businesses.

What is IAM, and why is it important?

AWS Identity and Access Management (IAM) is a service that helps you securely control access to AWS services and resources. IAM allows you to manage users, groups, and roles with fine-grained permissions. It’s important because it helps enforce the principle of least privilege, ensuring users only have access to the resources they need, thereby enhancing security and compliance.
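Least privilege is easiest to see in an actual policy document. The sketch below builds an identity-based IAM policy that allows only listing one bucket and reading its objects. It uses the standard IAM policy grammar (`Version`, `Statement`, `Effect`, `Action`, `Resource`), and the bucket name is hypothetical. The resulting JSON could be attached to a user, group, or role.

```python
import json


def read_only_bucket_policy(bucket_name: str) -> dict:
    """Least-privilege policy: list one bucket and read its objects, nothing else."""
    return {
        "Version": "2012-10-17",  # current IAM policy language version
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                # ListBucket applies to the bucket ARN itself
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # GetObject applies to object ARNs inside the bucket
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


if __name__ == "__main__":
    # "example-reports-bucket" is a hypothetical bucket name
    print(json.dumps(read_only_bucket_policy("example-reports-bucket"), indent=2))
```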

Our Complete Guide to AWS IAM explains the service in full detail.

What is Amazon RDS, and how does it differ from traditional databases?

Amazon Relational Database Service (RDS) is a managed database service that allows users to set up, operate, and scale relational databases without handling infrastructure management tasks like backups, patching, and scaling themselves. Unlike traditional self-managed databases, Amazon RDS scales through configuration changes rather than hardware purchases, supports automated backups, and offers read replicas and Multi-AZ deployments for failover and redundancy.

Here's a table highlighting the differences between RDS and more traditional databases for those of you who are more visual:

| Feature | Amazon RDS | Traditional databases |
|---|---|---|
| Scalability | Easily scales vertically or horizontally | Requires hardware upgrades; scaling can be costly |
| Availability | Supports Multi-AZ deployments for high availability | High availability setup requires complex configuration |
| Maintenance | Managed by AWS, including backups, updates, and patches | Manually managed, including regular updates and backups |
| Backup and recovery | Automated backups and snapshots | Requires manual backup processes |
| Cost | Pay-as-you-go pricing | Fixed costs; higher upfront investment required |

What is Amazon VPC, and why is it used?

Amazon Virtual Private Cloud (VPC) enables you to create a virtual network in AWS that closely resembles a traditional network in an on-premises data center. VPC is used to isolate resources, control inbound and outbound traffic, and segment workloads into subnets with strict security configurations. It provides granular control over IP ranges, security groups, and network access control lists.
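Control over IP ranges is mostly CIDR arithmetic, which Python's `ipaddress` module handles well. This sketch carves a hypothetical 10.0.0.0/16 VPC into /24 subnets for public and private tiers in two AZs. It then subtracts the five addresses AWS reserves in every subnet (the first four and the last one). The tier names are illustrative only.

```python
import ipaddress
import itertools

# AWS reserves five addresses in every subnet: the first four and the last one.
AWS_RESERVED_PER_SUBNET = 5


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int) -> list[ipaddress.IPv4Network]:
    """Return the first `count` subnets of size /new_prefix inside the VPC range."""
    vpc = ipaddress.ip_network(vpc_cidr)
    return list(itertools.islice(vpc.subnets(new_prefix=new_prefix), count))


def usable_addresses(subnet: ipaddress.IPv4Network) -> int:
    """Addresses left for your resources after AWS's reservations."""
    return subnet.num_addresses - AWS_RESERVED_PER_SUBNET


if __name__ == "__main__":
    # Hypothetical layout: public and private subnets in two AZs
    tiers = ["public-a", "public-b", "private-a", "private-b"]
    for name, subnet in zip(tiers, plan_subnets("10.0.0.0/16", 24, len(tiers))):
        print(f"{name:<10} {subnet}  usable: {usable_addresses(subnet)}")
```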

What is Amazon CloudWatch, and what are its main components?

Amazon CloudWatch is a monitoring and observability service designed to track various metrics, set alarms, and automatically respond to changes in AWS resources. It helps improve visibility into application performance, system health, and operational issues, making it an essential tool for AWS users. Here are the main components of CloudWatch:

- Metrics: CloudWatch collects data points, or metrics, that provide insights into resource utilization, application performance, and operational health. This data allows for trend analysis and proactive scaling.

- Alarms: Alarms notify users or trigger automated actions based on specific metric thresholds. For example, if CPU usage exceeds a set threshold, an alarm can initiate auto-scaling to handle increased load.

- Logs: CloudWatch Logs provides centralized storage for application and infrastructure logs, which is essential for troubleshooting and identifying issues. Logs can be filtered, monitored, and analyzed to maintain smooth operations.

- Events: CloudWatch Events (now part of Amazon EventBridge) detects changes in AWS resources and can trigger predefined actions, such as invoking a Lambda function when a specific event occurs. This allows for greater automation and rapid response to critical events.
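The alarm logic above can be sketched locally. A CloudWatch alarm compares recent datapoints against a `Threshold` and goes into `ALARM` when enough of the last `EvaluationPeriods` datapoints breach it (`DatapointsToAlarm`, the "M out of N" setting). The function below is a simplified model of that rule, not CloudWatch's implementation, and it ignores missing-data handling.

```python
def evaluate_alarm(datapoints: list[float], threshold: float,
                   evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Simplified "M out of N" evaluation for a GreaterThanThreshold alarm.

    Looks at the most recent `evaluation_periods` datapoints (N) and returns
    "ALARM" if at least `datapoints_to_alarm` (M) of them exceed `threshold`.
    Missing-data handling is deliberately left out of this sketch."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    window = datapoints[-evaluation_periods:]
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


if __name__ == "__main__":
    cpu_percent = [55.0, 72.0, 85.0, 91.0, 88.0]  # hypothetical 5-minute averages
    # "2 out of 3" datapoints above 80% CPU puts the alarm into ALARM
    print(evaluate_alarm(cpu_percent, 80.0, evaluation_periods=3, datapoints_to_alarm=2))  # ALARM
```

In a real alarm, the transition to `ALARM` is what fires the configured actions, such as an Auto Scaling policy or an SNS notification.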

What is AWS Lambda, and how does it enable serverless computing?

AWS Lambda is a serverless compute service that eliminates the need to manage servers, making it easier for developers to run their code in the cloud. Here’s how it works and why it’s an enabler of serverless computing:

- Code execution on demand: Lambda runs code only when it’s triggered by an event—like an HTTP request or a file upload in Amazon S3. This ensures you only use resources when needed, optimizing costs and efficiency.

- Automatic scaling: Lambda scales automatically based on the number of incoming requests. It can handle from a single request to thousands per second, so applications remain responsive even as traffic varies.

- Focus on code, not infrastructure: Since Lambda abstracts away the server infrastructure, developers can focus solely on writing and deploying code without worrying about provisioning, managing, or scaling servers.

Through these features, Lambda embodies the principles of serverless computing—removing the burden of infrastructure management and allowing developers to build, test, and scale applications with greater agility.
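As an illustration of event-driven execution, here is a minimal Python Lambda handler for S3 "object created" notifications. Lambda calls `lambda_handler(event, context)` with the event as a dict. S3 events carry a `Records` list, and object keys arrive URL-encoded, hence `unquote_plus`. The bucket and key in the sample event are hypothetical, and real events carry many more fields.

```python
import json
import urllib.parse


def lambda_handler(event, context):
    """Entry point Lambda invokes for each S3 notification event."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the event (e.g. spaces arrive as '+')
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        processed.append(f"s3://{bucket}/{key}")
        # Real work (resizing an image, parsing a CSV, ...) would go here.
    return {"processed": processed}


if __name__ == "__main__":
    # Trimmed-down sample event for local testing
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "example-uploads"},
                    "object": {"key": "reports/q3+summary.csv"}}}
        ]
    }
    print(json.dumps(lambda_handler(sample_event, None)))
```

Nothing in the handler provisions or scales servers. Lambda runs one invocation per event and adds concurrency as uploads increase.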

What is Elastic Load Balancing (ELB) in AWS?

Elastic Load Balancing (ELB) is a service that automatically distributes incoming application traffic across multiple targets, ensuring your application remains responsive and resilient. ELB offers several benefits that make it an essential component of scalable AWS architectures:

- Traffic distribution: ELB intelligently balances incoming traffic across multiple targets, including EC2 instances, containers, and IP addresses. This helps avoid overloading any single resource, ensuring consistent application performance.

- Fault tolerance and high availability: ELB provides fault tolerance by distributing traffic across multiple Availability Zones, helping your application remain available even if one zone experiences issues.

- Enhanced reliability and scalability: ELB automatically adjusts traffic distribution as demand changes, making it easier to handle sudden spikes in traffic without impacting application performance.
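To show traffic distribution and fault tolerance together, here is a toy round-robin balancer that skips targets failing their (simulated) health checks. Round robin is the default routing algorithm for Application Load Balancer target groups, but this class is only an illustration, not how ELB is implemented. The target names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Toy load balancer: hands requests to targets in turn and skips any
    target whose (simulated) health check has failed."""

    def __init__(self, targets: list[str]):
        self.targets = list(targets)
        self.healthy = set(self.targets)
        self._cycle = itertools.cycle(self.targets)

    def mark_unhealthy(self, target: str) -> None:
        self.healthy.discard(target)

    def mark_healthy(self, target: str) -> None:
        if target in self.targets:
            self.healthy.add(target)

    def next_target(self) -> str:
        # Check each registered target at most once before giving up.
        for _ in range(len(self.targets)):
            candidate = next(self._cycle)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy targets")


if __name__ == "__main__":
    # Hypothetical instances, one per Availability Zone
    lb = RoundRobinBalancer(["web-1a", "web-1b", "web-1c"])
    print([lb.next_target() for _ in range(3)])  # ['web-1a', 'web-1b', 'web-1c']
    lb.mark_unhealthy("web-1b")  # e.g. its health check endpoint stopped responding
    print([lb.next_target() for _ in range(4)])  # ['web-1a', 'web-1c', 'web-1a', 'web-1c']
```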


AWS Interview Questions for Intermediate and Experienced

AWS DevOps interview questions

Moving to specialized roles, the emphasis here is on how AWS supports DevOps practices. This part examines the automation and optimization of AWS environments, challenging individuals to showcase their skills in leveraging AWS for continuous integration and delivery. If you're going for an advanced AWS role, check out our Data Architect Interview Questions blog post to practice some data infrastructure and architecture questions.

How do you use AWS CodePipeline to automate a CI/CD pipeline for a multi-tier application?

CodePipeline can be used to automate the flow from code check-in to build, test, and deployment across multiple environments to streamline the delivery of updates while maintaining high standards of quality.

The following steps can be followed to automate a CI/CD pipeline:

- Create a pipeline: Start by creating a pipeline in AWS CodePipeline, specifying your source code repository (e.g., GitHub, AWS CodeCommit).

- Define build stage: Connect to a build service like AWS CodeBuild to compile your code, run tests, and create deployable artifacts.

- Set up deployment stages: Configure deployment stages for each tier of your application. Use AWS CodeDeploy to automate deployments to Amazon EC2 instances, AWS Elastic Beanstalk for web applications, or Amazon ECS for containerized applications.

- Add approval steps (optional): For critical environments, insert manual approval steps before deployment stages to ensure quality and control.

- Monitor and iterate: Monitor the pipeline's performance and adjust as necessary. Utilize feedback and iteration to continuously improve the deployment process.
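The gating behavior of these steps can be modeled in a few lines. This is a conceptual sketch only and does not use the CodePipeline API. Stages run strictly in order, and the first failure, including a rejected manual approval, stops the run before production is touched. The stage names and their always-succeeding actions are hypothetical stand-ins for the source, CodeBuild, CodeDeploy, and approval steps described above.

```python
from typing import Callable

# A stage is a name plus an action that returns True on success.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> list[str]:
    """Run stages strictly in order; the first failing stage stops the run,
    so later stages (such as the production deployment) never start."""
    log = []
    for name, action in stages:
        succeeded = action()
        log.append(f"{name}: {'Succeeded' if succeeded else 'Failed'}")
        if not succeeded:
            break
    return log


def make_stages(approved: bool) -> list[Stage]:
    # Hypothetical stand-ins: each action simply reports success or failure.
    return [
        ("Source", lambda: True),
        ("Build", lambda: True),           # compile and run tests
        ("DeployStaging", lambda: True),
        ("ManualApproval", lambda: approved),
        ("DeployProduction", lambda: True),
    ]


if __name__ == "__main__":
    print(run_pipeline(make_stages(approved=False)))
```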

What key factors should be considered in designing a deployment solution on AWS to effectively provision, configure, deploy, scale, and monitor applications?

Creating a well-architected AWS deployment involves tailoring AWS services to your app's needs, covering compute, storage, and database requirements. This process, complicated by AWS's vast service catalog, includes several crucial steps:

- Provisioning: Set up essential AWS infrastructure such as EC2, VPC, subnets or managed services like S3, RDS, CloudFront for underlying applications.

- Configuring: Adjust your setup to meet specific requirements related to the environment, security, availability, and performance.

- Deploying: Efficiently roll out or update app components, ensuring smooth version transitions.

- Scaling: Dynamically modify resource allocation based on predefined criteria to handle load changes.

- Monitoring: Keep track of resource usage, deployment outcomes, app health, and logs to ensure everything runs as expected.

What is Infrastructure as Code? Describe it in your own words

Infrastructure as Code (IaC) is a method of managing and provisioning computer data centers through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools.

Essentially, it allows developers and IT operations teams to automatically manage, monitor, and provision resources through code, rather than manually setting up and configuring hardware.

Also, IaC enables consistent environments to be deployed rapidly and scalably by codifying infrastructure, thereby reducing human error and increasing efficiency.
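The core IaC idea is declaring a desired state and letting a tool work out the changes. A tiny diff function captures it. This is the concept behind CloudFormation change sets or Terraform plans, heavily simplified, and not how either tool is implemented. The resource names and attributes are hypothetical.

```python
def plan_changes(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Diff the desired resources (declared in code) against what exists.

    Keys are logical resource names; values are their configuration."""
    return {
        "create": sorted(n for n in desired if n not in actual),
        "update": sorted(n for n in desired if n in actual and desired[n] != actual[n]),
        "delete": sorted(n for n in actual if n not in desired),
    }


if __name__ == "__main__":
    desired = {
        "WebServer": {"type": "instance", "size": "t3.small"},
        "LogBucket": {"type": "bucket", "versioning": True},
    }
    actual = {
        "WebServer": {"type": "instance", "size": "t3.micro"},
        "OldQueue": {"type": "queue"},
    }
    print(plan_changes(desired, actual))
    # After the plan is applied, running it again finds nothing to do.
    print(plan_changes(desired, desired))
```

Because the plan depends only on the declared state, applying the same definition again is a no-op. This property is what makes codified environments reproducible.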

What is your approach to handling continuous integration and deployment in AWS DevOps?

In AWS DevOps, continuous integration and deployment can be managed by utilizing AWS Developer Tools. Begin by storing and versioning your application's source code with these tools.

Then, leverage services like AWS CodePipeline for orchestrating the build, test, and deployment processes. CodePipeline serves as the backbone, integrating with AWS CodeBuild for compiling and testing code, and AWS CodeDeploy for automating the deployment to various environments. This streamlined approach ensures efficient, automated workflows for continuous integration and delivery.

How does Amazon ECS benefit AWS DevOps?

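Amazon ECS (Elastic Container Service) is a scalable container management service that simplifies running Docker containers on a managed cluster of EC2 instances or on serverless AWS Fargate capacity. For DevOps teams, it integrates with CodePipeline and CodeDeploy, so containerized applications can be built, deployed (including blue/green deployments), and scaled automatically.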

What are some strategies for blue/green deployments on AWS?

Blue/green deployments minimize downtime and risk by running two environments: one (blue) with the current version and one (green) with the new version. In AWS, this can be achieved using services like Elastic Beanstalk, AWS CodeDeploy, or ECS. You can shift traffic between environments using Route 53 or an Application Load Balancer, test the green environment safely, and roll back instantly if needed.
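Gradual traffic shifting with instant rollback is the heart of this strategy. The sketch produces the (blue, green) weight pairs you might apply step by step, for example to Route 53 weighted records or an ALB listener that forwards to weighted target groups. It checks the green environment after each shift. The function names and the health-check callback are hypothetical, and no AWS API is called.

```python
from typing import Callable


def linear_shift_schedule(step_percent: int) -> list[tuple[int, int]]:
    """(blue_weight, green_weight) pairs that move traffic to green in equal
    steps, always ending at 100% green."""
    if not 0 < step_percent <= 100:
        raise ValueError("step_percent must be between 1 and 100")
    schedule, green = [], 0
    while green < 100:
        green = min(green + step_percent, 100)
        schedule.append((100 - green, green))
    return schedule


def shift_traffic(step_percent: int,
                  green_is_healthy: Callable[[int], bool]) -> list[tuple[int, int]]:
    """Apply each weight step and check green's health after every shift.
    On the first failed check, send 100% of traffic back to blue."""
    applied = []
    for blue, green in linear_shift_schedule(step_percent):
        applied.append((blue, green))    # e.g. update weighted records or target groups
        if not green_is_healthy(green):  # e.g. error-rate alarms on the green environment
            applied.append((100, 0))     # instant rollback: blue is still running
            break
    return applied


if __name__ == "__main__":
    print(linear_shift_schedule(25))
    # Simulate green failing once it receives half of the traffic
    print(shift_traffic(25, lambda green_percent: green_percent < 50))
```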

Why might ECS be preferred over Kubernetes?

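ECS is generally simpler to set up and operate than Kubernetes and integrates natively with AWS services such as IAM, Elastic Load Balancing, and CloudWatch. Kubernetes offers more flexibility and portability across environments, but with greater operational complexity. That trade-off makes ECS a common choice for AWS-centric deployments.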

How would you manage and secure secrets for a CI/CD pipeline in AWS?

To securely manage secrets in an AWS CI/CD pipeline, you can use AWS Secrets Manager or AWS Systems Manager Parameter Store to store sensitive information such as API keys, database passwords, and certificates. Both services integrate with AWS services like CodePipeline and CodeBuild, allowing secure access to secrets without hardcoding them in your codebase.

By controlling access permissions with IAM, you can ensure that only authorized entities can access sensitive data, enhancing security within the CI/CD process.
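Here is a minimal sketch of reading a database secret at build or run time instead of hardcoding it. It calls `get_secret_value(SecretId=...)` and parses the `SecretString` field of the response, which is the shape boto3's Secrets Manager client returns. The client is passed in, so the function can be tested without AWS access. The secret name and the `username`/`password` JSON layout are assumptions, and `StubSecretsClient` is a local test double, not part of any AWS SDK.

```python
import json


def get_db_credentials(secrets_client, secret_id: str) -> tuple[str, str]:
    """Fetch a JSON secret and return (username, password).

    `secrets_client` is meant to be a boto3 Secrets Manager client, for example
    boto3.client("secretsmanager"); the "username"/"password" keys are an
    assumed layout for this example."""
    response = secrets_client.get_secret_value(SecretId=secret_id)
    secret = json.loads(response["SecretString"])
    return secret["username"], secret["password"]


class StubSecretsClient:
    """Local test double that mimics the shape of get_secret_value's response."""

    def __init__(self, secrets: dict[str, dict]):
        self._secrets = secrets

    def get_secret_value(self, SecretId: str) -> dict:
        return {"SecretString": json.dumps(self._secrets[SecretId])}


if __name__ == "__main__":
    # "prod/app/db" is a hypothetical secret name
    stub = StubSecretsClient({"prod/app/db": {"username": "app", "password": "example-only"}})
    user, _password = get_db_credentials(stub, "prod/app/db")
    print(f"Connecting as {user}")  # never print or log the password itself
```

In CodeBuild, the role the build runs under would need an IAM permission such as `secretsmanager:GetSecretValue` scoped to that one secret. This keeps access limited to the pipeline that needs it.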

How do you use AWS Systems Manager in a production environment?

AWS Systems Manager helps automate and manage your infrastructure at scale. In a production environment, it’s commonly used for patch management, remote command execution, inventory collection, and securely storing configuration parameters and secrets. It integrates with EC2, RDS, and other AWS services, enabling centralized visibility and operational control.

What is AWS CloudFormation, and how does it facilitate DevOps practices?

AWS CloudFormation automates the provisioning and management of AWS infrastructure through code, enabling Infrastructure as Code (IaC). This service lets you define your infrastructure as templates, making it easy to version, test, and replicate environments across development, staging, and production.

In a DevOps setting, CloudFormation helps maintain consistency, reduces manual configuration errors, and supports automated deployments, making it integral to continuous delivery and environment replication.
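To show what "infrastructure as templates" looks like, here is a minimal CloudFormation template built as a Python dict and printed as JSON. It declares one versioned S3 bucket tagged with an `Environment` parameter, so a single file can back separate dev, staging, and prod stacks. The resource and parameter names are examples.

```python
import json

# Minimal CloudFormation template: one versioned S3 bucket, tagged with an
# Environment parameter so the same file can back dev, staging, and prod stacks.
TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Versioned artifact bucket (example)",
    "Parameters": {
        "Environment": {
            "Type": "String",
            "AllowedValues": ["dev", "staging", "prod"],
        }
    },
    "Resources": {
        "ArtifactBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"},
                "Tags": [{"Key": "Environment", "Value": {"Ref": "Environment"}}],
            },
        }
    },
    "Outputs": {
        # Ref on an AWS::S3::Bucket resource returns the bucket name
        "BucketName": {"Value": {"Ref": "ArtifactBucket"}}
    },
}

if __name__ == "__main__":
    print(json.dumps(TEMPLATE, indent=2))
```

Saved as `template.json`, the same file could be deployed once per environment, for example with `aws cloudformation deploy --template-file template.json --stack-name app-dev --parameter-overrides Environment=dev`. The stack name here is hypothetical.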

To close the DevOps set of questions, here's a table summarizing the different AWS services used in this area, as well as their use cases:

| Service | Purpose | Use cases in DevOps |
|---|---|---|
| AWS CodePipeline | Automates CI/CD workflows across multiple environments | Continuous integration and deployment for streamlined updates |
| AWS CodeBuild | Compiles code, runs tests, and produces deployable artifacts | Build automation, testing, and artifact generation |
| AWS CodeDeploy | Manages application deployments to various AWS environments (e.g., EC2, Lambda) | Automated deployments across environments with rollback capabilities |
| Amazon ECS | Container management for deploying Docker containers | Running microservices, simplifying app deployment and management |
| AWS Secrets Manager | Stores and manages sensitive information securely | Secure storage of API keys, passwords, and other sensitive data |
| AWS CloudFormation | Automates infrastructure setup through code (IaC) | Infrastructure consistency, environment replication, IaC best practices |

AWS solution architect interview questions

For solution architects, the focus is on designing AWS solutions that meet specific requirements. This segment tests the ability to create scalable, efficient, and cost-effective systems using AWS, highlighting architectural best practices.

What is the role of an AWS solution architect?

AWS solutions architects design and oversee applications on AWS, ensuring scalability and optimal performance. They guide developers, system administrators, and customers on utilizing AWS effectively for their business needs and communicate complex concepts to both technical and non-technical stakeholders.

What are the key security best practices for AWS EC2?

Essential EC2 security practices include using IAM for access management, restricting access to trusted hosts, minimizing permissions, disabling password-based logins for AMIs, and implementing multi-factor authentication for enhanced security.
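"Restricting access to trusted hosts" usually means auditing security group rules. This sketch scans ingress rules in the `IpPermissions` shape used by the EC2 API, such as `authorize_security_group_ingress`. It flags SSH or RDP open to the whole IPv4 internet. The rules and CIDRs are examples (203.0.113.0/24 is a documentation range), and IPv6 ranges are left out for brevity.

```python
import ipaddress

# Example ingress rules in the EC2 API's IpPermissions shape
RULES = [
    {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
     "IpRanges": [{"CidrIp": "0.0.0.0/0", "Description": "public HTTPS"}]},
    {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
     "IpRanges": [{"CidrIp": "203.0.113.0/24", "Description": "office VPN"}]},
]

ADMIN_PORTS = {22, 3389}  # SSH and RDP


def open_admin_ports(rules: list[dict]) -> list[tuple[int, str]]:
    """Flag (port, cidr) pairs where SSH or RDP is open to the whole IPv4 internet."""
    findings = []
    for rule in rules:
        if rule["IpProtocol"] == "-1":  # "-1" means all protocols and all ports
            ports = range(0, 65536)
        else:
            ports = range(rule["FromPort"], rule["ToPort"] + 1)
        exposed = sorted(ADMIN_PORTS.intersection(ports))
        for ip_range in rule.get("IpRanges", []):
            # A /0 prefix matches every IPv4 address
            if ipaddress.ip_network(ip_range["CidrIp"]).prefixlen == 0:
                findings.extend((port, ip_range["CidrIp"]) for port in exposed)
    return findings


if __name__ == "__main__":
    print(open_admin_ports(RULES))  # [] : HTTPS is public, SSH is limited to the VPN range
```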

How do you ensure multi-region redundancy in an AWS architecture?

To design for multi-region redundancy, deploy critical resources like EC2 instances, RDS databases, and S3 buckets in multiple AWS Regions. Use Route 53 routing policies (such as failover, latency-based, or geolocation routing) to direct users to a healthy Region, and use S3 Cross-Region Replication for data backup. Employ active-active or active-passive configurations depending on your failover strategy. AWS Global Accelerator can route traffic to the nearest healthy endpoint, and CloudWatch can monitor performance and replication.
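The difference between active-passive and active-active setups comes down to how a Region is picked. Here is a simplified model of the two routing decisions, loosely mirroring Route 53 failover and latency-based routing. The `healthy` dict stands in for Route 53 health checks, the latency numbers are made up, and no AWS API is called.

```python
def failover_region(priority: list[str], healthy: dict[str, bool]) -> str:
    """Active-passive: send all traffic to the first healthy Region in priority order."""
    for region in priority:
        if healthy.get(region, False):
            return region
    raise RuntimeError("no healthy Region available")


def lowest_latency_region(latency_ms: dict[str, float], healthy: dict[str, bool]) -> str:
    """Active-active: send each user to the healthy Region with the lowest latency."""
    candidates = {r: ms for r, ms in latency_ms.items() if healthy.get(r, False)}
    if not candidates:
        raise RuntimeError("no healthy Region available")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    health = {"us-east-1": False, "eu-west-1": True, "ap-southeast-1": True}
    print(failover_region(["us-east-1", "eu-west-1"], health))  # eu-west-1
    latency = {"us-east-1": 20.0, "eu-west-1": 90.0, "ap-southeast-1": 180.0}
    print(lowest_latency_region(latency, health))  # eu-west-1
```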

What are the strategies to create a highly available and fault-tolerant AWS architecture for critical web applications?

Building a highly available and fault-tolerant architecture on AWS involves several strategies to reduce the impact of failure and ensure continuous operation. Key principles include:

- Implement redundancy across system components to eliminate single points of failure.

- Use load balancing to distribute traffic evenly and ensure optimal performance.

- Set up automated monitoring for real-time failure detection and response, design systems to scale with varying loads, and use a distributed architecture to enhance fault tolerance.

- Employ fault isolation, regular backups, and disaster recovery plans for data protection and quick recovery.

- Design for graceful degradation so the application keeps its core functionality during outages, and use continuous testing and deployment practices to improve system reliability.
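Graceful degradation often means serving a simpler answer when a dependency fails, rather than failing the whole request. The sketch returns generic items when a hypothetical recommendation service times out or refuses connections, along with a flag the caller can log or surface. All names and items are illustrative.

```python
from typing import Callable

# Hypothetical generic items shown when personalization is unavailable
BESTSELLERS = ("book-1", "book-2", "book-3")


def recommendations_for(user_id: str,
                        recommender: Callable[[str], list[str]],
                        fallback: tuple[str, ...] = BESTSELLERS) -> tuple[list[str], bool]:
    """Return (items, degraded). If the recommendation dependency fails,
    serve generic items instead of failing the whole page."""
    try:
        return recommender(user_id), False
    except (ConnectionError, TimeoutError):
        return list(fallback), True


def unavailable_recommender(user_id: str) -> list[str]:
    """Stand-in for a recommendation service that is currently down."""
    raise TimeoutError("recommendation service did not respond")


if __name__ == "__main__":
    print(recommendations_for("u-1", lambda uid: [f"picked-for-{uid}"]))
    print(recommendations_for("u-1", unavailable_recommender))
```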

Explain how you would choose between Amazon RDS, Amazon DynamoDB, and Amazon Redshift for a data-driven application.

Choosing between Amazon RDS, DynamoDB, and Redshift for a data-driven application depends on your specific needs:

- Amazon RDS is ideal for applications that require a traditional relational database with standard SQL support, transactions, and complex queries.

- Amazon DynamoDB suits applications needing a highly scalable, NoSQL database with fast, predictable performance at any scale. It's great for flexible data models and rapid development.

- Amazon Redshift is best for analytical applications requiring complex queries over large datasets, offering fast query performance by using columnar storage and data warehousing technology.

What considerations would you take into account when migrating an existing on-premises application to AWS? Use an example of your choice.

When moving a company's customer relationship management (CRM) software from an in-house server setup to Amazon Web Services (AWS), it's essential to follow a strategic framework similar to the one AWS suggests, tailored for this specific scenario:

- Initial preparation and strategy formation

  - Evaluate the existing CRM setup to identify limitations and areas for improvement.

  - Set clear migration goals, such as achieving better scalability, enhancing data analysis features, or cutting down on maintenance costs.

  - Identify AWS solutions required, like leveraging Amazon EC2 for computing resources and Amazon RDS for managing the database.

- Assessment and strategy planning

  - Catalog CRM components to prioritize which parts to migrate first.

  - Select appropriate migration techniques, for example, moving the CRM database with AWS Database Migration Service (DMS).

  - Plan for a steady network connection during the move, potentially using AWS Direct Connect.

- Execution and validation

  - Map out a detailed migration strategy beginning with less critical CRM modules as a trial run.

  - Secure approval from key stakeholders before migrating the main CRM functions, employing AWS services.

  - Test the migrated CRM's performance and security on AWS, making adjustments as needed.

- Transition to cloud operation

  - Switch to fully managing the CRM application in the AWS environment, phasing out old on-premises components.

  - Utilize AWS's suite of monitoring and management tools for continuous oversight and refinement.

  - Apply insights gained from this migration to inform future transitions, considering broader cloud adoption across other applications.

This approach ensures the CRM migration to AWS is aligned with strategic business objectives, maximizing the benefits of cloud computing in terms of scalability, efficiency, and cost savings.

Describe how you would use AWS services to implement a microservices architecture.

Implementing a microservice architecture involves breaking down a software application into small, independent services that communicate through APIs. Here’s a concise guide to setting up microservices:

- Adopt Agile Development: Use agile methodologies to facilitate rapid development and deployment of individual microservices.

- Embrace API-First Design: Develop APIs for microservices interaction first to ensure clear, consistent communication between services.

- Leverage CI/CD Practices: Implement continuous integration and continuous delivery (CI/CD) to automate testing and deployment, enhancing development speed and reliability.

- Incorporate Twelve-Factor App Principles: Apply these principles to create scalable, maintainable services that are easy to deploy on cloud platforms like AWS.

- Choose the Right Architecture Pattern: Consider API-driven, event-driven, or data streaming patterns based on your application’s needs to optimize communication and data flow between services.

- Leverage AWS for Deployment: Use AWS services such as container technologies for scalable microservices or serverless computing to reduce operational complexity and focus on building application logic.

- Implement Serverless Principles: When appropriate, use serverless architectures to eliminate infrastructure management, scale automatically, and pay only for what you use, enhancing system efficiency and cost-effectiveness.

- Ensure System Resilience: Design microservices for fault tolerance and resilience, using AWS's built-in availability features to maintain service continuity.

- Focus on Cross-Service Aspects: Address distributed monitoring, logging, tracing, and data consistency to maintain system health and performance.

- Review with AWS Well-Architected Framework: Use the AWS Well-Architected Tool to evaluate your architecture against AWS’s best practices, ensuring reliability, security, efficiency, and cost-effectiveness.

By carefully considering these points, teams can effectively implement a microservice architecture that is scalable, flexible, and suitable for their specific application needs, all while leveraging AWS’s extensive cloud capabilities.
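As one concrete serverless building block, here is a sketch of a single microservice endpoint: a Python Lambda function behind an API Gateway REST API using Lambda proxy integration. Proxy integrations pass the request as a dict (path parameters under `pathParameters`, which can be `null`). They expect a dict back with `statusCode`, optional `headers`, and a string `body`. The "orders" service, the `orderId` parameter, and the response fields are hypothetical.

```python
import json


def lambda_handler(event, context):
    """Handler for a hypothetical "orders" microservice (GET /orders/{orderId})."""
    order_id = (event.get("pathParameters") or {}).get("orderId")
    if order_id is None:
        return _response(400, {"error": "orderId is required"})
    # A real service would read the order from its own data store here.
    return _response(200, {"orderId": order_id, "status": "PENDING"})


def _response(status_code: int, body: dict) -> dict:
    # Proxy integrations expect statusCode, optional headers, and a string body.
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


if __name__ == "__main__":
    print(lambda_handler({"pathParameters": {"orderId": "42"}}, None))
```

Each service owns its API contract and data. Teams can then deploy and scale this function independently of the other services.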

What is the relationship between AWS Glue and AWS Lake Formation?

AWS Lake Formation builds on AWS Glue's infrastructure, incorporating its ETL capabilities, control console, data catalog, and serverless architecture. While AWS Glue focuses on ETL processes, Lake Formation adds features for building, securing, and managing data lakes, enhancing Glue's functions.

For AWS Glue interview questions, it's important to understand how Glue supports Lake Formation. Candidates should be ready to discuss Glue's role in data lake management within AWS, showing their grasp of both services' integration and functionalities in the AWS ecosystem. This demonstrates a deep understanding of how these services collaborate to process and manage data efficiently.

How do you optimize AWS costs for a high-traffic web application?

To optimize AWS costs for a high-traffic application, you can start by using AWS Cost Explorer and AWS Budgets to monitor and manage spending. Then, consider these strategies:

- Use Reserved and Spot Instances for predictable and flexible workloads, respectively.

- Use Auto Scaling to adjust resource allocation based on demand, reducing costs during low-traffic periods.

- Optimize storage with Amazon S3 lifecycle policies and S3 Intelligent-Tiering to move infrequently accessed data to cost-effective storage classes.

- Implement caching with Amazon CloudFront and Amazon ElastiCache to reduce repeated requests to backend resources, saving bandwidth and compute costs.

This approach ensures the application is cost-efficient without compromising on performance or availability.
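For the storage strategy above, here is a lifecycle rule in the shape S3's `put_bucket_lifecycle_configuration` API accepts, together with a small helper that shows which storage class an object would be in at a given age. The `logs/` prefix and the day counts are example values. Real transitions run asynchronously, so the helper's day boundaries are approximate.

```python
# Lifecycle configuration in the shape S3's PutBucketLifecycleConfiguration
# API expects; the prefix and day counts are example values.
LIFECYCLE_CONFIGURATION = {
    "Rules": [
        {
            "ID": "archive-then-expire-logs",
            "Filter": {"Prefix": "logs/"},
            "Status": "Enabled",
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 365},
        }
    ]
}


def storage_class_on_day(rule: dict, age_days: int) -> str:
    """Approximate storage class of an object `age_days` after creation."""
    expiration = rule.get("Expiration", {}).get("Days")
    if expiration is not None and age_days >= expiration:
        return "EXPIRED"
    current = "STANDARD"
    for transition in sorted(rule.get("Transitions", []), key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


if __name__ == "__main__":
    rule = LIFECYCLE_CONFIGURATION["Rules"][0]
    for day in (0, 45, 120, 400):
        print(day, storage_class_on_day(rule, day))
    # Applying it requires boto3 and AWS credentials, for example:
    # boto3.client("s3").put_bucket_lifecycle_configuration(
    #     Bucket="example-log-bucket", LifecycleConfiguration=LIFECYCLE_CONFIGURATION)
```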

What are the key pillars of the AWS Well-Architected Framework?

The AWS Well-Architected Framework provides a structured approach to designing secure, efficient, and resilient AWS architectures. It consists of six pillars:

- Operational excellence: Focuses on supporting development and operations through monitoring, incident response, and automation.

- Security: Covers protecting data, systems, and assets through identity management, encryption, and incident response.

- Reliability: Involves building systems that can recover from failures, scaling resources dynamically, and handling network issues.

- Performance efficiency: Encourages the use of scalable resources and optimized workloads.

- Cost optimization: Focuses on managing costs by selecting the right resources and using pricing models such as Reserved Instances.

- Sustainability: Focuses on minimizing the environmental impact of running cloud workloads, for example by maximizing utilization and choosing more efficient services.

Understanding these pillars allows AWS architects to build well-balanced solutions that align with best practices for security, performance, reliability, and cost management.

Advanced AWS Interview Questions and Answers

AWS data engineer interview questions

Addressing data engineers, this section dives into AWS services for data handling, including warehousing and real-time processing. It looks at the expertise required to build scalable data pipelines with AWS.

What are the differences between Amazon Redshift, RDS, and S3, and when should each one be used?

- Amazon S3 is an object storage service that provides scalable and durable storage for any amount of data. It can be used to store raw, unstructured data like log files, CSVs, images, etc.

- Amazon Redshift is a cloud data warehouse optimized for analytics and business intelligence. It integrates with S3 and can load data stored there to perform complex queries and generate reports.

- Amazon RDS provides managed relational databases like PostgreSQL, MySQL, etc. It can power transactional applications that need ACID-compliant databases with features like indexing, constraints, etc.

Describe a scenario where you would use Amazon Kinesis over AWS Lambda for data processing. What are the key considerations?

Kinesis can handle large amounts of streaming data and lets consumer applications read and process the streams. A typical scenario is ingesting clickstream events from a high-traffic website for real-time analytics.

Some of the key considerations are illustrated below:

- Data volume: Each Kinesis shard ingests up to 1 MB (or 1,000 records) per second, and a stream reaches higher throughput by adding shards. A synchronous Lambda invocation, by contrast, is limited to a 6 MB payload, so Kinesis is better suited to high-throughput streams.

- Stream processing: Kinesis consumers can process data continuously as it arrives, rather than in discrete Lambda invocations, which helps with low-latency processing.

- Replay capability: Kinesis streams retain data for a configurable retention period (24 hours by default), so data can be replayed and reprocessed if needed. Lambda does not retain events for replay.

- Ordering: Records with the same partition key go to the same shard and are read in order. Lambda, on the other hand, may process independently invoked events out of order.

- Scaling and parallelism: Kinesis scales to handle increased load by adding shards, whereas Lambda may need additional orchestration.

- Integration: Kinesis integrates well with other AWS services like Firehose, Redshift, EMR for analytics.

Overall, Kinesis suits high-volume, continuous, ordered, and replayable stream processing, such as real-time analytics, because it provides native streaming support where Lambda takes a batch-oriented approach.
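The ordering point can be made concrete with a simplified, local simulation of how Kinesis assigns records to shards. The MD5 hash of each record's partition key is mapped into a 128-bit hash key range owned by one shard. As a result, all records that share a key land on the same shard in arrival order. The sketch assumes the shards split the hash space evenly, although real streams can have uneven ranges after resharding. The sensor IDs are hypothetical, and the code does not call the Kinesis API.

```python
import hashlib
from collections import defaultdict

HASH_SPACE = 2 ** 128  # Kinesis hash keys are 128-bit integers


def shard_for_key(partition_key: str, num_shards: int) -> int:
    """Return the index of the shard that owns `partition_key`,
    assuming the shards split the hash key space into equal ranges."""
    # MD5 of the partition key gives a 128-bit integer hash key.
    hash_key = int(hashlib.md5(partition_key.encode("utf-8")).hexdigest(), 16)
    range_size = HASH_SPACE // num_shards
    # Clamp so the last shard also absorbs the remainder of an uneven split.
    return min(hash_key // range_size, num_shards - 1)


def route(records, num_shards):
    """Group (partition_key, payload) records by shard, preserving arrival order."""
    shards = defaultdict(list)
    for key, payload in records:
        shards[shard_for_key(key, num_shards)].append((key, payload))
    return dict(shards)


if __name__ == "__main__":
    events = [("sensor-a", 1), ("sensor-b", 1), ("sensor-a", 2),
              ("sensor-c", 1), ("sensor-a", 3), ("sensor-b", 2)]
    for shard, recs in sorted(route(events, num_shards=4).items()):
        print(shard, recs)
```

Different keys may share a shard. Within a shard, however, the events for any single key, such as `sensor-a`, always keep their original order.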

To learn more about data streaming, our course Streaming Data with AWS Kinesis and Lambda teaches you how to use these technologies to ingest data from millions of sources and analyze it in real time. The course can also help you prepare for AWS Lambda interview questions.

What are the key differences between batch and real-time data processing? When would you choose one approach over the other for a data engineering project?

Batch processing involves collecting data over a period of time and processing it in large chunks, or batches. This works well for analyzing historical data that is not time-sensitive.

Real-time (stream) processing analyzes data continuously as it arrives in small increments, which allows fresh, frequently updated data to be analyzed.

For a data engineering project, real-time streaming could be chosen when:

- You need immediate insights and can't wait for a batch process to run. For example, fraud detection.

- The data is constantly changing and analysis needs to keep up, like social media monitoring.

- Low latency is required, like for automated trading systems.

Batch processing may be better when:

- Historical data needs complex modeling or analysis, like demand forecasting.

- Data comes from various sources that only provide periodic dumps.

- Lower processing cost matters more than processing speed.

In short, real-time processing is best for rapidly changing data that needs continuous analysis, while batch processing suits periodically available data that requires historical modeling.
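The difference can be sketched in a few lines of plain Python using a hypothetical fraud-detection rule: flag an account whose running total exceeds a threshold. Both versions compute the same totals. The streaming version, however, can raise its alert as soon as the offending event arrives, while the batch version only produces results once the whole dataset has been collected. This is a conceptual illustration, not a Kinesis or AWS Glue implementation. The account IDs and threshold are made up.

```python
from collections import defaultdict


def batch_totals(transactions):
    """Batch: process the whole collected dataset in one pass after the fact."""
    totals = defaultdict(float)
    for account, amount in transactions:
        totals[account] += amount
    return dict(totals)


class StreamingTotals:
    """Streaming: update state per event and react immediately."""

    def __init__(self, alert_threshold: float):
        self.alert_threshold = alert_threshold
        self.totals = defaultdict(float)
        self.alerts = []

    def on_event(self, account: str, amount: float) -> None:
        self.totals[account] += amount
        # Flag an account the moment its running total crosses the threshold,
        # instead of waiting for a scheduled batch run.
        if self.totals[account] > self.alert_threshold and account not in self.alerts:
            self.alerts.append(account)


if __name__ == "__main__":
    events = [("acct-1", 120.0), ("acct-2", 40.0), ("acct-1", 900.0), ("acct-2", 15.0)]
    stream = StreamingTotals(alert_threshold=1000.0)
    for account, amount in events:
        stream.on_event(account, amount)
    print("streaming alerts:", stream.alerts)
    print("same totals:", dict(stream.totals) == batch_totals(events))
```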

How can you automate schema evolution in a data pipeline on AWS?

Schema evolution can be managed with AWS Glue DynamicFrames, which tolerate inconsistent schemas, together with Glue crawlers, which can detect schema changes and automatically update the Glue Data Catalog. To avoid breaking downstream processes, add schema validation steps using tools like Deequ (an open-source data quality library from AWS Labs), or integrate custom logic into your ETL scripts to log and resolve mismatches.
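The "custom logic" option can be as simple as comparing the schema recorded in the Data Catalog with the schema of an incoming batch before loading it. The sketch below is hypothetical: `diff_schemas`, the column names, and the rule that only added columns are non-breaking are illustrative assumptions. In practice, you might fetch the catalog schema with the Glue `get_table` call in boto3 and take the incoming schema from the DynamicFrame, but the code makes neither call.

```python
def diff_schemas(catalog: dict, incoming: dict) -> dict:
    """Compare a catalog schema with the schema of newly arrived data.

    Both arguments map column name -> type string (e.g. "string", "bigint").
    """
    added = sorted(set(incoming) - set(catalog))
    removed = sorted(set(catalog) - set(incoming))
    retyped = sorted(c for c in set(catalog) & set(incoming) if catalog[c] != incoming[c])
    return {
        "added": added,
        "removed": removed,
        "type_changed": retyped,
        # Treat new columns as backward-compatible; dropped or retyped columns
        # are flagged because they can break downstream queries.
        "breaking": bool(removed or retyped),
    }


if __name__ == "__main__":
    catalog_schema = {"order_id": "bigint", "amount": "double", "country": "string"}
    new_batch_schema = {"order_id": "bigint", "amount": "string",
                        "country": "string", "channel": "string"}
    report = diff_schemas(catalog_schema, new_batch_schema)
    print(report)
    if report["breaking"]:
        print("Halting load and logging mismatch for review")
```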

How do you handle schema-on-read vs schema-on-write in AWS data lakes?

Schema-on-read is commonly used in data lakes where raw, semi-structured data is stored (e.g., in S3), and the schema is applied only during query time using tools like Athena or Redshift Spectrum. This approach offers flexibility for diverse data sources. Schema-on-write, often used in RDS or Redshift, enforces structure upfront and is preferred for transactional or structured datasets needing strict data validation.
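The contrast can be illustrated with plain Python on a hypothetical three-column event schema. In the schema-on-read version, raw JSON lines are kept untouched, and the schema is applied only at query time, with casts and nulls for missing fields. This is conceptually similar to querying S3 files through an Athena table definition. In the schema-on-write version, a record that does not match the schema is rejected before it is stored. This is a conceptual sketch, not how either service is implemented.

```python
import json

# Hypothetical table schema: column name -> Python type used as a cast.
SCHEMA = {"user_id": int, "event": str, "amount": float}


def write_with_schema(record: dict, table: list) -> None:
    """Schema-on-write: validate and coerce before storing; reject bad rows."""
    missing = [col for col in SCHEMA if col not in record]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    table.append({col: cast(record[col]) for col, cast in SCHEMA.items()})


def read_with_schema(raw_lines: list) -> list:
    """Schema-on-read: raw JSON is stored as-is; the schema is applied when querying."""
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        # Missing fields become None instead of failing the whole query.
        rows.append({col: (cast(record[col]) if col in record else None)
                     for col, cast in SCHEMA.items()})
    return rows


if __name__ == "__main__":
    raw = ['{"user_id": "7", "event": "click", "amount": "1.5"}',
           '{"user_id": 8, "event": "view"}']
    print(read_with_schema(raw))
    table = []
    write_with_schema(json.loads(raw[0]), table)
    try:
        write_with_schema(json.loads(raw[1]), table)
    except ValueError as err:
        print("rejected at write time:", err)
```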

What is an operational data store, and how does it complement a data warehouse?

An operational data store (ODS) is a database designed to support real-time business operations and analytics. It acts as an interim platform between transactional systems and the data warehouse.

While a data warehouse contains high-quality data optimized for business intelligence and reporting, an ODS contains up-to-date, subject-oriented, integrated data from multiple sources.

Below are the key features of an ODS:

- Provides real-time data for operations monitoring and decision-making.

- Integrates live data from multiple sources.

- Is optimized for fast queries on current data rather than long-term storage.

- Contains granular, atomic data, whereas a warehouse typically holds aggregated data.

An ODS and data warehouse are complementary systems. ODS supports real-time operations using current data, while the data warehouse enables strategic reporting and analysis leveraging integrated historical data. When combined, they provide a comprehensive platform for both operational and analytical needs.
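One way to picture these complementary roles: the ODS keeps the latest state of each entity for operational lookups, while the warehouse appends every version for historical analysis. The toy classes below are hypothetical and ignore storage, indexing, and ETL. They only illustrate the difference between upserting and appending.

```python
from dataclasses import dataclass, field


@dataclass
class OperationalDataStore:
    """Keeps only the current state of each entity (upsert by key)."""
    current: dict = field(default_factory=dict)

    def apply(self, key: str, record: dict) -> None:
        self.current[key] = record  # overwrite: the latest value wins


@dataclass
class DataWarehouse:
    """Appends every version so the full history is available for analysis."""
    history: list = field(default_factory=list)

    def load(self, key: str, record: dict, loaded_at: str) -> None:
        self.history.append({"key": key, "loaded_at": loaded_at, **record})


if __name__ == "__main__":
    ods, dwh = OperationalDataStore(), DataWarehouse()
    updates = [("order-1", {"status": "placed"}, "2025-01-01"),
               ("order-1", {"status": "shipped"}, "2025-01-02")]
    for key, record, ts in updates:
        ods.apply(key, record)
        dwh.load(key, record, ts)
    print(ods.current)        # only the latest status
    print(len(dwh.history))   # both versions retained
```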

How would you set up a data lake on AWS, and what services would you use?

To build a data lake on AWS, the core service to start with is Amazon S3 for storing raw, structured, and unstructured data in a scalable and durable way. Here’s a step-by-step approach and additional services involved:

- Storage layer: Use Amazon S3 to store large volumes of data. Organize data with a consistent prefix (folder) hierarchy based on data type, source, or freshness.

- Data cataloging: Use AWS Glue to create a data catalog, which makes it easier to search and query data stored in S3 by creating metadata definitions.

- Data transformation and ETL: Use AWS Glue ETL to prepare and transform raw data into a format that’s ready for analysis.

- Security and access control: Use AWS IAM and AWS Lake Formation to manage access and permissions, and AWS KMS to manage data encryption keys.

- Analytics and querying: Use Amazon Athena for ad-hoc querying, Amazon Redshift Spectrum for analytics, and Amazon QuickSight for visualization.

This setup provides a flexible, scalable data lake architecture that can handle large volumes of data for both structured and unstructured analysis.
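For the storage layer, one common convention is to encode zone, source, and date in the object key using Hive-style `key=value` prefixes. Glue crawlers and Athena can treat these prefixes as partition columns, so queries can skip irrelevant data. The helper below is a hypothetical sketch. The `raw` zone name, the `source`/`year`/`month`/`day` partition layout, and the file name are illustrative assumptions, not a required layout.

```python
from datetime import datetime, timezone


def data_lake_key(zone: str, source: str, event_time: datetime, filename: str) -> str:
    """Build an S3 object key with Hive-style partitions (key=value).

    `event_time` should be timezone-aware; it is normalized to UTC.
    """
    ts = event_time.astimezone(timezone.utc)
    return (f"{zone}/source={source}/"
            f"year={ts.year:04d}/month={ts.month:02d}/day={ts.day:02d}/{filename}")


if __name__ == "__main__":
    t = datetime(2025, 3, 7, 14, 30, tzinfo=timezone.utc)
    print(data_lake_key("raw", "orders", t, "part-0001.json"))
    # raw/source=orders/year=2025/month=03/day=07/part-0001.json
```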

Explain the different storage classes in Amazon S3 and when to use each.

Amazon S3 offers multiple storage classes, each optimized for specific use cases and cost requirements. The following table summarizes them:

| Storage class | Use case | Access frequency | Cost efficiency |
|---|---|---|---|
| S3 Standard | Frequently accessed data | High | Standard pricing |
| S3 Intelligent-Tiering | Unpredictable access patterns | Automatically adjusted | Cost-effective with automated tiering |
| S3 Standard-IA | Infrequently accessed but quickly retrievable | Low | Lower cost, rapid retrieval |
| S3 One Zone-IA | Infrequent access in a single AZ | Low | Lower cost, less redundancy |
| S3 Glacier Flexible Retrieval (formerly S3 Glacier) | Long-term archival with infrequent access | Rare | Low cost, retrieval in minutes or hours |
| S3 Glacier Deep Archive | Regulatory or compliance archiving | Very rare | Lowest cost, retrieval in 12–48 hours |

Understanding S3 storage classes helps optimize storage costs and access times based on specific data needs.

AWS Scenario-based Questions

Focusing on practical application, these questions assess problem-solving abilities in realistic scenarios, demanding a comprehensive understanding of how to employ AWS services to tackle complex challenges.

The following table summarizes scenarios that are typically asked about during AWS interviews, along with a description of each:

| Case type | Description |
|---|---|
| Application migration | A company plans to migrate its legacy application to AWS. The application is data-intensive and requires low-latency access for users across the globe. What AWS services and architecture would you recommend to ensure high availability and low latency? |
| Disaster recovery | Your organization wants to implement a disaster recovery plan for its critical AWS workloads with an RPO (Recovery Point Objective) of 5 minutes and an RTO (Recovery Time Objective) of 1 hour. Describe the AWS services you would use to meet these objectives. |
| DDoS attack protection | Consider a scenario where you need to design a scalable and secure web application infrastructure on AWS. The application should handle sudden spikes in traffic and protect against DDoS attacks. What AWS services and features would you use in your design? |
| Real-time data analytics | An IoT startup wants to process and analyze real-time data from thousands of sensors across the globe. The solution needs to be highly scalable and cost-effective. Which AWS services would you use to build this platform, and how would you ensure it scales with demand? |
| Large-volume data analysis | A financial services company requires a data analytics solution on AWS to process and analyze large volumes of transaction data in real time. The solution must also comply with stringent security and compliance standards. How would you architect this solution using AWS, and what measures would you put in place to ensure security and compliance? |
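For the disaster recovery scenario, a useful first step is to turn the RPO and RTO into a check that candidate designs must pass. Worst-case data loss must fit within 5 minutes, and estimated recovery time must fit within 60 minutes. The sketch below is hypothetical: `DrPlan`, the two candidate plans, and their timing figures are illustrative estimates, not AWS-published numbers for these strategies.

```python
from dataclasses import dataclass


@dataclass
class DrPlan:
    name: str
    replication_interval_min: float  # approximates the worst-case data loss window
    recovery_time_min: float         # estimated time to restore service


def meets_objectives(plan: DrPlan, rpo_min: float, rto_min: float) -> bool:
    """A plan meets the targets if its worst-case data loss fits within the RPO
    and its estimated recovery time fits within the RTO."""
    return plan.replication_interval_min <= rpo_min and plan.recovery_time_min <= rto_min


if __name__ == "__main__":
    candidates = [
        DrPlan("nightly backup and restore", 24 * 60, 240),
        DrPlan("continuous replication, pilot light", 1, 45),
    ]
    for plan in candidates:
        print(plan.name, "->", meets_objectives(plan, rpo_min=5, rto_min=60))
```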

Non-Technical AWS Interview Questions

Beyond technical prowess, understanding the broader impact of AWS solutions is vital to a successful interview. Below are a few such questions with example answers. The answers will differ from one candidate to another depending on their experience and background.

How do you stay updated with AWS and cloud technology trends?

- Expected from candidate: The interviewer wants to know about your commitment to continuous learning and how you keep your skills relevant. They are looking for specific resources or practices you use to stay informed.

- Example answer: "I stay updated by reading AWS official blogs and participating in community forums like the AWS subreddit. I also attend local AWS user group meetups and webinars. These activities help me stay informed about the latest AWS features and best practices."

Describe a time when you had to explain a complex AWS concept to someone without a technical background. How did you go about it?

- Expected from candidate: This question assesses your communication skills and ability to simplify complex information. The interviewer is looking for evidence of your teaching ability and patience.

- Example answer: "In my previous role, I had to explain cloud storage benefits to our non-technical stakeholders. I used the analogy of storing files in a cloud drive versus a physical hard drive, highlighting ease of access and security. This helped them understand the concept without getting into the technicalities."

What motivates you to work in the cloud computing industry, specifically with AWS?

- Expected from candidate: The interviewer wants to gauge your passion for the field and understand what drives you. They're looking for genuine motivations that align with the role and company values.

- Example answer: "What excites me about cloud computing, especially AWS, is its transformative power in scaling businesses and driving innovation. The constant evolution of AWS services motivates me to solve new challenges and contribute to impactful projects."

Can you describe a challenging project you managed and how you ensured its success?

- Expected from candidate: Here, the focus is on your project management and problem-solving skills. The interviewer is interested in your approach to overcoming obstacles and driving projects to completion.

- Example answer: "In a previous project, we faced significant delays due to resource constraints. I prioritized tasks based on impact, negotiated for additional resources, and kept clear communication with the team and stakeholders. This approach helped us meet our project milestones and ultimately deliver on time."

How do you handle tight deadlines when multiple projects are demanding your attention?

- Expected from candidate: This question tests your time management and prioritization skills. The interviewer wants to know how you manage stress and workload effectively.

- Example answer: "I use a combination of prioritization and delegation. I assess each project's urgency and impact, prioritize accordingly, and delegate tasks when appropriate. I also communicate regularly with stakeholders about progress and any adjustments needed to meet deadlines."

What do you think sets AWS apart from other cloud service providers?

- Expected from candidate: The interviewer is looking for your understanding of AWS's unique value proposition. The goal is to see that you have a good grasp of what makes AWS a leader in the cloud industry.

- Example answer: "AWS sets itself apart through its extensive global infrastructure, which offers unmatched scalability and reliability. Additionally, AWS's commitment to innovation, with a broad and deep range of services, allows for more flexible and tailored cloud solutions compared to its competitors."

How do you approach learning new AWS tools or services when they’re introduced?

- Expected from candidate: This question assesses your adaptability and learning style. The interviewer wants to see that you have a proactive approach to mastering new technologies, which is essential in the fast-evolving field of cloud computing.

- Example answer: "When AWS introduces a new service, I start by reviewing the official documentation and release notes to understand its purpose and functionality. I then explore hands-on tutorials and experiment in a sandbox environment for practical experience. If possible, I discuss the service with colleagues or participate in forums to see how others are leveraging it. This combination of theory and practice helps me get comfortable with new tools quickly."

Describe how you balance security and efficiency when designing AWS solutions.

- Expected from candidate: The interviewer is assessing your ability to think strategically about security while also considering performance. The goal is to see that you can balance best practices for security with the need for operational efficiency.

- Example answer: "I believe that security and efficiency go hand-in-hand. When designing AWS solutions, I start with a security-first mindset by implementing IAM policies, network isolation with VPCs, and data encryption. For efficiency, I ensure that these security practices don’t introduce unnecessary latency by optimizing configurations and choosing scalable services like AWS Lambda for event-driven workloads. My approach is to build secure architectures that are also responsive and cost-effective."

Conclusion

This article has offered a comprehensive roadmap of AWS interview questions for candidates at various levels of expertise—from those just starting to explore the world of AWS to seasoned professionals seeking to elevate their careers.

Whether you are preparing for your first AWS interview or aiming to secure a more advanced position, this guide serves as an invaluable resource. It prepares you not just to respond to interview questions but to engage deeply with the AWS platform, enhancing your understanding and application of its vast capabilities.


FAQs

Do I need an AWS certification to land a cloud-related job?

While not mandatory, AWS certifications like the AWS Certified Solutions Architect Associate or AWS Certified Developer Associate validate your expertise and enhance your resume. Many employers value certifications as proof of your skills, but hands-on experience is equally important.

What are the most important AWS services to focus on for interviews?

The key AWS services depend on the role you're applying for. Some universally important ones include:

- Compute: EC2, Lambda.

- Storage: S3, EBS, Glacier.

- Networking: VPC, Route 53, ELB.

- Security: IAM, KMS.

- Databases: RDS, DynamoDB.

- DevOps Tools: CloudFormation, CodePipeline.

What non-technical skills are essential for succeeding in an AWS interview?

In addition to technical expertise, employers often assess:

- Problem-solving: Can you architect scalable, cost-effective solutions?

- Communication: Are you able to explain technical concepts clearly to stakeholders?

- Time management: How do you prioritize tasks and meet deadlines in dynamic environments?

- Teamwork: Are you able to collaborate effectively in cross-functional teams?

What if I don’t know the answer to a technical question during an AWS interview?

It’s okay not to know everything. Instead of guessing, be honest:

- Explain how you would approach finding the answer (e.g., consulting AWS documentation or conducting tests).

- Highlight related knowledge that demonstrates your understanding of the broader concept.

How can I negotiate my salary for an AWS-related role?

- Research market rates for your role and location using sites like Glassdoor or Payscale.

- Highlight your certifications, relevant experience, and projects during negotiations.

- Demonstrate how your skills can bring value to the company, such as cost savings or improving infrastructure reliability.

What should I do after failing an AWS certification exam or interview?

- Identify your weak areas using feedback or your exam report.

- Create a study or practice plan to strengthen those areas.

- Leverage additional resources, like practice exams or hands-on labs.

- Don’t get discouraged—many professionals pass after their second or third attempt.

A multi-talented data scientist who enjoys sharing his knowledge and giving back to others, Zoumana is a YouTube content creator and a top tech writer on Medium. He finds joy in speaking, coding, and teaching. Zoumana holds two master’s degrees. The first is in computer science with a focus on Machine Learning from Paris, France, and the second is in Data Science from Texas Tech University in the US. His career path started as a Software Developer at Groupe OPEN in France, before moving on to IBM as a Machine Learning Consultant, where he developed end-to-end AI solutions for insurance companies. Zoumana then joined Axionable, the first Sustainable AI startup based in Paris and Montreal. There, he served as a Data Scientist and implemented AI products, mostly NLP use cases, for clients from France, Montreal, Singapore, and Switzerland. Additionally, 5% of his time was dedicated to Research and Development. He currently works as a Senior Data Scientist at IFC, a member of the World Bank Group.