It’s no secret how fast artificial intelligence has evolved in the last couple of years, especially in the field of large language models (LLMs).

Traditionally, to get a language model to perform a specific task, you'd need to train or fine-tune it on many labeled examples. This process can be time-consuming and resource-intensive. However, some techniques allow LLMs to tackle a wide range of tasks without task-specific training.

One of these techniques is called zero-shot prompting.

In this tutorial, we will:

- Learn what zero-shot prompting is.

- Explore the fundamental concepts behind zero-shot prompting.

- Examine how LLMs enable this capability.

- Learn how to create effective prompts for various tasks.

- Discover real-world applications and use cases.

- Understand the limitations and challenges of this approach.

This tutorial is part of my “Prompt Engineering: From Zero to Hero” series of blog posts:

- Prompt Engineering for Everyone

- Zero-Shot Prompting

- Few-Shot Prompting

- Prompt Chaining

What Is Zero-Shot Prompting?

Zero-shot prompting is a technique that uses LLMs' generalization capabilities to attempt new tasks without prior specific training or examples.

It uses LLMs' extensive pre-training on large and diverse datasets, enabling them to apply their broad knowledge to new tasks based solely on clear and concise instructions. While this technique can be highly effective, its success depends on the task's complexity and the prompt's quality.

In a nutshell, you describe the task you want the model to perform without providing examples. The model then accesses its extensive pre-trained knowledge to generate a relevant response.

This approach contrasts with one-shot or few-shot prompting, where you give the model one or a few examples to guide its output.

Zero-shot prompting showcases the impressive ability of LLMs to generalize their understanding across diverse domains. With just a clear instruction, you can tap into the model's knowledge base to generate creative, informative, or task-specific content without additional training.

Let’s go over a quick example:

Prompt: Classify the animal based on its characteristics. Animal: This creature has eight legs, spins webs, and often eats insects.

Output: Spider.

In this example, the prompt doesn't provide any examples of how to classify animals or which categories to use. It only defines the task (classify the animal) and supplies the characteristics to base the classification on.

The large language model uses its broad knowledge about animals and their traits to make the classification and correctly identifies that the described creature is a spider based on the key characteristics provided. It provides just the classification requested, demonstrating its ability to follow instructions precisely.
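To make the contrast with few-shot prompting concrete, here is a minimal sketch of how the two kinds of prompt could be assembled as plain strings. The helper names (`build_zero_shot_prompt`, `build_few_shot_prompt`) and the "Bird" example are illustrative assumptions, not part of any library, and no model is called.

```python
def build_zero_shot_prompt(instruction: str, input_label: str, input_text: str) -> str:
    """Return a prompt that states the task and the input, with no examples."""
    return f"{instruction}\n{input_label}: {input_text}"


def build_few_shot_prompt(instruction, input_label, output_label, examples, input_text):
    """Same task, but with worked (input, output) pairs placed before the real input."""
    lines = [instruction]
    for example_input, example_output in examples:
        lines.append(f"{input_label}: {example_input}")
        lines.append(f"{output_label}: {example_output}")
    lines.append(f"{input_label}: {input_text}")
    lines.append(f"{output_label}:")
    return "\n".join(lines)


instruction = "Classify the animal based on its characteristics."
description = "This creature has eight legs, spins webs, and often eats insects."

# Zero-shot: only the task description and the input.
zero_shot = build_zero_shot_prompt(instruction, "Animal", description)

# Few-shot: the same task plus one made-up demonstration (hypothetical example).
few_shot = build_few_shot_prompt(
    instruction,
    "Animal",
    "Classification",
    examples=[("This creature has feathers, lays eggs, and can fly.", "Bird")],
    input_text=description,
)

print(zero_shot)
print("---")
print(few_shot)
```

The only difference between the two prompts is the demonstration block, which is exactly what zero-shot prompting leaves out.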

For many tasks, zero-shot prompting removes the need for task-specific fine-tuning, dramatically expands the range of tasks a model can perform, and makes advanced capabilities more accessible to non-technical users.

How Zero-Shot Prompting Works

To understand how zero-shot prompting works, two important aspects must be clear: the pre-training of LLMs and the concept of prompt design.

Language model pre-training

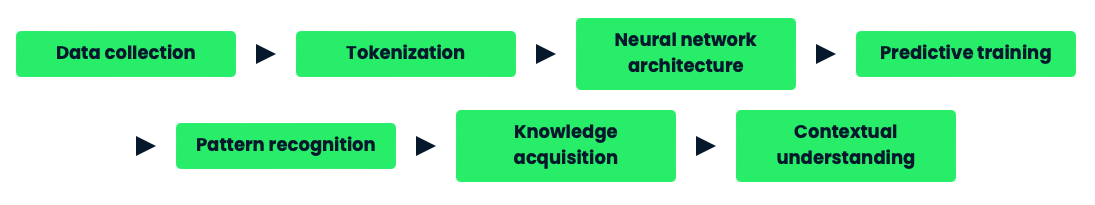

LLMs undergo extensive pre-training on massive datasets, which forms the foundation for their zero-shot capabilities. The pre-training process is as follows:

- Data collection: LLMs are trained on diverse text data from various sources. This data can amount to hundreds of billions of words.

- Tokenization: The text is broken down into smaller units called tokens, which can be words, subwords, or characters.

- Neural network architecture: The model, typically based on transformer architecture, processes these tokens through multiple layers of neural networks.

- Predictive training: The model is trained to predict the next token in a sequence, given the previous tokens.

- Pattern recognition: Through this process, the model learns to recognize patterns in language, including grammar, syntax, and semantic relationships.

- Knowledge acquisition: The model builds a broad knowledge base spanning various topics and domains.

- Contextual understanding: The model learns to understand context and generate contextually appropriate responses.

This gives LLMs the knowledge, understanding, and adaptability necessary for zero-shot prompting. This extensive pre-training allows these models to perform tasks they weren't explicitly trained for simply by understanding and responding to natural language prompts.
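The tokenization and predictive-training steps above can be illustrated with a deliberately tiny sketch. It assumes a whitespace tokenizer and a bigram count table instead of a subword tokenizer and a transformer, so it only shows the shape of the "predict the next token" objective, not how a real LLM is built. All function names and the three-sentence corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace (real LLMs use subword tokenizers)."""
    return text.lower().split()


def train_bigram_counts(corpus):
    """Count, for every token, which tokens follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for previous, following in zip(tokens, tokens[1:]):
            counts[previous][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never followed."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = [
    "the spider spins a web",
    "the spider eats insects",
    "the bird eats seeds",
]
counts = train_bigram_counts(corpus)

# "spider" follows "the" twice and "bird" once, so "spider" is predicted.
print(predict_next(counts, "the"))
```

A real model replaces the count table with learned parameters and conditions on the whole preceding context rather than one token, but the training signal is the same: guess what comes next.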

Prompt design

Effective prompt design is crucial for successful zero-shot prompting. It bridges the user's intent and the model's capabilities. But how do we make sure that our prompts are effective? Let’s have a look at these strategies:

Clear instructions

Prompts should provide explicit, unambiguous instructions that communicate the desired task to the LLM.

Example: "Translate the following English sentence to French:" This prompt clearly states the task (translation) and specifies both the source (English) and target (French) languages, leaving no room for misinterpretation.

Task framing

Frame the task in a way that leverages the model's pre-existing knowledge and capabilities.

Example: "Classify the sentiment of this movie review as positive, negative, or neutral:" This prompt frames the task as a classification problem with specific categories, a pattern the model is likely to have seen many times in its training data.

Context provision

Include relevant context or background information to help the model understand the task's requirements and constraints.

Example: "Given that 'Python' in this context refers to a programming language, explain what Python is:" This prompt provides crucial context (Python as a programming language, not a snake) to ensure the model focuses on the relevant information.

Output format specification

Clearly define the expected format of the response.

Example: "List three main causes of climate change, each in a separate bullet point." This prompt specifies both the number of items (three) and the format (bullet points) for the response, ensuring a structured output.
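When a prompt specifies an output format, it can be useful to check that a response actually follows it. The sketch below is one possible way to do that for the bullet-point example. The helper names are hypothetical, the extra "starting with '- '" wording is an assumption added to make the format checkable, and `sample_response` is a hand-written placeholder rather than real model output.

```python
def build_list_prompt(topic, item_count):
    """Ask for a fixed number of items, one per bullet point."""
    return (
        f"List {item_count} main causes of {topic}, "
        "each in a separate bullet point starting with '- '."
    )


def parse_bullets(response):
    """Extract the text of every line that starts with a common bullet marker."""
    items = []
    for line in response.splitlines():
        stripped = line.strip()
        if stripped.startswith(("- ", "* ", "• ")):
            items.append(stripped[2:].strip())
    return items


def follows_format(response, item_count):
    """True if the response contains exactly the requested number of bullets."""
    return len(parse_bullets(response)) == item_count


prompt = build_list_prompt("climate change", 3)

# Placeholder text standing in for a model response.
sample_response = "- First cause\n- Second cause\n- Third cause"

print(prompt)
print(parse_bullets(sample_response))
print(follows_format(sample_response, 3))
```

If the check fails, the prompt can be tightened or the request retried, which ties in with the iterative refinement strategy.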

Avoid ambiguity

Use precise language and avoid vague or open-ended instructions that could lead to misinterpretation by the model.

Example: "Describe the specific steps involved in the water cycle, starting with evaporation." This prompt uses precise language ("specific steps," "water cycle") and provides a starting point, reducing the chance of misinterpretation.

Use natural language

Phrase prompts in a way that feels natural and conversational.

Example: "Imagine you're a career counselor. What advice would you give to a recent college graduate looking for their first job?" This prompt uses a conversational tone and sets up a relatable scenario, encouraging a more natural and engaging response from the model.

Iterative refinement

If initial results are unsatisfactory, refine the prompt by adding more specificity or adjusting the language.

Example: Initial prompt: "Tell me about renewable energy." Refined prompt: "Explain the three most common types of renewable energy sources, their benefits, and current challenges in implementation." This shows how an initial broad prompt can be refined to produce a more focused and comprehensive response.
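One way to keep refinement manageable is to treat a prompt as a small set of named parts (context, instruction, output format) and change one part at a time. The following is a minimal sketch under that assumption. `PromptSpec` is a hypothetical helper, and the `output_format` sentence is an added illustration that does not appear in the example above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptSpec:
    """The parts of a zero-shot prompt, kept separate so each can be refined on its own."""
    instruction: str
    context: str = ""
    output_format: str = ""

    def render(self):
        """Join the non-empty parts, context first, into the final prompt text."""
        parts = [self.context, self.instruction, self.output_format]
        return "\n".join(part for part in parts if part)


# First attempt: a broad instruction with nothing else.
initial = PromptSpec(instruction="Tell me about renewable energy.")

# Refinement: a more specific instruction plus an explicit output format.
refined = replace(
    initial,
    instruction=(
        "Explain the three most common types of renewable energy sources, "
        "their benefits, and current challenges in implementation."
    ),
    output_format="Use one short paragraph per energy source.",
)

print(initial.render())
print("---")
print(refined.render())
```

Because the spec is immutable and each revision is a new object, earlier versions stay available for side-by-side comparison.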

Advantages of Zero-Shot Prompting

Zero-shot prompting has significant advantages over approaches that rely on task-specific training.

One of them is flexibility: LLMs can adapt to a range of tasks without requiring task-specific training data. This matters because a single model can perform numerous tasks across various domains, from language translation to sentiment analysis to content generation, and can be pointed at a new task immediately, without time-consuming retraining or fine-tuning.

Also, zero-shot models can handle new situations or tasks that weren't explicitly considered before. You can also customize the model's behavior to specific needs simply by adjusting the prompt, without modifying the underlying model.

As the model's general knowledge grows, its ability to handle diverse tasks improves naturally, without task-specific interventions.

Zero-shot prompting also offers substantial efficiency benefits, particularly in terms of time and resource savings. It eliminates the need for extensive task-specific datasets, which can be time-consuming and expensive to gather.

It also removes the requirement for labeled training data, reducing costs and potential biases associated with human annotators. Without task-specific fine-tuning, computational demands are significantly lower. This allows for faster deployment of new applications since there is no waiting period for data collection and model training. A single model can handle many tasks, reducing the need for multiple specialized models. Prompt engineering enables quick iterations and improvements without the need for retraining.

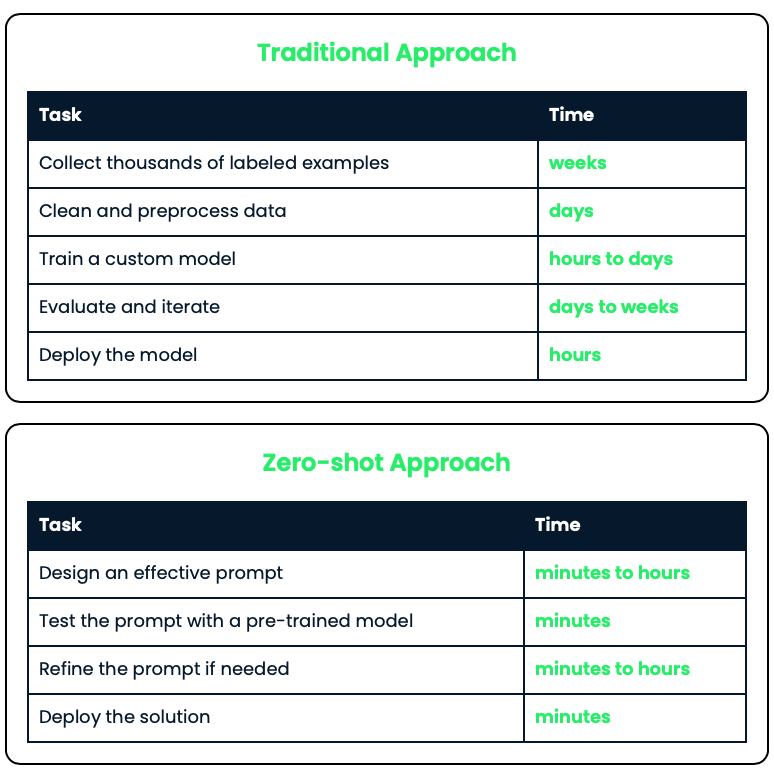

Let’s consider the traditional process versus zero-shot prompting for developing a sentiment analysis tool:

This comparison shows the significant time and resource savings achieved with zero-shot prompting, allowing for faster development and deployment of AI solutions across various fields.

Applications of Zero-Shot Prompting

Zero-shot prompting has led to many new uses in different fields. Let's look at some important areas where this technique makes a difference.

Text generation

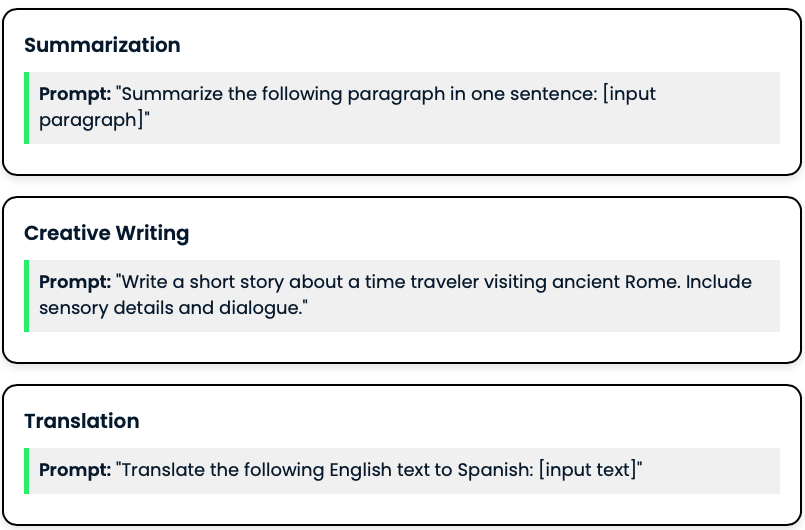

Zero-shot prompting works well across text generation tasks such as summarization, creative writing, and translation, allowing models to produce diverse types of content without task-specific training. Here are some examples:

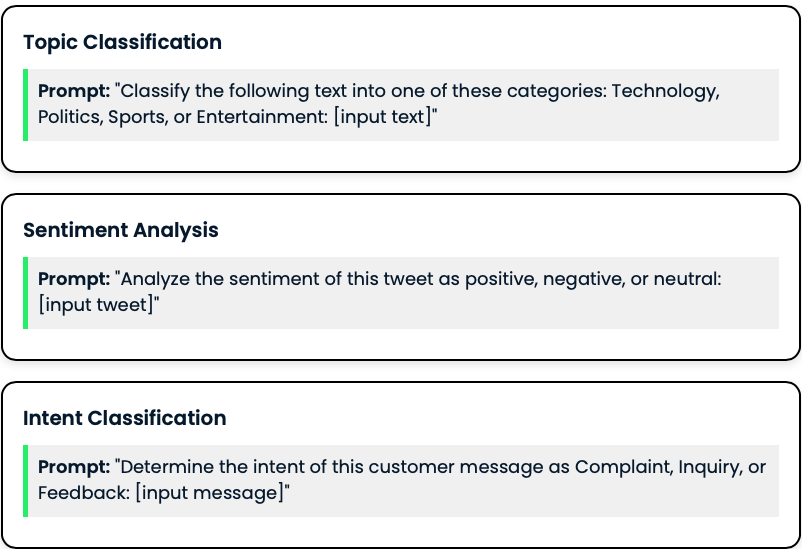

Classification and sentiment analysis

Zero-shot prompting can be used for various classification tasks, including topic classification, sentiment analysis, and intent classification, without needing labeled training data.

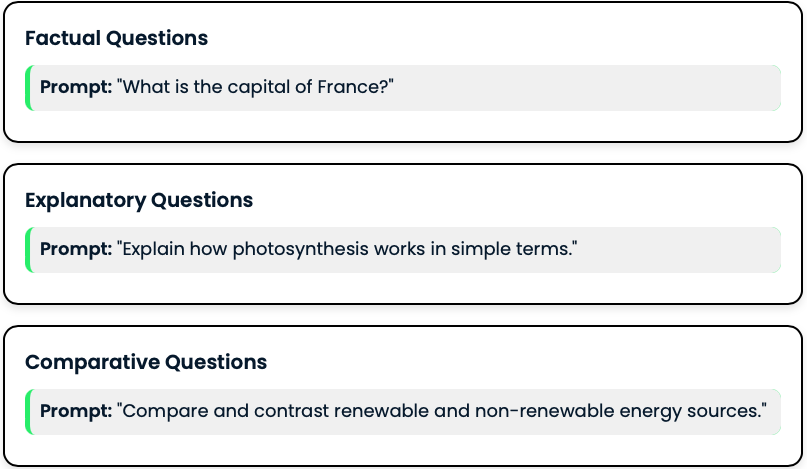
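As a rough sketch of zero-shot classification in code, the example below builds a sentiment prompt, sends it to a model, and maps the free-text reply back onto the allowed labels. The model is passed in as a plain callable so the snippet runs without any API. `fake_model` is a stand-in that always returns the same answer, and every function name here is an assumption rather than a real library call.

```python
from typing import Callable, Optional, Sequence

LABELS = ["positive", "negative", "neutral"]


def build_classification_prompt(text: str, labels: Sequence[str]) -> str:
    """State the task, list the allowed labels, and ask for the label only."""
    label_list = ", ".join(labels)
    return (
        f"Classify the sentiment of this movie review as one of: {label_list}.\n"
        f"Review: {text}\n"
        "Answer with the label only."
    )


def normalize_label(response: str, labels: Sequence[str]) -> Optional[str]:
    """Map a raw reply such as 'Positive.' to a known label, or None if it matches none."""
    cleaned = response.strip().strip(".!\"'").lower()
    for label in labels:
        if cleaned == label.lower():
            return label
    return None


def classify(text: str, labels: Sequence[str], model: Callable[[str], str]) -> Optional[str]:
    """Run one zero-shot classification using whatever model callable is supplied."""
    return normalize_label(model(build_classification_prompt(text, labels)), labels)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it ignores the prompt and answers 'Positive.'"""
    return "Positive."


print(classify("I loved every minute of it.", LABELS, fake_model))
```

Returning `None` for unrecognized replies makes it explicit when the model did not stick to the requested labels, instead of silently accepting arbitrary text.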

Question answering

Zero-shot prompting gives models the ability to answer many different questions, such as factual, explanatory, and comparative questions, based on their general knowledge and understanding of language.

Limitations of Zero-Shot Prompting

While zero-shot prompting has great capabilities, it's important to understand its limitations and challenges.

Accuracy

A model used with zero-shot prompting, while capable, might not always be as accurate as a model trained or fine-tuned for a specific task. This limitation comes from several factors:

- Lack of task-specific optimization: Fine-tuned models benefit from targeted training on task-specific data, which can lead to higher accuracy for that particular task.

- Interpretation variability: The model's interpretation of the prompt may not always align perfectly with the user's intent, potentially leading to inaccuracies.

- Complex task handling: Highly specialized or complex tasks might require a more nuanced understanding than what's possible through zero-shot prompting alone.

Prompt sensitivity

The performance of zero-shot prompting can be highly sensitive to the wording of the prompt.

- Wording variations: Slight changes in vocabulary or sentence structure can alter the model's interpretation of the task.

- Instruction clarity: Ambiguous or imprecise instructions may lead to unexpected or inconsistent results.

- Context dependency: The effectiveness of a prompt may vary depending on the specific input or context it's applied to.

Let’s look at a specific example: we apply three differently worded summarization prompts to the same sample text and explain the output of each. This will illustrate how slight changes in prompting can lead to different responses from the model.

We will use the following sample text:

Sample text: "The Industrial Revolution, which took place from the 18th to 19th centuries, was a period during which predominantly agrarian, rural societies in Europe and America became industrial and urban. Prior to the Industrial Revolution, which began in Britain in the late 1700s, manufacturing was often done in people's homes, using hand tools or basic machines. Industrialization marked a shift to powered, special-purpose machinery, factories and mass production. The iron and textile industries, along with the development of the steam engine, played central roles in the Industrial Revolution, which also saw improved systems of transportation, communication and banking."

Now, let's apply our three prompts:

Prompt 1: "Summarize this text in one sentence:"

Result 1: The Industrial Revolution was a transformative period in the 18th and 19th centuries that shifted European and American societies from agrarian to industrial, characterized by the rise of factories, mass production, and technological advancements in manufacturing, transportation, and communication.

This response provides a concise, one-sentence summary that captures the essence of the Industrial Revolution, its time frame, location, and key characteristics.

Prompt 2: "Provide a brief summary of the following:"

Result 2: The Industrial Revolution was a significant period of change from the 18th to 19th centuries, primarily affecting Europe and America. It marked a transition from agrarian, rural societies to industrial and urban ones. Before this era, manufacturing was typically done at home using simple tools. The revolution brought about powered machinery, factories, and mass production methods. Key industries like iron and textiles played crucial roles, as did the invention of the steam engine. This period also saw advancements in transportation, communication, and banking systems, fundamentally altering the economic and social landscape.

This response is longer and more detailed than the first. It covers more aspects of the Industrial Revolution, including its impact on specific industries and broader societal changes. The model interpreted "brief summary" as allowing for multiple sentences and more comprehensive coverage.

Prompt 3: "What's the main idea of this passage?"

Result 3: The main idea of this passage is the fundamental shift from agrarian to industrial society during the Industrial Revolution, highlighting the major changes in manufacturing methods, technology, and urban development that occurred in Europe and America from the 18th to 19th centuries.

This response focuses on the central theme or core concept of the passage rather than trying to summarize all the details. It identifies the key transformation (agrarian to industrial) and mentions broad areas of change without getting into specifics like the iron and textile industries or the steam engine.
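A comparison like the one above can be automated with a small harness that sends each prompt variant to a model and records simple statistics about the reply. The sketch below assumes the model is any callable from prompt text to response text. `fake_model` is a made-up stand-in that only mimics "shorter answer when asked for one sentence", and the sentence counter is a rough heuristic.

```python
import re

PROMPT_VARIANTS = [
    "Summarize this text in one sentence:",
    "Provide a brief summary of the following:",
    "What's the main idea of this passage?",
]


def count_sentences(text):
    """Rough count: split after '.', '!' or '?' followed by whitespace."""
    chunks = re.split(r"(?<=[.!?])\s+", text.strip())
    return len([chunk for chunk in chunks if chunk])


def compare_variants(text, variants, model):
    """Call the model once per prompt variant and collect length statistics."""
    report = []
    for variant in variants:
        response = model(f"{variant}\n\n{text}")
        report.append(
            {
                "prompt": variant,
                "words": len(response.split()),
                "sentences": count_sentences(response),
            }
        )
    return report


def fake_model(prompt):
    """Stand-in for a real LLM: one sentence if asked for one, otherwise two."""
    if "one sentence" in prompt:
        return "A single-sentence summary."
    return "A first sentence. A second sentence."


report = compare_variants("Some passage to summarize.", PROMPT_VARIANTS, fake_model)
for row in report:
    print(row)
```

With a real model in place of `fake_model`, the same report makes it easy to spot which wording yields the length and level of detail you want.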

Bias

Pre-trained models used for zero-shot prompting can exhibit biases present in their training data, which can lead to problematic outputs:

- Demographic biases: Models may produce biased results related to race, gender, age, or other demographic factors.

- Cultural biases: The model's responses might favor certain cultural perspectives over others.

- Recency bias: Information more prevalent in the training data (often more recent) may be overrepresented in the model's knowledge.

- Stereotyping: The model might reinforce harmful stereotypes present in its training data.

Understanding these limitations and challenges is key to using zero-shot prompting effectively. Be aware of potential inaccuracies, design prompts carefully, and critically evaluate outputs for bias.
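One simple way to start evaluating outputs for bias is a counterfactual check: send the same prompt several times, changing only a demographic term, and see whether the answer changes. The sketch below illustrates the idea under strong simplifying assumptions. It is not a full bias audit, the template and group terms are invented for illustration, and both "models" are hand-written stand-ins rather than real LLMs.

```python
def build_counterfactual_prompts(template, groups):
    """Fill the same template once per group so only the demographic term differs."""
    return {group: template.format(group=group) for group in groups}


def probe_consistency(template, groups, model):
    """Return each group's answer and whether all answers are identical."""
    prompts = build_counterfactual_prompts(template, groups)
    answers = {group: model(prompt).strip().lower() for group, prompt in prompts.items()}
    is_consistent = len(set(answers.values())) <= 1
    return answers, is_consistent


TEMPLATE = (
    "Classify the sentiment of this sentence as positive, negative, or neutral: "
    "'The {group} engineer presented the quarterly results.'"
)
GROUPS = ["young", "older"]


def consistent_model(prompt):
    """Stand-in model that answers the same way for every group."""
    return "neutral"


def skewed_model(prompt):
    """Stand-in model that (undesirably) changes its answer for one group."""
    return "negative" if "older" in prompt else "neutral"


print(probe_consistency(TEMPLATE, GROUPS, consistent_model))
print(probe_consistency(TEMPLATE, GROUPS, skewed_model))
```

An inconsistent result does not prove bias on its own, but it flags prompts that deserve a closer manual look.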

If you’re interested in this topic, check out this course on AI Ethics.

Conclusion

In this tutorial, we have learned that zero-shot prompting provides a powerful and flexible way to handle many language tasks without task-specific training data or fine-tuning.

Zero-shot prompting allows LLMs to perform various tasks, such as text generation, classification, and question answering, through carefully designed prompts.

It eliminates the need for task-specific data collection and model training, significantly reducing the time and resources needed to deploy AI solutions.

This method makes advanced AI capabilities more accessible to non-technical people, as complex tasks can be performed by simply describing them in natural language.

However, zero-shot prompting has limitations in accuracy, prompt sensitivity, and potential biases. Understanding these challenges is crucial for responsible and effective use.

Now it's time to put this knowledge into practice! I encourage you to explore zero-shot prompting in your own projects and experiment with different prompts and tasks. Have fun discovering the potential of this technique in your own work!

FAQs

How does zero-shot prompting compare to few-shot prompting in terms of accuracy and efficiency?

Zero-shot prompting is generally more efficient as it requires no examples, but few-shot prompting often provides better accuracy, especially for complex tasks. The choice depends on the specific use case, available resources, and desired performance balance.

What are the ethical implications of using zero-shot prompting in decision-making processes or automated systems?

Ethical concerns include potential biases in the model's pre-training data, lack of task-specific safeguards, and the risk of overreliance on AI for critical decisions. Careful monitoring and human oversight are key when implementing zero-shot prompting in sensitive areas.

Can zero-shot prompting be combined with other AI techniques to enhance its capabilities, and if so, how?

Yes, zero-shot prompting can be combined with techniques like transfer learning, multi-task learning, or reinforcement learning. For instance, combining zero-shot prompting with transfer learning allows models to adapt more quickly to domain-specific tasks while maintaining generalization abilities.

Are there specific industries or fields where zero-shot prompting has shown particularly promising results or applications?

Zero-shot prompting has been quite successful in fields like customer service (for handling diverse queries), content creation (for generating varied content types), and scientific research (for hypothesis generation and literature review). It's also proving valuable in multilingual applications and rapid prototyping of AI solutions.

How might zero-shot prompting evolve in the next 5-10 years, and what potential breakthroughs could we see in this area?

We might see more sophisticated context understanding, improved cross-domain generalization, and integration with multimodal AI systems. Potential breakthroughs could involve zero-shot prompting for complex reasoning tasks, real-time adaptation to user feedback, and seamless integration with external knowledge bases for better accuracy and expansion of capabilities.

Ana Rojo Echeburúa is an AI and data specialist with a PhD in Applied Mathematics. She loves turning data into actionable insights and has extensive experience leading technical teams. Ana enjoys working closely with clients to solve their business problems and create innovative AI solutions. Known for her problem-solving skills and clear communication, she is passionate about AI, especially generative AI. Ana is dedicated to continuous learning and ethical AI development, as well as simplifying complex problems and explaining technology in accessible ways.