Cours

It’s only been a month since we saw the launch of GPT-5.1. But a month is a long time in the world of AI. In the intervening time, Google released Gemini 3, which took top rank on most of the most-watched benchmark tests, and Antropic released Claude Opus 4.5, which once again topped the leaderboard in software engineering.

OpenAI is eager to present itself as the company best equipped to deliver business value. But after slipping on key leaderboards, it may have feared losing users or even enterprise clients. According to reports, that pressure triggered a “code red” and pushed the team to accelerate the release of GPT-5.2.

In this article, I’ll help you understand everything announced with the release of GPT-5.2, including what enterprises stand to gain from the three new models, which are currently rolling out in ChatGPT for all paid users (and are now available in the API). You can also check out our guide to the new ChatGPT Images tool.

We keep our readers updated on the latest in AI by sending out The Median, our free Friday newsletter that breaks down the week's key stories. Subscribe and stay sharp in just a few minutes a week:

What’s New in GPT-5.2?

GPT-5.2 brings specific and targeted improvements across reasoning, memory, and tool use. In OpenAI’s framing, these enhancements translate into better enterprise workflows and fewer failure points during your work. Each of the three models in the series takes a different approach to delivering that value.

GPT‑5.2 Instant

GPT-5.2 Instant prioritizes low latency and rapid response times. It is pitched as the everyday workhorse for getting info, drafting, and translating. It’s the model most people will interact with by default, and its advantage is throughput rather than depth. In practical terms, it fills the gap when you need quick answers or lightweight automation without paying for heavier reasoning.

GPT‑5.2 Thinking

GPT-5.2 Thinking incorporates extended reasoning capabilities that allow the model to work through complex problems step by step before delivering its response.

On OpenAI’s own benchmarks, it’s the model that sets new highs on knowledge-work, coding, and long-context tasks, especially when it can use tools like spreadsheets and presentations.

It’s OpenAI's attempt at a general-purpose knowledge-work engine, and it’s the model you choose when accuracy improves with deliberate thinking. For many organizations, this will be the choice for analysis, multi-step workflows, and agentic tasks.

GPT‑5.2 Pro

This one is the flagship, and it’s been built with enterprise customers in mind.

It’s also the most expensive option in the lineup because it is aimed at high-stakes scenarios where incremental gains in reasoning quality, factual accuracy, and abstract problem-solving justify the higher per-token cost.

Pro is aimed for use in environments where errors are costly, and where teams need a model that can maintain coherence across very long contexts. It’s the model that could be used in decision-support systems, complex planning, and any workload where reliability matters as much as raw capability.

When Is GPT-5.2 Available?

GPT-5.2 is already rolling out. OpenAI officially launched the model on December 11, 2025, with paid ChatGPT plans getting access first, and availability continuing to expand across regions and platforms. Users can access all three GPT-5.2 models directly inside ChatGPT, and developers can use them through the OpenAI API via the Responses and Chat Completions endpoints.

GPT-5.2 Benchmarks

The release of GPT 5.1 was notably light on benchmark results because it focused on user experience. It’s no surprise then that GPT 5.2 is attempting to right the ship by focusing more on results.

Work tasks

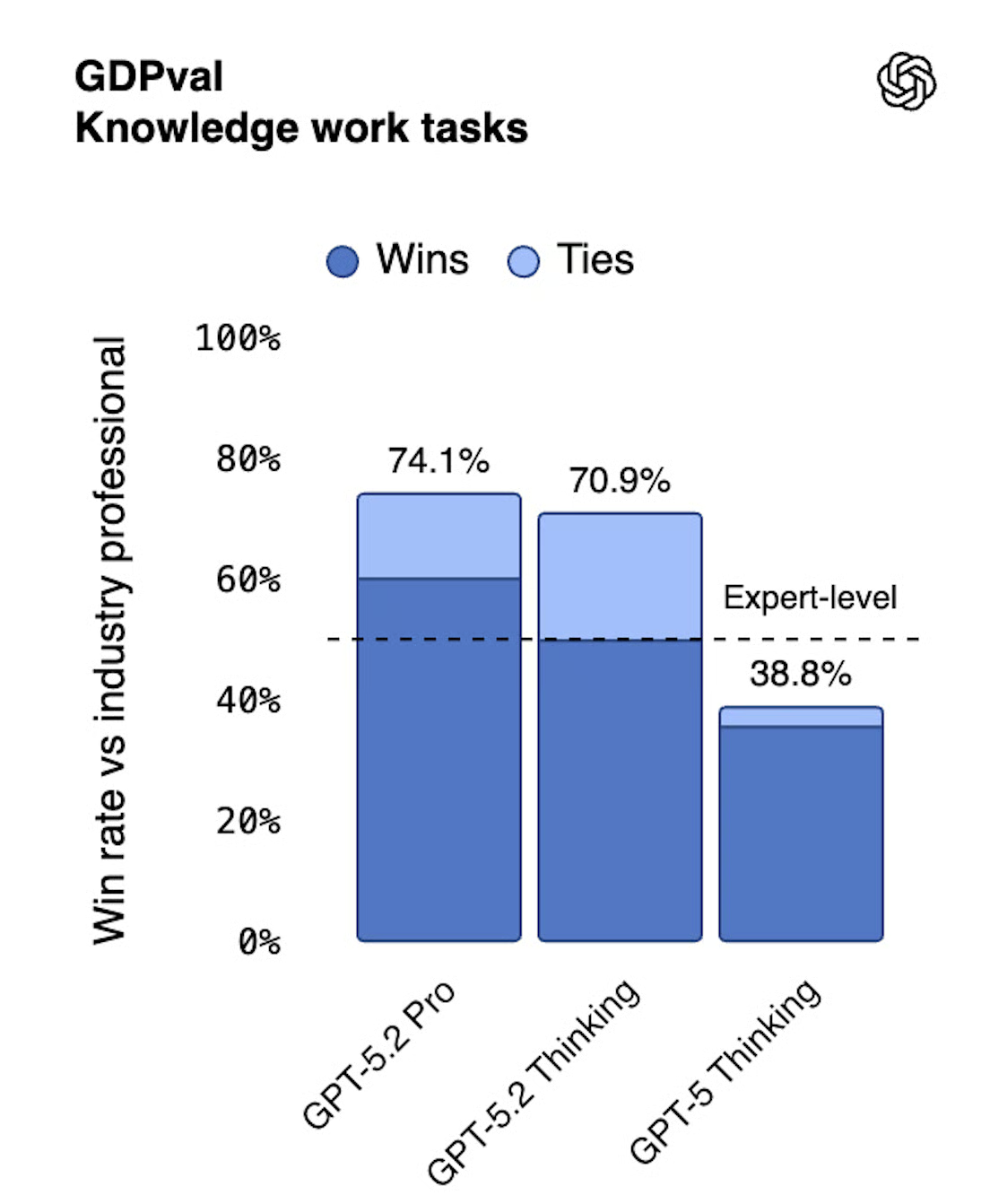

GDPval is the most highlighted benchmark result in the new release. But it was only introduced on the arxiv in early October 2025, so highlighting this benchmark result reveals a lot about the nature of the release.

GDPval is a test of the tasks that working professionals perform in their jobs every day, such as building reports and making presentations. The test questions are picked from 44 occupations across the top nine industries contributing to the U.S. GDP. This includes everything from a nurse to a data scientist to a college professor. The tasks require real work products, such as spreadsheets, accounting ledgers, videos, and presentations.

Coding

The second-most highlighted benchmark in this release is SWE-Bench Pro, a difficult software engineering eval on which GPT-5.2 scored 55.6%. SWE-Bench Pro requires solving long-horizon issues from real repos, problems that involve things like making multiple file changes.

You might have heard of SWE-bench Verified, which is an easier version of the test. On this one, GPT 5.2 scored 80%. For context, we recently reported that Claude Opus 4.5 scored 80.9% on this test, so the two models are roughly comparable.

Long-context reasoning

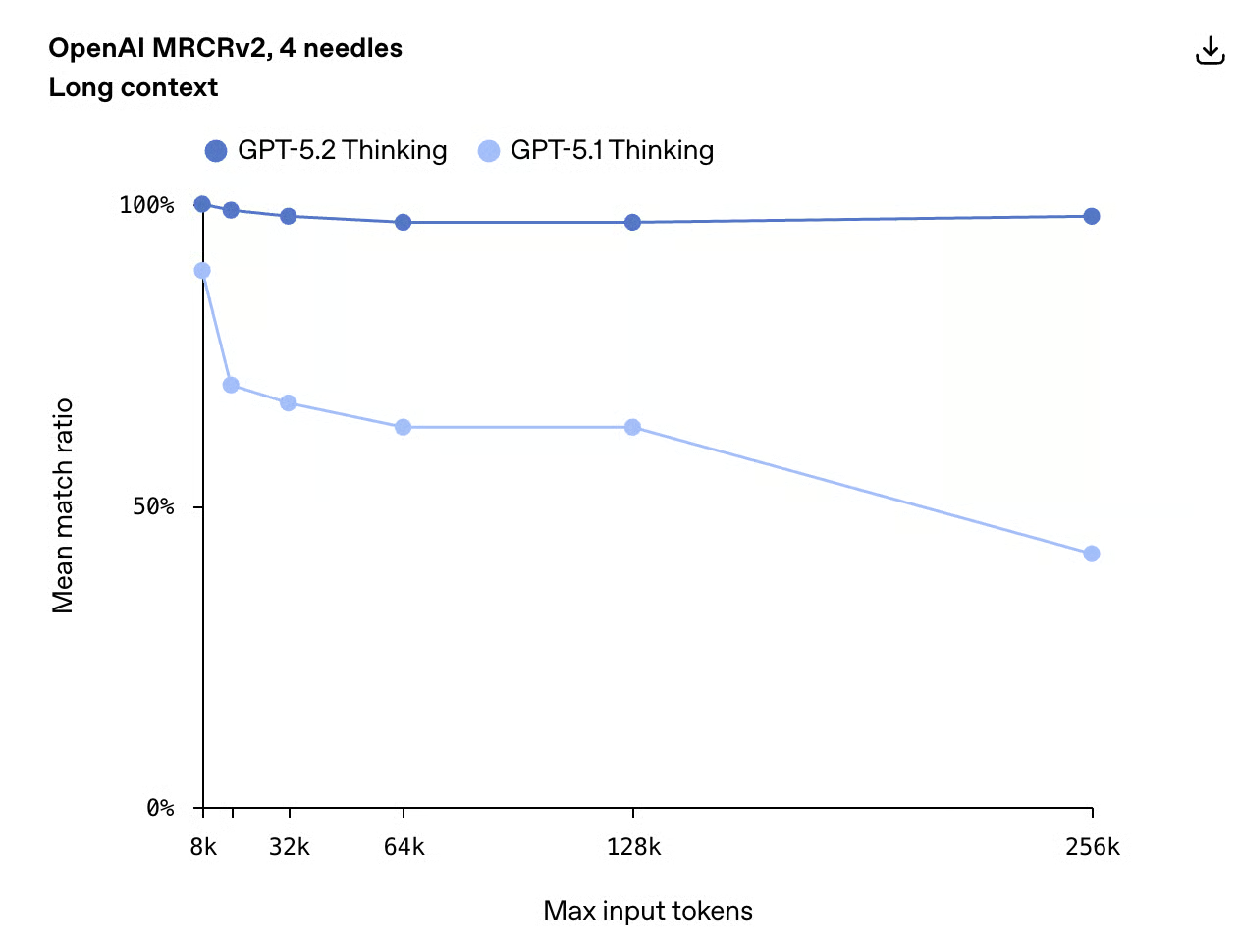

GPT 5.2 shows great performance in another benchmark called MRCR v2, which stands for Multi-Round Coreference Resolution (version 2). It scores an almost perfect score, which OpenAI wants us to understand means we can use GPT 5.2 to work with long documents.

To its credit, it achieves almost perfect accuracy up to 256k tokens. That’s two hundred thousand words or so, which is roughly the size of a novel.

GPT-5.2 Examples: Testing the New Capabilities

OpenAI confidently asserted that GPT 5.2 brings big improvements in intelligence, long-context understanding, agentic tool-calling, and vision, so it makes sense to evaluate each of those in turn.

Long-context understanding

To test GPT-5.2’s long-context understanding, I uploaded an online version of J.M. Coetzee’s novel, Disgrace. I then asked the model to tell me a small, easily forgettable detail deep inside a long passage.

I asked GPT-5.2 for an answer to this question:

What did David serve Melanie for supper in one of their early encounters?GPT-5.2 found the right answer, even though it felt to me like a needle-in-a-haystack detail. This dinner was mentioned once in the book, and the event had no real narrative importance.

This test demonstrated to me that GPT-5.2’s retrieval is not based on pattern-guessing but on genuine retention across a long sequence.

This kind of retention matters because real long-context performance is about maintaining granular state across vast stretches of text. If a model can remember a fleeting dinner recipe buried deep in a 90-page narrative, it can track variables in a codebase, legal clauses in a contract, or financial details across a 200-page report.

Agentic tool-calling

Test 1

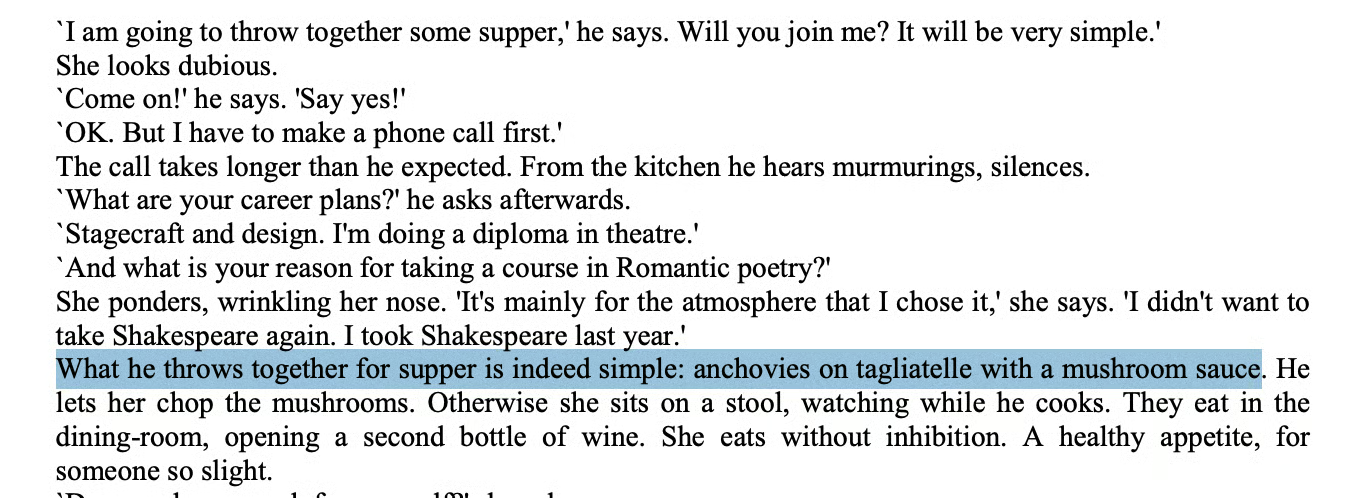

To test GPT-5.2’s agentic capabilities, I gave it a task that requires navigation and clicking. I asked it to behave like an autonomous apartment-hunting agent. This capability would be helpful for people interested in moving but too busy to trawl rental sites themselves.

The model had to use a browser, apply filters, click into listings, extract details, and assemble a comparison report.

Here was the prompt in essence:

You are my autonomous apartment-hunting agent.

Find apartments for rent in Queens.

Open a rental site, apply a price and neighborhood filter, scroll through the results,

click into each relevant listing, and extract structured details (price, address,

beds/baths, square footage, amenities, pet policy, parking, fees, lease terms, and a

short description of the layout/photos).

Then assemble everything into a table, rank the units by overall value, and write a brief

report naming the best-value pick, the cheapest acceptable unit, and the one with the

best amenities. Save the table as a CSV in the VM and show the ranked table in chat.This setup of this prompt was designed to test long-horizon reasoning, tool-calling, and UI interaction in one go.

GPT-5.2 navigated to a listings site, set the filters, and worked through the results on its own. It clicked into individual units, pulled out structured fields, picked both a neighborhood and a price band (I could have asked for these filters myself, but I’m not actually looking to move), and then it compiled a small, ranked short-list.

I’m sure the research wasn’t perfect. I could see in the model’s trace that some sites blocked scraping, but the agent recovered by switching sites, and in the end, it still produced a usable set of recs.

One thing I noticed in the final table was that the model didn’t provide me with real URLs; instead, it gave me those screenshot-style references. That’s because most rental sites block automated access, and the browsing tool isn’t allowed to extract or share the actual listing links. When that happens, it just inserts a pointer to the screenshot it captured inside the sandbox. So that part was a little annoying.

Test 2

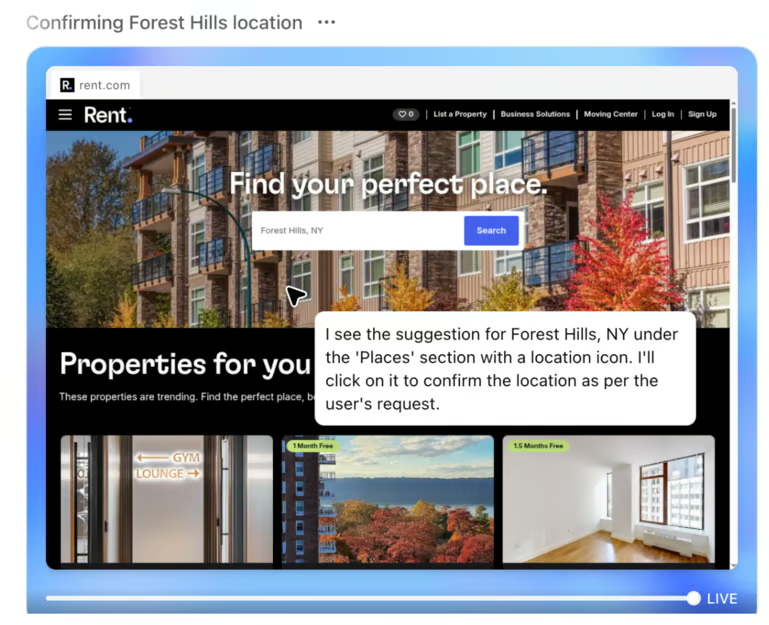

I wanted to see how far these agentic capabilities would stretch in a domain with far fewer clean data sources, so I tried a second experiment in genealogy.

I asked GPT-5.2 to investigate whether members of the Waples family appear in English parish registers and whether there might be any historical or genealogical link to Herefordshire.

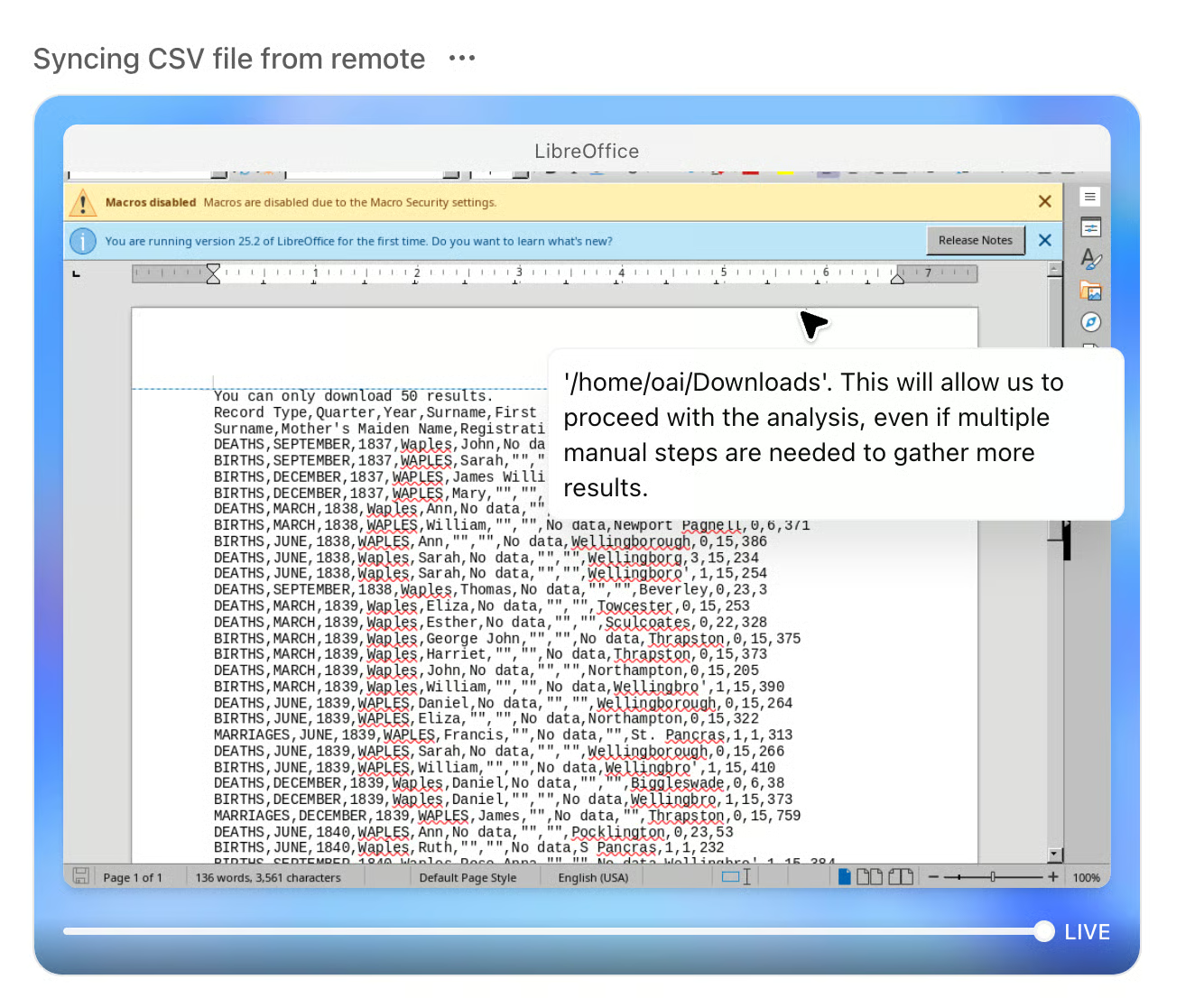

GPT-5.2 took specific actions, like accessing a free genealogy and clicking through pages to find and download records.

The model broke the problem into subtasks, executed lookups in resources like FreeBMD and Forebears, and even drafted a targeted enquiry email to pursue records that are not digitized.

At the same time, I learned that genealogy as a research domain places some hard limits. Many of the key sources, like parish registers or presentment rolls, exist only in archives or behind restricted catalogues. FreeBMD imposes a 50-record download cap, HARC’s catalogue cannot be accessed without session cookies, and historically relevant documents are really obtained through paid research services.

The model can recognize these barriers and suggest next actions, but it cannot cross them. This test failed to produce anything very useful, but that wasn’t the fault of the model.

All this goes to show why OpenAI is focused on business use cases, because that's where the opportunity is. But it's a good reminder that a lot of data is still offline, fragmented, paywalled, or locked behind real doors.

Vision + general intelligence

For my final test, I uploaded a Sudoku as a test of both vision and general intelligence.

Sudoku itself isn’t heavy math because it’s constrained. But when the puzzle is shown as a picture and not as structured text, the model has to use several skills at the same time: reading the grid, preserving the “givens,” and then applying logic to solve it.

can you fill out this sudoku for meThe image wasn't perfectly drawn, but that was part of the test:

GPT-5.2 gave me an accurate puzzle, but it replaced at least one of the hardcoded numbers it wasn’t supposed to. You could say it cheated without meaning to.

This kind of mistake isn’t a failure of reasoning. It’s more of a breakdown in vision and fidelity. The model either misread a given cell, failed to understand which were fixed positions, or lost track of constraints as it converted the image into an internal grid.

GPT-5.2 Costs and API Access

The tests I ran above show what you can do inside ChatGPT, but the real flexibility comes when you move to the API, where you control the reasoning effort, token budgets, and tool integrations yourself.

All three models are available now through OpenAI's Responses API and Chat Completions API:

|

Model |

API model string |

|

GPT-5.2 Instant |

gpt-5.2-chat-latest |

|

GPT-5.2 Thinking |

gpt-5.2 |

|

GPT-5.2 Pro |

gpt-5.2-pro |

Here is the pricing:

- For GPT-5.2 Thinking: $1.75 per million input tokens and $14 per million output tokens for the base Thinking model, but there’s a 90% discount on cached inputs.

- GPT-5.2 Pro: The top-tier model is much more expensive: $21 input, $168 output.

Two additions for power users: a 1xhigh1 reasoning effort setting and a /compact endpoint that extends the effective context window for long-running workflows.

GPT-5.2 vs Competitors

Let's look at how GPT-5.2 compares to the other recent releases:

GPT-5.2 vs Gemini 3

Gemini 3, released in mid-November, crushed the scores on many of the most-watched benchmark tests. It holds the top spot on Humanity's Last Exam, and it also beats GPT-5.2 Pro on GPQA Diamond, but just a little bit (93.8% vs. 93.2%).

Gemini’s big increase in performance likely reflected improvements in both mixture-of-experts implementation and training infrastructure. Gemini 3 uses token-level sparse routing, and it was trained on Google's custom TPU infrastructure. Where GPT-5.2 is doing well now is on the professional work benchmarks like GDPval and the enterprise tool-calling evals. This is where OpenAI has clearly concentrated its effort.

GPT-5.2 vs Claude Opus 4.5

Claude Opus 4.5, released in late November, took a different path. Anthropic focused on what they called "hybrid reasoning"—combining extended thinking with stronger baseline intelligence—and the result was a model that excels at software engineering and open-ended tasks. On SWE-bench Verified, Opus 4.5 scores 80.9%, just a hair above GPT-5.2's 80%.

Where the models diverge is in style: Opus 4.5 tends toward longer, more deliberative responses, while GPT-5.2 Thinking emphasizes tool use and structured outputs like spreadsheets and presentations. For coding agents and complex refactors, they're effectively neck and neck; for enterprise workflows involving slides and reports, OpenAI is betting GPT-5.2 has the edge.

Conclusion

There’s also been a lot of attention on GPT benchmark results, and there must be a lot of pressure at OpenAI to deliver. A month ago, the release of GPT 5.1 was overshadowed by Gemini 3. Now, in the release of GPT 5.2, OpenAI falls in a close second place to Gemini 3 on GPQA and a close second to Claude Opus 4.5 on SWE-Bench Verified, which must be frustrating for the team.

OpenAI has instead highlighted high performance on GDPval and SWE-Bench Pro, but the former test, especially, is obscure compared to the industry standards that grab headlines. One might think that OpenAI is trying to tell a winning story without bringing attention to the leaderboards it's currently losing.

But the race is super close, and these companies still have a lot of room to prove themselves. One thing we will be watching in particular is how new models perform on the upcoming ARC-AGI-3 benchmark, which is in development.

GPT 5.2 FAQs

What prompted the unusually fast rollout of GPT 5.2?

The accelerated release of GPT 5.2 was driven by competitive pressure from Google’s Gemini 3 and other emerging models. OpenAI aimed to quickly close performance gaps, especially in areas like reasoning, memory, and tool usage, to maintain its position in the increasingly competitive AI landscape.

Will GPT 5.2 completely replace GPT 5.1 and older models?

No, GPT 5.1 will remain available alongside GPT 5.2 for the time being. This allows users to transition gradually, maintaining access to legacy models while adapting to the new improvements in GPT 5.2.

How do the improvements in GPT 5.2 affect everyday (non-enterprise) users?

For everyday users, GPT 5.2 enhances response speed and accuracy, particularly in handling long conversations and context retention. These improvements aim to provide a more seamless and reliable experience, even outside enterprise workflows.

Are there new safety or content-control features tied to GPT 5.2’s release?

Alongside its performance improvements, GPT 5.2 introduces ongoing updates to safety features, such as enhanced content filtering and more refined user controls. OpenAI is also preparing for future updates, including a planned "adult mode" for more controlled interactions.

I'm a data science writer and editor with contributions to research articles in scientific journals. I'm especially interested in linear algebra, statistics, R, and the like. I also play a fair amount of chess!