
Training large language models (LLMs) often demands significant computational resources, which can be a barrier for many organizations and researchers.

The mixture of experts (MoE) technique addresses this challenge by breaking down large models into smaller, specialized networks.

The concept of MoE originated in the 1991 paper Adaptive Mixtures of Local Experts. Since then, MoEs have been used in models with more than a trillion parameters, such as the open-sourced 1.6-trillion-parameter Switch Transformers.

In this article, I’ll provide an in-depth exploration of MoE, including its applications, benefits, and challenges.


What Is a Mixture of Experts (MoE)?

Imagine an AI model as a team of specialists, each with their own unique expertise. A mixture of experts (MoE) model operates on this principle by dividing a complex task among smaller, specialized networks known as “experts.”

Each expert focuses on a specific aspect of the problem, enabling the model to address the task more efficiently and accurately. It’s similar to having a doctor for medical issues, a mechanic for car problems, and a chef for cooking—each expert handles what they do best.

By collaborating, these specialists can solve a broader range of problems more effectively than a single generalist.

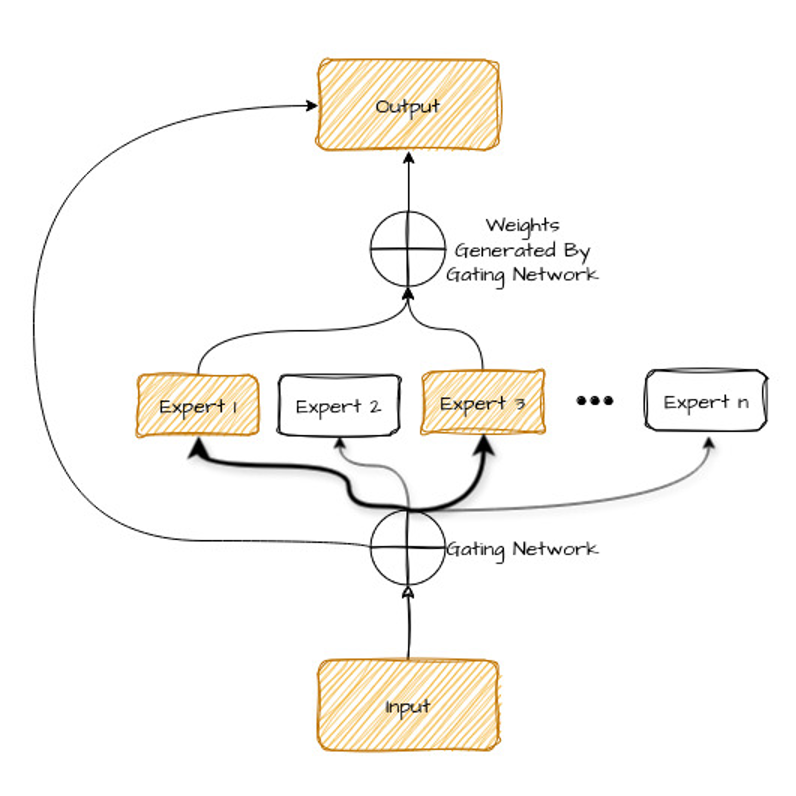

Let’s take a look at the diagram below—we’ll explain it shortly after.

Let’s break down the components of this diagram:

- Input: This is the problem or data you want the AI to handle.

- Experts: These are smaller AI models, each trained to be really good at a specific part of the overall problem. Think of them like the different specialists on your team.

- Gating network: This is like a manager who decides which expert is best suited for each part of the problem. It looks at the input and figures out who should work on what.

- Output: This is the final answer or solution that the AI model produces after the experts have done their work.

The advantages of using MoE are:

- Efficiency: Only the experts who are good at a particular part of the problem are used, saving time and computing power.

- Flexibility: You can easily add more experts or change their specialties, making the system adaptable to different problems.

- Better results: Because each expert focuses on what they're good at, the overall solution is usually more accurate and reliable.

Let’s get into a little more detail with expert networks and gating networks.

Expert networks

Think of the "expert networks" in an MoE model as a team of specialists. Instead of having one AI model do everything, each expert focuses on a particular type of task or data.

In an MoE model, these experts are like individual neural networks, each trained on different datasets or tasks.

They are designed to be sparse, meaning only a few are active at any given time, depending on the nature of the input. This prevents the system from being overwhelmed and ensures that the most relevant experts are working on the problem.

But how does the model know which experts to choose? That's where the gating network comes in.

Gating networks

The gating network (the router) is another type of neural network that learns to analyze the input data (like a sentence to be translated) and determine which experts are best suited to handle it.

It does this by assigning a "weight" or importance score to each expert based on the characteristics of the input. The experts with the highest weights are then selected to process the data.
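To make the scoring step concrete, here is a minimal sketch that assumes a linear gate followed by a softmax, which is a common choice but not the only one. The names `gate_weights` and `W_gate` and the toy numbers are illustrative assumptions, not part of any specific library.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def gate_weights(x, W_gate):
    """Score each expert with a dot product, then normalize with softmax.

    x is an input feature vector; W_gate holds one row of (hypothetical)
    learned gate parameters per expert.
    """
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in W_gate]
    return softmax(scores)

# Toy example: 2 input features, 3 experts.
W_gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
weights = gate_weights([2.0, 0.5], W_gate)
best_expert = max(range(len(weights)), key=lambda i: weights[i])
```

Expert 0 gets the largest weight here because its gate row lines up best with the input.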

There are various ways (called "routing algorithms") that the gating network can select the right experts. Here are a few common ones:

- Top-k routing: This is the simplest method. The gating network picks the top 'k' experts with the highest affinity scores and sends the input data to them.

- Expert choice routing: In this method, instead of the data choosing the experts, the experts decide which data they can handle best. This strategy aims to balance the load across experts and lets each piece of data be processed by a variable number of experts.

- Sparse routing: This approach only activates a few experts for each piece of data, creating a sparse network. Sparse routing uses less computational power compared to dense routing, where all experts are active for every piece of data.
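The difference between top-k routing and expert choice routing is easier to see side by side. The sketch below is a simplified illustration under the assumption that we already have an affinity score for every token and expert pair; the function names and the scores are made up for the example.

```python
def top_k_routing(affinity, k):
    """Token choice: each token picks its k highest-scoring experts.

    affinity[t][e] is the score of token t for expert e.
    Returns, per token, a list of chosen expert indices.
    """
    choices = []
    for row in affinity:
        ranked = sorted(range(len(row)), key=lambda e: row[e], reverse=True)
        choices.append(ranked[:k])
    return choices

def expert_choice_routing(affinity, capacity):
    """Expert choice: each expert picks its `capacity` highest-scoring tokens.

    Returns, per expert, a list of chosen token indices.
    """
    num_tokens = len(affinity)
    num_experts = len(affinity[0])
    choices = []
    for e in range(num_experts):
        ranked = sorted(range(num_tokens), key=lambda t: affinity[t][e], reverse=True)
        choices.append(ranked[:capacity])
    return choices

# 4 tokens x 3 experts of made-up affinity scores.
affinity = [
    [0.70, 0.20, 0.10],
    [0.60, 0.35, 0.05],
    [0.50, 0.10, 0.40],
    [0.05, 0.80, 0.15],
]
per_token = top_k_routing(affinity, k=1)
per_expert = expert_choice_routing(affinity, capacity=2)
```

With top-1 routing, expert 0 receives three of the four tokens and expert 2 receives none. With expert choice, every expert processes exactly two tokens, and tokens 1 and 3 are each handled by two experts.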

When making predictions, the model combines the outputs of the selected experts, weighting them with the same scores the gating network used to select them. For a single task, more than one expert might be needed, depending on how complex and varied the problem is.

Now, let's understand how an MoE works.

How Mixture of Experts (MoE) Works

MoE operates in two stages:

- The training phase

- The inference phase

Training phase

Similar to other machine learning models, MoE begins by training on a dataset. The process is easiest to understand by looking at what each component learns (the experts and the gating network) and then at how they are trained together.

Expert training

Each component of an MoE framework undergoes training on a specific subset of data or tasks. The aim is to enable each component to focus on a particular aspect of the broader problem.

This focus is achieved by providing each component with data relevant to its assigned task. For instance, in a language processing task, one component might concentrate on syntax while another on semantics.

The training for each component follows a standard neural network training process, where the model learns to minimize the loss function for its specific data subset.

Gating network training

The gating network is tasked with learning to select the most suitable expert for a given input.

The gating network is trained alongside the expert networks. It receives the same input as the experts and learns to predict a probability distribution over the experts. This distribution indicates which expert is best suited to handle the current input.

The gating network is typically trained with an objective that reflects both the quality of its routing decisions and the performance of the selected experts.

Joint training

In the joint training phase, the entire MoE system, which includes both the expert models and the gating network, is trained together.

This strategy ensures that both the gating network and the experts are optimized to work in harmony. The loss function in joint training combines the losses from the individual experts and the gating network, encouraging a collaborative optimization approach.

The combined loss gradients are then propagated through both the gating network and the expert models, facilitating updates that improve the overall performance of the MoE system.
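One way to write this down, assuming a scalar output that is a gate-weighted sum of expert outputs, is shown below. The symbols are illustrative choices rather than notation from a specific paper: $g_i$ is the gate weight for expert $i$, $f_i$ is that expert's output, $\theta_g$ and $\theta_i$ are their parameters, $t$ is the target, and $\ell$ is the task loss.

```latex
y = \sum_{i=1}^{N} g_i(x;\theta_g)\, f_i(x;\theta_i),
\qquad
\mathcal{L} = \ell(y, t)

\frac{\partial \mathcal{L}}{\partial \theta_i}
  = \frac{\partial \ell}{\partial y}\; g_i(x;\theta_g)\;
    \frac{\partial f_i}{\partial \theta_i},
\qquad
\frac{\partial \mathcal{L}}{\partial \theta_g}
  = \frac{\partial \ell}{\partial y}\;
    \sum_{i=1}^{N} f_i(x;\theta_i)\,
    \frac{\partial g_i}{\partial \theta_g}
```

Under this assumption, a single loss updates both parts: each expert's gradient is scaled by its gate weight, and the gate's gradient depends on what the experts produced.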

Inference phase

Inference generates outputs by combining the gating network's routing decisions with the outputs of the selected experts. In MoE, this process is designed to keep inference costs minimal.

Input routing

In the context of MoE, the role of the gating network is pivotal in deciding which models should process a specific input.

Upon receiving an input, the gating network assesses it and creates a probability distribution across all the models. This distribution then directs the input to the most suitable models, leveraging the patterns learned during the training phase. This ensures that the right expertise is applied to each task, optimizing the decision-making process.

Expert selection

Only a small number of models, often just one or two, are chosen to process each input. This selection is determined by the probabilities assigned by the gating network.

Choosing a limited number of models for each input helps in the efficient use of computational resources while still benefiting from the specialized knowledge within the MoE framework.

The output from the gating network ensures that the chosen models are the most appropriate for handling the input, thereby improving the system's overall efficiency and performance.

Output combination

The last step in the inference process involves merging the outputs from the selected models.

This merging is often achieved through weighted averaging, where the weights reflect the probabilities assigned by the gating network. In certain scenarios, alternative methods like voting or learned combination techniques might be employed to merge the expert outputs. The aim is to integrate the varied insights from the selected models into a unified and accurate final prediction, thereby leveraging the strengths of the MoE architecture.
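The three inference steps (routing, selection, and weighted averaging) can be sketched in a few lines. This is a toy illustration under stated assumptions: the experts are plain functions standing in for trained networks, the gate scores are passed in rather than computed, and `moe_predict` is a hypothetical name.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_predict(x, experts, gate_scores, k=2):
    """Run only the top-k experts and blend their outputs.

    experts: list of callables, each mapping x to a number.
    gate_scores: raw gate score per expert for this input.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize so the selected experts' weights sum to 1.
    norm = sum(probs[i] for i in top)
    output = sum((probs[i] / norm) * experts[i](x) for i in top)
    return output, top

# Three toy "experts" (hypothetical stand-ins for trained networks).
experts = [lambda x: 2 * x, lambda x: x + 10, lambda x: -x]
output, used = moe_predict(3.0, experts, gate_scores=[2.0, 2.0, -5.0], k=2)
```

Here the two selected experts tie on gate score, so the result is the plain average of their outputs, 6 and 13, which is 9.5. The third expert is never called.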

With the rapid advancement of technology, there is an increasing need for fast, efficient, and optimized techniques to handle large models. MoE is emerging as a promising solution in this regard. What other benefits does MoE offer?

Benefits of Mixture of Experts (MoE)

Mixture of Experts (MoE) architecture offers several advantages:

- Performance: By selectively activating only the relevant experts for a given task, MoE models avoid unnecessary computation, leading to improved speed and reduced resource consumption.

- Flexibility: The diverse capabilities of experts make MoE models highly flexible. By calling on experts with specialized capabilities, the MoE model can succeed in a wider range of tasks.

- Fault tolerance: MoE’s "divide and conquer" approach, where tasks are executed separately, enhances the model's resilience to failures. If one expert encounters an issue, it doesn't necessarily affect the entire model's functionality.

- Scalability: Decomposing complex problems into smaller, more manageable tasks helps MoE models handle increasingly complicated inputs.

Applications of Mixture of Experts (MoE)

MoEs have been around for more than 30 years and are widely used in different areas of machine learning.

Natural language processing (NLP)

MoE offers a unique approach to training large models with improved efficiency, faster pre-training, and competitive inference speeds.

In traditional dense models, all parameters are used for all inputs. Sparsity, however, allows the model to run only specific parts of the system based on the input, significantly reducing computation.

One example is Z-code, the MoE models Microsoft uses for translation. The MoE architecture in Z-code supports a massive scale of model parameters while keeping the amount of compute constant.

Computer vision

Google's V-MoEs, a sparse architecture based on Vision Transformers (ViT), showcase the effectiveness of MoE in computer vision tasks.

By partitioning images into smaller patches and feeding them to a gating/routing layer, V-MoEs can dynamically select the most appropriate experts for each patch, optimizing both accuracy and efficiency.

A notable advantage of this approach is its flexibility. You can decrease the number of selected experts per token to save time and compute, without any further training on the model weights.

Recommendation systems

MoE has also been successfully applied to recommendation systems. For example, Google researchers have proposed an MMoE (Multi-Gate Mixture of Experts) based ranking system for YouTube video recommendations.

They first group their task objectives into two categories: engagement and satisfaction. Given the list of candidate videos from the retrieval step, their ranking system uses candidate, user, and context features to learn to predict the probabilities corresponding to the two categories of user behavior.

One thing to note in this approach is that they did not apply the MoE layer directly to the input because the high dimensionality of input would lead to significant model training and serving costs.
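The multi-gate idea can be sketched as a set of shared experts with one gate per task. The code below is a simplified illustration, not the YouTube system: the experts are toy functions, the gate scores are passed in directly (a real model would compute them from the input), and the per-task layers that usually follow the mix are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def mmoe_forward(x, experts, task_gate_scores):
    """Mix shared expert outputs separately for each task.

    task_gate_scores maps a task name to one raw gate score per expert.
    """
    expert_outputs = [expert(x) for expert in experts]  # computed once, shared
    result = {}
    for task, scores in task_gate_scores.items():
        weights = softmax(scores)
        result[task] = sum(w * out for w, out in zip(weights, expert_outputs))
    return result

# Two toy experts shared by two tasks (all values are made up).
experts = [lambda x: x, lambda x: x * x]
mixed = mmoe_forward(
    3.0,
    experts,
    {"engagement": [5.0, -5.0], "satisfaction": [-5.0, 5.0]},
)
```

Because each task has its own gate, the engagement output leans almost entirely on the first expert and the satisfaction output on the second, even though both tasks share the same experts.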

MoEs have seen wide-scale adoption in the industry for several applications. Their learning procedure divides the task into appropriate subtasks, each of which can be solved by a very simple expert network. This capability translates to parallelizable training and fast inference, making MoEs attractive for large-scale systems.

Mixture of Experts (MoE): Challenges

Experts are particularly beneficial for high-throughput scenarios involving many machines. Given a fixed compute budget for pretraining, a sparse model can be more efficient.

However, sparse models require substantial memory during execution, as all experts need to be stored in memory. This can be a significant limitation in systems with low VRAM, where such models may struggle.
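A quick back-of-the-envelope calculation shows the gap between what a sparse model computes with and what it has to keep loaded. The sizes below are made up for illustration, and `moe_footprint` is a hypothetical helper; it assumes 16-bit weights and ignores activations and optimizer state.

```python
def moe_footprint(num_experts, k, expert_params, shared_params, bytes_per_param=2):
    """Compare parameters used per token with parameters held in memory.

    bytes_per_param=2 assumes 16-bit weights; activations and optimizer
    state are ignored, so this is a lower bound on real memory use.
    """
    stored = shared_params + num_experts * expert_params
    active = shared_params + k * expert_params
    return {
        "active_params": active,
        "stored_params": stored,
        "weights_gb": stored * bytes_per_param / 1e9,
    }

# Made-up sizes: 8 experts of 100M parameters each, 200M shared parameters.
footprint = moe_footprint(
    num_experts=8, k=2, expert_params=100_000_000, shared_params=200_000_000
)
```

With these numbers, each token touches 400M parameters, but all 1B parameters must stay loaded, which is about 2 GB of weights.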

Let’s explore other limitations of MoEs.

Training complexity

Training MoE models is more complex than training a single model. Here’s why:

- Coordination: You need the gating network to learn how to correctly route inputs to the right experts while each expert specializes in different parts of the data. Balancing this can be tricky.

- Optimization: The loss function used in joint training must balance the performance of the experts and the gating network, which complicates the optimization process.

- Hyperparameter tuning: MoE models have more hyperparameters, such as the number of experts and the architecture of the gating network. Tuning these can be time-consuming and complicated.

Inference efficiency

Inference in MoE models can be less efficient due to a few factors:

- Gating network: The gating network needs to run for each input to determine the right experts. This adds extra computation.

- Expert selection and activation: Even though only a subset of experts is activated for each input, selecting and activating these experts adds overhead, potentially increasing inference times.

- Parallelism: Running multiple experts in parallel can be challenging, especially in environments with limited computational resources. Effective parallelism requires advanced scheduling and resource management.

Increased model size

MoE models tend to be larger than single models due to the multiple experts:

- Storage requirements: Storing multiple expert networks and the gating network increases the overall storage needs, which can be a drawback in storage-limited environments.

- Memory usage: Training and inference require more memory because multiple models need to be loaded and maintained in memory at the same time. This can be problematic in resource-constrained settings.

- Deployment challenges: Deploying MoE models is harder due to their size and complexity. Efficient deployment on various platforms, including edge devices, may require additional optimization and engineering efforts.

Conclusion

In this article, we explored the Mixture of Experts (MoE) technique, a sophisticated approach for scaling neural networks to handle complex tasks and diverse data. MoE uses multiple specialized experts and a gating network to route inputs effectively.

We covered the core components of MoE, including expert networks and the gating network, and discussed its training and inference processes.

Benefits such as improved performance, scalability, and adaptability were highlighted, along with applications in natural language processing, computer vision, and recommendation systems.

Despite challenges in training complexity and model size, MoE offers a promising method for advancing AI capabilities.


Senior GenAI Engineer and Content Creator who has garnered 20 million views by sharing knowledge on GenAI and data science.