Course

Hot on the heels of the impressive Gemini 3 release from Google, Anthropic recently announced Claude Opus 4.5, hailing it as the ‘best model in the world for coding, agents, and computer use.’

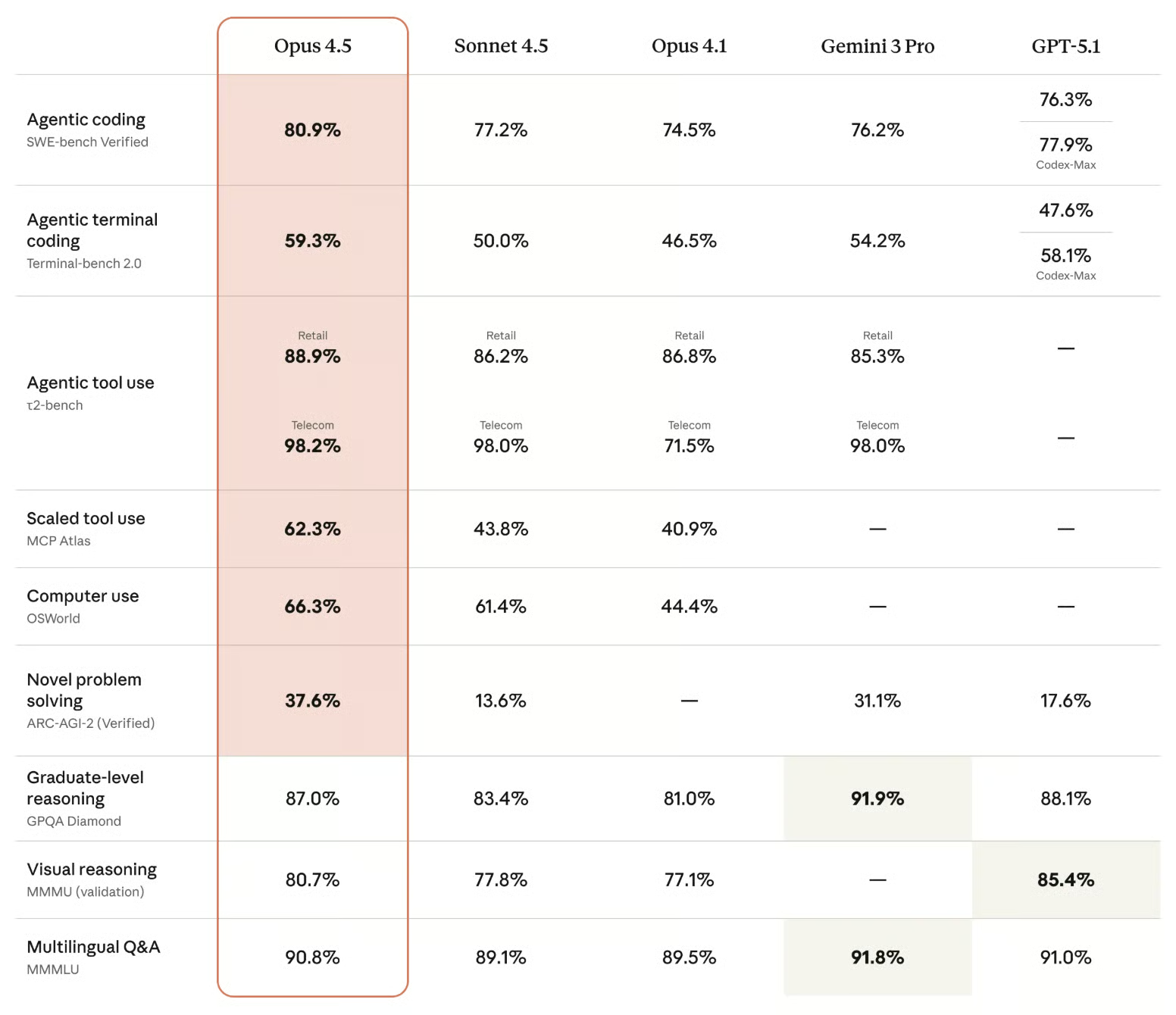

Despite Gemini 3’s extremely impressive benchmarks, it still came behind Claude Sonnet 4.5 on the SWE bench, which is a test of software engineering skills. With Claude Opus 4.5, Anthropic beats its own score on the SWE bench and other tests.

Claude Opus 4.5 marks Anthropic's third major model launch in just two months. after Sonnet 4.5 and Haiku 4.5. And now that Anthropic has a valuation north of $350 billion, we know they have the resources to keep running at this pace.

In this article, I’ll explore everything that’s new with Claude Opus 4.5, looking at the benchmarks, new features, and getting hands-on to test its capabilities.

What is Claude Opus 4.5?

Claude Opus 4.5 is the latest large language model from Anthropic. Following on from Opus 4, it is Anthropic’s most advanced model, with a focus on coding, reasoning, and long-running tasks. The model scores 80.9% on the SWE-bench and 59.3% on the Terminal-bench.

Claude Opus 4.5 is now available on Anthropic’s apps, the API, and major cloud platforms.

What’s new with Claude Opus 4.5?

From the announcement, these things stood out to me:

- Agentic coding: Opus 4.5 is state-of-the-art on SWE-bench Verified, outperforming Google's Gemini 3 Pro and OpenAI's GPT-5.1. Anthropic also tested the model on a difficult take-home exam that they give to prospective performance engineers. It scored higher than any human candidate ever had.

- Computer use: Anthropic claims Opus 4.5 is "the best model in the world for computer use," meaning it can interact with software interfaces, clicking buttons, filling forms, navigating websites, as a human would.

- Everyday tasks: The model is "meaningfully better" at working with spreadsheets and slides and conducting deep research, according to Anthropic.

Alongside the model launch, Anthropic announced several product updates, which I’ll discuss in more detail below. They include Claude for Chrome, the browser extension that lets Claude take action across tabs, and Claude for Excel.

Testing Claude Opus 4.5

A write-up wouldn’t be complete without putting the new model through a test or two. Let’s see how the new improvements handle a range of tasks:

Testing Opus 4.5 with an economics question

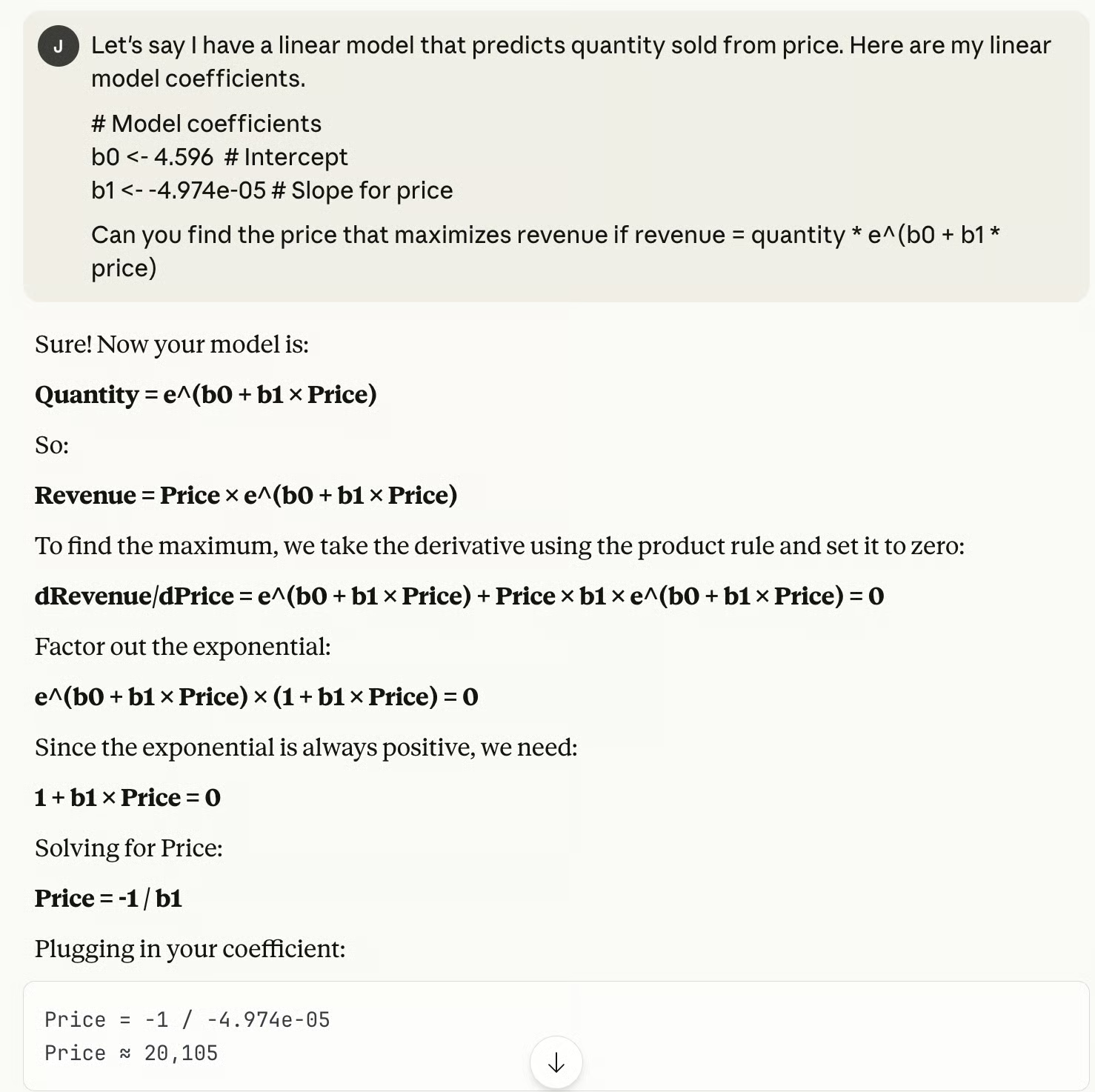

First, I wanted to see how Opus 4.5 handled a classic economics optimization problem. I'm picturing a log-linear demand model that predicts quantity sold from price. It's the kind of thing you'd see in an econometrics course, but it's also genuinely useful. If you know your demand curve and your costs, you can solve for the price that maximizes revenue.

Opus 4.5 gave me the right answer, without thinking long about it, and it happened all in one go.

I was impressed by this because just about any company that sells anything will have some idea of quantity sold and price, but not every company will have the resources to sketch out answers to rudimentary optimization problems.

But here, with a well-designed prompt, you can find your answer easily. Of course, it would still be up to the analyst to decide if enough of the constraints were really modeled properly.

Here’s what I also liked: Opus 4.5 showed its work. It didn't just give me a number. It walked through the derivative, the factoring, and the algebra. If it had made an error, I could have spotted where.

Testing Opus 4.5 with a stats question

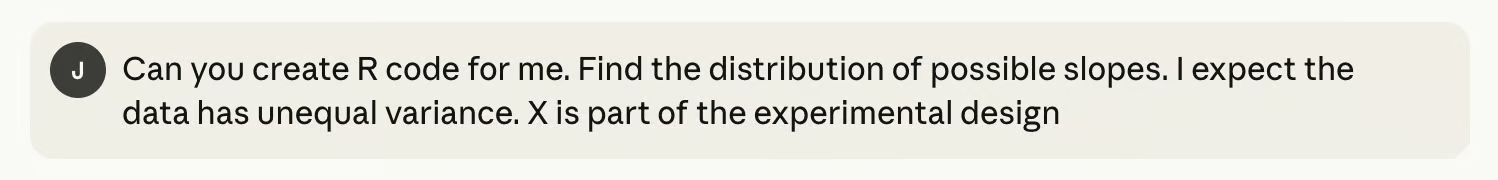

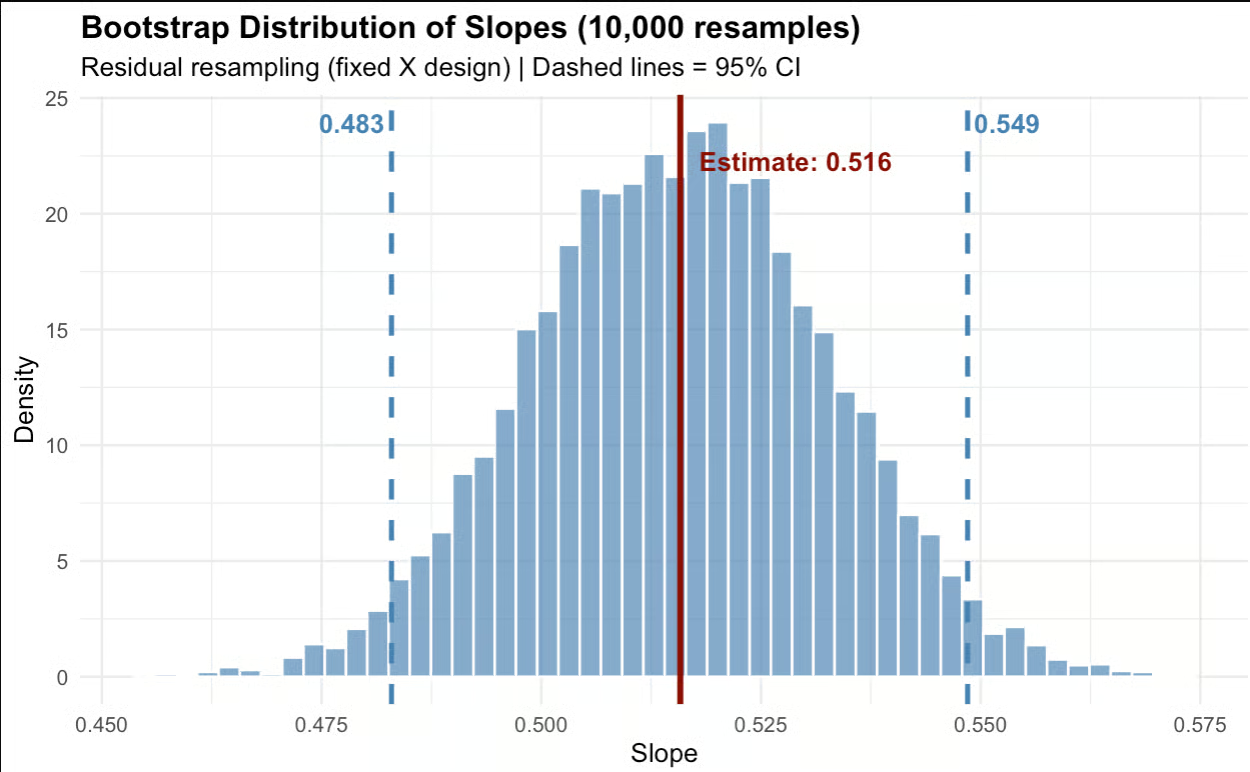

Next, I wanted to test Opus 4.5 on a stats question. I created a dataset where yield was a function of temperature with noise added.

library(tidyverse)

set.seed(1024)

# Generate data

n <- 100

df <- data.frame(

temperature = seq(0, 100, length.out = n)

) %>%

mutate(yield = 0.5 * temperature + 10 + rnorm(n, 0, 5))I then fed Claude this prompt.

Claude returned to me the following, which I used to create a histogram, down below.

# Fit model

fit <- lm(yield ~ temperature, data = df)

fitted_vals <- fitted(fit)

resids <- residuals(fit)

original_slope <- coef(fit)[2]

# Residual bootstrap

set.seed(123)

n_boot <- 1000

boot_slopes <- numeric(n_boot)

for (i in 1:n_boot) {

new_y <- fitted_vals + sample(resids, replace = TRUE)

boot_fit <- lm(new_y ~ df$temperature)

boot_slopes[i] <- coef(boot_fit)[2]

}

# Confidence intervals

ci_lower <- quantile(boot_slopes, 0.025)

ci_upper <- quantile(boot_slopes, 0.975)

# Plot

ggplot(data.frame(slope = boot_slopes), aes(x = slope)) +

geom_histogram(bins = 40, fill = "gray70", color = "white") +

geom_vline(xintercept = original_slope, color = "red", linewidth = 1) +

geom_vline(xintercept = ci_lower, color = "steelblue", linetype = "dashed", linewidth = 1) +

geom_vline(xintercept = ci_upper, color = "steelblue", linetype = "dashed", linewidth = 1) +

labs(

title = "Bootstrap Estimate: Effect of Temperature on Yield",

subtitle = paste0("Estimate: ", round(original_slope, 3),

" | 95% CI: [", round(ci_lower, 3), ", ", round(ci_upper, 3), "]"),

x = "Slope (yield per °C)",

y = "Count"

) +

theme_minimal()

I have to say that I like this result. Opus 4.5 found a confidence interval for the slope, which is what I was really asking for.

It used a bootstrap method, which is a good technique for finding confidence intervals when heteroscedasticity is present. It also more specifically used a bootstrap on the residuals, instead of another bootstrap method that resamples (X,Y) pairs, which would have assumed error in the X term.

All this is a finer but likely important point for people who do this kind of work: A residual bootstrap would be better when X is fixed by design, as I specified in my prompt, and when you therefore want inference conditional on those exact X values, like with a scientific study. What I’m trying to say is that Opus 4.5 listened to the subtleties in the prompt.

Testing Opus 4.5 with a math question

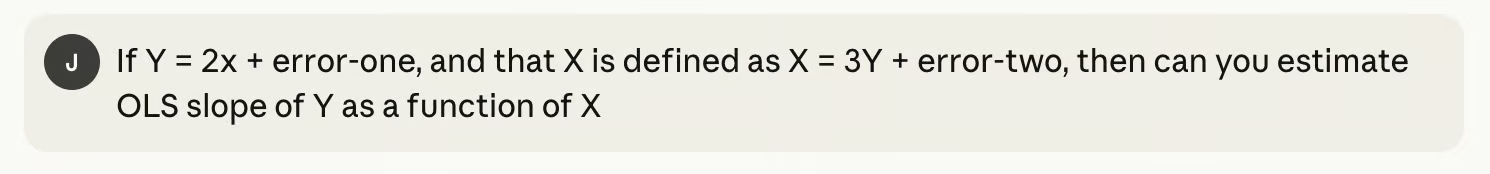

Next, I wanted to see if I could trip Opus 4.5 up in some way.

In this case, the model was way ahead of me. Opus 4.5 understood that my question was circular - I was defining X by Y and vice-versa, so we couldn’t pull out a meaningful estimate for the slope of a regression line, given what we were working with.

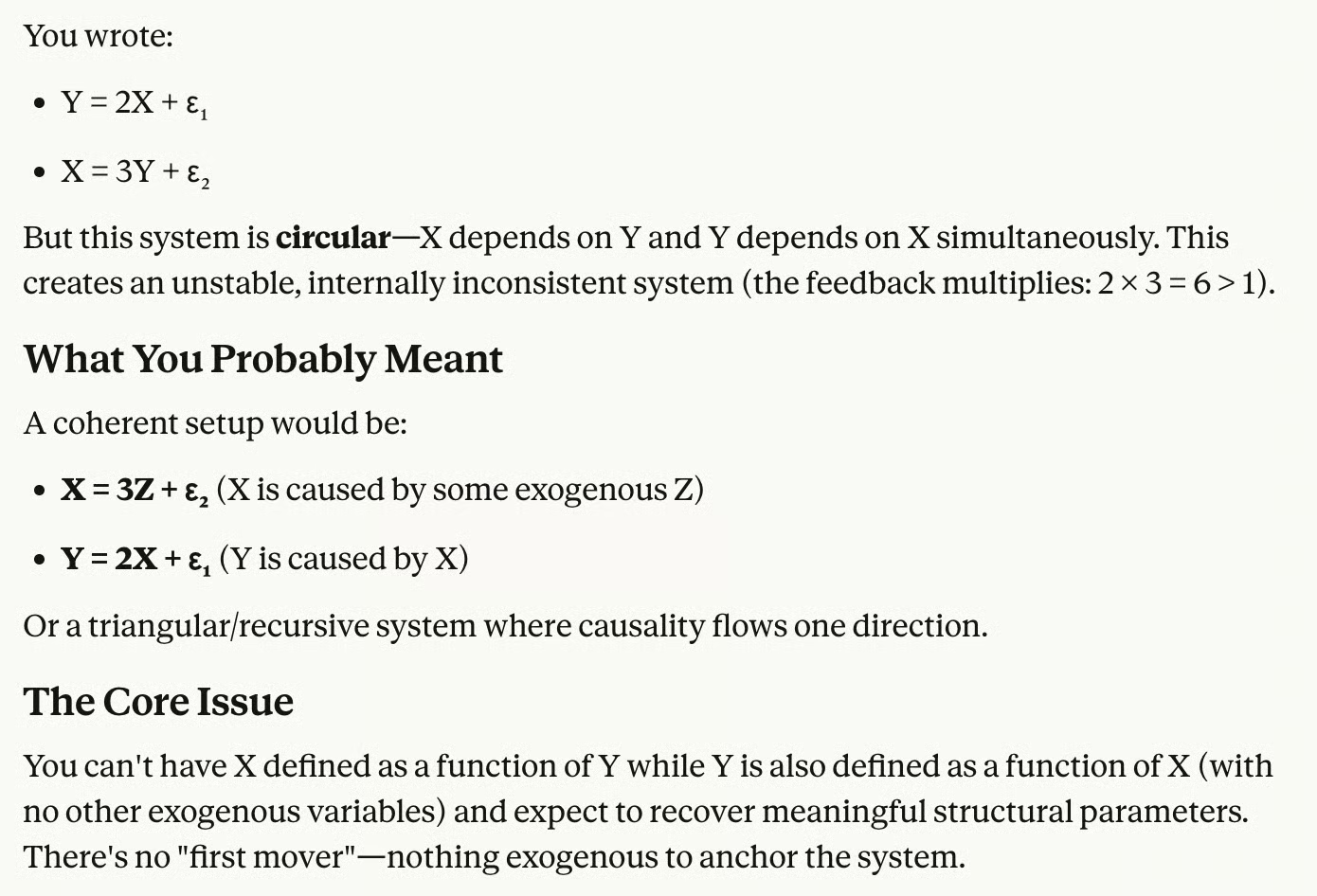

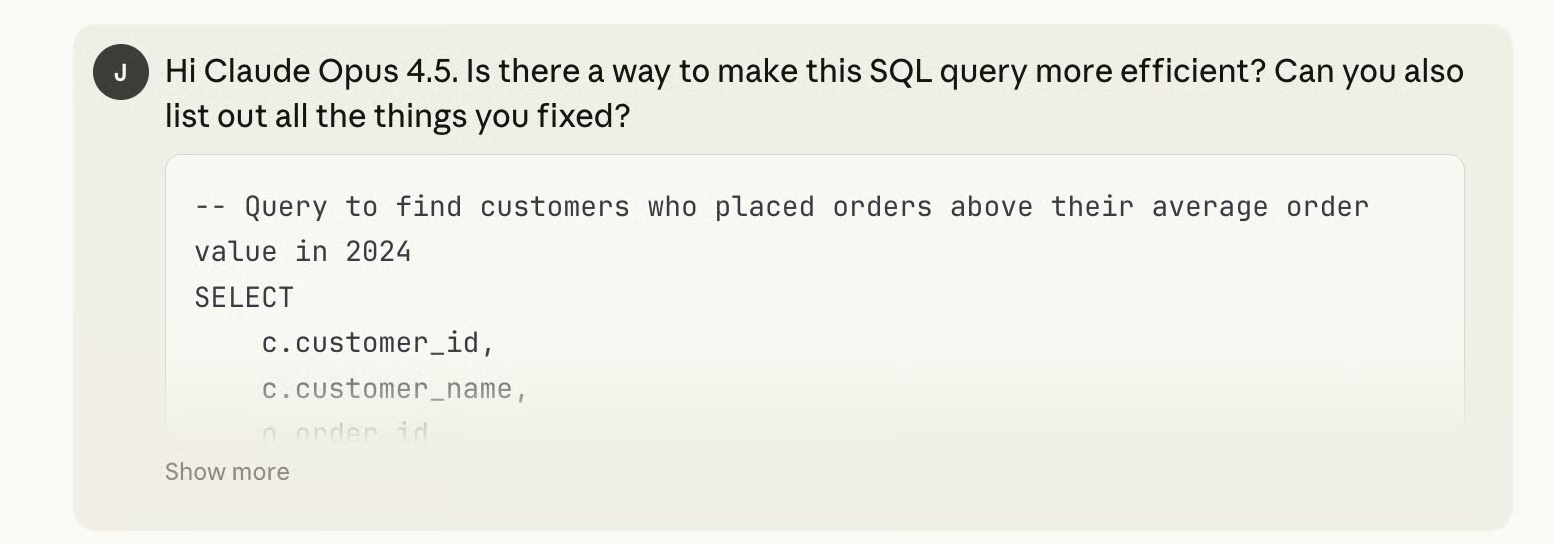

Testing Opus 4.5 for SQL optimization

Finally, I thought it might be fun to test Opus 4.5 on a SQL question. I shipped it a workable but highly inefficient SQL query.

-- Find customers who placed orders above their average order value in 2024

SELECT

c.customer_id,

c.customer_name,

o.order_id,

o.order_date,

o.order_total

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

WHERE o.order_date >= '2024-01-01'

AND o.order_date < '2025-01-01'

AND o.order_total > (

SELECT AVG(o2.order_total)

FROM orders o2

WHERE o2.customer_id = c.customer_id

)

AND c.customer_id IN (

SELECT DISTINCT o3.customer_id

FROM orders o3

WHERE o3.order_date >= '2024-01-01'

)

AND EXISTS (

SELECT 1

FROM customer_preferences cp

WHERE cp.customer_id = c.customer_id

AND cp.email_opt_in = 1

)

ORDER BY (

SELECT COUNT(*)

FROM orders o4

WHERE o4.customer_id = c.customer_id

) DESC;I asked Opus 4.5 to make the query better. It gave me an answer in about a second.

-- Find customers who placed orders above their average order value in 2024

WITH customer_avg AS (

SELECT

customer_id,

AVG(order_total) AS avg_order_total,

COUNT(*) AS order_count

FROM orders

GROUP BY customer_id

)

SELECT

c.customer_id,

c.customer_name,

o.order_id,

o.order_date,

o.order_total

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id

JOIN customer_avg ca ON c.customer_id = ca.customer_id

JOIN customer_preferences cp ON c.customer_id = cp.customer_id

WHERE o.order_date >= '2024-01-01'

AND o.order_date < '2025-01-01'

AND o.order_total > ca.avg_order_total

AND cp.email_opt_in = 1

ORDER BY ca.order_count DESC;Among other things, Opus 4.5 replaced the correlated subquery for AVG with a CTE. (The original query recalculated AVG(order_total) for every single row being evaluated, but the CTE now computes each customer's average once upfront.) Opus 4.5 also removed the redundant IN (SELECT DISTINCT ...) clause, which was doing extra work for nothing. And it converted the EXISTS subquery to a JOIN, which is better.

Claude Opus 4.5 Agents and Agentic Capabilities

There were other highlights from this latest release from Anthropic. Let’s take a look in more detail:

Developer platform features

Anthropic has added several new building blocks for developers. The most notable is the effort parameter, which lets you control how much thinking the model does before responding. Set it low for quick, lightweight tasks; set it higher when you need the model to chew on something.

According to Anthropic, at medium effort, Opus 4.5 matches Sonnet 4.5's best SWE-bench score while using 76% fewer output tokens. At high effort, it beats Sonnet by over 4% — still using nearly half the tokens.

There's also improved context management and memory. For long-running agents, Claude can now automatically summarize earlier context so it doesn't hit a wall mid-task. This pairs with context compaction, which keeps agents running longer with less intervention.

Finally, Opus 4.5 is apparently quite good at multi-agent orchestration, which means it could manage a team of subagents.

Claude Opus 4.5 Deep Research

Apparently, Opus 4.5’s performance on a deep research evaluation increased by roughly 15%.

I put the deep research capability through my own test. I asked it to give me a report on Old English words that survive today but are not commonly used, and how these kinds of words have changed over time. The report was ready in seven minutes:

I was actually fairly impressed with the quality of the report in terms of how interesting it was, the writing quality, the organization, and the depth of research.

It wasn’t a totally dry document that was overly dense with archaic or obsolete words. I admit some of the sections could have been better differentiated. The middle of the report was a little more boring than the rest. But - and here’s the real point - it was extremely well researched. Just look at these citations:

Plus, I learned a very nice new word: apricity, meaning the warmth of the sun in winter.

Claude Code

Anthropic has shipped two upgrades to Claude Code.

What is called Plan Mode now builds more precise plans before executing. In practice, this means Claude asks clarifying questions upfront, then generates an editable plan.md file you can review and tweak before it starts working. The idea here is to save you from a false start.

Desktop app integration is the other big one. Claude Code is now available in the desktop app, which means you can run multiple local and remote sessions in parallel. To use Anthropic's own example: one agent fixes bugs, another researches GitHub issues, and a third updates docs.

Consumer App Agents

A few agentic features have landed in the Claude consumer apps:

Claude for Chrome lets Claude handle tasks across your browser tabs. It's now available to all Max users. Think of it as a browsing agent that can navigate, click, fill forms, and pull information across multiple sites.

Claude for Excel brings spreadsheet automation to Claude. This one was announced earlier, but Anthropic has now expanded beta access to all Max, Team, and Enterprise users, so it’s becoming more real.

Long conversation handling fixes what was an annoying limitation. Previously, long chats would hit a context limit and just stop, in which case you'd have to start a new conversation. Now Claude automatically summarizes earlier parts of the conversation in the background, which frees up space so you don’t hit a wall.

Claude Opus 4.5 Benchmark Results

Opus 4.5, like the previous Claude models, was tested on the common benchmarks in agentic coding, tool use, computer use, and problem solving. Here are the big results for Opus 4.5.

Opus 4.5 gets top rank in a lot of the most important tests. Anything involving actually doing things, like writing code that passes tests (SWE-bench), using tools in multi-step workflows (τ2-bench, MCP Atlas), and operating a computer (OSWorld). Opus 4.5 leads, often by a lot.

The scaled tool use gap is actually very large: 62.3% vs. 43.8% for the next best, which is also Claude! However, this demonstrates how much Anthropic is investing in improving its performance, particularly on agentic tasks. Although their older model might currently lead in some categories, they don’t slow down their progress.

It looks like Gemini 3 Pro has the edge on some of the knowledge-intensive benchmarks, like graduate-level reasoning (GPQA Diamond) and multilingual Q&A (MMMLU). These benchmarks probably reward breadth of training data and careful reasoning over memorized facts, and Google, of course, has a lot of resources.

Advances in Safety and Alignment

The improvements from Anthropic aren’t just around coding, research, and computer use. There is very much an emphasis on safety, claiming that this model is their safest and ‘most robustly aligned model’ they have released yet.

This claim is backed up by a reduction in what they call ‘concerning behavior score’, which is lower than the other 4.5 models, as well as GPT-5.1 and Gemini 3 Pro. Anthropic has also made Opus 4.5 more robust against prompt injection attacks, which can fool the model into harmful behaviour.

Claude 4.5 Opus Pricing and Availability

Claude Opus 4.5 is available today across Anthropic’s full product lineup, including the Claude app, API, and all three major cloud platforms. Developers can access it directly via the model ID claude-opus-4-5-20251101.

Pricing has also taken a notable step down. At $5 per million input tokens and $25 per million output tokens, Opus 4.5 makes Anthropic’s top-tier capabilities far more accessible.

For enterprise users, the pricing change could be especially meaningful. The combination of reduced cost, expanded API access, and broader availability across cloud providers positions Opus 4.5 as a competitive option.

Conclusion

Claude Opus 4.5 is Anthropic's clearest statement yet about where it sees itself in the AI race. While Google pushes multimodal understanding and on-device models, Anthropic is betting heavily on actions: agentic coding, tool use, and computer interaction.

The benchmark results really tell the story: Opus 4.5 achieves the highest scores ever recorded on software engineering benchmarks and handles multi-system debugging without much guidance.

My testing confirms what the benchmarks suggest: Opus 4.5 is very capable at multi-step work. Whether it was running bootstrap simulations or synthesizing research across papers, the model approached problems the way a thinker would: adaptively and with clear reasoning. I would think if you are looking to augment your workflows, this is what matters.

If you're keen to learn more about Claude models, I recommend checking out the course, Introduction to Claude Models.

Claude Opus 4.5 FAQs

How does Claude Opus 4.5 differ from Sonnet 4.5 and Haiku 4.5?

Opus 4.5 is the most powerful model, built for complex reasoning, coding, and long-running tasks. Sonnet 4.5 balances performance and cost, while Haiku 4.5 is optimized for speed and efficiency.

Where can I access Claude Opus 4.5?

It’s available through the Claude web and mobile apps, the Claude API, and all major cloud platforms, making it easy to integrate into different workflows.

Did Anthropic increase Claude’s context or memory capabilities?

Yes. Opus 4.5 introduces stronger context management, longer conversation handling, and improved summarization to support extended reasoning and multi-step tasks.

What safety standards does Opus 4.5 follow?

It meets Anthropic’s highest safety tier, with major upgrades in alignment, reduced “concerning behavior” scores, and greater resistance to prompt injection attacks.

What types of use cases benefit most from Opus 4.5?

Opus 4.5 is ideal for complex, multi-step reasoning, like debugging, research, and multi-agent orchestration. For simpler or faster tasks, Sonnet or Haiku may be more cost-effective.

I'm a data science writer and editor with contributions to research articles in scientific journals. I'm especially interested in linear algebra, statistics, R, and the like. I also play a fair amount of chess!

A senior editor in the AI and edtech space. Committed to exploring data and AI trends.