
The massive adoption of ChatGPT and other generative AI tools has sparked a heated debate about the benefits and challenges of AI and how it will reshape our society. To assess these questions properly, it helps to understand how the so-called Large Language Models (LLMs) behind today's state-of-the-art AI tools work.

This article introduces Reinforcement Learning from Human Feedback (RLHF), an innovative technique that combines reinforcement learning with human guidance to help LLMs like ChatGPT deliver impressive results. We will cover what RLHF is, its benefits and limitations, and its relevance to the future of the fast-moving field of generative AI. Read on!

Understanding RLHF

To understand the role of RLHF, we first need to look at how LLMs are trained.

The technology underlying the most popular LLMs is the transformer. Since Google researchers introduced them in 2017, transformers have become the state of the art in AI and deep learning, because their attention mechanism offers a more effective way to handle sequential data, such as the words in a sentence: every word can be related directly to every other word in the sequence.
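To make the idea of handling sequential data more concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation inside a transformer, in plain Python. It assumes toy 2-dimensional word vectors and reuses the same vectors as queries, keys, and values; a real transformer derives these from learned projections and stacks many attention heads and layers, so treat this as an illustration, not an implementation of any particular model.

```python
import math


def softmax(xs):
    """Numerically stable softmax: turns scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Each output vector is a weighted average of all value vectors, where the
    weights reflect how strongly the query matches each key.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this position's query with every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Mix the value vectors according to the attention weights
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Three "words" as hypothetical 2-dimensional embeddings
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(sentence, sentence, sentence):
    print([round(x, 3) for x in row])
```

Because every position attends to every other position in a single step, distant words can influence each other directly, which is one reason transformers handle long sequences better than earlier recurrent models.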

For a more detailed introduction to LLMs and transformers, check out our Large Language Models (LLMs) Concepts course.

Transformers are pre-trained on a huge corpus of text collected from the internet using self-supervised learning, a type of training that does not require humans to label the data: the labels come from the text itself, for example by asking the model to predict the next word given the previous ones. Pre-trained transformers can solve a wide variety of natural language processing (NLP) problems.
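The self-supervised setup can be illustrated with a short sketch that turns raw text into next-word prediction examples. The whitespace tokenizer and the `context_size` parameter are simplifying assumptions (production LLMs use subword tokenizers and much longer contexts); the point is that every target comes from the text itself, so no human labeling is needed.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, target) pairs for next-word prediction from raw text.

    The target is simply the word that follows the context, so the training
    labels come from the data itself rather than from human annotators.
    """
    tokens = text.split()  # naive whitespace tokenizer, for illustration only
    pairs = []
    for i in range(1, len(tokens)):
        # Up to `context_size` preceding words form the input
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


for context, target in next_token_pairs("the cat sat on the mat", context_size=2):
    print(context, "->", target)
```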

However, for an AI tool like ChatGPT to give engaging, accurate, human-like answers, a pre-trained LLM alone is not enough. Ultimately, human communication is a creative and subjective process. What makes a text "good" is deeply shaped by human values and preferences, which makes it very hard to measure or capture with a clear-cut algorithmic rule.

The idea behind RLHF is to use human feedback to measure and improve the model's performance. What sets RLHF apart from other reinforcement learning techniques is that it relies on human input to optimize the model, instead of a predefined function that specifies the agent's reward.

This approach enables a more adaptive and personalized learning process, making LLMs suitable for all kinds of industry-specific applications, such as coding assistance, legal research, essay writing, and poem generation.

How does RLHF work?

RLHF is a challenging process that involves training multiple models and going through several stages of deployment. In essence, it can be broken down into three steps.

1. Select a pre-trained model

The first stage involves selecting a pre-trained LLM that will later be fine-tuned using RLHF.

You can also pre-train your own LLM from scratch, but that is an expensive and time-consuming process. That is why it is highly recommended to choose one of the many publicly available pre-trained LLMs.

If you want to learn more about training an LLM, our tutorial How to Train a LLM with PyTorch provides an illustrative example.

Note that, to tailor the model to your specific needs, you can fine-tune it on additional text or conditions before starting the fine-tuning phase with human feedback.

For example, if you want to build an AI legal assistant, you could fine-tune your model on a corpus of legal text so that your LLM becomes particularly familiar with legal writing and concepts.

2. Feedback humano

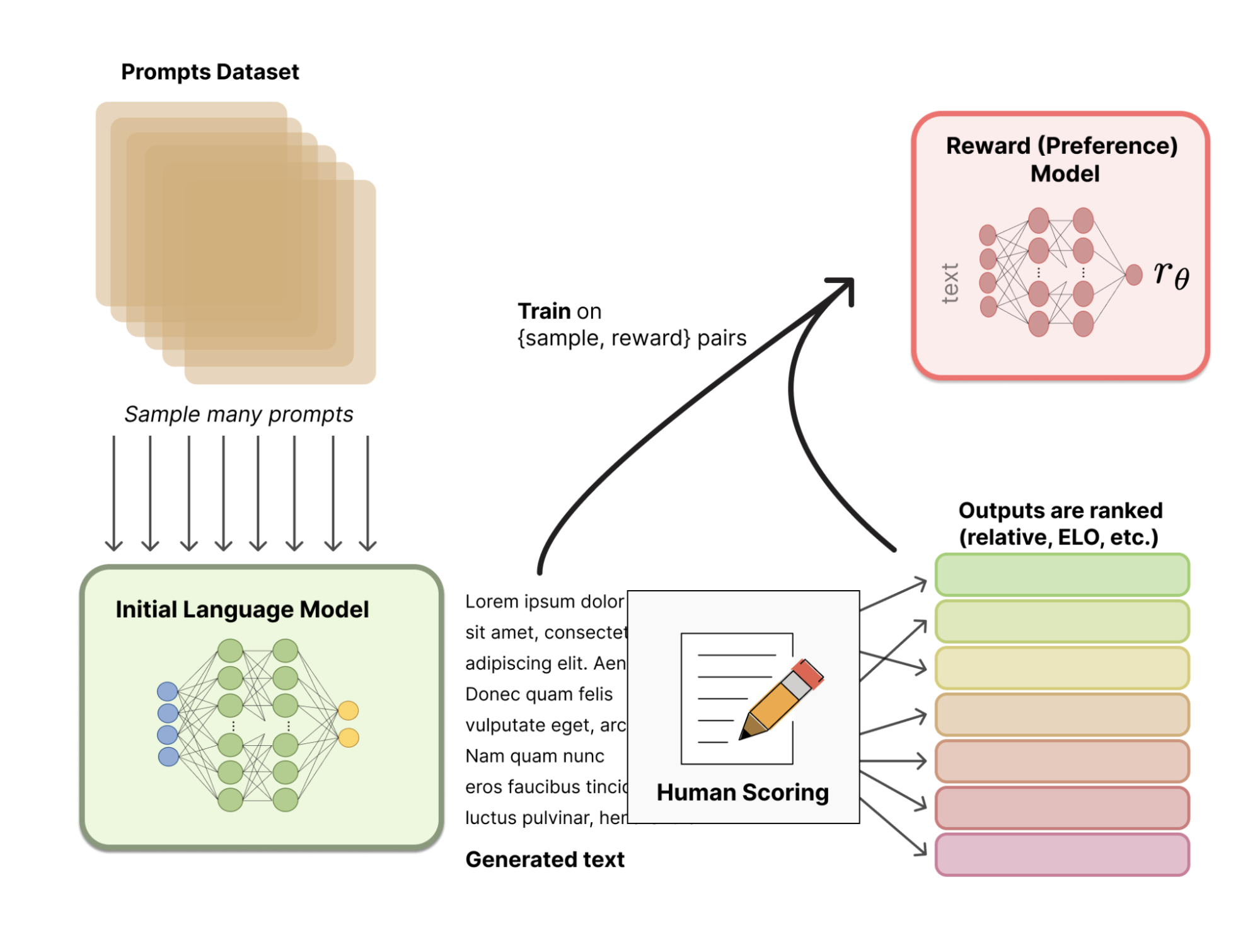

Em vez de usar um modelo de recompensa pré-definido estatisticamente (que seria muito restritivo para calibrar as preferências humanas), o RLHF usa o feedback humano para ajudar o modelo a desenvolver um modelo de recompensa mais sutil. O processo é o seguinte:

- Primeiro, um conjunto de treinamento de pares de prompts de entrada/textos gerados é criado pelo modelo pré-treinado por meio da amostragem de um conjunto de prompts.

- Em seguida, os testadores humanos fornecem uma classificação para os textos gerados, usando determinadas diretrizes para alinhar o modelo com os valores e as preferências humanas, além de mantê-lo seguro. Essas classificações podem ser transformadas em resultados de pontuação usando várias técnicas, como os sistemas de classificação Elo.

- Por fim, o feedback humano acumulado é usado pelo sistema para avaliar seu desempenho e desenvolver um modelo de recompensa.
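As a rough illustration of the Elo idea, the sketch below expands each labeler's ranking into pairwise "wins" and updates an Elo rating for every generated text. The K-factor of 32 and the starting rating of 1000 are conventional Elo defaults chosen here for illustration, and the completion IDs are hypothetical; actual RLHF pipelines may aggregate rankings differently.

```python
from itertools import combinations


def ranking_to_pairs(ranking):
    """Expand a best-first ranking into (winner, loser) comparisons."""
    # combinations() preserves order, so the earlier (better) item comes first
    return list(combinations(ranking, 2))


def elo_scores(comparisons, k=32.0, initial=1000.0):
    """Compute Elo ratings from a sequence of (winner, loser) comparisons."""
    ratings = {}
    for winner, loser in comparisons:
        rw = ratings.setdefault(winner, initial)
        rl = ratings.setdefault(loser, initial)
        # Probability that the winner was expected to win, under the Elo model
        expected_win = 1.0 / (1.0 + 10 ** ((rl - rw) / 400.0))
        # Zero-sum update: the winner gains exactly what the loser gives up
        delta = k * (1.0 - expected_win)
        ratings[winner] = rw + delta
        ratings[loser] = rl - delta
    return ratings


# Two hypothetical labelers rank three completions (A, B, C) of the same prompt
rankings = [["A", "B", "C"], ["A", "C", "B"]]
comparisons = [pair for r in rankings for pair in ranking_to_pairs(r)]
scores = elo_scores(comparisons)
print(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))
```

Pairwise comparisons are used here because rankings from different labelers are easier to combine as individual "A beat B" outcomes than as absolute scores.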

The image at the beginning of this section (source: Hugging Face) illustrates the entire process.
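A reward model is commonly trained on pairs of (preferred, rejected) responses with the pairwise loss -log(sigmoid(r(preferred) - r(rejected))), which pushes the score of the preferred text above the other. The sketch below assumes a deliberately tiny linear reward model over hand-made features; the feature names are hypothetical, whereas real reward models are usually a language model with a scalar output head trained on the same kind of loss.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def reward(w, x):
    """Linear reward: dot product of weights and response features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(preferences, dim, lr=0.1, epochs=200):
    """Fit weights by gradient descent on -log(sigmoid(r(chosen) - r(rejected)))."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in preferences:
            margin = reward(w, chosen) - reward(w, rejected)
            # The loss gradient w.r.t. the margin is -(1 - sigmoid(margin)),
            # so descending it moves w toward (chosen - rejected)
            scale = 1.0 - sigmoid(margin)
            for i in range(dim):
                w[i] += lr * scale * (chosen[i] - rejected[i])
    return w


# Hypothetical features per response: [answers_question, polite, factual_error]
preferences = [
    ([1.0, 1.0, 0.0], [0.0, 1.0, 0.0]),  # preferred: actually answers the question
    ([1.0, 0.0, 0.0], [1.0, 0.0, 1.0]),  # preferred: no factual error
    ([1.0, 1.0, 0.0], [1.0, 1.0, 1.0]),  # preferred: no factual error
]
weights = train_reward_model(preferences, dim=3)
print([round(x, 2) for x in weights])
```

Only differences between the two responses in a pair affect the weights, which matches the idea that humans provide relative judgments rather than absolute scores.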

3. Fine-tuning with reinforcement learning

In the final stage, the LLM generates new texts, and the reward model built from human feedback assigns each one a quality score. A reinforcement learning algorithm (commonly Proximal Policy Optimization, or PPO) then uses these scores as the reward signal to update the LLM, improving its performance on subsequent prompts.

Human feedback and reinforcement learning fine-tuning are thus combined in an iterative process that continues until the model reaches the desired level of performance.
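The reinforcement learning step can be sketched in a toy setting where, for a single prompt, the "LLM" is just a softmax over three candidate responses. Two assumptions go beyond the text: the reward scores are hypothetical reward-model outputs, and the objective subtracts a KL penalty (weighted by `beta`) that keeps the fine-tuned model close to the pre-trained reference, a common ingredient of RLHF in practice. Because the response set is tiny, the sketch computes the exact policy gradient; algorithms such as PPO instead estimate the update from sampled responses.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rlhf_step(logits, ref_probs, rewards, beta=0.1, lr=0.5):
    """One gradient-ascent step on E_pi[reward] - beta * KL(pi || pi_ref).

    The policy pi is a softmax over a fixed list of candidate responses.
    """
    probs = softmax(logits)
    # KL-shaped reward: reward-model score minus a penalty for drifting
    # away from the reference (pre-trained) model
    shaped = [r - beta * math.log(p / q) for r, p, q in zip(rewards, probs, ref_probs)]
    baseline = sum(p * s for p, s in zip(probs, shaped))
    # Exact softmax policy gradient: pi_k * (shaped_k - baseline)
    return [z + lr * p * (s - baseline) for z, p, s in zip(logits, probs, shaped)]


ref = [0.5, 0.3, 0.2]                # reference model's probabilities (hypothetical)
rewards = [0.1, 0.9, -0.5]           # hypothetical reward-model scores
logits = [math.log(p) for p in ref]  # start from the pre-trained model
for _ in range(200):                 # the iterative loop described above
    logits = rlhf_step(logits, ref, rewards)
print([round(p, 3) for p in softmax(logits)])
```

Without the KL term, the policy would collapse onto whichever response the reward model scores highest, even if that exploits flaws in the reward model; `beta` trades off reward against staying close to the original model.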

Applications of reinforcement learning from human feedback

RLHF is a state-of-the-art technique for fine-tuning LLMs like ChatGPT. Beyond that, RLHF is a popular research topic, with a growing body of literature exploring possibilities beyond NLP problems. Below is a list of other areas where RLHF has been applied successfully:

- Chatbots. ChatGPT is the most prominent example of what RLHF makes possible. To learn more about how ChatGPT uses RLHF, check out our article "What is ChatGPT?", in which we asked ChatGPT directly how it works.

- Robotics. Robotics is one of the main areas where RLHF is showing promising results. Human feedback can help a robot perform tasks and movements that are hard to specify in a reward function. OpenAI researchers used RLHF to teach a simulated robot to do a backflip, a task that is quite hard to capture in a hand-written reward function.

- Games. Reinforcement learning techniques have long been used to build video game bots. RLHF, however, can train bots based on human preferences, making them play more like humans rather than as pure reward-maximizing machines. For example, OpenAI and DeepMind trained bots to play Atari games with RLHF.

The benefits of RLHF

RLHF is a powerful and promising technique and a key ingredient of many of today's state-of-the-art AI tools. Here are some of its benefits:

- Improved performance. Human feedback is key to making LLMs like ChatGPT "think" and sound like humans. RLHF allows machines to tackle complex tasks, such as NLP problems, that involve human values or preferences.

- Adaptability. Because LLMs are fine-tuned with human feedback on all kinds of prompts, RLHF allows machines to perform a wide range of tasks and adapt to different situations. This brings LLMs a step closer to general-purpose AI.

- Continuous improvement. RLHF is an iterative process, which means the system keeps improving as the reward model and the LLM are updated with new human feedback.

- Enhanced safety. Through human feedback, the system learns not only how to do things but also what not to do, helping to make systems effective, safer, and more reliable.

Limitations of RLHF

RLHF is not foolproof, however. The technique also comes with certain risks and limitations. Below are some of the most relevant:

- Limited and expensive human feedback. RLHF depends on the quality and availability of human feedback. Collecting it can be slow, labor-intensive, and costly, especially when the task at hand requires a lot of feedback.

- Bias in human feedback. Even with standardized guidelines for giving feedback, scores and rankings are ultimately influenced by human preferences and values. If the ranking tasks are poorly framed or the human feedback is of low quality, the model may become biased or reinforce undesirable outputs.

- Generalization to new contexts. Even when LLMs are fine-tuned with substantial human feedback, unexpected contexts can always arise. The challenge is then to make the agent robust in situations where feedback is limited.

- Hallucinations. When human feedback is limited or poor, agents can exhibit so-called hallucinations, that is, undesirable, false, or nonsensical outputs.

Future trends and developments in RLHF

RLHF is one of the pillars of modern generative AI tools such as ChatGPT and GPT-4. Despite its impressive results, RLHF is a relatively new technique, and there is still plenty of room for improvement. Future research on RLHF is essential to make LLMs more efficient, reduce their environmental footprint, and address some of their risks and limitations.

To keep up with the latest developments in generative AI, machine learning, and LLMs, we recommend checking out our curated learning materials:

- Introduction to Reinforcement Learning - This tutorial covers the basic concepts and terminology of reinforcement learning.

- Deep Learning in Python - This course helps learners expand their deep learning knowledge and take their machine learning skills to the next level.

- Deep Learning Tutorial - This tutorial offers an overview of deep learning and reinforcement learning.

- How to Ethically Use Machine Learning to Make Decisions - This blog post discusses the importance of ethical considerations when using machine learning models.

I am a freelance data analyst, collaborating with companies and organizations around the world on data science projects. I am also a data science instructor with more than 2 years of experience. I regularly write data science articles in English and Spanish, some of which have been published on established websites such as DataCamp, Towards Data Science, and Analytics Vidhya. As a data scientist with a background in political science and law, my goal is to work at the intersection of public policy, law, and technology, harnessing the power of ideas to promote innovative solutions and narratives that can help us tackle urgent challenges such as the climate crisis. I consider myself self-taught, a constant learner, and a firm advocate of multidisciplinarity. It is never too late to learn new things.