
AI Moderation for Quality

Embracing the AI revolution in application development marks a new era in human-AI interaction. While businesses leverage AI to enhance user experiences, the integration of LLM-based solutions also presents challenges in maintaining content integrity, accuracy, and ethical standards.

The need for responsible AI moderation becomes evident as applications expand beyond controlled environments. In that setting, ensuring that users receive reasonable and accurate responses is critical, even if it is not always technically straightforward.

In customer service interactions, for example, misinformation or inappropriate content can lead to customer dissatisfaction and even reputational damage to the business. But as a developer, how can you ensure your AI-based application is giving reasonable and accurate responses to your users? That is where AI moderation kicks in!

In this article, we will delve into a technique for moderating GPT-based applications using GPT models.

Building a GPT-Based Quality Agent

Moderating AI quality also includes ensuring non-biased and appropriate response generation when working with LLMs. OpenAI has already introduced an API designed for such moderation needs. If you are keen on detecting biased or inappropriate responses from your model or addressing user misbehavior, you will find valuable insights in the article titled ChatGPT Moderation API: Input/Output Control.

However, the present article takes a distinct approach to AI moderation. Our focus here is on guaranteeing the quality of a model’s responses in terms of accuracy and meeting user requirements. To the best of my knowledge, there isn’t an official endpoint specifically tailored for this purpose.

Nevertheless, given our extensive use of GPT models across various applications, why not employ them as quality checkers for instances of the same model?

We can harness GPT models to assess the output that the model itself generates in response to the user's requests. This testing approach helps catch vague and incorrect responses before they reach the user and improves how well the application fulfills the user's requests.

Scope and objectives

This article covers how to employ GPT models for moderating GPT-based applications in terms of quality and correctness within the scope of your application.

For example, if you are using GPT models to power your business chatbot, I am sure you will be highly interested in ensuring that your chatbot does not provide any information beyond your catalog items and their characteristics.

In the forthcoming sections, we will bring this last example to life by making simple calls to the OpenAI API through the openai Python package in a Jupyter notebook.

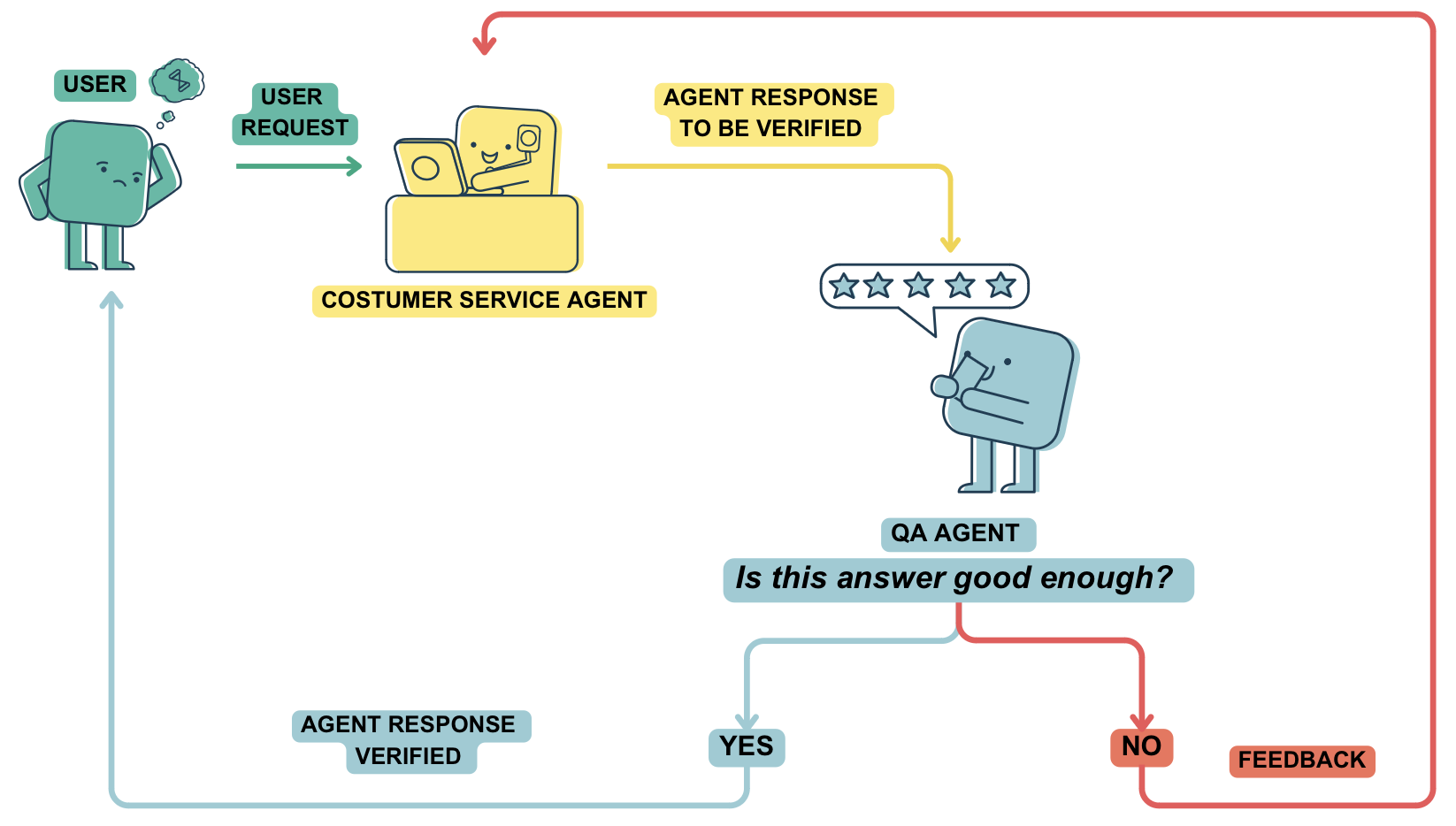

The main objective will be to build a simple LLM-based application and moderate its output with an LLM-based quality checker. For our example, we will need to create our sample customer service agent and the quality checker agent (the QA agent from now on) and, more importantly, define the interactions between the two.

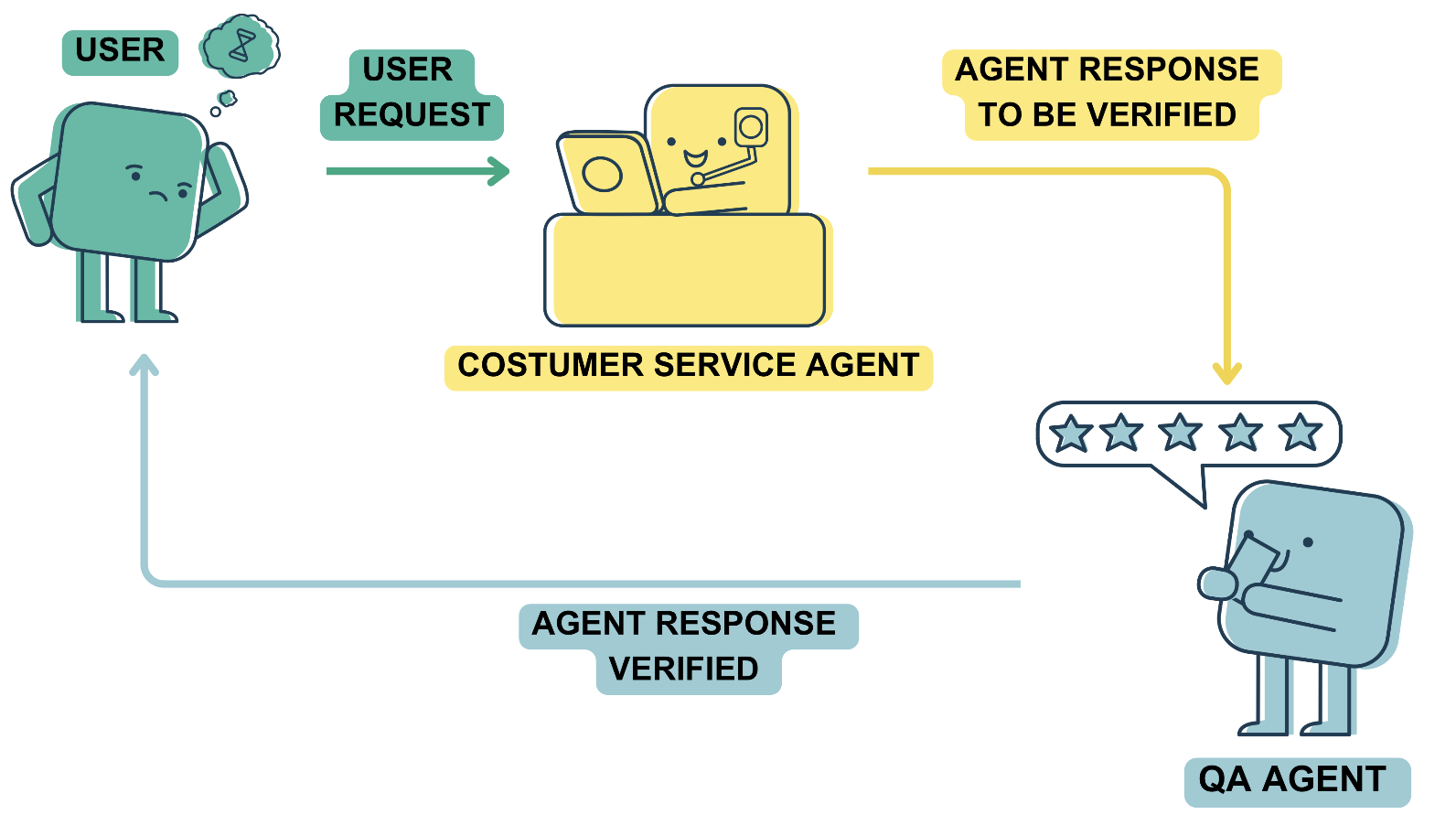

The following schema gives us a nice representation of the aforementioned workflow:

Self-made image. Representation of the moderation workflow: 1. The user sends a request to the LLM-based application, a customer service chatbot in this example. 2. The chatbot generates the answer, but it sends it to the QA agent first. 3. The QA agent sends the answer back to the user after checking that the answer is appropriate.

Let’s go step by step!

Building the GPT Agents

Let’s start by building a conversational agent for the customer service of a store.

Feel free to skip this first section if you already have a working LLM-powered application or prefer to implement your own example! And if you are still wondering whether your business can benefit from an LLM-based application, there is an interesting podcast discussion you should follow!

Crafting a sample customer service chatbot

Let’s imagine we are building a customer service agent for our shop. We are interested in using a model like ChatGPT behind this customer agent to make use of its natural language capabilities for understanding user queries and replying to them in a natural way.

To define our customer service chatbot, we will need two key ingredients:

- A sample product catalog to restrict the agent to our business scope. By feeding our product catalog to the model, we can let the model know which information to give to our users.

- A system message to define the high-level behavior of the model. While GPT models have been trained on a wide range of tasks, you can restrict the model's behavior by using so-called system messages.

Finally, as in any other LLM-based application, we need a way to call the OpenAI API from our scripts. In this article, I will be using the following implementation that relies only on the openai package:

```python
import os

import openai  # openai.ChatCompletion is only available in openai<1.0

# Get the OpenAI key from the environment and hand it to the client
openai.api_key = os.environ["OPENAI_API_KEY"]


# Simple OpenAI API call with a memory of past interactions
def gpt_call(prompt, message_history, model="gpt-3.5-turbo"):
    # Add the user's message to this agent's memory
    message_history.append({'role': 'user', 'content': prompt})

    response = openai.ChatCompletion.create(
        model=model,
        messages=message_history
    )

    response_text = response.choices[0].message["content"]

    # Store the answer so that the next call sees the whole conversation
    message_history.append({'role': 'assistant', 'content': response_text})

    return response_text
```

The idea behind this is to initialize a separate message history (containing the system message) for each model instance and keep updating it with every new interaction with the model.
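The gpt_call function above uses openai.ChatCompletion, which only exists in versions of the openai package before 1.0. If you are on a newer release, the following sketch keeps the same memory logic against the v1 client interface. The names gpt_call_v1 and FakeClient are hypothetical and introduced only here; the fake client stands in for the API so that you can watch each agent's message history grow without making network calls.

```python
from types import SimpleNamespace


def gpt_call_v1(client, prompt, message_history, model="gpt-3.5-turbo"):
    """Same memory logic as gpt_call, written against the openai>=1.0 client.

    client: an openai.OpenAI() instance, or any object exposing
    chat.completions.create(model=..., messages=...).
    """
    message_history.append({"role": "user", "content": prompt})
    response = client.chat.completions.create(model=model, messages=message_history)
    # In openai>=1.0 the message is an object, so we use attribute access
    response_text = response.choices[0].message.content
    message_history.append({"role": "assistant", "content": response_text})
    return response_text


class FakeClient:
    """Hypothetical offline stand-in for openai.OpenAI(), for testing only."""

    def __init__(self):
        self.chat = SimpleNamespace(completions=SimpleNamespace(create=self._create))

    def _create(self, model, messages):
        # Report how many messages the "model" received, so memory growth is visible
        text = f"({model}) received {len(messages)} messages"
        message = SimpleNamespace(content=text)
        return SimpleNamespace(choices=[SimpleNamespace(message=message)])


# Two agents, two independent memories
demo_customer_history = [{"role": "system", "content": "You are a customer service agent."}]
demo_qa_history = [{"role": "system", "content": "You are a quality assistant."}]
fake_client = FakeClient()

print(gpt_call_v1(fake_client, "Which is the best TV?", demo_customer_history))
# (gpt-3.5-turbo) received 2 messages
print(gpt_call_v1(fake_client, "And the cheapest phone?", demo_customer_history))
# (gpt-3.5-turbo) received 4 messages
print(len(demo_customer_history), len(demo_qa_history))
# 5 1
```

With the real SDK, you would replace FakeClient() with OpenAI() (imported via from openai import OpenAI), which reads OPENAI_API_KEY from the environment by default.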

If you are looking for a more optimized way to handle your interactions, I would definitely recommend using the langchain framework as we did in Building Context-Aware Chatbots: Leveraging LangChain Framework for ChatGPT.

If you are new to the OpenAI API, consider checking out the webinar on Getting Started with the OpenAI API and ChatGPT.

Now that we have identified the required building blocks, let’s put them together:

```python
# Define our sample product catalog (one JSON object per line)
product_information = """
{ "name": "UltraView QLED TV", "category": "Televisions and Home Theater Systems", "brand": "UltraView", "model_number": "UV-QLED65", "warranty": "3 years", "rating": 4.9, "features": [ "65-inch QLED display", "8K resolution", "Quantum HDR", "Dolby Vision", "Smart TV" ], "description": "Experience lifelike colors and incredible clarity with this high-end QLED TV.", "price": 2499.99 }
{ "name": "ViewTech Android TV", "category": "Televisions and Home Theater Systems", "brand": "ViewTech", "model_number": "VT-ATV55", "warranty": "2 years", "rating": 4.7, "features": [ "55-inch 4K display", "Android TV OS", "Voice remote", "Chromecast built-in" ], "description": "Access your favorite apps and content on this smart Android TV.", "price": 799.99 }
{ "name": "SlimView OLED TV", "category": "Televisions and Home Theater Systems", "brand": "SlimView", "model_number": "SL-OLED75", "warranty": "2 years", "rating": 4.8, "features": [ "75-inch OLED display", "4K resolution", "HDR10+", "Dolby Atmos", "Smart TV" ], "description": "Immerse yourself in a theater-like experience with this ultra-thin OLED TV.", "price": 3499.99 }
{ "name": "TechGen X Pro", "category": "Smartphones and Accessories", "brand": "TechGen", "model_number": "TG-XP20", "warranty": "1 year", "rating": 4.5, "features": [ "6.4-inch AMOLED display", "128GB storage", "48MP triple camera", "5G", "Fast charging" ], "description": "A feature-packed smartphone designed for power users and mobile enthusiasts.", "price": 899.99 }
{ "name": "GigaPhone 12X", "category": "Smartphones and Accessories", "brand": "GigaPhone", "model_number": "GP-12X", "warranty": "2 years", "rating": 4.6, "features": [ "6.7-inch IPS display", "256GB storage", "108MP quad camera", "5G", "Wireless charging" ], "description": "Unleash the power of 5G and high-resolution photography with the GigaPhone 12X.", "price": 1199.99 }
{ "name": "Zephyr Z1", "category": "Smartphones and Accessories", "brand": "Zephyr", "model_number": "ZP-Z1", "warranty": "1 year", "rating": 4.4, "features": [ "6.2-inch LCD display", "64GB storage", "16MP dual camera", "4G LTE", "Long battery life" ], "description": "A budget-friendly smartphone with reliable performance for everyday use.", "price": 349.99 }
{ "name": "PixelMaster Pro DSLR", "category": "Cameras and Camcorders", "brand": "PixelMaster", "model_number": "PM-DSLR500", "warranty": "2 years", "rating": 4.8, "features": [ "30.4MP full-frame sensor", "4K video", "Dual Pixel AF", "3.2-inch touchscreen" ], "description": "Unleash your creativity with this professional-grade DSLR camera.", "price": 1999.99 }
{ "name": "ActionX Waterproof Camera", "category": "Cameras and Camcorders", "brand": "ActionX", "model_number": "AX-WPC100", "warranty": "1 year", "rating": 4.6, "features": [ "20MP sensor", "4K video", "Waterproof up to 50m", "Wi-Fi connectivity" ], "description": "Capture your adventures with this rugged and versatile action camera.", "price": 299.99 }
{ "name": "SonicBlast Wireless Headphones", "category": "Audio and Headphones", "brand": "SonicBlast", "model_number": "SB-WH200", "warranty": "1 year", "rating": 4.7, "features": [ "Active noise cancellation", "50mm drivers", "30-hour battery life", "Comfortable earpads" ], "description": "Immerse yourself in superior sound quality with these wireless headphones.", "price": 149.99 }
"""

# Define a proper system message for our use case
customer_agent_sysmessage = f"""
You are a customer service agent that responds to \
customer questions about the products in the catalog. \
The product catalog will be delimited by 3 backticks, i.e. ```. \
Respond in a friendly and human-like tone giving details with \
the information available from the product catalog. \
Product catalog: ```{product_information}```
"""

# Initialize your model's memory
customer_agent_history = [{'role': 'system', 'content': customer_agent_sysmessage}]
```

As we can observe, we have defined a sample catalog (product_information) in JSON Lines format, that is, one JSON object per line, and a system message (customer_agent_sysmessage) with three requirements:

- Behave as a customer service agent.

- Respond in a friendly and human-like tone.

- Provide information from the catalog only.

Finally, we have also initialized the message history for the customer agent (customer_agent_history).

It is interesting to note that we have used delimiters, the triple backticks, to clearly separate the instructions in the system message from the additional information (the catalog). This is one of the best practices for prompting! If you are interested in more best practices, the webinar A Beginner's Guide to Prompt Engineering with ChatGPT is for you!
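Because each catalog entry sits on its own line, a quick sanity check before wiring the catalog into a prompt is to parse it with Python's json module. The sketch below uses a trimmed two-entry excerpt (fewer fields than the real catalog), and parse_catalog is a hypothetical helper, not part of the original code. It also lists the brands the agent is allowed to talk about.

```python
import json

# Trimmed excerpt of the catalog above, keeping the one-object-per-line layout
catalog_excerpt = """
{ "name": "UltraView QLED TV", "category": "Televisions and Home Theater Systems", "brand": "UltraView", "price": 2499.99 }
{ "name": "Zephyr Z1", "category": "Smartphones and Accessories", "brand": "Zephyr", "price": 349.99 }
"""


def parse_catalog(catalog_text):
    """Parse a JSON Lines string into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in catalog_text.splitlines() if line.strip()]


products = parse_catalog(catalog_excerpt)
brands = sorted({product["brand"] for product in products})
print(brands)               # ['UltraView', 'Zephyr']
print("Samsung" in brands)  # False
```

Running parse_catalog on the full product_information string should yield one dict per product; a malformed line raises json.JSONDecodeError, which is easier to spot here than through odd chatbot answers.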

At this point, we can start using our sample customer chatbot as follows:

```python
user_prompt = "Which is the best TV in your shop?"

customer_agent_response = gpt_call(user_prompt, customer_agent_history)

print(customer_agent_response)

# Output: In our shop, the UltraView QLED TV is considered to be the best TV. It has a rating of 4.9 and offers a 65-inch QLED display with 8K resolution. It also features Quantum HDR, Dolby Vision, and Smart TV capabilities. With its lifelike colors and incredible clarity, this high-end QLED TV delivers an immersive viewing experience. The UltraView QLED TV is priced at $2499.99 and comes with a warranty of 3 years.
```

That seems like a natural answer, right? Let's try a follow-up interaction:

```python
user_prompt = "I want to buy the latest iphone. Can you help me?"

customer_agent_response = gpt_call(user_prompt, customer_agent_history)

print(customer_agent_response)

# Output: Of course! I'd be happy to help you find the latest iPhone. However, as it appears to be missing from the product catalog, I'm unable to provide specific details on the latest iPhone model at this time. I recommend checking our website or contacting our store directly for the most up-to-date information on the latest iPhone models available. Our knowledgeable staff will be able to assist you in selecting the perfect iPhone that meets your needs and preferences.
```

Fair enough! The agent is able to identify that there is no iPhone in our product catalog and respond to the user accordingly. It even encourages the user to contact the shop!

Let’s try one more request:

```python
user_prompt = "Can you help me buy a Samsung TV?"

customer_agent_response = gpt_call(user_prompt, customer_agent_history)

print(customer_agent_response)

# Output: Absolutely! I'd be delighted to assist you in purchasing a Samsung TV. Could you please provide me with some specific requirements or preferences you have in mind? This way, I can recommend the best Samsung TV model that suits your needs.
```

So, in some cases, the agent may ignore the catalog: there is no Samsung TV in our catalog, yet the agent offers to recommend one. In those cases, the QA agent can help filter out the undesired responses.

Developing our custom QA agent

As we have already discussed, the purpose of the QA agent is to check the quality of the customer service agent's responses given the user query and the product catalog. Therefore, it is important to define a system message that sets this exact high-level behavior:

```python
qa_sysmessage = """
You are a quality assistant that evaluates whether \
a customer service agent answers the customer's \
questions correctly. \
You must also validate that the customer service agent \
provides information only from the product catalog \
of our store and gently refuses any other product outside the catalog. \
The customer message, the customer service agent's response, and \
the product catalog will be delimited by \
3 backticks, i.e. ```. \
Give a reason for your answer.
"""
```

In the case of the customer agent, the user prompt is unpredictable, since it depends on the user's needs and writing style. In the case of the QA agent, we are the ones in charge of passing the user request, the customer agent's response, and the product catalog to the model. Thus, our prompt will always have the same structure, with only the user query (user_prompt) and the model's response (customer_agent_response) varying:

```python
qa_prompt = f"""
Customer question: ```{user_prompt}```
Product catalog: ```{product_information}```
Agent response: ```{customer_agent_response}```
"""
```

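Because qa_prompt is an f-string, its placeholders are filled in once, at the moment it is defined, so it has to be rebuilt whenever user_prompt or customer_agent_response changes. With the system message and the QA prompt defined, we can test the QA agent on the latest customer service response as follows: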

```python
# Initialize the QA-agent memory
qa_history = [{'role': 'system', 'content': qa_sysmessage}]

# Call the QA agent with our templated query
qa_response = gpt_call(qa_prompt, qa_history)

print(qa_response)

# Output: Quality Check: Incorrect Response
#
# Feedback: The customer asked for help in buying a Samsung TV but the agent did not address that request. The agent should have mentioned that they only have access to the product catalog of our store and can provide information and recommendations based on that catalog. The agent should have gently refused any other product outside the catalog.
```

To evaluate the QA-agent response, let’s break down the interaction it will analyze:

- User request: “Can you help me buy a Samsung TV?”
- Customer agent response: “Absolutely! I'd be delighted to assist you in purchasing a Samsung TV. Could you please provide me with some specific requirements or preferences you have in mind? This way, I can recommend the best Samsung TV model that suits your needs.”
- QA agent: “Quality Check: Incorrect Response” followed by “Feedback: The customer asked for help in buying a Samsung TV but the agent did not address that request. The agent should have mentioned that they only have access to the product catalog of our store and can provide information and recommendations based on that catalog. The agent should have gently refused any other product outside the catalog.”

The quality agent is able to spot inappropriate responses from the customer agent!
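Before wiring the two agents together, it can help to turn the QA template into a function, so that every check uses a prompt built from the current question and answer rather than a stale one. The sketch below uses build_qa_prompt, a hypothetical helper that is not in the original code. It also replaces triple backticks inside the inserted texts with triple single quotes, on the assumption that a customer (or the agent) could otherwise type the delimiter and blur the boundaries between sections.

```python
def build_qa_prompt(user_prompt, product_information, agent_response):
    """Fill the QA template with the current question, catalog, and agent answer."""

    def clean(text):
        # Neutralize the delimiter so inserted text cannot close a section early
        return text.replace("```", "'''")

    return f"""
Customer question: ```{clean(user_prompt)}```
Product catalog: ```{clean(product_information)}```
Agent response: ```{clean(agent_response)}```
"""


prompt = build_qa_prompt("Can you help me buy a Samsung TV?", "{}", "Absolutely!")
print(prompt)
```

Calling build_qa_prompt right before each QA call makes sure the QA agent always judges the answer that was actually produced for the current question.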

Interaction Between the Agents

Now that we have the two agents working independently, it’s time to define the interaction between them.

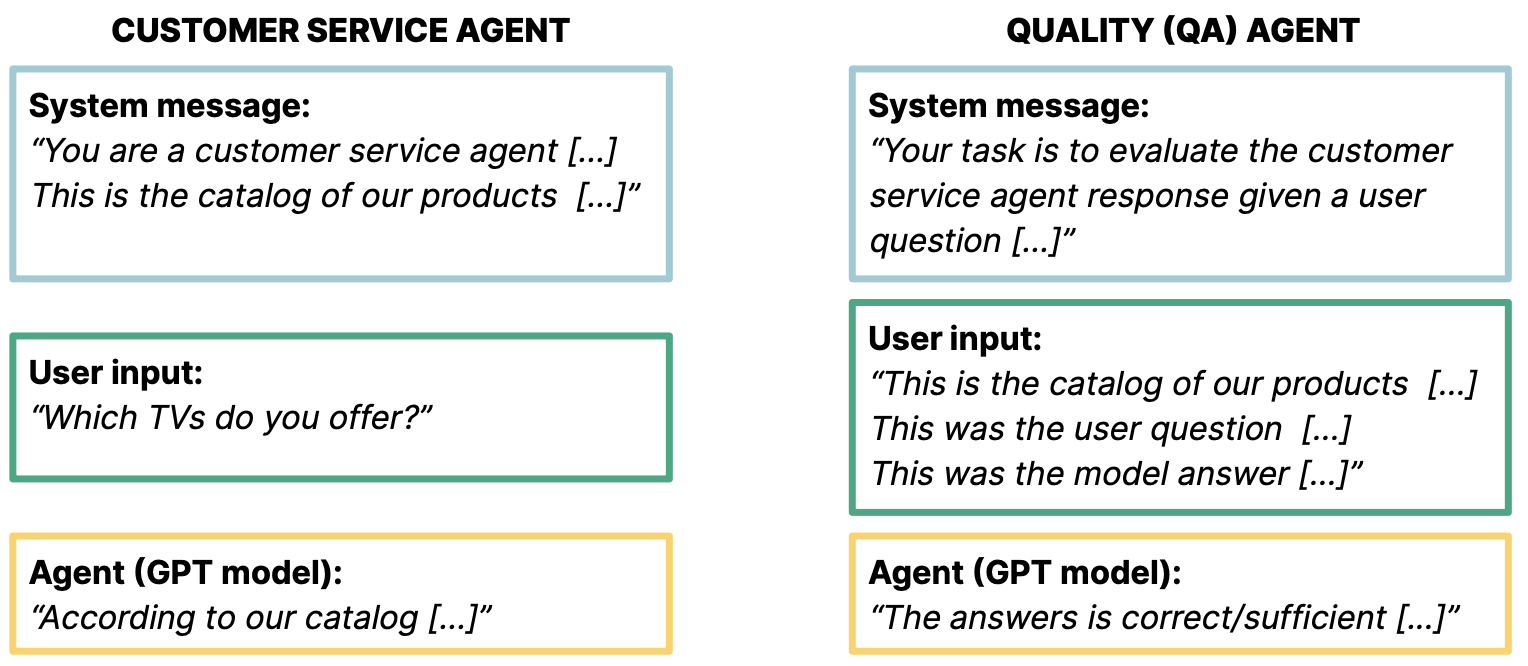

We can depict both agents and their requirements in a simple schema:

Self-made image. Representation of the three building blocks of each agent: system message (blue), model input (green), and model output (yellow).

What is next? Now, we need to implement the interaction between both models!

Here is a proposed idea for filtering inaccurate responses:

First, we let the customer agent generate a response to the user query. Then, if the QA agent considers the response good enough for the user query and consistent with the product catalog, we simply send the answer back to the user.

Otherwise, if the QA agent determines that the answer does not fulfill the user's request or contains information not supported by the catalog, we can ask the customer agent to improve the answer before sending it to the user.

Given this idea, we can extend the last part of our original schema as follows:

Self-made image. Representation of the extended moderation workflow. We can use the judgment of the QA agent to provide feedback to the LLM-based application.

Orchestrating the Agents’ Interaction

In order to use the QA agent as a filter, we need to make sure it answers in a consistent, easy-to-parse format on every call.

One way to achieve that is to slightly change the QA agent system message and ask it to output only True if the customer agent response is good enough or False otherwise:

```python
qa_sysmessage = """
You are a quality assistant that evaluates whether \
a customer service agent answers the customer's \
questions correctly. \
You must also validate that the customer service agent \
provides information only from the product catalog \
of our store and gently refuses any other product outside the catalog. \
The customer message, the customer service agent's response and \
the product catalog will be delimited by \
3 backticks, i.e. ```. \
Respond with True or False, with no punctuation: \
True - if the agent sufficiently answers the question \
AND the response correctly uses product information \
False - otherwise
"""
```

When we evaluate the latest customer agent response again, we now simply get a boolean-like answer (the text True or False):

```python
# Let's reinitialize the memory with the new system message
qa_history = [{'role': 'system', 'content': qa_sysmessage}]

qa_response = gpt_call(qa_prompt, qa_history)

print(qa_response)

# Output: False
```

We can then use this value to send the response to the user (if the QA agent answers True) or to give the customer agent a second chance to generate a new response (if it answers False).
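Because the QA agent returns text rather than a Python bool, it helps to convert its reply defensively: despite the instruction, a model may answer "False." or "false". The sketch below (parse_verdict is a hypothetical helper, not part of the original code) normalizes the reply and surfaces anything that is neither True nor False as an error instead of letting it pass silently.

```python
import string


def parse_verdict(qa_response):
    """Convert the QA agent's reply into a bool.

    Tolerates surrounding whitespace, quotes, trailing punctuation, and case
    differences; raises ValueError for any other reply.
    """
    token = qa_response.strip(string.whitespace + string.punctuation).lower()
    if token == "true":
        return True
    if token == "false":
        return False
    raise ValueError(f"Unexpected QA verdict: {qa_response!r}")


print(parse_verdict("True"))       # True
print(parse_verdict(" false.\n"))  # False
```

Raising on unexpected replies, rather than defaulting to True, means a malformed verdict never lets an unchecked answer through; the caller can catch ValueError and treat it like a False.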

Demonstrating the agents’ interaction

Let’s put everything together!

Given that we have already initialized the two memories, each with its respective system message and additional information, each customer request can be processed as follows:

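```python
user_prompt = "Can you help me buy a Samsung TV?"

# The customer agent answers the user
customer_agent_response = gpt_call(user_prompt, customer_agent_history)

# Rebuild the QA prompt so that it contains the new response
qa_prompt = f"""
Customer question: ```{user_prompt}```
Product catalog: ```{product_information}```
Agent response: ```{customer_agent_response}```
"""

# The QA agent replies with True or False
qa_response = gpt_call(qa_prompt, qa_history)

if qa_response.strip() == "False":
    print("The chatbot response is not sufficient!")
else:
    print("Sending response to user...")
    print(customer_agent_response)
```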

As described above, we have filtered the responses based on their correctness. I leave it to you to decide what to do with unsuitable responses. We have proposed sending feedback to the customer agent and asking it to retry, but what about asking the QA agent for a better response instead? There are multiple possibilities!
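One way to implement the retry idea is a bounded loop that feeds the rejection back to the customer agent and falls back to a canned reply if every attempt fails. This is a sketch under the assumption that both agents are wrapped in plain functions; moderated_answer, answer_fn, and judge_fn are hypothetical names, and the fallback text is a placeholder you would adapt.

```python
def moderated_answer(user_prompt, answer_fn, judge_fn, max_retries=2,
                     fallback="Sorry, I can only help with products from our catalog."):
    """Return a QA-approved answer, retrying with feedback up to max_retries times.

    answer_fn(prompt) -> str           hypothetical wrapper around the customer agent
    judge_fn(question, answer) -> str  hypothetical wrapper around the QA agent,
                                       expected to reply "True" or "False"
    """
    prompt = user_prompt
    for _ in range(max_retries + 1):
        answer = answer_fn(prompt)
        # Normalize the verdict so "True", "true" or "True." are all accepted
        verdict = judge_fn(user_prompt, answer).strip().rstrip(".").lower()
        if verdict == "true":
            return answer
        # Rejected: tell the customer agent why and let it try again
        prompt = (
            f"Your previous answer to the customer question '{user_prompt}' "
            "was rejected by quality control. Please answer again using only "
            "products from the catalog and politely decline anything else."
        )
    # Every attempt was rejected: fall back to a safe canned reply
    return fallback
```

With this article's objects, answer_fn could call gpt_call with customer_agent_history, and judge_fn could build the QA prompt from the question, the catalog, and the answer, then call gpt_call with a fresh [{'role': 'system', 'content': qa_sysmessage}] list each time, so earlier verdicts do not influence new ones. Capping max_retries keeps latency and API cost bounded.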

Conclusion

In this article, we have explored the potential of using GPT models as moderators for other instances of the same model. Through a simple customer service example, we have shown that the same capabilities that led us to use LLMs in our applications can also help those applications improve the accuracy and integrity of user interactions.

Contrary to a common misconception, adding a moderation layer does not necessarily increase the complexity of our application. As we have shown, it can sometimes be achieved with a few well-engineered lines of code while significantly improving the application's functionality.

In today's AI-driven world, responsible LLM moderation is imperative. It's not just a choice but an ethical obligation. By integrating AI moderation, we help ensure our applications are not only powerful but also reliable and ethical. Let's move forward with responsible development so that we can keep benefiting from AI while upholding accuracy.

Many thanks for reading! If you liked the topic of AI moderation, I encourage you to continue with Promoting Responsible AI: Content Moderation in ChatGPT as a follow-up material!

Andrea Valenzuela is currently working on the CMS experiment at CERN's particle accelerator in Geneva, Switzerland. She has six years of expertise in data engineering and analysis, and her duties include data analysis and software development. She is now working towards democratizing the learning of data-related technologies through the Medium publication ForCode'Sake.

She holds a BS in Engineering Physics from the Polytechnic University of Catalonia, as well as an MS in Intelligent Interactive Systems from Pompeu Fabra University. Her research experience includes professional work with previous OpenAI algorithms for image generation, such as Normalizing Flows.