Many organizations today want to use data to guide their decisions, but need help managing their growing data sources. More importantly, when they can't transform their raw data into usable formats, they may have poor data availability, which can hinder the development of a data culture.

ETL (Extract, Transform, Load) tools are an important part of solving these problems. There are many different ETL tools to choose from, which gives companies the power to select the best option. However, reviewing all the available options can be time-consuming.

In this post, we’ve compiled a top 24 ETL tools list, detailing some of the best options on the market.

For a structured introduction to ETL, check out this course on ETL and ELT in Python.

Become a Data Engineer

What Is ETL?

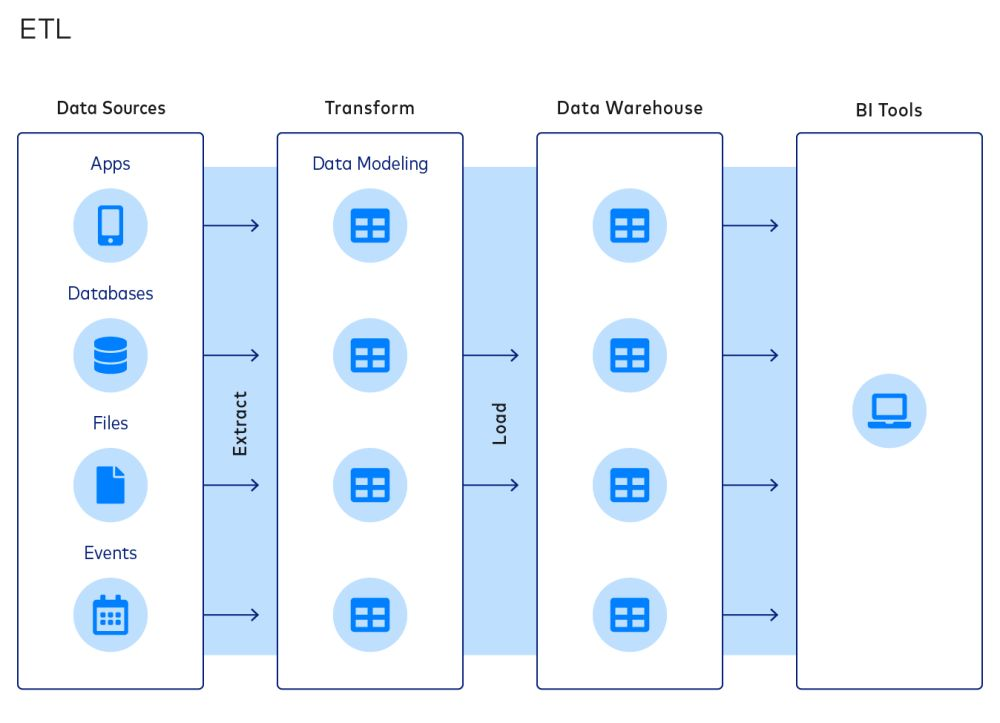

ETL is a common approach to integrating data and organizing data stacks. A typical ETL process comprises the following stages:

- Extracting data from sources

- Transforming data into data models

- Loading data into data warehouses

The ETL paradigm is popular because it allows companies to reduce the size of their data warehouses, which can save on computation, storage, and bandwidth costs.

However, these cost savings are becoming less important as these constraints disappear. As a result, ELT (Extract, Load, Transform) is becoming more popular. In the ELT process, data is loaded to a destination after extraction, and transformation is the final step in the process. Despite this, many companies still rely on ETL.

What Are ETL Tools?

Just as the name suggests, ETL tools are a set of software tools that are used to extract, transform, and load data from one or more sources into a target system or database. ETL tools are designed to automate and simplify the process of extracting data from various sources, transforming it into a consistent and clean format, and loading it into the target system in a timely and efficient manner. In the next section, we’ll see key considerations data teams should apply when considering an ETL tool.

Considerations of ETL Tools

Here are three key considerations to take into account when selecting an ETL tool:

- The extent of data integration. ETL tools can connect to a variety of data sources and destinations. Data teams should opt for ETL tools that offer a wide range of integrations. For example, teams who want to move data from Google Sheets to Amazon Redshift should select ETL tools that support such connectors.

- Level of customizability. Companies should choose their ETL tools based on their requirements for customizability and technical expertise of its IT team. A start-up might find built-in connectors and transformations in most ETL tools sufficient; a large enterprise with bespoke data collection will probably need the flexibility to craft bespoke transformations with the help of a strong team of engineers.

- Cost structure. When choosing an ETL tool, organizations should consider not only the cost of the tool itself but also the costs of the infrastructure and human resources needed to maintain the solution over the long term. In some cases, an ETL tool with a higher upfront cost but lower downtime and maintenance requirements may be more cost-effective in the long run. Conversely, there are free, open-source ETL tools that can have high maintenance costs.

Some other considerations include:

- The level of automation provided

- The level of security and compliance

- The performance and reliability of the tool.

The Top 24 ETL Tools

With those considerations in mind, we present the top 24 ETL tools available in the market in 2025. Note that the tools are not ordered by quality, as different tools have different strengths and weaknesses.

1. Apache Airflow

Apache Airflow is an open-source platform to programmatically author, schedule, and monitor workflows. The platform features a web-based user interface and a command-line interface for managing and triggering workflows.

Workflows are defined using directed acyclic graphs (DAGs), which allow for clear visualization and management of tasks and dependencies. Airflow also integrates with other tools commonly used in data engineering and data science, such as Apache Spark and Pandas.

Companies using Airflow can benefit from its ability to scale and manage complex workflows, as well as its active open-source community and extensive documentation. You can learn about Airflow in the following DataCamp course.

2. Databricks Delta Live Tables

Databricks Delta Live Tables (DLT) is an ETL framework built on top of Apache Spark that automates data pipelines (creating them and managing them). It allows data teams to build reliable, maintainable, and declarative pipelines with minimal effort.

Delta Live Tables simplifies ETL by using a declarative approach: users define the what (the transformations and dependencies), and the system handles the how (execution, optimization, and recovery).

A major strength of DLT lies in its ability to ensure data quality and reliability. Built-in expectations let users define data quality rules that validate records in real time. Failed records can be quarantined for later review.

3. Portable.io

Portable.io describes itself as "the first ELT platform to build connectors on-demand for data teams." True to this mission, the team at Portable builds custom no-code integrations, ingesting data from SaaS providers and many other data sources that might not be supported because they are overlooked by other ETL providers. Potential customers can see for themselves their extensive connector catalog of over 1,300 hard-to-find ETL connectors.

Portable operates from the belief that companies should have data from every business application at their fingertips with no code. The team at Portable has created a product that enables efficient and timely data management and offers robust scalability and high performance. Additionally, it has cost-effective pricing to accommodate businesses of all sizes and advanced security features to ensure data protection and compliance with common standards.

4. IBM Infosphere Datastage

Infosphere Datastage is an ETL tool offered by IBM as part of its Infosphere Information Server ecosystem. With its graphical framework, users can design data pipelines that extract data from multiple sources, perform complex transformations, and deliver the data to target applications.

IBM Infosphere is known for its speed, thanks to features like load balancing and parallelization. It also supports metadata, automated failure detection, and a wide range of data services, from data warehousing to AI applications.

Like other enterprise ETL tools, Infosphere Datastage offers a range of connectors for integrating different data sources. It also integrates seamlessly with other components of the IBM Infosphere Information Server, allowing users to develop, test, deploy, and monitor ETL jobs.

5. Oracle Data Integrator

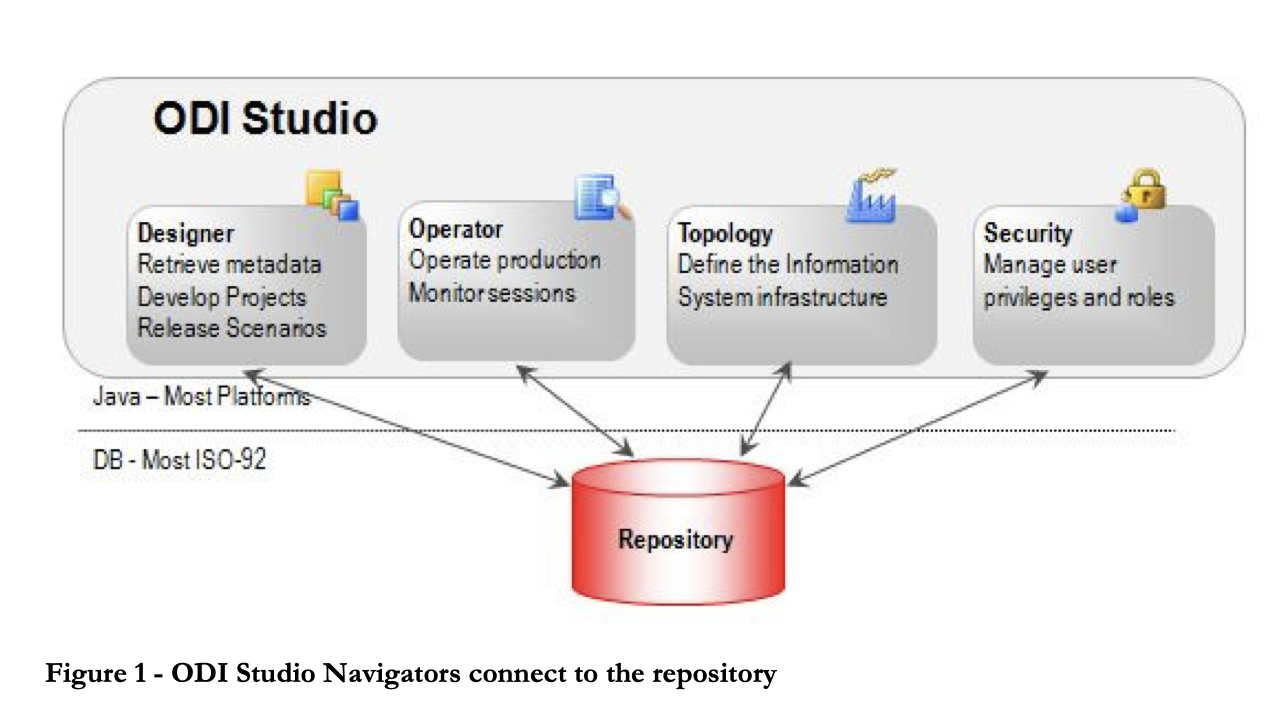

Oracle Data Integrator is an ETL tool that helps users build, deploy, and manage complex data warehouses. It comes with out-of-the-box connectors for many databases, including Hadoop, EREPs, CRMs, XML, JSON, LDAP, JDBC, and ODBC.

ODI includes Data Integrator Studio, which provides business users and developers with access to multiple artifacts through a graphical user interface. These artifacts offer all the elements of data integration, from data movement to synchronization, quality, and management.

6. Microsoft SQL Server Integration Services (SSIS)

SSIS is an enterprise-level platform for data integration and transformation. It comes with connectors for extracting data from sources like XML files, flat files, and relational databases. Practitioners can use SSIS designer’s graphical user interface to construct data flows and transformations.

The platform includes a library of built-in transformations that minimize the amount of code required for development. SSIS also offers comprehensive documentation for building custom workflows. However, the platform's steep learning curve and complexity may discourage beginners from quickly creating ETL pipelines.

7. Talend Open Studio (TOS)

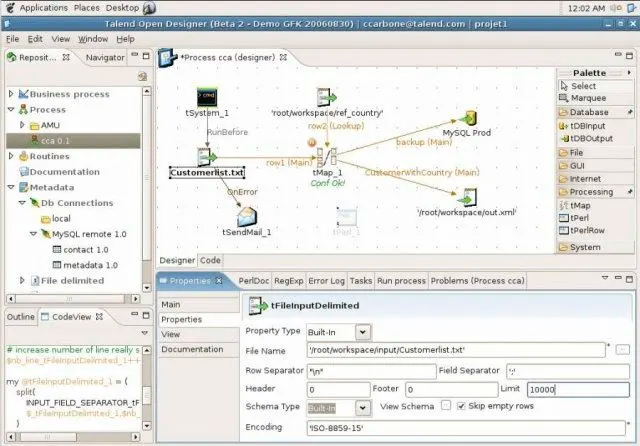

Talend Open Studio is a popular open-source data integration software that features a user-friendly GUI. Users can drag and drop components, configure them, and connect them to create data pipelines. Behind the scenes, Open Studio converts the graphical representation into Java and Perl code.

As an open-source tool, TOS is an affordable option with a wide variety of data connectors, including RDBMS and SaaS connectors. The platform also benefits from an active open-source community that regularly contributes to documentation and provides support.

8. Pentaho Data Integration (PDI)

Pentaho Data Integration (PDI) is an ETL tool offered by Hitachi. It captures data from various sources, cleans it, and stores it in a uniform and consistent format.

Formerly known as Kettle, PDI features multiple graphical user interfaces for defining data pipelines. Users can design data jobs and transformations using the PDI client, Spoon, and then run them using Kitchen. For example, the PDI client can be used for real-time ETL with Pentaho Reporting.

9. Hadoop

Hadoop is an open-source framework for processing and storing big data in clusters of computer servers. It is considered the foundation of big data and enables the storage and processing of large amounts of data.

The Hadoop framework consists of several modules, including the Hadoop Distributed File System (HDFS) for storing data, MapReduce for reading and transforming data, and YARN for resource management. Hive is commonly used to convert SQL queries into MapReduce operations.

Companies considering Hadoop should be aware of its costs. A significant portion of the cost of implementing Hadoop comes from the computing power required for processing and the expertise needed to maintain Hadoop ETL, rather than the tools or storage themselves.

10. AWS Glue

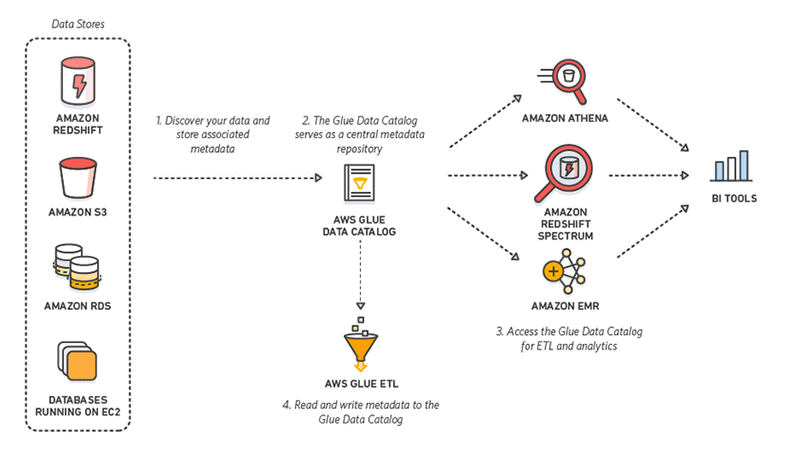

AWS Glue is a serverless ETL tool offered by Amazon. It discovers, prepares, integrates, and transforms data from multiple sources for analytics use cases. With no requirement to set up or manage infrastructure, AWS Glue promises to reduce the hefty cost of data integration.

Better yet, when interacting with AWS Glue, practitioners can choose between a drag-and-down GUI, a Jupyter notebook, or Python/Scala code. AWS Glue also offers support for various data processing and workloads that meet different business needs, including ETL, ELT, batch, and streaming.

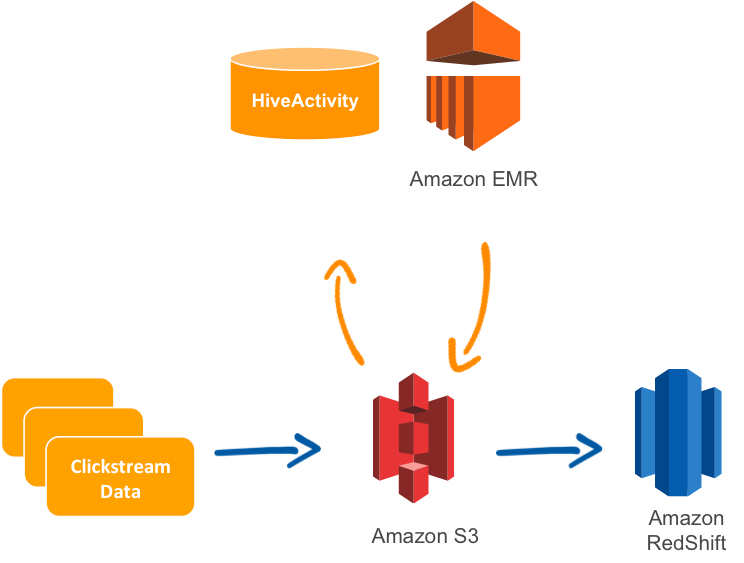

11. AWS Data Pipeline

AWS’s Data Pipeline is a managed ETL service that enables the movement of data across AWS services or on-premise resources. Users can specify the data to be moved, transformation jobs or queries, and a schedule for performing the transformations.

Data Pipeline is known for its reliability, flexibility, and scalability, as well as its fault-tolerance and configurability. The platform also features a drag-and-drop console for ease of use. Additionally, it is relatively inexpensive.

A common use case for AWS Data Pipeline is replicating data from Relational Database Service (RDS) and loading it onto Amazon Redshift.

However, it’s important to note that AWS is gradually shifting focus away from AWS Data Pipeline in favor of more modern solutions such as AWS Glue. AWS Glue offers serverless, automated data integration with support for both batch and streaming workloads. Additionally, AWS is exploring the zero-ETL concept, where services like Amazon Aurora and Amazon Redshift can integrate without requiring traditional ETL pipelines. As these advancements continue, AWS Data Pipeline is expected to be phased out, encouraging users to adopt more innovative and efficient solutions within the AWS ecosystem.

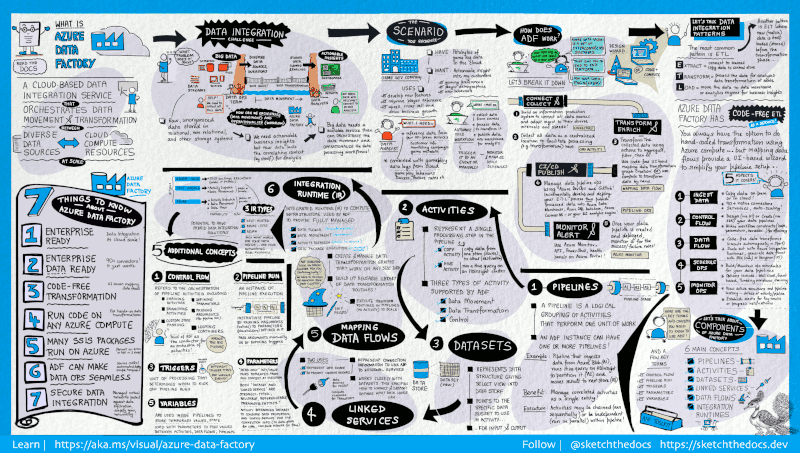

12. Azure Data Factory

Azure Data Factory is a cloud-based ETL service offered by Microsoft used to create workflows that move and transform data at scale.

It comprises a series of interconnected systems. Together, these systems allow engineers to not only ingest and transform data but also design, schedule, and monitor data pipelines.

The strength of Data Factory lies in the sheer number of its available connectors, from MySQL to AWS, MongoDB, Salesforce, and SAP. It is also lauded for its flexibility; users can choose to interact with either a no-code graphical user interface or a command-line interface.

13. Google Cloud Dataflow

Dataflow is the serverless ETL service offered by Google Cloud. It allows for both stream and batch data processing and does not require companies to own a server or cluster. Instead, users only pay for the resources consumed, which scale automatically based on requirements and workload.

Google Dataflow executes Apache Beam pipelines within the Google Cloud Platform ecosystem. Apache offers Java, Python, and Go SDKs for representing and transferring data sets, both batch and streaming. This allows users to choose the appropriate SDK for defining their data pipelines.

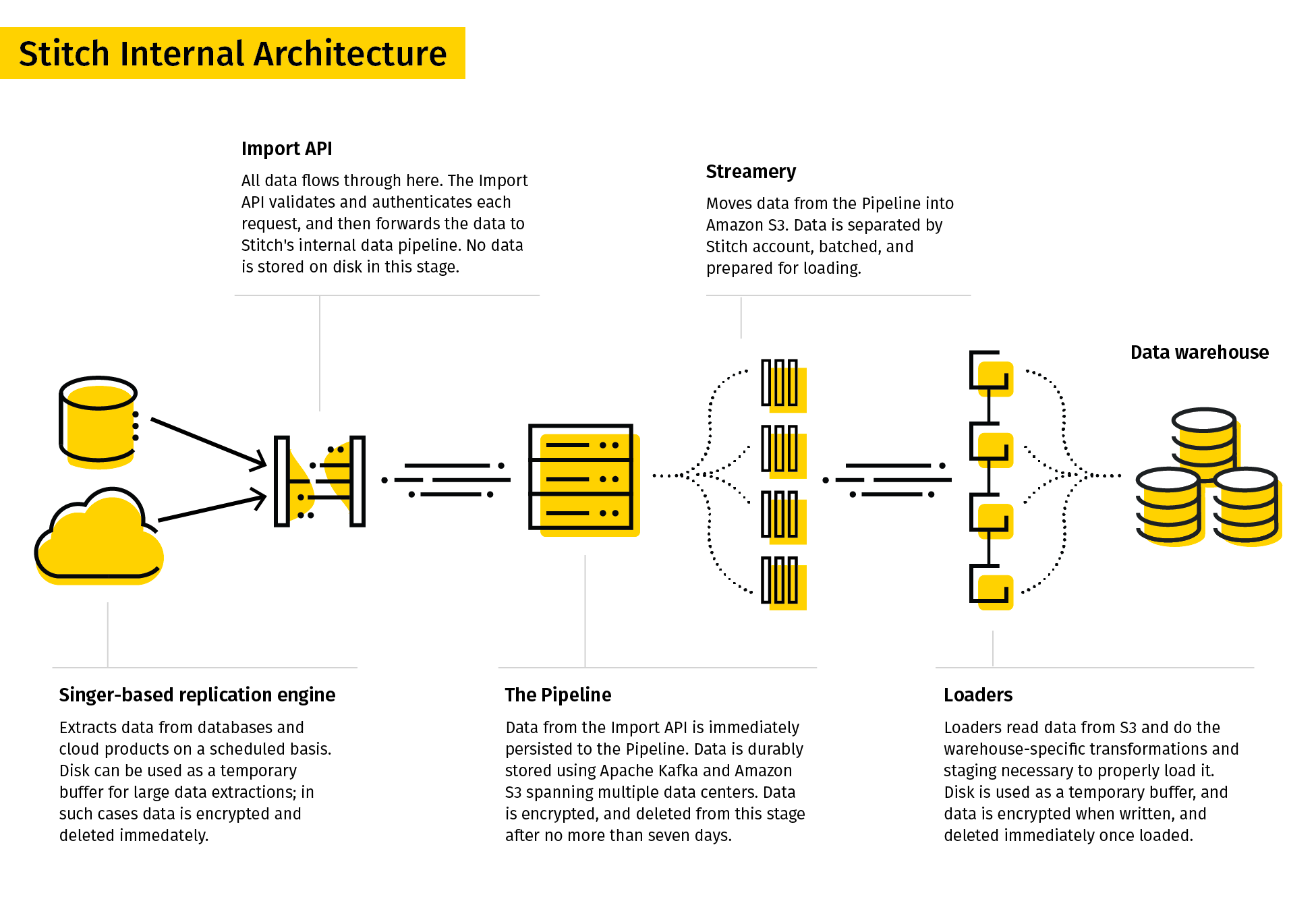

14. Stitch

Stitch describes itself as a simple, extensible ETL tool built for data teams.

Stitch’s replication process extracts data from various data sources, transforms it into a useful raw format, and loads it into the destination. Its data connectors included databases and SaaS applications. Destinations can include data lakes, data warehouses, and storage platforms.

Given its simplicity, Stitch only supports simple transformations and not user-defined transformations.

15. SAP BusinessObjects Data Services

SAP BusinessObjects Data Services is an enterprise ETL tool that allows users to extract data from multiple systems, transform it, and load it into data warehouses.

The Data Services Designer provides a graphical user interface for defining data pipelines and specifying data transformations. Rules and metadata are stored in a repository, and a job server runs the job in batch or real time.

However, SAP data services can be expensive, as the cost of the tool, server, hardware, and engineering team can quickly add up.

SAP Data Services is a good fit for companies that use SAP as their Enterprise Resource Planning (ERP) system, as it integrates seamlessly with SAP Data Services

16. Hevo

Hevo is a data integration platform for ETL and ELT that comes with over 150 connectors for extracting data from multiple sources. It is a low-code tool, making it easy for users to design data pipelines without needing extensive coding experience.

Hevo offers a range of features and benefits, including real-time data integration, automatic schema detection, and the ability to handle large volumes of data. The platform also comes with a user-friendly interface and 24/7 customer support.

17. Qlik Compose

Qlik Compose is a data warehousing solution that automatically designs data warehouses and generates ETL code. This tool automates tedious and error-prone ETL development and maintenance. This shortens the lead time of data warehousing projects.

To do so, Qlik Compose runs the auto-generated code, which loads data from sources and moves them to their data warehouses. Such workflows can be designed and scheduled using the Workflow Designer and Scheduler.

Qlik Compose also comes with the ability to validate the data and ensure data quality. Practitioners who need data in real-time can also integrate Compose with Qlik Replicate.

18. Integrate.io

Integrate.io, formerly known as Xplenty, earns a well-deserved spot on our list of top ETL tools. Its user-friendly, intuitive interface opens the door to comprehensive data management, even for team members with less technical know-how. As a cloud-based platform, Integrate.io removes the need for any bulky hardware or software installations and provides a highly scalable solution that evolves with your business needs.

Its ability to connect with a wide variety of data sources, from databases to CRM systems, makes it a versatile choice for diverse data integration requirements. Prioritizing data security, it offers features like field-level encryption and is compliant with key standards like GDPR and HIPAA. With powerful data transformation capabilities, users can easily clean, format, and enrich their data as part of the ETL process.

19. Airbyte

Airbyte is a leading open-source ELT platform. Airbyte offers the largest catalog of data connectors—350 and growing—and has more than 40,000 data engineers using it.

Airbyte integrates with dbt for its data transformation and Airflow / Prefect / Dagster for orchestration. It has an easy-to-use user interface and has an API and Terraform Provider available.

Airbyte differentiates itself by its open-sourceness; it takes 20 minutes to create a new connector with their no-code connector builder, and you can edit any off-the-shelf connector, given you have access to their code. In addition to its open-source version, Airbyte offers both a cloud-hosted (Airbyte Cloud) and a paid self-hosted version (Airbyte Enterprise) for when you want to productionalize your pipelines.

20. Astera Centerprise

Astera Centerprise is an enterprise-grade, 100% code-free ETL/ELT tool. As part of the Astera Data Stack, Centerprise features an intuitive and user-friendly interface that comes with a short learning curve and allows users of all technical levels to build data pipelines within minutes.

The automated data integration tool offers a range of capabilities, such as out-of-the box connectivity to several data sources and destinations, AI-powered data extraction, AI auto mapping, built-in advanced transformations, and data quality features. Users can easily extract unstructured and structured data, transform it, and load it into the destination of their choice using dataflows. These dataflows can be automated to run at specific intervals, conditions, or file drops using the built-in job scheduler.

21. Informatica PowerCenter

Informatica PowerCenter is one of the best ETL tools on the market. It has a wide range of connectors for cloud data warehouses and lakes, including AWS, Azure, Google Cloud, and SalesForce. Its low- and no-code tools are designed to save time and simplify workflows.

Informatica PowerCenter includes several services that allow users to design, deploy, and monitor data pipelines. For example, the Repository Manager helps with user management, the Designer allows users to specify the flow of data from source to target, and the Workflow Manager defines the sequence of tasks.

22. Estuary

Estuary is a cutting-edge real-time data integration platform that simplifies the creation and management of data pipelines. Designed to handle both batch and streaming data, Estuary enables you to build robust ETL workflows. Its intuitive user interface makes it accessible for both technical and non-technical users, allowing teams to focus on deriving value from their data rather than grappling with complex configurations.

The platform’s automation capabilities stand out, automatically managing schema evolution and adapting to changing data structures with ease. With integration into a wide range of data sources and destinations, Estuary caters to teams seeking real-time analytics, whether for monitoring sales trends in e-commerce or analyzing sensor data in IoT applications.

23. Fivetran

Fivetran has emerged as a leading ETL solution for fully automated data integration, enabling companies to centralize their data. By leveraging a library of pre-built connectors, Fivetran minimizes setup time, connecting databases, SaaS applications, and event streams to cloud data warehouses. The platform shines in its ability to handle schema changes automatically, ensuring that data flows smoothly even as source systems evolve.

With real-time replication capabilities, Fivetran supports near-instant data availability. Optimized for cloud-native environments like Snowflake, BigQuery, and Redshift, Fivetran is a go-to choice for teams looking to simplify data pipelines while maintaining scalability. It is particularly valuable for marketing and sales teams that need to integrate diverse data sources into unified analytics dashboards.

24. Matillion

Matillion is a cloud-native ETL tool designed to transform data directly within cloud data warehouses. Tailored for platforms like Snowflake, AWS Redshift, Google BigQuery, and Azure Synapse, Matillion provides a way to perform data transformations at scale. Its visual interface makes it easy for users to design workflows through a drag-and-drop environment, while more advanced users can leverage SQL-based transformations to handle complex data tasks.

With its focus on scalability and performance, Matillion is well-suited for teams that need to process large-scale transformations efficiently. From creating detailed customer 360 views to optimizing supply chain analytics, Matillion empowers data professionals to unlock the full potential of their cloud-based data infrastructures without the typical bottlenecks of traditional ETL processes.

Top ETL Tools Comparison

The following table compares the ETL tools mentioned side by side on many different categories:

| ETL tool | Open-source availability | Cloud compatibility | Ease of use | Number of integrations | Features and considerations | Ideal use case |

|---|---|---|---|---|---|---|

| Apache Airflow | Yes | Yes | Moderate | High | DAG-based workflow, scalability, extensive community support | Complex workflows and orchestrating large-scale, multi-step data pipelines |

| Databricks Delta Live Tables | No | Yes | High | High | Decarative pipeline design, automatic dependency management, built-in data quality checks | Enterprises using the Databricks Lakehouse seeking automated, reliable ETL with integrated data quality and real-time processing |

| Portable.io | No | Yes | High | Very High | On-demand connectors, no-code, cost-effective pricing | Small to mid-sized companies needing custom connectors for less common data sources |

| IBM Infosphere Datastage | No | Yes | Moderate | High | High-speed processing, metadata support, enterprise-grade | Enterprises with diverse and high-volume data pipelines requiring robust metadata management |

| Oracle Data Integrator | No | Yes | Moderate | High | Extensive connectors, graphical interface, robust management | Companies using Oracle ecosystems or those needing extensive database support |

| Microsoft SSIS | No | Limited | Moderate | Moderate | Built-in transformations, comprehensive documentation | Organizations already invested in Microsoft SQL Server |

| Talend Open Studio | Yes | Yes | High | High | Open-source, user-friendly GUI, active community | Small to mid-sized companies looking for an open-source ETL solution with extensive integration options |

| Pentaho Data Integration | Yes | Yes | Moderate | High | Real-time ETL, graphical interface, Spoon/Kitchen clients | Real-time ETL processing for companies needing flexible, GUI-based workflows |

| Hadoop | Yes | Yes | Low | High | Big data processing, HDFS, MapReduce, high implementation cost | Large enterprises handling massive datasets and requiring distributed data storage and processing |

| AWS Glue | No | Yes | High | High | Serverless, Python/Scala support, flexible processing workloads | Cloud-native companies needing serverless ETL for structured and unstructured data |

| AWS Data Pipeline | No | Yes | High | Moderate | Managed service, fault-tolerant, inexpensive | Basic ETL processes within AWS (but transitioning users should explore AWS Glue or zero-ETL solutions) |

| Azure Data Factory | No | Yes | High | Very High | Many connectors, flexible interfaces, cloud-based | Enterprises with diverse data sources using Microsoft’s Azure ecosystem |

| Google Cloud Dataflow | No | Yes | High | High | Serverless, Apache Beam integration, cost-efficient | Stream or batch data processing within the Google Cloud ecosystem |

| Stitch | No | Yes | High | Moderate | Simple transformations, SaaS connectors, user-friendly | Startups and small teams focusing on simple data replication to data warehouses |

| SAP BusinessObjects | No | Yes | Moderate | High | Enterprise-grade, integrates with SAP, expensive | SAP ERP system users looking for seamless integration |

| Hevo | No | Yes | High | High | Low-code, real-time integration, automatic schema detection | Small to mid-sized businesses needing real-time analytics |

| Qlik Compose | No | Yes | Moderate | High | Automates ETL development, real-time integration with Qlik Replicate | Businesses requiring automated ETL pipelines and integration with Qlik Replicate |

| Integrate.io | No | Yes | High | High | Intuitive interface, no hardware needed, strong security features | Companies prioritizing ease of use and data security |

| Airbyte | Yes | Yes | High | Very High | Open-source, easy connector creation, integrates with dbt | Organizations looking for open-source, customizable ELT solutions |

| Astera Centerprise | No | Yes | High | High | Code-free, AI-powered data extraction, user-friendly | Enterprises seeking no-code ETL tools with AI-powered automation |

| Informatica PowerCenter | No | Yes | High | Very High | Low/no-code tools, wide range of connectors, enterprise-grade | Enterprises handling complex data pipelines with extensive connector needs |

| Estuary | No | Yes | High | Moderate | Real-time data integration, automation, schema evolution, batch and streaming support | Businesses needing real-time analytics for IoT or e-commerce data |

| Fivetran | No | Yes | High | Very High | Automated schema updates, pre-built connectors, real-time replication, optimized for cloud | Companies needing automated, reliable data replication with minimal manual intervention |

| Matillion | No | Yes | High | High | Cloud-native, drag-and-drop interface, complex SQL transformations, scales with cloud infrastructures | Teams maximizing cloud-based data transformation workflows |

Enhancing Your Team's ETL Expertise

As data becomes central to business operations, effective ETL processes are crucial. To stay competitive, it's vital to continuously enhance your team's skills in data engineering and management. DataCamp for Business offers tailored solutions to help organizations upskill their employees, ensuring they are well-equipped to handle the complexities of modern data analytics. With DataCamp for business, your team can access:

- Focused learning paths: Provide your team with targeted training on ETL tools like Apache Airflow, AWS, and more to improve their ability to design and manage efficient data pipelines.

- Practical experience: Encourage hands-on projects that reflect your organization’s data challenges, helping your team build the confidence and expertise needed to handle complex data tasks.

- Scalable training solutions: Choose scalable training platforms that offer a range of resources in ETL and data management, ensuring your team can adapt as your organization grows.

- Progress tracking: Utilize tools to monitor your team’s development, providing regular feedback to ensure continuous improvement.

Investing in your data team’s skills not only enhances ETL efficiency but also drives better data strategies, contributing to your organization’s success. Request a demo today to learn more.

Additional Resources

In conclusion, there are many different ETL and data integration tools available, each with its own unique features and capabilities. Some popular options include SSIS, Talend Open Studio, Pentaho Data Integration, Hadoop, Airflow, AWS Data Pipeline, Google Dataflow, SAP BusinessObjects Data Services, and Hevo. Companies considering these tools should carefully evaluate their specific requirements and budget to choose the right solution for their needs. For more resources on ETL tools and more, check out the following links:

Get certified in your dream Data Engineer role

Our certification programs help you stand out and prove your skills are job-ready to potential employers.

FAQs

What is ETL?

ETL stands for Extract, Transform, Load. It is a process of extracting data from various sources, transforming it into a useful format, and loading it into a destination system, such as a data warehouse.

What are the benefits of using ETL tools?

ETL tools automate the data integration process, reducing the time and effort required to build and maintain data pipelines. They also help ensure data accuracy and consistency, improve data quality, and enable faster decision-making.

What are some common use cases for ETL?

Common use cases for ETL include data warehousing, business intelligence, data migration, data integration, and data consolidation.

What are some popular ETL tools?

Some popular ETL tools include IBM Infosphere Information Server, Oracle Data Integrator, Microsoft SQL Server Integration Services (SSIS), Talend Open Studio, Pentaho Data Integration (PDI), Hadoop, AWS Glue, AWS Data Pipeline, Azure Data Factory, Google Cloud Dataflow, Stitch, SAP BusinessObjects Data Services, and Hevo.

What should I consider when choosing an ETL tool?

When choosing an ETL tool, consider factors such as your specific requirements, budget, data sources and destinations, scalability, ease of use, and available support and documentation.

Are there any open-source ETL tools available?

Yes, there are several open-source ETL tools available, including Talend Open Studio, Pentaho Data Integration (PDI), and Apache NiFi.

What is the difference between ETL and ELT?

ETL stands for Extract, Transform, Load, while ELT stands for Extract, Load, Transform. In ETL, data is transformed before it is loaded into the destination system, while in ELT, data is loaded into the destination system first and then transformed.

Can I use ETL tools for real-time data integration?

Yes, some ETL tools, such as AWS Glue and Hevo, support real-time data integration.

What are some best practices for ETL development?

Some best practices for ETL development include designing for scalability, optimizing data quality and performance, testing and debugging thoroughly, and documenting the ETL process and data lineage.