
The rise of large language models (LLMs) has been a defining trend in the realm of Natural Language Processing (NLP), leading to their widespread adoption across various applications. However, this advancement has often been exclusive, with most LLMs developed by resource-rich organizations remaining inaccessible to the public.

This exclusivity poses a significant question: What if there was a way to democratize access to these powerful language models? This is where BLOOM enters the scene.

This article gives a complete overview of BLOOM. It starts with the model's genesis, then walks through its technical specifications and how to use it, and closes with its limitations and ethical considerations.

What is BLOOM?

The BigScience Large Open-science Open-access Multilingual Language Model (BLOOM for short) represents a considerable advance in democratizing language model technology.

Developed collaboratively by over 1200 participants from 38 countries, with the largest share coming from the United States, BLOOM is the product of a global effort. Coordinated by BigScience in collaboration with Hugging Face and the French NLP community, this project transcends geographical and institutional boundaries.

It is an open-access, decoder-only transformer model with 176B parameters, trained on the ROOTS corpus, a dataset drawn from hundreds of sources in 59 languages: 46 natural languages and 13 programming languages.

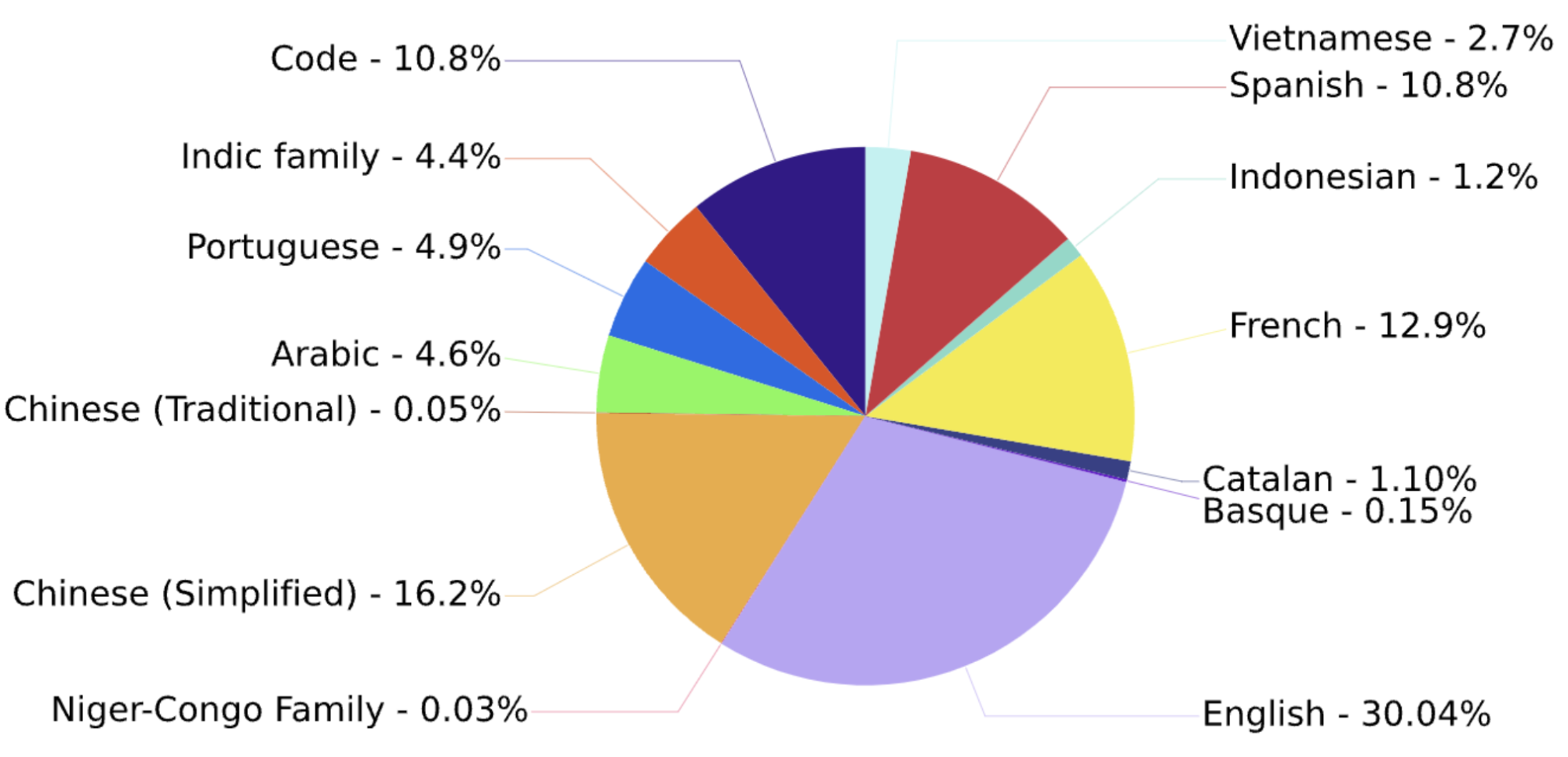

Below is the piechart of the distribution of the training languages.

Distribution of the training languages (source)

BLOOM achieves competitive performance on a wide variety of benchmarks, and its results improve further after multitask prompted finetuning.

The project culminated in a 117-day training run (March 11 to July 6, 2022) on the Jean Zay supercomputer in Paris, backed by a substantial compute grant from the French research agencies CNRS and GENCI.

BLOOM stands not only as a technological marvel but also as a symbol of international cooperation and the power of collective scientific pursuit.

BLOOM model architecture

Now, let's look at BLOOM's architecture in more detail. It involves multiple components.

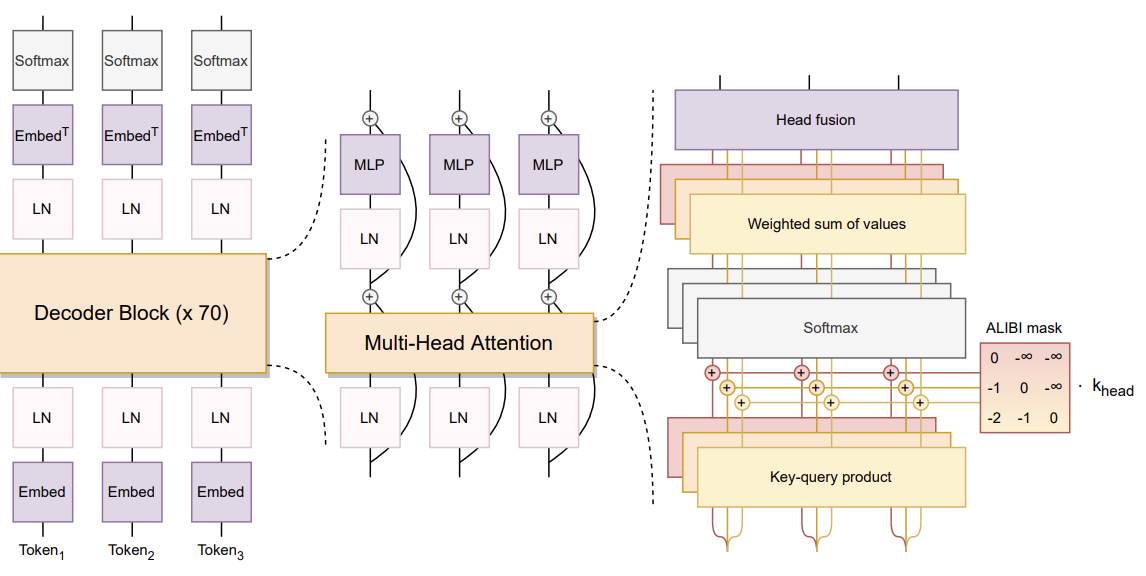

BLOOM architecture (source)

The architecture of the BLOOM model, as detailed in the paper, includes several notable aspects:

- Design Methodology: The team focused on scalable model families with support in publicly available tools and codebases and conducted ablation experiments on smaller models to optimize components and hyperparameters. Zero-shot generalization was a key metric for evaluating architectural decisions.

- Architecture and Pretraining Objective: BLOOM is based on the Transformer architecture, specifically a causal decoder-only model. This approach was validated as the most effective for zero-shot generalization capabilities compared to encoder-decoder and other decoder-only architectures.

- Modeling Details:

  - ALiBi Positional Embeddings: ALiBi was chosen over traditional positional embeddings, as it directly attenuates attention scores based on the distance between keys and queries. This led to smoother training and better performance.

  - Embedding LayerNorm: An additional layer normalization was included immediately after the embedding layer, which improved training stability. The preliminary experiments that motivated this choice used float16, whereas the final training used bfloat16, which is more stable than float16.

These components reflect the team's focus on balancing innovation with proven techniques to optimize the model's performance and stability.
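To make the ALiBi idea more concrete, here is a minimal pure-Python sketch. It is not BLOOM's actual implementation: it assumes a power-of-two number of attention heads and the geometric slope schedule described in the ALiBi paper, and the function names `alibi_slopes` and `alibi_bias` are illustrative.

```python
import math


def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head slopes: a geometric sequence starting at 2^(-8/num_heads).

    Assumption: num_heads is a power of two (the simple case of the schedule).
    """
    if num_heads <= 0 or num_heads & (num_heads - 1) != 0:
        raise ValueError("this sketch only handles power-of-two head counts")
    ratio = 2.0 ** (-8.0 / num_heads)
    return [ratio ** (head + 1) for head in range(num_heads)]


def alibi_bias(seq_len: int, slope: float) -> list[list[float]]:
    """Bias added to one head's attention scores before the softmax.

    Entry [i][j] is -slope * (i - j) for keys at or before the query (j <= i)
    and -inf for future keys, which also enforces the causal mask.
    """
    return [
        [-slope * (i - j) if j <= i else -math.inf for j in range(seq_len)]
        for i in range(seq_len)
    ]


slopes = alibi_slopes(8)          # one slope per head
bias = alibi_bias(4, slopes[0])   # 4x4 bias matrix for the first head
```

The further a key is from the query, the larger the penalty subtracted from its attention score, and heads with smaller slopes attend over longer distances. No position vectors are added to the token embeddings.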

In addition to the architectural components of BLOOM, let’s understand two additional relevant components: data preprocessing and prompted datasets.

- Data preprocessing: This involved crucial steps like deduplication and privacy redaction, especially for sources with higher privacy risks.

- Prompted datasets: BLOOM employs multitask prompted finetuning, which has shown strong zero-shot task generalization abilities.
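The two preprocessing steps named above, deduplication and privacy redaction, can be illustrated with a small sketch. This is not the BigScience pipeline: it assumes exact-match deduplication on whitespace- and case-normalized text and redacts only email addresses with a simple regular expression. The helper names and the `<EMAIL>` placeholder are hypothetical.

```python
import hashlib
import re

# Assumption: a deliberately simple email pattern, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub("<EMAIL>", text)


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, ignoring case and spacing."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in docs:
        normalized = " ".join(doc.lower().split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(doc)
    return unique


def preprocess(docs: list[str]) -> list[str]:
    """Deduplicate first, then redact, so each kept document is scanned once."""
    return [redact_emails(doc) for doc in deduplicate(docs)]


cleaned = preprocess(["Hello  World", "hello world", "Contact me at a@b.org"])
```

A real pipeline would typically add near-duplicate detection and cover more kinds of personal information.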

How to Use BLOOM

In this example, we'll use BLOOM to generate a creative story. The code sets up the environment, prepares the model, and generates text from a given prompt. The corresponding source code is available on GitHub, and it is heavily inspired by the amrrs tutorial.

Configure workspace

The BLOOM model is resource intensive, so the workspace must be configured properly. The main steps are described below.

First, we install the transformers library, which provides the interfaces for working with BLOOM and other transformer-based models in general.

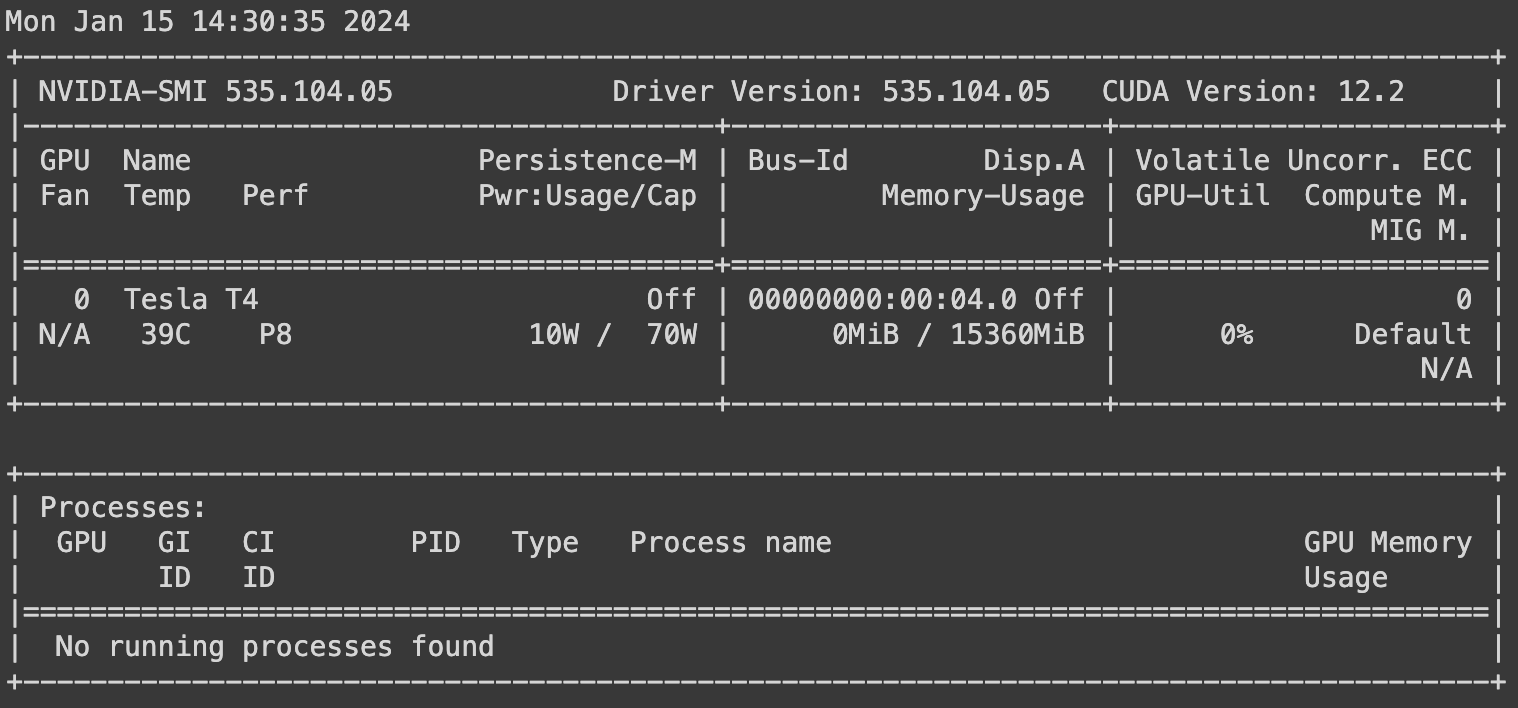

!pip install transformers -q

Using nvidia-smi, we check the properties of the available GPU to ensure we have the necessary computational resources to run the model.

!nvidia-smi

We import the required modules from transformers and torch. Since we are using a GPU, torch's set_default_tensor_type function sets the default tensor type to a CUDA float tensor, so that new tensors are created on the GPU. Note that recent PyTorch releases deprecate this function in favor of torch.set_default_device and torch.set_default_dtype.

from transformers import AutoModelForCausalLM, AutoTokenizer, set_seed

import torch

torch.set_default_tensor_type(torch.cuda.FloatTensor)

Using the BLOOM model

The target model is the 1.7-billion-parameter BLOOM model. It is accessible from BigScience's Hugging Face repository under bigscience/bloom-1b7, which is the model's unique identifier.

model_ID = "bigscience/bloom-1b7"

Next, we load the pre-trained BLOOM model and tokenizer from Hugging Face, and set the seed for reproducibility using the set_seed function. The value itself does not matter, but it must be an integer.

model = AutoModelForCausalLM.from_pretrained(model_ID, use_cache=True)

tokenizer = AutoTokenizer.from_pretrained(model_ID)

set_seed(2024)

To learn more about the untapped potential of Large Language Models with LangChain, an open-source Python framework for building advanced AI applications, our tutorial How to Build LLM Applications with LangChain Tutorial is the right guide.

Furthermore, if you are a data engineer interested in using LangChain for data applications, our article Introduction to LangChain for Data Engineering & Data Applications provides an overview of what you can do with LangChain, including the problems it solves and examples of data use cases.

Now, we can define the title of the story to be generated, along with the prompt.

story_title = 'An Unexpected Journey Through Time'

prompt = f'This is a creative story about {story_title}.\n'

Finally, we tokenize the prompt and move it to the model's device, generate the output tokens, and decode them into text.

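input_ids = tokenizer(prompt, return_tensors="pt").to(0)

sample = model.generate(**input_ids, max_length=200, do_sample=False, repetition_penalty=2.0)

generated_story = tokenizer.decode(sample[0], skip_special_tokens=True)

Because sampling is disabled (do_sample=False), decoding is greedy and therefore deterministic. The top_k and temperature arguments only take effect when sampling is enabled, so they are omitted here. The repetition_penalty argument discourages the model from repeating tokens it has already produced.

The final result is formatted using the textwrap module, so that each line contains at most 80 characters for better readability.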

import textwrap

wrapper = textwrap.TextWrapper(width=80)formated_story = wrapper.fill(text=generated_story)

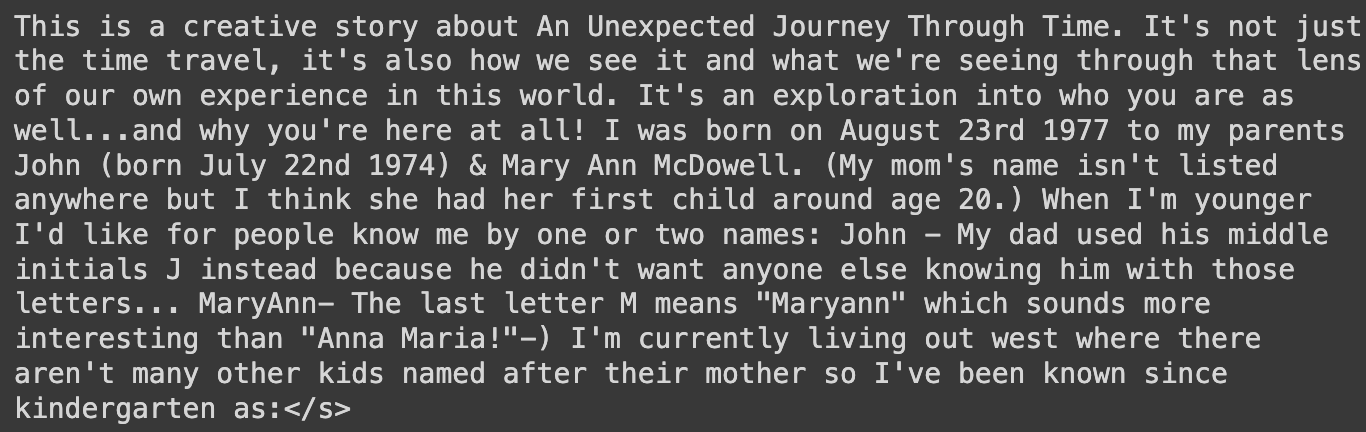

print(formated_story)The final result is given below:

Story generated using BLOOM model

With a few lines of code, we were able to generate meaningful content using the BLOOM model.
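The steps above can also be collected into a single function. The following is a sketch under stated assumptions rather than the tutorial's original code: it selects the device explicitly instead of changing the global default tensor type, falls back to CPU when no GPU is visible, and uses greedy decoding. The names `build_prompt` and `generate_story` are illustrative.

```python
import textwrap


def build_prompt(story_title: str) -> str:
    """Build the same prompt format used in the walkthrough."""
    return f"This is a creative story about {story_title}.\n"


def generate_story(
    story_title: str,
    model_id: str = "bigscience/bloom-1b7",
    max_length: int = 200,
    seed: int = 2024,
) -> str:
    """Load the model, generate a story for the title, and wrap it at 80 columns."""
    # Heavy dependencies are imported lazily so that build_prompt can be
    # used without torch or transformers installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer, set_seed

    set_seed(seed)
    # Assumption: fall back to CPU when no CUDA device is available.
    device = "cuda" if torch.cuda.is_available() else "cpu"

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id).to(device)

    # The tokenizer output holds both input_ids and attention_mask.
    inputs = tokenizer(build_prompt(story_title), return_tensors="pt").to(device)
    output_ids = model.generate(
        **inputs,
        max_length=max_length,
        do_sample=False,         # greedy decoding, deterministic output
        repetition_penalty=2.0,  # discourage repeated tokens
    )
    story = tokenizer.decode(output_ids[0], skip_special_tokens=True)
    return textwrap.fill(story, width=80)
```

Calling `print(generate_story("An Unexpected Journey Through Time"))` downloads the model on first use and prints the wrapped story. On a CPU-only machine this works but is noticeably slower.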

If you are interested in mastering the process of training large language models using PyTorch, from initial setup to final implementation, our tutorial How to Train an LLM with PyTorch provides a complete guide to reach that goal.
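For readers curious about what the `repetition_penalty` argument passed to `generate` does, here is a toy pure-Python sketch of the commonly used rule: scores of tokens already present in the sequence are divided by the penalty when positive and multiplied by it when negative. The function names and the three-token vocabulary are hypothetical, and real implementations operate on tensors rather than lists.

```python
def apply_repetition_penalty(
    logits: list[float], previous_ids: list[int], penalty: float
) -> list[float]:
    """Return a copy of logits with already-seen tokens made less likely.

    Positive scores are divided by the penalty and negative scores are
    multiplied by it, so a penalty > 1 always lowers the token's score.
    """
    adjusted = list(logits)
    for token_id in set(previous_ids):
        score = adjusted[token_id]
        adjusted[token_id] = score / penalty if score > 0 else score * penalty
    return adjusted


def greedy_next_token(
    logits: list[float], previous_ids: list[int], penalty: float = 2.0
) -> int:
    """Pick the highest-scoring token after applying the penalty."""
    adjusted = apply_repetition_penalty(logits, previous_ids, penalty)
    return max(range(len(adjusted)), key=adjusted.__getitem__)


toy_logits = [3.0, 2.0, -1.0]
first_choice = greedy_next_token(toy_logits, previous_ids=[])    # token 0
second_choice = greedy_next_token(toy_logits, previous_ids=[0])  # token 1
```

With a penalty of 2.0, token 0 drops from 3.0 to 1.5 once it has been generated, so greedy decoding moves on to token 1 instead of repeating itself.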

Intended and Out-of-Scope Uses

Like all technological advancements, BLOOM comes with its own set of suitable and unsuitable applications. This section delves into its appropriate and inappropriate use cases, highlighting where its capabilities can be best utilized and where caution is advised. Understanding these boundaries is crucial for harnessing BLOOM's potential responsibly and effectively.

Intended Uses of BLOOM

BLOOM is a versatile tool designed to push the boundaries of language processing and generation. Its intended uses span across various domains, each leveraging its expansive language capabilities.

- Multilingual Content Generation: Trained on 46 natural languages, BLOOM can create diverse and inclusive content. This capability is particularly valuable in global communication, education, and media, where language inclusivity is crucial.

- Coding and Software Development: BLOOM's training in programming languages positions it as an asset in software development. It can assist in tasks like code generation, debugging, and serving as an educational tool for new programmers.

- Research and Academia: In academic circles, BLOOM serves as a powerful resource for linguistic analysis and AI research, providing insights into language patterns, AI behavior, and more.

Out-of-scope uses of BLOOM

Understanding the limitations of BLOOM is crucial to ensure its ethical and practical application. Some use cases fall outside the scope of what BLOOM is intended for, primarily due to ethical considerations or technical constraints.

- Sensitive Data Handling: BLOOM is not designed for processing sensitive personal data or confidential information. The potential for privacy breaches or misuse of such data makes it unsuitable for these purposes.

- High-Stakes Decision Making: Using BLOOM in scenarios that require critical accuracy, such as medical diagnostics or legal decisions, is not advisable. Like most large language models, BLOOM can produce inaccurate or misleading outputs, which is unacceptable in these sensitive areas.

- Human Interaction Replacement: BLOOM should not be seen as a replacement for human interaction, especially in fields requiring emotional intelligence, such as counseling, diplomacy, or personalized teaching. The model lacks the nuanced understanding and empathy that human interaction provides.

Direct and Indirect Users

BLOOM, as an advanced Large Language Model (LLM), offers a wide array of benefits that extend to various user groups. Its capabilities not only directly influence certain professionals and sectors but also have a broader effect, indirectly impacting a wider range of stakeholders.

This exploration of BLOOM's users aims to highlight how different groups leverage and are affected by this innovative tool.

By understanding the direct and indirect users of BLOOM, we can appreciate the model's extensive influence and the diverse ways in which it contributes to advancing technology and society.

Direct Users of BLOOM

- Developers and Data Scientists: Professionals in software development and data science are primary users. They utilize BLOOM for tasks like coding assistance, debugging, and data analysis.

- Researchers and Academics: This group includes linguists, AI researchers, and academics who use BLOOM for language studies, AI behavior analysis, and advancing NLP research.

- Content Creators and Translators: Writers, journalists, and translators use BLOOM for generating and translating content across different languages, enhancing their productivity and reach.

Indirect Users of BLOOM

- Businesses and Organizations: Companies across various sectors benefit indirectly from BLOOM through enhanced AI-driven services, improved customer interactions, and efficient data handling.

- Educational Institutions: Students and educators experience the benefits of BLOOM indirectly via educational tools and resources that incorporate its language processing capabilities for learning and teaching.

- General Public: The wider community is an indirect beneficiary of BLOOM through improved technology experiences, access to multilingual content, and enhanced software applications.

Ethical Considerations and Limitations

The deployment of BLOOM, like any Large Language Model (LLM), brings with it a range of ethical considerations and limitations. These aspects are crucial to understand for responsible usage and to anticipate the broader impact of the technology. This section addresses the ethical implications, risks, and inherent limitations associated with using BLOOM.

Ethical considerations

- Data Bias and Fairness: One of the primary ethical concerns is the potential for BLOOM to perpetuate or amplify biases present in its training data. This can affect the fairness and neutrality of its outputs, leading to ethical challenges in scenarios where unbiased processing is critical.

- Privacy Concerns: While BLOOM is not explicitly designed to handle sensitive personal information, the vastness of its training data may inadvertently include such information. There's a risk of privacy violations if BLOOM generates outputs based on or revealing sensitive data.

Risks associated with use

- Misinformation and Manipulation: The advanced capabilities of BLOOM can be misused for generating misleading information or manipulative content, posing a significant risk in areas like media, politics, and public opinion.

- Dependence and Skill Degradation: Over-reliance on BLOOM for tasks like content creation or translation might lead to a degradation of these skills in humans, affecting creativity and linguistic abilities.

Limitations of BLOOM

- Contextual Understanding: Despite its sophistication, BLOOM may lack the deep contextual and cultural understanding necessary for certain tasks, leading to inaccuracies or inappropriate outputs in nuanced scenarios.

- Evolving Nature of Language: BLOOM's training on a static dataset means it may not keep pace with the evolving nature of language, including new slang, terminology, or cultural references.

Real-world impact and controversies

The development and release of BLOOM have significant implications in the real world, both in terms of its impact and the controversies it raises. This section discusses these aspects, grounded in the insights from the BLOOM research paper.

Real-world impact of BLOOM

- Democratization of AI Technology: BLOOM represents a step towards democratizing AI technology. Developed by BigScience, a collaborative effort involving over 1200 people from 38 countries, BLOOM is an open-access model trained on a diverse corpus covering 59 languages. This wide-ranging participation and accessibility mark a shift from the exclusivity typically seen in large language model development.

- Diversity and Inclusivity: The project's commitment to linguistic, geographical, and scientific diversity is notable. The ROOTS corpus used for training BLOOM spans a wide range of natural and programming languages, reflecting an emphasis on inclusivity and representation in AI development.

Controversies and challenges

- Social and Ethical Concerns: BLOOM's development acknowledges the social limitations and ethical challenges in large language model development. The BigScience workshop employed an Ethical Charter to guide the project, emphasizing inclusivity, diversity, openness, reproducibility, and responsibility. These principles were integrated into various aspects of the project, from dataset curation to model evaluation.

- Environmental and Resource Concerns: The development of large language models like BLOOM has raised environmental concerns due to the significant computational resources required. Training these models, which is typically affordable only to well-resourced organizations, has implications for energy consumption and carbon footprint.

Is BLOOM Still Relevant Today?

In the fast-paced world of Artificial Intelligence (AI), the relevance of Large Language Models (LLMs) like BLOOM is a topic of ongoing discussion.

Developed by a vast team of international researchers, BLOOM was a significant leap forward in language processing. However, the AI landscape is continually evolving, with new models emerging and shifting the focus.

Let's examine whether BLOOM still holds its ground in this competitive domain.

- Shift in Focus: Initially a major breakthrough, BLOOM now finds itself in the shadow of newer models. Unlike conversational systems such as ChatGPT, BLOOM is tailored for content completion rather than interactive communication.

- Hardware Constraints: BLOOM's high hardware demands limit its accessibility compared to hosted LLMs like GPT-3.5 and GPT-4, which are accessed through an API and are therefore easier to integrate into various applications.

- Rising Competition: The advent of models like LLaMA and fine-tuned derivatives like Alpaca has democratized access to LLMs, offering capabilities with reduced resource requirements, thereby widening their appeal and usage.

- Multilingual Capability: BLOOM's unique selling point is its extensive training in multiple languages, making it a valuable resource for translation and multilingual content creation.
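To put the hardware constraint mentioned above into numbers, the following back-of-the-envelope sketch estimates the memory needed just to hold a model's weights. It assumes one value per parameter at the given precision and ignores activations, the attention cache, and framework overhead, so real requirements are higher. The 1.7B figure for bloom-1b7 is approximate and inferred from the model name.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


bloom_176b = weight_memory_gb(176_000_000_000, "bfloat16")  # 352.0 GB
bloom_1b7 = weight_memory_gb(1_700_000_000, "float32")      # 6.8 GB
```

At bfloat16 precision the full 176B model needs about 352 GB for its weights alone, which exceeds the memory of any single commodity GPU. The 1.7B variant used in the tutorial fits on one.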

Conclusion

This article provided an overview of the BLOOM project, a significant contribution to the evolving field of Large Language Models.

We explored BLOOM's development, highlighting its technical specifications and the collaboration behind its creation. The article also served as a guide for accessing and effectively utilizing BLOOM, emphasizing its appropriate uses and limitations.

Furthermore, it covered the ethical implications and real-world impact of the BLOOM project, reflecting on its reception and relevance in today's AI landscape. Whether you're directly involved in AI or an enthusiast, this exploration of BLOOM offers essential perspectives for navigating the complex world of large language models.

For those looking to deepen their engagement with advanced language models, our conceptual course, Large Language Models (LLMs) Concepts, covers LLM applications, training methodologies, ethical considerations, and the latest research.

A multi-talented data scientist who enjoys sharing his knowledge and giving back to others, Zoumana is a YouTube content creator and a top tech writer on Medium. He finds joy in speaking, coding, and teaching. Zoumana holds two master's degrees: the first in computer science with a focus on Machine Learning from Paris, France, and the second in Data Science from Texas Tech University in the US. His career path started as a Software Developer at Groupe OPEN in France, before moving on to IBM as a Machine Learning Consultant, where he developed end-to-end AI solutions for insurance companies. Zoumana then joined Axionable, the first Sustainable AI startup based in Paris and Montreal. There, he served as a Data Scientist and implemented AI products, mostly NLP use cases, for clients from France, Montreal, Singapore, and Switzerland. Additionally, 5% of his time was dedicated to Research and Development. He currently works as a Senior Data Scientist at IFC, the World Bank Group.