
Imagine scrolling through endless rows of data, unable to spot the insights hidden within. That’s the reality for many businesses today, with an estimated 402.74 million terabytes of data generated daily. So how do we make sense of it all? The answer lies in data visualization.

Organizations and individuals leverage data to determine the causes of problems and identify actionable steps. However, with an ever-growing amount of data, it becomes increasingly challenging to make sense of it all. Our innate nature is to search for patterns and find structure. This is how we store information and learn new insights. When incoming data is not presented in a visually pleasing way, seeing these patterns and finding structure can be difficult or, in some cases, impossible.

In this article, we will examine how data visualization can solve the above problem. We will also discuss what data visualization is, why it is important, tips for developing your skills, common graphs, and tools for visualizing your data.

What is Data Visualization?

Data visualization is the process of graphically representing data. It is the act of translating data into a visual context, which can be done using charts, plots, animations, infographics, etc. The idea behind it is to make it easier for us to identify trends, outliers, and patterns in data. We explore this concept more in our Understanding Data Visualization course.

Data professionals regularly leverage data visualizations to summarize key insights from data and relay that information back to the appropriate stakeholders.

For example, a data professional may create data visualizations for management personnel, who then leverage those visualizations to project the organizational structure. Another example is when data scientists use visualizations to uncover the underlying structure of the data to provide them with a better understanding of their data.

Given the defined purpose of data visualization stated above, there are two vital insights we can take away from what data visualization is:

1) A way to make data accessible: The best way to make something accessible is to keep things simple. The word ‘simple’ here needs to be put into context: what is straightforward for a ten-year-old to understand may not be the same for a PhD holder. Thus, data visualization is a technique used to make data accessible to whomever it may concern.

2) A way to communicate: This takeaway is an extension of the first. To communicate effectively, everyone in the room must be speaking the same language. Whether you are working on a task independently or with a team, visualizations should surface insights that are relevant to the people who will view them. A CEO may prefer to view insights that provide actionable steps, and a machine learning team may prefer to view insights into how their models perform.

In short, data visualization is a technique used to make it easier to recognize patterns or trends in data.

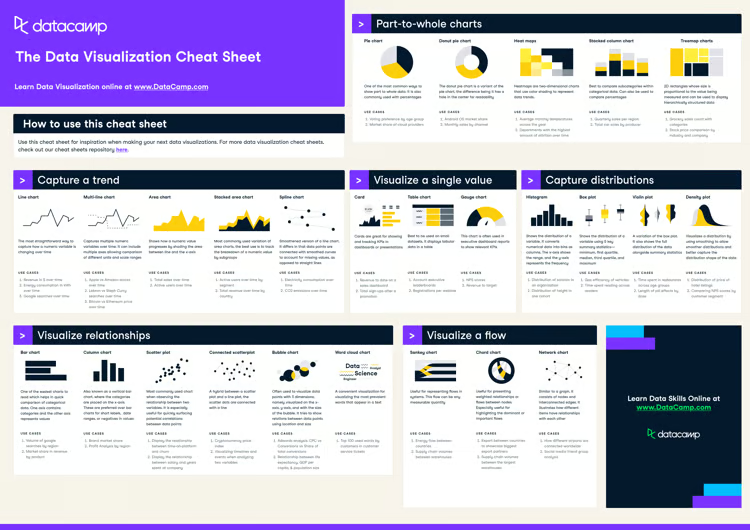

Our data visualization cheat sheet gives a visual representation of the concepts of data visualization.

Why is data visualization so important for data scientists?

Data science is an interdisciplinary field that uses scientific methods, processes, algorithms, and systems to extract knowledge and insights from noisy, structured, and unstructured data (see our 'what is data science' guide for a deeper explanation). However, the challenge data scientists face is that it is not always possible to connect the dots when faced with raw data; this is where data visualization is extremely valuable. Let’s look at why data visualization is such a powerful tool for data scientists.

Discoverability and learning

Data scientists are required to have a good understanding of the data they use. Data visualization makes it possible for data scientists to bring out the most informative aspects of their data, making it quick and easy for them (and others) to grasp what is happening in the data. For example, identifying trends in a dataset represented in a table format is much more complicated than seeing it visually. We will discuss this in more detail later in the article.

Storytelling

Stories have been used to share information for centuries. The reason stories are so compelling is that they create an emotional connection with the audience. This connection allows the audience to gain a deeper understanding of other people’s experiences, making the information more memorable. Data storytelling is a form of visualization: it helps data scientists to make sense of their data and share it with others in an easily understandable format.

Efficiency

Insights can undoubtedly be derived from databases (in some cases), but this endeavor requires immense focus and is a significant business expense. Using data visualization in such instances is much more efficient. For example, if the goal is to identify all of the companies that suffered a loss in 2024, it would be easier to use red to highlight all the companies with profits less than zero, and green to highlight those greater than zero. The companies that broke even remain neutral. This technique is much more efficient than manually parsing the database to mark the individual companies.
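
The color-coding idea above can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: the company names and profit figures are made up, and `profit_color` and `plot_profits` are hypothetical helper names. Matplotlib is imported inside the plotting function so the color logic can be used on its own.

```python
def profit_color(profit):
    """Map a profit figure to a highlight color."""
    if profit < 0:
        return "red"    # loss
    if profit > 0:
        return "green"  # profit
    return "gray"       # broke even, left neutral


def plot_profits(companies, profits):
    """Draw one bar per company, colored by profit or loss."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for drawing

    colors = [profit_color(p) for p in profits]
    fig, ax = plt.subplots(figsize=(10, 5))
    ax.bar(companies, profits, color=colors)
    ax.axhline(0, color="black", linewidth=0.8)  # zero line separates losses from profits
    ax.set_title("Profit by company, 2024 (illustrative data)")
    ax.set_ylabel("Profit")
    plt.show()


# Made-up figures for demonstration purposes only.
companies = ["A", "B", "C", "D"]
profits = [120, -45, 0, 80]
colors = [profit_color(p) for p in profits]
print(colors)
```

Calling `plot_profits(companies, profits)` draws the chart, so the loss-making company stands out without anyone reading the numbers row by row.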

Aesthetics

While raw data may be informative, it is rarely visually pleasing or engaging. Data visualization is a technique used to take informative yet dull data and transform it into beautiful, insightful knowledge. Making data more visually appealing plays into our nature of being primarily optical beings. In other words, we process information much faster when it stimulates our visual senses.

How to Learn Data Visualization

Data visualization is a critical skill for anyone working with data, from business analysts to data scientists. Mastering it allows you to communicate insights effectively, making your data-driven decisions clearer and more impactful. If you’re wondering where to start, we offer a variety of resources designed to guide you at every stage of your data visualization journey—whether you’re a beginner or looking to sharpen your advanced skills.

Here’s how you can get started:

1. Start with the basics

If you’re completely new to data visualization, it’s essential to understand the fundamentals before diving into advanced tools and techniques. DataCamp provides structured learning paths to guide you through these basics.

- Recommended Resource: Understanding Data Visualization. This course introduces you to the core concepts of data visualization, teaching you how to design and interpret simple but effective visualizations. It covers essential chart types, visualization best practices, and how to think critically about data.

- Topics Covered:

- Basic chart types (bar charts, pie charts, line graphs)

- Common mistakes in data visualization

- How to choose the right type of chart for your data

2. Learn to visualize data with code-free tools

For those who aren’t yet familiar with programming, we have courses on tools that allow you to create visualizations without writing code. These tools are great for business users, managers, and anyone looking to quickly create insightful visualizations.

- Recommended resource: Power BI Fundamentals. Power BI is a powerful, code-free tool for creating interactive dashboards and reports. This skill track will teach you how to use its intuitive interface to create compelling visualizations that communicate data insights effectively.

- Recommended resource: Tableau Fundamentals. Tableau is another popular tool for creating interactive, visually appealing dashboards. With drag-and-drop functionality, you can easily explore data and create insightful visualizations.

- Topics covered:

- Building interactive dashboards

- Data cleaning and preparation for visualization

- Best practices for visual storytelling

3. Develop coding skills for advanced visualizations

If you’re ready to get hands-on with coding, learning to create visualizations using Python or R can greatly expand your ability to customize and automate your data visualizations.

- Recommended resource: Data Visualization with Python. Python offers a variety of powerful libraries (like Matplotlib, Seaborn, and Plotly) for creating everything from simple line graphs to complex interactive visualizations. This track will help you build a strong foundation in Python-based visualization tools.

- Recommended resource: Data Visualization with R. R is widely used for statistical computing and data visualization. The ggplot2 package, in particular, is an industry standard for creating beautiful, informative plots. In this track, you’ll learn how to create high-quality visualizations and understand the power of R's data manipulation capabilities.

- Topics covered:

- Creating custom visualizations using Matplotlib, Seaborn, and ggplot2

- Understanding advanced charts like histograms, heatmaps, and scatter plots

- Building interactive visualizations with Plotly and Shiny

4. Practice through projects

Once you’ve learned the basics, practice is key to mastering data visualization. DataCamp’s hands-on projects let you apply your skills to real-world datasets, helping you gain practical experience.

- Recommended resource: Data Visualization Projects. These guided projects let you work with real datasets, allowing you to practice what you’ve learned. You’ll create visualizations from scratch, gaining experience with both the process of data exploration and the design of effective visual communication.

- Example projects:

- Exploring the Evolution of LEGO – Learn how to create data visualizations that explore trends in LEGO set production using Python’s Matplotlib and Seaborn.

- Understanding the Gender Gap in College Degrees – Create line charts and area plots using Python to visualize trends in gender disparity across different college majors.

5. Continue learning and stay updated

Data visualization is an evolving field, with new tools and techniques emerging regularly. DataCamp regularly updates its courses and introduces new ones to keep you informed of the latest trends.

- Recommended resource: Data Visualization Cheat Sheet. Keep this cheat sheet handy as a reference for commonly used charts, design best practices, and tips on choosing the right visualization based on your data and audience.

- Explore new tools: Stay ahead by learning about cutting-edge tools like Plotly Dash or D3.js (a JavaScript library) as you advance in your journey.

Data Visualization Tools

Data professionals, such as data scientists and data analysts, typically leverage data visualization tools because they help them work more efficiently and communicate their findings more effectively.

The tools can be broken down into two categories: 1) code-free and 2) code-based. Let’s take a look at some popular tools in each category.

Code-free tools

Not everyone in your organization is going to be tech-savvy. However, lacking the ability to program should not deter you from deriving insights from data. You can lack programming ability but still be data literate – someone who can read, write, communicate, and reason with data to make better data-driven decisions.

Thus, code-free tools serve as an accessible solution for people who may not have programming knowledge (although people with programming skills may still opt to use them). More formally, code-free tools are graphical user interfaces that come with the capabilities of running native scripts to process and augment the data.

Some example code-free tools include:

Power BI

Power BI is a highly popular Microsoft solution for data visualization and business intelligence. It is among the world's most popular business intelligence tools used for reporting, self-service analytics, and predictive analytics. This platform service makes it easy for you to quickly clean, analyze, and begin finding insights into your organization's data.

If you are interested in learning Power BI, consider starting with DataCamp’s Power BI Fundamentals skill track.

Tableau

Tableau is also one of the world’s most popular business intelligence tools. Its simple drag-and-drop functionality makes it easily accessible for anyone to begin finding insights into their organization's data using interactive data visualizations.

DataCamp’s Tableau Fundamentals skill track is a great way to get started with Tableau.

ChatGPT

ChatGPT has quickly become a tool for helping people visualize their data. As we explore in our article on GPT-4o, with a few prompts, you can use the tool to visualize trends in your data. Generative AI is changing the way we think about our data, and there is much more to come! Check out our ChatGPT Fundamentals skill track to learn more about how to use this powerful tool.

Visualization with code

If you are more tech-savvy, you may prefer to use a programming language to visualize your data. The increase in data production has boosted the popularity of Python and R due to their various packages that support data processing.

Let’s take a look at some of these packages.

Python packages

Python is a high-level, interpreted, general-purpose programming language. It offers several great graphing packages for data visualization, such as Matplotlib, Seaborn, and Plotly, all of which are used in the examples later in this article.

The Data Visualization with Python skill track is a great sequence of courses to supercharge your data science skills using Python's most popular and robust data visualization libraries.

R packages

R is a programming language for statistical computing and graphics. It is a great tool for data analysis, as you can create almost any type of graph using its various packages. Popular R data visualization packages include:

- ggplot2

- Lattice

- highcharter

- Leaflet

- RColorBrewer

- Plotly

Check out the Data Visualization with R and Interactive Data Visualization in R skill tracks to level up your visualization skills with the R programming language.

Common Graphs Used for Data Visualization

We have established that data visualization is an effective way to present and communicate with data. In this section, we will cover how to create some common types of charts and graphs using Python and the Matplotlib package. These are an effective starting point for most data visualization tasks. We will also share some use cases for each graph.

You can check out this DataLab workbook to access the full code.

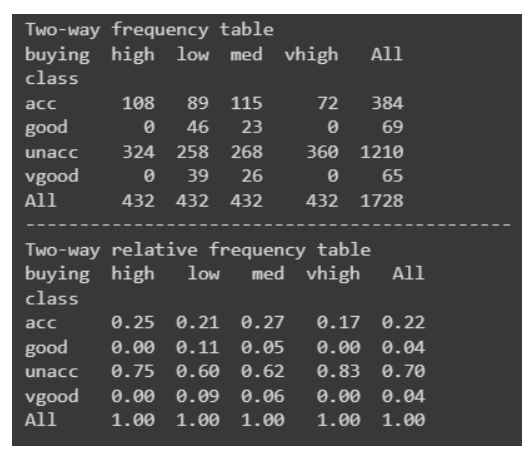

Frequency table

A frequency table is a great way to represent the number of times an event or value occurs. We typically use them to find descriptive statistics for our data. For example, we may wish to understand the effect of one feature on the final decision.

Let’s create an example frequency table. We will use the Car Evaluation Data Set from the UCI Machine Learning repository and Pandas to build our frequency table.

    import pandas as pd

    """
    source: https://heartbeat.comet.ml/exploratory-data-analysis-eda-for-categorical-data-870b37a79b65
    """

    def frequency_table(data: pd.DataFrame, col: str, column: str):
        # Two-way counts, with row and column totals in the "All" margins.
        freq_table = pd.crosstab(index=data[col], columns=data[column], margins=True)
        # Divide each column by its total to get relative frequencies.
        rel_table = round(freq_table / freq_table.loc["All"], 2)
        return freq_table, rel_table

    # car_data is assumed to be the Car Evaluation Data Set, already loaded into a DataFrame.
    buying_freq, buying_rel = frequency_table(car_data, "class", "buying")

    print("Two-way frequency table")
    print(buying_freq)
    print("---" * 15)
    print("Two-way relative frequency table")
    print(buying_rel)

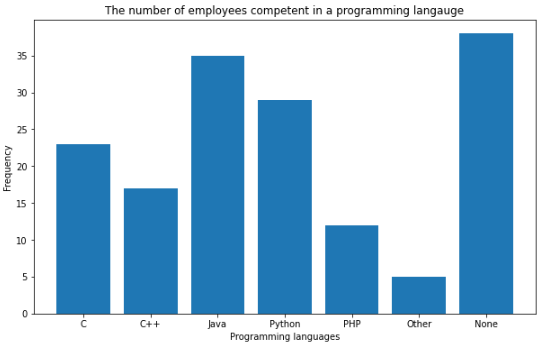

Bar graph

The bar graph is among the most simple yet effective data visualizations around. It is typically deployed to compare the differences between categories. For example, we can use a bar graph to visualize the number of fraudulent cases to non-fraudulent cases. Another use case for a bar graph may be to visualize the frequencies of each star rating for a movie.

Here is how we would create a bar graph in Python:

    """
    Starter code from tutorials point
    see: https://bit.ly/3x9Z6HU
    """
    import matplotlib.pyplot as plt

    # Dataset creation.
    programming_languages = ['C', 'C++', 'Java', 'Python', 'PHP', "Other", "None"]
    employees_frequency = [23, 17, 35, 29, 12, 5, 38]

    # Bar graph creation.
    fig, ax = plt.subplots(figsize=(10, 5))
    plt.bar(programming_languages, employees_frequency)
    plt.title("The number of employees competent in a programming language")
    plt.xlabel("Programming languages")
    plt.ylabel("Frequency")
    plt.show()

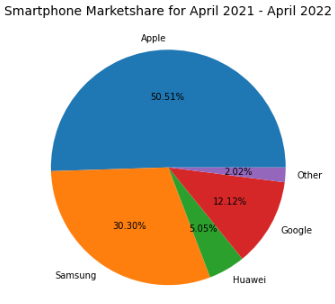

Pie charts

Pie charts are another simple and efficient visualization tool. They are typically used to visualize and compare parts of a whole. For example, a good use case for a pie chart would be to represent the market share for smartphones. Let’s implement this in Python.

    """
    Example to demonstrate how a pie chart can be used to represent the market
    share for smartphones.
    Note: These are not real figures. They were created for demonstration purposes.
    """
    import numpy as np
    from matplotlib import pyplot as plt

    # Dataset creation.
    smartphones = ["Apple", "Samsung", "Huawei", "Google", "Other"]
    market_share = [50, 30, 5, 12, 2]

    # Pie chart creation
    fig, ax = plt.subplots(figsize=(10, 6))
    plt.pie(market_share, labels=smartphones, autopct='%1.2f%%')
    plt.title("Smartphone Marketshare for April 2021 - April 2022", fontsize=14)
    plt.show()

Line graphs and area charts

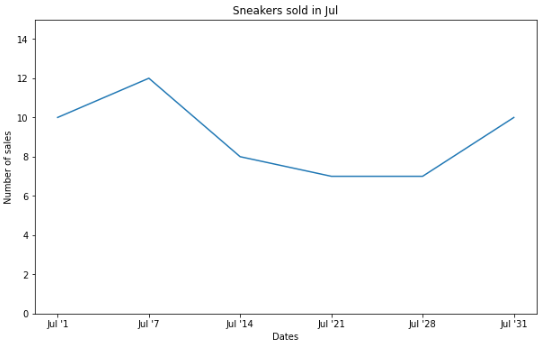

Line graphs are great for visualizing trends or progress in data over a period of time. For example, we can visualize the number of sneaker sales for the month of July with a line graph.

    import matplotlib.pyplot as plt

    # Data creation.
    sneakers_sold = [10, 12, 8, 7, 7, 10]
    dates = ["Jul '1", "Jul '7", "Jul '14", "Jul '21", "Jul '28", "Jul '31"]

    # Line graph creation
    fig, ax = plt.subplots(figsize=(10, 6))
    plt.plot(dates, sneakers_sold)
    plt.title("Sneakers sold in Jul")
    plt.ylim(0, 15)  # Change the range of y-axis.
    plt.xlabel("Dates")
    plt.ylabel("Number of sales")
    plt.show()

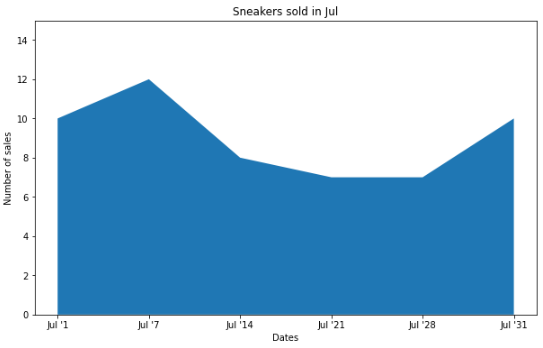

An area chart is an extension of the line graph, but they differ in that the area below the line is filled with a color or pattern.

Here is the exact same data above plotted in an area chart:

    # Area chart creation
    fig, ax = plt.subplots(figsize=(10, 6))
    plt.fill_between(dates, sneakers_sold)
    plt.title("Sneakers sold in Jul")
    plt.ylim(0, 15)  # Change the range of y-axis.
    plt.xlabel("Dates")
    plt.ylabel("Number of sales")
    plt.show()

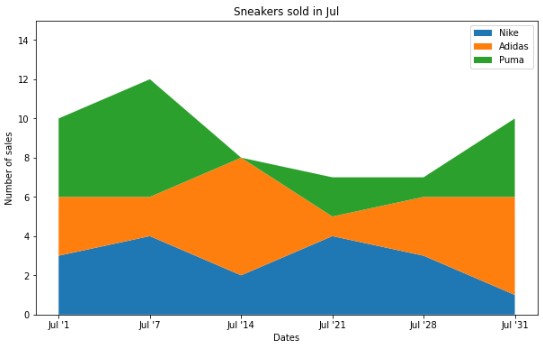

It is also quite common to see stacked area charts that illustrate the changes of multiple variables over time. For instance, we could visualize the brands of the sneakers sold in the month of July rather than the total sales with a stacked area chart.

    # Data creation.
    sneakers_sold = [[3, 4, 2, 4, 3, 1], [3, 2, 6, 1, 3, 5], [4, 6, 0, 2, 1, 4]]
    dates = ["Jul '1", "Jul '7", "Jul '14", "Jul '21", "Jul '28", "Jul '31"]

    # Multiple area chart creation
    fig, ax = plt.subplots(figsize=(10, 6))
    plt.stackplot(dates, sneakers_sold, labels=["Nike", "Adidas", "Puma"])
    plt.title("Sneakers sold in Jul")
    plt.ylim(0, 15)  # Change the range of y-axis.
    plt.xlabel("Dates")
    plt.ylabel("Number of sales")
    plt.legend()
    plt.show()

Each plot is showing the exact same data but in a different way.

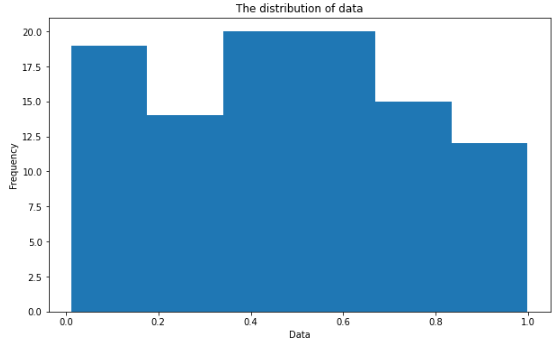

Histograms

Histograms are used to represent the distribution of a numerical variable.

    import numpy as np
    import matplotlib.pyplot as plt

    data = np.random.sample(size=100)  # Graph will change with each run

    fig, ax = plt.subplots(figsize=(10, 6))
    plt.hist(data, bins=6)
    plt.title("The distribution of data")
    plt.xlabel("Data")
    plt.ylabel("Frequency")
    plt.show()
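
To make the idea of a distribution concrete, the sketch below counts values into equal-width bins in plain Python, which is conceptually what the bars of a histogram show. It is an illustration only: `histogram_counts` is a hypothetical helper, not part of Matplotlib, and it assumes the last bin includes the maximum value.

```python
def histogram_counts(values, bins):
    """Count how many values fall into each of `bins` equal-width intervals."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins  # assumes the values are not all identical
    counts = [0] * bins
    for v in values:
        # The maximum value would land one past the end, so clamp it into the last bin.
        index = min(int((v - lo) / width), bins - 1)
        counts[index] += 1
    return counts


print(histogram_counts([0, 1, 2, 3, 4, 5, 6], bins=3))  # [2, 2, 3]
```

Each count corresponds to the height of one bar, so changing the number of bins changes how coarse or fine the picture of the distribution is.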

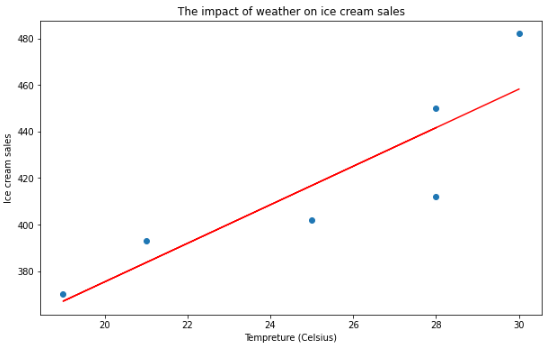

Scatter plots

Scatter plots are used to visualize the relationship between two different variables. It is also quite common to add a line of best fit to reveal the overall direction of the data. An example use case for a scatter plot may be to represent how the temperature impacts the number of ice cream sales.

    import numpy as np
    import matplotlib.pyplot as plt

    # Data creation.
    temperature = np.array([30, 21, 19, 25, 28, 28])  # Degrees Celsius
    ice_cream_sales = np.array([482, 393, 370, 402, 412, 450])

    # Calculate the line of best fit
    X_reshape = temperature.reshape(temperature.shape[0], 1)
    X_reshape = np.append(X_reshape, np.ones((temperature.shape[0], 1)), axis=1)
    y_reshaped = ice_cream_sales.reshape(ice_cream_sales.shape[0], 1)
    theta = np.linalg.inv(X_reshape.T.dot(X_reshape)).dot(X_reshape.T).dot(y_reshaped)
    best_fit = X_reshape.dot(theta)

    # Create and plot scatter chart
    fig, ax = plt.subplots(figsize=(10, 6))
    plt.scatter(temperature, ice_cream_sales)
    plt.plot(temperature, best_fit, color="red")
    plt.title("The impact of weather on ice cream sales")
    plt.xlabel("Temperature (Celsius)")
    plt.ylabel("Ice cream sales")
    plt.show()
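
The line of best fit in the scatter plot example is an ordinary least-squares fit with an intercept. As a rough sketch of what that calculation does, the same slope and intercept can be obtained in plain Python from the covariance and variance of the data. The helper name `least_squares_line` is hypothetical and is not part of NumPy or Matplotlib.

```python
def least_squares_line(xs, ys):
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel out.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Same data as the scatter plot example.
temperature = [30, 21, 19, 25, 28, 28]
ice_cream_sales = [482, 393, 370, 402, 412, 450]

slope, intercept = least_squares_line(temperature, ice_cream_sales)
print(f"sales = {slope:.2f} * temperature + {intercept:.2f}")
```

A positive slope here means that sales tend to rise with temperature, which is the overall direction the red line makes visible on the chart.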

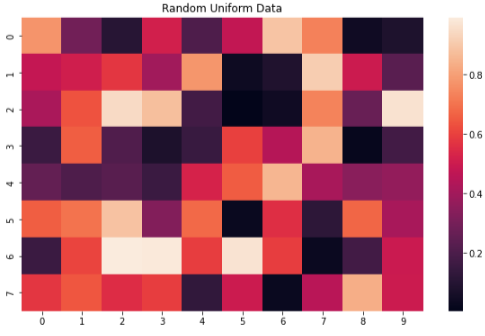

Heatmaps

Heatmaps use a color-coding scheme to depict the intensity of a value across two dimensions. One use case of a heatmap could be to illustrate the weather forecast (e.g., the areas in red show where there will be heavy rain). You can also use a heatmap to represent web traffic and almost any data with three dimensions: two that define the grid and a third that is encoded as color.

To demonstrate how to create a heatmap in Python, we are going to use another library called Seaborn – a high-level data visualization library based on Matplotlib.

    import numpy as np
    import seaborn as sns
    import matplotlib.pyplot as plt

    data = np.random.rand(8, 10)  # Graph will change with each run

    fig, ax = plt.subplots(figsize=(10, 6))
    sns.heatmap(data)
    plt.title("Random Uniform Data")
    plt.show()

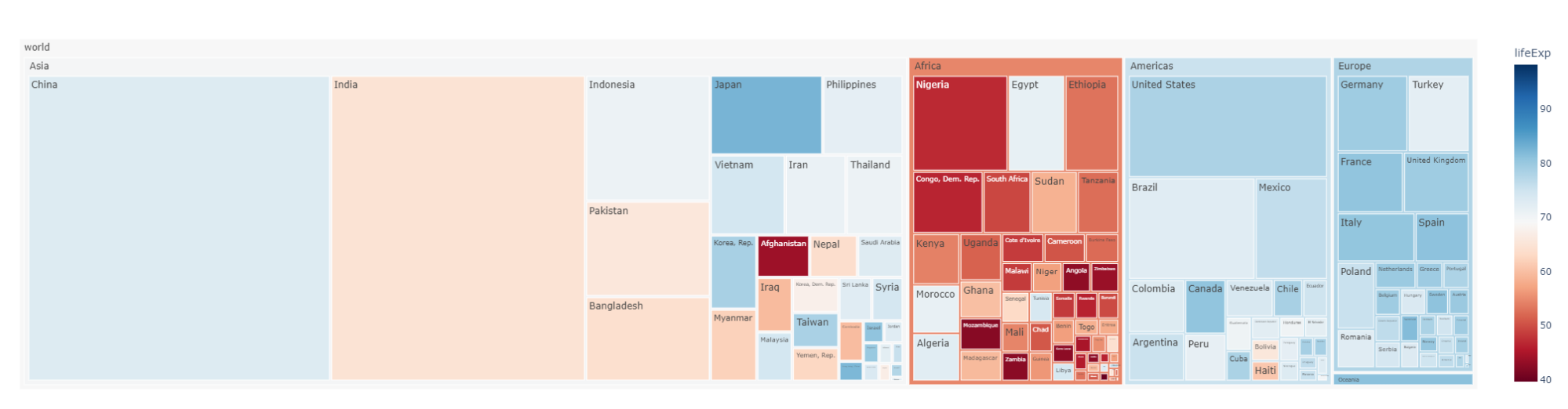

Treemaps

Treemaps are used to represent hierarchical data with nested rectangles. They are great for visualizing part-to-whole relationships among a large number of categories such as in sales data.

To help us build our treemap in Python, we are going to leverage another library called Plotly, which is used to make interactive graphs.

    """
    Source: https://plotly.com/python/treemaps/
    """
    import plotly.express as px
    import numpy as np

    df = px.data.gapminder().query("year == 2007")
    fig = px.treemap(df, path=[px.Constant("world"), 'continent', 'country'],
                     values='pop', color='lifeExp', hover_data=['iso_alpha'],
                     color_continuous_scale='RdBu',
                     color_continuous_midpoint=np.average(df['lifeExp'], weights=df['pop']))
    fig.update_layout(margin=dict(t=50, l=25, r=25, b=25))
    fig.show()

3 Tips for Effective Data Visualization

Data visualization is an art. Developing your skills will take time and practice. Here are three tips to get you moving in the right direction:

Tip #1: Ask a Specific Question

The first and most crucial step in creating an effective data visualization is having a clear and specific question in mind. Without this, you risk producing visualizations that are unfocused and difficult to interpret.

- Example: If you’re analyzing sales data, instead of asking, “How are sales trending this year?”, be more specific: “How have online sales in the U.S. for product X changed in Q3 compared to Q2?”

Having this precise question helps you focus the visualization on answering it directly, avoiding unnecessary information that could confuse or overwhelm the viewer.

- Bad example: A cluttered dashboard showing total sales across all regions, product types, and sales channels for the past five years.

- Good example: A simple bar chart focusing only on Q2 vs. Q3 U.S. online sales for a specific product.
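
As a small sketch of how a specific question narrows the data before anything is drawn, the snippet below filters a handful of sales records down to the Q2 vs. Q3 comparison from the example. Every record is made up for illustration, and `revenue_by_quarter` is a hypothetical helper name.

```python
# Hypothetical sales records; every value here is made up for illustration.
sales = [
    {"region": "US", "channel": "online", "product": "X", "quarter": "Q2", "revenue": 1200},
    {"region": "US", "channel": "online", "product": "X", "quarter": "Q3", "revenue": 1500},
    {"region": "US", "channel": "retail", "product": "X", "quarter": "Q3", "revenue": 900},
    {"region": "EU", "channel": "online", "product": "X", "quarter": "Q3", "revenue": 700},
    {"region": "US", "channel": "online", "product": "Y", "quarter": "Q2", "revenue": 400},
    {"region": "US", "channel": "online", "product": "X", "quarter": "Q3", "revenue": 300},
]


def revenue_by_quarter(records, region, channel, product, quarters):
    """Sum revenue per quarter for the records that match the question."""
    totals = {q: 0 for q in quarters}
    for r in records:
        matches = (r["region"], r["channel"], r["product"]) == (region, channel, product)
        if matches and r["quarter"] in totals:
            totals[r["quarter"]] += r["revenue"]
    return totals


totals = revenue_by_quarter(sales, "US", "online", "X", ["Q2", "Q3"])
change = (totals["Q3"] - totals["Q2"]) / totals["Q2"] * 100
print(totals, f"{change:+.0f}%")  # {'Q2': 1200, 'Q3': 1800} +50%
```

The result is two numbers, which is exactly what a simple two-bar chart needs. Everything else in the dataset stays out of the picture.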

Tip #2: Select the Appropriate Visualization

Not all visualizations are created equal. Choosing the wrong type of chart can distort your message and confuse your audience. Each chart type has its strengths and is suited to specific kinds of data.

- Example: If you’re trying to show the proportion of a whole, a pie chart may be suitable. But if you're comparing changes over time, a line chart or bar graph would be more appropriate.

Choosing the Right Chart Type:

- Bar chart: Use when comparing different categories.

- Line graph: Best for showing trends over time.

- Pie chart: Ideal for showing proportions of a whole.

- Scatter plot: Useful for identifying relationships between two variables.

- Bad Example: Using a pie chart to show changes in revenue over time.

- Good Example: Using a line graph to highlight monthly revenue changes, making trends easy to spot.

Takeaway: Match the chart type to the specific question or data you’re working with to enhance clarity.
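
The chart-type guidance above can be written down as a simple lookup. This is only a sketch of the rule of thumb, not a complete decision procedure: the goal labels and the `suggest_chart` helper are hypothetical.

```python
# Mapping taken from the chart-type list above; the goal labels are hypothetical.
CHART_FOR_GOAL = {
    "compare categories": "bar chart",
    "trend over time": "line graph",
    "proportion of a whole": "pie chart",
    "relationship between two variables": "scatter plot",
}


def suggest_chart(goal):
    """Return the suggested chart type for a goal, or None if the goal is unknown."""
    return CHART_FOR_GOAL.get(goal.strip().lower())


print(suggest_chart("Trend over time"))  # line graph
```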

Tip #3: Highlight the Most Important Information

While creating a visualization, it’s essential to direct your audience’s attention to the key insights. Effective use of color, size, and design elements can help you emphasize what matters most.

- Example: Imagine you're showing revenue data for multiple products. By using bold colors for the top-selling products and muted tones for the others, you make it easy for viewers to focus on the most critical information.

Here’s how you can highlight important information:

- Use Contrasting Colors: Highlight important data points with distinct colors.

- Vary Size: Make critical data points larger or bolder.

- Annotations: Add brief text explanations or highlights directly on the chart to draw attention to key areas.

- Bad Example: A scatter plot with every point the same color and size, making it difficult to identify patterns or key data points.

- Good Example: A scatter plot with large, bold points to emphasize outliers or trends, along with color gradients to indicate intensity.

Takeaway: Subtle design choices like color, size, and labels can greatly improve your audience’s ability to quickly grasp the key insights.
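
One possible way to apply these ideas in Matplotlib is sketched below: the top-selling products get a bold color, the rest are muted, and the best seller is annotated. The product names and revenue figures are made up, and `highlight_colors` and `plot_highlighted` are hypothetical helper names.

```python
def highlight_colors(values, top_n=2, bold="tab:orange", muted="lightgray"):
    """Return one color per value: bold for the top_n largest, muted for the rest."""
    ranked = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    top = set(ranked[:top_n])
    return [bold if i in top else muted for i in range(len(values))]


def plot_highlighted(labels, values, top_n=2):
    """Bar chart that emphasizes the top_n bars and annotates the largest one."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for drawing

    positions = list(range(len(labels)))
    fig, ax = plt.subplots(figsize=(10, 5))
    ax.bar(positions, values, color=highlight_colors(values, top_n))
    ax.set_xticks(positions)
    ax.set_xticklabels(labels)
    best = max(positions, key=lambda i: values[i])
    ax.annotate("Top seller", xy=(best, values[best]), xytext=(0, 5),
                textcoords="offset points", ha="center")
    ax.set_ylabel("Revenue")
    plt.show()


# Made-up figures for demonstration purposes only.
products = ["A", "B", "C", "D", "E"]
revenue = [40, 95, 30, 120, 55]
print(highlight_colors(revenue))
```

Calling `plot_highlighted(products, revenue)` draws the chart. Only products B and D are colored, so the viewer's eye goes to them first.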

Final Thoughts

With all things said, an effective visualization leans on our natural tendencies to recognize patterns. The use of colors, shapes, size, etc. is an extremely effective technique for emphasizing the most important information you want to display.

Data visualization is an art; doing it well involves asking a specific question, selecting the appropriate visualization to use, and highlighting the most important information. Do not be too discouraged if your first attempt is not the greatest. It takes time and practice to improve your skills, and DataCamp's wide range of data visualization courses is an excellent resource to help you become a master data visualizer.

Data Visualization FAQs

What are the most common mistakes in data visualization?

Common mistakes include overloading the chart with too much data, which can overwhelm the viewer. Another mistake is choosing the wrong type of chart, such as using a pie chart when a bar or line chart would be more appropriate. Poor use of color, like not having enough contrast, can make the visualization difficult to interpret. Lastly, failing to consider the audience's level of expertise can lead to miscommunication, as the visualization may be too complex or too simple.

How can I improve storytelling with my visualizations?

To enhance storytelling, start by focusing on a clear question that your visualization aims to answer. Make sure to highlight key insights using color, size, or annotations to direct the viewer's attention. Organize your visualizations in a logical sequence, guiding the viewer through the data to reveal insights step by step, making the narrative flow more naturally.

When should I use interactive visualizations?

Interactive visualizations are ideal when dealing with large datasets that would be cumbersome to display all at once, allowing users to filter or zoom in on specific data points. They are also useful when you have a varied audience, enabling different stakeholders to explore the insights most relevant to them. Additionally, they are helpful in decision-making contexts, where stakeholders can interact with the data in real-time and explore various scenarios.

How do I choose between code-free and code-based tools?

Code-free tools, like Power BI and Tableau, are best for those who need quick, accessible visualizations without needing programming skills. These are great for business professionals and managers. Code-based tools, such as Python’s Matplotlib or R’s ggplot2, offer more flexibility and customization for users who are comfortable with programming. These are ideal for data scientists or analysts working with complex data and requiring full control over the visualization process.

How can I ensure accessibility in my data visualizations?

To make your visualizations accessible, use colorblind-friendly palettes so that individuals with color vision deficiencies can interpret the charts accurately. Include alternative text descriptions for visuals when they are part of reports or presentations to accommodate users who may rely on screen readers. For interactive visualizations, ensure that navigation is intuitive and easy to understand, so users with varying skill levels can interact with the data seamlessly.
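
As a minimal sketch of the first two suggestions, the snippet below assigns colors from the Okabe-Ito palette, a widely used colorblind-friendly set of eight colors, and builds a short alternative-text description for a bar chart. The helper names `assign_colors` and `describe_bar_chart` are hypothetical, and the data is reused from the bar graph example earlier in the article.

```python
# Okabe-Ito palette, a widely used colorblind-friendly set of eight colors.
OKABE_ITO = [
    "#E69F00",  # orange
    "#56B4E9",  # sky blue
    "#009E73",  # bluish green
    "#F0E442",  # yellow
    "#0072B2",  # blue
    "#D55E00",  # vermillion
    "#CC79A7",  # reddish purple
    "#000000",  # black
]


def assign_colors(categories):
    """Give each distinct category a palette color, cycling if there are more than eight."""
    unique = list(dict.fromkeys(categories))  # keeps first-seen order
    return {c: OKABE_ITO[i % len(OKABE_ITO)] for i, c in enumerate(unique)}


def describe_bar_chart(title, labels, values):
    """Build a short alternative-text description for a bar chart."""
    hi = max(range(len(values)), key=lambda i: values[i])
    lo = min(range(len(values)), key=lambda i: values[i])
    return (f"{title}. Bar chart with {len(values)} bars. "
            f"Highest: {labels[hi]} ({values[hi]}). Lowest: {labels[lo]} ({values[lo]}).")


# Data from the bar graph example earlier in the article.
languages = ["C", "C++", "Java", "Python", "PHP", "Other", "None"]
frequency = [23, 17, 35, 29, 12, 5, 38]

colors = assign_colors(languages)
alt_text = describe_bar_chart("Employees competent in a programming language", languages, frequency)
print(colors["C"], alt_text)
```

The color mapping can be passed to a plotting call such as Matplotlib's `bar` through its `color` argument, and the generated description can serve as the alternative text when the chart is embedded in a report.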