Synthetic data is artificially generated data used in data science and machine learning. It allows researchers and developers to test and improve algorithms without risking the privacy or security of real-world data.

Synthetic Data Explained

Synthetic data is artificial data created algorithmically. It is designed to mimic the statistical characteristics of real-world data without containing any actual records. Because no real people or events appear in it, algorithms can be tested and improved without exposing sensitive real-world data. It can also be used to augment existing datasets, especially when the original data is limited or biased.

Synthetic data is generated with statistical methods, machine learning, or a combination of both, so that the output mirrors the structure and patterns found in the original data. For instance, Generative Adversarial Networks (GANs), a class of machine learning frameworks in which two neural networks compete with each other, are often used. In a GAN, one network, the generator, creates synthetic data instances, while the other, the discriminator, evaluates them for authenticity. Through this process, the generator learns to produce increasingly realistic data.

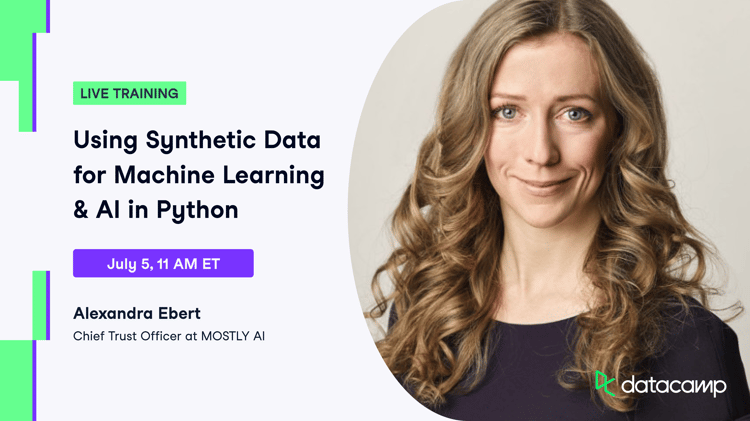
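As a minimal sketch of the statistical route mentioned above (not the GAN approach), the snippet below fits a mean and standard deviation to each numeric column of a small, made-up table and then samples new rows from normal distributions. The column meanings, the sample values, and the helper names `fit_columns` and `sample_rows` are illustrative assumptions. Sampling each column independently also ignores correlations between columns, which a real generator would need to preserve.

```python
import random
import statistics

# Hypothetical "real" table: each row is (age, income). Values are made up.
real_rows = [
    (23, 31000.0), (35, 52000.0), (41, 61000.0), (29, 40000.0),
    (52, 75000.0), (47, 68000.0), (38, 56000.0), (31, 45000.0),
]

def fit_columns(rows):
    """Estimate (mean, standard deviation) for each column."""
    columns = list(zip(*rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]

def sample_rows(params, n, seed=0):
    """Draw n synthetic rows, sampling each column independently."""
    rng = random.Random(seed)
    return [tuple(rng.gauss(mu, sigma) for mu, sigma in params) for _ in range(n)]

params = fit_columns(real_rows)
synthetic_rows = sample_rows(params, n=1000)

# Compare the real and synthetic mean of each column.
for (mu, sigma), col in zip(params, zip(*synthetic_rows)):
    print(f"real mean={mu:.1f}  synthetic mean={statistics.mean(col):.1f}")
```

None of the synthetic rows is a copy of a real row, yet the column averages should land close to the originals, which is the basic property a statistical generator aims for.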

There are also several commercial tools that can be used to generate synthetic data, such as MOSTLY AI and Hazy.

Real-World Synthetic Data Applications

Synthetic data finds its application in various domains:

- Autonomous vehicles. Companies like Waymo and Tesla use synthetic data to train their self-driving algorithms. They create virtual environments that mimic real-world scenarios, allowing the algorithms to learn how to react in different situations without the risk of real-world testing.

- Healthcare. Synthetic data is used to generate health records for research purposes. This allows researchers to work with data that maintains the statistical properties of real patient data without compromising patient privacy. Synthetic data can also be used to generate realistic images of organs or tissues, which are then used to train algorithms to recognize patterns and detect abnormalities in real patient images. This can support more accurate and efficient diagnosis and treatment planning without requiring large amounts of real patient data.

- Finance. Synthetic data is used to simulate financial markets, enabling the testing of trading strategies and risk models without the need for actual market data. For instance, in credit risk modeling synthetic data can be used to simulate borrower characteristics and credit behavior, allowing lenders to test and refine their credit risk models without exposing sensitive customer information. This can help improve the accuracy of credit scoring and reduce the risk of default for lenders.

- Machine learning. Synthetic data can improve the performance and accuracy of machine learning models. It can help address problems such as imbalanced classes and reduce biases in existing datasets.
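One common way synthetic data addresses class imbalance is to create new minority-class points by interpolating between existing ones, which is the idea behind SMOTE. The sketch below is a simplified version of that idea written with the standard library only: it interpolates between a randomly chosen minority point and its single nearest minority neighbour, whereas SMOTE chooses among several nearest neighbours. The data and the function name `oversample_minority` are assumptions for illustration.

```python
import math
import random

def oversample_minority(points, n_new, seed=0):
    """Create n_new synthetic points by interpolating between a chosen
    minority point and its nearest minority-class neighbour."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(points)
        # Nearest other minority point by Euclidean distance.
        neighbour = min(
            (p for p in points if p is not base),
            key=lambda p: math.dist(base, p),
        )
        t = rng.random()  # position along the segment base -> neighbour
        synthetic.append(tuple(b + t * (n - b) for b, n in zip(base, neighbour)))
    return synthetic

# Made-up, imbalanced dataset: 8 majority points and 3 minority points.
majority = [(float(x), float(y)) for x in range(4) for y in range(2)]
minority = [(10.0, 10.0), (11.0, 10.5), (10.5, 12.0)]

new_points = oversample_minority(minority, n_new=len(majority) - len(minority))
balanced_minority = minority + new_points
print(len(majority), len(balanced_minority))
```

Because every new point lies on a line segment between two real minority points, the synthetic examples stay inside the region the minority class already occupies.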

What are the Limitations of Synthetic Data?

Despite its benefits, synthetic data does have its limitations:

- Quality. The quality of synthetic data is dependent on the algorithms used to generate it. If the algorithms do not accurately capture the underlying distribution of the real-world data, the synthetic data may not be representative.

- Bias. Synthetic data is generated based on certain assumptions, algorithms, or models. If these underlying assumptions are biased or do not accurately reflect the real-world scenarios, the synthetic data may inherit those biases. Biased synthetic data can lead to skewed or inaccurate models or predictions.

- Inability to capture rare events. Rare events or outliers in the real data may not be adequately captured in synthetic data. Generating synthetic data that accurately represents extremely rare occurrences or outliers can be challenging. This limitation can impact the performance of models trained solely on synthetic data when it comes to handling these exceptional cases.

- Complexity. Generating high-quality synthetic data can be a complex process, requiring advanced knowledge of machine learning techniques and significant computational resources.
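The rare-event limitation can be illustrated with a small experiment. The sketch below builds a made-up dataset in which 1% of the values are extreme outliers, fits a single normal distribution to it (a deliberately naive generator chosen for illustration), and compares how often each dataset exceeds a threshold. All numbers and names here are assumptions, not measurements from a real system.

```python
import random
import statistics

rng = random.Random(42)

# Made-up "real" data: 990 typical values plus 10 rare, extreme ones (1%).
typical = [rng.gauss(10.0, 2.0) for _ in range(990)]
rare = [rng.gauss(100.0, 5.0) for _ in range(10)]
real = typical + rare

# Naive generator: one normal distribution fitted to the whole dataset.
mu = statistics.mean(real)
sigma = statistics.stdev(real)
synthetic = [rng.gauss(mu, sigma) for _ in range(len(real))]

def rate_above(values, threshold):
    """Fraction of values strictly greater than threshold."""
    return sum(v > threshold for v in values) / len(values)

THRESHOLD = 50.0
real_rate = rate_above(real, THRESHOLD)
synthetic_rate = rate_above(synthetic, THRESHOLD)
print(f"real: {real_rate:.3f}  synthetic: {synthetic_rate:.3f}")
```

Under these assumptions, the fitted generator should produce far fewer values above the threshold than the original data contains. A single bell curve spreads the outliers into a wider overall variance instead of reproducing them as a distinct cluster.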

Using Synthetic Data for Projects

Gartner has predicted that by 2024, 60% of the data used to develop machine learning and analytics applications will be synthetically generated. This trend is driven by the scarcity of suitable real-world data and the high cost of collecting and cleaning it.

For instance, data related to bank fraud, breast cancer, self-driving cars, and malware attacks are hard to come by in the real world. And even if you do obtain the data, it can take a lot of time and resources to clean and process it for use in machine learning tasks.

In my own work, I use the Faker Python library to generate sample datasets for testing databases and various applications. However, it is not well suited to machine learning applications because it draws values from a limited pool of predefined entries rather than learning the patterns in a real dataset. For machine learning, I use Conditional GANs to generate synthetic tabular data. This has helped improve model performance and stability.

If you're tackling a specialized machine learning problem, you might need to explore synthetic data generation techniques that cater to your specific requirements. Publicly available datasets, such as those on Kaggle, are often outdated and cannot easily be adapted to a new problem.


FAQs

Is synthetic data real data?

No, synthetic data is not real data. It is artificial data generated algorithmically to mimic the characteristics of real-world data.

Can synthetic data be used for machine learning?

Yes, synthetic data is often used to train machine learning models, particularly in scenarios where real-world data is scarce, expensive to collect, or privacy-sensitive.

Is synthetic data privacy-compliant?

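Often, but not automatically. Because synthetic data is not meant to contain real personal information, it can reduce the risk of violating privacy regulations. However, a generator that memorizes or closely reproduces records from its training data can still leak sensitive details, so synthetic datasets should be checked for privacy risk before they are shared.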

How is synthetic data different from simulated data?

Simulated data is created using mathematical models to replicate a real-world scenario, while synthetic data is generated algorithmically to mimic the characteristics of real-world data without containing any actual information.

Can synthetic data completely replace real-world data in machine learning?

No, synthetic data can be used to supplement real-world data, but it cannot completely replace it. Real-world data is still necessary to validate and improve the accuracy of machine learning models.

How can synthetic data be validated for accuracy?

Synthetic data can be validated by comparing its statistical properties and patterns to those of real-world data. The accuracy of machine learning models trained on synthetic data can also be compared to those trained on real-world data.
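As a rough sketch of the first kind of check, the snippet below compares one numeric column of a real and a synthetic dataset using the difference in means and the two-sample Kolmogorov-Smirnov statistic, which is the largest gap between the two empirical cumulative distribution functions. The data is randomly generated for illustration, `ks_statistic` is a hypothetical helper written here with the standard library, and what counts as an acceptable gap is a project-specific choice.

```python
import bisect
import random
import statistics

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the maximum absolute
    difference between the empirical CDFs of a and b (0 = identical)."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    max_gap = 0.0
    for x in a_sorted + b_sorted:
        cdf_a = bisect.bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect.bisect_right(b_sorted, x) / len(b_sorted)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap

rng = random.Random(7)
real = [rng.gauss(50.0, 10.0) for _ in range(500)]        # stand-in for real data
good_synth = [rng.gauss(50.0, 10.0) for _ in range(500)]  # same distribution
bad_synth = [rng.gauss(70.0, 10.0) for _ in range(500)]   # shifted distribution

for name, synth in [("good", good_synth), ("bad", bad_synth)]:
    print(
        name,
        f"mean gap={abs(statistics.mean(real) - statistics.mean(synth)):.2f}",
        f"KS={ks_statistic(real, synth):.3f}",
    )
```

A statistic near 0 suggests the synthetic column follows the real distribution closely, while a value near 1 means the two barely overlap. Checks like this cover one column at a time, so relationships between columns need to be validated separately.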

Can synthetic data be used for all types of machine learning algorithms?

Synthetic data can be used for many types of machine learning algorithms, but its effectiveness may vary depending on the specific algorithm and the nature of the data being generated.

Is synthetic data expensive to generate?

Generating high-quality synthetic data can be a complex and resource-intensive process, but there are also commercial tools and platforms available that can make it more accessible and cost-effective.
